SecondNet: A Scalable Data Center Network Virtualization


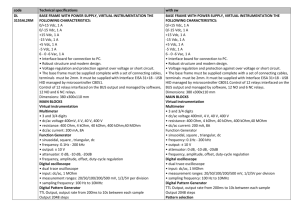

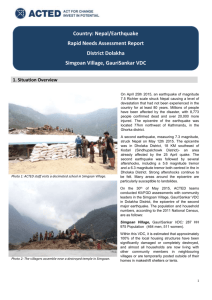

SecondNet: A Data Center Network Virtualization Architecture with Bandwidth Guarantees

Chuanxiong Guo¹, Guohan Lu¹, Helen J. Wang², Shuang Yang³, Chao Kong⁴, Peng Sun⁵, Wenfei Wu⁶, Yongguang Zhang¹
¹Microsoft Research Asia, ²Microsoft Research Redmond, ³Stanford University, ⁴Huawei Technologies, ⁵Princeton University, ⁶University of Wisconsin-Madison
Dec 2, 2010, Philadelphia, USA

Outline
• Background
• VDC abstraction and service model
• SecondNet architecture
• Port-switching based source routing
• VDC allocation
• Experimental results
• Related work
• Conclusion

Background
• Network virtualization with bandwidth guarantee
[Figure. Source: Microsoft.]

[Figure: layered architecture. Top: VDC0, VDC1, ..., VDCn, exchanging IP packets with the layer below. Middle: SecondNet DCN virtualization layer, with a control interface and a data interface on each side. Bottom: DCN infrastructures (fat-tree, VL2, DCell, BCube, others), reached with PSSR packets. Control-side labels: VM, switch, and server management; topology updates.]

Virtual Data Center (VDC)
• VDC
  – A set of VMs plus an SLA
  – Every VDC has its own (private) IP address space
• Service model
  – Type-0: bandwidth guarantee between any two VMs
  – Type-1: local egress/ingress bandwidth guarantee
  – Best-effort
[Figure: an example VDC with VMs vm0 to vmn and a 500Mb/s bandwidth label, shown beside the layered architecture diagram.]

Challenges in VDC bandwidth guarantee
• Timely and efficient VDC allocation and expansion
  – NP-hard problem
• Scalable VDC state maintenance
  – State: VM-to-physical-server mapping, bandwidth reservation, routing path
  – The number of state entries can easily reach tens of millions
• Practical deployment
  – Applicable to various topologies (DCell, BCube, fat-tree, VL2) and addressing schemes
  – Implementable using commodity servers and switches
  – Failure handling

SecondNet
• Logically centralized VDC manager
  – Efficient, low time-complexity VDC allocation
  – Failure handling
• Put virtualization and bandwidth reservation state into servers
  – All the state at server hypervisors
  – Stateless switch core
• Port-switching based source routing
  – Makes SecondNet applicable to all network topologies
  – Deployable with current commodity switches

[Figure: SecondNet architecture. Users send requests to the VDC manager. Switches are stateless. Servers are stateful: each hypervisor holds the v2p mapping, bandwidth reservations, and PSSR paths. Hypervisors form the trusted domain; VMs are untrusted. Servers s0 and s1 host VDC0 VM0 / VDC1 VM0 and VDC0 VM1 / VDC1 VM1, and an example PSSR path for VDC0 VM0->VM1 is drawn through the switches.]

Port-switching based source routing (PSSR)
• Port-switching
  – Given that the topology is known, forwarding based on port numbers is possible
  – Simpler switching functionality
• Source routing
  – Pin the routing path for bandwidth guarantee
  – Keep state only at server hypervisors
• PSSR
  – Stateless switch core
  – Addressing agnostic
  – Can be implemented using MPLS

PSSR example
[Figure: a packet from VDC0 VM0 (ip0) on server s0 to VDC0 VM1 (ip1) on server s1. Hypervisor0 adds the VDC ID and the port-number path in front of the original packet (ip1, ip0, data); the stateless switches forward hop by hop on those port numbers; hypervisor1 removes the PSSR header and delivers the original packet to VM1.]

VDC allocation
0: Cluster pre-calculation: divide servers into clusters of different sizes
1: Cluster selection
2: Min-cost flow
3: Routing path

Simulation: VDC allocation time
[Figure: three panels of VDC allocation time: BCube with 4,096 servers; fat-tree with 27,648 servers; VL2 with 103,680 servers.]

Implementation
[Figure: implementation on Hyper-V. Labels: child partition; root partition; user space; kernel space; App; TCP/IP; VMNIC; V-NIC; Hyper-V mgr; WMI; VMSwitch; VMBus; secondnet.sys; send; recv; port-switching; policy mgr; V2P table; neighbor maintenance; NDIS; NIC driver; to VDC mgr.]

Testbed
• A BCube testbed
  – 16 servers (Dell Precision 490 workstation with Intel 2.00GHz dual-core CPU, 4GB DRAM, 160GB disk)
  – 8 8-port mini-switches (D-Link 8-port Gigabit switch DGS-1008D)
• NIC
  – Intel Pro/1000 PT quad-port Ethernet NIC
  – NetFPGA
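
As a rough illustration of the PSSR idea from the earlier slides, the sketch below models a packet that carries its whole path as a list of output ports plus a pointer to the next one. Each switch reads the port at the pointer, advances the pointer, and forwards, so it needs no per-VDC state. The `PssrPacket` class, the `forward` helper, and the toy three-switch topology are hypothetical names invented for this example; the real header layout and its MPLS mapping are not shown on the slides.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class PssrPacket:
    """Illustrative packet: a port-number path, a hop pointer, and a payload."""
    ports: List[int]   # output port to take at each switch, in order
    payload: bytes
    pointer: int = 0   # index of the next port to consume


# Hypothetical wiring: (switch name, output port) -> neighbor on that port.
Topology = Dict[Tuple[str, int], str]


def forward(topology: Topology, first_switch: str, packet: PssrPacket) -> List[str]:
    """Walk the packet through the network, one port lookup per hop.

    A switch only reads packet.ports[packet.pointer] and advances the
    pointer; it keeps no routing or reservation table of its own.
    """
    node = first_switch
    visited = [node]
    while packet.pointer < len(packet.ports):
        port = packet.ports[packet.pointer]
        packet.pointer += 1
        node = topology[(node, port)]
        visited.append(node)
    return visited


# Assumed toy topology, not the testbed from the slides.
toy_topology: Topology = {
    ("sw_a", 2): "sw_b",
    ("sw_b", 1): "sw_c",
    ("sw_c", 0): "server1",
}

path = forward(toy_topology, "sw_a", PssrPacket(ports=[2, 1, 0], payload=b"data"))
print(path)
```

Because the path is chosen and written into the packet by the sending hypervisor, changing a reservation only touches server-side state, which is the property the "stateless switch core" bullets rely on.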
Experiment: bandwidth guarantee
Physical topology: fat-tree
VDC1 and VDC2 both have 24 VMs
Each server has one VM for each VDC
[Figure: VDC1 and VDC2, with VMs numbered 0 to 23 (labels shown: 0, 1, 7, 8, 9, 15, 16, 17, 23).]

Related work
• DCN virtualization
  – Seawall, NetShare
  – VL2
  – Amazon VPC, EC2
• Virtual network allocation
  – Simulated annealing
  – Virtual network embedding
• Bandwidth guarantee
  – IntServ, DiffServ
  – VPN hose model

Summary
• VDC as abstraction and resource allocation unit
• SecondNet as the network virtualization layer for VDC isolation and performance guarantee
  – Virtualization and bandwidth guarantee state at server hypervisors
  – VDC manager for VDC allocation and failure handling
  – Port-switching based source routing for implementation
• Future work

Q&A
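
The VDC manager's allocation steps listed earlier (cluster pre-calculation, cluster selection, min-cost flow, routing path) can be pictured with a small sketch of the cluster selection step only. It assumes, as a simplification not stated on the slides, that the manager tries pre-calculated clusters from smallest to largest and takes the first one with enough free VM slots and enough residual egress and ingress bandwidth. The `Cluster` and `VdcRequest` types, their fields, and the example numbers are hypothetical; the min-cost flow and routing path steps are not modeled.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Cluster:
    """Illustrative pre-calculated cluster of servers (all fields assumed)."""
    name: str
    num_servers: int
    free_vm_slots: int
    free_egress_mbps: float
    free_ingress_mbps: float


@dataclass
class VdcRequest:
    """Illustrative request: VM count plus aggregate bandwidth demand."""
    num_vms: int
    egress_mbps: float
    ingress_mbps: float


def select_cluster(clusters: List[Cluster], request: VdcRequest) -> Optional[Cluster]:
    """Return the smallest cluster that can plausibly host the request.

    This is only a coarse filter: a real allocator would still have to map
    VMs to servers and find paths, and would retry with a larger cluster
    if those later steps fail.
    """
    for cluster in sorted(clusters, key=lambda c: c.num_servers):
        if (cluster.free_vm_slots >= request.num_vms
                and cluster.free_egress_mbps >= request.egress_mbps
                and cluster.free_ingress_mbps >= request.ingress_mbps):
            return cluster
    return None


# Made-up capacities for illustration; not measurements from the talk.
clusters = [
    Cluster("rack", 20, 10, 5_000.0, 5_000.0),
    Cluster("pod", 400, 300, 80_000.0, 80_000.0),
    Cluster("datacenter", 4096, 3000, 500_000.0, 500_000.0),
]

chosen = select_cluster(clusters, VdcRequest(num_vms=24, egress_mbps=2_400.0, ingress_mbps=2_400.0))
print(chosen.name if chosen else "no cluster fits")
```

Trying small clusters first keeps the VMs of one VDC close together, which shortens paths and leaves larger clusters free for larger requests.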