
Natural Language Processing (8)

Zhao Hai 赵海

Department of Computer Science and Engineering

Shanghai Jiao Tong University

2010-2011 zhaohai@cs.sjtu.edu.cn

1

Overview

• Models

– HMM: Hidden Markov Model

– maximum entropy Markov model

– CRFs: Conditional Random Fields

• Tasks

– Chinese word segmentation

– part-of-speech tagging

– named entity recognition

2

What is an HMM?

• Graphical Model

• Circles indicate states

• Arrows indicate probabilistic dependencies between states

3

What is an HMM?

• Green circles are hidden states

• Dependent only on the previous state

• “The past is independent of the future given the present.”

4

What is an HMM?

• Purple nodes are observed states

• Dependent only on their corresponding hidden state

5

HMM Formalism

• An HMM is specified by $\{S, K, \Pi, A, B\}$

• $S = \{s_1, \dots, s_N\}$ are the values for the hidden states

• $K = \{k_1, \dots, k_M\}$ are the values for the observations

6

HMM Formalism

• $\{S, K, \Pi, A, B\}$

• $\Pi = \{\pi_i\}$ are the initial state probabilities, $\pi_i = P(x_1 = s_i)$

• $A = \{a_{ij}\}$ are the state transition probabilities, $a_{ij} = P(x_{t+1} = s_j \mid x_t = s_i)$

• $B = \{b_{ik}\}$ are the observation (emission) probabilities, $b_{ik} = P(o_t = k_k \mid x_t = s_i)$

7

Inference in an HMM

• Probability Estimation: Compute the probability of a given observation sequence

• Decoding: Given an observation sequence, compute the most likely hidden state sequence

• Parameter Estimation: Given an observation sequence, find the model that most closely fits the observations

8

Probability Estimation

Given an observation sequence and a model, compute the probability of the observation sequence:

$$O = (o_1 \dots o_T), \quad \mu = (A, B, \Pi)$$

Compute $P(O \mid \mu)$

9


Probability Estimation

$$P(O \mid X, \mu) = b_{x_1 o_1} b_{x_2 o_2} \cdots b_{x_T o_T}$$

$$P(X \mid \mu) = \pi_{x_1} a_{x_1 x_2} a_{x_2 x_3} \cdots a_{x_{T-1} x_T}$$

$$P(O, X \mid \mu) = P(O \mid X, \mu)\, P(X \mid \mu)$$

$$P(O \mid \mu) = \sum_X P(O \mid X, \mu)\, P(X \mid \mu)$$

13

Probability Estimation

$$P(O \mid \mu) = \sum_{\{x_1 \dots x_T\}} \pi_{x_1} b_{x_1 o_1} \prod_{t=1}^{T-1} a_{x_t x_{t+1}} b_{x_{t+1} o_{t+1}}$$

This sum ranges over all $N^T$ state sequences, so evaluating it directly takes exponential time.

14
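The sum above can be evaluated literally by enumerating every state sequence. The Python sketch below does that for a hypothetical two-state, two-symbol toy HMM (the numbers in `PI`, `A` and `B` are made up for illustration). It is only useful as a reference for checking faster algorithms, since the loop visits all $N^T$ sequences.

```python
import itertools

# Hypothetical toy HMM: N = 2 hidden states, M = 2 observation symbols (0 and 1).
PI = [0.6, 0.4]            # pi_i = P(x_1 = i)
A = [[0.7, 0.3],           # a_ij = P(x_{t+1} = j | x_t = i)
     [0.4, 0.6]]
B = [[0.9, 0.1],           # b_ik = P(o_t = k | x_t = i)
     [0.2, 0.8]]


def brute_force_likelihood(obs, pi, a, b):
    """P(O | mu) as the sum of P(O, X | mu) over all N**T state sequences X."""
    n = len(pi)
    total = 0.0
    for xs in itertools.product(range(n), repeat=len(obs)):
        p = pi[xs[0]] * b[xs[0]][obs[0]]                    # pi_{x1} b_{x1 o1}
        for t in range(1, len(obs)):
            p *= a[xs[t - 1]][xs[t]] * b[xs[t]][obs[t]]     # a_{x_t x_{t+1}} b_{x_{t+1} o_{t+1}}
        total += p
    return total
```

For example, `brute_force_likelihood([0, 1], PI, A, B)` sums four terms and gives approximately 0.209.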

Forward Procedure

• Special structure gives us an efficient solution using dynamic programming.

• Intuition: the probability of the first $t$ observations is the same for all possible $t+1$ length state sequences.

• Define: $\alpha_i(t) = P(o_1 \dots o_t, x_t = i \mid \mu)$

15

Forward Procedure

$$\begin{aligned}
\alpha_j(t+1) &= P(o_1 \dots o_{t+1}, x_{t+1} = j) \\
&= P(o_1 \dots o_{t+1} \mid x_{t+1} = j)\, P(x_{t+1} = j) \\
&= P(o_1 \dots o_t \mid x_{t+1} = j)\, P(o_{t+1} \mid x_{t+1} = j)\, P(x_{t+1} = j) \\
&= P(o_1 \dots o_t, x_{t+1} = j)\, P(o_{t+1} \mid x_{t+1} = j)
\end{aligned}$$

16


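Forward Procedure

$$\begin{aligned}
\alpha_j(t+1) &= \sum_{i=1}^{N} P(o_1 \dots o_t, x_t = i, x_{t+1} = j)\, P(o_{t+1} \mid x_{t+1} = j) \\
&= \sum_{i=1}^{N} P(o_1 \dots o_t, x_{t+1} = j \mid x_t = i)\, P(x_t = i)\, P(o_{t+1} \mid x_{t+1} = j) \\
&= \sum_{i=1}^{N} P(o_1 \dots o_t, x_t = i)\, P(x_{t+1} = j \mid x_t = i)\, P(o_{t+1} \mid x_{t+1} = j) \\
&= \sum_{i=1}^{N} \alpha_i(t)\, a_{ij}\, b_{j o_{t+1}}
\end{aligned}$$

• Initialization: $\alpha_i(1) = \pi_i\, b_{i o_1}$. Each of the $T-1$ recursion steps costs $O(N^2)$, so the whole procedure takes $O(N^2 T)$ time instead of exponential time.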

20
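The recursion and its initialization translate directly into a few lines of Python. This is a minimal sketch on a hypothetical two-state toy HMM (made-up `PI`, `A`, `B`). Lists are 0-based, so `alpha[t][i]` holds $\alpha_i(t+1)$ from the slide.

```python
# Hypothetical toy HMM: 2 hidden states, 2 observation symbols (0 and 1).
PI = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]


def forward(obs, pi, a, b):
    """alpha[t][i] holds alpha_i(t+1) = P(o_1 .. o_{t+1}, x_{t+1} = i | mu)."""
    n = len(pi)
    alpha = [[pi[i] * b[i][obs[0]] for i in range(n)]]          # alpha_i(1) = pi_i b_{i o_1}
    for o in obs[1:]:
        prev = alpha[-1]
        # alpha_j(t+1) = sum_i alpha_i(t) a_ij b_{j o_{t+1}}
        alpha.append([sum(prev[i] * a[i][j] for i in range(n)) * b[j][o] for j in range(n)])
    return alpha


def likelihood(obs, pi, a, b):
    """P(O | mu) = sum_i alpha_i(T), in O(N^2 T) time."""
    return sum(forward(obs, pi, a, b)[-1])
```

On this toy model, `likelihood([0, 1], PI, A, B)` agrees (up to rounding) with summing the four state sequences by hand: about 0.209.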


Backward Procedure

$$\beta_i(T) = 1$$

$$\beta_i(t) = P(o_{t+1} \dots o_T \mid x_t = i)$$

$$\beta_i(t) = \sum_{j=1}^{N} a_{ij}\, b_{j o_{t+1}}\, \beta_j(t+1)$$

Probability of the rest of the observations given the current state

24

The Solution to Estimation

• Forward Procedure: $P(O \mid \mu) = \sum_{i=1}^{N} \alpha_i(T)$

• Backward Procedure: $P(O \mid \mu) = \sum_{i=1}^{N} \pi_i\, b_{i o_1}\, \beta_i(1)$

• Combination: $P(O \mid \mu) = \sum_{i=1}^{N} \alpha_i(t)\, \beta_i(t)$, for any $1 \le t \le T$

25
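A quick numerical check of the three formulas is possible in Python. This sketch uses a hypothetical two-state toy HMM (made-up numbers) and the convention $\beta_i(t) = P(o_{t+1} \dots o_T \mid x_t = i)$ with $\beta_i(T) = 1$. With that convention, $\sum_i \alpha_i(t)\beta_i(t)$ should give the same value at every position $t$. List indices are 0-based, so `alpha[t]` and `beta[t]` hold the values for time $t+1$.

```python
# Hypothetical toy HMM: 2 hidden states, 2 observation symbols (0 and 1).
PI = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]


def forward(obs, pi, a, b):
    """alpha[t][i] holds alpha_i(t+1) = P(o_1 .. o_{t+1}, x_{t+1} = i)."""
    n = len(pi)
    alpha = [[pi[i] * b[i][obs[0]] for i in range(n)]]
    for o in obs[1:]:
        prev = alpha[-1]
        alpha.append([sum(prev[i] * a[i][j] for i in range(n)) * b[j][o] for j in range(n)])
    return alpha


def backward(obs, a, b):
    """beta[t][i] holds beta_i(t+1) = P(o_{t+2} .. o_T | x_{t+1} = i); the last row is all 1."""
    n = len(a)
    beta = [[1.0] * n]                                           # beta_i(T) = 1
    for o in reversed(obs[1:]):                                  # uses o_T, o_{T-1}, ..., o_2
        nxt = beta[0]
        # beta_i(t) = sum_j a_ij b_{j o_{t+1}} beta_j(t+1)
        beta.insert(0, [sum(a[i][j] * b[j][o] * nxt[j] for j in range(n)) for i in range(n)])
    return beta


def combined_likelihood(obs, pi, a, b, t):
    """P(O | mu) = sum_i alpha_i(t) beta_i(t), evaluated at 0-based position t."""
    alpha, beta = forward(obs, pi, a, b), backward(obs, a, b)
    return sum(alpha[t][i] * beta[t][i] for i in range(len(pi)))
```

For any observation sequence, `combined_likelihood` returns the same value at every position. The backward formula $\sum_i \pi_i b_{i o_1} \beta_i(1)$ also returns that value.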

Decoding: Best State Sequence

• Find the state sequence that best explains the observations

• Viterbi algorithm

• $\arg\max_X P(X \mid O)$

26

Viterbi Algorithm

$$\delta_j(t) = \max_{x_1 \dots x_{t-1}} P(x_1 \dots x_{t-1}, o_1 \dots o_{t-1}, x_t = j, o_t)$$

$\delta_j(t)$ is the probability of the state sequence which maximizes the probability of seeing the observations to time $t-1$, landing in state $j$, and seeing the observation at time $t$

27

Viterbi Algorithm

• Initialization: $\delta_j(1) = \pi_j\, b_{j o_1}$

• Recursive computation:

$$\delta_j(t+1) = \max_{x_1 \dots x_t} P(x_1 \dots x_t, o_1 \dots o_t, x_{t+1} = j, o_{t+1}) = \max_i \delta_i(t)\, a_{ij}\, b_{j o_{t+1}}$$

$$\psi_j(t+1) = \arg\max_i \delta_i(t)\, a_{ij}\, b_{j o_{t+1}}$$

28

Viterbi Algorithm

$$\hat{X}_T = \arg\max_i \delta_i(T)$$

$$\hat{X}_t = \psi_{\hat{X}_{t+1}}(t+1)$$

$$P(\hat{X}) = \max_i \delta_i(T)$$

Compute the most likely state sequence by working backwards

29
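Putting initialization, recursion and backtracking together gives the short Python sketch below. It runs on a hypothetical two-state toy HMM (made-up numbers) and works directly with probabilities. Real implementations usually switch to log space to avoid underflow on long sequences.

```python
# Hypothetical toy HMM: 2 hidden states, 2 observation symbols (0 and 1).
PI = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]


def viterbi(obs, pi, a, b):
    """Return (most likely state sequence, its joint probability P(X, O | mu))."""
    n = len(pi)
    delta = [pi[j] * b[j][obs[0]] for j in range(n)]          # delta_j(1) = pi_j b_{j o_1}
    psi = []                                                    # psi[t][j]: best predecessor of j
    for o in obs[1:]:
        back, new_delta = [], []
        for j in range(n):
            # psi_j(t+1) = argmax_i delta_i(t) a_ij  (b_{j o_{t+1}} does not depend on i)
            i_best = max(range(n), key=lambda i: delta[i] * a[i][j])
            back.append(i_best)
            new_delta.append(delta[i_best] * a[i_best][j] * b[j][o])
        psi.append(back)
        delta = new_delta
    last = max(range(n), key=lambda i: delta[i])               # X_T = argmax_i delta_i(T)
    path = [last]
    for back in reversed(psi):                                  # X_t = psi_{X_{t+1}}(t+1)
        path.insert(0, back[path[0]])
    return path, delta[last]
```

On the toy model, `viterbi([0, 1, 1], PI, A, B)` returns the path `[0, 1, 1]` with probability about 0.062208.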

Parameter Estimation

• Given an observation sequence, find the model that is most likely to produce that sequence.

• No analytic method => an EM algorithm (Baum-Welch)

• Given a model and observation sequence, update the model parameters to better fit the observations.

30

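Parameter Estimation

• Probability of traversing an arc from state $i$ at time $t$ to state $j$ at time $t+1$:

$$p_t(i, j) = \frac{\alpha_i(t)\, a_{ij}\, b_{j o_{t+1}}\, \beta_j(t+1)}{\sum_{m=1}^{N} \alpha_m(t)\, \beta_m(t)}$$

• Probability of being in state $i$ at time $t$:

$$\gamma_i(t) = \sum_{j=1}^{N} p_t(i, j)$$

(At $t = T$, where no arc leaves, use the equivalent form $\gamma_i(t) = \alpha_i(t)\beta_i(t) / \sum_{m=1}^{N} \alpha_m(t)\beta_m(t)$.)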

31

Parameter Estimation

$$\hat{\pi}_i = \gamma_i(1)$$

$$\hat{a}_{ij} = \frac{\sum_{t=1}^{T-1} p_t(i, j)}{\sum_{t=1}^{T-1} \gamma_i(t)}$$

$$\hat{b}_{ik} = \frac{\sum_{\{t \,:\, o_t = k\}} \gamma_i(t)}{\sum_{t=1}^{T} \gamma_i(t)}$$

Now we can compute the new estimates of the model parameters.

32
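One Baum-Welch step applies the formulas above once. The Python sketch below is a minimal single-sequence version on a hypothetical two-state toy HMM (made-up numbers). It assumes $T \ge 2$ and strictly positive parameters, so that no denominator is zero. Since it is an EM step, the likelihood of the training sequence should not decrease from one iteration to the next.

```python
# Hypothetical toy HMM: 2 hidden states, 2 observation symbols (0 and 1).
PI = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]


def forward(obs, pi, a, b):
    n = len(pi)
    alpha = [[pi[i] * b[i][obs[0]] for i in range(n)]]
    for o in obs[1:]:
        prev = alpha[-1]
        alpha.append([sum(prev[i] * a[i][j] for i in range(n)) * b[j][o] for j in range(n)])
    return alpha


def backward(obs, a, b):
    n = len(a)
    beta = [[1.0] * n]
    for o in reversed(obs[1:]):
        nxt = beta[0]
        beta.insert(0, [sum(a[i][j] * b[j][o] * nxt[j] for j in range(n)) for i in range(n)])
    return beta


def baum_welch_step(obs, pi, a, b, m):
    """One EM re-estimation of (pi, A, B) from one sequence; m = number of symbols."""
    n, T = len(pi), len(obs)
    alpha, beta = forward(obs, pi, a, b), backward(obs, a, b)
    p_obs = sum(alpha[-1])                                     # = sum_m alpha_m(t) beta_m(t)
    # p[t][i][j]: probability of traversing the arc i -> j between positions t and t+1
    p = [[[alpha[t][i] * a[i][j] * b[j][obs[t + 1]] * beta[t + 1][j] / p_obs
           for j in range(n)] for i in range(n)] for t in range(T - 1)]
    # gamma[t][i]: probability of being in state i at position t (valid for every t)
    gamma = [[alpha[t][i] * beta[t][i] / p_obs for i in range(n)] for t in range(T)]
    new_pi = gamma[0][:]
    new_a = [[sum(p[t][i][j] for t in range(T - 1)) / sum(gamma[t][i] for t in range(T - 1))
              for j in range(n)] for i in range(n)]
    new_b = [[sum(gamma[t][i] for t in range(T) if obs[t] == k) / sum(gamma[t][i] for t in range(T))
              for k in range(m)] for i in range(n)]
    return new_pi, new_a, new_b


obs = [0, 1, 1, 0, 1]
params = (PI, A, B)
for _ in range(5):                     # a few EM iterations on the toy sequence
    params = baum_welch_step(obs, *params, m=2)
```

Every re-estimated row stays a probability distribution. In practice, training would sum the expected counts over many sequences before dividing.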

HMM Applications

• Generating parameters for n-gram models

• Part-of-speech tagging

• Speech recognition

33

The Most Important Thing

We can use the special structure of this model to do a lot of neat math and to solve problems efficiently that would otherwise be intractable.

34

Overview

• Models

– HMM: Hidden Markov Model

– maximum entropy Markov model

– CRFs: Conditional Random Fields

• Tasks

– Chinese word segmentation

– part-of-speech tagging

– named entity recognition

35

Limitations of HMM

“US official questions regulatory scrutiny of Apple”

• Problem 1: HMMs only use word identity. They cannot use richer representations.

– Apple is capitalized.

• MEMM Solution: Use more descriptive features

– (b0: Is-capitalized, b1: Is-in-plural, b2: Has-wordnet-antonym, b3: Is-“the”, etc.)

– Real-valued features can also be handled.

• Here features are pairs <b, s>: b is a feature of the observation and s is the destination state, e.g. <Is-capitalized, Company>

• Feature function:

$$f_{\langle b, s \rangle}(o_t, s_t) = \begin{cases} 1 & \text{if } b(o_t) \text{ is true and } s_t = s \\ 0 & \text{otherwise} \end{cases}$$

e.g. $f_{\langle \text{Is-capitalized}, \text{Company} \rangle}(\text{“Apple”}, \text{Company}) = 1$.

36
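The feature function above is just an indicator built from an observation predicate and a destination state. Here is a minimal Python sketch. The names `make_feature` and `is_capitalized` are hypothetical helpers chosen for illustration, not part of any MEMM library.

```python
def make_feature(b, s):
    """Binary MEMM feature f_<b,s>(o_t, s_t): 1 if b(o_t) is true and s_t == s, else 0."""
    def f(o_t, s_t):
        return 1 if b(o_t) and s_t == s else 0
    return f


def is_capitalized(word):
    """Hypothetical observation predicate b0: Is-capitalized."""
    return word[:1].isupper()


f_cap_company = make_feature(is_capitalized, "Company")
```

With this definition, `f_cap_company("Apple", "Company")` is 1. Changing either the word's capitalization or the destination state makes it 0.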

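HMMs vs. MEMMs (I)

• HMMs: transition probabilities $P(s \mid s')$ and observation probabilities $P(o \mid s)$

• MEMMs: a single function $P(s \mid s', o)$, split into $|S|$ separately trained functions $P_{s'}(s \mid o)$, one for each previous state $s'$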

37

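HMMs vs. MEMMs (II)

• HMMs: $\alpha_t(s)$ is the probability of producing $o_1, \dots, o_t$ and being in $s$ at time $t$:

$$\alpha_{t+1}(s) = \sum_{s' \in S} \alpha_t(s')\, P(s \mid s')\, P(o_{t+1} \mid s)$$

• MEMMs: $\alpha_t(s)$ is the probability of being in $s$ at time $t$ given $o_1, \dots, o_t$:

$$\alpha_{t+1}(s) = \sum_{s' \in S} \alpha_t(s')\, P_{s'}(s \mid o_{t+1})$$

• HMMs: $\delta_t(s)$ is the probability of the best path for producing $o_1, \dots, o_t$ and being in $s$ at time $t$:

$$\delta_{t+1}(s) = \max_{s' \in S} \delta_t(s')\, P(s \mid s')\, P(o_{t+1} \mid s)$$

• MEMMs: $\delta_t(s)$ is the probability of the best path that reaches $s$ at time $t$ given $o_1, \dots, o_t$:

$$\delta_{t+1}(s) = \max_{s' \in S} \delta_t(s')\, P_{s'}(s \mid o_{t+1})$$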

38

Maximum Entropy

• Problem 2:

– HMMs are trained to maximize the likelihood of the training set. Generative, joint distribution.

– But they solve conditional problems (observations are given).

• MEMM Solution: Maximum Entropy (duh).

• Idea: Use the least biased hypothesis, subject to what is known.

• Constraints: The expectation $E_i$ of feature $i$ in the learned distribution should be the same as its mean $F_i$ on the training set. For every previous state $s'$ and feature $i$, sum over the $n_{s'}$ training positions $k$ whose previous state is $s'$:

$$F_i = \frac{1}{n_{s'}} \sum_{k=1}^{n_{s'}} f_i(o_k, s_k), \qquad E_i = \frac{1}{n_{s'}} \sum_{k=1}^{n_{s'}} \sum_{s \in S} P_{s'}(s \mid o_k)\, f_i(o_k, s)$$

39

More on MEMMs

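• It turns out that the maximum entropy distribution is unique and has an exponential form:

$$P_{s'}(s \mid o) = \frac{1}{Z(o, s')} \exp\Big(\sum_i \lambda_i f_i(o, s)\Big)$$

• We can estimate $\lambda_i$ with Generalized Iterative Scaling.

– Adding a feature $f_x(o, s) = C - \sum_i f_i(o, s)$, so that the features of every $(o, s)$ sum to the constant $C$

– Compute $F_i$.

– Set $\lambda_i^{(0)} = 0$.

– Compute current expectation $E_i^{(j)}$ of feature $i$ from model.

– $\lambda_i^{(j+1)} = \lambda_i^{(j)} + \frac{1}{C} \log\Big(\frac{F_i}{E_i^{(j)}}\Big)$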

40
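The GIS steps listed above can be sketched in Python for a single transition function $P_{s'}(s \mid o)$. The sketch makes several simplifying assumptions, which it states here and in the code. Features are binary, and every feature (including the correction feature) fires at least once in the training data, so $F_i > 0$. $C$ is taken as the largest feature total seen on the training observations. The toy data, the two states and both features are hypothetical.

```python
import math


def gis(data, states, features, iters=1000):
    """Generalized Iterative Scaling for one MEMM transition function
    P_{s'}(s | o) = exp(sum_i lambda_i f_i(o, s)) / Z(o, s').

    data: (o, s) pairs observed right after one fixed previous state s'.
    Assumes binary features that each fire at least once in data (F_i > 0)."""
    obs = [o for o, _ in data]
    # C: largest total feature count over the training observations and all states.
    C = max(sum(f(o, s) for f in features) for o in obs for s in states)
    # Correction feature f_x = C - sum_i f_i, so every (o, s) sums to exactly C.
    feats = features + [lambda o, s: C - sum(f(o, s) for f in features)]
    m = len(data)
    F = [sum(f(o, s) for o, s in data) / m for f in feats]     # empirical means F_i
    lam = [0.0] * len(feats)                                    # lambda_i^(0) = 0

    def prob(o):
        scores = {s: math.exp(sum(l * f(o, s) for l, f in zip(lam, feats))) for s in states}
        z = sum(scores.values())                                # Z(o, s')
        return {s: v / z for s, v in scores.items()}

    for _ in range(iters):
        dists = [prob(o) for o in obs]
        E = [sum(d[s] * f(o, s) for o, d in zip(obs, dists) for s in states) / m
             for f in feats]                                    # model expectations E_i^(j)
        lam = [l + math.log(Fi / Ei) / C for l, Fi, Ei in zip(lam, F, E)]
    return prob


def cap(o):
    return o[:1].isupper()


states = ["Company", "Other"]
features = [lambda o, s: int(cap(o) and s == "Company"),       # hypothetical f_0
            lambda o, s: int(not cap(o) and s == "Other")]      # hypothetical f_1
data = [("Apple", "Company"), ("Apple", "Company"), ("Apple", "Other"),
        ("pie", "Other"), ("pie", "Company")]
p = gis(data, states, features)
```

On this toy data, the fitted model should approach the empirical conditionals: $P(\text{Company} \mid \text{Apple}) \approx 2/3$ and $P(\text{Other} \mid \text{pie}) \approx 1/2$.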

Extensions

• We can train even when the labels are not known using EM.

– E step: determine the most probable state sequence and compute $F_i$.

– M step: GIS.

• We can reduce the number of parameters to estimate by moving the previous state into the features: “Subject-is-female”, “Previous-was-question”, “Is-verb-and-no-noun-yet”.

• We can even add features regarding actions in a reinforcement learning setting: “Slow-vehicle-encountered-and-steer-left”.

• We can mitigate data sparseness problems by simplifying the model:

$$P(s \mid s', o) = P(s \mid s')\, \frac{1}{Z(o, s')} \exp\Big(\sum_i \lambda_i f_i(o, s)\Big)$$

41

Overview

• Models

– HMM: Hidden Markov Model

– maximum entropy Markov model

– CRFs: Conditional Random Fields

• Tasks

– Chinese word segmentation

– part-of-speech tagging

– named entity recognition

42

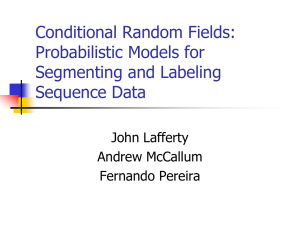

CRFs as Sequence Labeling Tool

• Conditional random fields (CRFs) are a statistical sequence modeling framework first introduced to the field of natural language processing (NLP) to overcome the label bias problem.

• John Lafferty, A. McCallum and F. Pereira. 2001. Conditional Random Fields: Probabilistic Models for Segmenting and Labeling Sequence Data. In Proceedings of the Eighteenth International Conference on Machine Learning, 282-289. June 28-July 01, 2001

43

Sequence Segmenting and Labeling

• Goal: mark up sequences with content tags

• Application in computational biology

– DNA and protein sequence alignment

– Sequence homolog searching in databases

– Protein secondary structure prediction

– RNA secondary structure analysis

• Application in computational linguistics & computer science

– Text and speech processing, including topic segmentation, part-of-speech (POS) tagging

– Information extraction

– Syntactic disambiguation

44

Example: Protein secondary structure prediction

Conf: 977621015677468999723631357600330223342057899861488356412238

Pred: CCCCCCCCCCCCCEEEEEEECCCCCCCCCCCCCHHHHHHHHHHHHHHHCCCCEEEEHHCC

AA: EKKSINECDLKGKKVLIRVDFNVPVKNGKITNDYRIRSALPTLKKVLTEGGSCVLMSHLG

10 20 30 40 50 60

Conf: 855764222454123478985100010478999999874033445740023666631258

Pred: CCCCCCCCCCCCCCCCCCCCCCCCCCHHHHHHHHHHHHHCCCCCCCCCCCCHHHHHHCCC

AA: RPKGIPMAQAGKIRSTGGVPGFQQKATLKPVAKRLSELLLRPVTFAPDCLNAADVVSKMS

70 80 90 100 110 120

Conf: 874688611002343044310017899999875053355212244334552001322452

Pred: CCCEEEECCCHHHHHHCCCCCHHHHHHHHHHHHHCCEEEECCCCCCCCCCCCCCCCHHHH

AA: PGDVVLLENVRFYKEEGSKKAKDREAMAKILASYGDVYISDAFGTAHRDSATMTGIPKIL

130 140 150 160 170 180

45

HMMs as Generative Models

• Hidden Markov models (HMMs)

• Assign a joint probability to paired observation and label sequences

– The parameters are typically trained to maximize the joint likelihood of the training examples

46

HMMs as Generative Models (cont’d)

• Difficulties and disadvantages

– Need to enumerate all possible observation sequences

– Not practical to represent multiple interacting features or long-range dependencies of the observations

– Very strict independence assumptions on the observations

47

Conditional Models

• Conditional probability P(label sequence y | observation sequence x) rather than joint probability P ( y, x )

– Specify the probability of possible label sequences given an observation sequence

• Allow arbitrary, non-independent features on the observation sequence X

• The probability of a transition between labels may depend on past and future observations

– Relax strong independence assumptions in generative models

48

Discriminative Models

Maximum Entropy Markov Models (MEMMs)

• Exponential model

• Given training set X with label sequence Y:

– Train a model θ that maximizes P(Y|X, θ)

– For a new data sequence x , the predicted label y maximizes P( y | x , θ)

– Notice the per-state normalization

49

MEMMs (cont’d)

• MEMMs have all the advantages of Conditional Models

• Per-state normalization: all the mass that arrives at a state must be distributed among the possible successor states

(“conservation of score mass”)

• Subject to Label Bias Problem

– Bias toward states with fewer outgoing transitions

50

Label Bias Problem

• Consider this MEMM:

• P(1 and 2 | ro) = P(2 | 1 and ro)P(1 | ro) = P(2 | 1 and o)P(1 | r)

P(1 and 2 | ri) = P(2 | 1 and ri)P(1 | ri) = P(2 | 1 and i)P(1 | r)

• Since P(2 | 1 and x) = 1 for all x, P(1 and 2 | ro) = P(1 and 2 | ri)

In the training data, label value 2 is the only label value observed after label value 1

Therefore P(2 | 1) = 1, so P(2 | 1 and x) = 1 for all x

• However, we expect P(1 and 2 | ri) to be greater than P(1 and 2 | ro).

• Per-state normalization does not allow the required expectation

• http://wing.comp.nus.edu.sg/pipermail/graphreading/2005-September/000032.html

• http://hi.baidu.com/%BB%F0%D1%BF_ayouh/blog/item/338f13510d38e8441038c250.html

51
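The effect of per-state normalization can be reproduced numerically. The MEMM figure is missing, so the topology in this Python sketch is a hypothetical stand-in. Start state 0 goes to state 1 or state 4 on “r”. State 1 can only be followed by state 2, and state 4 only by state 5. The weights in `W` are made up. The locally normalized MEMM assigns the same probability to the path 1 → 2 whether the second observation is “i” or “o”. The contrast function normalizes once over whole paths (CRF-style, named plainly as such), and its result does depend on the observation.

```python
import math

# Hypothetical topology: state 0 is the start; 1 -> 2 and 4 -> 5 are forced moves.
SUCCESSORS = {0: [1, 4], 1: [2], 4: [5]}
PATHS = [(1, 2), (4, 5)]

# Hypothetical weights w[(prev, next, observation)]; missing entries score 0.
W = {(1, 2, "i"): 5.0, (1, 2, "o"): -5.0}


def memm_step(prev, obs, w):
    """Per-state normalized P(next | prev, obs): a softmax over prev's successors only."""
    scores = {s: math.exp(w.get((prev, s, obs), 0.0)) for s in SUCCESSORS[prev]}
    z = sum(scores.values())
    return {s: v / z for s, v in scores.items()}


def memm_path_prob(path, obs, w):
    """Product of locally normalized transition probabilities along the path."""
    p, prev = 1.0, 0
    for state, o in zip(path, obs):
        p *= memm_step(prev, o, w)[state]
        prev = state
    return p


def global_path_prob(path, obs, w):
    """CRF-style contrast: a single normalization over whole paths for this input."""
    def score(pth):
        prev, total = 0, 0.0
        for state, o in zip(pth, obs):
            total += w.get((prev, state, o), 0.0)
            prev = state
        return total
    z = sum(math.exp(score(pth)) for pth in PATHS)
    return math.exp(score(path)) / z
```

Here `memm_path_prob((1, 2), "ri", W)` and `memm_path_prob((1, 2), "ro", W)` are both 0.5, however large the weights on “i” and “o” are. The globally normalized score prefers 1 → 2 for “ri” and not for “ro”.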

Solve the Label Bias Problem

• Change the state-transition structure of the model

– Not always practical to change the set of states

• Start with a fully-connected model and let the training procedure figure out a good structure

– Precludes the use of prior structural knowledge, which is very valuable (e.g. in information extraction)

52

Random Field

53

Conditional Random Fields (CRFs)

• CRFs have all the advantages of MEMMs without the label bias problem

– MEMM uses per-state exponential model for the conditional probabilities of next states given the current state

– CRF has a single exponential model for the joint probability of the entire sequence of labels given the observation sequence

• Undirected acyclic graph

• Allow some transitions to “vote” more strongly than others depending on the corresponding observations

54

Definition of CRFs

X is a random variable over data sequences to be labeled

Y is a random variable over corresponding label sequences

55

Example of CRFs

56

Graphical comparison among HMMs, MEMMs and CRFs

(Figure: graphical structures of an HMM, an MEMM and a CRF.)

57

Conditional Distribution

If the graph $G = (V, E)$ of $Y$ is a tree, then by the fundamental theorem of random fields the conditional distribution over the label sequence $Y = y$, given $X = x$, is:

$$p_\theta(y \mid x) \propto \exp\Big(\sum_{e \in E, k} \lambda_k f_k(e, y|_e, x) + \sum_{v \in V, k} \mu_k g_k(v, y|_v, x)\Big)$$

• $x$ is a data sequence; $y$ is a label sequence

• $v$ is a vertex from vertex set $V$ = set of label random variables

• $e$ is an edge from edge set $E$ over $V$

• $f_k$ and $g_k$ are given and fixed: $f_k$ is a Boolean edge feature, $g_k$ is a Boolean vertex feature, and $k$ indexes the features

• $\theta = (\lambda_1, \lambda_2, \dots, \lambda_n; \mu_1, \mu_2, \dots, \mu_n)$; $\lambda_k$ and $\mu_k$ are parameters to be estimated

• $y|_e$ is the set of components of $y$ defined by edge $e$; $y|_v$ is the set of components of $y$ defined by vertex $v$

58

Conditional Distribution (cont’d)

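• CRFs use the observation-dependent normalization $Z(x)$ for the conditional distributions:

$$p_\theta(y \mid x) = \frac{1}{Z(x)} \exp\Big(\sum_{e \in E, k} \lambda_k f_k(e, y|_e, x) + \sum_{v \in V, k} \mu_k g_k(v, y|_v, x)\Big)$$

$Z(x)$ is a normalization over the data sequence $x$: it sums the exponential term over all label sequences $y$ for that $x$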

59
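For a linear-chain CRF (the chain is a special case of the tree above), $Z(x)$ can be computed with a forward recursion instead of enumerating label sequences. This Python sketch assumes one indicator vertex feature per (character, label) pair and one edge feature per label bigram. The label set `LABELS`, the weights `lam` and `mu` and the input string are all hypothetical. The sketch checks that the forward-style $\log Z(x)$ matches brute-force enumeration.

```python
import itertools
import math

LABELS = ["B", "E", "S"]          # hypothetical label set for the sketch


def vertex_score(x, t, y, mu):
    """sum_k mu_k g_k(v, y|_v, x): one indicator feature per (character, label) pair."""
    return mu.get((x[t], y), 0.0)


def edge_score(y_prev, y, lam):
    """sum_k lambda_k f_k(e, y|_e, x): one indicator feature per label bigram."""
    return lam.get((y_prev, y), 0.0)


def score(x, y, lam, mu):
    """Unnormalized log score of label sequence y for data sequence x."""
    s = sum(vertex_score(x, t, y[t], mu) for t in range(len(x)))
    return s + sum(edge_score(y[t - 1], y[t], lam) for t in range(1, len(x)))


def logsumexp(values):
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def log_z_brute(x, lam, mu):
    """log Z(x) by enumerating all len(LABELS) ** len(x) label sequences."""
    return logsumexp([score(x, y, lam, mu) for y in itertools.product(LABELS, repeat=len(x))])


def log_z_forward(x, lam, mu):
    """The same log Z(x) via a forward recursion, O(len(x) * L^2)."""
    a = {y: vertex_score(x, 0, y, mu) for y in LABELS}
    for t in range(1, len(x)):
        a = {y: logsumexp([a[yp] + edge_score(yp, y, lam) for yp in LABELS])
             + vertex_score(x, t, y, mu) for y in LABELS}
    return logsumexp(list(a.values()))


def prob(x, y, lam, mu):
    """p(y | x) = exp(score(x, y)) / Z(x)."""
    return math.exp(score(x, y, lam, mu) - log_z_forward(x, lam, mu))


lam = {("B", "E"): 2.0, ("E", "B"): 1.0, ("B", "B"): -3.0}     # hypothetical weights
mu = {("苏", "B"): 1.5, ("兰", "E"): 1.5}
x = "苏格兰"
```

Because $Z(x)$ depends on the whole input, the normalization is shared by all label sequences for that $x$, rather than applied state by state as in an MEMM.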

Decoding: To label an unseen sequence

We compute the most likely labeling $Y^*$ as follows, by dynamic programming (for efficient computation):

$$Y^* = \arg\max_Y P(Y \mid X)$$

60

Complexity Estimation

• The time complexity of one iteration of parameter estimation with the L-BFGS algorithm is

$$O(L^2 N M F)$$

• where L and N are, respectively, the numbers of labels and sequences (sentences),

• M is the average length of sequences, and

• F is the average number of activated features of each labelled clique.

61

CRF++: a CRFs Package

• CRF++ is a simple, customizable, and open source implementation of Conditional Random Fields (CRFs) for segmenting/labeling sequential data.

• http://crfpp.sourceforge.net/

• Requirements

– C++ compiler (gcc 3.0 or higher)

• How to make

– % ./configure

– % make

– % su

– # make install

62

CRF++

• Feature template representation and input file format

63

CRF++

• training

• % crf_learn -f 3 -c 1.5 template_file train_file model_file

• test

• % crf_test -m model_file test_files

64

Summary

• Discriminative models with per-state normalization (such as MEMMs) are prone to the label bias problem

• CRFs provide the benefits of discriminative models

• CRFs solve the label bias problem well, and demonstrate good performance

65

Overview

• Models

– HMM: Hidden Markov Model

– maximum entropy Markov model

– CRFs: Conditional Random Fields

• Tasks

– Chinese word segmentation

– part-of-speech tagging

– named entity recognition

66

What is Chinese Word Segmentation

• A special case of tokenization in natural language processing (NLP) for many languages that have no explicit word delimiters such as spaces.

• Original:

– 她来自苏格兰

– She comes from SU GE LAN

Meaningless!

• Segmented:

– 她 / 来 / 自 / 苏格兰

– She comes from Scotland.

Meaningful!

67

Learning from a Lexicon:

maximal matching algorithm for word segmentation

• Input

– A pre-defined lexicon

– An unsegmented sequence

• The algorithm:

① Start from the first character and find the longest word in the lexicon that matches at this position.

② Set the character after the matched word as the new start point.

③ If the end of the sequence is reached, the algorithm ends.

④ Otherwise, go to ①.

68
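The four steps can be sketched as forward maximal matching in Python. The slide does not say what happens when no lexicon word starts at the current position. This sketch assumes the common fallback of emitting a single character, which is flagged in a comment. The toy lexicon is hypothetical.

```python
def max_match(text, lexicon):
    """Forward maximal matching: greedily take the longest lexicon word at each position."""
    max_len = max(len(w) for w in lexicon)
    words, start = [], 0
    while start < len(text):                                   # step 3: stop at the end
        # step 1: try the longest candidate first, shrinking one character at a time
        for end in range(min(len(text), start + max_len), start, -1):
            # assumption: fall back to a single character if nothing in the lexicon matches
            if text[start:end] in lexicon or end == start + 1:
                words.append(text[start:end])
                start = end                                    # step 2: restart after the word
                break                                          # step 4: go back to step 1
    return words
```

With the hypothetical lexicon `{"她", "来", "自", "来自", "苏格兰"}`, the string 她来自苏格兰 is split into 她 / 来自 / 苏格兰, because 来自 is preferred over the shorter 来.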

Learning from a segmented corpus:

Word segmentation as labeling

• 自然科学的研究不断深入

• natural science / of / research / uninterruptedly / deepen

• 自然科学 / 的 / 研究 / 不断 / 深入

• BMME S BE BE BE

• B: beginning, M: Middle, E: End, of a word

• S: single-character word

• Using CRFs as the learning model

69
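Converting between a segmented sentence and its character tags is mechanical. Here is a minimal Python sketch of the 4-tag (B, M, E, S) scheme used in the example above. The function names are hypothetical.

```python
def words_to_tags(words):
    """4-tag (B, M, E, S) encoding of a segmented sentence, one tag per character."""
    tags = []
    for w in words:
        tags.extend(["S"] if len(w) == 1 else ["B"] + ["M"] * (len(w) - 2) + ["E"])
    return tags


def tags_to_words(chars, tags):
    """Inverse mapping: a word starts at B or S and ends at E or S."""
    words, cur = [], ""
    for c, t in zip(chars, tags):
        if t in ("B", "S") and cur:   # tolerate an unclosed word from ill-formed tag output
            words.append(cur)
            cur = ""
        cur += c
        if t in ("E", "S"):
            words.append(cur)
            cur = ""
    if cur:
        words.append(cur)
    return words
```

For 自然科学 / 的 / 研究 / 不断 / 深入, `words_to_tags` produces B M M E S B E B E B E, which matches the tag sequence above. Decoding the CRF's per-character output with `tags_to_words` recovers the words.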

CWS as Character-based Tagging:

From the Beginning to the Latest

• Nianwen Xue, 2003

Chinese Word Segmentation as Character Tagging, CLCLP, Vol. 8(1), 2003

• Xiaoqiang Luo, 2003

A Maximum Entropy Chinese Character-based Parser, EMNLP-2003

• Hwee Tou Ng and Jin Kiat Low, 2004

Chinese Part-of-Speech Tagging: One-at-a-Time or All-at-Once? Word-based or Character-Based? EMNLP-2004

• Jin Kiat Low, Hwee Tou Ng, Wenyuan Guo, 2005

A Maximum Entropy Approach to Chinese Word Segmentation, The 4th SIGHAN Workshop on CLP, 2005

• Huihsin Tseng, Pichuan Chang, Galen Andrew, Daniel Jurafsky, Christopher Manning, 2005

A Conditional Random Field Word Segmenter for Sighan Bakeoff 2005, The 4th SIGHAN Workshop on CLP, 2005

70

Label Set

• 2-tag set {B, E}: words of length 1, 2, 3, … are tagged B, BE, BEE, …; mostly for CRF

• 4-tag set {B, M, E, S}: S, BE, BME, BMME, …; Xue/Low/MaxEnt

• 6-tag set {B, M, E, S, B2, B3}: S, BE, BB2E, BB2B3E, BB2B3ME, …; Zhao/CRF

More labels, better performance for CWS …

71
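The 6-tag encodings listed above follow a simple rule: a word's first up to three characters get B, B2 and B3, the last character gets E, and any characters in between get M. This Python sketch (with the hypothetical name `six_tags`) is inferred from the listed patterns, not taken from Zhao's code.

```python
def six_tags(length):
    """Tags for one word of the given length under the 6-tag set {B, B2, B3, M, E, S}."""
    if length == 1:
        return ["S"]
    head = ["B", "B2", "B3"][: length - 1]         # up to three word-initial tags
    return head + ["M"] * (length - 1 - len(head)) + ["E"]
```

It reproduces S, BE, BB2E, BB2B3E and BB2B3ME for lengths 1 to 5. Longer words add further M tags, e.g. BB2B3MME for length 6.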

Feature Template Set

$C_{-1}$, $C_0$, $C_1$, $C_{-1}C_0$, $C_0C_1$, $C_{-1}C_1$

where $C_{-1}$, $C_0$, $C_1$ are the previous, current and next character

72
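The six templates amount to extracting a handful of strings per character position. In this Python sketch, the feature-string format and the boundary symbols `<s>` and `</s>` are hypothetical choices. In CRF++, the same templates would be written as unigram template lines built from the `%x[row,col]` macro, such as `U00:%x[-1,0]` or `U05:%x[-1,0]/%x[1,0]`.

```python
def char_features(chars, i):
    """Feature strings for position i from the templates C-1, C0, C1, C-1C0, C0C1, C-1C1.
    Positions outside the sentence use hypothetical boundary symbols <s> and </s>."""
    def c(k):
        j = i + k
        if j < 0:
            return "<s>"
        if j >= len(chars):
            return "</s>"
        return chars[j]
    return [f"C-1={c(-1)}", f"C0={c(0)}", f"C1={c(1)}",
            f"C-1C0={c(-1)}{c(0)}", f"C0C1={c(0)}{c(1)}", f"C-1C1={c(-1)}{c(1)}"]
```

For the middle character of 她来自, this yields C-1=她, C0=来, C1=自, C-1C0=她来, C0C1=来自 and C-1C1=她自. The learner then pairs each of these features with the candidate tag.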

Overview

• Models

– HMM: Hidden Markov Model

– maximum entropy Markov model

– CRFs: Conditional Random Fields

• Tasks

– Chinese word segmentation

– part-of-speech tagging

– named entity recognition

73

Parts of Speech

• Generally speaking, the “grammatical type” of a word:

– Verb, Noun, Adjective, Adverb, Article, …

• We can also include inflection:

– Verbs: Tense, number, …

– Nouns: Number, proper/common, …

– Adjectives: comparative, superlative, …

– …

• Most commonly used POS sets for English have 50-80 different tags

74

BNC Parts of Speech

• Nouns:

NN0 Common noun, neutral for number (e.g. aircraft)

NN1 Singular common noun (e.g. pencil, goose, time)

NN2 Plural common noun (e.g. pencils, geese, times)

NP0 Proper noun (e.g. London, Michael, Mars, IBM)

• Pronouns:

PNI Indefinite pronoun (e.g. none, everything, one)

PNP Personal pronoun (e.g. I, you, them, ours)

PNQ Wh-pronoun (e.g. who, whoever, whom)

PNX Reflexive pronoun (e.g. myself, itself, ourselves)

75

• Verbs:

VVB finite base form of lexical verbs (e.g. forget, send, live, return)

VVD past tense form of lexical verbs (e.g. forgot, sent, lived)

VVG -ing form of lexical verbs (e.g. forgetting, sending, living)

VVI infinitive form of lexical verbs (e.g. forget, send, live, return)

VVN past participle form of lexical verbs (e.g. forgotten, sent, lived)

VVZ -s form of lexical verbs (e.g. forgets, sends, lives, returns)

VBB present tense of BE, except for is

…and so on: VBD VBG VBI VBN VBZ

VDB finite base form of DO: do

…and so on: VDD VDG VDI VDN VDZ

VHB finite base form of HAVE: have, 've

…and so on: VHD VHG VHI VHN VHZ

VM0 Modal auxiliary verb (e.g. will, would, can, could, 'll, 'd)

76

• Articles

AT0 Article (e.g. the, a, an, no)

DPS Possessive determiner (e.g. your, their, his)

DT0 General determiner (this, that)

DTQ Wh-determiner (e.g. which, what, whose, whichever)

EX0 Existential there, i.e. occurring in “there is …” or “there are …”

• Adjectives

AJ0 Adjective (general or positive) (e.g. good, old, beautiful)

AJC Comparative adjective (e.g. better, older)

AJS Superlative adjective (e.g. best, oldest)

• Adverbs

AV0 General adverb (e.g. often, well, longer (adv.), furthest)

AVP Adverb particle (e.g. up, off, out)

AVQ Wh-adverb (e.g. when, where, how, why, wherever)

77

• Miscellaneous:

CJC Coordinating conjunction (e.g. and, or, but )

CJS Subordinating conjunction (e.g. although, when )

CJT The subordinating conjunction that

CRD Cardinal number (e.g. one, 3, fifty-five, 3609 )

ORD Ordinal numeral (e.g. first, sixth, 77th, last )

ITJ Interjection or other isolate (e.g. oh, yes, mhm, wow )

POS The possessive or genitive marker 's or '

TO0 Infinitive marker to

PUL Punctuation: left bracket - i.e. ( or [

PUN Punctuation: general separating mark - i.e. . , ! , : ; - or ?

PUQ Punctuation: quotation mark - i.e. ' or "

PUR Punctuation: right bracket - i.e. ) or ]

XX0 The negative particle not or n't

ZZ0 Alphabetical symbols (e.g. A, a, B, b, c, d )

78

Task: Part-Of-Speech Tagging

• Goal: Assign the correct part-of-speech to each word (and punctuation) in a text.

• Example:

Two old men bet on the game .

CRD AJ0 NN2 VVD PRP AT0 NN1 PUN

• Learn a local model of POS dependencies, usually from pre-tagged data

• No parsing

79

Hidden Markov Models

• Assume: the POS (state) sequence is generated as a time-invariant random process, and each POS randomly generates a word (output symbol)

(Figure: example state diagram over the tags AT0, AJ0, NN1 and NN2 with transition probabilities, emitting words such as “a”, “the”, “cat”, “bet”, “cats” and “men”.)

80

Definition of HMM for Tagging

• Set of states – all possible tags

• Output alphabet – all words in the language

• State/tag transition probabilities

• Initial state probabilities: the probability of beginning a sentence with a tag t ($t_0 \to t$)

• Output probabilities – producing word w at state t

• Output sequence – observed word sequence

• State sequence – underlying tag sequence

81

HMMs For Tagging

• First-order (bigram) Markov assumptions:

– Limited Horizon: Tag depends only on previous tag

$$P(t_{i+1} = t^k \mid t_1 = t^{j_1}, \dots, t_i = t^{j_i}) = P(t_{i+1} = t^k \mid t_i = t^{j_i})$$

– Time invariance: No change over time

$$P(t_{i+1} = t^k \mid t_i = t^j) = P(t_2 = t^k \mid t_1 = t^j) = P(t^j \to t^k)$$

• Output probabilities:

– Probability of getting word $w^k$ for tag $t^j$: $P(w^k \mid t^j)$

– Assumption: Not dependent on other tags or words!

82

Combining Probabilities

• Probability of a tag sequence:

$$P(t_1 t_2 \dots t_n) = P(t_1)\, P(t_1 \to t_2)\, P(t_2 \to t_3) \cdots P(t_{n-1} \to t_n)$$

Assume $t_0$ – starting tag:

$$= P(t_0 \to t_1)\, P(t_1 \to t_2)\, P(t_2 \to t_3) \cdots P(t_{n-1} \to t_n)$$

• Prob. of word sequence and tag sequence:

$$P(W, T) = \prod_i P(t_{i-1} \to t_i)\, P(w_i \mid t_i)$$

83

Training from Labeled Corpus

• Labeled training = each word has a POS tag

• Thus:

$$P_{MLE}(t^j) = C(t^j) / N$$

$$P_{MLE}(t^j \to t^k) = C(t^j, t^k) / C(t^j)$$

$$P_{MLE}(w^k \mid t^j) = C(t^j : w^k) / C(t^j)$$

• Smoothing can be applied.

84
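The three relative-frequency formulas can be computed with a few counters. This is a minimal, unsmoothed Python sketch. It assumes one start pseudo-tag `<START>` (the slides' $t_0$) per sentence, and the two-sentence corpus is a hypothetical toy example. As in the slide's formula, transition counts are divided by $C(t^j)$, so a tag that sometimes ends a sentence gets an outgoing row summing to less than 1.

```python
from collections import Counter


def train_hmm(tagged_sentences, start="<START>"):
    """Unsmoothed MLE estimates for a bigram HMM tagger.

    p_trans[(t_j, t_k)] = C(t_j, t_k) / C(t_j)    # m(t_j -> t_k)
    p_emit[(t_j, w_k)]  = C(t_j : w_k) / C(t_j)   # m(w_k | t_j)"""
    tag_count, trans, emit = Counter(), Counter(), Counter()
    for sentence in tagged_sentences:
        prev = start
        tag_count[start] += 1                 # one start pseudo-tag per sentence
        for word, tag in sentence:
            trans[(prev, tag)] += 1
            emit[(tag, word)] += 1
            tag_count[tag] += 1
            prev = tag
    p_trans = {(tj, tk): c / tag_count[tj] for (tj, tk), c in trans.items()}
    p_emit = {(tj, w): c / tag_count[tj] for (tj, w), c in emit.items()}
    return p_trans, p_emit


corpus = [[("Two", "CRD"), ("old", "AJ0"), ("men", "NN2"), ("bet", "VVD")],
          [("the", "AT0"), ("men", "NN2"), ("bet", "VVD")]]
p_trans, p_emit = train_hmm(corpus)
```

On this toy corpus, half of the sentences start with CRD, so $m(t_0 \to \text{CRD}) = 0.5$. NN2 is always followed by VVD and always emits “men”.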

Viterbi Tagging

• Most probable tag sequence given text:

$$\begin{aligned}
T^* &= \arg\max_T P_m(T \mid W) \\
&= \arg\max_T P_m(W \mid T)\, P_m(T) / P_m(W) && \text{(Bayes' theorem)} \\
&= \arg\max_T P_m(W \mid T)\, P_m(T) && (P_m(W) \text{ is constant for all } T) \\
&= \arg\max_T \prod_i \big[m(t_{i-1} \to t_i)\, m(w_i \mid t_i)\big] \\
&= \arg\max_T \sum_i \big[\log m(t_{i-1} \to t_i) + \log m(w_i \mid t_i)\big]
\end{aligned}$$

• Exponential number of possible tag sequences – use dynamic programming for efficient computation

85

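Worked example (values are $-\log m$):

• Transitions $-\log m(t_{prev} \to t)$:

– from $t_0$: to $t_1$ 2.3, to $t_2$ 1.7, to $t_3$ 1

– from $t_1$: to $t_1$ 1.7, to $t_2$ 1, to $t_3$ 2.3

– from $t_2$: to $t_1$ 0.3, to $t_2$ 3.3, to $t_3$ 3.3

– from $t_3$: to $t_1$ 1.3, to $t_2$ 1.3, to $t_3$ 2.3

• Emissions $-\log m(w \mid t)$:

– $t_1$: $w_1$ 0.7, $w_2$ 2.3, $w_3$ 2.3

– $t_2$: $w_1$ 1.7, $w_2$ 0.7, $w_3$ 3.3

– $t_3$: $w_1$ 1.7, $w_2$ 1.7, $w_3$ 1.3

• Trellis of best log scores $D(i, t)$:

– $w_1$: $t_1$ -3, $t_2$ -3.4, $t_3$ -2.7

– $w_2$: $t_1$ -6, $t_2$ -4.7, $t_3$ -6.7

– $w_3$: $t_1$ -7.3, $t_2$ -10.3, $t_3$ -9.3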

86

Viterbi Algorithm

1. $D(0, \text{START}) = 0$

2. for each tag $t \ne \text{START}$ do: $D(0, t) = -\infty$

3. for $i \leftarrow 1$ to $N$ do: for each tag $t^j$ do:

   $D(i, t^j) \leftarrow \max_k \big[D(i-1, t^k) + lm(t^k \to t^j) + lm(w_i \mid t^j)\big]$

   Record $best(i, j) = k$ which yielded the max

4. $\log P(W, T) = \max_j D(N, t^j)$

5. Reconstruct path from $\max_j$ backwards

where $lm(\cdot) = \log m(\cdot)$ and $D(i, t^j)$ is the max joint (log) probability of state and word sequences till position $i$, ending at $t^j$.

Complexity: $O(N_t^2 N)$, where $N_t$ is the number of tags and $N$ the sentence length

87
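The log-space Viterbi algorithm can be run directly on the worked example's numbers. This Python sketch stores $-\log m$ as positive costs, so $lm = -\text{cost}$, and it uses `t0` as the START tag. The table values are read off the worked example. The dictionary layout and the function name are hypothetical choices.

```python
# -log m(.) values from the worked example; t0 is the START tag.
NEG_LOG_TRANS = {
    "t0": {"t1": 2.3, "t2": 1.7, "t3": 1.0},
    "t1": {"t1": 1.7, "t2": 1.0, "t3": 2.3},
    "t2": {"t1": 0.3, "t2": 3.3, "t3": 3.3},
    "t3": {"t1": 1.3, "t2": 1.3, "t3": 2.3},
}
NEG_LOG_EMIT = {
    "t1": {"w1": 0.7, "w2": 2.3, "w3": 2.3},
    "t2": {"w1": 1.7, "w2": 0.7, "w3": 3.3},
    "t3": {"w1": 1.7, "w2": 1.7, "w3": 1.3},
}


def viterbi_log(words, start="t0"):
    """D(i, t_j) = max_k [D(i-1, t_k) + lm(t_k -> t_j) + lm(w_i | t_j)], lm = -cost."""
    tags = list(NEG_LOG_EMIT)
    D = {start: 0.0}              # D(0, START) = 0; other tags at position 0 are -inf (omitted)
    best = []                     # best[i][t_j] = t_k that yielded the max
    for w in words:
        new_D, back = {}, {}
        for tj in tags:
            tk = max(D, key=lambda k: D[k] - NEG_LOG_TRANS[k][tj])
            new_D[tj] = D[tk] - NEG_LOG_TRANS[tk][tj] - NEG_LOG_EMIT[tj][w]
            back[tj] = tk
        D = new_D
        best.append(back)
    last = max(D, key=D.get)      # log P(W, T) = max_j D(N, t_j)
    path = [last]
    for back in reversed(best[1:]):   # walk backwards; best[0] only points at START
        path.insert(0, back[path[0]])
    return path, D[last]
```

On $w_1 w_2 w_3$ this reproduces the trellis: the best final score is about -7.3, ending in $t_1$ reached from $t_2$. At $w_2$, $t_2$ can be reached from $t_1$ or $t_3$ with the same score (-4.0 before the emission cost), so the first tag is a tie.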

Overview

• Models

– HMM: Hidden Markov Model

– maximum entropy Markov model

– CRFs: Conditional Random Fields

• Tasks

– Chinese word segmentation

– part-of-speech tagging

– named entity recognition

88

Hidden Markov Model (HMM) based NERC System

• Named Entity Recognition and Classification System

• HMM:

– Statistical construct used to solve classification problems, having an inherent state sequence representation

– Transition probability: probability of traveling between two given states

– A set of output symbols (also known as observations) emitted by the process

– Emitted symbol depends on the probability distribution of the particular state

– Output of the HMM: sequence of output symbols

– Exact state sequence corresponding to a particular observation sequence is unknown (i.e., hidden)

– Simple language model (n-gram) for NE tagging

• Uses very little knowledge about the language, apart from simple context information

89

HMM based NERC System (Contd..)

HMM based NERC Architecture

90

HMM based NERC System (Contd..)

• Components of HMM based NERC system

– Language model

• Represented by the model parameters of HMM

• Model parameters estimated based on the labeled data during learning

– Possible class module

• Consists of a list of lexical units associated with the list of 17 tags

– NE disambiguation algorithm

• Input: List of lexical units with the associated list of possible tags

• Output: Output tag for each lexical unit using the encoded information from the language model

• Decides the best possible tag assignment for every word in a sentence according to the language model

• Viterbi algorithm (Viterbi, 1967)

– Unknown word handling

• Viterbi algorithm (Viterbi, 1967) assigns some tags to unknown words

• variable length NE suffixes

• Lexicon (Ekbal and Bandyopadhyay, 2008d)

91

HMM based NERC System (Contd..)

• Problem of NE tagging

Let W be a sequence of words: $W = w_1, w_2, \dots, w_n$

Let T be the corresponding NE tag sequence: $T = t_1, t_2, \dots, t_n$

Task: Find T which maximizes $P(T \mid W)$:

$$T' = \arg\max_T P(T \mid W)$$

92

HMM based NERC System (Contd..)

By Bayes Rule,

$$P(T \mid W) = P(W \mid T) \cdot P(T) / P(W)$$

$$T' = \arg\max_T P(W \mid T) \cdot P(T)$$

Models

– First order model (Bigram): The probability of a tag depends only on the previous tag

– Second order model (Trigram): The probability of a tag depends on the previous two tags

Transition Probability

$$P(T) = P(t_1) \cdot P(t_2 \mid t_1) \cdot P(t_3 \mid t_1 t_2) \cdots P(t_n \mid t_1 \dots t_{n-1})$$

Bigram:

$$P(T) = P(t_1 \mid \$) \cdot P(t_2 \mid t_1) \cdot P(t_3 \mid t_2) \cdots P(t_n \mid t_{n-1})$$

Trigram:

$$P(T) = P(t_1 \mid \$) \cdot P(t_2 \mid \$, t_1) \cdot P(t_3 \mid t_1, t_2) \cdots P(t_n \mid t_{n-2}, t_{n-1})$$

(the symbol \$ marks the start of the sentence)

93

HMM based NERC System (Contd..)

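• Estimation of unigram, bigram and trigram probabilities from the training corpus:

Unigram: $P(t_3) = \dfrac{\mathrm{freq}(t_3)}{N}$

Bigram: $P(t_3 \mid t_2) = \dfrac{\mathrm{freq}(t_2, t_3)}{\mathrm{freq}(t_2)}$

Trigram: $P(t_3 \mid t_1, t_2) = \dfrac{\mathrm{freq}(t_1, t_2, t_3)}{\mathrm{freq}(t_1, t_2)}$

• Emission Probability:

$$P(W \mid T) \approx P(w_1 \mid t_1) \cdot P(w_2 \mid t_2) \cdots P(w_n \mid t_n)$$

• Estimation:

$$P(w_i \mid t_i) = \frac{\mathrm{freq}(w_i, t_i)}{\mathrm{freq}(t_i)}$$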

94

HMM based NERC System (Contd..)

Context Dependency (Modification)

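– To make the Markov model more powerful, introduce a first-order context-dependent feature:

$$P(W \mid T) \approx P(w_1 \mid \$, t_1) \cdot P(w_2 \mid t_1, t_2) \cdots P(w_n \mid t_{n-1}, t_n)$$

$$P(w_i \mid t_{i-1}, t_i) = \frac{\mathrm{freq}(t_{i-1}, t_i, w_i)}{\mathrm{freq}(t_{i-1}, t_i)}$$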

95

2nd order Hidden Markov Model

96

2nd order Hidden Markov Model (Proposed)

97

HMM based NERC System (Contd..)

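• Why smoothing?

– Not all events are encountered in a limited training corpus

– There are too few instances of each bigram or trigram to estimate its probability reliably

– Setting a probability to zero has an undesired effect: a single unseen event makes the probability of the whole tag sequence zero

• Procedure

– Transition probability (linear interpolation):

$$P'(t_n \mid t_{n-2}, t_{n-1}) = \lambda_1 P(t_n) + \lambda_2 P(t_n \mid t_{n-1}) + \lambda_3 P(t_n \mid t_{n-2}, t_{n-1}), \qquad \lambda_1 + \lambda_2 + \lambda_3 = 1$$

– Emission probability:

$$P'(w_i \mid t_{i-1}, t_i) = \theta_1 P(w_i \mid t_i) + \theta_2 P(w_i \mid t_{i-1}, t_i), \qquad \theta_1 + \theta_2 = 1$$

– Calculation of λs and θs (Brants, 2000)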
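Brants (2000) estimates the λs by deleted interpolation: every trigram in the training data votes, with weight equal to its count, for whichever of the unigram, bigram or trigram estimates predicts it best once that occurrence is removed from the counts (hence the "- 1" terms, with x/0 defined as 0). The sketch below follows that procedure for the transition λs only; the tie-breaking rule, the function names and the dictionary layout are assumptions.

```python
from collections import Counter

def safe_div(a, b):
    return a / b if b else 0.0  # Brants (2000) defines x / 0 := 0

def deleted_interpolation(tag_sequences):
    """Estimate (lambda1, lambda2, lambda3) for unigram, bigram, trigram weights."""
    uni, bi, tri = Counter(), Counter(), Counter()
    for tags in tag_sequences:
        uni.update(tags)
        bi.update(zip(tags, tags[1:]))
        tri.update(zip(tags, tags[1:], tags[2:]))
    n = sum(uni.values())
    lam = [0.0, 0.0, 0.0]
    for (t1, t2, t3), f in tri.items():
        scores = [
            safe_div(uni[t3] - 1, n - 1),                # unigram estimate
            safe_div(bi[(t2, t3)] - 1, uni[t2] - 1),     # bigram estimate
            safe_div(f - 1, bi[(t1, t2)] - 1),           # trigram estimate
        ]
        # ties go to the higher-order estimate (an assumption of this sketch)
        best = max(range(3), key=lambda k: (scores[k], k))
        lam[best] += f
    total = sum(lam)
    if total == 0:
        raise ValueError("need at least one trigram in the training data")
    return tuple(x / total for x in lam)

def smoothed_transition(t1, t2, t3, p_uni, p_bi, p_tri, lambdas):
    """P'(t3 | t1, t2) = l1 P(t3) + l2 P(t3 | t2) + l3 P(t3 | t1, t2)."""
    l1, l2, l3 = lambdas
    return (l1 * p_uni.get(t3, 0.0)
            + l2 * p_bi.get((t2, t3), 0.0)
            + l3 * p_tri.get((t1, t2, t3), 0.0))
```

Because the λs sum to one and each component is a probability distribution, the interpolated estimate stays a valid probability while never being zero for a tag that occurs at least once.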

98

HMM based NERC System (Contd..)

Handling of unknown words

• The Viterbi algorithm (Viterbi, 1967) attempts to assign a tag to each unknown word. For such a word, $P(w_i \mid t_i)$ is replaced by $P(f_i \mid t_i)$, which is calculated from the features $f_i$ of the unknown word

• Suffixes: the probability distribution of a particular suffix over the NE tags is generated from all words in the training set that share that suffix

– Variable-length person name suffixes (e.g., -bAbu [-babu], -dA [-da], -di [-di], etc.)

– Variable-length location name suffixes (e.g., -lYAnd [-land], -pur [-pur], -liYA [-lia], etc.)

• Lexicon

– The lexicon contains root words and their basic POS information

– An unknown word that appears in the lexicon is most likely not an NE
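One possible reading of the suffix and lexicon heuristics is sketched below: P(f_i | t_i) is taken as the fraction of training words with tag t_i that end in the longest suffix of the unknown word seen in training. The maximum suffix length, the hard lexicon rule, the `"O"` non-NE tag and all names are assumptions, not the original system's exact procedure.

```python
from collections import Counter

def train_suffix_model(tagged_words, max_len=3):
    """Count (suffix, tag) pairs for suffixes of length 1..max_len in the training data."""
    suffix_tag, tag_count, seen = Counter(), Counter(), set()
    for word, tag in tagged_words:
        tag_count[tag] += 1
        for k in range(1, min(max_len, len(word)) + 1):
            suffix_tag[(word[-k:], tag)] += 1
            seen.add(word[-k:])
    return suffix_tag, tag_count, seen, max_len

def unknown_word_emission(word, tag, model, lexicon=frozenset(), non_ne_tag="O"):
    """Approximate P(w_i | t_i) for an unknown word by P(f_i | t_i), where the
    feature f_i is the longest suffix of the word that was seen in training."""
    suffix_tag, tag_count, seen, max_len = model
    if word in lexicon:
        # crude hard rule (assumption): a lexicon word is treated as a non-NE
        return 1.0 if tag == non_ne_tag else 0.0
    for k in range(min(max_len, len(word)), 0, -1):   # longest suffix first
        suffix = word[-k:]
        if suffix in seen:
            return suffix_tag[(suffix, tag)] / tag_count[tag] if tag_count[tag] else 0.0
    return 0.0  # no known suffix: no evidence for any tag
```

For example, after training on `rAmpur` and `nAgpur` tagged `LOC`, the unseen word `kAnpur` receives its location evidence through the shared suffix `-pur`.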

99

Supervised NERC Systems (ME, CRF and SVM)

• Limitations of HMM

– Use of only local features may not work well

– Simple HMMs do not work well when there is not enough data to estimate the model parameters

– Incorporating a diverse set of features in an HMM-based NE tagger is difficult and complicates smoothing

• Solution:

– Maximum Entropy (ME) model, Conditional Random Field (CRF) or Support Vector Machine (SVM)

– ME, CRF or SVM can make use of rich feature information

• ME model

– Very flexible method of statistical modeling

– A combination of several features can be easily incorporated

– Careful feature selection plays a crucial role

– Does not provide a method for automatic selection of useful features

– Features selected using heuristics

– Adding arbitrary features may result in overfitting

100

Supervised NERC Systems (ME, CRF and SVM)

• CRF

– CRF does not require careful feature selection to avoid overfitting

– Freedom to include arbitrary features

– Feature induction can automatically construct the most useful feature combinations

– Conjunction of features

– Infeasible to incorporate all possible conjunction features due to memory overflow

• SVM

– Handles different types of data well

– Predicts the classes from the labeled word examples only

– Predicts the NEs based on feature information of words collected in a predefined window size only

– Cannot handle NEs outside these tokens

– Achieves high generalization even with very high-dimensional training data

– Can handle non-linear feature spaces with kernel functions

101

Named Entity Features

•

Language Independent Features

– Can be applied for NERC in any language

•

Language Dependent Features

– Generated from language-specific resources such as gazetteers and POS taggers

– Indian languages are resource-constrained

– Creating gazetteers in a resource-constrained environment requires a priori knowledge of the language

– POS information depends on language-specific phenomena such as person, number, tense and gender

– The POS tagger (Ekbal and Bandyopadhyay, 2008d) uses several language-specific resources, such as a lexicon, an inflection list and an NERC system, to improve its performance

• Language-dependent features improve system performance

102

Language Independent Features

– Context word: preceding and succeeding words

– Word suffix

• Not necessarily linguistic suffixes

• Fixed-length character strings stripped from the endings of words

• Variable-length suffix: binary-valued feature

– Word prefix

• Fixed-length character strings stripped from the beginnings of words

– Named entity information: dynamic NE tag(s) of the previous word(s)

– First word (binary-valued feature): whether the current token is the first word of the sentence
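A minimal sketch of extracting these language-independent features for one token is shown below. The one-word context window and the affix length of up to three characters follow the ME best feature set reported later; the dictionary keys, the `<PAD>` filler and the function name are assumptions.

```python
def word_features(words, i, prev_tags, max_affix=3):
    """Language-independent features for token i of a sentence (a sketch).

    prev_tags holds the NE tags already assigned to tokens 0..i-1 (dynamic feature).
    """
    w = words[i]
    feats = {"w": w}
    for off in (-1, 1):  # preceding and following word
        j = i + off
        feats[f"w[{off:+d}]"] = words[j] if 0 <= j < len(words) else "<PAD>"
    for k in range(1, min(max_affix, len(w)) + 1):
        feats[f"pre{k}"] = w[:k]    # fixed-length prefix
        feats[f"suf{k}"] = w[-k:]   # fixed-length suffix
    feats["first_word"] = (i == 0)
    feats["prev_tag"] = prev_tags[i - 1] if i > 0 else "$"
    return feats
```

The previous NE tag is "dynamic" because it is only known after the tagger has labeled the preceding word, so features must be extracted left to right during decoding.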

103

Language Independent Features (Contd..)

• Length (binary-valued): whether the length of the current word is less than three (shorter words are rarely NEs)

• Position (binary-valued): position of the word in the sentence

• Infrequent (binary-valued): infrequent words in the training corpus are most probably NEs

• Digit features (binary-valued)

– Presence and/or exact number of digits in a token

• CntDgt : Token contains digits

• FourDgt: Token consists of four digits

• TwoDgt: Token consists of two digits

• CnsDgt: Token consists of digits only

104

Language Independent Features (Contd..)

– Combination of digits and punctuation symbols

• CntDgtCma: Token consists of digits and comma

• CntDgtPrd: Token consists of digits and periods

– Combination of digits and symbols

• CntDgtSlsh: Token consists of digits and slash

• CntDgtHph: Token consists of digits and hyphen

• CntDgtPrctg: Token consists of digits and percentage sign

– Combination of digits and special symbols

• CntDgtSpl: Token consists of digits and special symbols such as $, #, etc.
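The binary length, infrequency and digit features can be computed with plain string tests. In this sketch "consists of digits and X" is read as "contains only digits and X, with at least one of each"; that reading, the infrequency threshold and the exact set of special symbols are assumptions.

```python
SPECIAL = set("$#@&*")  # assumed set of "special symbols"

def _digits_and(tok, symbols):
    """True if tok contains only digits and characters from `symbols`, at least one of each."""
    return (any(c.isdigit() for c in tok)
            and any(c in symbols for c in tok)
            and all(c.isdigit() or c in symbols for c in tok))

def binary_features(tok, freq, infrequent_threshold=2):
    """freq maps training-corpus words to their counts."""
    is_num = tok.isdigit()
    return {
        "Length": len(tok) < 3,                              # short words are rarely NEs
        "Infrequent": freq.get(tok, 0) < infrequent_threshold,
        "CntDgt": any(c.isdigit() for c in tok),
        "FourDgt": is_num and len(tok) == 4,
        "TwoDgt": is_num and len(tok) == 2,
        "CnsDgt": is_num,
        "CntDgtCma": _digits_and(tok, {","}),
        "CntDgtPrd": _digits_and(tok, {"."}),
        "CntDgtSlsh": _digits_and(tok, {"/"}),
        "CntDgtHph": _digits_and(tok, {"-"}),
        "CntDgtPrctg": _digits_and(tok, {"%"}),
        "CntDgtSpl": _digits_and(tok, SPECIAL),
    }
```

`str.isdigit` also accepts non-ASCII digits (for example Bengali numerals), which is convenient for Indian-language text.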

105

Language Dependent Features (Contd..)

– Part of speech (POS) information: POS tag(s) of the current and/or surrounding word(s)

• SVM-based POS tagger (Ekbal and Bandyopadhyay, 2008b)

• Accuracy = 90.2% with 27 tags

– Coarse-grained tagset: Nominal, PREP (postpositions) and Other

– Gazetteer-based features (binary-valued): several features extracted from the gazetteers

106

ME based NERC System (Contd..)

• Language model: represented by the ME model parameters

• Possible class module: consists of a list of lexical units for each word, associated with the list of 17 tags

• NE disambiguation: decides the most probable tag sequence for a given word sequence

– Beam search algorithm

• Elimination of inadmissible sequences: removes the inadmissible tag sequences from the output of the ME model

Tool: C++ based ME package

(http://homepages.inf.ed.ac.uk/s0450736/software/maxent/maxent-20061005.tar.bz2)

107

ME based NERC System

• Elimination of Inadmissible Tag Sequences

– Inadmissible tag sequence (e.g., B-PER followed by LOC)

– Transition probability:

$$P(c_i \mid c_{i-1}) = \begin{cases} 1 & \text{if the sequence is admissible} \\ 0 & \text{otherwise} \end{cases}$$

– The probability of the classes assigned to the words in a sentence $s$ in a document $D$ is defined as:

$$P(c_1, \ldots, c_n \mid s, D) = \prod_{i=1}^{n} P(c_i \mid s, D) \times P(c_i \mid c_{i-1})$$
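Combining the per-word class probabilities with the 0/1 admissibility transitions gives a simple beam search. The admissibility rule below is a hypothetical one for a B-/I-/E- tagset in which a bare tag such as `PER` marks a single-word NE and `O` marks a non-NE; the original 17-tag set and its exact constraints may differ. Class probabilities are assumed to be supplied by the ME model.

```python
import math

OPEN, CONT = ("B-", "I-"), ("I-", "E-")

def admissible(prev, cur):
    """Hypothetical rule: after B-X or I-X only I-X or E-X may follow;
    I-/E- tags may not follow anything else ('$' marks the sentence start)."""
    if prev.startswith(OPEN):
        return cur.startswith(CONT) and cur[2:] == prev[2:]
    return not cur.startswith(CONT)

def beam_search(class_probs, beam_width=3):
    """class_probs: one dict {class: P(c_i | s, D)} per word.
    Maximizes prod_i P(c_i | s, D) * P(c_i | c_{i-1}), where P(c_i | c_{i-1}) is 0 or 1."""
    beam = [(0.0, ["$"])]  # (log score, path starting with the '$' marker)
    for probs in class_probs:
        candidates = [(score + math.log(p), path + [c])
                      for score, path in beam
                      for c, p in probs.items()
                      if p > 0 and admissible(path[-1], c)]  # zero transitions are dropped
        beam = sorted(candidates, key=lambda x: x[0], reverse=True)[:beam_width]
    # an entity may not be left open at the end of the sentence
    finished = [(s, p[1:]) for s, p in beam if not p[-1].startswith(OPEN)]
    if not finished:
        return None  # every admissible path was pruned
    score, tags = max(finished, key=lambda x: x[0])
    return tags, math.exp(score)
```

With per-word distributions `{"B-PER": .6, "PER": .3, "O": .1}` and `{"LOC": .7, "E-PER": .2, "O": .1}`, picking the best class per word would give the inadmissible `B-PER LOC`; the search instead returns `PER LOC` with probability .3 × .7.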

108

CRF based NERC System

Tool: C++ based CRF++ package (http://crfpp.sourceforge.net)

• Language model: represented by the CRF model parameters

• Possible class module: Consists of a list of lexical units for each word associated with the list of 17 tags

• NE disambiguation: Decides the most probable tag sequence for a given word sequence

• Forward Viterbi and backward A* search algorithm (Rabiner, 1989) for disambiguation

• Elimination of inadmissible sequences: removes the inadmissible tag sequences from the output of the CRF model (same as the ME model)

109

CRF based NERC System (Contd..)

• Feature template: features are represented in terms of a feature template

[Figure: feature template used in the experiment]

110

SVM based NERC System

• Language model: represented by the SVM model parameters

• Possible class module: considers any of the 17 NE tags for each word

• NE disambiguation: beam search (the choice of beam width N matters, since a larger beam width does not always give a significant improvement in performance)

• Elimination of inadmissible tag sequences: same as ME and CRF

Training: YamCha toolkit (http://chasen.org/~taku/software/yamcha/)

Classification: TinySVM-0.07 (http://cl.aist-nara.ac.jp/~taku-ku/software/TinySVM)

– One-vs-rest and pairwise multi-class decision methods

– Polynomial kernel function

111

SVM based NERC System (Contd..)

Feature representation in SVM: features such as words, their lexical features, the various orthographic word-level features and the NE labels are used, where

• $w_i$: the word appearing at the $i$-th position

• $p_i$: the POS feature of $w_i$

• $t_i$: the NE label of the $i$-th word

• The parsing direction can be reversed (from right to left)

• Models of SVM:

– SVM-F: parses from left to right

– SVM-B: parses from right to left
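The sketch below shows one way the w_i, p_i and t_i features could be laid out for a single token, using the SVM-F window from the best-feature table (three words before, two after, NE tags of the previous two words). The string encoding and function names are assumptions and do not reproduce YamCha's actual input format. SVM-B can reuse the same extractor on the reversed sentence, so that its "previous" tags are those of the following words.

```python
def window_features(words, pos, tags_so_far, i, left=3, right=2, n_prev_tags=2):
    """Features for position i: words and POS tags in [i-left, i+right], plus the
    NE tags already assigned to the previous n_prev_tags positions (dynamic features)."""
    feats = []
    for off in range(-left, right + 1):
        j = i + off
        inside = 0 <= j < len(words)
        feats.append(f"w[{off}]={words[j] if inside else '<PAD>'}")
        feats.append(f"p[{off}]={pos[j] if inside else '<PAD>'}")
    for off in range(1, n_prev_tags + 1):
        j = i - off
        feats.append(f"t[{-off}]={tags_so_far[j] if j >= 0 else '$'}")
    return feats

def backward_view(words, pos):
    """SVM-B: parse right to left by reversing the sentence before extraction."""
    return words[::-1], pos[::-1]
```

After tagging the reversed sentence, the predicted tag sequence must be reversed again to line up with the original word order.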

112

Best Feature Sets for ME, CRF and SVM

Model: Feature set

• ME: Word, Context (preceding one and following one word), Prefixes and suffixes of length up to three characters of the current word only, Dynamic NE tag of the previous word, First word of the sentence, Infrequent word, Length of the word, Digit features

• CRF: Word, Context (preceding two and following two words), Prefixes and suffixes of length up to three characters of the current word only, Dynamic NE tag of the previous word, First word of the sentence, Infrequent word, Length of the word, Digit features

• SVM-F: Word, Context (preceding three and following two words), Prefixes and suffixes of length up to three characters of the current word only, Dynamic NE tags of the previous two words, First word of the sentence, Infrequent word, Length of the word, Digit features

• SVM-B: Word, Context (preceding three and following two words), Prefixes and suffixes of length up to three characters of the current word only, Dynamic NE tags of the previous two words, First word of the sentence, Infrequent word, Length of the word, Digit features

Best feature set selection: classifiers trained with language-independent features and tested on the development set

113

Language Dependent Evaluation

(ME, CRF and SVM)

• Observations:

– Classifiers trained with the best set of language-independent as well as language-dependent features

– POS information of the words is very effective

– Coarse-grained POS tagger (Nominal, PREP and Other) for ME and CRF

– Fine-grained POS tagger (developed with 27 POS tags) for SVM-based systems

– Best performance of ME: POS information of the current word only (an improvement of 2.02% F-Score)

– Best performance of CRF: POS information of the current, previous and next words (an improvement of 3.04% F-Score)

– Best performance of SVM: POS information of the current, previous and next words (an improvement of 2.37% F-Score in SVM-F and 2.32% in SVM-B)

– NE suffixes, organization suffix words, person prefix words, designations and common location words are more effective than the other gazetteers

114

Reference

• HMM http://www-nlp.stanford.edu/fsnlp/hmm-chap/blei-hmm-ch9.ppt

• MEMM www.cs.cornell.edu/courses/cs778/2006fa/lectures/05-memm.pdf

• CRFs web.engr.oregonstate.edu/~tgd/classes/539/slides/Shen-CRF.ppt

• PoS-tagging cs.haifa.ac.il/~shuly/teaching/04/statnlp/pos-tagging.ppt

• NER www.cl.uni-heidelberg.de/colloquium/docs/ekbal_abstract.pdf

115

Assignment

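• States: $\{S_{sunny}, S_{rainy}, S_{snowy}\}$

• Observations: $\{O_{skirt}, O_{coat}, O_{umbrella}\}$

• State transition probabilities (rows and columns in the state order above):

$$A = \begin{pmatrix} .8 & .15 & .05 \\ .38 & .6 & .02 \\ .75 & .05 & .2 \end{pmatrix}$$

• Emission probabilities (rows: states; columns: observations in the order above):

$$B = \begin{pmatrix} .6 & .3 & .1 \\ .05 & .3 & .65 \\ 0 & .5 & .5 \end{pmatrix}$$

• Initial state distribution: $\pi = (.7, .25, .05)$

• Given $O = (O_{coat}, O_{coat}, O_{umbrella}, O_{umbrella}, O_{skirt}, O_{umbrella}, O_{umbrella})$

• What is the probability that the given sequence appears? (forward procedure)

• What is the most likely sequence of weather states given the observations?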

116
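Both questions can be checked with a direct implementation of the forward procedure and the Viterbi algorithm. This sketch assumes that the rows of A and B follow the state order sunny, rainy, snowy and that the columns of B follow skirt, coat, umbrella. It uses plain probabilities without scaling, which is fine for a seven-step sequence but would underflow on long ones.

```python
STATES = ["sunny", "rainy", "snowy"]
OBS = {"skirt": 0, "coat": 1, "umbrella": 2}
A = [[0.8, 0.15, 0.05],
     [0.38, 0.6, 0.02],
     [0.75, 0.05, 0.2]]
B = [[0.6, 0.3, 0.1],
     [0.05, 0.3, 0.65],
     [0.0, 0.5, 0.5]]
PI = [0.7, 0.25, 0.05]

def forward(obs):
    """Forward procedure: P(O | lambda) = sum_j alpha_T(j)."""
    o = [OBS[x] for x in obs]
    n = len(STATES)
    alpha = [PI[i] * B[i][o[0]] for i in range(n)]
    for t in o[1:]:
        alpha = [sum(alpha[i] * A[i][j] for i in range(n)) * B[j][t] for j in range(n)]
    return sum(alpha)

def viterbi(obs):
    """Most likely state sequence and its joint probability P(X, O | lambda)."""
    o = [OBS[x] for x in obs]
    n = len(STATES)
    delta = [PI[i] * B[i][o[0]] for i in range(n)]
    back = []  # back-pointers, one list per transition
    for t in o[1:]:
        ptr = [max(range(n), key=lambda i: delta[i] * A[i][j]) for j in range(n)]
        delta = [delta[ptr[j]] * A[ptr[j]][j] * B[j][t] for j in range(n)]
        back.append(ptr)
    last = max(range(n), key=lambda j: delta[j])
    path = [last]
    for ptr in reversed(back):  # follow back-pointers from the end
        path.append(ptr[path[-1]])
    path.reverse()
    return [STATES[s] for s in path], delta[last]

if __name__ == "__main__":
    O = ["coat", "coat", "umbrella", "umbrella", "skirt", "umbrella", "umbrella"]
    print(forward(O))
    print(viterbi(O))
```

The two algorithms differ only in replacing the sum over predecessor states with a max (plus back-pointers), which is why they share the same structure.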