Lecture 10 Multilayer Perceptron (2): Learning


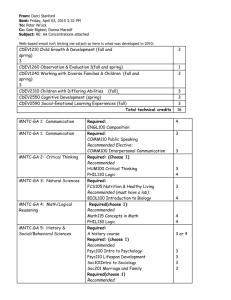

Intro. ANN & Fuzzy Systems

Lecture 10. MLP (II): Single Neuron Weight Learning and Nonlinear Optimization

Outline

• Single neuron case: nonlinear error-correcting learning

• Nonlinear optimization

(C) 2001 by Yu Hen Hu

Solving XOR Problem

A training sample consists of a feature vector $(x_1, x_2)$ and a label $z$. In the XOR problem there are 4 training samples: (0, 0; 0), (0, 1; 1), (1, 0; 1), and (1, 1; 0). For a 2-2-1 MLP, these four samples give four equations in 9 unknown weights: the hidden-layer weights $w^{(1)}_{ij}$ and the output-layer weights $w^{(2)}_{1j}$, where $j = 0$ denotes the bias.

$$
\begin{aligned}
0 &= f\big(w^{(2)}_{10} + w^{(2)}_{11} f(w^{(1)}_{10}) + w^{(2)}_{12} f(w^{(1)}_{20})\big) && (x_1, x_2) = (0, 0)\\
1 &= f\big(w^{(2)}_{10} + w^{(2)}_{11} f(w^{(1)}_{10} + w^{(1)}_{12}) + w^{(2)}_{12} f(w^{(1)}_{20} + w^{(1)}_{22})\big) && (x_1, x_2) = (0, 1)\\
1 &= f\big(w^{(2)}_{10} + w^{(2)}_{11} f(w^{(1)}_{10} + w^{(1)}_{11}) + w^{(2)}_{12} f(w^{(1)}_{20} + w^{(1)}_{21})\big) && (x_1, x_2) = (1, 0)\\
0 &= f\big(w^{(2)}_{10} + w^{(2)}_{11} f(w^{(1)}_{10} + w^{(1)}_{11} + w^{(1)}_{12}) + w^{(2)}_{12} f(w^{(1)}_{20} + w^{(1)}_{21} + w^{(1)}_{22})\big) && (x_1, x_2) = (1, 1)
\end{aligned}
$$

Finding Weights of MLP

• In MLP applications, there is often a large number of parameters (weights).

• Sometimes the number of unknowns exceeds the number of equations (as in the XOR case: 9 weights, 4 equations). In such a case, the solution may not be unique.

• The objective is to find a set of weights that minimizes the difference between the actual output of the MLP and the corresponding desired output. Often the cost function is the sum of the squares of these differences.

• This leads to a nonlinear least-squares optimization problem.

Single Neuron Learning

[Figure: single neuron with bias input 1 and inputs $x_1, x_2$, weights $w_0, w_1, w_2$, net input $u$, activation $f(\cdot)$, output $z$, desired output $d$, error $e = d - z$, and derivative block $f'(\cdot)$.]

Nonlinear least-squares cost function:

$$E = \sum_{k=1}^{K} [e(k)]^2 = \sum_{k=1}^{K} [d(k) - z(k)]^2 = \sum_{k=1}^{K} [d(k) - f(W x(k))]^2$$

where $x(k) = [1, x_1(k), x_2(k)]^T$, i.e. $x_0(k) = 1$ multiplies the bias weight $w_0$.

Goal: Find $W = [w_0, w_1, w_2]$ by minimizing $E$ over the given training samples.

Gradient-Based Steepest Descent

The weight update formula has the form

$$w_i(t+1) = w_i(t) + \Delta w_i = w_i(t) - \eta \left.\frac{\partial E}{\partial w_i}\right|_{W = W(t)}$$

where $\eta > 0$ is the step size (learning rate) and $t$ is the epoch index. During each epoch, all $K$ training samples are applied and the weights are updated once.
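As an illustration, here is a minimal sketch of this batch steepest-descent rule for a single neuron. Several choices are assumptions, not taken from the slides: a logistic activation $f(u) = 1/(1 + e^{-u})$, whose derivative is $f'(u) = z(1 - z)$; a fixed step size `eta = 0.5`; zero initial weights; and the linearly separable AND function as hypothetical training data (a single neuron cannot solve XOR). The gradient is the chain-rule result $\partial E / \partial w_i = -2 \sum_k [d(k) - z(k)] f'(u(k)) x_i(k)$, with $x_0(k) = 1$. The name `train_single_neuron` is illustrative.

```python
import math

# Assumed activation: logistic sigmoid, with f'(u) = f(u) * (1 - f(u)).
def sigmoid(u):
    return 1.0 / (1.0 + math.exp(-u))

def train_single_neuron(samples, eta=0.5, epochs=5000):
    """Batch steepest descent on E = sum_k [d(k) - f(W x(k))]^2.

    samples: list of ((x1, x2), d) pairs; the bias input x0 = 1 is prepended.
    Returns W = [w0, w1, w2] and the cost E recorded at the start of each epoch.
    """
    w = [0.0, 0.0, 0.0]
    history = []
    for _ in range(epochs):              # t = epoch index
        grad = [0.0, 0.0, 0.0]
        cost = 0.0
        for (x1, x2), d in samples:      # apply all K samples before updating
            x = [1.0, x1, x2]
            u = sum(wi * xi for wi, xi in zip(w, x))
            z = sigmoid(u)
            e = d - z                    # e(k) = d(k) - z(k)
            cost += e * e
            delta = e * z * (1.0 - z)    # e(k) * f'(u(k))
            for i in range(3):
                grad[i] += -2.0 * delta * x[i]   # contribution to dE/dw_i
        history.append(cost)
        # one update per epoch: w_i(t+1) = w_i(t) - eta * dE/dw_i
        w = [wi - eta * gi for wi, gi in zip(w, grad)]
    return w, history

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
W, E = train_single_neuron(AND)
outputs = [sigmoid(W[0] + W[1] * x1 + W[2] * x2) for (x1, x2), _ in AND]
print("W =", [round(wi, 3) for wi in W])
print("E at first and last epoch:", round(E[0], 4), round(E[-1], 4))
print("rounded outputs:", [round(z) for z in outputs])
```

With zero initial weights every output starts at 0.5, so the first recorded cost is exactly $4 \times 0.25 = 1$; the cost then falls as the weights grow along a separating direction.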
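The gradient follows from the chain rule. Since

$$\frac{\partial E}{\partial w_i} = \sum_{k=1}^{K} \frac{\partial [e(k)]^2}{\partial w_i} = -\sum_{k=1}^{K} 2[d(k) - z(k)] \frac{\partial z(k)}{\partial w_i}$$

and

$$\frac{\partial z(k)}{\partial w_i} = \frac{\partial f(u)}{\partial u} \frac{\partial u}{\partial w_i} = f'(u) \frac{\partial}{\partial w_i}\left(\sum_{j=0}^{2} w_j x_j\right) = f'(u)\, x_i,$$

hence

$$\frac{\partial E}{\partial w_i} = -2 \sum_{k=1}^{K} [d(k) - z(k)]\, f'(u(k))\, x_i(k).$$

Delta Error

Denote $\delta(k) = [d(k) - z(k)]\, f'(u(k))$; then

$$\frac{\partial E}{\partial w_i} = -2 \sum_{k=1}^{K} \delta(k)\, x_i(k).$$

$\delta(k)$ is the error signal $e(k) = d(k) - z(k)$ modulated by the derivative of the activation function, $f'(u(k))$, and hence represents the amount of correction that needs to be applied to the weight $w_i$ for the given input $x_i(k)$. Therefore, absorbing the constant factor 2 into the step size $\eta$, the weight update formula for a single-layer, single-neuron perceptron has the form

$$w_i(t+1) = w_i(t) + \eta \sum_{k=1}^{K} \delta(k)\, x_i(k).$$

This is the error-correcting learning formula discussed earlier.

Nonlinear Optimization

Problem: Given a nonlinear cost function $E(W) \ge 0$, find $W$ such that $E(W)$ is minimized.

Taylor's series expansion:

$$E(W) \approx E(W(t)) + (\nabla_W E)^T (W - W(t)) + \tfrac{1}{2} (W - W(t))^T H_W(E)\, (W - W(t))$$

where, with both quantities evaluated at $W = W(t)$,

$$\nabla_W E = \left[\frac{\partial E}{\partial W_1}, \frac{\partial E}{\partial W_2}, \ldots, \frac{\partial E}{\partial W_N}\right]^T, \qquad
H_W(E) = \begin{bmatrix}
\frac{\partial^2 E}{\partial W_1^2} & \frac{\partial^2 E}{\partial W_1 \partial W_2} & \cdots & \frac{\partial^2 E}{\partial W_1 \partial W_N} \\
\frac{\partial^2 E}{\partial W_2 \partial W_1} & \frac{\partial^2 E}{\partial W_2^2} & \cdots & \frac{\partial^2 E}{\partial W_2 \partial W_N} \\
\vdots & \vdots & \ddots & \vdots \\
\frac{\partial^2 E}{\partial W_N \partial W_1} & \frac{\partial^2 E}{\partial W_N \partial W_2} & \cdots & \frac{\partial^2 E}{\partial W_N^2}
\end{bmatrix}$$

Steepest Descent

• Use a first-order approximation: $E(W) \approx E(W(t)) + (\nabla_{W(t)} E)^T (W - W(t))$.

• The amount of reduction of $E(W)$ from $E(W(t))$ is proportional to the inner product of $\nabla_{W(t)} E$ and $\Delta W = W - W(t)$.

• If $|\Delta W|$ is fixed, the magnitude of this inner product is largest when the two vectors are aligned. Since $E(W)$ is to be reduced, $\Delta W$ should point opposite to the gradient: $\Delta W \propto -\nabla_{W(t)} E = -g(t)$.

• This is called the steepest descent method.

[Figure: cost surface $E(W)$ showing the gradient $\nabla_W E$ at $W(t)$ and the step $\Delta W = W - W(t)$ taken along $-\nabla_W E$.]

Line Search

• Steepest descent gives only the direction of $\Delta W$, not its magnitude.

• Often a preset step size $\eta$ is used to control the magnitude of $\Delta W$.

• Line search allows us to find the locally optimal step size along $-g(t)$.

• Set $E(W(t+1), \eta) = E(W(t) + \eta(-g(t)))$. Our goal is to find $\eta$ so that $E(W(t+1))$ is minimized.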
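Steepest descent with an optimal step can be checked on a case where everything is exact. The sketch below assumes a hypothetical quadratic cost $E(W) = \tfrac{1}{2} W^T A W - r^T W$ with a symmetric positive-definite $A$; the values of `A`, `r` and the starting point are illustrative only. For such a cost the Taylor series stops at second order, with $g = AW - r$ and $H_W(E) = A$, so $E(W(t) - \eta g) = E(W(t)) - \eta\, g^T g + \tfrac{1}{2} \eta^2\, g^T A g$ is a parabola in $\eta$ whose minimizer $\eta^* = g^T g / (g^T A g)$ is the locally optimal step along $-g(t)$.

```python
# Illustrative quadratic cost E(W) = 1/2 W^T A W - r^T W (A symmetric positive definite).
A = [[3.0, 1.0], [1.0, 2.0]]
r = [1.0, 1.0]

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def matvec(M, v):
    return [dot(row, v) for row in M]

def E(w):
    return 0.5 * dot(w, matvec(A, w)) - dot(r, w)

def grad(w):
    # g = A W - r
    return [awi - ri for awi, ri in zip(matvec(A, w), r)]

def steepest_descent_exact(w, steps=30):
    """Steepest descent where each step size minimizes E(W - eta g) exactly."""
    history = [E(w)]
    for _ in range(steps):
        g = grad(w)
        gg = dot(g, g)
        if gg < 1e-30:                     # gradient vanished: at the minimum
            break
        eta = gg / dot(g, matvec(A, g))    # eta* = g^T g / (g^T A g)
        w = [wi - eta * gi for wi, gi in zip(w, g)]
        history.append(E(w))
    return w, history

w_min, history = steepest_descent_exact([2.0, -1.0])
print("W after line-searched steepest descent:", [round(x, 6) for x in w_min])
print("E for the first steps:", [round(e, 4) for e in history[:5]])
```

The exact minimizer here solves $AW = r$, i.e. $W = [0.2, 0.4]$, and the recorded cost never increases. Because each $\eta$ minimizes $E$ along $-g(t)$, consecutive gradients are orthogonal, which is why steepest descent zig-zags when $A$ is ill-conditioned.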
To find $\eta$, a three-point quadratic fit is used:

• Along $-g$, select points $a < b < c$ such that $E(a) > E(b)$ and $E(c) > E(b)$.

• Then $\eta$ is selected by assuming $E(\eta)$ is a quadratic function of $\eta$: fit a parabola through the three points and take its minimizer.

[Figure: $E(\eta)$ plotted against $\eta$, with the bracketing points $a$, $b$, $c$.]

Conjugate Gradient Method

Let $d(t)$ be the search direction. The conjugate gradient method chooses the new search direction $d(t+1)$ as

$$d(t+1) = -g(t+1) + \beta(t+1)\, d(t), \qquad \beta(t+1) = \frac{[g(t+1)]^T [g(t+1) - g(t)]}{[g(t)]^T g(t)}.$$

Here $\beta(t+1)$ is computed using the Polak-Ribière formula.

Conjugate Gradient Algorithm

1. Choose $W(0)$.
2. Evaluate $g(0) = \nabla_{W(0)} E$ and set $d(0) = -g(0)$.
3. $W(t+1) = W(t) + \eta\, d(t)$. Use line search to find the $\eta$ that minimizes $E(W(t) + \eta\, d(t))$.
4. If not converged, compute $g(t+1)$ and $\beta(t+1)$, set $d(t+1) = -g(t+1) + \beta(t+1)\, d(t)$, and go to step 3.

Newton's Method

Second-order approximation:

$$E(W) \approx E(W(t)) + (g(t))^T (W - W(t)) + \tfrac{1}{2} (W - W(t))^T H_{W(t)}(E)\, (W - W(t))$$

Setting $\nabla_W E = 0$ with respect to $W$ (not $W(t)$) and solving for $\Delta W = W - W(t)$, we have

$$H_{W(t)}(E)\, \Delta W + g(t) = 0.$$

Thus, writing $H = H_{W(t)}(E)$,

$$\Delta W = -H^{-1} g(t).$$

This is Newton's method.

Difficulty: $H^{-1}$ is too complicated to compute!

Solution: approximate $H^{-1}$ by different matrices:

1. Quasi-Newton method
2. Levenberg-Marquardt method
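A minimal sketch of the conjugate gradient algorithm (steps 1 to 4 above) with the Polak-Ribière $\beta$ and the three-point quadratic-fit line search, followed by a single Newton step $\Delta W = -H^{-1} g$ for comparison. Assumptions that are not from the slides: a hypothetical quadratic test cost $E(W) = \tfrac{1}{2} W^T A W - r^T W$ with illustrative `A`, `r` and starting point; a bracketing rule that starts from the points $0, h, 2h$ and halves or doubles them until $E(a) > E(b) < E(c)$; a restart to $d = -g$ whenever $d$ is not a descent direction (a common safeguard); and an explicit $2 \times 2$ inverse, with $H = A$. On a quadratic the parabola fit is exact, so CG should reach the minimum after $N = 2$ line searches and Newton after one step, up to rounding.

```python
# Illustrative quadratic test cost E(W) = 1/2 W^T A W - r^T W; its Hessian is A.
A = [[3.0, 1.0], [1.0, 2.0]]
r = [1.0, 1.0]

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def E(w):
    return 0.5 * dot(w, [dot(row, w) for row in A]) - dot(r, w)

def grad(w):
    return [dot(row, w) - ri for row, ri in zip(A, r)]

def quadratic_fit_line_search(phi, h=1.0, max_iter=50):
    """Bracket a < b < c with phi(a) > phi(b) < phi(c), then return the
    minimizer of the parabola through the three points."""
    a, b, c = 0.0, h, 2.0 * h
    fa, fb, fc = phi(a), phi(b), phi(c)
    it = 0
    while fb >= fa and it < max_iter:    # b overshoots: pull b and c toward a
        c, fc = b, fb
        b = 0.5 * b
        fb = phi(b)
        it += 1
    while fc <= fb and it < max_iter:    # still descending at c: move outward
        b, fb = c, fc
        c = 2.0 * c
        fc = phi(c)
        it += 1
    denom = (b - a) * (fb - fc) - (b - c) * (fb - fa)
    if denom == 0.0:                     # degenerate bracket: fall back to b
        return b
    return b - 0.5 * ((b - a) ** 2 * (fb - fc) - (b - c) ** 2 * (fb - fa)) / denom

def conjugate_gradient(w, tol=1e-8, max_iter=100):
    g = grad(w)                          # step 2: g(0) and d(0) = -g(0)
    d = [-gi for gi in g]
    for t in range(max_iter):
        if dot(g, g) < tol * tol:        # converged: gradient is ~0
            return w, t
        if dot(g, d) >= 0.0:             # safeguard: restart along -g
            d = [-gi for gi in g]
        # step 3: line search for eta along d, then W(t+1) = W(t) + eta d(t)
        eta = quadratic_fit_line_search(
            lambda s: E([wi + s * di for wi, di in zip(w, d)]))
        w = [wi + eta * di for wi, di in zip(w, d)]
        # step 4: Polak-Ribiere beta and the new search direction
        g_new = grad(w)
        beta = dot(g_new, [gn - go for gn, go in zip(g_new, g)]) / dot(g, g)
        d = [-gn + beta * di for gn, di in zip(g_new, d)]
        g = g_new
    return w, max_iter

def newton_step(H, g):
    """Delta W = -H^{-1} g, using the explicit inverse of a 2x2 matrix H."""
    det = H[0][0] * H[1][1] - H[0][1] * H[1][0]
    return [-(H[1][1] * g[0] - H[0][1] * g[1]) / det,
            -(H[0][0] * g[1] - H[1][0] * g[0]) / det]

w0 = [2.0, -1.0]
w_cg, iters = conjugate_gradient(w0)
w_newton = [wi + dwi for wi, dwi in zip(w0, newton_step(A, grad(w0)))]
print("CG:", [round(x, 6) for x in w_cg], "after", iters, "line searches")
print("Newton, one step:", [round(x, 6) for x in w_newton])
```

Both methods land on the solution of $AW = r$, i.e. $W = [0.2, 0.4]$. For a non-quadratic cost the parabola is only a local model, so the line search and the CG restarts matter more, and the exact Hessian would be replaced by one of the approximations listed above.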