Representation of Musical Information

Donald Byrd

School of Music

Indiana University

Updated 8 March 2006

Copyright © 2003-06, Donald Byrd

1

Classification: Surgeon General’s Warning

• Classification (ordinary hierarchic) is dangerous

– Almost everything in the real world is messy

– Absolute correlations between characteristics are rare

– Example: some mammals lay eggs; some are “naked”

– Example: musical instruments (piano as percussion, etc.)

• Nearly always, all you can say is “an X has

characteristic A, and usually also B, C, D…”

• Leads to:

– People who know better claiming absolute correlations

– Arguments among experts over which characteristic is

most fundamental

– Don changing his mind

30 Jan. 06

2

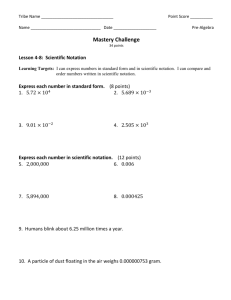

Dimensions of Music Representations (1)

[Figure: Waveform, Csound, MusicXML, Notelist, and MIDI (SMF) plotted on two axes, Expressive Completeness and Structural Generality.]

(After Wiggins et al (1993). A Framework for the Evaluation of Music

Representation Systems.)

rev. 3 Feb.

3

Dimensions of Music Representations (2)

• Expressive completeness

– How much of all possible music can the representation

express?

– Includes synthesized as well as acoustic sounds!

– Waveform (=audio) is truly “complete”

– Exception, sort of: conceptual music

• E.g., Tom Johnson: Celestial Music for Imaginary Trumpets

(notes on 100 ledger lines), Cage: 4’ 33” (of silence), etc.

• Structural generality

– How much of the structure in any piece of music can

the representation express?

– Music notation with repeat signs, etc. still expresses

nowhere near all possible structure

30 Jan. 06

4

Representation vs. Encoding

• Representation: what information is conveyed?

– More abstract (conceptual)

– Basic = general type of info; specific = exact type

• Encoding: how is the information conveyed?

– More concrete: in computer (“bits”)… or on paper (“atoms”)!

• One representation can have many encodings

– “Atoms” example: music notation in printed or Braille

form

– “Bits” example: any kind of text in ASCII vs. Unicode

– “Bits” example: formatted text in HTML, RTF, .doc

30 Jan. 06

5

Basic Representations of Music & Audio

• Digital Audio (e.g., CD, MP3): like speech

• Time-stamped Events (e.g., MIDI file): like unformatted text

• Music Notation: text with complex formatting

27 Jan.

6

Basic Representations of Music & Audio

| | Audio | Time-stamped Events | Music Notation |
|---|---|---|---|
| Common examples | CD, MP3 file | Standard MIDI File | Sheet music |
| Unit | Sample | Event | Note, clef, lyric, etc. |
| Explicit structure | none | little (partial voicing information) | much (complete voicing information) |
| Avg. rel. storage | 2000 | 1 | 10 |
| Convert to left | - | easy | OK job: easy |
| Convert to right | 1 note: pretty easy; other: hard or very hard | OK job: fairly hard | - |
| Ideal for | music, bird/animal sounds, sound effects, speech | music | music |

27 Jan.

7

Basic and Specific Representations vs. Encodings

[Diagram, divided by a horizontal line.]

Basic and Specific Representations (above the line): Audio (Waveform); Time-stamped Events (Time-stamped MIDI, Csound score, Time-stamped expMIDI); Music Notation (Gamelan not., Tablature, CMN, Mensural not.)

Encodings (below the line): .WAV, Red Book (CD), SMF, Csound score, expMIDI File, Notelist, MusicXML, Finale ETF

rev. 15 Feb.

8

Selfridge-Field on Describing Musical Information

• Cf. Selfridge-Field, E. (1997). Describing Musical Information.

• What is Music Representation? (informal use of term!)

– Codes in Common Use: solfegge (pitch only), CMN, etc.

– “Representations” for Computer Application: “total”, MIDI

• Parameters of Musical Information

– Contexts: sound, notation/graphical, analytic, semantic; gestural?

– Concentrates on 1st three

• Processing Order: horizontal or vertical priority

• Code Categories

– Sound Related Codes: MIDI and other

– Music Notation Codes: DARMS, SCORE, Notelist, Braille!?, etc.

– Musical Data for Analysis: Plaine and Easie, Kern, MuseData, etc.

– Representations of Musical Patterns and Process

– Interchange Codes: SMDL, NIFF, etc.; almost obsolete!

30 Jan. 06

9

Review: The Four Parameters of Notes

• Four basic parameters of a definite-pitched musical note

1. pitch: how high or low the sound is: perceptual analog of

frequency

2. duration: how long the note lasts

3. loudness: perceptual analog of amplitude

4. timbre or tone quality

• Above is decreasing order of importance for most Western

music

• …and decreasing order of explicitness in CMN!

10

Review: How to Read Music Without Really Trying

• CMN shows at least six aspects of music:

– NP1. Pitches (how high or low): on vertical axis

– NP2. Durations (how long): indicated by note/rest shapes

– NP3. Loudness: indicated by signs like p, mf, etc.

– NP4. Timbre (tone quality): indicated with words like “violin”, “pizzicato”, etc.

– Start times: on horizontal axis

– Voicing: mostly indicated by staff; in complex cases also shown by stem direction, beams, etc.

• See “Essentials of Music Reading” musical example.

11

Complex Notation (Selfridge-Field’s Fig. 1-4)

Complications on staff 2:

• Editorial additions (small notes)

• Instruments sharing notes only some of the time

• Mixed durations in double stops

• Multiple voices (divisi notation)

• Rapidly gets worse with more than 2!

10 Feb.

12

Complex Notation (Selfridge-Field’s Fig. 1-4)

Multiple voices rapidly gets worse with more than 2

• 2 voices in mm. 5-6: not bad: stem direction is enough

• 3 voices in m. 7: notes must move sideways

• 4 voices in m. 8: almost unreadable—without color!

• Acceptable because exact voice is rarely important

rev. 12 Feb.

13

Domains of Musical Information

• Independent graphic and performance info common

– Cadenzas (classical), swing (jazz), rubato passages (all music)

• CMN “counterexamples” show importance of independent

graphic and logical info

– Debussy: bass clef below the staff

– Chopin: noteheads are normal 16ths in one voice, triplets in another

• Mockingbird (early 1980’s) pioneered three domains:

– Logical: “note is a qtr note” (= ESF (Selfridge-Field)’s “notation”)

– Performance: “note sounds for 456/480ths of a quarter” (= ESF’s “sound”; also called gestural)

– Graphic: “notehead is diamond shaped” (= ESF’s “notation”)

– Nightingale and other programs followed

• SMDL added fourth domain

– Analytic: for Roman numerals, Schenkerian level, etc. (= ESF’s

“analytic”)

1 Feb. 06

14

Different Classifications of Music Encodings

| Selfridge-Field | Byrd |
|---|---|
| Sound-related codes (1): MIDI | Time-stamped MIDI |
| Sound-related codes (2): Other Codes for Representation and Control | Time-stamped Events + Audio |
| Musical Notation Codes (1): DARMS | CMN (domains L, G) |
| Musical Notation Codes (2): Other ASCII Representations | CMN (domains L, G) |
| Musical Notation Codes (3): Graphical-object Descriptions | CMN (domains L, P, G) |
| Musical Notation Codes (4): Braille | CMN: non-computer representation! |
| Codes for Data Management and Analysis (1): Monophonic Representations | CMN (emphasizes domain A) |
| Codes for Data Management and Analysis (2): Polyphonic Representations | CMN (emphasizes domain A) |
| Representations of Musical Patterns and Processes | “CMN” (abstracted; emphasizes A) |
| Interchange Codes | CMN (domains L, P, G, A) |

10 Feb.

15

Mozart: Variations for piano, K. 265, on

“Ah, vous dirais-je, Maman”, a.k.a. Twinkle

[Musical examples in notation: Theme; Variation 2]

16

Representation Example: a Bit of Mozart

The first few measures of Variation 8 of the “Twinkle” Variations

27 Jan.

17

In Notation Form: Nightingale Notelist

%%Notelist-V2 file='MozartRepresentationEx' partstaves=2 0 startmeas=193

C stf=1 type=3

C stf=2 type=10

K stf=1 KS=3 b

K stf=2 KS=3 b

T stf=1 num=2 denom=4

T stf=2 num=2 denom=4

A v=1 npt=1 stf=1 S1 'Variation 8'

D stf=1 dType=5

N t=0 v=1 npt=1 stf=1 dur=5 dots=0 nn=72 acc=0 eAcc=3 pDur=228 vel=55 ...... appear=1

R t=0 v=2 npt=1 stf=2 dur=-1 dots=0 ...... appear=1

N t=240 v=1 npt=1 stf=1 dur=5 dots=0 nn=74 acc=0 eAcc=3 pDur=228 vel=55 ...... appear=1

N t=480 v=1 npt=1 stf=1 dur=5 dots=0 nn=75 acc=0 eAcc=2 pDur=228 vel=55 ...... appear=1

N t=720 v=1 npt=1 stf=1 dur=5 dots=0 nn=77 acc=0 eAcc=3 pDur=228 vel=55 ...... appear=1

/ t=960 type=1

N t=960 v=1 npt=1 stf=1 dur=4 dots=0 nn=79 acc=0 eAcc=3 pDur=456 vel=55 ...... appear=1

(etc. File size: 1862 bytes)
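Each Notelist record above is a one-character record type followed by space-separated key=value fields. A minimal Python sketch of reading such a line, assuming only the layout visible in the excerpt (`parse_record` is a hypothetical helper, not part of Nightingale, and the elided `......` fields are simply skipped):

```python
def parse_record(line):
    """Split one Notelist line into (record type, {field: value}).

    Assumes the layout seen in the excerpt: a one-character record
    type followed by space-separated key=value fields. Tokens without
    '=' (such as the elided '......') are ignored.
    """
    tokens = line.split()
    fields = {}
    for token in tokens[1:]:
        if "=" in token:
            key, value = token.split("=", 1)
            # Keep numbers as ints when possible, otherwise as text.
            try:
                fields[key] = int(value)
            except ValueError:
                fields[key] = value
    return tokens[0], fields


line = ("N t=240 v=1 npt=1 stf=1 dur=5 dots=0 nn=74 acc=0 eAcc=3 "
        "pDur=228 vel=55 ...... appear=1")
kind, note = parse_record(line)
print(kind, note["t"], note["nn"], note["pDur"])
```

Here `nn` is the note number and `pDur` the performance duration, matching the note number 74 and the 228-tick note length that appear in the MIDI version of the same passage.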

27 Jan.

18

An Event Form: Standard MIDI File (file dump)

0: 4D54 6864 0000 0006 0001 0003 01E0 4D54  MThd.........‡MT

16: 726B 0000 0014 00FF 5103 0B70 C000 FF58  rk......Q..p¿..X

32: 0402 0218 0896 34FF 2F00 4D54 726B 0000  .....ñ4./.MTrk..

48: 0055 00FF 0305 5069 616E 6F00 9048 3881  .U....Piano.êH8Å

64: 6480 4840 0C90 4A38 8164 804A 400C 904B  dÄH@.êJ8ÅdÄJ@.êK

80: 3881 6480 4B40 0C90 4D38 8164 804D 400C  8ÅdÄK@.êM8ÅdÄM@.

96: 904F 3883 4880 4F40 0C90 4F38 8360 9050  êO8ÉHÄO@.êO8É`êP

112: 3883 4880 4F40 1890 4D38 8330 8050 4018  8ÉHÄO@.êM8É0ÄP@.

128: 804D 400D FF2F 004D 5472 6B00 0000 3200  ÄM@../.MTrk...2.

144: FF03 0550 6961 6E6F 8F00 9041 2B81 6480  ...Pianoè.êA+ÅdÄ

160: 4140 0C90 4330 8164 8043 400C 9044 3181  A@.êC0ÅdÄC@.êD1Å

176: 6480 4440 0C90 4647 8164 8046 4001 FF2F  dÄD@.êFGÅdÄF@../

192: 00  .

5 Feb.

19

An Event Form: Standard MIDI File (interpreted)

Header format=1 ntrks=3 division=480

Track #1 start

t=0 Tempo microsec/MIDI-qtr=749760

t=0 Time sig=2/4 MIDI-clocks/click=24 32nd-notes/24-MIDI-clocks=8

t=2868 Meta event, end of track

Track end

Track #2 start

t=0 Meta Text, type=0x03 (Sequence/Track Name) leng=5

Text = <Piano>

t=0 NOn ch=1 num=72 vel=56

t=228 NOff ch=1 num=72 vel=64

t=240 NOn ch=1 num=74 vel=56

t=468 NOff ch=1 num=74 vel=64

(etc. File size: 193 bytes)
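The interpreted values can be recovered from the raw bytes of the file dump. The sketch below is illustrative only: it decodes the 14-byte header chunk, two of the variable-length delta times, and the tempo. The byte strings are transcribed from the dump; the helper name `read_vlq` is an assumption, not part of any MIDI library.

```python
import struct

# First 14 bytes of the file dump: the "MThd" header chunk.
header = bytes.fromhex("4D546864" "00000006" "0001" "0003" "01E0")
chunk_type, length, fmt, ntrks, division = struct.unpack(">4sIHHH", header)


def read_vlq(data, pos=0):
    """Decode a MIDI variable-length quantity starting at data[pos].

    Each byte carries 7 bits of the value; a set top bit means
    another byte follows. Returns (value, next position).
    """
    value = 0
    while True:
        byte = data[pos]
        pos += 1
        value = (value << 7) | (byte & 0x7F)
        if byte & 0x80 == 0:
            return value, pos


note_len, _ = read_vlq(bytes.fromhex("8164"))   # delta before the first Note Off
track_end, _ = read_vlq(bytes.fromhex("9634"))  # delta before end of track 1

# Set Tempo metaevent data (bytes 0B 70 C0): microseconds per quarter note.
tempo = int.from_bytes(bytes.fromhex("0B70C0"), "big")
seconds_per_tick = tempo / 1_000_000 / division

print(chunk_type, fmt, ntrks, division)   # b'MThd' 1 3 480
print(note_len, track_end, tempo)         # 228 2868 749760
print(60_000_000 / tempo)                 # tempo in quarter notes per minute
```

The last line comes out very close to 80, the quarter-note tempo used for this example elsewhere in the slides.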

27 Jan.

20

MIDI (Musical Instrument Digital Interface) (1)

• Invented in early 80’s

– Dawn of personal computers

– Designed as simple (& cheap to implement) real-time

protocol for communication between synthesizers

– Low bandwidth: 31.25 Kbps

• Top bit of byte: 1 = status, 0 = data

– Numbers usually 7 bits (range 0-127); sometimes 14 or even 21

• Message types

– Channel Voice

– Channel Mode

– System Common

– System Real-Time

– System Exclusive
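A small Python sketch of the byte-level rules on this slide: the top bit separates status bytes from data bytes, and larger numbers are built from 7-bit pieces. The function names are hypothetical, and the least-significant-byte-first order in `combine_14bit` is an assumption that matches how Pitch Bend sends its two data bytes.

```python
def is_status(byte):
    """True if the top bit is set, i.e. the byte is a status byte."""
    return byte & 0x80 != 0


def combine_14bit(lsb, msb):
    """Join two 7-bit data bytes into one 14-bit number (0-16383)."""
    return (msb << 7) | lsb


# A Note On followed by a Note Off, as raw bytes.
stream = [0x90, 0x48, 0x38, 0x80, 0x48, 0x40]
kinds = ["status" if is_status(b) else "data" for b in stream]
print(kinds)
print(combine_14bit(0x7F, 0x7F))  # largest 14-bit value: 16383
```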

5 Feb. 06

21

MIDI (2)

• Important standard Events are mostly Channel Voice msgs

– Note On: channel (1-16), note number (0-127), on velocity

– Note Off: channel, note number, off velocity

• Can change “voice” any time with Program Change msg

• A way around the 16-channel limit: cables

– may or may not correspond to a physical cable

– each cable supports 16 channels independent of others

– Systems with 4 (=64 channels) or 8 cables (=128) are common

• MIDI Monitor allows watching MIDI in real time

– Freeware and open source!
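As an illustration of the Channel Voice messages above, here is a hedged Python sketch that assembles Note On and Note Off messages as raw bytes. It assumes the standard status values 0x90 (Note On) and 0x80 (Note Off) with the channel stored as 0-15 in the low four bits; `note_on`, `note_off`, and `total_channels` are hypothetical helper names.

```python
def _check(channel, note, velocity):
    if not (1 <= channel <= 16 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("value out of MIDI range")


def note_on(channel, note, velocity):
    """Three bytes of a Note On; channel is numbered 1-16 as on the slide."""
    _check(channel, note, velocity)
    return bytes([0x90 | (channel - 1), note, velocity])


def note_off(channel, note, velocity=64):
    """Three bytes of a Note Off with an off velocity."""
    _check(channel, note, velocity)
    return bytes([0x80 | (channel - 1), note, velocity])


def total_channels(cables):
    """Each cable carries 16 independent channels."""
    return cables * 16


print(note_on(1, 72, 56).hex())    # 904838
print(note_off(1, 72, 64).hex())   # 804840
print(total_channels(4), total_channels(8))
```

The two printed messages are the same bytes that open the piano track in the Standard MIDI File dump (90 48 38 and 80 48 40).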

5 Feb. 06

22

MIDI Sequencers

• Record, edit, & play SMFs (Standard MIDI Files)

• Standard views

– Piano roll

• often with velocity, controllers, etc., in parallel

– Event list

– Other: Mixer, “Music notation”, etc.

– Standard editing

• Adding digital audio

– Personal computers & software-development tools have gotten

more & more powerful

– => “digital audio sequencers”: audio & MIDI (stored in hybrid encodings)

• Making results more musical: “Humanize”

– Timing, etc. isn’t mechanical—but not really musical!

8 Feb. 06

23

Another Warning: Terminology (1)

• A perilous question: “How many voices does this

synthesizer have?”

• Syllogism

– Careless and incorrect use of technical terms is

dangerous to your learning very much

– Experts use technical terms carelessly most of the time

– Beginners often use technical terms incorrectly

– Therefore, your learning very much is in danger

• Somewhat exaggerated, but only somewhat

5 Feb. 06

24

Another Warning: Terminology (2)

• Not-too-serious case: “system”

– Confusion because both standard (common) computer

term & standard (rare but useful) music term

• Serious case: patch, program, timbre, or voice

– Vocabulary def.: Patch: referring to event-based systems such as MIDI

and most synthesizers (particularly hardware synthesizers), a setting that

produces a specific timbre, perhaps with additional features. The terms

"voice", "timbre", and "program" are all used for the identical concept;

all have the potential to cause substantial confusion and should be

avoided as much as possible

– “Patch” is the only unambiguous term of the four

– …but the official MIDI specification (& almost everything else)

talks about “voices” (as in “Channel Voice messages control the

instrument's 16 voices”)

– …and to change the “voice”, you use a “program change”!

6 Feb. 06

25

Another Warning: Terminology (3)

• Some terminology is just plain difficult

• Example: “Representation” vs. “Encoding”

– Distinction: 1st is more abstract, 2nd more concrete

– …but what does that mean?

– Explaining milk to a blind person: “a white liquid...”

• Don’s precision involves being very careful with

terminology, difficult or not

– Vocabulary is important source

– Cf. other sources

– Contributions are welcome

6 Feb. 06

26

Standard MIDI Files (1)

• File format = encoding

• Standard approved in 1988

• Very compact

• Files made up of chunks with 4-character type

• One Header chunk (“MThd”)

– Gives format, number of tracks, basis for timing

• Any number of Track chunks (“MTrk”)

– Stream of MIDI events and metaevents preceded by time

– 1st track is always timing track
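The chunk structure can be checked against the 193-byte Mozart file. The following is a sketch rather than a full SMF reader: it walks the chunks using only the 4-character type and the 4-byte big-endian length that follows it. The hex text is transcribed from the file dump, and `chunks` is a hypothetical helper.

```python
import struct

# The Mozart file, transcribed row by row from the file dump.
SMF_HEX = """
4D54 6864 0000 0006 0001 0003 01E0 4D54
726B 0000 0014 00FF 5103 0B70 C000 FF58
0402 0218 0896 34FF 2F00 4D54 726B 0000
0055 00FF 0305 5069 616E 6F00 9048 3881
6480 4840 0C90 4A38 8164 804A 400C 904B
3881 6480 4B40 0C90 4D38 8164 804D 400C
904F 3883 4880 4F40 0C90 4F38 8360 9050
3883 4880 4F40 1890 4D38 8330 8050 4018
804D 400D FF2F 004D 5472 6B00 0000 3200
FF03 0550 6961 6E6F 8F00 9041 2B81 6480
4140 0C90 4330 8164 8043 400C 9044 3181
6480 4440 0C90 4647 8164 8046 4001 FF2F
00
"""
data = bytes.fromhex("".join(SMF_HEX.split()))


def chunks(data):
    """Yield (type, data length) for each chunk in the file."""
    pos = 0
    while pos < len(data):
        chunk_type, length = struct.unpack_from(">4sI", data, pos)
        yield chunk_type.decode("ascii"), length
        pos += 8 + length  # 8 bytes of type and length, then the chunk data


found = list(chunks(data))
print(len(data))
print(found)
```

The walk finds one header chunk and three track chunks, in agreement with ntrks=3 in the interpreted listing.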

5 Feb.

27

Standard MIDI Files (2)

• Metaevents

– Set Tempo (in timing track only)

– Text, Lyrics, Key/time signatures, instrument name, etc.

• What’s missing?

– Voice information limited to 16 channels

– Dynamics, beams, tuplets, articulation, expression marks,

note spelling, etc.: much less structure than CMN

• Attempts to overcome limitations

– Expressive MIDI, NotaMIDI, etc.

– ZIPI

– In a (more ambitious) way, Csound, etc.

– None of the limited attempts caught on

5 Feb. 06

28

Separating Representations Doesn’t Work! (1)

• Really “doesn’t work well for many purposes”

• We shouldn’t be surprised

– Close relative of “Classification is Dangerous to Your

Health”

• Example: many popular notation encodings (e.g.,

MusicXML) add event info

• Example: multiple domains for notation add in

event info (performance domain)

• Example: Csound combines audio & events

• Hybrid systems

12 Feb. 06

29

Separating Representations Doesn’t Work! (2)

• Extreme example of musical necessity: Jimi

Hendrix’s version of the Star-Spangled Banner at

Woodstock (1969)

– Goes from pure melody => noteless texture => back

repeatedly

– What would a music-IR system do to recognize the Star-Spangled Banner?

– …or Taps? (a very different problem!)

• Attempts have been/are being made to combine all

three basic representations

3 Feb. 06

30

Even One Note can be Hairy

• Experience in the early days of Kurzweil (ca.

1983)

– Piano middle C(!) never sounded “good”

• ...except first, low-quality recording

• Couldn’t tell why from waveform, spectrogram, etc.

– Variable sampling rates were unusable

• An expensive mistake: cost ca. $1,000,000

– Scale on the flute didn’t sound realistic to a flutist—but

it was

– Lesson 1: expectations influence perception

– Lesson 2: nothing about music is clear-cut or simple

31

Musical Acoustics (1)

• Acoustics involves physics

• Musical (as opposed to architectural, etc.) acoustics

– Frequency (=> pitch)

– Amplitude (=> loudness)

– Spectrum, envelope, & “other” characteristics => timbre

– Partials vs. harmonics

• Psychoacoustics involves psychology/perception

– Perceptual coding (“lossy” compression via MP3, etc.)

• Timbre

– Old idea (thru ca. 1960’s?): timbre is produced by static

relationships of partials

– …but attack helps identification more than steady state!

– Reality: rich (interesting) sounds are complex; nothing is static

– Time domain (waveform) vs. frequency domain (spectrogram) views

11 Feb. 06

32

Musical Acoustics (2): addsynenv

• addsynenv does additive synthesis of up to six partials

– Each has arbitrary partial no., starting phase, and "ADSR" type

envelope

– Partial no. can be non-integer => not harmonic

– ADSR = Attack/Decay/Sustain/Release (3 breakpoints)

– …but addsynenv allows much more complex envelopes

– Plays a single note in the waveform specified by partials &

envelopes

– Simultaneously displays “spectrogram” or “sonogram”

– …but not waveform

– Phase in real world normally has little effect, but can be critical in

recording & digital worlds (e.g., cancellation)

• Additive synthesis can’t create aperiodic (non-definite

pitch) sounds, or realistic attacks
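The idea behind additive synthesis can be sketched in a few lines of Python. This is not addsynenv's code: it is a simplified illustration that sums sine partials (each with a partial number, amplitude, and starting phase) and, unlike addsynenv, applies one shared piecewise-linear ADSR envelope instead of one envelope per partial. All names and default values are assumptions.

```python
import math


def adsr(t, dur, attack=0.02, decay=0.05, sustain=0.6, release=0.1):
    """Piecewise-linear ADSR envelope value (0 to 1) at time t seconds."""
    if t < attack:
        return t / attack
    if t < attack + decay:
        return 1.0 - (1.0 - sustain) * (t - attack) / decay
    if t < dur - release:
        return sustain
    return max(0.0, sustain * (dur - t) / release)


def additive(freq, partials, dur=0.5, rate=22050):
    """Sum sine partials given as (partial number, amplitude, phase)."""
    samples = []
    for n in range(int(dur * rate)):
        t = n / rate
        s = sum(amp * math.sin(2 * math.pi * freq * num * t + phase)
                for num, amp, phase in partials)
        samples.append(adsr(t, dur) * s)
    return samples


# Two harmonic partials plus one non-integer (inharmonic) partial.
tone = additive(440.0, [(1, 0.5, 0.0), (2, 0.25, 0.0), (3.1, 0.125, 0.0)])
print(len(tone), max(abs(x) for x in tone))
```

The third partial has partial number 3.1, so it is not a harmonic of the 440 Hz fundamental.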

11 Feb. 06

33

Musical Acoustics (3): Frequency, Temperament,

Scales

• Frequency: A4 (A above middle C) = 440 Hz, etc.

• Why do we need temperaments (tuning systems)?

– Frequency ratio (FR) of notes an octave (12 semitones) apart = 2:1

– FR of notes a Perfect 5th (7 st, e.g., C up to G) apart = 3:2

– 84 st = 7 octaves; around “circle of fifths”, 84 st = 12 P5s

– But (3/2)^12 = ca. 129.746, which does not equal 2^7 = 128!

– The standard (but not only) solution: equal temperament

The standard (but not only) solution: equal temperament

• With 12 semitones & equal temperament, semitone FR =

12th root of 2 : 1 = ca. 1.059463…:1

• Etc.: see table in Wikipedia “Musical Acoustics” article

• Scales

– Diatonic (major, minor, etc.), chromatic, other

– Is addsynenv “whole tone” preset a timbre, or a scale!?
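The arithmetic behind the need for temperament is easy to check. The sketch below compares twelve pure fifths with seven octaves and derives the equal-tempered semitone; the `frequency` helper additionally assumes the MIDI convention that note number 69 is A4.

```python
twelve_fifths = (3 / 2) ** 12      # twelve pure perfect fifths
seven_octaves = 2 ** 7             # seven octaves
comma = twelve_fifths / seven_octaves   # the mismatch (Pythagorean comma)
semitone = 2 ** (1 / 12)           # equal-tempered semitone ratio


def frequency(note_number, a4=440.0):
    """Equal-tempered frequency in Hz, assuming MIDI note 69 = A4."""
    return a4 * semitone ** (note_number - 69)


print(round(twelve_fifths, 3), seven_octaves)   # 129.746 128
print(round(comma, 5))
print(round(semitone, 6))                       # 1.059463
print(round(frequency(72), 2))                  # C above A4
```

Equal temperament spreads the mismatch evenly: each fifth becomes seven equal semitones, slightly narrower than 3:2, so that twelve of them equal seven octaves exactly.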

10 Feb. 06

34

An Audio Form: AIFF, 22K, 8 bit mono (file dump)

0: 464F 524D 0001 83AE 4149 4646 434F 4D4D  FORM..ÉÆAIFFCOMM

16: 0000 0012 0001 0001 8380 0008 400D AC44  ........ÉÄ..@.¨D

32: 0000 0000 0000 5353 4E44 0001 8388 0000  ......SSND..Éà..

48: 0000 0000 0000 0000 0000 0000 0000 0000  ................

64: 0000 0000 0000 0000 00FF 0000 0000 FEFC  ..............˛¸

80: FBFB FCFC FCFD FEFF 0001 0102 0303 0303  ˚˚¸¸¸˝˛.........

96: 0303 0201 0101 0000 0000 0100 FFFF FEFE  ..............˛˛

112: FFFF FFFF FF00 0203 00FE FCFC FCFC FBFC  .........˛¸¸¸¸˚¸

128: FDFE 0000 0000 0001 0102 0201 00FF FFFF  ˝˛..............

144: FEFE FF00 0001 0000 0000 0001 0100 0103  ˛˛..............

160: 0504 01FF FEFE FDFD FBFA FBFC FEFF FFFF  ....˛˛˝˝˚˙˚¸˛...

176: 0000 0000 00FF FEFE FEFD FDFE FF02 0303  ......˛˛˛˝˝˛....

192: 0302 0101 0201 0000 0205 0605 0200 0000  ................

208: FFFD FBFA FAFC FDFF FF00 00FF FFFF FEFD  .˝˚˙˙¸˝.......˛˝

224: FDFC FBFB FCFE 0103 0405 0402 0203 0302  ˝¸˚˚¸˛..........

240: 0102 0507 0705 0200 FFFD FBF8 F6F5 F5F7  .........˝˚¯ˆıı˜

256: F9FB FDFD FDFE FEFE FEFE FEFD FDFC FDFF  ˘˚˝˝˝˛˛˛˛˛˛˝˝¸˝.

(etc. File size: 99,254 bytes)

31 Jan.

35

Uncompressed Audio Files: Formats

• File formats are (containers for) encodings

• Only common formats are “PCM”: WAVE (.wav:

especially Windows), AIFF (especially Macintosh)

• Sampling rate, sample size, no. of channels independent of

format

• Typically in file header (different between formats)

• Higher sampling rate => reproduce higher frequencies

(sampling theorem, a.k.a. Nyquist theorem)

– Max. possible frequency = 1/2 SR

• Larger sample size => better signal-to-noise ratio (SNR)

and/or dynamic range

– Rule of thumb: 6 dB/bit

– 16-bit samples (CD) = maximum of ca. 96 dB SNR
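The two rules of thumb on this slide can be written out as a short Python sketch. The function names are hypothetical, and 6 dB per bit is the slide's approximation rather than an exact figure.

```python
def max_frequency(sampling_rate):
    """Highest reproducible frequency: half the sampling rate."""
    return sampling_rate / 2


def approx_snr_db(bits, db_per_bit=6):
    """Rule-of-thumb maximum SNR for a given sample size in bits."""
    return bits * db_per_bit


for rate, bits in [(44100, 16), (22050, 8)]:
    print(rate, "Hz ->", max_frequency(rate), "Hz max;",
          bits, "bits ->", approx_snr_db(bits), "dB")
```

By the same rules, CD audio reaches about 22 kHz and 96 dB, while the 22.05 kHz, 8-bit format tops out near 11 kHz and 48 dB.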

10 Feb. 06

36

Uncompressed Audio Files: Size

• Header size usually negligible compared to data

• Mozart AIFF file is low fidelity mono

– 22,050 samples/channel/sec. * 1 byte/sample * 1 channel * 4.5 sec. = 99,225 bytes

– At CD quality, would take 44,100 samples/channel/sec. * 2 bytes/sample * 2 channels * 4.5 sec. = 793,800 bytes

• CD can store up to 74 min.(or 80) of music

• Total amount of digital data

– 44,100 samples/channel/sec. * 2 bytes/sample * 2 channels * 74

min. * 60 sec./min. = 783,216,000 bytes
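The size calculations on this slide all follow one formula, sketched here in Python. `pcm_bytes` is a hypothetical helper and ignores the file header, which the slide treats as negligible.

```python
def pcm_bytes(rate, bytes_per_sample, channels, seconds):
    """Size of uncompressed PCM audio data in bytes (header ignored)."""
    return round(rate * bytes_per_sample * channels * seconds)


mozart_low = pcm_bytes(22050, 1, 1, 4.5)     # low-fidelity mono fragment
mozart_cd = pcm_bytes(44100, 2, 2, 4.5)      # same fragment at CD quality
full_cd = pcm_bytes(44100, 2, 2, 74 * 60)    # a 74-minute CD

print(mozart_low)   # 99225
print(mozart_cd)    # 793800
print(full_cd)      # 783216000
```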

1 Feb. 06

37

Specs for Some Common Audio Formats

| Format | Encoding Type | Details | Fidelity | Bandwidth (Kbps) |
|---|---|---|---|---|
| “Red Book” (CD) | Uncompressed, linear | 44.1 KHz, 16 bits/sample, stereo | Very high | ca. 1400 |
| Early game audio | Uncompressed, linear | 22.05 KHz, 8 bits/sample, mono | Low | 176 |
| MLP, Apple Lossless Compression, etc. | Lossless comp. | compression ca. 2:1 | Very high | ca. 700 |
| MP2 (Variations1) | Lossy comp. | compression ca. 3:1 | High | ca. 400 |
| MP3 (Variations2), AAC, WMA | Lossy comp. | compression ca. 7:1 to over 10:1 | High to very high | ca. 128-192 |
| AAC (Variations2), WMA | Lossy comp. | compression more than 20:1 | Medium | ca. 28-64 |

13 Feb. 06

38

An Event/Audio Hybrid Form: Csound

• Developed by Barry Vercoe (MIT) in early 80’s

• Builds on Music IV, Music V, etc. (Bell Labs in early 60’s)

• Widely used in electronic/computer music studios

• Hybrid representation: orchestra = audio, score = events

– Orchestra: defines “instruments” (timbre, articulation, dynamics)

– Score: tells instruments when to play and with what parameter

values

• One statement per line and comments after a semicolon

• First character of score statement is opcode

• Remaining data consists of numeric parameter fields

(pfields) to be used by that action

11 Feb. 06

39

Csound Example

• Sine-tone generator to play the first five notes of the Mozart example, at quarter note = 80 => an eighth note lasts 3/8 = .375 sec. Score:

f1 0 256 10 1 ; a sine wave function table

; a few notes of Mozart

i1 0 .375 0 9.00

i1 .375 . . 9.02

i1 .75 . . 9.03

i1 1.125 . . 9.05

i1 1.5 .75 . 9.07

• Opcode f interprets parameter fields as follows:

p1 - function table number being created

p2 - creation time, or time at which the table becomes readable

p3 - table size (number of points)

p4 - generating subroutine, chosen from a prescribed list.

• i (note) statements invoke the p1 instrument at time p2, duration p3 seconds; passes all p-fields to that instrument.

3 Feb.
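The note statements of this score can be derived from the event data. The Python sketch below turns the note numbers from the Notelist (72, 74, 75, 77, 79) into `i` statements. It assumes Csound's octave.pitch-class convention in which 8.00 is middle C (note number 60), writes every field out in full rather than using the `.` carry shorthand, and uses hypothetical helper names.

```python
def pch(note_number):
    """Note number -> octave.pitch-class text (8.00 = middle C = 60)."""
    octave, pitch_class = divmod(note_number, 12)
    return f"{octave + 3}.{pitch_class:02d}"


def score_lines(notes, quarters_per_min=80):
    """Build i-statements from (note number, length in quarter notes)."""
    quarter = 60 / quarters_per_min   # seconds per quarter note
    start = 0.0
    lines = []
    for number, quarters in notes:
        dur = quarters * quarter
        lines.append(f"i1 {start:g} {dur:g} 0 {pch(number)}")
        start += dur
    return lines


mozart = [(72, 0.5), (74, 0.5), (75, 0.5), (77, 0.5), (79, 1)]
for line in score_lines(mozart):
    print(line)
```

At quarter note = 80 a quarter lasts 0.75 sec., so the four eighth notes each last 0.375 sec. and the final quarter note starts at 1.5 sec.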

40

Compressed Audio Files: Formats

• Don’t confuse data compression with dynamic-range

compression (a.k.a. audio level compression or limiting)

• Codec = compressor/decompressor

• Lossless compression

– Standard methods (e.g., LZW; DEFLATE as used in .zip, etc.) don’t do much for audio

– Sometimes called packing

– Audio-specific methods

• MLP used for DVD-Audio

• Apple and Microsoft Lossless

• Nearly lossless compression : DTS

• Lossy compression

– Depends on psychoacoustics (“perceptual coding”)

16 Feb. 06

41

Compressed Audio Files: Lossy Compression

• Depends on psychoacoustics (“perceptual

coding”)

1. Divide signal into sub-bands by frequency

2. Take advantage of:

• Masking (“shadows”), via amplitude within critical bands

• Threshold of audibility (varies with frequency)

• Redundancy among channels (5.1 even more than stereo!)

• Etc.

• MPEG-1 layers I thru III (MP-1, 2, 3), AAC get better &

better compression via more & more complex techniques

– “There is probably no limit to the complexity of psychoacoustics.”

--Pohlmann, 5th ed.

– However, there probably is an “asymptotic” limit to compression!

• Implemented in hardware or software codecs

22 Feb. 06

42

Compressed Audio Files: Lossy Compression

• Evaluation via critical listening is essential

– ITU 5-point scale

• 5 = imperceptible, 4 = perceptible but not annoying, 3 = slightly

annoying, 2 = annoying, 1 = very annoying

– Careful tests: often double-blind, triple-stimulus, hidden reference

• E.g., ISO qualifying AAC with 31 expert listeners (cf. Hall article)

– Test materials chosen to stress codecs

• Common useful tests: glockenspiel, castanets, triangle, harpsichord,

speech, trumpet

• Soulodre’s worst-case tracks: bass clarinet arpeggio, bowed double

bass, harpsichord arpeggio, pitch pipe, muted trumpet

• References: Hall article “Cramped Quarters”, Pohlmann Principles of

Digital Audio (both on my reserve)

17 Feb. 06

43

Hybrid Representation & Compression

• Events (with “predefined” timbre) take very little space

– Mozart fragment AIFF (CD-quality audio): 794,166 bytes

– Mozart fragment MIDI file: 193 bytes

– Timbre takes same amount of space, regardless of music length!

– Problem: don’t have exact timbre for any performance

• Mike Hawley’s approach: find structure in audio; create

events & timbre definition

– Hawley, Michael J. (1990). The Personal Orchestra, or, Audio Data

Compression by 10000:1. Usenix Computing Systems Journal 3(2),

pp. 289—329.

• Could hybrid event/audio representation lead to his “audio

data compression by a factor of 10,000”?

• Maybe, but no time soon!
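For scale, the ratio between the two Mozart file sizes quoted on this slide can be computed directly. This is only the arithmetic: the event file carries no timbre definition, so the figure is not a like-for-like compression ratio.

```python
aiff_bytes = 794_166   # Mozart fragment, CD-quality AIFF
midi_bytes = 193       # same fragment as a Standard MIDI File

ratio = aiff_bytes / midi_bytes
print(round(ratio))            # 4115
print(ratio >= 10_000)         # False: still short of 10,000:1
```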

17 Feb. 06

44

Explicit Structure & Music Notation (1)

• David Huron on “Explanatory Goals of Music Analysis”

• “Music exhibits a multitude of different kinds of structures.”

– Formal: Symmetrical partitioning of tone row in Webern's Op. 24 Concerto

– Functional: Defensive vigil and the early morning singing of the Mekranoti

indians of Brazil

– Physiological: Critical bands & Haydn's String Quartet Op. 17, No. 3

– Perceptual: Auditory attention & ramp dynamics in Beethoven's Piano

Sonata No. 4, Op. 7

– Idiomatic: Arnold's Fantasy for Trumpet

– Economic: Prokofiev's piano Concerto No. 4 for left-handed pianist

– Personal: B-A-C-H and J.S. Bach's Brandenburg Concerto No. 2

– Social: Frank Sinatra's All or Nothing at All heard in 1959 vs. 1985

– Cultural: Geographical difference between Slavic & Germanic folk

cadences

– Linguistic: Rhythmic contrast between Frère Jacques & English Country

Garden

20 Feb. 06

45

Explicit Structure & Music Notation (2)

• Music notation is about explicit information/structure

• Huron was talking about explanation; do we really need

explicit information for IR?

– O’Maidin (and others): no need for logical domain

• Interesting examples

– Unmarked triplets: obvious in Schubert, etc.; not in Bartok Qtet 5, I

– Bach Goldberg Variations; D-major Prelude, WTC Book II: notation

sidesteps triplets by changing time signature (and tempo)

– Hendrix’ Star-Spangled Banner improvisation

• Byrd & Isaacson

– Need logical domain because (for many purposes) must interpret at

some point, & silly for everyone who needs it to re-do!

– Byrd & Isaacson (2003). A Music Representation Requirement Specification for Academia. Computer Music Journal 27(4), pp. 43–57

– But logical info may be less authoritative

7 Mar. 06

46

Explicit Structure & Music Notation (3)

• Joan Public’s problem: find a song, given some of

the melody and some lyrics

– Needs notes and text (lyrics)

– Common question for music librarians, esp. in public

libraries

• Musicologist’s problem: authorship/origin of

works in manuscripts

– Full symbolic data is important, even “insignificant”

details of notation (John Howard)

47

Music Notation: Fully Structured Representation

• Many forms (“specific” representations)

– Early and modern Western, Gamelan notation (Java), Indian, etc.

• Evolved with music they represent

• CMN probably most elaborate, especially w/r/t time

– Western polyphony very demanding of synchronization

• History of Western music notation in ten minutes

– Manuscript Facsimiles at www.nd.edu/~medvlib/musnot.html

– Neumes (6th to 13th century)

– Mensural notation: black (ca. 1250-1450), white (ca. 1450-1600)

– CMN (a.k.a. TMN) is really “CWMN” (ca. 1600-present)

• included barlines, piano/forte from beginning

• …but other dynamics gradually: pp/ff c.1750, mf c.1762, mp c.1837,

etc.; Romanticism => wider range

• …and more and more specific (metronome & expression marks, etc.)

– Tablature goes back centuries, too: important for lute (& viols?)

22 Feb. 06

48

A Systematic Approach to Music Representation

• Byrd & Isaacson (2003). A Music Representation Requirement

Specification for Academia. Computer Music Journal 27(4), pp. 43-57

• Intended to help choose representation for encoded music in

Variations2

• Purpose influences choice of encoding as well as representation

• Wiggins et al. (1993) give three sorts of tasks:

1. recording: user wants record of musical object, to be retrieved later

2. analysis: user wants analyzed version, not “raw” musical object

3. generation/composition

• Descriptive vs. prescriptive notation :: pitch in CMN vs. tablature

• Tuplets are exceptionally complex & subtle; often invisible

(unmarked)

• A “huge table” takes up half of the article

• CMN has endless details, but most aren’t important for most purposes!

• Assigned importance “Required”, “Very Desirable”, “Desirable”

• Result: chose MusicXML (but priorities changed & little done with it)

2 Mar. 06

49

Music Notation Software and Intelligence (1)

• Cf. Byrd, D. (1994). Music Notation Software and Intelligence.

• Cases where famous composers flagrantly violate

important rules, yet results are easily readable

Fig. 1. Changing time signature in middle of the measure (J.S. Bach)

Fig. 2. A measure with four horizontal positions for notes that are all

on the downbeat (Brahms)

Very different ways to have two clefs in effect at the same time:

Fig. 3. Bizarrely obvious (Debussy)

Fig. 4. So subtle, must think about the 3/8 meter to see bass and treble

clefs are both in effect throughout the measure (Ravel)

• Really nothing very strange going on in any of these

rev. 15 Feb.

50

Music Notation Software and Intelligence (2)

• Rules of CMN interact and aren’t always consistent

• Programmers try to help users by having programs do

things “automatically”

• A good idea if software knows enough to do the right thing

“almost all” the time

• Notation programs convert CMN to performance (MIDI)

and vice-versa => makes things worse

• Severo Ornstein’s complaint: programs that assume a

defined rhythmic structure

22 Feb. 06

51

Surprise: Music Notation has Meta-Principles!

1. Maximize readability (intelligibility)

– Avoid clutter = “Omit Needless Symbols”

– Try to assume just the right things for audience

– Audience for CMN is (primarily) performers

– General principle of any communication

• Applies to talks as well as music notation!

– Examples: Schubert (avoid tuplet numerals), Bach (avoid tuplets)

2. Minimize space used

– Save space => fewer page turns (helps performer); also cheaper to

print (helps publisher)

– Squeezing much music into little space is a major factor in

complexity of CMN

– Especially important for music: real-time, performer’s hands full

– Examples: Telemann, Debussy, Ravel (for all, reduce staves)

22 Feb. 06

52

Music Notation: Attempts at Standard Encodings (1)

• Structured encodings on computers (all CMN)

• Almost all are text; a few (e.g., NIFF) are binary

• Three “generations”

• Early (DARMS, MUSTRAN, SCORE, IML, etc.)

– From 1960’s: mainframe computers, batch processing (no

interactive editing), punch card input

– Logical and (usually limited) Graphic domains

– IML used for Princeton’s Josquin Masses project: first(?) music IR

– SCORE important in music publishing bcs emphasizes Graphic

– Mozart (“Twinkle” Variation no. 8) in MUSTRAN (first 5 notes)

• GS, K3$K, 2=4, WMPW, ‘Variation 8’, 8C+, 8D+, 8E+, 8F+, /, 4G+

• 2nd generation (Humdrum/kern, MuseData, Notelist, etc.)

– From 1970’s and 80’s: mini and early personal computers

– Added Performance domain

– Far more verbose: 1st 5 notes of Mozart in Notelist = c. 12 lines

27 Feb. 06

53

Music Notation: Attempts at Standard Encodings (2)

• Recent (3rd generation)

– NIFF, GUIDO, SMDL, MusicXML, MEI, etc.

– Almost all intended as interchange codes

– Some are extensible (GUIDO, MEI)

– Most based on XML

• Non-XML

– NIFF = Notation Interchange File Format

• Binary, not text—probably a mistake

• Mostly a flop: maybe because apparent complexity scared developers

– GUIDO

• text, but not XML

27 Feb. 06

54

GUIDO and Representational Adequacy

• Representational adequacy: simple things have simple representations

– Simple (really “encodings”!) => almost as compact as MUSTRAN

– Mozart first 5 notes: [ \clef<"treble"> \key<-3> \meter<type="2/4"> c+2/8 d e& f g/4 ]

– …but with other symbols: [ \clef<"treble"> \text<"Variation 8"> \key<-3>

\meter<type="2/4"> \intens<"mf"> c+2/8 d e& f g/4 ]

• Design Layers: Basic (Logical domain only), Advanced (adds

Graphic), Extended (adds Performance, user extensible; unfinished?)

• “GUIDO Music Notation Fmt” talk: www.salieri.org/guido/doc.html

• GUIDO is (was?) an academic project => free, open-source

• GUIDO NoteServer: http://www.noteserver.org/noteserver.html

• Other GUIDO tools: MIDI => GMN, GMN => gif (like NoteServer)

– Used in Variations2 to display themes

• GUIDOXML exists, but never much done with it

– GUIDO’s developers never liked XML, felt it accomplished nothing

• GUIDO was fairly popular, but seems to be dying out—too bad

– Almost everyone else likes XML: cause and effect?

27 Feb. 06

55

Music Notation: Attempts at Standard Encodings (3)

• XML-based (concept of markup language)

– SGML = Standard Generalized Markup Language

– “Application” of SGML for music

• SMDL = Standard Music Description Language: early & v. powerful, but a flop

– XML = eXtensible Markup Language is hugely popular

– Applications of XML for music

• MusicXML is by far most popular; most verbose (5 notes of Mozart = 270 lines!)

• MEI also significant; others include MusiXML, MNML, NIFFML, etc. etc.

• Castan’s site www.music-notation.info lists programs importing &

exporting each encoding

– Gives an idea of which are most important/popular

– MusicXML is hands-down winner; next are GUIDO, NIFF, SCORE

27 Feb. 06

56

HTML, XML, and Markup Languages

• Basic limitation of HTML: too concrete

– Prescriptive, not descriptive

– Says what to show, but no way to say what “G minor” is (semantics)

– Limits reuse of information

• Solution: markup languages

– SGML = Standard Generalized Markup Language

– “Applications” of SGML

• HTML = HyperText Markup Language (Web pages)

• SMDL = Standard Music Description Language: early & v. powerful, but a flop

– XML = eXtensible Markup Language is hugely popular

• Subset of/simpler than SGML; mostly replaced it (except for HTML)

• Stricter than SGML => HTML replaced by XHTML

• Application defined by DTD or schema (cf. MusicXML’s)

• Musical metadata examples: iTunes Music Library.xml, Variations2

– Can say “G minor” is a “key”, but still no explicit semantics

• Improvement: the Semantic Web (?): see www.w3.org

– XML-based; still no real semantics, but more explicit structure

5 Mar. 06

57

MusicXML

• MusicXML from Michael Good/Recordare, Inc.

– Cf. www.recordare.com

• Designed as a “practical interchange format”

• Now the de facto standard encoding of CMN

– Interfaces to MusicPad, karaoke, etc., as well as notation programs

• “Recordare editions” (songs) & examples on Web site

– http://www.recordare.com/xml/samples.html

• Heavily influenced by MuseData; also Humdrum/kern(?)

– => Conversion to/from can be very high-quality

– Dolet for Finale does MusicXML <=> Finale

– Dolet 1.0 also did MuseData (CCARH encoding)

• 1.0 emphasizes Logical; much Performance info too

• MusicXML 1.1 improves formatting (Graphical)

• Also has support for tablature

3 Mar. 06

58

MusicXML and Variations2

• Real-world situation: choice of encoding for Variations2

– In Byrd & Isaacson Requirements, MusicXML 1.0 implemented

all “Required” and most “Very Desirable”; so did MEI; others?

– Decision came to “MusicXML vs. Everything Else” (Jan. 2004)

– Also considered MEI (Perry Roland), GUIDO, Humdrum/kern,

NIFF

– Did MusicXML 1.0 “have serious enough drawbacks, considering

the whole situation and not just the representation itself, to make

anything else worth considering?”

• Most important: software support

• ...other programs and ours (planned an integrated notation engine)

• Other considerations: complexity, migration paths (future

conversion), flexibility/extensibility, etc.

– Conclusion: no.

1 Mar. 06

59

Declarative vs. Procedural Representations

• Representation in cognition: Hofstadter’s example

– Declarative: How many people live in Chicago?

– Procedural: How many chairs are there in your living room?

– Probably mixed: Recall of a melody

• Principle (and application to programming)

– Declarative: information is explicit (programming: what to do)

– Procedural: information is implicit (programming: how to do it)

– In programming languages, well-known ones are procedural

• All representations we’ve looked at are declarative

– Exception: possible to use CSound procedurally

– Very limited exception: repeat signs, D.C., D.S., etc.

• Procedural representations of music much more specialized

– Pla(?), Common Music, Stella, BP1(?)

14 Feb.

60

Declarative vs. Procedural Representations and

Variations2

• Wiggins et al. (1993) give three sorts of tasks:

1. recording: user wants record of musical object, to be retrieved later

2. analysis: user wants analyzed version, not “raw” musical object

3. generation/composition

• Variations2 concerned most with 1st, less with 2nd, least with

3rd

• Declarative representations (not procedural) are much more

appropriate for 1st and usually 2nd => considered only

declarative for Variations2

• Cf. Byrd & Isaacson Requirements

14 Feb.

61

Procedural Representation of a Simplified Version of

the Mozart Example

• In C-like syntax

/* Semitone offsets of the natural minor scale above its tonic */
int scale[] = { 0, 2, 3, 5, 7, 8, 10 };

/* Assumed to be supplied by the playback system */
void PlayNote(float time, int noteNum, float duration);

/* Play the first four scale degrees upward from startNoteNum */
void PlayTetrachord(float startTime, int startNoteNum, float duration)
{
    float time = startTime;
    for (int i = 0; i < 4; i++) {
        PlayNote(time, startNoteNum + scale[i], duration);
        time += duration;
    }
}

int main(void)
{
    PlayTetrachord(0, 72, .5);
    PlayNote(2, 72 + scale[4], 1);
    PlayNote(3, 72 + scale[4], 2);
    PlayTetrachord(4, 53, .5);
    return 0;
}

rev. 15 Feb.

62

Music Collections: Available or Not

• Cf. “Candidate Music IR Test Collections” list

– http://mypage.iu.edu/~donbyrd/MusicTestCollections.HTML

• How much music content is available in digital form?

– In audio form, lots and lots

• At cost: iTunes, Rhapsody, Napster, etc. (millions of tracks)

• “Free”: Classical Music Archives, etc. (tens of 1000s of tracks)

– In event (mostly MIDI file) form, far less

• At cost: ??

• “Free”: Classical Music Archives, etc. (tens of 1000s of tracks)

– In notation (CMN and tablature) form, similar to event form

• At cost: Sunhawk, MusicNotes, etc. (tens of 1000s of movements)

• Free: CCARH (1000s of mvmts), “tab” sites (tens of 1000s)

• Not even much in notation form that’s not available; why?

– No standard encoding (until now) => must usually convert

– Much smaller audience for notation than audio

2 Mar. 06

63

Encoding & Representation Conversion (1)

• Converting specific representations should be easier than

basic representations

– Example: CMN => tablature or vice-versa

• Converting encodings of the same representation should be

easier than specific representations

– Example: MusicXML <=> Finale; MP3 <=> .wav

• Generally true, but:

– Any encoding of audio to any other is relatively simple

– Likewise for time-stamped events

– Notation is by far most difficult: with complex music, big problem

• Examples

– Nightingale to MIDI File, back to Nightingale

– Finale equivalents with MusicXML and MIDI File

3 Mar. 06

64

Encoding & Representation Conversion (2)

• Nightingale to Notelist, back to Nightingale

– Loses text font/position, articulation mark and slur position,

instrument names, etc.

• Nightingale to MIDI File, back to Nightingale

– Totally loses text, articulation marks and slurs, etc.—but keeps

instrument names!

– Distorts logical note duration (uses approx. performance duration);

loses note spelling, clefs, dynamic marks.

• Finale equivalents with MusicXML and MIDI File

– Finale/MusicXML probably better than Nightingale/Notelist

– Finale/MIDI can be better than Nightingale/MIDI—but why?

• Both representations and programs lose/distort information!

• Murphy’s Law(?): “Anything that can go wrong, will.”

rev. 22 Feb.

65

Encoding Conversion Demo (1)

ORIGINAL

Nightingale: Open Webern example

20 Feb.

NOTELIST

66

Encoding Conversion Demo (2)

NOTELIST

MIDI

Nightingale: Open Webern, Save Notelist, Open Notelist

20 Feb.

67

Representation Conversion Demo

MIDI

Nightingale: Open Webern, Export MIDI File, Import MIDI File

20 Feb.

68

Encoding & Representation Conversion

• Why is information distorted/lost?

• Two steps in getting from one program to another

• 1. Export: notation program converts internal form to the one it’s

saving

– If the format it’s saving can’t represent some information, it can’t put that

information into the file; but even if format can, program might not do it

correctly—or might not try.

• 2. Import: notation program converts format it’s opening into its

internal form

– Even if some kind of information is in the file and program can handle it,

it might not do it correctly—or might not try.

• What information is distorted/lost because of limits of encoding vs.

limits of programs?

• Can tell only by looking at the encoding, and that may not be easy

• Example: notation program => MIDI file => same notation program

– MIDI files can handle text, but both Nightingale & (default) Finale lose it!

3 Mar. 06

69

Converting to Notation from Audio or “Non-music”

• Audio Music Recognition (AMR)

– Monophonic (not too hard) vs. polyphonic (very hard)

– Programs available since ca. 2000, but little visible progress

– Still subject of much serious research

– Not “ready for prime time” for anything but monophonic

• Optical Music Recognition (OMR)

– Landmark report by Selfridge-Field et al (1994)

– Programs available since ca. 1994; significant progress

– Table of programs available on my Web site

– Still subject of some serious research, e.g., Byrd & Schindele

– Far more promising than AMR in near future

• Chris Raphael (1999): “OMR is orders of magnitude easier”

6 Mar. 06

70

OMR: The State of the Art (1)

• Byrd & Schindele (2006), Prospects for Improving OMR with Multiple Recognizers, proposes multiple-recognizer OMR (MROMR)

– Recognition = segmentation + classification

– Programs studied: PhotoScore, SharpEye, SmartScore

– Hand error count (with “feel”, some automatic) of not much music

– Cf. Craig Sapp’s error analysis of Mozart page with SharpEye

• http://craig.sapp.org/omr/sharpeye/mozson13/

– Main conclusions

• MROMR development requires much music => automatic

comparison of results

• Will “always” need access to specialized editing UI to correct errors

– Probably not hard to get MROMR output back into SharpEye

• SharpEye is most accurate, but error rates still too high

• MROMR is worth trying

• Evaluation is a major roadblock

– Difficult to evaluate: see Droettboom & Fujinaga, etc.

– Not even a standard test database(s)

– But prospects for improvement are good

8 Mar. 06

71

OMR: The State of the Art (2)

• Practical now for much music, but nowhere near all

• Bill Clemmons’ 1-to-10 scale of music suitability for OMR

(2004)

– “SmartScore works amazingly well for some things and so badly

with others that creating a file from scratch is less time consuming”

– Has students scan standard SATB choral octavo, parts &

accompaniment (1), & piano reduction of Parsifal prelude (10)

– Choral octavo is so clean, can get accurate file after only a handful

of corrections; Parsifal import is essentially unusable

• Lots of already-scanned images: in Variations2, CD Sheet

Music, on the Web

5 Mar. 06

72