Computational Grids

ITCS 4010 Grid Computing, 2005, UNC-Charlotte, B. Wilkinson.

12.1

Computational Problems

• Problems that have lots of computations

and usually lots of data.

12.2

Demand for Computational Speed

• Continual demand for greater computational

speed from a computer system than is

currently possible

• Areas requiring great computational speed

include numerical modeling and simulation of

scientific and engineering problems.

• Computations must be completed within a

“reasonable” time period.

12.3

Grand Challenge Problems

One that cannot be solved in a reasonable

amount of time with today’s computers.

Obviously, an execution time of 10 years is

always unreasonable.

Examples

• Modeling large DNA structures

• Global weather forecasting

• Modeling motion of astronomical bodies.

12.4

Weather Forecasting

• Atmosphere modeled by dividing it into 3-dimensional cells.

• Calculations of each cell repeated many

times to model passage of time.

12.5

Global Weather Forecasting Example

• Suppose whole global atmosphere divided into cells of size 1 mile × 1 mile × 1 mile to a height of 10 miles (10 cells high) - about $5 \times 10^8$ cells.

• Suppose each calculation requires 200 floating point operations. In one time step, $10^{11}$ floating point operations necessary.

• To forecast the weather over 7 days using 1-minute intervals, a computer operating at 1 Gflops ($10^9$ floating point operations/s) takes $10^6$ seconds or over 10 days.

• To perform calculation in 5 minutes requires computer operating at 3.4 Tflops ($3.4 \times 10^{12}$ floating point operations/sec).
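The arithmetic in this example can be checked with a few lines of C. This is only a sketch: the function names are made up for illustration, and the inputs are the slide's own figures ($5 \times 10^8$ cells, 200 operations per cell, 7 days of 1-minute steps).

```c
/* Floating point operations needed for one time step. */
double flops_per_step(double cells, double flops_per_cell) {
    return cells * flops_per_cell;
}

/* Seconds needed to run `steps` time steps on a machine
   sustaining `rate` floating point operations per second. */
double forecast_seconds(double cells, double flops_per_cell,
                        double steps, double rate) {
    return flops_per_step(cells, flops_per_cell) * steps / rate;
}

/* Rate (operations per second) needed to finish `steps`
   time steps within `deadline` seconds. */
double required_rate(double cells, double flops_per_cell,
                     double steps, double deadline) {
    return flops_per_step(cells, flops_per_cell) * steps / deadline;
}
```

With 7 × 24 × 60 = 10080 steps, `forecast_seconds(5e8, 200, 10080, 1e9)` gives about $10^6$ seconds, and `required_rate(5e8, 200, 10080, 300)` gives about $3.4 \times 10^{12}$ operations/s, in line with the figures above.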

12.6

Modeling Motion of Astronomical Bodies

• Each body attracted to each other body by

gravitational forces. Movement of each body

predicted by calculating total force on each

body.

• With N bodies, N - 1 forces to calculate for each body, or approx. $N^2$ calculations. ($N \log_2 N$ for an efficient approx. algorithm.)

• After determining new positions of bodies,

calculations repeated.

12.7

• A galaxy might have, say, $10^{11}$ stars.

• Even if each calculation done in 1 µs (extremely optimistic figure), it takes $10^9$ years for one iteration using the $N^2$ algorithm and almost a year for one iteration using an efficient $N \log_2 N$ approximate algorithm.

12.8

Astrophysical N-body simulation by Scott Linssen (undergraduate

UNC-Charlotte student).

12.9

High Performance Computing

(HPC)

• Traditionally, achieved by using multiple computers together - parallel computing.

• Simple idea! -- Using multiple computers (or processors) simultaneously should be able to solve the problem faster than a single computer.

12.10

High Performance Computing

• Long History:

– Multiprocessor systems of various types (1950’s onwards)

– Supercomputers (1960s-80’s)

– Cluster computing (1990’s)

– Grid computing (2000’s) ??

Maybe, but let’s first look at how to achieve HPC.

12.11

Speedup Factor

$$S(p) = \frac{\text{Execution time using one processor (best sequential algorithm)}}{\text{Execution time using a multiprocessor with } p \text{ processors}} = \frac{t_s}{t_p}$$

where $t_s$ is execution time on a single processor and $t_p$ is execution time on a multiprocessor.

S(p) gives increase in speed by using multiprocessor.

Use best sequential algorithm with single processor

system. Underlying algorithm for parallel implementation

might be (and is usually) different.

12.12

Maximum Speedup

Maximum speedup is usually p with p

processors (linear speedup).

Possible to get superlinear speedup (greater

than p) but usually a specific reason such as:

• Extra memory in multiprocessor system

• Nondeterministic algorithm

12.13

Maximum Speedup

Amdahl’s law

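[Figure: (a) One processor: total time $t_s$, made up of a serial section $f t_s$ and parallelizable sections $(1 - f) t_s$. (b) Multiple processors ($p$ processors): total time $t_p$, made up of the serial section $f t_s$ and the parallelized sections $(1 - f) t_s / p$.]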

12.14

Speedup factor is given by:

$$S(p) = \frac{t_s}{f t_s + (1 - f) t_s / p} = \frac{p}{1 + (p - 1) f}$$

This equation is known as Amdahl’s law
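Amdahl's law is easy to evaluate directly. The function below is a minimal sketch (the name `amdahl_speedup` is chosen here for illustration), taking the serial fraction f as a value between 0 and 1.

```c
/* Speedup predicted by Amdahl's law, S(p) = p / (1 + (p - 1) f),
   for serial fraction f (0.0 to 1.0) and p processors. */
double amdahl_speedup(double f, int p) {
    return (double)p / (1.0 + (p - 1) * f);
}
```

For example, `amdahl_speedup(0.05, 20)` is a little over 10, so with 5% serial code, 20 processors give only about half of the linear speedup.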

12.15

Speedup against number of processors

[Figure: Speedup factor, S(p), plotted against number of processors, p (both axes marked from 4 to 20), with curves for f = 0%, f = 5%, f = 10%, and f = 20%.]

12.16

Even with infinite number of processors,

maximum speedup limited to 1/f.

Example

With only 5% of computation being serial,

maximum speedup is 20, irrespective of number

of processors.
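The 1/f limit follows from Amdahl's law, $S(p) = p / (1 + (p - 1) f)$, by letting p grow without bound. A short derivation, added here as an illustrative sketch:

```latex
\lim_{p \to \infty} S(p)
  = \lim_{p \to \infty} \frac{p}{1 + (p - 1) f}
  = \lim_{p \to \infty} \frac{1}{\frac{1 - f}{p} + f}
  = \frac{1}{f},
\qquad
f = 0.05 \;\Rightarrow\; S_{\max} = \frac{1}{0.05} = 20 .
```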

12.17

Superlinear Speedup

Example - Searching

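(a) Searching each sub-space sequentially

[Figure: Timeline from start. Each sub-space search takes $t_s/p$. The solution is found a time $\Delta t$ into one sub-space search, after x earlier sub-space searches taking $x \times t_s/p$ in total (x indeterminate).]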

12.18

(b) Searching each sub-space in parallel

[Figure: All sub-spaces searched at the same time. Solution found after time $\Delta t$.]

12.19

Question

What is the speed-up now?

12.20

Speed-up then given by

$$S(p) = \frac{x \times \frac{t_s}{p} + \Delta t}{\Delta t}$$

12.21

Worst case for sequential search when solution

found in last sub-space search.

Then parallel version offers greatest benefit, i.e.

$$S(p) = \frac{\left(\frac{p - 1}{p}\right) t_s + \Delta t}{\Delta t} \rightarrow \infty$$

as $\Delta t$ tends to zero

12.22

Least advantage for parallel version when

solution found in first sub-space search of

the sequential search, i.e.

$$S(p) = \frac{\Delta t}{\Delta t} = 1$$

Actual speed-up depends upon which

subspace holds solution but could be

extremely large.
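The dependence on which sub-space holds the solution can be seen by evaluating the speed-up formula for different values of x. A small sketch, with a hypothetical function name and illustrative numbers:

```c
/* Speed-up of the parallel search over the sequential search.
   x  : number of sub-spaces searched completely before the one
        holding the solution (0 to p - 1)
   ts : time to search the whole space sequentially
   p  : number of sub-spaces (and processors)
   dt : time into the final sub-space search at which the solution is found */
double search_speedup(int x, double ts, int p, double dt) {
    return (x * (ts / p) + dt) / dt;
}
```

With ts = 100, p = 4, and dt = 1, the best case for the sequential search (x = 0) gives a speed-up of 1, while the worst case (x = 3) gives 76, far more than the 4 processors used.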

12.23

Computing Platforms for

Parallel Programming

12.24

Types of Parallel Computers

Two principal types:

1. Single computer containing multiple

processors - main memory is shared, hence

called “Shared memory multiprocessor”

2. Interconnected multiple computer systems

12.25

Conventional Computer

Consists of a processor executing a program stored

in a (main) memory:

[Figure: Main memory connected to a processor. Instructions flow to the processor; data flows to or from the processor.]

Each main memory location located by its address. Addresses start at 0 and extend to $2^b - 1$ when there are b bits (binary digits) in address.

12.26

Shared Memory Multiprocessor

• Extend single processor model - multiple

processors connected to a single shared memory

with a single address space:

[Figure: Several processors connected to one shared memory.]

A real system will have cache memory

associated with each processor

12.27

Examples

• Dual Pentiums

• Quad Pentiums

12.28

Quad Pentium Shared

Memory Multiprocessor

[Figure: Four processors, each with its own L1 cache, L2 cache, and bus interface, connected by a processor/memory bus to a memory controller (leading to the shared memory) and to an I/O interface (leading to the I/O bus).]

12.29

Programming Shared Memory

Multiprocessors

• Threads - programmer decomposes program into

parallel sequences (threads), each being able to

access variables declared outside threads.

Example: Pthreads

• Use sequential programming language with

preprocessor compiler directives, constructs,

or syntax to declare shared variables and

specify parallelism.

Examples: OpenMP (an industry standard), UPC

(Unified Parallel C) -- needs compilers.

12.30

• Parallel programming language with syntax

to express parallelism. Compiler creates

executable code -- not now common.

• Use parallelizing compiler to convert regular

sequential language programs into parallel

executable code - also not now common.

12.31

Message-Passing Multicomputer

Complete computers connected through an

interconnection network:

[Figure: Computers, each consisting of a processor and local memory, exchanging messages through an interconnection network.]

12.32

Dedicated cluster with a

master node

[Figure: A user on the external network reaches the cluster through the master node's 2nd Ethernet interface. The master node's Ethernet interface connects through a switch to the compute nodes.]

12.33

UNC-C’s cluster used for grid course

(Department of Computer Science)

[Figure: Four 3.4 GHz dual Xeon Pentium nodes, coit-grid01 to coit-grid04, each with memory (M) and two processors (P), connected through a switch, with a link to the external network.]

Funding for this cluster provided by the University of North Carolina, Office of the President, specifically for the grid computing course.

12.34

Programming Clusters

• Usually based upon explicit message passing.

• Common approach -- a set of user-level

libraries for message passing. Example:

– Parallel Virtual Machine (PVM) - late 1980’s.

Became very popular in mid 1990’s.

– Message-Passing Interface (MPI) - standard

defined in 1990’s and now dominant.

12.35

MPI

(Message Passing Interface)

• Message passing library standard

developed by group of academics and

industrial partners to foster more

widespread use and portability.

• Defines routines, not implementation.

• Several free implementations exist.

12.36

MPI designed:

• To address some problems with earlier

message-passing system such as PVM.

• To provide powerful message-passing

mechanism and routines - over 126

routines

(although it is said that one can write reasonable MPI

programs with just 6 MPI routines).

12.37

Message-Passing Programming using

User-level Message Passing Libraries

Two primary mechanisms needed:

1. A method of creating separate processes for

execution on different computers

2. A method of sending and receiving messages

12.38

Multiple program, multiple data (MPMD)

model

[Figure: Separate source files, each compiled to suit its processor, giving a separate executable for each of Processor 0 to Processor p - 1.]

12.39

Single Program Multiple Data (SPMD) model

Different processes merged into one program. Control statements select different parts for each processor to execute. This is the basic MPI way.

[Figure: One source file, compiled to suit each processor, giving executables for Processor 0 to Processor p - 1.]

12.40

Multiple Program Multiple Data (MPMD) Model

Separate programs for each processor. One processor

executes master process. Other processes started from within

master process - dynamic process creation.

[Figure: Over time, process 1 calls spawn(), which starts execution of process 2.]

Can be done with MPI version 2.

12.41

Communicators

• Defines scope of a communication operation.

• Processes have ranks associated with

communicator.

• Initially, all processes enrolled in a “universe”

called MPI_COMM_WORLD, and each

process is given a unique rank, a number from

0 to p - 1, with p processes.

• Other communicators can be established for

groups of processes.

12.42

Using SPMD Computational Model

int main(int argc, char *argv[]) {
    int myrank;
    MPI_Init(&argc, &argv);
    .
    .
    MPI_Comm_rank(MPI_COMM_WORLD, &myrank); /* find rank */
    if (myrank == 0)
        master();
    else
        slave();
    .
    .
    MPI_Finalize();
    return 0;
}

where master() and slave() are to be executed by master

process and slave process, respectively.

12.43

Basic “point-to-point”

Send and Receive Routines

Passing a message between processes using

send() and recv() library calls:

[Figure: Process 1 executes send(&x, 2); and process 2 executes recv(&y, 1); the data moves from x in process 1 to y in process 2.]

Generic syntax (actual formats later)

12.44

Message Tag

• Used to differentiate between different types

of messages being sent.

• Message tag is carried within message.

• If special type matching is not required, a wild

card message tag is used, so that the recv()

will match with any send().

12.45

Message Tag Example

To send a message, x, with message tag 5 from a

source process, 1, to a destination process, 2, and

assign to y:

[Figure: Process 1 executes send(&x, 2, 5); and process 2 executes recv(&y, 1, 5); the data moves from x to y. The recv() waits for a message from process 1 with a tag of 5.]

12.46

Synchronous Message Passing

Routines return when message transfer

completed.

Synchronous send routine

• Waits until complete message can be accepted by

the receiving process before sending the

message.

Synchronous receive routine

• Waits until the message it is expecting arrives.

12.47

Synchronous send() and recv() using 3-way

protocol

[Figure (a): When send() occurs before recv(). Process 1 reaches send() first and is suspended until process 2 reaches recv(). Transfers shown between the processes: request to send, acknowledgment, message. Both processes then continue.]

12.48

Synchronous send() and recv() using 3-way

protocol

[Figure (b): When recv() occurs before send(). Process 2 reaches recv() first and is suspended until process 1 reaches send(). Transfers shown between the processes: request to send, message, acknowledgment. Both processes then continue.]

12.49

• Synchronous routines intrinsically

perform two actions:

– They transfer data and

– They synchronize processes.

12.50

Asynchronous Message Passing

• Routines that do not wait for actions to complete

before returning. Usually require local storage

for messages.

• More than one version depending upon the

actual semantics for returning.

• In general, they do not synchronize processes

but allow processes to move forward sooner.

Must be used with care.

12.51

MPI Blocking and Non-Blocking

• Blocking - return after their local actions

complete, though the message transfer may not

have been completed.

• Non-blocking - return immediately. Assumes

that data storage used for transfer not modified

by subsequent statements prior to being used for

transfer, and it is left to the programmer to ensure

this.

These terms may have different interpretations in

other systems.

12.52

How message-passing routines return

before message transfer completed

Message buffer needed between source and

destination to hold message:

[Figure: Process 1 executes send(), which places the message in a message buffer, and continues. Later, process 2 executes recv() and reads the message buffer.]

12.53

Asynchronous routines changing to

synchronous routines

• Buffers only of finite length and a point could

be reached when send routine held up

because all available buffer space exhausted.

• Then, send routine will wait until storage becomes re-available - i.e., then routine behaves as a synchronous routine.

12.54

Parameters of MPI blocking send

MPI_Send(buf, count, datatype, dest, tag, comm)

buf         Address of send buffer
count       Number of items to send
datatype    Datatype of each item
dest        Rank of destination process
tag         Message tag
comm        Communicator

12.55

Parameters of MPI blocking receive

MPI_Recv(buf, count, datatype, src, tag, comm, status)

buf         Address of receive buffer
count       Max. number of items to receive
datatype    Datatype of each item
src         Rank of source process
tag         Message tag
comm        Communicator
status      Status after operation

12.56

Example

To send an integer x from process 0 to process 1,

MPI_Comm_rank(MPI_COMM_WORLD, &myrank); /* find rank */
if (myrank == 0) {
    int x;
    MPI_Send(&x, 1, MPI_INT, 1, msgtag, MPI_COMM_WORLD);
} else if (myrank == 1) {
    int x;
    MPI_Status status;
    MPI_Recv(&x, 1, MPI_INT, 0, msgtag, MPI_COMM_WORLD, &status); /* status passed by address */
}

12.57

MPI Nonblocking Routines

• Nonblocking send - MPI_Isend() - will return

“immediately” even before source location is

safe to be altered.

• Nonblocking receive - MPI_Irecv() - will

return even if no message to accept.

12.58

Detecting completion of a message sent with a non-blocking send routine

Completion detected by MPI_Wait() and MPI_Test().

MPI_Wait() waits until operation completed and returns

then.

MPI_Test() returns with flag set indicating whether

operation completed at that time.

Need to know which particular send you are waiting for.

Identified with request parameter.

12.59

Example

To send an integer x from process 0 to process 1 and

allow process 0 to continue,

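MPI_Comm_rank(MPI_COMM_WORLD, &myrank); /* find rank */
if (myrank == 0) {
    int x;
    MPI_Request req1;
    MPI_Status status;
    MPI_Isend(&x, 1, MPI_INT, 1, msgtag, MPI_COMM_WORLD, &req1);
    compute();                  /* must not modify x */
    MPI_Wait(&req1, &status);   /* request and status passed by address */
} else if (myrank == 1) {
    int x;
    MPI_Status status;
    MPI_Recv(&x, 1, MPI_INT, 0, msgtag, MPI_COMM_WORLD, &status);
}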

12.60

“Group” message passing routines

Have routines that send message(s) to a group

of processes or receive message(s) from a

group of processes

Higher efficiency than separate point-to-point

routines although not absolutely necessary.

12.61

Broadcast

Sending same message to a group of processes.

(Sometimes “Multicast” - sending same message to

defined group of processes, “Broadcast” - to all processes.)

[Figure: Action and code. Process 0 to process p - 1 each call MPI_Bcast() (the MPI form); the contents of buf in the root are copied to data in every process.]

12.62

MPI Broadcast routine

int MPI_Bcast(void *buf, int count,

MPI_Datatype datatype, int root, MPI_Comm

comm)

Actions:

Broadcasts message from root process to all processes in comm, including itself.

Parameters:

*buf        message buffer
count       number of entries in buffer
datatype    data type of buffer
root        rank of root

12.63

Scatter

Sending each element of an array in root process

to a separate process. Contents of ith location of

array sent to ith process.

[Figure: Action and code. Process 0 to process p - 1 each call MPI_Scatter() (the MPI form); successive elements of buf in the root are sent to data in each process.]

12.64

Gather

Having one process collect individual values

from set of processes.

[Figure: Action and code. Process 0 to process p - 1 each call MPI_Gather() (the MPI form); data from each process is collected into buf in the root.]

12.65

Reduce

Gather operation combined with specified

arithmetic/logical operation.

Example: Values could be gathered and then

added together by root:

[Figure: Action and code. Process 0 to process p - 1 each call MPI_Reduce() (the MPI form); data from each process is combined, here with +, into buf in the root.]

12.66

Collective Communication

Involves set of processes, defined by an intra-communicator.

Message tags not present. Principal collective operations:

• MPI_Bcast() - Broadcast from root to all other processes
• MPI_Gather() - Gather values for group of processes
• MPI_Scatter() - Scatters buffer in parts to group of processes
• MPI_Alltoall() - Sends data from all processes to all processes
• MPI_Reduce() - Combine values on all processes to single value
• MPI_Reduce_scatter() - Combine values and scatter results
• MPI_Scan() - Compute prefix reductions of data on processes

12.67

Example

To gather items from group of processes into process

0, using dynamically allocated memory in root

process:

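int data[10];       /* data to be gathered from processes */
int *buf = NULL;    /* receive buffer, significant only at root */
int myrank, grp_size;

MPI_Comm_rank(MPI_COMM_WORLD, &myrank);         /* find rank */
if (myrank == 0) {
    MPI_Comm_size(MPI_COMM_WORLD, &grp_size);   /* find size */
    buf = (int *)malloc(grp_size * 10 * sizeof(int));   /* allocate memory */
}
/* the receive count (10) is the number of items received from each process */
MPI_Gather(data, 10, MPI_INT, buf, 10, MPI_INT, 0, MPI_COMM_WORLD);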

MPI_Gather() gathers from all processes, including root.

12.68

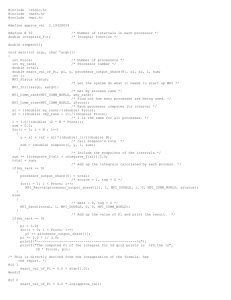

#include “mpi.h”

#include <stdio.h>

#include <math.h>

#define MAXSIZE 1000

void main(int argc, char *argv) {

int myid, numprocs;

int data[MAXSIZE], i, x, low, high, myresult, result;

char fn[255];

char *fp;

MPI_Init(&argc,&argv);

MPI_Comm_size(MPI_COMM_WORLD,&numprocs);

MPI_Comm_rank(MPI_COMM_WORLD,&myid);

if (myid == 0) { /* Open input file and initialize data */

strcpy(fn,getenv(“HOME”));

strcat(fn,”/MPI/rand_data.txt”);

if ((fp = fopen(fn,”r”)) == NULL) {

printf(“Can’t open the input file: %s\n\n”, fn);

exit(1);

}

for(i = 0; i < MAXSIZE; i++) fscanf(fp,”%d”, &data[i]);

}

MPI_Bcast(data, MAXSIZE, MPI_INT, 0, MPI_COMM_WORLD); /* broadcast data */

x = n/nproc; /* Add my portion Of data */

low = myid * x;

high = low + x;

for(i = low; i < high; i++)

myresult += data[i];

printf(“I got %d from %d\n”, myresult, myid); /* Compute global sum */

MPI_Reduce(&myresult, &result, 1, MPI_INT, MPI_SUM, 0, MPI_COMM_WORLD);

if (myid == 0) printf(“The sum is %d.\n”, result);

MPI_Finalize();

}

Sample MPI program

12.69

Debugging/Evaluating Parallel

Programs Empirically

12.70

Visualization Tools

Programs can be watched as they are executed in

a space-time diagram (or process-time diagram):

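[Figure: Space-time diagram for Process 1, Process 2, and Process 3 against time, showing for each process the periods spent computing, waiting, and in message-passing system routines, with messages drawn between processes.]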

Visualization tools available for MPI. An example - Upshot

12.71

Evaluating Programs Empirically

Measuring Execution Time

To measure the execution time between point L1 and point

L2 in the code, we might have a construction such as

.
t1 = MPI_Wtime();               /* start */
.
.
t2 = MPI_Wtime();               /* end */
.
elapsed_time = t2 - t1;         /* elapsed time */
printf("Elapsed time = %5.2f seconds", elapsed_time);

MPI provides the routine MPI_Wtime() for returning time

(in seconds).

12.72

Executing MPI programs

• MPI version 1 standard does not address implementation and does not specify how programs are to be started, so each implementation has its own way.

12.73

Compiling/Executing MPI

Programs

Basics

For MPICH, use two commands:

• mpicc to compile a program

• mpirun to execute program

12.74

mpicc

Example

mpicc -o hello hello.c

compiles hello.c to create the executable hello.

mpicc is (probably) a script calling cc and hence

all regular cc flags can be attached.

12.75

mpirun

Example

mpirun -np 3 hello

executes 3 instances of hello on the local

machine (when using MPICH).

12.76

Using multiple computers

First create a file (say called “machines”) containing list of computers you want to use.

Example

coit-grid01.uncc.edu
coit-grid02.uncc.edu
coit-grid03.uncc.edu
coit-grid04.uncc.edu

12.77

Then specify machines file in mpirun command:

Example

mpirun -np 3 -machinefile machines hello

executes 3 instances of hello using the computers

listed in the file. (Scheduling will be round-robin unless

otherwise specified.)

12.78

MPI-2

• The MPI standard, version 2 does

recommend a command for starting MPI

programs, namely:

mpiexec -n # prog

where # is the number of processes and

prog is the program.

12.79

Sample MPI Programs

12.80

Hello World

Printing out rank of process

#include "mpi.h"

#include <stdio.h>

int main(int argc,char *argv[]) {

int myrank, numprocs;

MPI_Init(&argc, &argv);

MPI_Comm_rank(MPI_COMM_WORLD,&myrank);

MPI_Comm_size(MPI_COMM_WORLD,&numprocs);

printf("Hello World from process %d of %d\n",

myrank, numprocs);

MPI_Finalize();

return 0;

}

12.81

Question

Suppose this program is compiled as

helloworld and is executed on a single

computer with the command:

mpirun -np 4 helloworld

What would the output be?

12.82

Answer

Several possible outputs depending upon

order processes are executed.

Example

Hello World from process 2 of 4

Hello World from process 0 of 4

Hello World from process 1 of 4

Hello World from process 3 of 4

12.83

Adding communication to get process 0 to print all messages:

#include "mpi.h"
#include <stdio.h>
#include <string.h>

int main(int argc, char *argv[]) {
    int i, myrank, numprocs;
    char greeting[80];      /* message sent from slaves to master */
    MPI_Status status;

    MPI_Init(&argc, &argv);
    MPI_Comm_rank(MPI_COMM_WORLD, &myrank);
    MPI_Comm_size(MPI_COMM_WORLD, &numprocs);
    sprintf(greeting, "Hello World from process %d of %d\n", myrank, numprocs);
    if (myrank == 0) {      /* I am going to print out everything */
        printf("%s", greeting);     /* print greeting from proc 0 */
        for (i = 1; i < numprocs; i++) {    /* greetings in order */
            MPI_Recv(greeting, sizeof(greeting), MPI_CHAR, i, 1, MPI_COMM_WORLD,
                     &status);
            printf("%s", greeting);
        }
    } else {
        MPI_Send(greeting, (int)strlen(greeting) + 1, MPI_CHAR, 0, 1,
                 MPI_COMM_WORLD);
    }
    MPI_Finalize();
    return 0;
}

12.84

MPI_Get_processor_name()

Return name of processor executing code

(and length of string). Arguments:

MPI_Get_processor_name(char *name,int *resultlen)

Example

int namelen;                              /* length of name returned in here */
char procname[MPI_MAX_PROCESSOR_NAME];    /* name returned in here */
MPI_Get_processor_name(procname, &namelen);

12.85

Easy then to add name in greeting with:

sprintf(greeting, "Hello World from process %d of %d on %s\n", myrank, numprocs, procname);

12.86

Pinging processes and timing

Master-slave structure

#include <mpi.h>
#include <stdio.h>

void master(void);

void slave(void);

int main(int argc, char **argv){

int myrank;

printf("This is my ping program\n");

MPI_Init(&argc, &argv);

MPI_Comm_rank(MPI_COMM_WORLD, &myrank);

if (myrank == 0) {

master();

} else {

slave();

}

MPI_Finalize();

return 0;

}

12.87

Master routine

void master(void){

int x = 9;

double starttime, endtime;

MPI_Status status;

printf("I am the master - Send me a message when you receive this number %d\n", x);

starttime = MPI_Wtime();

MPI_Send(&x,1,MPI_INT,1,1,MPI_COMM_WORLD);

MPI_Recv(&x,1,MPI_INT,1,1,MPI_COMM_WORLD,&status);

endtime = MPI_Wtime();

printf("I am the master. I got this back %d \n", x);

printf("That took %f seconds\n",endtime - starttime);

}

12.88

Slave routine

void slave(void){

int x;

MPI_Status status;

printf("I am the slave - working\n");

MPI_Recv(&x,1,MPI_INT,0,1,MPI_COMM_WORLD,&status);

printf("I am the slave. I got this %d \n", x);

MPI_Send(&x, 1, MPI_INT, 0, 1, MPI_COMM_WORLD);

}

12.89

Example using collective routines

MPI_Bcast()

MPI_Reduce()

Adding numbers in a file.

12.90

#include "mpi.h"
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#define MAXSIZE 1000

int main(int argc, char *argv[]) {
    int myid, numprocs;
    int data[MAXSIZE], i, x, low, high, myresult = 0, result;
    char fn[255];
    FILE *fp;

    MPI_Init(&argc, &argv);
    MPI_Comm_size(MPI_COMM_WORLD, &numprocs);
    MPI_Comm_rank(MPI_COMM_WORLD, &myid);
    if (myid == 0) {        /* Open input file and initialize data */
        strcpy(fn, getenv("HOME"));
        strcat(fn, "/MPI/rand_data.txt");
        if ((fp = fopen(fn, "r")) == NULL) {
            printf("Can't open the input file: %s\n\n", fn);
            MPI_Abort(MPI_COMM_WORLD, 1);   /* stop all processes, not only this one */
        }
        for (i = 0; i < MAXSIZE; i++) fscanf(fp, "%d", &data[i]);
        fclose(fp);
    }
    MPI_Bcast(data, MAXSIZE, MPI_INT, 0, MPI_COMM_WORLD);   /* broadcast data */
    /* Add my portion of data (assumes numprocs divides MAXSIZE evenly) */
    x = MAXSIZE / numprocs;
    low = myid * x;
    high = low + x;
    for (i = low; i < high; i++)
        myresult += data[i];
    printf("I got %d from %d\n", myresult, myid);
    /* Compute global sum */
    MPI_Reduce(&myresult, &result, 1, MPI_INT, MPI_SUM, 0, MPI_COMM_WORLD);
    if (myid == 0) printf("The sum is %d.\n", result);
    MPI_Finalize();
    return 0;
}
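The program above gives each process the same number of elements using integer division, so if the number of processes does not divide the array size exactly, the leftover elements are not added by any process. One common remedy, sketched below, is to give one extra element to each of the first few processes. The helper name `block_range` is hypothetical and is not part of MPI.

```c
/* Compute the half-open index range [*low, *high) owned by process
   `rank` when n items are divided among p processes. The first
   n % p processes each receive one extra item. */
void block_range(int n, int p, int rank, int *low, int *high) {
    int base = n / p;       /* items every process receives */
    int extra = n % p;      /* leftover items to hand out */
    *low = rank * base + (rank < extra ? rank : extra);
    *high = *low + base + (rank < extra ? 1 : 0);
}
```

In the sample program, a call such as `block_range(MAXSIZE, numprocs, myid, &low, &high);` could replace the three lines that compute x, low, and high.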

12.91

C Program Command Line Arguments

A normal C program specifies command line

arguments to be passed to main with:

int main(int argc, char *argv[])

where

• argc is the argument count and

• argv[] is an array of character pointers.

– First entry is a pointer to program name

– Subsequent entries point to subsequent strings

on the command line.

12.92

MPI

C program command line arguments

• Implementations of MPI remove from

the argv array any command line

arguments used by the implementation.

• Note MPI_Init requires argc and argv

(specified as addresses)

12.93

Example

Getting Command Line Argument

#include "mpi.h"
#include <stdio.h>
#include <stdlib.h>

int main(int argc, char *argv[]) {
    int n;
    MPI_Init(&argc, &argv);
    /* get and convert character string argument to integer value */
    n = atoi(argv[1]);
    MPI_Finalize();
    return 0;
}

12.94

Executing MPI program with

command line arguments

mpirun -np 2 myProg 56 123

• mpirun -np 2 - removed by MPI, probably by MPI_Init()
• myProg - argv[0]
• 56 - argv[1]
• 123 - argv[2]

Remember these array elements hold pointers to the arguments.

12.95

More Information on MPI

• Books: “Using MPI: Portable Parallel Programming with the Message-Passing Interface,” 2nd ed., W. Gropp, E. Lusk, and A. Skjellum, The MIT Press, 1999.

• MPICH: http://www.mcs.anl.gov/mpi

• LAM MPI: http://www.lam-mpi.org

12.96

Parallel Programming Home

Page

http://www.cs.uncc.edu/par_prog

Gives step-by-step instructions for

compiling and executing programs, and

other information.

12.97

Grid-enabled MPI

12.98

Several versions of MPI developed for a

grid:

• MPICH-G, MPICH-G2

• PACX-MPI

MPICH-G2 is based on MPICH and uses

Globus.

12.99

MPI code for the grid

No difference in code from regular MPI

code.

Key aspect is MPI implementation:

• Communication methods

• Resource management

12.100

Communication Methods

• Implementation should take into account whether messages are between processors on the same computer or processors on different computers on the network.

• Pack messages into fewer, larger messages, even if this requires more computation.

12.101

MPICH-G2

• Complete implementation of MPI

• Can use existing MPI programs on a grid

without change

• Uses Globus to start tasks, etc.

• Version 2 a complete redesign from

MPICH-G for Globus 2.2 or later.

12.102

Compiling Application Program

As with regular MPI programs, compile on

each machine you intend to use and make

accessible to computers.

12.103

Running an MPICH-G2 Program

mpirun

• submits a Globus RSL script (Resource

Specification Language Script) to

launch application

• RSL script can be created by mpirun

or you can write your own.

• RSL script gives powerful mechanism to

specify different executables etc., but

low level.

12.104

mpirun

(with it constructing RSL script)

• Use if want to launch a single

executable on binary compatible

machines with a shared file system.

• Requires a “machines” file - a list of

computers to be used (and job

managers)

12.105

“Machines” file

• Computers listed by their Globus job manager service followed by optional maximum number of nodes (tasks) on that machine.

• If job manager omitted (i.e., just name

of computer), will default to Globus job

manager.

12.106

Location of “machines” file

• mpirun command expects the “machines” file

either in

– the directory specified by -machinefile flag

– the current directory used to execute the mpirun

command, or

– in <MPICH_INSTALL_PATH>/bin/machines

12.107

Running MPI program

• Uses the same command line as a

regular MPI program:

mpirun -np 25 my_prog

creates 25 tasks allocated on machines in “machines” file in a round-robin fashion.

12.108

Example

With the machines file containing:

"coit-grid01.uncc.edu" 4
"coit-grid02.uncc.edu" 5

and the command:

mpirun -np 10 myProg

the first 4 processes (jobs) would run on coit-grid01, the next 5 on coit-grid02, and the remaining one on coit-grid01.
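The allocation described in this example can be modeled in a few lines of C. This is an illustrative sketch of the behavior described above, not the MPICH-G2 code: the function name and array layout are assumptions, and every machine is assumed to list a maximum of at least 1.

```c
/* Assign np processes to machines in round-robin fashion.
   max_nodes[m] is the maximum number of processes placed on machine m
   per pass (the number after the machine name in the machines file).
   machine_of[i] receives the index of the machine running process i.
   Assumes nmachines >= 1 and every max_nodes[m] >= 1. */
void assign_machines(int np, int nmachines, const int *max_nodes,
                     int *machine_of) {
    int next = 0;   /* next process to place */
    while (next < np) {
        for (int m = 0; m < nmachines && next < np; m++) {
            for (int k = 0; k < max_nodes[m] && next < np; k++) {
                machine_of[next++] = m;
            }
        }
    }
}
```

With limits of 4 and 5 and np = 10, processes 0 to 3 go to the first machine, processes 4 to 8 to the second, and process 9 back to the first, matching the example.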

12.109

mpirun

with your own RSL script

• Necessary if machines not executing same

executable.

• Easiest way to create script is to modify

existing one.

• Use mpirun -dumprsl

– Causes the script to be printed out. Application program not launched.

12.110

Example

mpirun -dumprsl -np 2 myprog

will generate appropriate printout of an rsl

document according to the details of the

job from the command line and machine

file.

12.111

Given rsl file, myRSL.rsl, use:

mpirun -globusrsl myRSL.rsl

to submit modified script.

12.112

MPICH-G2 internals

• Processes allocated a “machine-local” number and a “grid global” number - translated into where process actually resides.

• Non-local operations use grid services.

• Local operations do not.

• globusrun command submits

simultaneous job requests

12.113

Limitations

• “machines” file limits computers to

those known - no discovery of

resources

• If machines heterogeneous, need

appropriate executables available, and

RSL script

• Speed an issue - original version, MPICH-G, slow.

12.114

More information on MPICH-G2

http://www.niu.edu/mpi

http://www.globus.org/mpi

http://www.hpclab.niu.edu/mpi/g2_body.htm

12.115

Parallel Programming

Techniques

Suitable for a Grid

12.116

Message-Passing on a Grid

• VERY expensive, sending data across

network costs millions of cycles

• Bandwidth shared with other users

• Links unreliable

12.117

Computational Strategies

• As a computing platform, a grid favors

situations with absolute minimum

communication between computers.

12.118

Strategies

With no/minimum communication:

• “Embarrassingly Parallel” Computations

– those computations which obviously can be

divided into parallel independent parts. Parts

executed on separate computers.

• Separate instance of the same problem

executing on each system, each using

different data

12.119

Embarrassingly Parallel Computations

A computation that can obviously be divided into a

number of completely independent parts, each of

which can be executed by a separate process(or).

[Figure: Input data feeds a set of independent processes, which produce the results.]

No communication or very little communication between processes.

Each process can do its tasks without any interaction with other processes

12.120

Monte Carlo Methods

• An embarrassingly parallel computation.

• Monte Carlo methods make use of random selections.

12.121

Simple Example: To calculate π

Circle formed within a square, with radius of 1. Square has sides 2 × 2.

[Figure: Circle of radius 1 inscribed in a square with sides of length 2. Total area of square = 4; area of circle = π.]

12.122

Ratio of area of circle to square given by

$$\frac{\text{Area of circle}}{\text{Area of square}} = \frac{\pi (1)^2}{2 \times 2} = \frac{\pi}{4}$$

• Points within square chosen randomly.

• Score kept of how many points happen to lie within circle.

• Fraction of points within circle will be $\pi/4$, given a sufficient number of randomly selected samples.
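A sequential version of this method fits in a few lines. The sketch below uses the standard C `rand()` generator for simplicity; the function name and the seed parameter are illustrative choices, and `rand()` is not a high-quality generator.

```c
#include <stdlib.h>

/* Estimate pi by choosing n random points in the square
   [-1, 1] x [-1, 1] and counting those inside the unit circle. */
double estimate_pi(long n, unsigned int seed) {
    long inside = 0;
    srand(seed);
    for (long i = 0; i < n; i++) {
        double x = 2.0 * rand() / RAND_MAX - 1.0;
        double y = 2.0 * rand() / RAND_MAX - 1.0;
        if (x * x + y * y <= 1.0)
            inside++;               /* point lies within circle */
    }
    /* fraction inside approximates pi/4 */
    return 4.0 * (double)inside / (double)n;
}
```

Each sample is independent of the others, so the loop could be split across processes, with each process counting its own points and the counts added at the end.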

12.123

Method actually computes an integral.

One quadrant of the construction can be

described by integral

$$\int_0^1 \sqrt{1 - x^2}\, dx = \frac{\pi}{4}$$

[Figure: Plot of $f(x)$, $y = \sqrt{1 - x^2}$, for x from 0 to 1, with both axes marked at 1.]

12.124

So can use method to compute any

integral!

Monte Carlo method very useful if

the function cannot be integrated

numerically (maybe having a large

number of variables).

12.125

Alternative (better) “Monte Carlo” Method

Use random values of x to compute f (x) and sum

values of f (x)

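[Figure: Curve y = f(x), with the area under the curve between $x_1$ and $x_2$.]

$$\text{Area} = \int_{x_1}^{x_2} f(x)\, dx = \lim_{N \to \infty} \frac{1}{N} \sum_{r=1}^{N} f(x_r)(x_2 - x_1)$$

where $x_r$ are randomly generated values of x between $x_1$ and $x_2$.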

12.126

Example

Computing the integral

$$I = \int_{x_1}^{x_2} (x^2 - 3x)\, dx$$

Sequential Code

sum = 0;
for (i = 0; i < N; i++) {           /* N random samples */
    xr = rand_v(x1, x2);            /* next random value */
    sum = sum + xr * xr - 3 * xr;   /* compute f(xr) */
}
area = (sum / N) * (x2 - x1);

rand_v(x1, x2) returns pseudorandom number between x1 and x2.
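A complete version of this sequential code might look as follows. It is a sketch only: `rand_v` is written here on top of the standard `rand()` function, and the names `integrand` and `mc_integrate` are illustrative assumptions.

```c
#include <stdlib.h>

/* Pseudorandom value between x1 and x2, built on rand(). */
double rand_v(double x1, double x2) {
    return x1 + (x2 - x1) * ((double)rand() / RAND_MAX);
}

/* The function being integrated: f(x) = x^2 - 3x. */
double integrand(double x) {
    return x * x - 3.0 * x;
}

/* Monte Carlo estimate of the integral of f(x) from x1 to x2,
   using n random samples. */
double mc_integrate(double x1, double x2, long n, unsigned int seed) {
    double sum = 0.0;
    srand(seed);
    for (long i = 0; i < n; i++)
        sum += integrand(rand_v(x1, x2));
    return (sum / n) * (x2 - x1);
}
```

For example, between 0 and 3 the exact value of the integral is 9 - 13.5 = -4.5, and `mc_integrate(0.0, 3.0, 1000000, 1)` should come out close to that.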

12.127

For parallelizing Monte Carlo code, must

address best way to generate random

numbers in parallel.

Can use SPRNG (Scalable Pseudorandom Number Generator) -- supposed

to be a good parallel random number

generator.

12.128

Executing separate problem

instances

In some application areas, same program

executed repeatedly - ideal if with

different parameters (“parameter sweep”)

Nimrod/G -- a grid broker project that

targets parameter sweep problems.

12.129

Techniques to reduce effects

of network communication

• Latency hiding with

communication/computation overlap

• Better to have fewer larger messages

than many smaller ones
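The advantage of fewer, larger messages can be seen with a simple cost model in which every message pays a fixed startup latency plus a transfer time proportional to its size. The model and the function below are assumptions made for illustration, not measurements of any real grid.

```c
/* Time to send total_bytes split evenly across nmsgs messages,
   assuming each message costs latency_s seconds of startup plus
   its size divided by bandwidth_Bps (bytes per second). */
double transfer_time(double total_bytes, int nmsgs,
                     double latency_s, double bandwidth_Bps) {
    return nmsgs * latency_s + total_bytes / bandwidth_Bps;
}
```

With a hypothetical latency of 0.05 s and a bandwidth of $10^7$ bytes/s, sending $10^6$ bytes as 1000 messages takes about 50.1 s under this model, while sending it as a single message takes about 0.15 s.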

12.130

Synchronous Algorithms

• Many traditional parallel algorithms

require the parallel processes to

synchronize at regular and frequent

intervals to exchange data and continue

from known points

This is bad for grid computations!!

All traditional parallel algorithms books have to

be thrown away for grid computing.

12.131

Techniques to reduce actual

synchronization communications

• Asynchronous algorithms

– Algorithms that do not use synchronization

at all

• Partially synchronous algorithms

– those that limit the synchronization, for

example only synchronize on every n

iterations

– Actually such algorithms known for many

years but not popularized.

12.132

Big Problems

“Grand challenge” problems

Most of the high profile projects on the grid involve problems that are so big, usually in number of data items, that they cannot be solved otherwise.

12.133

Examples

• High-energy physics
• Bioinformatics
• Medical databases
• Combinatorial chemistry
• Astrophysics

12.134

Workflow Technique

• Use functional decomposition - dividing problem into separate functions which take results from other functional units and pass on results to functional units - interconnection pattern depends upon the problem.

• Workflow - describes the flow of

information between the units.

12.135

Example

Climate Modeling

[Figure: Workflow for climate modeling, with units labeled Atmospheric Model, Atmospheric Circulation Model, Atmospheric Chemistry, Hydrology, Ocean Model, Oceanic Circulation Model, Ocean Chemistry, and Land Surface Model. Data flows between units are labeled: water vapor content, humidity, pressure, wind velocities, temperature; heating rates; wind stress, heat flux, water flux; sea surface temperature.]

12.136