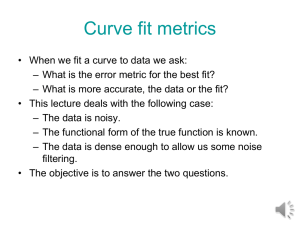

Curve fit metrics


• When we fit a curve to data we ask:
  – What is the error metric for the best fit?
  – Which is more accurate, the data or the fit?
• This lecture deals with the following case:
  – The data is noisy.
  – The functional form of the true function is known.
  – The data is dense enough to allow some noise filtering.
• The objective is to answer the two questions.

Curve fit
• We sample the function $y = x$ (in red) at $x = 1, 2, \dots, 30$, add noise with standard deviation 1, and fit a linear polynomial (blue).
• How would you check the statement that the fit is more accurate than the data?

[Figure: noisy samples of y = x at x = 1, 2, …, 30, with the true line in red and the linear fit in blue. With dense data, the functional form is clear; the fit serves to filter out noise.]

Regression
• The process of fitting data with a curve by minimizing the mean square difference from the data is known as regression.
• The term arose because the first paper to use regression dealt with a phenomenon called regression to the mean (http://www.jcu.edu.au/cgc/RegMean.html).
• The polynomial regression on the previous slide is a simple regression, where we know or assume the functional shape and need to determine only the coefficients.

Surrogate (metamodel)
• The algebraic function we fit to data is called a surrogate, metamodel, or approximation.
• Polynomial surrogates were invented in the 1920s to characterize crop yields in terms of inputs such as water and fertilizer.
• They were then called "response surface approximations."
• The term "surrogate" captures the purpose of the fit: using it instead of the data for prediction.
• Surrogates are most important when data is expensive and noisy, especially for optimization.

Surrogates for fitting simulations
• There is now great interest in fitting computer simulations.
• Computer simulations are also subject to (numerical) noise.
• Simulations are exactly repeatable, so the noise is hidden.
• Some surrogates (e.g. polynomial response surfaces) cater mostly to noisy data.
• Some (e.g. Kriging) interpolate the data.
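One way to check the claim from the 30-point curve-fit slide, that the fit is more accurate than the data, is to compare both against the known true function. The sketch below is a hedged illustration rather than the lecture's own code: the function name `compare_fit_to_data`, the seed, and the use of NumPy's `polyfit` are assumptions made for this example.

```python
import numpy as np

def compare_fit_to_data(seed=0, n=30, noise_std=1.0):
    """Compare noisy data and a linear least-squares fit against the true y = x.

    Hypothetical helper for illustration; seed and defaults are assumptions.
    """
    rng = np.random.default_rng(seed)
    x = np.arange(1, n + 1, dtype=float)        # x = 1, 2, ..., n
    y_true = x.copy()                           # true function y = x
    y_data = y_true + rng.normal(0.0, noise_std, size=n)

    # Least-squares linear polynomial; polyfit returns the slope first.
    b1, b0 = np.polyfit(x, y_data, deg=1)
    y_fit = b0 + b1 * x

    def rms(e):
        return float(np.sqrt(np.mean(e ** 2)))

    return {
        "data_vs_true": rms(y_data - y_true),   # rms of the noise itself
        "fit_vs_true": rms(y_fit - y_true),     # rms of the filtered result
        "coefficients": (float(b0), float(b1)),
    }

if __name__ == "__main__":
    print(compare_fit_to_data())
```

Because the true function here is itself linear, the error of the least-squares fit relative to the truth is the orthogonal projection of the noise onto the span of the basis functions, so its rms value cannot exceed that of the raw data. With a real experiment the true function is unknown, and held-out test points play the role of `y_true`.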
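Surrogates of given functional form
• Noisy response: $y = \hat{y}(\mathbf{x}, \mathbf{b}) + \varepsilon$
• Linear approximation: $\hat{y} = b_1 + b_2 x$
• Rational approximation: $\hat{y} = \dfrac{b_1}{x + b_2}$
• Data from $n_y$ experiments: $(\mathbf{x}_i, y_i)$, $i = 1, \dots, n_y$
• Error (fit) metrics:

$$e_{rms} = \sqrt{\frac{1}{n_y}\sum_{i=1}^{n_y}\left(y_i - \hat{y}(\mathbf{x}_i, \mathbf{b})\right)^2}$$

$$e_{av} = \frac{1}{n_y}\sum_{i=1}^{n_y}\left|y_i - \hat{y}(\mathbf{x}_i, \mathbf{b})\right|$$

$$e_{max} = \max_{\mathbf{x}_i}\left|y_i - \hat{y}(\mathbf{x}_i, \mathbf{b})\right|$$

Linear Regression
• Functional form: $\hat{y} = \sum_{i=1}^{n_b} b_i \xi_i(\mathbf{x})$
• For the linear approximation: $\xi_1 = 1$, $\xi_2 = x$
• Error or difference between data and surrogate: $e_j = y_j - \sum_{i=1}^{n_b} b_i \xi_i(\mathbf{x}_j)$, or in matrix form $\mathbf{e} = \mathbf{y} - X\mathbf{b}$, where $X_{ji} = \xi_i(\mathbf{x}_j)$
• Rms error: $e_{rms} = \sqrt{\frac{1}{n_y}\mathbf{e}^T\mathbf{e}}$
• Minimize the rms error: $\mathbf{e}^T\mathbf{e} = (\mathbf{y} - X\mathbf{b})^T(\mathbf{y} - X\mathbf{b})$
• Differentiate to obtain $X^T X \mathbf{b} = X^T \mathbf{y}$
• Beware of ill-conditioning!

Example
• Data: $y(0) = 0$, $y(1) = 1$, $y(2) = 0$
• Fit the linear polynomial $\hat{y} = b_0 + b_1 x$. Matching the data would require

$$b_0 = 0, \qquad b_0 + b_1 = 1, \qquad b_0 + 2b_1 = 0$$

• These three conditions cannot all hold, so the normal equations $X^T X \mathbf{b} = X^T \mathbf{y}$ give

$$3b_0 + 3b_1 = 1, \qquad 3b_0 + 5b_1 = 1$$

• Obtain $b_0 = 1/3$, $b_1 = 0$, so $\hat{y} = 1/3$.
• The surrogate preserves the average value of the data at the data points.

Other metric fits
• Assume the other fits also lead to the constant form $\hat{y} = b$.
• For the average error, minimize $3e_{av} = |0 - b| + |1 - b| + |0 - b|$. Obtain $b = 0$.
• For the maximal error, minimize $e_{max} = \max\left(|0 - b|, |1 - b|, |0 - b|\right)$. Obtain $b = 0.5$.

| | Rms fit | Av. err. fit | Max err. fit |
|---|---|---|---|
| RMS error | 0.471 | 0.577 | 0.5 |
| Av. error | 0.444 | 0.333 | 0.5 |
| Max error | 0.667 | 1 | 0.5 |

Original 30-point curve fit
• With dense data, the difference due to the choice of metric is small.

[Figure: the 30-point data with the three fitted lines; legend: y, y_av, y_rms, y_max.]

| | Rms fit | Av. err. fit | Max err. fit |
|---|---|---|---|
| RMS error | 1.278 | 1.283 | 1.536 |
| Av. error | 0.958 | 0.951 | 1.234 |
| Max error | 3.007 | 2.987 | 2.934 |

Problems
1. Find other metrics for a fit besides the three discussed in this lecture.
2. Redo the 30-point example with the surrogate $y = bx$. Use the same data.
3. Redo the 30-point example using only every third point ($x = 3, 6, \dots$). You can consider the other 20 points as test points used to check the fit. Compare the difference between the fit and the data points to the difference between the fit and the test points. It is sufficient to do this for one fit metric.

Source: Smithsonian Institution Number: 2004-57325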
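The three-point example and its table of metrics can be checked numerically. The following is a minimal sketch under stated assumptions: `fit_metrics` and `best_constant` are hypothetical helper names, and the brute-force grid search over the constant $b$ is a simple stand-in chosen for this illustration, not the method used in the lecture.

```python
import numpy as np

def fit_metrics(y, y_hat):
    """Return the rms, average, and maximum absolute error of a fit."""
    e = np.abs(np.asarray(y, dtype=float) - np.asarray(y_hat, dtype=float))
    return {
        "rms": float(np.sqrt(np.mean(e ** 2))),
        "av": float(np.mean(e)),
        "max": float(np.max(e)),
    }

# Data from the example: y(0) = 0, y(1) = 1, y(2) = 0.
x = np.array([0.0, 1.0, 2.0])
y = np.array([0.0, 1.0, 0.0])

# Rms fit of y_hat = b0 + b1*x via the normal equations X^T X b = X^T y.
X = np.column_stack([np.ones_like(x), x])
b_rms = np.linalg.solve(X.T @ X, X.T @ y)

def best_constant(metric, grid=np.linspace(0.0, 1.0, 1001)):
    """Grid search (illustrative assumption) for the constant surrogate y_hat = b."""
    scores = [fit_metrics(y, np.full_like(y, b))[metric] for b in grid]
    return float(grid[int(np.argmin(scores))])

b_av = best_constant("av")    # minimizes the average error
b_max = best_constant("max")  # minimizes the maximal error

if __name__ == "__main__":
    print("rms fit coefficients:", b_rms)
    for name, b in [("rms fit", b_rms[0]), ("av. err. fit", b_av), ("max err. fit", b_max)]:
        print(name, b, fit_metrics(y, np.full_like(y, b)))
```

Solving the normal equations directly is fine for this 2-by-2 system; for larger or nearly collinear bases, the ill-conditioning warning from the Linear Regression slide suggests a least-squares solver such as `numpy.linalg.lstsq` instead.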