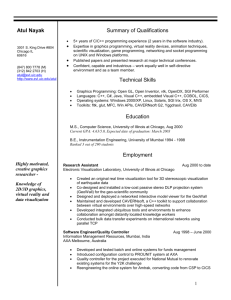

motion blur, lens flare, real time shadows, reflections

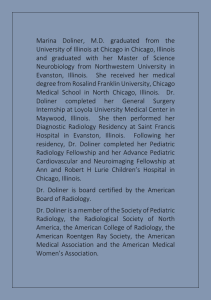


CS 426 Graphics Hardware Architecture & Miscellaneous Real Time Special Effects
© 2003-2008 Jason Leigh, Electronic Visualization Lab, University of Illinois at Chicago

• Modern graphics accelerators are called GPUs (Graphics Processing Units).
• There are 2 ways GPUs speed up graphics:
  – Pipelining: similar to pipelining in CPUs.
    • Each instruction may require multiple stages to process completely. Rather than waiting for all stages to complete before processing the next instruction, begin processing the next instruction as soon as the previous instruction has finished its first stage.
    • CPUs like the Pentium 4 have 20 pipeline stages.
    • GPUs typically have 600-800 stages, i.e. very few branches, and most of the functionality is fixed.
  – Parallelizing:
    • Process the data in parallel within the GPU. In essence, multiple pipelines run in parallel.
    • The basic model is SIMD (Single Instruction Multiple Data), i.e. the same graphics algorithms but lots of polygons to process.

Typical Parallel Graphics Architecture
[Diagram: Application → Geometry Units (G), the Geometry Stage, which transforms geometry → Rasterizer Units (R), the Rasterizer Stage, which turns geometry into pixels → Display]

Taxonomy of Parallel Graphics Architectures
• G = Geometry Engine (scale, rotate, translate, ...)
• FG = Fragment Generation (rasterizes the geometry)
• FM = Fragment Merge (merges fragments with the color and z-buffers)
[Diagram: Application → G, G, G → FG, FG, FG → FM, FM, FM → Display; labels: Geometry stage, Rasterizer stage]

Imagine this is my screen, with the polygons that will occupy it.

How Polygons Are Processed (Sort-Last Fragment)
• Equally divide up the polygons.
• Generate fragments for each group of polygons.
• Sort out where portions of the fragments need to go, then merge them to form the whole image.
[Diagram: G, G, G → FG, FG, FG → FM, FM, FM → Display]
• More practical than the Sort-Last Image method.
• Used in the XBOX.
• Geometry processing is balanced.
• Rendering is balanced.
• Merging involves compositing the color and z-buffers.

PCs & XBOX
• PCs are historically multi-purpose machines (e.g. they run word processors etc.).
• Word processors tend to use little data but have large code bases that need to be accessed. Hence large caches in the CPU help performance greatly.
• This isn’t really the way multimedia applications work.
• Multimedia apps tend to have small code bases but process lots of data repetitively.
• To accommodate this, PCs have had to build increasingly large memory caches, e.g. Nvidia’s latest graphics cards have 512 MB of RAM.
• Large caches are also needed to compensate for the PC's small bus bandwidth between connected components.
• The XBOX is a perfect example of American excess: 733 MHz CPU, 64 MB shared RAM, custom GeForce3.
• Contrast this with the PS2…

PS2
• Like most modern gaming architectures, it consists of multiple processors.
• Some are dedicated to I/O and sound.
• The PS2’s uniqueness lies in the EMOTION Engine.
• A lean, mean data processing machine.
[Diagram label: Rendering and Compositing (internals proprietary)]

PS2 Emotion Engine
• Designed by Toshiba.
• The philosophy is that multimedia apps have relatively small code but process large amounts of data, too large for caches to be useful.
• Enormous data paths between components.
• E.g. the DMA controller (DMAC) has 10 128-bit channels.
• 48 GB/s bandwidth between the graphics synthesizer and RAM! Compare to the XBOX: 6.4 GB/s.
• Very small caches (~16-32 KB) in the CPU, VU0, and VU1 compared to PC Pentium processors.
• Video RAM: 4 MB only.
• Main memory: 32 MB only.
• The processor is only 250 MHz, but it is 128-bit with 128-bit buses.
• Challenging for programmers who are not used to this paradigm.
[Diagram labels: Graphics Interface to Graphics Synthesizer; Image decompression (MPEG)]

PS3
• The Cell Processor consists of:
  – The Power Processor Element (PPE), which is the controller for the entire system.
  – Synergistic Processing Elements (SPEs), which perform game logic, physics, and dynamic vertex manipulations.
• The RSX functions as the main pixel painter, and also handles static geometry.

PS3 (cont)
• A PS3 engine will start off with the PPE spawning off tasks to the SPEs.
• Static geometry is put onto the RSX.
• The SPEs start cranking through tasks in parallel, usually set up to double buffer the data they operate on.
• An SPE will have its code uploaded, then it starts a DMA fetch for its initial data into one half of its local memory, and then it starts ping-ponging back and forth: work through one half of the local memory while the second half is being DMAed in, then swap. Ideally you have it set up so that you are effectively hiding almost all of your data loading latencies with the double buffer setup, and chaining SPEs together so that animation, deformation, physics, transformation, and lighting all go on in parallel.
• Data is then sent off to the RSX to be rasterized along with the resident static vertex data. So in effect the PS3's Cell + RSX combo is one giant unified rendering system.
• Depending on the nature of your game, your division of labor between the RSX and the Cell will be different. It is entirely possible to do all vertex work on the Cell, or none. The same goes for pixel painting.
• The PS3's rendering allows the unification of your physics, collision, dynamics, and geometry.
• On systems like desktop PCs or the Xbox 360 you have a division between your geometric data and your collision/physics data, which sit in GPU and CPU space respectively.

More Hardware Specifics: The Stencil Buffer
• A “floating” buffer that can be displayed independently of the main graphics buffer.
• So it needs to be rendered only once.
• Similar to the traditional concept of “sprites”.
• Used historically to add control panels or cockpit interiors to a game. Also used to draw the mouse (on the Amiga).
• In modern graphics it is often used as a cookie cutter for graphics.
• E.g. using stenciling to generate planar reflections.

Planar Reflections (Using the Stencil Buffer)
• E.g. a reflection in a mirror:
1. Draw the shape of the mirror into the stencil buffer (creating a hole).
2. Enable the stencil buffer and draw the reflected objects into the hole cut out by the stencil buffer. Note: reflected objects are rendered by taking the geometry and inverting it along the reflection axis.
3. Reverse the stencil buffer and then draw the main object.
4. Draw the mirror as a semitransparent object.
• There are, as always, a host of other techniques…
[Figure: four numbered panels, 1 to 4]

More Hardware Specifics: The Accumulation Buffer
• Essentially a deeper color buffer.
• A 48-bit buffer and a collection of operators like add, subtract, multiply.
• Can perform operations like:
  – acc_buffer = acc_buffer + color_buffer
• Used for a variety of graphics effects like motion blur.

Motion Blur (Using the Accumulation Buffer)
• Clear the accumulation buffer.
• For each frame:
  – Render the scene.
  – Multiply the accumulation buffer by a fraction (f) to fade old images.
    • E.g. let f = 0.2; acc_buffer = acc_buffer * 0.2
    • (A smaller f means a faster fade.)
  – Add in the scene multiplied by (1-f), i.e. the scene is prominent initially.
    • E.g. acc_buffer = acc_buffer + (scene * (1 - 0.2))
  – Show the new buffer.

More Common Way of Implementing Motion Blur
• Draw additional polygon “trails” extending from the edges of the object.
• The trail is opaque near the object and gradually becomes more transparent.
• Or draw multiple copies of the object with increasing levels of transparency.

Shadows (Using the Z-Buffer)
• Recall: the main use of the Z-buffer is to determine the visibility of polygons in a scene, to decide whether to draw them or not.
• It can also be used for creating real-time shadows using a technique called Shadow Mapping.

Shadows using Shadow Mapping
1. Render the image from the point of view of the light. Discard the image but save the Z-buffer data. (This is the shadow map.)
[Figure: the viewpoint from the light and the resulting Z-buffer (shadow map); light = far, dark = near]

Shadows using Shadow Mapping (cont)
2. Render the image from the point of view of the camera, BUT as you are about to render each pixel on the surface of a polygon, figure out the distance from that point on the surface to the light.
3. If the distance from that point to the light is greater than the shadow buffer value, then that point is in shadow, so draw that pixel dark.
• E.g.
  – db > shadow Z-buffer value at b (i.e. Sb), so b is in shadow.
  – da <= shadow Z-buffer value at a, so a is lit.
• This can be performed entirely in hardware using a number of Z-buffer tricks, not described here.
• The XBOX and SGI Infinite Reality are capable of this.
• Notice that objects cast shadows on each other.
• Also, the quality of the shadow depends on the resolution of the shadow map. If the resolution is low, the shadow will appear blocky.
[Figure: camera, light, and surface points a and b, with distances da and db and shadow map value Sb]

Texture Baking / Light Mapping
• Conceptually similar to texture mapping.
• Precompute the lighting on each surface of a polygon using raytracing / raycasting / radiosity, and store that info as a “texture map” file that can be applied in real time when drawing the polygon.
• Sometimes this is described as Texture Baking.
• Can be accomplished in Blender.
• Useful for scenes with static light sources.
• Produces very realistic scenes that are difficult to achieve in real time.

Lens Flare
• Occurs when the multiple lenses in a camera pass close to a light source. Each ring in a lens flare is an artifact of one of the lenses in your camera.
• In computer graphics there is no physical lens, so we have to fake it (as always).
• Classically, photographers and film makers avoided lens flare because it was considered bad technique.
• Its first use in film was accidental, and from then on it became a stylistic mechanism to suggest that a scene is very, very bright, like a desert scene or looking into the sun from outer space.

Implementing Lens Flare
• Created by pasting flare and halo images along a line between the 2D position of the light source and the center of the screen.
• Experiment with different positions along the line.
• Also experiment with different sizes of images.

Trees
• A 2D tree picture with a transparent (alpha) channel.
• Map the picture onto 2 crisscrossing polygons.

Rocket Engines
[Figure: alpha channel of the image, ranging from 100% opaque to 100% transparent]

Smoke Trails
• Create a smoke texture with an alpha channel where the center is opaque and the edges are transparent.
• Create a FIFO queue where a new smoke texture plane is created and placed at the front of the queue.
• At the other end of the queue, smoke texture objects are deleted.
• As each new texture plane is created, randomly size and rotate it slightly. Position it at the previous location of the rocket.
• Apply billboarding to each texture plane.
[Figure label: Camera/Viewer]

Toon Shading
• Toon Shading belongs to a class of shading techniques called Non-Photorealistic Rendering (NPR).
• Used to create images of 3D objects that appear cartoon-like rather than realistic.
• Many techniques are possible, but there are fundamentally 2 main steps:
  – 1. Developing a way to create the shading effect on the surface of the polygons.
  – 2. Developing a way to create the silhouette (or outline) around the object being drawn.
• 1. Creating the shading effect
  – Modify the Gouraud shading algorithm so that, rather than gradually interpolating between the intensity values at the vertices, you declare a threshold: the interpolated pixel shows either the full diffuse color of the object or only the ambient material color.
• 2. Creating the silhouette
  – Render a slightly larger copy of the object in black and with the normals reversed.
  – Because the larger copy is superimposed over the original object and rendered with its normals reversed, only its rear polygons are drawn. This creates the illusion of a silhouette around the original object.

Toon Shading (cont)
[Figure: donut renderings. Gouraud shaded. Gouraud shading modified so that, instead of interpolating smoothly, each pixel is either light or ambient (dark). Silhouette created with a larger version of the donut with normals inverted and material values set to black.]

Vertex Shaders and Pixel Shaders
• Emerged in GPUs roughly after 1999.
• Vertex Shader
  – Vertices have more than just X, Y, Z info.
  – They can include color, alpha, specularity, and texture info.
  – A vertex shader can be programmed to transform a vertex to create a visual effect.
  – E.g. interpolation between keyframe animations, creating rippling flags, morphing, fake motion blurring.
  – Operates after the geometry pipeline and before the rasterization pipeline.
  – The main advantage is that all this is done in the GPU, not the CPU, and so it can be about 100x faster.

Pixel Shader
• A pixel shader allows programmers to control how pixels are rendered by changing the nature of the rendering algorithm.
• E.g. modify the Gouraud shading model to do toon shading; also implement bump mapping or normal mapping in real time.
• The main advantage is that it allows you to do these advanced effects in REAL TIME. Bump mapping used to be possible only through lengthy, CPU-intensive raycasting/raytracing.
• Vertex/pixel shading is supported by DirectX 8+. Blitz3D is written with DirectX 7, so shaders are not available there.
• DarkBASIC supports it.
• Languages / extensions for shader programming:
  – Cg Toolkit (by Nvidia), DirectX 8+, OpenGL Shading Language
• To learn more, take Andy Johnson’s GPU class.

References
• Real-Time Rendering, Tomas Akenine-Möller and Eric Haines (A K Peters)
• GameDev.net
• Game Developers Conference: Shadow Mapping with Today’s OpenGL Hardware, Mark J. Kilgard, NVIDIA Corp.
• Arstechnica.com
• www.nvidia.com/object/feature_vertexshader.html
• www.nvidia.com/object/feature_pixelshader.html

Game Cube
• IBM PowerPC RISC CPU, “Gekko”
  – 485 MHz
  – 256 KB CPU cache
  – 64-bit bus
• Data compression to increase throughput when moving graphics over its bus.
• ATI graphics processor, “Flipper”
  – 24 MB RAM (16 MB frame buffer; 8 MB texture)
  – 12.8 GB/s bandwidth to texture memory.
• I.e. a pretty “standard” PC + graphics style design.

Taxonomy of Parallel Graphics Architectures
• Sort-First (used a lot in cluster computing)
• Sort-Middle (Silicon Graphics Infinite Reality, used for the CAVE)
• Sort-Last Fragment Generation (Xbox)
• Sort-Last Image (HP’s Sepia)
[Diagram: Application → G, G, G → FG, FG, FG → FM, FM, FM → Display; labels: Geometry stage, Rasterizer stage]

Sort-First
• Divide the screen up into N equal parts (e.g. 3).
• Assign the polygons / objects that belong to each part to its respective Geometry unit. (This is what is meant by SORTING.)
• The Geometry unit transforms (scale, rotate, translate, clip) polygons.
• FG rasterizes polygons to pixels.
• FM merges them (by simple tiling).
• Not used much in game systems.
• Used mainly in clusters of PCs for tiled displays.
• Excessive polygon replication may occur during the sort.
• Load can become very imbalanced if there are no polygons occupying a portion of the screen or display.
[Diagram: G, G, G → FG, FG, FG → FM, FM, FM → Display]

Sort-Middle
• Equally divide up the polygons.
• The Geometry unit transforms polygons (rotate, scale, etc.).
• Send the transformed polygons to the FG that is responsible for each portion of the screen.
• Used in the SGI Infinite Reality (which drives the CAVE).
• Geometry processing is balanced.
• But you could end up with unbalanced fragment generators.
[Diagram: G, G, G → FG, FG, FG → FM, FM, FM → Display]

Sort-Last Image
• No screen partitioning here.
• Generate fragments for each group of polygons.
• Each FG renders to a buffer that is the full size of the screen.
• Merge the color and z-buffers.
• Geometry processing is balanced.
• Rendering is balanced.
• Large bus bandwidth is needed to do the compositing at the end.
• Typically used in PC clusters driving a tiled display with specialized compositing hardware.
[Diagram: G, G, G → FG, FG, FG → FM, FM, FM → Display]
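
The merge step of Sort-Last Image can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any particular compositing hardware works: the function name `composite_sort_last`, the list-of-lists buffers, and the tiny 2x2 example layers are all made up for the example. Each FG is assumed to hand over a full-screen color buffer and z-buffer, and the merge keeps, for every pixel, the color whose depth is smallest (nearest).

```python
FAR = float("inf")  # depth of a pixel that no polygon covered


def composite_sort_last(layers):
    """Merge full-screen (color, depth) buffers, keeping the nearest fragment per pixel.

    layers: list of (color_buffer, depth_buffer) pairs, one pair per FG unit.
    All buffers are lists of rows and share the same screen size.
    """
    first_color, first_depth = layers[0]
    # Start from a copy of the first layer so the inputs are not modified.
    color = [row[:] for row in first_color]
    depth = [row[:] for row in first_depth]
    for layer_color, layer_depth in layers[1:]:
        for y, depth_row in enumerate(layer_depth):
            for x, z in enumerate(depth_row):
                # Standard z-buffer test: a smaller depth is closer to the viewer.
                if z < depth[y][x]:
                    depth[y][x] = z
                    color[y][x] = layer_color[y][x]
    return color, depth


# Two hypothetical partial renders of a 2x2 screen, one per FG unit.
# "." marks background; letters stand in for real pixel colors.
red_layer = ([["R", "R"], [".", "."]], [[1.0, 5.0], [FAR, FAR]])
blue_layer = ([[".", "B"], ["B", "."]], [[FAR, 2.0], [3.0, FAR]])

image, zbuf = composite_sort_last([red_layer, blue_layer])
for row in image:
    print(" ".join(row))
```

Note that every layer is the full size of the screen, so the amount of data moved into the merge grows with the number of FG units. That is the large bus bandwidth requirement mentioned in the Sort-Last Image bullets.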