OpenMP

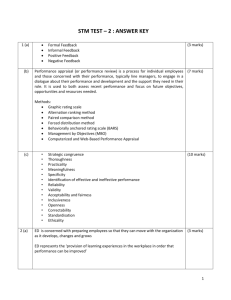


Mark Reed

UNC Research Computing markreed@unc.edu

References

See online tutorial at www.openmp.org

OpenMP Tutorial from SC98

• Bob Kuhn, Kuck & Associates, Inc.

• Tim Mattson, Intel Corp.

• Ramesh Menon, SGI

SGI course materials

“Using OpenMP” book

• Chapman, Jost, and van der Pas


Logistics

Course Format

Lab Exercises

Breaks

Getting started on Emerald

• http://help.unc.edu/?id=6020

UNC Research Computing

• http://its.unc.edu/research-computing.html


OpenMP Introduction


Course Objectives

Introduction and comprehensive coverage of the OpenMP standard

After completion of this course users should be equipped to implement OpenMP constructs in their applications and realize performance improvements on shared memory machines


Course Overview

Introduction

• Objectives, History, Overview, Motivation

Getting Our Feet Wet

• Memory Architectures, Models (programming, execution, memory, …), compiling and running

Diving In

• Control constructs, worksharing, data scoping, synchronization, runtime control


Course Overview Cont.

Swimming Like a Champion

• more on parallel clauses, all work sharing constructs, all synchronization constructs, orphaned directives, examples and tips


On to the Olympics

• Performance! Coarse/Fine grain parallelism, data decomposition and SPMD, false sharing and cache considerations, summary and suggestions

Motivation

No portable standard for shared memory parallelism existed

• Each SMP vendor has proprietary API

• X3H5 and PCF efforts failed

Portability only through MPI

Parallel application availability

• ISVs have big investment in existing code

• New parallel languages not widely adopted


In a Nutshell

Portable, Shared Memory Multiprocessing API

• Multi-vendor support

• Multi-OS support (Unixes, Windows, Mac)

Standardizes fine grained (loop) parallelism

Also supports coarse grained algorithms

The MP in OpenMP is for multi-processing

Don’t confuse OpenMP with Open MPI! :)


Version History

First

• Fortran 1.0 was released in October 1997

• C/C++ 1.0 was approved in November 1998

Recent

• version 2.5 joint specification in May 2005

• this may be the version most available

Current

• OpenMP 3.0 API released May 2008

• check compiler for level of support


Who’s Involved

OpenMP Architecture Review Board (ARB)

Permanent Members of ARB:

• AMD, Cray, Fujitsu, HP, IBM, Intel, Microsoft, NEC, The Portland Group, SGI, Sun

Auxiliary Members of ARB:

• ASC/LLNL, cOMPunity, EPCC, NASA, RWTH Aachen University

Why choose OpenMP ?

Portable

• standardized for shared memory architectures

Simple and Quick

• incremental parallelization

• supports both fine grained and coarse grained parallelism

• scalable algorithms without message passing

Compact API

• simple and limited set of directives


Getting our feet wet


Memory Types

[Figure: two memory layouts. Distributed: each CPU is paired with its own memory. Shared: several CPUs are attached to a single memory.]

Clustered SMPs

[Figure: multi-socket and/or multi-core nodes, each with its own memory, connected by a cluster interconnect network.]

Distributed vs. Shared Memory

Shared - all processors share a global pool of memory

• simpler to program

• bus contention leads to poor scalability

Distributed - each processor physically has its own (private) memory associated with it

• scales well

• memory management is more difficult


Models

No, not these! … Nor these either!

We want programming models, execution models, communication models and memory models!


OpenMP - User Interface Model

Shared Memory with thread based parallelism

Not a new language

Compiler directives, library calls and environment variables extend the base language

• f77, f90, f95, C, C++

Not automatic parallelization

• user explicitly specifies parallel execution

• compiler does not ignore user directives even if wrong


What is a thread?

A thread is an independent instruction stream, thus allowing concurrent operation

Threads tend to share state and memory, and may have some (usually small) private data

Threads are similar to, but distinct from, processes.

Threads are usually lighter weight, allowing faster context switching

In OpenMP one usually wants no more than one thread per core

Execution Model

OpenMP program starts single threaded

To create additional threads, user starts a parallel region

• additional threads are launched to create a team

• original (master) thread is part of the team

• threads “go away” at the end of the parallel region: usually sleep or spin

Repeat parallel regions as necessary

• Fork-join model


Communicating Between Threads

Shared Memory Model

• threads read and write shared variables

• no need for explicit message passing

• use synchronization to protect against race conditions

• change storage attributes for minimizing synchronization and improving cache reuse


Storage Model – Data Scoping

Shared memory programming model: variables are shared by default

Global variables are SHARED among threads

• Fortran: COMMON blocks, SAVE variables, MODULE variables

• C: file scope variables, static

Private Variables:

• exist only within the new scope, i.e. they are uninitialized and undefined outside the data scope

• loop index variables

• Stack variables in sub-programs called from parallel regions
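The scoping rules above can be sketched in C. This is a minimal illustration, not part of the original course material: the names `seen`, `MAX_SLOTS` and `mark_threads` are made up for the example, and the serial fallback for `omp_get_thread_num` is only there so the code also builds when OpenMP is switched off.

```c
#ifdef _OPENMP
#include <omp.h>
#else
/* Serial fallback so the sketch also builds without an OpenMP flag. */
static int omp_get_thread_num(void) { return 0; }
#endif

#define MAX_SLOTS 64            /* hypothetical bound chosen for this sketch */

int seen[MAX_SLOTS];            /* file scope: SHARED by every thread */

/* Each thread marks its own slot; returns how many slots were marked. */
int mark_threads(void)
{
    int count = 0;

    #pragma omp parallel
    {
        int id = omp_get_thread_num();  /* declared in the region: PRIVATE */
        if (id < MAX_SLOTS)
            seen[id] = 1;               /* distinct elements, so no race */
    }

    for (int i = 0; i < MAX_SLOTS; i++) /* serial again after the join */
        count += seen[i];
    return count;
}
```

Calling `mark_threads()` from `main` should return the number of threads that took part (capped at `MAX_SLOTS`), which depends on `OMP_NUM_THREADS`.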


Creating Parallel Regions

Only one way to create threads in OpenMP API:

Fortran:

    !$OMP parallel
    < code to be executed in parallel >
    !$OMP end parallel

C:

    #pragma omp parallel
    {
      code to be executed by each thread
    }
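As a rough C illustration of the fork-join behaviour, the sketch below opens one parallel region, lets every thread print a line, and has thread 0 record the team size. The function name `hello_region` is hypothetical, and the fallbacks exist only so the code compiles without an OpenMP flag.

```c
#include <stdio.h>
#ifdef _OPENMP
#include <omp.h>
#else
/* Serial fallbacks so the sketch also builds without an OpenMP flag. */
static int omp_get_thread_num(void) { return 0; }
static int omp_get_num_threads(void) { return 1; }
#endif

/* Runs one parallel region and returns the size of the team that ran it. */
int hello_region(void)
{
    int team_size = 1;

    #pragma omp parallel
    {
        /* Every thread in the team executes this block once. */
        printf("hello from thread %d\n", omp_get_thread_num());

        /* Only the master thread records the team size. */
        if (omp_get_thread_num() == 0)
            team_size = omp_get_num_threads();
    }   /* implicit barrier: the team joins here */

    return team_size;
}
```

The order of the printed lines is not deterministic; only the count of lines is fixed by the team size.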


Comparison of Programming Models

| Feature | OpenMP | MPI |
| --- | --- | --- |
| Portable | yes | yes |
| Scalable | less so | yes |
| Incremental Parallelization | yes | no |
| Fortran/C/C++ Bindings | yes | yes |
| High Level | yes | mid level |

Compiling


Intel (icc, ifort, icpc)

• -openmp

PGI (pgcc, pgf90, …)

• -mp

GNU (gcc, gfortran, g++)

• -fopenmp

• need version 4.2 or later

• g95 was based on GCC but branched off; it does not appear to have OpenMP support

Compilers

No specific Fortran 90 or C++ features are required by the OpenMP specification

Most compilers support OpenMP; see the compiler documentation for the appropriate flag for other compilers, e.g. IBM, SGI, …

Compiler Directives

C Pragmas

C pragmas are case sensitive

Use curly braces, {}, to enclose parallel regions

Long directive lines can be "continued" by escaping the newline character with a backslash ("\") at the end of a directive line.
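A small sketch of the backslash continuation, assuming a hypothetical helper `scale` that multiplies an array in place. The clause list is split over two lines only to show the syntax.

```c
/* Scales x in place; the directive is continued with a trailing backslash. */
void scale(double *x, int n, double factor)
{
    #pragma omp parallel for default(none) \
        shared(x, n, factor)
    for (int i = 0; i < n; i++)
        x[i] *= factor;
}
```

The backslash must be the last character on the line, exactly as in a multi-line C macro.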

Fortran

!$OMP, c$OMP, *$OMP – fixed format

!$OMP – free format

Comments may not appear on the same line

Continue a directive with &, e.g. !$OMP&

Specifying threads

The simplest way to specify the number of threads used in a parallel region is to set the environment variable (in the shell where the program is executing)

• OMP_NUM_THREADS

For example, in csh/tcsh

• setenv OMP_NUM_THREADS 4

in bash

• export OMP_NUM_THREADS=4

Later we will cover other ways to specify this


OpenMP – Diving In


OpenMP Language Features

Compiler Directives – 3 categories

• Control Constructs

parallel regions, distribute work

• Data Scoping for Control Constructs

control shared and private attributes

• Synchronization

barriers, atomic, …

Runtime Control

• Environment Variables

OMP_NUM_THREADS

• Library Calls

OMP_SET_NUM_THREADS(…)


Parallel Construct

Fortran

• !$OMP parallel [clause[[,] clause]… ]

• !$OMP end parallel

C/C++

• #pragma omp parallel [clause[[,] clause]… ]

• {structured block}


Supported Clauses for the Parallel Construct

Valid Clauses:

• if (expression)

• num_threads (integer expression)

• private (list)

• firstprivate (list)

• shared (list)

• default (none|shared|private), where private is Fortran only

• copyin (list)

• reduction (operator: list)

More on these later …


Loop Worksharing directive

Loop directives:

• Fortran do: !$OMP DO [clause[[,] clause]… ]

• Fortran optional end: [!$OMP END DO [NOWAIT]]

• C/C++ for: #pragma omp for [clause[[,] clause]… ]

Clauses:

• PRIVATE(list)

• FIRSTPRIVATE(list)

• LASTPRIVATE(list)

• REDUCTION({op|intrinsic}:list)

• ORDERED

• SCHEDULE(TYPE[, chunk_size])

• NOWAIT

All Worksharing Directives

Divide work in enclosed region among threads

Rules:

• must be enclosed in a parallel region

• does not launch new threads

• no implied barrier on entry

• implied barrier upon exit

• must be encountered by all threads on team or none


Loop Constructs

Note that many of the clauses are the same as the clauses for the parallel region. Others are not: shared, for example, must be specified on the enclosing parallel construct rather than on the loop construct.

Because the use of parallel followed by a loop construct is so common, this shorthand notation is often used (note: directive should be followed immediately by the loop)

• !$OMP parallel do …

• !$OMP end parallel do

• #pragma omp parallel for …
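The two spellings can be compared side by side in C. This is an illustrative sketch with made-up function names (`add_separate`, `add_combined`); both functions are expected to produce the same result.

```c
/* Form 1: a parallel region containing a separate loop construct. */
void add_separate(const double *a, const double *b, double *c, int n)
{
    #pragma omp parallel
    {
        #pragma omp for
        for (int i = 0; i < n; i++)
            c[i] = a[i] + b[i];
    }
}

/* Form 2: the combined shorthand; the loop must follow immediately. */
void add_combined(const double *a, const double *b, double *c, int n)
{
    #pragma omp parallel for
    for (int i = 0; i < n; i++)
        c[i] = a[i] + b[i];
}
```

The separate form pays off when several worksharing loops can live inside one parallel region; for a single loop the shorthand is usually easier to read.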


Private Clause

Private, uninitialized copy is created for each thread

Private copy is not storage associated with the original

    program wrong
    I = 10
    !$OMP parallel private(I)
    I = I + 1            ! Uninitialized!
    !$OMP end parallel
    print *, I           ! Undefined!

Firstprivate Clause

Firstprivate, initializes each private copy with the original

    program correct
    I = 10
    !$OMP parallel firstprivate(I)
    I = I + 1            ! Initialized!
    !$OMP end parallel


LASTPRIVATE clause

Useful when loop index is live out

• Recall that if you use PRIVATE the loop index becomes undefined

In the sequential case i=N after the loop:

    do i=1,N-1
      a(i)= b(i+1)
    enddo
    a(i) = b(0)

With LASTPRIVATE:

    !$OMP PARALLEL
    !$OMP DO LASTPRIVATE(i)
    do i=1,N-1
      a(i)= b(i+1)
    enddo
    a(i) = b(0)
    !$OMP END PARALLEL
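The same idea in C, as a hedged sketch: instead of making the loop index itself live out, this version records the index in a separate `last` variable (a simplification chosen for the example) and marks that variable lastprivate. The function name `shift_left` is hypothetical and the code assumes `n >= 2`.

```c
/* Rotates b left by one position into a; both arrays hold n >= 2 elements. */
void shift_left(double *a, const double *b, int n)
{
    int last = -1;

    #pragma omp parallel for lastprivate(last)
    for (int i = 0; i < n - 1; i++) {
        a[i] = b[i + 1];
        last = i;               /* private inside the loop */
    }

    /* lastprivate copies back the value from the sequentially last
     * iteration (i == n - 2), so last + 1 is the final element. */
    a[last + 1] = b[0];
}
```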


NOWAIT clause

By default loop index is PRIVATE

With the implied barrier:

    !$OMP PARALLEL
    !$OMP DO
    do i=1,n
      a(i)= cos(a(i))
    enddo
    !$OMP END DO
    ! Implied BARRIER
    !$OMP DO
    do i=1,n
      b(i)=a(i)+b(i)
    enddo
    !$OMP END DO
    !$OMP END PARALLEL

With NOWAIT:

    !$OMP PARALLEL
    !$OMP DO
    do i=1,n
      a(i)= cos(a(i))
    enddo
    !$OMP END DO NOWAIT
    ! No BARRIER
    !$OMP DO
    do i=1,n
      b(i)=a(i)+b(i)
    enddo
    !$OMP END DO
    !$OMP END PARALLEL
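In C the clause goes on the `for` directive itself. The sketch below deliberately uses two loops that touch different arrays, so dropping the barrier is safe regardless of the schedule; when the second loop reads what the first wrote, nowait is only safe if both loops are guaranteed to hand the same iterations to the same thread. The function name `update_both` is made up for the example.

```c
/* Squares a[] and increments b[]; the loops are independent of each other. */
void update_both(double *a, double *b, int n)
{
    #pragma omp parallel
    {
        #pragma omp for nowait      /* no barrier: threads move straight on */
        for (int i = 0; i < n; i++)
            a[i] = a[i] * a[i];

        #pragma omp for             /* implied barrier at the end */
        for (int i = 0; i < n; i++)
            b[i] = b[i] + 1.0;
    }
}
```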


Reductions

Sum reduction, assuming no reduction clause:

    do i=1,N
      X = X + a(i)
    enddo

Wrong!

    !$OMP PARALLEL DO SHARED(X)
    do i=1,N
      X = X + a(i)
    enddo
    !$OMP END PARALLEL DO

What’s wrong?

    !$OMP PARALLEL DO SHARED(X)
    do i=1,N
    !$OMP CRITICAL
      X = X + a(i)
    !$OMP END CRITICAL
    enddo
    !$OMP END PARALLEL DO


REDUCTION clause

Parallel reduction operators

• Most operators and intrinsics are supported

• +, *, -, .AND. , .OR., MAX, MIN

Only scalar variables allowed

Serial loop:

    do i=1,N
      X = X + a(i)
    enddo

With the REDUCTION clause:

    !$OMP PARALLEL DO REDUCTION(+:X)
    do i=1,N
      X = X + a(i)
    enddo
    !$OMP END PARALLEL DO
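The C form of the same sum reduction is sketched below; `sum_reduction` is a hypothetical name. Each thread accumulates into its own private copy of `x`, and the copies are combined when the loop ends.

```c
/* Returns the sum of a[0..n-1] using a parallel reduction. */
double sum_reduction(const double *a, int n)
{
    double x = 0.0;

    #pragma omp parallel for reduction(+:x)
    for (int i = 0; i < n; i++)
        x += a[i];              /* updates the thread's private copy */

    return x;                   /* private copies have been combined */
}
```

Because the order of the additions can change with the thread count, floating-point results may differ in the last bits from a serial run.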


Ordered clause

Executes in the same order as sequential code

Parallelizes cases where ordering needed

    do i=1,N
      call find(i,norm)
      print*, i,norm
    enddo

Output:

    1 0.45
    2 0.86
    3 0.65

    !$OMP PARALLEL DO ORDERED PRIVATE(norm)
    do i=1,N
      call find(i,norm)
    !$OMP ORDERED
      print*, i,norm
    !$OMP END ORDERED
    enddo
    !$OMP END PARALLEL DO
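A C sketch of the ordered pattern follows. `find_norm` is a hypothetical stand-in for the slide's `find` routine, and instead of printing, the ordered block appends to an output array so the ordering can be checked.

```c
/* Hypothetical stand-in for the slide's find(): some per-item computation. */
static double find_norm(int i) { return 1.0 / (i + 1); }

/* Computes norms concurrently but stores them in loop order.
 * Returns the number of values written to out[]. */
int ordered_norms(double *out, int n)
{
    int next = 0;

    #pragma omp parallel for ordered
    for (int i = 0; i < n; i++) {
        double norm = find_norm(i);     /* may run concurrently */

        #pragma omp ordered
        {
            out[next++] = norm;         /* runs in i = 0, 1, 2, ... order */
        }
    }
    return next;
}
```

Only the work outside the ordered block overlaps, so the pattern pays off when that work dominates.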


Schedule clause

The schedule clause controls how the iterations of the loop are assigned to threads

There is always a trade off between load balance and overhead

Always start with static and go to more complex schemes as load balance requires


The 4 choices for schedule clauses

static : Each thread is given a “chunk” of iterations in a round robin order

• Least overhead - determined statically

dynamic : Each thread is given “chunk” iterations at a time; more chunks distributed as threads finish

• Good for load balancing

guided : Similar to dynamic, but chunk size is reduced exponentially

runtime : User chooses at runtime using an environment variable (note: no space before the chunk value)

• setenv OMP_SCHEDULE "dynamic,4"
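As an illustration of where a non-static schedule can help, the sketch below uses a made-up workload in which iteration `i` costs roughly `i` units of work, and hands out iterations four at a time with `schedule(dynamic, 4)`. The names `work` and `total_work` and the chunk size are assumptions of the example, not recommendations.

```c
/* Hypothetical uneven workload: iteration i costs roughly i units. */
static long work(int i)
{
    long s = 0;
    for (int k = 0; k <= i; k++)
        s += k;
    return s;
}

/* Sums work(i) for i in [0, n), handing out chunks of 4 iterations. */
long total_work(int n)
{
    long total = 0;

    #pragma omp parallel for schedule(dynamic, 4) reduction(+:total)
    for (int i = 0; i < n; i++)
        total += work(i);

    return total;
}
```

Replacing the clause with `schedule(runtime)` would let `OMP_SCHEDULE` pick the policy without recompiling.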


Performance Impact of Schedule

Static vs. Dynamic across multiple do loops

    !$OMP DO SCHEDULE(STATIC)
    do i=1,4

    !$OMP DO SCHEDULE(STATIC)
    do i=1,4

• In static, iterations of the do loop executed by the same thread in both loops

• If data is small enough, may be still in cache, good performance

Effect of chunk size

• Chunk size of 1 may result in multiple threads writing to the same cache line

• Cache thrashing, bad performance

[Figure: array elements a(1,1), a(1,2), a(2,1), a(2,2), a(3,1), a(3,2), a(4,1), a(4,2)]

Synchronization

• Barrier Synchronization

• Atomic Update

• Critical Section

• Master Section

• Ordered Region

• Flush

Barrier Synchronization

Syntax:

• !$OMP barrier

• #pragma omp barrier

Threads wait until all threads reach this point

implicit barrier at the end of each parallel region


Atomic Update

Specifies that a memory location must be updated atomically, i.e. by 1 thread at a time

Optimization of mutual exclusion for certain cases (i.e. a single statement CRITICAL section)

• applies only to the statement immediately following the directive

• enables fast implementation on some HW

Directive:

• !$OMP atomic

• #pragma omp atomic
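A typical use is a shared histogram, sketched here in C. The function name and the assumption that every value lies in `[0, nbins)` are part of the example only.

```c
/* Counts occurrences of each value; values are assumed to be valid
 * bin indices and bins[] is assumed to be zeroed by the caller. */
void histogram(const int *values, int n, int *bins)
{
    #pragma omp parallel for
    for (int i = 0; i < n; i++) {
        #pragma omp atomic
        bins[values[i]]++;      /* one statement, updated atomically */
    }
}
```

Updates to different bins do not block each other, which is the main advantage over an unnamed critical section.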


Environment Variables

These are set outside the program and control execution of the parallel code

Prior to OpenMP 3.0 there were only 4

• all are uppercase

• values are case insensitive

OpenMP 3.0 adds four new ones

Specific compilers may have extensions that add other values

• e.g. KMP* for Intel and GOMP* for GNU


Environment Variables

OMP_NUM_THREADS – set maximum number of threads

• integer value

OMP_SCHEDULE – determines how iterations are scheduled when a schedule clause is set to “runtime”

• “type[, chunk]”

OMP_DYNAMIC – dynamic adjustment of threads for parallel regions

• true or false

OMP_NESTED – nested parallelism

• true or false


Run-time Library Routines

There are 17 different library routines; we will cover just 3 of them now

omp_get_thread_num()

• Returns the thread number (within the team) of the calling thread. Numbering starts with 0.

• Fortran: integer function omp_get_thread_num()

• C/C++: int omp_get_thread_num(), declared in <omp.h>

Run-time Library: Timing

There are 2 portable timing routines

omp_get_wtime

• portable wall clock timer returns a double precision value that is number of elapsed seconds from some point in the past

• gives time per thread - possibly not globally consistent

• take the difference of two readings to get the elapsed time in code

omp_get_wtick

• time between ticks in seconds


Run-time Library: Timing

Fortran:

    double precision function omp_get_wtime()
    double precision function omp_get_wtick()

C/C++:

    #include <omp.h>
    double omp_get_wtime()
    double omp_get_wtick()
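Timing a region usually means reading `omp_get_wtime` before and after it. The sketch below does that around a small parallel loop; `timed_double` is a hypothetical name, and the `clock`-based fallback is an assumption added so the example still builds without OpenMP (it measures CPU time rather than wall time).

```c
#ifdef _OPENMP
#include <omp.h>
#else
#include <time.h>
/* Fallback timer (an assumption of this sketch) for builds without OpenMP. */
static double omp_get_wtime(void) { return (double)clock() / CLOCKS_PER_SEC; }
#endif

/* Doubles every element of x and returns the elapsed seconds. */
double timed_double(double *x, int n)
{
    double t0 = omp_get_wtime();

    #pragma omp parallel for
    for (int i = 0; i < n; i++)
        x[i] *= 2.0;

    return omp_get_wtime() - t0;    /* difference of two readings */
}
```

Both readings are taken by the same thread outside the region, which sidesteps the per-thread consistency caveat.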


Swimming Like a Champion

[Image: Michael Phelps]

Supported Clauses for the Parallel Construct

Valid Clauses:

• if (expression)

• num_threads (integer expression)

• private (list)

• firstprivate (list)

• shared (list)

• default (none|shared|private), where private is Fortran only

• copyin (list)

• reduction (operator: list)

More on these later …


Shared Clause

Declares variables in the list to be shared among all threads in the team.

Variable exists in only 1 memory location and all threads can read or write to that address.

It is the user’s responsibility to ensure that this is accessed correctly, e.g. avoid race conditions

Most variables are shared by default (a notable exception, loop indices)


Changing the default

List the variables in one of the following clauses

• SHARED

• PRIVATE

• FIRSTPRIVATE, LASTPRIVATE

• DEFAULT

• THREADPRIVATE, COPYIN


Default Clause

Note that the default storage attribute is DEFAULT(SHARED)

To change default: DEFAULT(PRIVATE)

• each variable in static extent of the parallel region is made private as if specified by a private clause

• mostly saves typing

DEFAULT(none): no default for variables in static extent. Must list storage attribute for each variable in static extent. USE THIS!

If Clause

if (logical expression)

executes (in parallel) normally if the expression is true, otherwise it executes the parallel region serially

Used to test if there is sufficient work to justify the overhead in creating and terminating a parallel region
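A sketch of the if clause in C: the loop below only goes parallel when the vector is longer than a cutoff. `PAR_THRESHOLD` is a hypothetical value chosen for illustration; a real cutoff would come from measurement.

```c
#define PAR_THRESHOLD 10000     /* hypothetical cutoff; tune by measurement */

/* y = y + alpha * x, run in parallel only when n is large enough. */
void axpy(double alpha, const double *x, double *y, int n)
{
    #pragma omp parallel for if(n > PAR_THRESHOLD)
    for (int i = 0; i < n; i++)
        y[i] += alpha * x[i];
}
```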


Num_threads clause

num_threads(integer expression)

executes the parallel region on the number of threads specified

applies only to the specified parallel region


How many threads?

Order of precedence:

• if clause

• num_threads clause

• omp_set_num_threads function call

• OMP_NUM_THREADS environment variable

• implementation default (usually the number of cores on a node)

Work Sharing Constructs - The Complete List

Loop directives (DO/for)

sections

single

workshare


SECTIONS directive

Enclosed sections are divided among threads in the team

Each section is executed by exactly one thread. A thread may execute more than one section

Fortran:

    !$OMP SECTIONS [clause … ]
    !$OMP SECTION
    …
    !$OMP SECTION
    …
    !$OMP END SECTIONS [nowait]

C/C++:

    #pragma omp sections [clause …]
    {
    #pragma omp section
      structured_block
    #pragma omp section
      structured_block
    }

SECTIONS directive

If there are N sections then there can be at most N threads executing in parallel

Any thread can execute a particular section.

This may vary from one execution to the next (non-deterministic)

The shorthand for combining sections with the parallel directive is:

• !$OMP parallel sections …

• #pragma omp parallel sections
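The combined form can be sketched in C as two independent pieces of work, each of which may be picked up by a different thread. The function name `fill_both` is made up for the example.

```c
/* Fills a[] and b[] independently; each section runs on exactly one thread. */
void fill_both(int *a, int *b, int n)
{
    #pragma omp parallel sections
    {
        #pragma omp section
        {
            for (int i = 0; i < n; i++)
                a[i] = i;
        }

        #pragma omp section
        {
            for (int i = 0; i < n; i++)
                b[i] = 2 * i;
        }
    }
}
```

With only two sections, at most two threads do useful work here, whatever the team size.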


SINGLE directive

SINGLE specifies that the enclosed code is executed by only 1 thread

This may be useful for sections of code that are not thread safe, e.g. some I/O

It is illegal to branch into or out of a single block

!$OMP single [clause …]

!$OMP end single [nowait]

#pragma omp single [clause …]

WORKSHARE directive

Fortran only. It exists to allow work sharing for code using f90 array syntax, forall statements, and where statements

!$OMP workshare [clause …]

!$OMP end workshare [nowait]

Can be combined with the parallel construct

• !$OMP parallel workshare …

Clauses by Directives Table

[Table not reproduced; see https://computing.llnl.gov/tutorials/openMP]

Synchronization

• Barrier Synchronization

• Atomic Update

• Critical Section

• Master Section

• Ordered Region

• Flush

Mutual Exclusion - Critical Sections

Critical Section

• only 1 thread executes at a time, others block

• can be named (names are global entities and must not conflict with subroutine or common block names)

• It is good practice to name them

• all unnamed sections are treated as the same region

Directives:

• !$OMP CRITICAL [(name)]

• !$OMP END CRITICAL [(name)]

• #pragma omp critical [(name)]
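A named critical section in C might be used as in the sketch below, which finds a maximum: each thread keeps a private running maximum and only enters the critical section once, to merge it. The function name and the section name `update_best` are hypothetical, and the array is assumed to hold at least one element.

```c
/* Returns the largest element of a[0..n-1]; assumes n >= 1. */
double max_critical(const double *a, int n)
{
    double best = a[0];

    #pragma omp parallel
    {
        double local = a[0];            /* private running maximum */

        #pragma omp for nowait
        for (int i = 1; i < n; i++)
            if (a[i] > local)
                local = a[i];

        #pragma omp critical(update_best)   /* one thread at a time */
        {
            if (local > best)
                best = local;
        }
    }
    return best;
}
```

Putting the critical section outside the loop keeps contention to one entry per thread rather than one per iteration.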


Master Section

Only the master (0) thread executes the section

Rest of the team skips the section and continues execution from the end of the master

No barrier at the end (or start) of the master section

The worksharing construct, OMP single is similar in behavior but has an implied barrier at the end.

Single is performed by any one thread.

Syntax:

• !$OMP master

• !$OMP end master

• #pragma omp master


Ordered Section

Enclosed code is executed in the same order as would occur in sequential execution of the loop

Directives:

• !$OMP ORDERED

• !$OMP END ORDERED

• #pragma omp ordered


Flush

synchronization point at which the system must provide a consistent view of memory

• all thread visible variables must be written back to memory (if no list is provided), otherwise only those in the list are written back

Implicit flushes of all variables occur automatically at

• all explicit and implicit barriers

• entry and exit from critical regions

• entry and exit from lock routines

Directives

• !$OMP flush [(list)]

• #pragma omp flush [(list)]


Sub-programs in parallel regions

Sub-programs can be called from parallel regions

static extent is code contained lexically

dynamic extent includes static extent + the statements in the call tree

the called sub-program can contain OpenMP directives to control the parallel region

• directives in dynamic extent but not in static extent are called Orphan directives
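An orphaned directive in C could look like the following sketch. The worksharing loop sits in `scale_all`, which has no parallel directive of its own, and binds to whichever parallel region calls it. Both function names are hypothetical.

```c
/* Orphaned worksharing loop: no parallel directive in this function. */
static void scale_all(double *y, int n, double factor)
{
    #pragma omp for
    for (int i = 0; i < n; i++)
        y[i] *= factor;
}   /* implied barrier at the end of the loop construct */

/* The parallel region lives in the caller (the dynamic extent). */
void scale_twice(double *y, int n)
{
    #pragma omp parallel
    {
        scale_all(y, n, 2.0);   /* iterations are shared across the team */
        scale_all(y, n, 3.0);
    }
}
```

If `scale_all` is called from serial code instead, the loop simply runs on the single calling thread.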


Threadprivate

Makes global data private to a thread

• Fortran: COMMON blocks

• C: file scope and static variables

Different from making them PRIVATE

• with PRIVATE global scope is lost

• THREADPRIVATE preserves global scope for each thread

Threadprivate variables can be initialized using COPYIN
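The sketch below shows threadprivate and copyin together in C. The file-scope `counter` keeps its global name inside `bump`, yet every thread updates its own copy; `copyin` seeds each copy from the master's value. All names are made up for the example.

```c
static int counter = 0;
#pragma omp threadprivate(counter)

/* Called from inside a parallel region; touches the calling thread's copy. */
static void bump(void) { counter++; }

/* Returns 1 if every thread saw its own copy go from 100 to 102. */
int threadprivate_demo(void)
{
    int all_ok = 1;

    counter = 100;              /* sets the master thread's copy */

    #pragma omp parallel copyin(counter) reduction(&&:all_ok)
    {
        bump();
        bump();
        all_ok = (counter == 102);  /* no interference between threads */
    }
    return all_ok;
}
```

Had `counter` been an ordinary shared global, the unsynchronised increments would race and the check could fail.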


Run-time Library Routines

omp_set_num_threads(integer)

• Set the number of threads to use in next parallel region.

• can only be called from serial portion of code

• if dynamic threads are enabled, this is the maximum number allowed, if they are disabled then this is the exact number used

omp_get_num_threads

• returns number of threads currently in the team

• returns 1 for serial (or serialized nested) portion of code


Run-time Library Routines Cont.

omp_get_max_threads

• returns maximum value that can be returned by a call to omp_get_num_threads

• generally reflects the value as set by OMP_NUM_THREADS env var or the omp_set_num_threads library routine

• can be called from serial or parallel region

omp_get_thread_num

• returns thread number. Master is 0. Thread numbers are contiguous and unique.

Run-time Library Routines Cont.

omp_get_num_procs

• returns number of processors available

omp_in_parallel

• returns a logical (fortran) or int (C/C++) value indicating if executing in a parallel region


Run-time Library Routines Cont.

omp_set_dynamic (logical(fortran) or int(C))

• set dynamic adjustment of threads by the run time system

• must be called from serial region

• takes precedence over the environment variable

• default setting is implementation dependent

omp_get_dynamic

• used to determine if dynamic thread adjustment is enabled

• returns logical (fortran) or int (C/C++)


Run-time Library Routines Cont.

omp_set_nested (logical(fortran) or int(C))

• enable nested parallelism

• default is disabled

• overrides environment variable OMP_NESTED

omp_get_nested

• determine if nested parallelism is enabled

There are also 5 lock functions which will not be covered here.


Weather Forecasting Example 1

    !$OMP PARALLEL DO
    !$OMP& default(shared)
    !$OMP& private (i,k,l)
          do 50 k=1,nztop
            do 40 i=1,nx
    cWRM remove dependency
    cWRM      l = l+1
              l=(k-1)*nx+i
              dcdx(l)=(ux(l)+um(k))*dcdx(l)+q(l)
     40     continue
     50   continue
    !$OMP end parallel do

• Many parallel loops simply use parallel do

• autoparallelize when possible (usually doesn’t work)

• simplify code by removing unneeded dependencies

• Default (shared) simplifies shared list but Default (none) is recommended.

Weather - Example 2a

          cmass = 0.0
    !$OMP parallel default (shared)
    !$OMP& private(i,j,k,vd,help,..)
    !$OMP& reduction(+:cmass)
          do 40 j=1,ny
    !$OMP do
            do 50 i=1,nx
              vd = vdep(i,j)
              do 10 k=1,nz
                help(k) = c(i,j,k)
     10       continue
              …
              do 30 k=1,nz
                c(i,j,k)=help(k)
                cmass=cmass+help(k)
     30       continue
     50     continue
    !$OMP end do
     40   continue
    !$omp end parallel

Parallel region makes nested do more efficient

• avoid entering and exiting parallel mode

Reduction clause generates parallel summing

Reduction means

• each thread gets private cmass

• private cmass added at end of parallel region

• serial code unchanged

Weather Example - 3

    !$OMP parallel
          do 40 j=1,ny
    !$OMP do schedule(dynamic)
            do 30 i=1,nx
              if(ish.eq.1)then
                call upade(…)
              else
                call ucrank(…)
              endif
     30     continue
     40   continue
    !$OMP end parallel

Schedule(dynamic) for load balancing

Weather Example - 4

    ! don’t, it slows down
    !$OMP parallel do
    !$OMP& default(shared)
    !$OMP& private(i,y2)
          do 30 i=1,loop
            y2=f2(i)
            f2(i)=f0(i) + 2.0*delta*f1(i)
            f0(i)=y2
     30   continue
    !$OMP end parallel do

Don’t over parallelize small loops

Use if(<condition>) clause when loop is sometimes big, other times small

Weather Example - 5

    !$OMP parallel do schedule(dynamic)
    !$OMP& shared(…)
    !$OMP& private(help,…)
    !$OMP& firstprivate (savex,savey)
          do 30 i=1,nztop
            …
     30   continue
    !$OMP end parallel do

First private (…) initializes private variables

On to the Olympics - Advanced Topics

[Image: The Water Cube, Beijing National Aquatics Center]

Loop Level Paradigm

Execute each loop in parallel

Easy to parallelize code

Similar to automatic parallelization

• Automatic Parallelization Option (API) may be good start

Incremental

• One loop at a time

• Doesn’t break code

    !$OMP PARALLEL DO
    do i=1,n
      …………
    enddo
    alpha = xnorm/sum
    !$OMP PARALLEL DO
    do i=1,n
      …………
    enddo
    !$OMP PARALLEL DO
    do i=1,n
      …………
    enddo

Performance

Fine Grain Overhead

• Start a parallel region each time

• Frequent synchronization

Fraction of non parallel work will dominate

• Amdahl’s law

Limited scalability

    !$OMP PARALLEL DO
    do i=1,n
      …………
    enddo
    alpha = xnorm/sum
    !$OMP PARALLEL DO
    do i=1,n
      …………
    enddo
    !$OMP PARALLEL DO
    do i=1,n
      …………
    enddo

Reducing Overhead

Convert to coarser grain model:

• More work per parallel region

• Reduce synchronization across threads

Combine multiple DO directives into single parallel region

• Continue to use Work-sharing directives

Compiler does the work of distributing iterations

Less work for user

• Doesn’t break code


Coarser Grain

Separate parallel regions:

    !$OMP PARALLEL DO
    do i=1,n
      …………
    enddo
    !$OMP PARALLEL DO
    do i=1,n
      …………
    enddo

Single parallel region:

    !$OMP PARALLEL
    !$OMP DO
    do i=1,n
      …………
    enddo
    !$OMP DO
    do i=1,n
      …………
    enddo
    !$OMP END PARALLEL

Using Orphaned Directives

Separate parallel regions in caller and subroutine:

    !$OMP PARALLEL DO
    do i=1,n
      …………
    enddo
    call MatMul(y)

    subroutine MatMul(y)
    !$OMP PARALLEL DO
    do i=1,n
      …………
    enddo

One parallel region with an orphaned directive in the subroutine:

    !$OMP PARALLEL
    !$OMP DO
    do i=1,n
      …………
    enddo
    call MatMul(y)
    !$OMP END PARALLEL

    subroutine MatMul(y)
    ! Orphaned Directive
    !$OMP DO
    do i=1, N
      ……
    enddo

Statements Between Loops

Separate parallel regions:

    !$OMP PARALLEL DO
    !$OMP& REDUCTION(+: sum)
    do i=1,n
      sum = sum + a(i)
    enddo
    alpha = sum/scale
    !$OMP PARALLEL DO
    do i=1,n
      a(i) = alpha * a(i)
    enddo

Single parallel region:

    !$OMP PARALLEL
    !$OMP DO REDUCTION(+: sum)
    do i=1,n
      sum = sum + a(i)
    enddo
    ! Load imbalance: one thread executes the SINGLE block
    !$OMP SINGLE
    alpha = sum/scale
    !$OMP END SINGLE
    !$OMP DO
    do i=1,n
      a(i) = alpha * a(i)
    enddo
    !$OMP END PARALLEL
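The single-region version translates to C roughly as below. `normalise` is a hypothetical name; the comments mark the two implied barriers the pattern relies on.

```c
/* Scales every element of a[] by sum(a) / scale, in one parallel region. */
void normalise(double *a, int n, double scale)
{
    double sum = 0.0;
    double alpha = 0.0;

    #pragma omp parallel
    {
        #pragma omp for reduction(+:sum)
        for (int i = 0; i < n; i++)
            sum += a[i];
        /* implied barrier: sum is complete here */

        #pragma omp single
        alpha = sum / scale;
        /* implied barrier at the end of single: alpha is visible to all */

        #pragma omp for
        for (int i = 0; i < n; i++)
            a[i] *= alpha;
    }
}
```

Adding nowait to the single construct would let threads start the second loop before `alpha` is set, which is why that barrier has to stay.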


Loops (cont.)

With SINGLE:

    !$OMP PARALLEL
    !$OMP DO REDUCTION(+: sum)
    do i=1,n
      sum = sum + a(i)
    enddo
    !$OMP SINGLE
    alpha = sum/scale
    ! Cannot use NOWAIT here
    !$OMP END SINGLE
    !$OMP DO
    do i=1,n
      a(i) = alpha * a(i)
    enddo
    !$OMP END PARALLEL

With replicated execution:

    !$OMP PARALLEL PRIVATE(alpha)
    !$OMP DO REDUCTION(+: sum)
    do i=1,n
      sum = sum + a(i)
    enddo
    ! Replicated execution: every thread computes its own alpha
    alpha = sum/scale
    !$OMP DO
    do i=1,n
      a(i) = alpha * a(i)
    enddo
    !$OMP END PARALLEL

Coarse Grain Work-sharing

Reduced overhead by increasing work per parallel region

But…work-sharing constructs still need to compute loop bounds at each construct

Work between loops not always parallelizable

Synchronization at the end of directive not always avoidable


Domain Decomposition

We could have computed loop bounds once and used that for all loops

• i.e., compute loop decomposition a priori

Enter Domain Decomposition

• More general approach to loop decomposition

• Decompose work into the number of threads

• Each thread gets to work on a sub-domain

Domain Decomposition (cont.)

Transfers onus of decomposition from compiler (work-sharing) to user

Results in Coarse Grain program

• Typically one parallel region for whole program

• Reduced overhead, good scalability

Domain Decomposition results in a model of programming called SPMD

SPMD Programming

SPMD: Single Program Multiple Data

[Figure: 1 domain is split into n sub-domains; n threads each run the same program on one sub-domain.]

Implementing SPMD

    program work
    !$OMP PARALLEL DEFAULT(PRIVATE)
    !$OMP& SHARED(N,global)
    ! Decomposition done manually
    nthreads = omp_get_num_threads()
    iam = omp_get_thread_num()
    ichunk = N/nthreads
    istart = iam*ichunk
    iend = (iam+1)*ichunk -1
    ! Each thread works on its piece.
    call my_sum(istart, iend, local)
    !$OMP ATOMIC
    global = global + local
    !$OMP END PARALLEL
    print*, global
    end
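A C rendering of the SPMD pattern is sketched below. It differs from the Fortran slide in one labelled way: the chunk size is rounded up and the last chunk is clamped, so elements are not dropped when `n` is not a multiple of the thread count. The names `my_sum` and `spmd_sum` and the serial fallbacks are assumptions of the example.

```c
#ifdef _OPENMP
#include <omp.h>
#else
/* Serial fallbacks so the sketch also builds without an OpenMP flag. */
static int omp_get_thread_num(void) { return 0; }
static int omp_get_num_threads(void) { return 1; }
#endif

/* Sums x[istart .. iend-1]; an empty range yields 0. */
static double my_sum(const double *x, int istart, int iend)
{
    double s = 0.0;
    for (int i = istart; i < iend; i++)
        s += x[i];
    return s;
}

/* Every thread runs the same code on its own sub-domain of x. */
double spmd_sum(const double *x, int n)
{
    double global = 0.0;

    #pragma omp parallel default(none) shared(x, n, global)
    {
        int nthreads = omp_get_num_threads();
        int iam = omp_get_thread_num();
        int ichunk = (n + nthreads - 1) / nthreads;  /* rounded up */
        int istart = iam * ichunk;
        int iend = istart + ichunk;
        if (iend > n)
            iend = n;                                /* clamp last chunk */

        double local = my_sum(x, istart, iend);

        #pragma omp atomic
        global += local;
    }
    return global;
}
```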


Implementing SPMD (contd.)

Decomposition is done manually

• Implement to run on any number of threads

Query for number of threads

Find thread number

Each thread calculates its portion (sub-domain) of work

Program is replicated on each thread, but with different extents for the sub-domain

• all sub-domain specific data are PRIVATE


Handling Global Variables

Global variables span the whole domain

• Field variables are usually shared

Synchronization required to update shared variables

• ATOMIC

• CRITICAL

    !$OMP ATOMIC
    p(i) = p(i) + plocal

usually better than

    !$OMP CRITICAL
    p(i) = p(i) + plocal
    !$OMP END CRITICAL

Global private to thread

Use threadprivate for all other sub-domain data that need file scope or common blocks

    parameter (N=1000)
    real A(N,N)
    common/buf/lft(N),rht(N)
    !$OMP THREADPRIVATE(/buf/)
    !$OMP PARALLEL
    call init
    call scale
    call order
    !$OMP END PARALLEL

buf is common within each thread

Threadprivate

    subroutine scale
    parameter (N=1000)
    common/buf/lft(N),rht(N)
    !$OMP THREADPRIVATE(/buf/)
    do i=1, N
      lft(i)= const* A(i,iam)
    end do
    return
    end

    subroutine order
    parameter (N=1000)
    common/buf/lft(N),rht(N)
    !$OMP THREADPRIVATE(/buf/)
    do i=1, N
      A(i,iam) = lft(index)
    end do
    return
    end

Cache Considerations

Typical shared memory model: field variables shared among threads

• Each thread updates field variables for its own subdomain

• Field variable is a shared variable with a size same as the whole domain

For fixed problem size scaling, subdomain size decreases

• Fortran Example

• P(20,20) Field variable

• 1 cache line: 128 bytes on Origin (16 double words)

[Figure: P(20,20) divided into sub-domains s1, s2, s3, s4]

False sharing

Two threads need to write the same cache line

The cache line ping-pongs between the two processors

• A cache line is the smallest unit of transport

[Figure: Thread A and Thread B write to different parts of 1 cache line]

Cache line must move from Processor A to Processor B

Poor performance: must avoid at all costs

Fixing False sharing

Pay attention to granularity

Diagnose by using performance tools if available

Pad false shared arrays or make private
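Padding can be sketched in C as follows. Each thread gets its own counter, and each counter is padded out to an assumed cache line size so that neighbouring counters never share a line. `CACHE_LINE` (64 bytes here, where the slides cite 128 on Origin), `NSLOTS` and the function name are all assumptions of the example.

```c
#ifdef _OPENMP
#include <omp.h>
#else
/* Serial fallback so the sketch also builds without an OpenMP flag. */
static int omp_get_thread_num(void) { return 0; }
#endif

#define CACHE_LINE 64   /* assumed line size in bytes; check your hardware */
#define NSLOTS 4        /* hypothetical team size used by this sketch */

struct padded_count {
    long value;
    char pad[CACHE_LINE - sizeof(long)];    /* pushes the next slot away */
};

/* Counts the even numbers in v[0..n-1] with one padded counter per thread. */
long count_even(const int *v, int n)
{
    struct padded_count counts[NSLOTS] = {{0}};
    long total = 0;

    #pragma omp parallel num_threads(NSLOTS)
    {
        int id = omp_get_thread_num();      /* 0 .. NSLOTS-1 */

        #pragma omp for
        for (int i = 0; i < n; i++)
            if (v[i] % 2 == 0)
                counts[id].value++;         /* each thread: its own line */
    }

    for (int t = 0; t < NSLOTS; t++)
        total += counts[t].value;
    return total;
}
```

The "make private" alternative would be a plain `reduction(+:total)`, which is usually simpler when the per-thread data is a single scalar.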


Stacksize

OpenMP standard does not specify how much stack space a thread should have. Default values vary with operating system and implementation.

Typically stack size is easy to exhaust.

Threads that exceed their stack allocation may or may not seg fault. An application may continue to run while data is being corrupted.

To modify:

• limit stacksize unlimited (for csh/tcsh shells)

• ulimit -s unlimited (for bash/ksh shells)

• (Note: here unlimited means increase up to the system maximum)

Reducing Barriers

Reduce BARRIERs to the minimum

This synchronization is very often done using barrier

• But OMP_BARRIER synchronizes all threads in the region

• BARRIERS have high overhead at large processor counts

• Synchronizing all threads makes load imbalance worse


Summary

Loop level paradigm is easiest to implement but least scalable

Work-sharing in coarse grain parallel regions provides intermediate performance

SPMD model provides good scalability

• OpenMP provides ease of implementation compared to message passing

Shared memory model is forgiving of user error

• Avoid common pitfalls to obtain good performance

Suggestions from a tutorial at WOMPAT 2002 at ARSC

Scheduling - use static scheduling unless load balancing is a real issue. If necessary use static with chunk specified; dynamic or guided schedule types require too much overhead.

Synchronization - Don't use them if at all possible, esp. 'flush'

Common Problems with OpenMP programs

• Too fine grain parallelism

• Overly synchronized

• Load Imbalance

• True Sharing (ping-ponging in cache)

• False Sharing (again a cache problem)


Suggestions from a tutorial at WOMPAT 2002 at ARSC

Tuning Keys

• Know your application

• Only use the parallel do/for statements

• Everything else in the language only slows you down, but they are necessary evils.

Use a profiler on your code whenever possible. This will point out bottlenecks and other problems.

Common correctness issues are race conditions and deadlocks.

Suggestions from a tutorial at WOMPAT 2002 at ARSC

Remember that reduction clauses need a barrier so make sure not to use the 'nowait' clause when a reduction is being computed.

Don't use locks if at all possible! If you have to use them, make sure that you unset them!

If you think you have a race condition, run the loops backwards and see if you get the same result.

Thanks to Tom Logan at ARSC for this report and Tim Mattson & Sanjiv Shah for the tutorial

What’s new in OpenMP 3.0? - some highlights

task parallelism is the big news!

• Allows parallelization of irregular problems: unbounded loops, recursive algorithms, producer/consumer

Loop Parallelism Improvements

• STATIC schedule guarantees

• Loop collapsing

• New AUTO schedule

OMP_STACKSIZE environment variable

Tasks

Tasks are work units whose execution may be deferred

• they can also be executed immediately

Tasks are composed of:

• code to execute

• data environment

• internal control variables (ICV)
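A recursive algorithm is the classic task example. The sketch below computes Fibonacci numbers with one task per recursive call; it needs an OpenMP 3.0 compiler to run in parallel and falls back to plain recursion otherwise. The function names are hypothetical, and a realistic version would stop creating tasks below some cutoff, since tasks this small cost more than they save.

```c
/* Recursive Fibonacci; each call spawns two child tasks. */
static long fib(int n)
{
    long a, b;

    if (n < 2)
        return n;

    #pragma omp task shared(a)
    a = fib(n - 1);             /* may be deferred to another thread */

    #pragma omp task shared(b)
    b = fib(n - 2);

    #pragma omp taskwait        /* wait for both children */
    return a + b;
}

/* One thread creates the root of the task tree; the team executes it. */
long fib_parallel(int n)
{
    long result = 0;

    #pragma omp parallel
    {
        #pragma omp single
        result = fib(n);
    }
    return result;
}
```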


OpenMP 3.0 Compiler Support

Intel 11.0: Linux (x86), Windows (x86) and MacOS (x86)

Sun Studio Express 11/08: Linux (x86) and Solaris (SPARC + x86)

PGI 8.0: Linux (x86) and Windows (x86)

IBM 10.1: Linux (POWER) and AIX (POWER)

GCC 4.4 will have support for OpenMP 3.0