Part I


Quantum Information

Stephen M. Barnett

University of Strathclyde

steve@phys.strath.ac.uk

The Wolfson Foundation

1. Probability and Information
2. Elements of Quantum Theory
3. Quantum Cryptography
4. Generalized Measurements
5. Entanglement
6. Quantum Information Processing
7. Quantum Computation
8. Quantum Information Theory

Quantum Information, Stephen M. Barnett, Oxford University Press (2009)

1. Probability and Information
1.1 Introduction
1.2 Conditional probabilities
1.3 Entropy and information
1.4 Communications theory

1.1 Introduction

There is a fundamental link between probabilities and information.

Reverend Thomas Bayes (1702 - 1761)

Probabilities depend on what we know. If we acquire additional information then this modifies the probability.

Entropy is a function of probabilities.

Ludwig Boltzmann (1844 - 1906)

The entropy depends on the number $W$ of (equiprobable) microstates:

$$S = k \log W$$

The quantity of information is the entropy of the associated probability distribution.

Claude Shannon (1916 - 2001)

$$H = -\sum_i p_i \log p_i$$

The extent to which a message can be compressed is determined by its information content.

Given sufficient redundancy, all errors in transmission can be corrected.

Probabilities depend on available information; information is a function of the probabilities.

Information theory is applicable to any statistical or probabilistic problem.

Quantum theory is probabilistic and so there must be a quantum information theory.

But probability amplitudes are the primary quantity, so quantum information (QI) is different ...

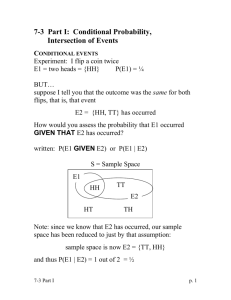

1.2 Conditional probabilities

Suppose we have a single event $A$ with possible outcomes $\{a_i\}$. Everything we know is specified by the probabilities for the possible outcomes: $P(a_i)$.

For the coin toss the possible outcomes are “heads” and “tails”: P(heads) = 1/2 and P(tails) = 1/2.

More generally:

$$0 \le P(a_i) \le 1, \qquad \sum_i P(a_i) = 1.$$

Two events:

Add a second event $B$ with outcomes $\{b_j\}$ and probabilities $P(b_j)$. A complete description is provided by the joint probabilities $P(a_i, b_j)$.

If $A$ and $B$ are independent (and hence uncorrelated) then

$$P(a_i, b_j) = P(a_i)\,P(b_j).$$

Single-event probabilities and joint probabilities are related by:

$$P(a_i) = \sum_j P(a_i, b_j), \qquad P(b_j) = \sum_i P(a_i, b_j).$$
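A minimal Python sketch of the two marginal sums above, assuming the joint probabilities are stored as a nested list `joint[i][j]` $= P(a_i, b_j)$. The helper names and the two example tables are illustrative, not from the slides; exact `Fraction` arithmetic makes the independence test an exact comparison.

```python
from fractions import Fraction as F

def marginals(joint):
    """Return (P(a_i), P(b_j)) from a joint table joint[i][j] = P(a_i, b_j)."""
    p_a = [sum(row) for row in joint]          # sum over j
    p_b = [sum(col) for col in zip(*joint)]    # sum over i
    return p_a, p_b

def is_independent(joint):
    """True if P(a_i, b_j) = P(a_i) P(b_j) for every pair (i, j)."""
    p_a, p_b = marginals(joint)
    return all(joint[i][j] == p_a[i] * p_b[j]
               for i in range(len(p_a)) for j in range(len(p_b)))

# Hypothetical table: two independent fair coins
coins = [[F(1, 4), F(1, 4)],
         [F(1, 4), F(1, 4)]]
# Hypothetical table: perfectly correlated outcomes (B always equals A)
agree = [[F(1, 2), F(0)],
         [F(0), F(1, 2)]]
```

Both tables have the same marginals, which is the point: the single-event probabilities alone cannot distinguish independent events from correlated ones.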

What does learning the value of $A$ tell us about the probabilities for the value of $B$?

If we learn that $A = a_0$, then the quantities of interest are the conditional probabilities $P(b_j|a_0)$: the probability of $b_j$ given that $A = a_0$. This conditional probability is proportional to the joint probability:

$$P(b_j|a_0) \propto P(a_0, b_j).$$

Finding the constant of proportionality (it is $1/P(a_0)$, since the $P(b_j|a_0)$ must sum to 1) leads to Bayes’ rule:

$$P(a_i, b_j) = P(b_j|a_i)\,P(a_i) \qquad \text{or} \qquad P(a_i, b_j) = P(a_i|b_j)\,P(b_j).$$

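[Figure: probability tree. The first branching gives the outcomes $a_1, a_2, a_3$ of $A$ with probabilities $P(a_i)$; each of these branches again into the outcomes $b_1, b_2, b_3$ of $B$ with conditional probabilities $P(b_j|a_i)$.]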

Bayes’ theorem

Relation between the two types of conditional probability:

$$P(a_i|b_j) = \frac{P(b_j|a_i)\,P(a_i)}{P(b_j)}$$

or, as an alternative,

$$P(a_i|b_j) \propto P(b_j|a_i)\,P(a_i), \qquad P(a_i|b_j) = \frac{P(b_j|a_i)\,P(a_i)}{\sum_k P(b_j|a_k)\,P(a_k)}.$$

Example

Each day I take a long ($l$) or short ($s$) route from home to work and may arrive on time ($O$) or late ($L$), with

$$P(a_l) = 1/4, \qquad P(b_O|a_l) = 3/4, \qquad P(b_O|a_s) = 1,$$

so that $P(a_s) = 3/4$.

Given that you see me arrive on time, what is the probability that I took the long route?

$$P(a_l|b_O) = \frac{P(b_O|a_l)\,P(a_l)}{P(b_O|a_s)\,P(a_s) + P(b_O|a_l)\,P(a_l)} = \frac{3/16}{15/16} = \frac{1}{5}.$$
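The route calculation can be checked with exact fractions. This is a sketch: `posterior` is a hypothetical helper implementing the normalised form of Bayes’ theorem, with the probabilities taken from the example.

```python
from fractions import Fraction as F

def posterior(prior, likelihood):
    """Bayes' theorem: P(a_i|b) = P(b|a_i) P(a_i) / sum_k P(b|a_k) P(a_k)."""
    joint = {a: likelihood[a] * prior[a] for a in prior}
    evidence = sum(joint.values())                 # P(b), the normalisation
    return {a: joint[a] / evidence for a in joint}

prior = {"long": F(1, 4), "short": F(3, 4)}        # P(a_l), P(a_s)
p_on_time = {"long": F(3, 4), "short": F(1)}       # P(b_O | a)
post = posterior(prior, p_on_time)
```

Here `post["long"]` is exactly `Fraction(1, 5)`, and the two posterior probabilities sum to 1 by construction.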

1.3 Entropy and information

The quantity of information is the entropy of the associated probability distribution for the event.

Suppose we have a single event $A$ with possible outcomes $\{a_i\}$. If one outcome, $a_0$, is certain to occur, $P(a_0) = 1$, then we acquire no information by observing $A$. If $A = a_0$ is very likely then we might have confidently expected it, and so we learn very little. If $A = a_0$ is highly unlikely then we might need to drastically change our plans.

Learning the value of $A$ provides a quantity of information, $h[P(a_i)]$, that increases as the corresponding probability decreases.

[Figure: $h[P(a_i)]$ plotted against $P(a_i)$.]

We think of learning about something new as adding to the available information. For two independent events we have:

$$h[P(a_i, b_j)] = h[P(a_i)P(b_j)] = h[P(a_i)] + h[P(b_j)].$$

This suggests logarithms:

$$h[P(a_i)] = -K \log P(a_i).$$

It is useful to define information as an average for the event $A$:

$$H(A) = \sum_i P(a_i)\,h[P(a_i)] = -K \sum_i P(a_i) \log P(a_i).$$

Entropy!!

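We can absorb $K$ into the choice of base of the logarithm, since

$$\log_b x = \log_b a \, \log_a x.$$

Log base 2:

$$H(A) = -\sum_i P(a_i) \log P(a_i) \ \text{bits}$$

Log base $e$:

$$H_e(A) = -\sum_i P(a_i) \ln P(a_i) \ \text{nats}$$

For two possible outcomes:

$$H = -p \log p - (1-p) \log(1-p)$$

[Figure: $H$ plotted against $p$; the maximum, one bit of information, occurs at $p = 1/2$.]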
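The entropy formulas can be sketched in Python as below (function names are illustrative). Zero-probability outcomes are skipped, using the convention $0 \log 0 = 0$; the `base` parameter selects bits (2) or nats ($e$).

```python
import math

def entropy(probs, base=2):
    """H = -sum_i p_i log p_i; base 2 gives bits, base e gives nats.
    Terms with p_i = 0 are dropped, since p log p -> 0 as p -> 0."""
    return -sum(p * math.log(p, base) for p in probs if p > 0)

def binary_entropy(p):
    """Two outcomes with probabilities p and 1 - p, in bits."""
    return entropy([p, 1 - p])
```

For example, `binary_entropy(0.5)` gives one bit, a certain outcome gives zero, and six equally likely outcomes give $\ln 6$ nats.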

Mathematical properties of entropy

• $H(A)$ is zero if and only if one of the probabilities is unity. Otherwise it is positive.

• If $A$ can take $n$ possible values $a_1, a_2, \ldots, a_n$, then the maximum value is $H(A) = \log n$, which occurs for $P(a_i) = 1/n$.

• Any change towards equalising the probabilities will cause $H(A)$ to increase (or, at worst, leave it unchanged): if

$$P'(a_i) = \sum_j \lambda_{ij} P(a_j), \qquad \sum_i \lambda_{ij} = 1 = \sum_j \lambda_{ij}, \qquad \lambda_{ij} \ge 0,$$

then

$$H'(A) \ge H(A).$$
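A quick numerical illustration of the third property, assuming a hand-picked doubly stochastic matrix $\lambda$ (every row and column sums to 1). Both the matrix and the starting distribution are arbitrary choices for illustration, not from the slides.

```python
import math

def entropy_bits(probs):
    return -sum(p * math.log2(p) for p in probs if p > 0)

def mix(lam, probs):
    """P'(a_i) = sum_j lam[i][j] P(a_j)."""
    return [sum(l * p for l, p in zip(row, probs)) for row in lam]

# Hypothetical doubly stochastic matrix: all rows and columns sum to 1
lam = [[0.5, 0.5, 0.0],
       [0.25, 0.25, 0.5],
       [0.25, 0.25, 0.5]]
p = [0.7, 0.2, 0.1]
p_new = mix(lam, p)          # [0.45, 0.275, 0.275]: closer to equal
```

The entropy rises from about 1.16 bits to about 1.54 bits. A permutation matrix is also doubly stochastic; it merely relabels the outcomes and leaves $H(A)$ unchanged, which is the equality case.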

Entropy for two events

$$H(A,B) = -\sum_{ij} P(a_i,b_j) \log P(a_i,b_j)$$

$$H(A) = -\sum_{ij} P(a_i,b_j) \log \sum_k P(a_i,b_k)$$

$$H(B) = -\sum_{ij} P(a_i,b_j) \log \sum_l P(a_l,b_j)$$

$$H(A) + H(B) - H(A,B) = \sum_{ij} P(a_i,b_j) \log \frac{P(a_i,b_j)}{P(a_i)\,P(b_j)} \ge 0$$

Mutual information

• A measure of the correlation between two events:

$$H(A:B) = H(A) + H(B) - H(A,B)$$

• The mutual information is bounded:

$$0 \le H(A:B) \le \min[H(A), H(B)]$$
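A sketch computing $H(A:B) = H(A) + H(B) - H(A,B)$ in bits from a joint table `joint[i][j]` $= P(a_i, b_j)$. The three example tables are hypothetical, chosen to hit both bounds (0 for independent events, $H(A) = 1$ bit when $B$ copies $A$) and one case in between.

```python
import math

def H(probs):
    """Entropy in bits, skipping zero probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def mutual_information(joint):
    """H(A:B) = H(A) + H(B) - H(A,B) for joint[i][j] = P(a_i, b_j)."""
    p_a = [sum(row) for row in joint]
    p_b = [sum(col) for col in zip(*joint)]
    return H(p_a) + H(p_b) - H([x for row in joint for x in row])

perfect = [[0.5, 0.0], [0.0, 0.5]]          # B always equals A
independent = [[0.25, 0.25], [0.25, 0.25]]  # B tells us nothing about A
noisy = [[0.4, 0.1], [0.1, 0.4]]            # B usually equals A
```

The noisy table gives about 0.28 bits, between the two bounds.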

Problems for Bayes’ theorem?

(i) Can we interpret probabilities as frequencies of occurrence?

(ii) How should we assign prior probabilities?

(i) This is the way probabilities are usually interpreted in statistical mechanics, communications theory and quantum theory.

(ii) Minimise any bias by maximising the entropy subject to what we know. This is Jaynes’ MaxEnt principle. It makes the prior probabilities as near to equal as is possible, consistent with any information we have.

Edwin Jaynes (1922 - 1998)

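Max Ent examples

A true die has probability 1/6 for each of the possible scores. This means a mean score of 3.5. What probabilities should we assign given only that the mean score is 3.47?

$$H_e = -\sum_{n=1}^{6} p_n \ln p_n$$

Maximise this entropy subject to the known constraints by varying the $p_n$, introducing Lagrange multipliers $\lambda$ and $\mu$:

$$\tilde H = H_e + \lambda\Big(1 - \sum_{n=1}^{6} p_n\Big) + \mu\Big(3.47 - \sum_{n=1}^{6} n\,p_n\Big)$$

Setting $\partial\tilde H/\partial p_n = -\ln p_n - 1 - \lambda - \mu n = 0$ gives

$$p_n = e^{-(1+\lambda)} e^{-\mu n} = \frac{e^{-\mu n}}{\sum_{k=1}^{6} e^{-\mu k}}, \qquad \mu \approx 0.010.$$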
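The multiplier $\mu$ can be found numerically. This sketch is one possible approach, not necessarily the one used in the lecture: since the mean score falls monotonically as $\mu$ rises, $\mu$ can be found by bisection. The search bracket $[-5, 5]$ is an assumption that comfortably covers means between 1 and 6.

```python
import math

SCORES = range(1, 7)

def maxent_die(mean, lo=-5.0, hi=5.0, tol=1e-12):
    """MaxEnt die with a given mean: p_n = exp(-mu n) / sum_k exp(-mu k).
    mu is found by bisection, using the fact that the mean falls as mu rises."""
    def dist(mu):
        w = [math.exp(-mu * n) for n in SCORES]
        z = sum(w)
        return [x / z for x in w]

    def mean_of(mu):
        return sum(n * p for n, p in zip(SCORES, dist(mu)))

    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mean_of(mid) > mean:
            lo = mid                  # mean still too high: need a larger mu
        else:
            hi = mid
    mu = 0.5 * (lo + hi)
    return mu, dist(mu)

mu, p = maxent_die(3.47)
```

For a target of 3.47 this gives $\mu$ close to 0.0103, consistent with $\mu \approx 0.010$; a target of 3.5 returns $\mu \approx 0$ and the uniform distribution of the true die.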

Max Ent examples

Should we treat variables with known separate statistical properties as correlated or not?

90% of Finns have blue eyes.

94% of Finns are native Finnish speakers.

Probabilities:

| | Swedish | Finnish | Total |
|---|---|---|---|
| Not blue-eyed | $x$ | $0.10 - x$ | 10% |
| Blue-eyed | $0.06 - x$ | $0.84 + x$ | 90% |
| Total | 6% | 94% | |

Maximising $H_e$ gives $x = 0.006 = 6\% \times 10\%$: independent!
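The table has a single free parameter, $x$, so the entropy can be maximised by a one-dimensional search. The sketch below uses golden-section search (our choice, not from the slides), which works because $H_e$ is concave in $x$; the interval $[0, 0.06]$ is where all four table entries are non-negative.

```python
import math

def entropy_nats(x):
    """H_e for the four table entries x, 0.10 - x, 0.06 - x and 0.84 + x."""
    cells = [x, 0.10 - x, 0.06 - x, 0.84 + x]
    return -sum(c * math.log(c) for c in cells if c > 0)

def golden_max(f, lo, hi, tol=1e-10):
    """Golden-section search for the maximum of a unimodal f on [lo, hi]."""
    g = (math.sqrt(5) - 1) / 2            # 0.618...
    a, b = lo, hi
    while b - a > tol:
        c, d = b - g * (b - a), a + g * (b - a)
        if f(c) > f(d):
            b = d                         # maximum lies in [a, d]
        else:
            a = c                         # maximum lies in [c, b]
    return 0.5 * (a + b)

x_best = golden_max(entropy_nats, 0.0, 0.06)
```

The result agrees with $0.006 = 0.06 \times 0.10$, the value for independent eye colour and language.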

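Max Ent and thermodynamics

Consider a physical system that can have a number of states labelled by $n$, each with an associated energy $E_n$. What is the probability for occupying each state?

Maximise the entropy

$$H_e = -\sum_n p_n \ln p_n$$

subject to the constraint

$$\bar E = \sum_n p_n E_n:$$

$$\tilde H = H_e + \lambda\Big(1 - \sum_n p_n\Big) + \beta\Big(\bar E - \sum_n p_n E_n\Big),$$

$$d\tilde H = -\sum_n \big(\ln p_n + 1 + \lambda + \beta E_n\big)\,dp_n = 0.$$

The solution is

$$p_n = e^{-(1+\lambda)} e^{-\beta E_n} = \frac{e^{-\beta E_n}}{Z(\beta)}, \qquad Z(\beta) = \sum_n e^{-\beta E_n}.$$

We recognise this as the Boltzmann distribution: $Z(\beta)$ is the partition function and $\beta = (k_B T)^{-1}$ is the inverse temperature.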
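A sketch of the resulting distribution for a hypothetical three-level system; the energies and the value of $\beta$ are arbitrary illustrative choices, in units where $\beta E_n$ is dimensionless.

```python
import math

def boltzmann(energies, beta):
    """p_n = exp(-beta E_n) / Z(beta), with Z(beta) = sum_n exp(-beta E_n)."""
    weights = [math.exp(-beta * e) for e in energies]
    z = sum(weights)                      # partition function Z(beta)
    return [w / z for w in weights], z

levels = [0.0, 1.0, 2.0]                  # hypothetical energies E_n
p, z = boltzmann(levels, beta=1.0)        # beta = 1 / (k_B T), matching units
mean_energy = sum(pn * en for pn, en in zip(p, levels))
```

Lower energies are more probable, with successive ratios $e^{-\beta(E_{n+1}-E_n)}$; setting $\beta = 0$ (infinite temperature) recovers equal probabilities, the unconstrained MaxEnt result.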

Information and thermodynamics

Information is physical: it is stored as the arrangement of physical systems.

Second law of thermodynamics:

“During real physical processes, the entropy of an isolated system always increases. In the state of equilibrium the entropy attains its maximum value.”

Rudolf Clausius (1822 - 1888)

“A process whose effect is the complete conversion of heat into work cannot occur.”

Lord Kelvin (William Thomson) (1824 - 1907)

On the decrease of entropy in a thermodynamic system by the intervention of intelligent beings

Leo Szilard (1898 - 1964)

Box of volume $V_0$, containing a single molecule and surrounded by a heat bath at temperature $T$.

[Figure: the box of volume $V_0$ in contact with the heat bath at temperature $T$.]


[Figure: the expanding gas does work $W$, while heat $Q = W$ is transferred from the bath.]

The single molecule obeys the ideal-gas law $PV = k_B T$. Inserting the partition reduces the volume from $V_0$ to $V_0/2$. The work done by the expanding gas is

$$W = \int_{V_0/2}^{V_0} P\,dV = k_B T \ln 2, \qquad Q = W.$$

The expansion of the gas increases the entropy by the amount

$$\Delta S = \frac{Q}{T} = k_B \ln 2.$$

Kelvin’s formulation of the second law is saved if the process of measuring and recording the position of the molecule produces at least this much entropy. Here entropy is clearly linked to information.
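As a numerical check of the Szilard work, the sketch below integrates $P = k_B T / V$ from $V_0/2$ to $V_0$ with the midpoint rule. The temperature of 300 K and the step count are illustrative assumptions. The result does not depend on $V_0$, and at 300 K it is about $2.87 \times 10^{-21}$ J, the same $k_B T \ln 2$ that appears in Landauer’s bound.

```python
import math

K_B = 1.380649e-23          # Boltzmann constant, J/K (exact in the 2019 SI)

def szilard_work(T, V0=1.0, steps=100_000):
    """Work done by a one-molecule gas (P V = k_B T) expanding isothermally
    from V0/2 to V0, via a midpoint-rule estimate of the integral of P dV."""
    a, b = V0 / 2, V0
    h = (b - a) / steps
    return h * sum(K_B * T / (a + (k + 0.5) * h) for k in range(steps))

T = 300.0                   # illustrative room temperature, K
W = szilard_work(T)
delta_S = W / T             # entropy change Q / T, with Q = W
```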

Irreversibility and heat generation in the computing process

Erasing an unknown bit of information requires the dissipation of at least $k_B T \ln 2$ of energy.

Rolf Landauer (1927 - 1999)

[Figures: the one-molecule box storing bit value 1, and the same box storing bit value 0.]

Remove the partition, then push in a new partition from the right to reset the bit value to 0. The work done to compress the gas is dissipated as heat:

$$W = -\int_{V_0}^{V_0/2} P\,dV = k_B T \ln 2 = Q.$$

1.4 Communications theory

Communications systems exist for the purpose of conveying information between two or more parties (here, Alice and Bob).

Communications systems are necessarily probabilistic... If Bob knows the message before it is sent then there is no need to send it! Bob may know the probability for Alice to select each of the possible messages, but not which one she has selected until the signal is received.

Shannon’s communications model

[Figure: information source → transmitter → signal → received signal → receiver → destination, with a noise source acting on the signal; Alice is at the sending end and Bob at the receiving end.]

• Noiseless coding theorem - data compression

• Noisy coding theorem - channel capacity

Let $A$ denote the events in Alice’s domain. The choices of message and associated prepared signals are $\{a_i\}$, and the probability that Alice selects and prepares the signal $a_i$ is $P(a_i)$.

Let $B$ denote the reception event: the receipt of the signal and its decoding to produce the message. The set of possible received messages is $\{b_j\}$.

The operation of the channel is described by the conditional probabilities $\{P(b_j|a_i)\}$. From Bob’s perspective the relevant quantities are $\{P(a_i|b_j)\}$. Each received signal is perfectly decodable if $P(a_i|b_j) = \delta_{ij}$.
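A sketch of how Bob’s conditional probabilities follow from Alice’s $P(a_i)$ and the channel’s $P(b_j|a_i)$ by Bayes’ theorem. The binary symmetric channel with flip probability 1/10 is a hypothetical example, `bob_view` is an illustrative helper name, and the code assumes every $P(b_j)$ is non-zero.

```python
from fractions import Fraction as F

def bob_view(p_a, channel):
    """From Alice's P(a_i) and the channel channel[i][j] = P(b_j|a_i),
    return P(b_j) and Bob's back[j][i] = P(a_i|b_j) via Bayes' theorem."""
    n_a, n_b = len(p_a), len(channel[0])
    p_b = [sum(channel[i][j] * p_a[i] for i in range(n_a)) for j in range(n_b)]
    back = [[channel[i][j] * p_a[i] / p_b[j] for i in range(n_a)]
            for j in range(n_b)]
    return p_b, back

# Hypothetical binary symmetric channel with bit-flip probability 1/10
q = F(1, 10)
p_b, back = bob_view([F(1, 2), F(1, 2)], [[1 - q, q], [q, 1 - q]])

# A noiseless channel is perfectly decodable: P(a_i|b_j) = delta_ij
_, back_ideal = bob_view([F(1, 2), F(1, 2)], [[1, 0], [0, 1]])
```

With the noisy channel, receiving $b_1$ leaves Bob only 9/10 sure that Alice sent $a_1$; the noiseless channel gives the identity matrix, $\delta_{ij}$.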

Shannon’s coding theorems

Most messages have an element of redundancy and can be compressed and still be readable:

TXT MSSGS SHRTN NGLSH SNTNCS

TEXT MESSAGES SHORTEN ENGLISH SENTENCES

The eleven missing vowels were a redundant component; removing them has not impaired the message’s understandability.

Shannon’s noiseless coding theorem quantifies the redundancy: it tells us by how much the message can be shortened and still be read without error.

Shannon’s coding theorems

Why do we need redundancy? To correct errors.

RQRS BN MK WSAGS NFDBL

This message has been compressed and then errors introduced. Let’s try the effect of errors on the uncompressed message:

ERQORS BAN MAKE WESAAGIS UNFEADCBLE

ERRORS CAN MAKE MESSAGES UNREADABLE

Shannon’s noisy channel coding theorem tells us how much redundancy we need in order to combat the errors.

Noiseless coding theorem

Consider a string of $N$ bits, 0 and 1, with $P(0) = p$ and $P(1) = 1 - p$, where $p > 1/2$. The number of possible different strings is $2^N$, and the most probable is 0000…0. The probability that a string selected at random has $n$ zeros and $N - n$ ones is

$$P(n) = \frac{N!}{n!\,(N-n)!}\,p^n (1-p)^{N-n}.$$

In the limit of long strings ($N \gg 1$) it is overwhelmingly likely that the number of zeros will be close to $Np$. Hence we need only consider typical strings with

$$n \approx pN.$$

All other possibilities are sufficiently unlikely that they can be ignored. If we consider coding only messages for which $n = pN$, then the number of strings reduces to

$$W = \frac{N!}{n!\,(N-n)!}.$$

Taking the logarithm of this and using Stirling’s approximation gives

$$\log W \approx N \log N - n \log n - (N-n)\log(N-n) = -N[p \log p + (1-p)\log(1-p)] = N H(p).$$

The number of equiprobable typical messages is

$$W \approx 2^{NH(p)} \le 2^N.$$

This is Shannon’s noiseless coding theorem: we can compress a message by reducing the number of bits from $N$ to $NH(p)$, a factor of $H(p)$, but no further.
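The Stirling estimate can be checked numerically. For $p = 0.9$ (an arbitrary choice) the sketch compares $(1/N)\log W$ with $H(p)$ for increasing $N$; `math.lgamma(k + 1)` gives $\ln k!$ without forming huge integers.

```python
import math

def log2_binomial(N, n):
    """log2 of N! / (n! (N - n)!), using lgamma(k + 1) = ln k!."""
    return (math.lgamma(N + 1) - math.lgamma(n + 1)
            - math.lgamma(N - n + 1)) / math.log(2)

def H2(p):
    """Binary entropy in bits, for 0 < p < 1."""
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

p = 0.9                                   # illustrative P(0)
ratios = []
for N in (100, 1_000, 10_000, 100_000):
    n = round(p * N)                      # typical number of zeros
    ratios.append(log2_binomial(N, n) / N)
```

The ratios approach $H(0.9) \approx 0.469$ from below; the shortfall comes from the lower-order terms that Stirling’s approximation drops, and it shrinks as $N$ grows.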

Example

Consider a message formed from the alphabet A, B, C and D, with probabilities

$$P(A) = \frac{1}{2}, \qquad P(B) = \frac{1}{4}, \qquad P(C) = P(D) = \frac{1}{8}.$$

The simplest coding scheme would use two bits for each letter (in the form 00, 01, 10 and 11). Hence a sequence of $N$ letters would require $2N$ bits.

The information for the letter probabilities is

$$H = -\frac{1}{2}\log\frac{1}{2} - \frac{1}{4}\log\frac{1}{4} - 2 \times \frac{1}{8}\log\frac{1}{8} = \frac{7}{4}\ \text{bits},$$

which gives a Shannon limit of $1.75N$ bits for the sequence of $N$ letters.

For this simple example the Shannon limit can be reached by the following coding scheme:

A = 0

B = 10

C = 110

D = 111

The average number of bits used to encode a sequence of $N$ letters is then

$$N\Big(\frac{1}{2} \times 1 + \frac{1}{4} \times 2 + 2 \times \frac{1}{8} \times 3\Big) = \frac{7}{4}N = HN.$$
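The prefix code can be exercised directly. In this sketch (function names illustrative), decoding reads bits until they match a codeword, which is unambiguous because no codeword is a prefix of another; the average codeword length equals $H = 7/4$ bits per letter.

```python
import math

CODE = {"A": "0", "B": "10", "C": "110", "D": "111"}
PROBS = {"A": 1 / 2, "B": 1 / 4, "C": 1 / 8, "D": 1 / 8}

def encode(message):
    return "".join(CODE[ch] for ch in message)

def decode(bits):
    """Read bits until they form a codeword; safe because the code is prefix-free."""
    lookup = {v: k for k, v in CODE.items()}
    out, buf = [], ""
    for b in bits:
        buf += b
        if buf in lookup:
            out.append(lookup[buf])
            buf = ""
    return "".join(out)

avg_len = sum(PROBS[s] * len(CODE[s]) for s in CODE)    # bits per letter
H = -sum(p * math.log2(p) for p in PROBS.values())     # entropy, bits per letter
```

For example, `encode("ABAD")` gives `"0100111"`, and `decode` recovers the original letters.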

Noisy channel coding theorem

We can combat errors by introducing redundancy, but how much do we need?

Consider an optimally compressed message comprising $N_0$ bits, so that each of the $2^{N_0}$ possible messages has probability $2^{-N_0}$. Let bit errors occur with probability $q$:

[Figure: binary symmetric channel; each bit, 0 or 1, is received correctly with probability $1 - q$ and flipped with probability $q$.]

The number of errors will be close to $qN_0$, and we need to correct these. The number of ways in which $qN_0$ errors can be distributed among $N_0$ bits is

$$E = \frac{N_0!}{(qN_0)!\,(N_0 - qN_0)!}.$$

Bob can correct the errors if he knows where they are, so Alice can tell him using $\log E$ bits sent over a separate correction channel alongside the $N_0$ message bits, with

$$\log E \approx -N_0[q \log q + (1-q)\log(1-q)] = N_0 H(q).$$

At least $N_0[1 + H(q)]$ bits are required in the combined signal and correction channels.

If the correction channel also has error rate $q$ then we can correct the errors on it with a second correction channel.

[Figure: Alice sends $N_0$ bits to Bob, with $N_0 H(q)$ bits on the first correction channel and $N_0 H^2(q)$ bits on the second.]

We can correct the errors on this second channel with a third one, and so on. The total number of bits required is then (the series converges provided $H(q) < 1$)

$$N_0[1 + H(q) + H^2(q) + \cdots] = \frac{N_0}{1 - H(q)}.$$

All of the correction channels have the same bit error rate, so we can replace the entire construction by the original noisy channel!! We can do this by selecting messages that are sufficiently distinct that Bob can associate each likely (typical) received message with a unique original message.

Shannon’s noisy channel coding theorem:

• We require at least $N_0/[1 - H(q)]$ bits in order to faithfully encode $2^{N_0}$ messages.

• $N$ bits can be used to carry faithfully not more than $2^{N[1 - H(q)]}$ distinct messages.

• Each binary digit can carry not more than

$$\frac{\log 2^{N[1-H(q)]}}{N} = 1 - H(q)$$

bits of information.

What happens when $q = 0$, $1/2$ or $1$?
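A small sketch checking two statements above: the chain of correction channels sums to $N_0/[1 - H(q)]$, and the per-digit rate $1 - H(q)$ at $q = 0$, $1/2$ and $1$. The values $N_0 = 1000$, $q = 0.01$ and the truncation at 200 terms are illustrative choices.

```python
import math

def H2(q):
    """Binary entropy in bits, using 0 log 0 = 0 so that H2(0) = H2(1) = 0."""
    return -sum(x * math.log2(x) for x in (q, 1 - q) if x > 0)

def bits_needed(N0, q, levels=200):
    """N0 [1 + H(q) + H(q)^2 + ...], truncated after `levels` terms:
    the message plus a chain of correction channels, each with error rate q."""
    return N0 * sum(H2(q) ** k for k in range(levels))

N0, q = 1000, 0.01
total = bits_needed(N0, q)
closed_form = N0 / (1 - H2(q))       # requires H(q) < 1, i.e. q != 1/2

# Bits of information per binary digit, 1 - H(q), for the three special cases
rates = {q_case: 1 - H2(q_case) for q_case in (0.0, 0.5, 1.0)}
```

The rate is 1 bit for $q = 0$, 0 for $q = 1/2$ (the output is then independent of the input) and 1 bit again for $q = 1$, since Bob can simply invert every bit.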

Summary

• Probabilities depend on available information.

• Information is a function of the probabilities.

• Information is physical.

• It is the information that limits communications.

Three or more events

We can add a third event $C$ with outcomes $\{c_k\}$ and probabilities $P(c_k)$. The complete description is then given by the joint probabilities $P(a_i, b_j, c_k)$.

We can also write conditional probabilities, but need to be careful with the notation: $P(a_i|b_j, c_k)$ vs $P(a_i, b_j|c_k)$.

Bayes’ theorem still works:

$$P(a_i, b_j, c_k) = P(a_i|b_j, c_k)\,P(b_j, c_k) = P(a_i|b_j, c_k)\,P(b_j|c_k)\,P(c_k),$$

$$P(a_i|b_j, c_k) \propto P(b_j, c_k|a_i)\,P(a_i).$$

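Fisher’s likelihood

How does learning that $B = b_j$ affect the probability that $A = a_i$? Bayes’ theorem tells us that

$$P(a_i|b_j) \propto \mathcal{L}(a_i|b_j)\,P(a_i), \qquad \mathcal{L}(a_i|b_j) \propto P(b_j|a_i).$$

Note the order: the likelihood of $a_i$ given $b_j$ is proportional to the probability of $b_j$ given $a_i$.

Sir Ronald Aylmer Fisher (1890 - 1962)

The likelihood quantifies the effect of what we learn. When $B$ and $C$ are independent for each given $a_i$ (as for repeated independent trials), a further observation $C = c_k$ simply multiplies in its own likelihood:

$$P(a_i|b_j, c_k) \propto \mathcal{L}(a_i|c_k)\,P(a_i|b_j) \propto \mathcal{L}(a_i|c_k)\,\mathcal{L}(a_i|b_j)\,P(a_i).$$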

Example from genetics

Mice can be black or brown. Black, B, is the dominant gene and brown, b, is recessive: a brown mouse is bb, while a black mouse is BB or Bb (bB).

If we mate a black mouse with a brown one, how does the colour of the offspring modify the probabilities for the black mouse’s genes?

In the absence of any information about ancestry we can only assign equal probabilities to each of the 3 possible arrangements (BB, Bb and bB):

P(BB) = 1/3, P(Bb) = 2/3.

If any of the offspring are brown then the test mouse must be Bb. If they are all black then the probability that it is BB increases with each birth:

$$\mathcal{L}(BB|x_i\ \text{black}) \propto P(x_i\ \text{black}|BB) = 1, \qquad \mathcal{L}(Bb|x_i\ \text{black}) \propto P(x_i\ \text{black}|Bb) = \frac{1}{2}.$$

After one birth:

$$P(BB|x_1\ \text{black}) \propto 1 \times \frac{1}{3}, \qquad P(Bb|x_1\ \text{black}) \propto \frac{1}{2} \times \frac{2}{3},$$

$$P(BB|x_1\ \text{black}) = \frac{1}{2}, \qquad P(Bb|x_1\ \text{black}) = \frac{1}{2}.$$

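Probability tree for successive offspring:

| Offspring observed | Probability of BB | Probability of Bb | Odds BB : Bb |
|---|---|---|---|
| None (prior) | 1/3 | 2/3 | 1:2 |
| 1 brown | 0 | 1 | 0:1 |
| 1 black | 1/2 | 1/2 | 1:1 |
| 2 black | 2/3 | 1/3 | 2:1 |
| 3 black | 4/5 | 1/5 | 4:1 |
| 4 black | 8/9 | 1/9 | 8:1 |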
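The table can be regenerated by repeated Bayesian updates, multiplying in one likelihood per offspring. A sketch with exact fractions; the dictionary layout and the `update` helper are illustrative.

```python
from fractions import Fraction as F

# P(offspring black | genotype) for a cross with a brown (bb) mouse
P_BLACK = {"BB": F(1), "Bb": F(1, 2)}

def update(probs, offspring):
    """One Bayesian update: P(g | x) is proportional to P(x | g) P(g)."""
    like = {g: P_BLACK[g] if offspring == "black" else 1 - P_BLACK[g]
            for g in probs}
    unnorm = {g: like[g] * probs[g] for g in probs}
    total = sum(unnorm.values())
    return {g: unnorm[g] / total for g in probs}

prior = {"BB": F(1, 3), "Bb": F(2, 3)}   # from the three equally likely arrangements
probs, history = prior, []
for _ in range(4):                        # four black offspring in a row
    probs = update(probs, "black")
    history.append(probs["BB"])
```

After four black offspring, `history` holds 1/2, 2/3, 4/5 and 8/9, matching the table; a single brown offspring sends P(BB) to 0.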

Summary

Probabilities depend on what we know. If we acquire additional information then this modifies the probability.

Bayes’ theorem relates different types of conditional probabilities:

$$P(a_i|b_j) = \frac{P(b_j|a_i)\,P(a_i)}{P(b_j)}$$

The likelihood tells us how probabilities change when we acquire information:

$$P(a_i|b_j) \propto \mathcal{L}(a_i|b_j)\,P(a_i), \qquad \mathcal{L}(a_i|b_j) \propto P(b_j|a_i)$$

Information Bayes’ rule

The non-negative quantity $H(A,B) - H(A)$ is a function of the conditional probabilities:

$$H(A,B) - H(A) = -\sum_i P(a_i) \sum_j P(b_j|a_i) \log P(b_j|a_i).$$

It tells us how much information about $B$ remains to be gained once we have learned $A$.

We can define information for the conditional probabilities:

$$H(B|a_i) = -\sum_j P(b_j|a_i) \log P(b_j|a_i)$$

or, on average:

$$H(B|A) = \sum_i P(a_i)\,H(B|a_i) = H(A,B) - H(A).$$
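A numerical check of $H(B|A) = H(A,B) - H(A)$, computing the left-hand side directly as the average of $H(B|a_i)$ over a hypothetical joint table.

```python
import math

def H(probs):
    """Entropy in bits, skipping zero probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def conditional_entropy(joint):
    """H(B|A) = sum_i P(a_i) H(B|a_i), with P(b_j|a_i) = P(a_i, b_j) / P(a_i)."""
    total = 0.0
    for row in joint:
        p_a = sum(row)
        if p_a > 0:
            total += p_a * H([x / p_a for x in row])
    return total

joint = [[0.3, 0.2], [0.1, 0.4]]          # hypothetical P(a_i, b_j)
h_b_given_a = conditional_entropy(joint)
h_ab = H([x for row in joint for x in row])
h_a = H([sum(row) for row in joint])
```

The two sides agree, and $H(B|A)$ never exceeds $H(B)$: learning $A$ cannot leave us more uncertain about $B$ on average.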

Summary

The quantity of information is the entropy of the associated probability distribution for the event.

The mutual information is a measure of the correlation between two events:

$$H(A:B) = H(A) + H(B) - H(A,B)$$

The Max Ent principle requires us to assign probabilities so as to maximise the information. This is useful in statistics and in thermodynamics.

There is a fundamental link between information and thermodynamic entropy. Information is physical.

We have made a number of drastic approximations. In particular, there are many messages for which $n$ differs from $pN$ by a very small number. Including these increases the number of typical messages to

$$W \approx 2^{N[H(p) + \delta]},$$

where $\delta$ tends to zero as $N$ tends to infinity. The message can be compressed by the factor $H(p) + \delta$, and this tends to $H(p)$ in the limit of long messages.

More generally, if each symbol can take $m$ values then the number of strings is $m^N = 2^{N \log m}$. If these symbols have probabilities $\{P(a_i)\}$ then the $N \log m$ bits can be compressed to $NH(A)$ bits.

Channel capacity

The likely number of bit errors means that a sequence of $N$ bits will, with very high probability, be transformed into one of $2^{NH(q)}$ sequences. We can combat the noise by selecting $2^{N[1 - H(q)]}$ sufficiently different messages.

More generally, consider a string of $N$ symbols, each taking one of the values $\{a_i\}$. Alice can produce about $2^{NH(A)}$ likely messages, and each of these can produce about $2^{NH(B|A)}$ likely received strings. Bob can receive about $2^{NH(B)}$ likely strings in total, so the number of messages that can be sent and reconstructed by Bob is

$$\frac{2^{NH(B)}}{2^{NH(B|A)}} = 2^{N[H(B) - H(B|A)]} = 2^{NH(A:B)},$$

where $H(A:B)$ is the mutual information. We can maximise the information rate by maximising $H(A:B)$ over the input probabilities $\{P(a_i)\}$. This maximum is the channel capacity:

$$C = \max_{\{P(a_i)\}} H(A:B).$$

$N$ symbols can reliably encode $2^{NC}$ messages and no more than this.

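[Figure: binary symmetric channel; each bit is received correctly with probability $1 - q$ and flipped with probability $q$.]

$$H(A:B) = -\sum_{i=1,2}\big[P(a_i)(1-q) + P(a_{\bar\imath})\,q\big]\log\big[P(a_i)(1-q) + P(a_{\bar\imath})\,q\big] + q\log q + (1-q)\log(1-q),$$

where $a_{\bar\imath}$ denotes the other input symbol. The maximum, attained for $P(a_1) = P(a_2) = 1/2$, gives

$$C = 1 - H(q).$$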

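Example

[Figure: a channel with four inputs $a_1, \ldots, a_4$ and four outputs $b_1, \ldots, b_4$; each input leads to one of two outputs, and all conditional probabilities are 1/2.]

$$\begin{aligned}
H(A:B) = {} & -\tfrac12[P(a_1)+P(a_2)]\log\big\{\tfrac12[P(a_1)+P(a_2)]\big\} - \tfrac12[P(a_3)+P(a_4)]\log\big\{\tfrac12[P(a_3)+P(a_4)]\big\} \\
& -\tfrac12[P(a_2)+P(a_3)]\log\big\{\tfrac12[P(a_2)+P(a_3)]\big\} - \tfrac12[P(a_4)+P(a_1)]\log\big\{\tfrac12[P(a_4)+P(a_1)]\big\} - 1
\end{aligned}$$

$$C = 1\ \text{bit},$$

e.g. just use the symbols $a_1$ and $a_3$, whose possible outputs do not overlap, so that Bob can distinguish them without error.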
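The maximisation over $\{P(a_i)\}$ can also be done numerically. The sketch below uses the Blahut-Arimoto iteration, a standard capacity algorithm that is not part of these slides, with `W[i][j]` $= P(b_j|a_i)$. The labelling of the four-symbol channel ($a_i$ leads to $b_i$ or $b_{i+1}$, cyclically) is our assumption, chosen to be consistent with the four terms of $H(A:B)$ above.

```python
import math

def blahut_arimoto(W, iters=2000):
    """Estimate C = max over P(a) of H(A:B), in bits, for the channel
    W[i][j] = P(b_j | a_i), using the Blahut-Arimoto iteration."""
    n, m = len(W), len(W[0])
    p = [1.0 / n] * n                              # start from equiprobable inputs
    for _ in range(iters):
        # Output distribution P(b_j) = sum_i P(a_i) P(b_j | a_i)
        q = [sum(p[i] * W[i][j] for i in range(n)) for j in range(m)]
        # c_i = exp(sum_j P(b_j|a_i) ln[P(b_j|a_i) / P(b_j)])
        c = [math.exp(sum(w * math.log(w / q[j])
                          for j, w in enumerate(W[i]) if w > 0))
             for i in range(n)]
        z = sum(pi * ci for pi, ci in zip(p, c))
        p = [pi * ci / z for pi, ci in zip(p, c)]  # reweight the inputs
    # ln z converges to the capacity in nats; convert to bits
    return math.log(z) / math.log(2), p

bsc = [[0.9, 0.1], [0.1, 0.9]]                     # binary symmetric, q = 0.1
four = [[0.5, 0.5, 0.0, 0.0],                      # hypothetical labelling:
        [0.0, 0.5, 0.5, 0.0],                      # a_i -> b_i or b_{i+1}
        [0.0, 0.0, 0.5, 0.5],
        [0.5, 0.0, 0.0, 0.5]]
c_bsc, _ = blahut_arimoto(bsc)
c_four, _ = blahut_arimoto(four)
c_a1_a3, _ = blahut_arimoto([four[0], four[2]])    # use only a_1 and a_3
```

For the binary symmetric channel this reproduces $1 - H(0.1) \approx 0.531$ bits, and for the four-symbol channel 1 bit; restricting the inputs to $a_1$ and $a_3$ gives the same 1 bit with no ambiguity at the output.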

Summary

It is the information that limits communications.

Noiseless coding: Most messages contain redundancy and can be compressed. If each letter $A$ can take $m$ possible values then the $m^N = 2^{N \log m}$ possible strings of $N$ letters can be compressed to $2^{NH(A)}$ typical ones.

Noisy coding: We can combat errors by using redundancy. The number of messages that can be sent and decoded cannot exceed $2^{NH(A:B)}$.

The maximum communication rate is set by the channel capacity $C = \max H(A:B)$.