Lecture 1

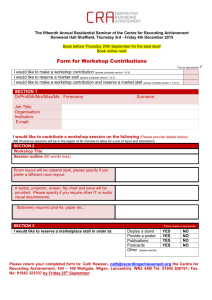

ELEC 669 Low Power Design Techniques
Lecture 1
Amirali Baniasadi
amirali@ece.uvic.ca

ELEC 669: Low Power Design Techniques
• Instructor: Amirali Baniasadi
• EOW 441, only by appointment. Call or email with your schedule.
• Email: amirali@ece.uvic.ca
• Office Tel: 721-8613
• Web page for this class will be at http://www.ece.uvic.ca/~amirali/courses/ELEC669/elec669.html
• Will use paper reprints.
• Lecture notes will be posted on the course web page.

Course Structure
• Lectures:
  - 1-2 weeks on processor review
  - 5 weeks on low power techniques
  - 6 weeks: discussion, presentation, meetings
• A reading paper is posted on the web for each week. Bring a 1-page review of the papers.
• Presentations: each student should give two presentations in class.

Course Philosophy
• Papers are to be used as a supplement to the lectures. (If a topic is not covered in class, or a detail is not presented in class, I expect you to read on your own to learn those details.)
• One Project (50%)
• Presentation (30%): will be announced in advance.
• Final Exam: take home (20%)
• IMPORTANT NOTE: You must get a passing grade in all components to pass the course. Failing any of the three components will result in failing the course.

Project
• More on the project later.

Topics
• High Performance Processors?
• Low-Power Design
• Low-Power Branch Prediction
• Low-Power Register Renaming
• Low-Power SRAMs
• Low-Power Front-End
• Low-Power Back-End
• Low-Power Issue Logic
• Low-Power Commit
• AND more…

A Modern Processor
[Diagram: pipeline stages Fetch, Decode, Issue, Complete, Commit, grouped into Front-end and Back-end]
1. What does each stage do?
2. Possible power optimizations?

Power Breakdown
• Alpha 21464: Front-end 6%, Back-end 68%, Rest 26%
• PentiumPro: Front-end 28%, Back-end 35%, Rest 37%

Instruction Set Architecture (ISA)
• Instruction Execution Cycle
  - Fetch instruction from memory
  - Decode instruction: determine its size & action
  - Fetch operand data
  - Execute instruction & compute results or status
  - Store result in memory
  - Determine next instruction's address

What Should We Know?
• A specific ISA (MIPS)
• Performance issues - vocabulary and motivation
• Instruction-Level Parallelism
• How to use pipelining to improve performance
• Exploiting Instruction-Level Parallelism w/ dynamic approach
• Memory: caches and virtual memory

What is Expected From You?
• Read papers!
• Be up-to-date!
• Come back with your input & questions for discussion!

Power?
• Everything is done by tiny switches
  - Their charge represents logic values
  - Changing charge → energy
  - Power → energy over time
• Devices are non-ideal: power → heat
• Excess heat → circuits break down
• Need to keep power within acceptable limits

POWER in the real world
[Chart: power density in W/cm2 (log scale, 1 to 1000) for i386, i486, Pentium, Pentium Pro, Pentium II, Pentium III, and Pentium IV, compared with a nuclear reactor]

Power as a Performance Limiter
• Conventional performance scaling:
  - Goal: max. performance w/ min. cost/complexity
  - How: more and faster transistors; more complex structures
  - Power: don't fix if it ain't broken
• Not true anymore: power has increased rapidly
• Power-aware architecture is a necessity

Power-Aware Architecture
• Conventional architecture:
  - Goal: max. performance
  - How: do as much as you can
• This work: power-aware architecture
  - Goal: min. power and maintain performance
  - How: do as little as you can, while maintaining performance
• Challenging and new area

Why is this challenging?
• Identify actions that can be delayed/eliminated
• Don't touch those that boost performance
• Cost/power of doing so must not outweigh the benefits

Definitions
• Performance is in units of things-per-second: bigger is better
• If we are primarily concerned with response time:

$$\text{performance}(x) = \frac{1}{\text{execution\_time}(x)}$$

• "X is n times faster than Y" means:

$$n = \frac{\text{Performance}(X)}{\text{Performance}(Y)}$$

Amdahl's Law
Speedup due to enhancement E:

$$\text{Speedup}(E) = \frac{\text{ExTime w/o } E}{\text{ExTime w/ } E} = \frac{\text{Performance w/ } E}{\text{Performance w/o } E}$$

Suppose that enhancement E accelerates a fraction F of the task by a factor S, and the remainder of the task is unaffected. Then:

$$\text{ExTime(with } E) = \left((1-F) + \frac{F}{S}\right) \times \text{ExTime(without } E)$$

$$\text{Speedup(with } E) = \frac{\text{ExTime(without } E)}{\left((1-F) + \frac{F}{S}\right) \times \text{ExTime(without } E)} = \frac{1}{(1-F) + \frac{F}{S}}$$

Amdahl's Law: example
A new CPU makes Web serving 10 times faster. The old CPU spent 40% of the time on computation and 60% on waiting for I/O. What is the overall enhancement?
• Fraction enhanced = 0.4
• Speedup enhanced = 10

$$\text{Speedup}_{\text{overall}} = \frac{1}{0.6 + \frac{0.4}{10}} = \frac{1}{0.64} \approx 1.56$$

Why Do Benchmarks?
• How we evaluate differences
  - Different systems
  - Changes to a single system
• Provide a target
  - Benchmarks should represent a large class of important programs
  - Improving benchmark performance should help many programs
• For better or worse, benchmarks shape a field
• Good ones accelerate progress
  - Good target for development
• Bad benchmarks hurt progress
  - Help real programs v. sell machines/papers?
  - Inventions that help real programs don't help the benchmark

SPEC first round
• First round 1989; 10 programs, single number to summarize performance
• One program: 99% of time in a single line of code
• New front-end compiler could improve dramatically
[Chart: SPEC Perf (0 to 800) per benchmark: gcc, espresso, spice, doduc, nasa7, li, eqntott, matrix300, fpppp, tomcatv]

SPEC95
• Eighteen application benchmarks (with inputs) reflecting a technical computing workload
• Eight integer: go, m88ksim, gcc, compress, li, ijpeg, perl, vortex
• Ten floating-point intensive: tomcatv, swim, su2cor, hydro2d, mgrid, applu, turb3d, apsi, fpppp, wave5
• Must run with standard compiler flags
  - Eliminates special undocumented incantations that may not even generate working code for real programs

Summary

$$\text{CPU time} = \frac{\text{Seconds}}{\text{Program}} = \frac{\text{Instructions}}{\text{Program}} \times \frac{\text{Cycles}}{\text{Instruction}} \times \frac{\text{Seconds}}{\text{Cycle}}$$

• Time is the measure of computer performance!
• Remember Amdahl's Law: improvement is limited by the unimproved part of the program

Execution Cycle
• Instruction Fetch: obtain instruction from program storage
• Instruction Decode: determine required actions and instruction size
• Operand Fetch: locate and obtain operand data
• Execute: compute result value or status
• Result Store: deposit results in storage for later use
• Next Instruction: determine successor instruction

What Must be Specified?
[Diagram: Instruction Fetch, Instruction Decode, Operand Fetch, Execute, Result Store, Next Instruction]
• Instruction format or encoding
  - how is it decoded?
• Location of operands and result
  - where other than memory?
  - how many explicit operands?
  - how are memory operands located?
  - which can or cannot be in memory?
• Data type and size
• Operations
  - what are supported
• Successor instruction
  - jumps, conditions, branches

What Is an ILP?
• Principle: many instructions in the code do not depend on each other
• Result: possible to execute them in parallel
• ILP: potential overlap among instructions (so they can be evaluated in parallel)
• Issues:
  - Building compilers to analyze the code
  - Building special/smarter hardware to handle the code
• ILP: increase the amount of parallelism exploited among instructions
• Seeks good results out of pipelining

What Is ILP?

    CODE A:              CODE B:
    LD  R1, (R2)100      LD  R1, (R2)100
    ADD R4, R1           ADD R4, R1
    SUB R5, R1           SUB R5, R4
    CMP R1, R2           SW  R5, (R2)100
    ADD R3, R1           LD  R1, (R2)100

• Code A: possible to execute 4 instructions in parallel.
• Code B: can't execute more than one instruction per cycle.
• Code A has higher ILP.

Out of Order Execution
• Programmer: instructions execute in order
• Processor: instructions may execute in any order if results remain the same at the end

    In-Order             Out-of-Order
    A: LD  R1, (R2)      B: ADD R3, R4
    B: ADD R3, R4        C: ADD R3, R5
    C: ADD R3, R5        A: LD  R1, (R2)
    D: CMP R3, R1        D: CMP R3, R1

[Diagram: dependence graph over instructions A, B, C, D]

Assumptions
• Five-stage integer pipeline
• Branches have a delay of one clock cycle
  - ID stage: comparisons done, decisions made and PC loaded
• No structural hazards
  - Functional units are fully pipelined or replicated (as many times as the pipeline depth)
• FP latencies (table below)
• Integer load latency: 1; integer ALU operation latency: 0

    Source instruction   Dependent instruction   Latency (clock cycles)
    FP ALU op            Another FP ALU op       3
    FP ALU op            Store double            2
    Load double          FP ALU op               1
    Load double          Store double            0

Simple Loop & Assembler Equivalent

    for (i=1000; i>0; i--)
        x[i] = x[i] + s;

    Loop: LD   F0, 0(R1)       ;F0=array element
          ADDD F4, F0, F2      ;add scalar in F2
          SD   F4, 0(R1)       ;store result
          SUBI R1, R1, #8      ;decrement pointer 8 bytes (DW)
          BNE  R1, R2, Loop    ;branch R1!=R2

• x[i] & s are double/floating point type
• R1 is initially the address of the array element with the highest address
• F2 contains the scalar value s
• Register R2 is pre-computed so that 8(R2) is the last element to operate on

Where are the stalls?
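As a rough cross-check of this question, the sketch below replays the five-instruction loop from the previous slide against the latency table from the Assumptions slide. It is an illustrative model, not the lecture's method: the function name `count_cycles`, the instruction classes, and the one-cycle SUBI-to-BNE latency (inferred from the branch being resolved in ID) are assumptions made here.

```python
# Stall cycles required between a producer and a dependent consumer.
# The first four entries come from the latency table on the Assumptions slide.
# The ("int_alu", "branch") entry is an assumption of this sketch: the branch
# is resolved in ID, so it needs the SUBI result one cycle early.
LATENCY = {
    ("fp_alu", "fp_alu"): 3,
    ("fp_alu", "store"): 2,
    ("load", "fp_alu"): 1,
    ("load", "store"): 0,
    ("int_alu", "branch"): 1,
}

# (text, class, destination register, source registers)
UNSCHEDULED_LOOP = [
    ("LD   F0, 0(R1)", "load", "F0", ["R1"]),
    ("ADDD F4, F0, F2", "fp_alu", "F4", ["F0", "F2"]),
    ("SD   F4, 0(R1)", "store", None, ["F4", "R1"]),
    ("SUBI R1, R1, #8", "int_alu", "R1", ["R1"]),
    ("BNE  R1, R2, Loop", "branch", None, ["R1", "R2"]),
]


def count_cycles(program):
    """Issue instructions in order, one per cycle, stalling on dependences.

    Returns (total_cycles, schedule), where schedule is a list of
    (issue_cycle, instruction_text) pairs.
    """
    producer = {}  # register -> (class of producing instruction, issue cycle)
    cycle = 0
    schedule = []
    for text, kind, dest, sources in program:
        issue = cycle + 1  # earliest slot for an in-order, single-issue pipeline
        for reg in sources:
            if reg in producer:
                prod_kind, prod_cycle = producer[reg]
                gap = LATENCY.get((prod_kind, kind), 0)
                issue = max(issue, prod_cycle + 1 + gap)
        schedule.append((issue, text))
        if dest is not None:
            producer[dest] = (kind, issue)
        cycle = issue
    # A branch at the end of the body leaves its one-cycle delay slot unfilled.
    if program and program[-1][1] == "branch":
        cycle += 1
    return cycle, schedule


if __name__ == "__main__":
    total, schedule = count_cycles(UNSCHEDULED_LOOP)
    for issue_cycle, text in schedule:
        print(f"cycle {issue_cycle:2d}: {text}")
    print(f"total: {total} cycles, {total - len(UNSCHEDULED_LOOP)} of them stalls")
```

Under these assumptions the model reports 10 cycles for one iteration, 5 of which are stalls. The same function can be applied to reordered or unrolled versions of the loop to compare schedules, as long as the delay-slot and branch-latency assumptions above are acceptable.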
Unscheduled loop:

    Loop: LD   F0, 0(R1)
          stall
          ADDD F4, F0, F2
          stall
          stall
          SD   F4, 0(R1)
          SUBI R1, R1, #8
          stall
          BNE  R1, R2, Loop
          stall

10 clock cycles. Can we minimize?

Scheduled loop:

    Loop: LD   F0, 0(R1)
          SUBI R1, R1, #8
          ADDD F4, F0, F2
          stall
          BNE  R1, R2, Loop
          SD   F4, 8(R1)

6 clock cycles (3 cycles: actual work; 3 cycles: overhead). Can we minimize further?

    Source instruction   Dependent instruction   Latency (clock cycles)
    FP ALU op            Another FP ALU op       3
    FP ALU op            Store double            2
    Load double          FP ALU op               1
    Load double          Store double            0

Loop Unrolling

Four copies of loop:

          LD   F0, 0(R1)
          ADDD F4, F0, F2
          SD   F4, 0(R1)
          SUBI R1, R1, #8
          BNE  R1, R2, Loop
          LD   F0, 0(R1)
          ADDD F4, F0, F2
          SD   F4, 0(R1)
          SUBI R1, R1, #8
          BNE  R1, R2, Loop
          LD   F0, 0(R1)
          ADDD F4, F0, F2
          SD   F4, 0(R1)
          SUBI R1, R1, #8
          BNE  R1, R2, Loop
          LD   F0, 0(R1)
          ADDD F4, F0, F2
          SD   F4, 0(R1)
          SUBI R1, R1, #8
          BNE  R1, R2, Loop

Eliminate Incr, Branch (the first three SUBI/BNE pairs are the ones to be eliminated; the load/store offsets and the final decrement are adjusted):

          LD   F0, 0(R1)
          ADDD F4, F0, F2
          SD   F4, 0(R1)
          SUBI R1, R1, #8
          BNE  R1, R2, Loop
          LD   F0, -8(R1)
          ADDD F4, F0, F2
          SD   F4, -8(R1)
          SUBI R1, R1, #8
          BNE  R1, R2, Loop
          LD   F0, -16(R1)
          ADDD F4, F0, F2
          SD   F4, -16(R1)
          SUBI R1, R1, #8
          BNE  R1, R2, Loop
          LD   F0, -24(R1)
          ADDD F4, F0, F2
          SD   F4, -24(R1)
          SUBI R1, R1, #32
          BNE  R1, R2, Loop

Four iteration code:

    Loop: LD   F0, 0(R1)
          ADDD F4, F0, F2
          SD   F4, 0(R1)
          LD   F6, -8(R1)
          ADDD F8, F6, F2
          SD   F8, -8(R1)
          LD   F10, -16(R1)
          ADDD F12, F10, F2
          SD   F12, -16(R1)
          LD   F14, -24(R1)
          ADDD F16, F14, F2
          SD   F16, -24(R1)
          SUBI R1, R1, #32
          BNE  R1, R2, Loop

Assumption: R1 is initially a multiple of 32, or the number of loop iterations is a multiple of 4.

Loop Unroll & Schedule

Unrolled, before scheduling:

    Loop: LD   F0, 0(R1)
          stall
          ADDD F4, F0, F2
          stall
          stall
          SD   F4, 0(R1)
          LD   F6, -8(R1)
          stall
          ADDD F8, F6, F2
          stall
          stall
          SD   F8, -8(R1)
          LD   F10, -16(R1)
          stall
          ADDD F12, F10, F2
          stall
          stall
          SD   F12, -16(R1)
          LD   F14, -24(R1)
          stall
          ADDD F16, F14, F2
          stall
          stall
          SD   F16, -24(R1)
          SUBI R1, R1, #32
          stall
          BNE  R1, R2, Loop
          stall

28 clock cycles or 7 per iteration. Can we minimize further?

Unrolled, after scheduling:

    Loop: LD   F0, 0(R1)
          LD   F6, -8(R1)
          LD   F10, -16(R1)
          LD   F14, -24(R1)
          ADDD F4, F0, F2
          ADDD F8, F6, F2
          ADDD F12, F10, F2
          ADDD F16, F14, F2
          SD   F4, 0(R1)
          SD   F8, -8(R1)
          SD   F12, -16(R1)
          SUBI R1, R1, #32
          BNE  R1, R2, Loop
          SD   F16, 8(R1)

No stalls! 14 clock cycles or 3.5 per iteration. Can we minimize further?

Summary
• Iteration: 10 cycles
• Iteration + Scheduling: 6 cycles
• Iteration + Unrolling: 7 cycles
• Iteration + Unrolling + Scheduling: 3.5 cycles (no stalls)

Multiple Issue
• Multiple issue is the ability of the processor to start more than one instruction in a given cycle.
• Superscalar processors
• Very Long Instruction Word (VLIW) processors

A Modern Processor
[Diagram: pipeline stages Fetch, Decode, Issue, Complete, Commit, grouped into Front-end and Back-end, annotated "Multiple Issue"]

1990's: Superscalar Processors
• Bottleneck: CPI >= 1
  - Limit on scalar performance (single instruction issue)
  - Hazards
  - Superpipelining? Diminishing returns (hazards + overhead)
• How can we make the CPI = 0.5?
  - Multiple instructions in every pipeline stage (super-scalar)

    Cycle    1    2    3    4    5    6    7
    Inst0    IF   ID   EX   MEM  WB
    Inst1    IF   ID   EX   MEM  WB
    Inst2         IF   ID   EX   MEM  WB
    Inst3         IF   ID   EX   MEM  WB
    Inst4              IF   ID   EX   MEM  WB
    Inst5              IF   ID   EX   MEM  WB

Elements of Advanced Superscalars
• High performance instruction fetching
  - Good dynamic branch and jump prediction
  - Multiple instructions per cycle, multiple branches per cycle?
• Scheduling and hazard elimination
  - Dynamic scheduling
  - Not necessarily: Alpha 21064 & Pentium were statically scheduled
  - Register renaming to eliminate WAR and WAW
• Parallel functional units, paths/buses/multiple register ports
• High performance memory systems
• Speculative execution

SS + DS + Speculation
Superscalar + Dynamic scheduling + Speculation: three great tastes that taste great together
• CPI >= 1?
  - Overcome with superscalar
• Superscalar increases hazards
  - Overcome with dynamic scheduling
• RAW dependences still a problem?
  - Overcome with a large window
• Branches a problem for filling a large window?
  - Overcome with speculation

The Big Picture
[Diagram: Static program → Fetch & branch predict → issue → execution → Reorder & commit]

Superscalar Microarchitecture
[Diagram blocks: Predecode; Inst. cache; Inst. buffer; Decode, rename, dispatch; Floating point inst. buffer; Integer address inst. buffer; Floating point register file; Integer register file; Functional units; Functional units and data cache; Memory interface; Reorder and commit]

Register renaming methods
• First method:
  - Physical register file vs. logical (architectural) register file
  - Mapping table used to associate a physical register with the current value of a logical register
  - Use a free list of physical registers
  - Physical register file is bigger than the logical register file
• Second method:
  - Physical register file is the same size as the logical one
  - Also use a buffer with one entry per instruction: the reorder buffer

Register Renaming Example

Unrolled, before scheduling:

    Loop: LD   F0, 0(R1)
          stall
          ADDD F4, F0, F2
          stall
          stall
          SD   F4, 0(R1)
          LD   F6, -8(R1)
          stall
          ADDD F8, F6, F2
          stall
          stall
          SD   F8, -8(R1)
          LD   F10, -16(R1)
          stall
          ADDD F12, F10, F2
          stall
          stall
          SD   F12, -16(R1)
          LD   F14, -24(R1)
          stall
          ADDD F16, F14, F2
          stall
          stall
          SD   F16, -24(R1)
          SUBI R1, R1, #32
          stall
          BNE  R1, R2, Loop
          stall

28 clock cycles or 7 per iteration. Can we minimize further?

Unrolled, after scheduling:

    Loop: LD   F0, 0(R1)
          LD   F6, -8(R1)
          LD   F10, -16(R1)
          LD   F14, -24(R1)
          ADDD F4, F0, F2
          ADDD F8, F6, F2
          ADDD F12, F10, F2
          ADDD F16, F14, F2
          SD   F4, 0(R1)
          SD   F8, -8(R1)
          SD   F12, -16(R1)
          SUBI R1, R1, #32
          BNE  R1, R2, Loop
          SD   F16, 8(R1)

No stalls! 14 clock cycles or 3.5 per iteration. Can we minimize further?

Register renaming: first method

Instruction: add r3, r3, 4

    Mapping table (before)     Mapping table (after)
    r0  R8                     r0  R8
    r1  R7                     r1  R7
    r2  R5                     r2  R5
    r3  R1                     r3  R2
    r4  R9                     r4  R9

    Free list (before): R2, R6, R13
    Free list (after):  R6, R13

Superscalar Processors
• Issues a varying number of instructions per clock
• Scheduling: static (by the compiler) or dynamic (by the hardware)
• Superscalar has a varying number of instructions/cycle (1 to 8), scheduled by compiler or by HW (Tomasulo).
• IBM PowerPC, Sun UltraSparc, DEC Alpha, HP 8000

More Realistic HW: Register Impact
• Effect of limiting the number of renaming registers
• FP: 11 - 45
• Integer: 5 - 15
[Chart: instruction issues per cycle (IPC, 0 to 60) for gcc, espresso, li, fpppp, doduc, and tomcatv, with Infinite, 256, 128, 64, 32, and no renaming registers]

Reorder Buffer
• Reserve entry at tail when dispatched
• Place data in entry when execution finished
• Bypass to other instructions when needed
• Remove from head when complete

Register renaming: reorder buffer

Instruction: add r3, r3, 4
Renamed in two steps: add r3, rob6, 4, then add rob8, rob6, 4

    Mapping table (before)     Mapping table (after)
    r0  R8                     r0  R8
    r1  R7                     r1  R7
    r2  R5                     r2  R5
    r3  rob6                   r3  rob8
    r4  R9                     r4  R9

[Diagram: reorder buffer contents (entries 6 to 8) before and after the instruction is renamed]

Instruction Buffers
[Diagram blocks: Predecode; Inst. cache; Inst. buffer; Decode, rename, dispatch; Floating point inst. buffer; Integer address inst. buffer; Floating point register file; Integer register file; Functional units; Functional units and data cache; Memory interface; Reorder and commit]

Issue Buffer Organization
• a) Single, shared queue
  - No out-of-order
  - No renaming
• b) Multiple queues; one per instruction type
  - No out-of-order inside queues
  - Queues issue out of order

Issue Buffer Organization
• c) Multiple reservation stations (one per instruction type or big pool)
  - No FIFO ordering
  - Ready operands, hardware available → execution starts
  - Proposed by Tomasulo
[Diagram: reservation stations fed from instruction dispatch]

Typical reservation station

    Operation | source 1 | data 1 | valid 1 | source 2 | data 2 | valid 2 | destination

Memory Hazard Detection Logic
[Diagram: instruction issue sends loads and stores to address add & translation; load addresses go to a load address buffer and on to memory; store addresses go to a store address buffer; an address compare between the two buffers drives hazard control]
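The address-compare step in the memory hazard detection slide can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the hardware described in the lecture: the class `StoreAddressBuffer`, the function `can_issue_load`, and the choice to compare whole addresses for exact equality are all hypothetical.

```python
from collections import deque


class StoreAddressBuffer:
    """Addresses of stores that have been issued but not yet written to memory."""

    def __init__(self):
        self.pending = deque()  # oldest store at the left

    def add_store(self, address):
        """Record a newly issued store."""
        self.pending.append(address)

    def retire_oldest(self):
        """Remove the oldest store once it has been written to memory."""
        return self.pending.popleft()

    def conflicts_with(self, load_address):
        """Address compare: does any pending store target this address?"""
        return load_address in self.pending


def can_issue_load(load_address, store_buffer):
    """Hazard control: hold the load back while an earlier store to the
    same address is still pending (assumes exact-match comparison)."""
    return not store_buffer.conflicts_with(load_address)


if __name__ == "__main__":
    stores = StoreAddressBuffer()
    stores.add_store(0x100)
    print(can_issue_load(0x100, stores))  # False: a pending store matches
    print(can_issue_load(0x108, stores))  # True: no pending store matches
    stores.retire_oldest()
    print(can_issue_load(0x100, stores))  # True: the store has completed
```

A real design would compare at some fixed granularity and might forward the store data to the load instead of stalling; the sketch only shows the compare-and-hold decision.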