What could go wrong?

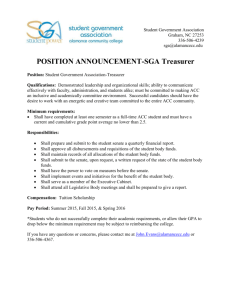

advertisement

OpenACC for Fortran

PGI Compilers for Heterogeneous Supercomputing

Advanced GPU Programming

Data management API routines

Multiple devices

Atomic operations

Derived types

Managed memory

Conditional GPU code

Multicore as a target

Interoperability with OpenMP

Interoperability with CUDA C and CUDA Libraries

Interoperability with CUDA Fortran

Data Management API

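| API routine | Directive equivalent |
|---|---|
| acc_copyin(a(:)) | !$acc enter data copyin(a(:)) |
| acc_create(b(:)) | !$acc enter data create(b(:)) |
| acc_copyout(a(:)) | !$acc exit data copyout(a(:)) |
| acc_delete(b(:)) | !$acc exit data delete(b(:)) |
| acc_is_present(a(2:n-1)) | (no directive form) |
| acc_update_host(a(2:n-1)) | !$acc update host(a(2:n-1)) |
| acc_update_device(b(2:n)) | !$acc update device(b(2:n)) |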
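A minimal C sketch of the same correspondence, assuming the C bindings of the API (acc_copyin and acc_copyout take a host address and a byte count). The routine name scale_with_api is hypothetical. The API calls are guarded by _OPENACC so that a compiler without OpenACC support builds a plain host version; between the two calls the data stays on the device, just as it would between enter data and exit data.

```c
#include <stddef.h>
#ifdef _OPENACC
#include <openacc.h>
#endif

/* Hypothetical routine: scale a[0:n] by s, managing device data with
   API calls instead of enter data / exit data directives. */
void scale_with_api(float *a, int n, float s)
{
#ifdef _OPENACC
    acc_copyin(a, (size_t)n * sizeof(float));   /* like: #pragma acc enter data copyin(a[0:n]) */
#endif

    /* The data is already on the device, so the region only asserts presence. */
    #pragma acc parallel loop present(a[0:n])
    for (int i = 0; i < n; ++i)
        a[i] *= s;

#ifdef _OPENACC
    acc_copyout(a, (size_t)n * sizeof(float));  /* like: #pragma acc exit data copyout(a[0:n]) */
#endif
}
```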

Multiple Devices

Environment Variable ACC_DEVICE_NUM

API routine acc_set_device_num

- call acc_set_device_num( 1, acc_device_nvidia )

OpenMP, based on thread number

- nd = acc_get_num_devices( acc_device_nvidia )

- ign = mod(omp_get_thread_num(),nd)

- call acc_set_device_num( ign, acc_device_nvidia )

MPI, based on rank

- nd = acc_get_num_devices( acc_device_nvidia )

- call mpi_comm_rank( mpi_comm_world, irank, ierror )

- ign = mod(irank,nd)

- call acc_set_device_num( ign, acc_device_nvidia )
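The "rank (or thread number) modulo device count" rule can be written as a small C helper. This is a sketch: pick_device and select_device are hypothetical names, and the OpenACC calls are compiled only when _OPENACC is defined. When there are more ranks or threads than devices, several of them end up on the same device.

```c
#ifdef _OPENACC
#include <openacc.h>
#endif

/* Round-robin assignment: id (MPI rank or OpenMP thread number) modulo the
   number of devices. Returns -1 when there is no device to pick. */
int pick_device(int id, int ndevices)
{
    if (ndevices <= 0)
        return -1;
    return id % ndevices;
}

/* Hypothetical helper: select the device for this rank or thread. */
int select_device(int id)
{
#ifdef _OPENACC
    int dev = pick_device(id, acc_get_num_devices(acc_device_nvidia));
    if (dev >= 0)
        acc_set_device_num(dev, acc_device_nvidia);
    return dev;
#else
    (void)id;      /* built without OpenACC: everything runs on the host */
    return -1;
#endif
}
```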

Multiple devices with OpenMP

nd = acc_get_num_devices( acc_device_nvidia )

!$omp parallel private(ign)

ign = mod(omp_get_thread_num(),nd)

call acc_set_device_num( ign, acc_device_nvidia )

!$omp end parallel

...

!$acc data copy(a(:,:))

!$omp parallel do

do j = 1, n

!$acc parallel loop

do i = 1, n

a(i,j) = ...

enddo

enddo

!$acc end data

What could go wrong?

Multiple devices with OpenMP

...

!$omp parallel

!$acc data copy( a(:,:) )

!$omp do

do j = 1, n

!$acc parallel loop present(a)

do i = 1, n

a(i,j) = ...

enddo

enddo

!$acc end data

!$omp end parallel

What could go wrong?

Multiple devices with one thread

nd = acc_get_num_devices( acc_device_nvidia )

nchunk = (n+nd-1)/nd

do ign = 0, nd-1

call acc_set_device_num( ign, acc_device_nvidia )

jlow = ign*nchunk + 1

jhigh = min(n, (ign+1)*nchunk)

!$acc enter data copyin(a(:,jlow:jhigh)) async

!$acc parallel loop async

do j = jlow, jhigh

do i = 1, n

a(i,j) = ...

enddo

enddo

!$acc exit data copyout(a(:,jlow:jhigh)) async

enddo

!$acc wait

What could go wrong?
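The column split on the slide can be checked on its own. Below is a C sketch of the bound computation that keeps the slide's 1-based inclusive indices (chunk_bounds is a hypothetical name). The upper bound is clipped to n, so the last chunk may be shorter; when nd > n some devices get an empty range (jlow > jhigh), which a Fortran DO loop simply skips.

```c
/* Hypothetical helper: 1-based inclusive column range [jlow, jhigh] for
   device ign when columns 1..n are split across nd devices. */
void chunk_bounds(int n, int nd, int ign, int *jlow, int *jhigh)
{
    int nchunk = (n + nd - 1) / nd;      /* ceiling of n/nd */
    *jlow  = ign * nchunk + 1;
    *jhigh = (ign + 1) * nchunk;
    if (*jhigh > n)                      /* clip the last chunk to n */
        *jhigh = n;
}
```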

Multiple devices with MPI

No sharing between ranks, even on same GPU

Can run out of memory (no virtual memory on GPU)

Atomic Operations

OpenACC atomic construct, like OpenMP atomic construct

- some constructs will generate hardware atomic operations

!$acc atomic update

x = x + a(i)

!$acc atomic update

y = min(y,b(i))

!$acc atomic capture

ix = ix + 1

ime = ix

!$acc end atomic
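In C the same constructs look like this. The sketch below (compact_negatives is a hypothetical routine) uses atomic update for a running sum and atomic capture to hand out unique output slots, the C counterpart of the ix/ime capture. Note that `slot = count++` captures the old value, which gives 0-based slots. On a GPU the order of the collected indices is not deterministic, and for a plain sum a reduction clause is normally the faster choice; the atomic is used here only to illustrate the construct. Built without OpenACC, the pragmas are ignored and the loop runs serially.

```c
/* Hypothetical routine: sum a[0:n] and collect the indices of negative
   entries into idx. Returns how many indices were collected. */
int compact_negatives(const float *a, int n, int *idx, float *total)
{
    int count = 0;
    float sum = 0.0f;

    #pragma acc parallel loop copyin(a[0:n]) copyout(idx[0:n]) copy(count, sum)
    for (int i = 0; i < n; ++i) {
        #pragma acc atomic update
        sum += a[i];

        if (a[i] < 0.0f) {
            int slot;
            #pragma acc atomic capture
            slot = count++;          /* fetch-and-increment: unique slot per hit */
            idx[slot] = i;
        }
    }
    *total = sum;
    return count;
}
```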

Fortran Derived Types

Arrays of derived type work just like arrays

Derived types with fixed-size array members should just work

Derived type with allocatable array members

- Deep copy not implemented (or defined)

- Workaround for PGI

type mdt

integer :: n

real, dimension(:), allocatable :: xm

end type

type(mdt) :: x

...

!$acc enter data copyin(x)

!$acc enter data copyin(x%xm)

....

!$acc exit data copyout(x%xm)

!$acc exit data delete(x)

type mdt

integer :: n

real, dimension(:), allocatable :: xm

end type

type(mdt), allocatable :: x(:)

...

!$acc enter data copyin(x)

do i = 1, n

!$acc enter data copyin(x(i)%xm)

enddo

....
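For comparison, here is the same manual deep copy for a C struct with a pointer member. This sketch assumes that copying in x->xm after x attaches the device copy of the member to the device copy of the struct, which is the behavior the PGI workaround relies on (later OpenACC versions define this as pointer attachment). The type mdt and the routine scale_mdt are hypothetical C counterparts of the Fortran type.

```c
/* Hypothetical C counterpart of type mdt: a count plus a heap array. */
typedef struct {
    int n;
    float *xm;
} mdt;

/* Manual deep copy: the struct first, then the array it points to. */
void scale_mdt(mdt *x, float s)
{
    #pragma acc enter data copyin(x[0:1])
    #pragma acc enter data copyin(x->xm[0:x->n])

    #pragma acc parallel loop present(x[0:1])
    for (int i = 0; i < x->n; ++i)
        x->xm[i] *= s;

    /* Tear down in reverse order: member data back to the host, then the struct. */
    #pragma acc exit data copyout(x->xm[0:x->n])
    #pragma acc exit data delete(x[0:1])
}
```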

Managed Memory

Compile and link with -ta=tesla:managed

Allocate statements will allocate in CUDA Unified Memory

Advantages

- Most data clauses can be skipped, and in fact are ignored

- If locality works, most data stays on the GPU

- Data transfers use fast pinned data transfers

- Good for initial porting

- Derived type allocatable members automatically work

Managed Memory

Disadvantages

- All managed memory is moved to the GPU for each kernel launch

- No prefetch, no asynchronous data movement

- Only works for dynamically allocated memory

- local variables, module variables, static symbols are not managed

- Limited to memory size of the GPU

- Allocate and Deallocate are expensive

- Kepler only

- Only one device

- Your program can segfault(!) if the host code accesses managed data while the GPU is busy

Conditional GPU code

if clause on acc parallel / acc kernels

acc_on_device(acc_device_...)

subroutine host_or_device( a, ongpu )

use openacc

real, dimension(:) :: a

logical :: ongpu

!$acc parallel loop if(ongpu) default(present)

do i = 1, ubound(a,1)

if( acc_on_device( acc_device_nvidia ) )then

a(i) = devfoo( a(i) )

else

a(i) = hostfoo( a(i) )

endif

enddo

end subroutine
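A C sketch of the same pattern. Here hostfoo and devfoo are hypothetical stand-ins that just add 1 or 2 so the two paths can be told apart. The if clause decides at run time whether the region is offloaded, and acc_on_device reports which target the code is actually running on; when built without OpenACC, the helper always reports the host.

```c
#ifdef _OPENACC
#include <openacc.h>
#endif

/* Hypothetical per-target helpers. */
#pragma acc routine seq
static float hostfoo(float v) { return v + 1.0f; }

#pragma acc routine seq
static float devfoo(float v) { return v + 2.0f; }

/* Nonzero when executing on an NVIDIA device. */
#pragma acc routine seq
static int running_on_gpu(void)
{
#ifdef _OPENACC
    return acc_on_device(acc_device_nvidia);
#else
    return 0;      /* built without OpenACC: always the host */
#endif
}

void host_or_device(float *a, int n, int ongpu)
{
    /* Offload only when ongpu is true; otherwise run on the host. */
    #pragma acc parallel loop if(ongpu) copy(a[0:n])
    for (int i = 0; i < n; ++i)
        a[i] = running_on_gpu() ? devfoo(a[i]) : hostfoo(a[i]);
}
```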

Compile for GPU and Host

-ta=tesla,host

- compiles each compute region for Tesla and sequential host code

ACC_DEVICE_TYPE

- nvidia or host

call acc_set_device_type( acc_device_nvidia ) or call acc_set_device_type( acc_device_host )

Compile for Multicore

-ta=multicore

- compiles each compute region for parallel multicore host execution

- the combination -ta=tesla,multicore will work in 2016

Currently being beta tested

- only one outer parallel loop is run in parallel

- no tuning for multicore execution (yet)

- no data movement (data clauses ignored)

Useful for initial code development

Useful for multi-target code deployment

Interoperability with OpenMP

-acc -mp to enable OpenACC and OpenMP

Threads can share a GPU

Shared data on host will be shared on the GPU as well

Data regions can overlap for shared data

- data created / copied in at entry to first data region

- data copied out / deleted at exit from last data region, even if different thread

Interoperability with OpenMP 4

No existing implementation of OpenMP 4 and OpenACC (Cray?)

Data management in both models is consistent

- copy == map(tofrom), copyin == map(to), copyout == map(from), create == map(alloc)

- OpenACC defines two copies (host and device), kept coherent by the program

- OpenMP defines mapping a single copy from host to device and back

Parallelism management is very different

- OpenMP has teams, threads, SIMD lanes

- OpenACC has gangs, workers, vector lanes

- OpenMP is strictly prescriptive: a parallel loop is a loop that is told to run in parallel

- OpenACC is more descriptive: a parallel loop states that the loop really is parallel (its iterations are independent)

Same runtime should be able to handle both in a single program
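To make the clause correspondence concrete, here is one loop written both ways in C. This is only a sketch: axpy_acc and axpy_omp are hypothetical names, each directive set takes effect only when the compiler enables it, and the code falls back to serial host execution otherwise. copyin corresponds to map(to) and copy to map(tofrom).

```c
/* y = a*x + y with an OpenACC data clause. */
void axpy_acc(int n, float a, const float *x, float *y)
{
    #pragma acc parallel loop copyin(x[0:n]) copy(y[0:n])
    for (int i = 0; i < n; ++i)
        y[i] += a * x[i];
}

/* The same loop with the corresponding OpenMP 4 map clauses. */
void axpy_omp(int n, float a, const float *x, float *y)
{
    #pragma omp target teams distribute parallel for map(to: x[0:n]) map(tofrom: y[0:n])
    for (int i = 0; i < n; ++i)
        y[i] += a * x[i];
}
```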


Interoperability with CUDA

The device kernels are CUDA kernels, the data is CUDA data

Data interoperability

- Calling OpenACC C with data from CUDA C

- Calling OpenACC Fortran with data from CUDA C

- Calling OpenACC Fortran with data from CUDA Fortran

- Calling CUDA C with OpenACC data

- Calling CUDA Fortran with OpenACC data

Compute interoperability

- Calling CUDA C device routines from OpenACC

- Calling CUDA Fortran device routines from OpenACC

OpenACC data in CUDA C

#pragma acc data copyin(a[0:n]) copy(x[0:n])

{

...

#pragma acc host_data use_device(a)

{

cuda_routine( a );

}

...

#pragma acc parallel loop

for( j = 0; j < n; ++j ) a[j] = ...

}

CUDA C data in OpenACC

float *a;

cudaMalloc( &a, sizeof(float)*n );

...

openacc_routine( a );

...

void openacc_routine( float* a ){

...

#pragma acc parallel loop deviceptr(a)

for( j = 0; j < n; ++j ) a[j] = ...

}

CUDA C data in OpenACC Fortran

float *a;

cudaMalloc( &a, sizeof(float)*n );

...

openacc_routine_( a );

...

subroutine openacc_routine( a )

real a(*)

!$acc parallel loop deviceptr(a)

do j = 1, n

a(j) = ...

enddo

end subroutine

CUDA Fortran data in OpenACC

real, allocatable, device :: a(:)

allocate(a(n))

...

call openacc_routine(a)

...

subroutine openacc_routine( a )

real, device :: a(*)

!$acc parallel loop

do j = 1, n

a(j) = ...

enddo

end subroutine

OpenACC data in CUDA Fortran

real, allocatable :: a(:)

allocate(a(n))

!$acc data copyin(a)

...

call cuf_routine(a)

...

!$acc end data

...

subroutine cuf_routine( a )

real, device :: a(*)

!$cuf kernel do <<<*,64>>>

do i = 1, n

....

OpenACC data in CUDA Fortran

real, allocatable :: a(:)

allocate(a(n))

!$acc data copyin(a)

...

call cuf_routine<<<(n+63)/64,64>>>(a)

...

!$acc end data

...

attributes(global) subroutine cuf_routine( a )

real, device :: a(*)

....

CUDA device routines in OpenACC

interface

subroutine cudadev( a, i, x ) bind(c)

real a(*)

real, value :: x

integer, value :: i

!$acc routine seq

end subroutine

end interface

...

!$acc parallel loop gang vector present(a)

do i = 1, n

call cudadev( a, i, x )

enddo

CUDA device routines in OpenACC

extern "C" __device__ void cudadev( float* a, int i, float x ){

a[i-1] *= x;  // i is the 1-based index passed from the Fortran loop

}

CUDA device routines in OpenACC

module mm

contains

attributes(device) subroutine cudadev( a, i, x )

real a(*)

real, value :: x

integer, value :: i

a(i) = x*a(i)

end subroutine

end module

use mm

!$acc parallel loop gang vector present(a)

do i = 1, n

call cudadev( a, i, x )

enddo
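In both versions the essential point is that the compiler must know the callee can run on the device. In plain OpenACC C, a routine directive on the declaration plays that role. The sketch below uses scale_elem as a hypothetical function standing in for cudadev; it is defined in the same file so the example is self-contained.

```c
/* Declaration visible to the compute region: also compiled for the device. */
#pragma acc routine seq
void scale_elem(float *a, int i, float x);

void scale_all(float *a, int n, float x)
{
    #pragma acc parallel loop gang vector copy(a[0:n])
    for (int i = 0; i < n; ++i)
        scale_elem(a, i, x);       /* 0-based index on the C side */
}

#pragma acc routine seq
void scale_elem(float *a, int i, float x)
{
    a[i] *= x;
}
```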

CUDA Fortran and OpenACC

Data with device attribute can be used in OpenACC regions

Data transfers with pinned attribute will be faster

OpenACC compute regions may call CUDA library

OpenACC compute regions may call user device procedures

OpenACC data may be passed to arguments with device attribute

CUDA Libraries

-Mcudalib=cublas|cufft|curand|cusparse

CUBLAS

- use cublas or use cublasxt or use openacc_cublas

CUFFT

- use cufft

- cufftSetStream(plan,acc_get_cuda_stream(acc_async_sync))

CURAND

- use curand or use openacc_curand

THRUST

- interface blocks, acc_get_cuda_stream(acc_async_sync)

OpenACC and OpenMP 4

| OpenACC | OpenMP |
|---|---|
| Focused on accelerated computing | General purpose parallelism |
| More agile | More measured |
| Performance portability | Performance portability a challenge |
| Descriptive | Prescriptive |
| Parallel loops | Loops that run across threads |
| Extensive interoperability | Limited interoperability |
| More mature for accelerators | More mature for multi-core |

Modern HPC Node

[Diagram: two x86 CPUs linked by HT/QPI, each with a shared cache and high-capacity memory]

Modern HPC Node

[Diagram: x86 CPU with shared cache and high-capacity memory, connected over PCIe 3 to a GPU accelerator with shared cache and high-bandwidth memory]

Latency- vs Throughput-Optimized Cores

CPU, latency-optimized cores (LOC)

- Fast clock (2.5-3.5 GHz)
- More work per clock
  - deep pipelining
  - 3-5 wide superscalar instruction issue
  - 4-16 wide SIMD instructions
  - 4-24 cores
- Fewer stalls
  - Large 10-24 MB cache
  - Complex branch prediction
  - Out-of-order execution
  - 2-4 wide multithreading

Accelerator (GPU), throughput-optimized cores (TOC)

- Slow clock (0.8-1.2 GHz)
- More work per clock
  - shallow pipelining
  - 1-2 wide superscalar instruction issue
  - 16-64 wide SIMD instructions
  - 24-72 cores
- Fewer stalls
  - Small 0.25-2 MB cache
  - Little branch prediction
  - In-order execution
  - 15-32 wide multithreading

Modern HPC Node

[Diagram: x86 CPU with shared cache and high-capacity memory, connected over PCIe 3 to a Xeon Phi with shared cache and high-bandwidth memory]

Modern HPC Node

[Diagram: APU with shared cache and high-capacity memory]

Modern HPC Node

[Diagram: Knights Landing with shared cache, high-capacity memory and high-bandwidth memory]

Modern HPC Node

[Diagram: APU with shared cache, high-bandwidth memory and high-capacity memory]

Modern HPC Node

[Diagram: POWER CPU with shared cache and high-capacity memory, connected over NVLink to a Tesla accelerator with shared cache and high-bandwidth memory]

Modern HPC Node

[Diagram: ARM CPU with shared cache and high-capacity memory, connected over PCIe 3 to a Tesla accelerator with shared cache and high-bandwidth memory]

Modern HPC Node

[Diagram: ARM CPU with shared cache and high-capacity memory, connected over NVLink to a Tesla accelerator with shared cache and high-bandwidth memory]

Performance Portability

The same program runs, and runs well, across multiple targets

Performance Portability

| program | sequential (s) | GPU (s) | GPU speedup | multicore (s) | multicore speedup |
|---|---|---|---|---|---|
| clvrleaf | 2698 | 161.73 | 16.7 | 511.1 | 5.2 |
| md | 13463 | 115.43 | 116.0 | 400.03 | 33.6 |
| minighost | 1062 | 146.13 | 7.3 | 319.93 | 3.3 |
| olbm | 449 | 305.97 | 1.4 | 95.75 | 4.7 |
| ostencil | 13835 | 60.13 | 230.0 | 2276.57 | 6.0 |
| swim | 537 | 83.98 | 6.4 | 121.20 | 4.4 |

These are SPECAccel benchmark estimates on a dual-processor Intel Haswell (32 cores) with an NVIDIA K80 GPU.

OpenACC Course – Starts Oct 1st

A Free Online Course

Experienced Instructors

OpenACC Toolkit

GPU Access

4 Classes

4 Office Hours

Hands-on Labs

Register at https://developer.nvidia.com/openacc_course

Performance Portable Programming

Challenges and Opportunities

- high core count devices

- large system memories, smaller high-bandwidth memories

OpenACC is demonstrating performance portability

Data management: as important as parallelism

- data location as well as data layout

Parallelism: Expose, Express, Exploit

- performance, not parallelism

https://www.pgroup.com/userforum

https://developer.nvidia.com/openacc

https://developer.nvidia.com/openacc_course

openacc@nvidia.com

OpenACC 1, 2, 2.5, 3, ...

OpenACC 1.0: data region, compute region, update, async

OpenACC 2.0: +routine, +atomics, +enter data/exit data

OpenACC 2.5: +default(present), -present_or_, +profile interface

OpenACC 3.0 (planned): deep copy, shared memory options

Backup, backup, backup

backup


!$acc data copyin(a(:,:), v(:)) copy(x(:))

!$acc parallel

!$acc loop gang

do j = 1, n

sum = 0.0

!$acc loop vector reduction(+:sum)

do i = 1, n

sum = sum + a(i,j) * v(i)

enddo

x(j) = sum

enddo

!$acc end parallel

!$acc end data


!$acc data copyin(a(:,:), v(:)) copy(x(:))

call matvec( a, v, x, n )

!$acc end data

...

subroutine matvec( m, v, r, n )

real :: m(:,:), v(:), r(:)

!$acc parallel present(m,v,r)

!$acc loop gang

do j = 1, n

sum = 0.0

!$acc loop vector reduction(+:sum)

do i = 1, n

sum = sum + m(i,j) * v(i)

enddo

r(j) = sum

enddo

!$acc end parallel

end subroutine


!$acc data copyin(a(:,:), v(:)) copy(x(:))

call matvec( a, v, x, n )

!$acc end data

...

subroutine matvec( m, v, r, n )

real :: m(:,:), v(:), r(:)

!$acc parallel default(present)

!$acc loop gang

do j = 1, n

sum = 0.0

!$acc loop vector reduction(+:sum)

do i = 1, n

sum = sum + m(i,j) * v(i)

enddo

r(j) = sum

enddo

!$acc end parallel

end subroutine
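A C sketch of the same matvec. It assumes a row-major n x n matrix stored as a flat array, so m[j*n + i] holds row j and the inner reduction loop walks contiguous memory, as the Fortran inner loop does down a column. The caller's data region makes the arrays present, and default(present) is the OpenACC 2.5 clause used on the slide. matvec_c and run_matvec are hypothetical names.

```c
/* Hypothetical C version of matvec: r = M v for an n x n row-major M. */
void matvec_c(const float *m, const float *v, float *r, int n)
{
    #pragma acc parallel loop gang default(present)
    for (int j = 0; j < n; ++j) {
        float sum = 0.0f;              /* private to each gang */
        #pragma acc loop vector reduction(+:sum)
        for (int i = 0; i < n; ++i)
            sum += m[j * n + i] * v[i];
        r[j] = sum;
    }
}

/* Caller: one data region around the call. */
void run_matvec(const float *m, const float *v, float *r, int n)
{
    #pragma acc data copyin(m[0:n*n], v[0:n]) copy(r[0:n])
    matvec_c(m, v, r, n);
}
```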

call init( v, n )

call fill( a, n )

!$acc data copy( x )

do iter = 1, niter

call matvec( a, v, x, n )

call interp( b, x, n )

!$acc update host( x )

write(...) x

call exch( x )

!$acc update device( x )

enddo

!$acc end data

...

subroutine init( v, n )

real, allocatable :: v(:)

allocate(v(n))

v(1) = 0

do i = 2, n

v(i) = ....

enddo

!$acc enter data copyin(v)

end subroutine
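The init routine shows why enter data exists: the allocation and its device copy are created inside a routine and outlive it, which a structured data region cannot express. Below is a C sketch of that pairing with hypothetical names init_vec and free_vec. The fill values are placeholders for the elided initialization, and the matching exit data delete must run before the host memory is freed.

```c
#include <stdlib.h>

/* Allocate v[0:n], initialize it on the host, then give it a device copy
   that stays alive after this function returns. */
float *init_vec(int n)
{
    if (n <= 0)
        return NULL;
    float *v = malloc((size_t)n * sizeof *v);
    if (v == NULL)
        return NULL;
    v[0] = 0.0f;
    for (int i = 1; i < n; ++i)
        v[i] = v[i - 1] + 1.0f;        /* placeholder initialization */
    #pragma acc enter data copyin(v[0:n])
    return v;
}

/* Release the device copy first, then the host memory. */
void free_vec(float *v, int n)
{
    #pragma acc exit data delete(v[0:n])
    free(v);
}
```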