Slides on Information Theory


Informatics I101

February 25, 2003

John C. Paolillo, Instructor

Electronic Text

• ASCII: American Standard Code for Information Interchange

• EBCDIC (IBM Mainframes, not standard)

• Extended ASCII (8-bit, not standard)

– DOS Extended ASCII

– Windows Extended ASCII

– Macintosh Extended ASCII

• Unicode (originally 16-bit, now a 21-bit code space; standard-in-progress)

ASCII

01000001

means the alphabet letter "A",

which is displayed as a screen representation: A

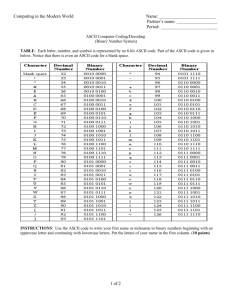

The ASCII Code

Columns: first hex digit of the code (high three bits). Rows: second hex digit (low four bits).

     0    1    2      3  4  5  6  7
0    NUL  DLE  blank  0  @  P  `  p
1    SOH  DC1  !      1  A  Q  a  q
2    STX  DC2  "      2  B  R  b  r
3    ETX  DC3  #      3  C  S  c  s
4    EOT  DC4  $      4  D  T  d  t
5    ENQ  NAK  %      5  E  U  e  u
6    ACK  SYN  &      6  F  V  f  v
7    BEL  ETB  '      7  G  W  g  w
8    BS   CAN  (      8  H  X  h  x
9    HT   EM   )      9  I  Y  i  y
A    LF   SUB  *      :  J  Z  j  z
B    VT   ESC  +      ;  K  [  k  {
C    FF   FS   ,      <  L  \  l  |
D    CR   GS   -      =  M  ]  m  }
E    SO   RS   .      >  N  ^  n  ~
F    SI   US   /      ?  O  _  o  DEL
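The layout of the code table can be checked with a few lines of Python. This is only a sketch: the function name `ascii_cell` is made up for illustration, and it assumes the column label is the high hex digit and the row label is the low hex digit of the code.

```python
def ascii_cell(row, col):
    """Return the character at the given row (0-15) and column (0-7)."""
    code = col * 16 + row  # column = high hex digit, row = low hex digit
    return chr(code)

# Print the printable part of the table (columns 2 to 7).
for row in range(16):
    cells = [ascii_cell(row, col) for col in range(2, 8)]
    print(f"{row:X}", " ".join(repr(c) for c in cells))
```

For example, row 1 of column 4 is code 0x41 (binary 01000001), the letter "A".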

An Example Text

T  h   i   s   _  i   s   _  a  n   _  e   x   a  m   p   l   e
84 104 105 115 32 105 115 32 97 110 32 101 120 97 109 112 108 101

Note that each ASCII character corresponds to a number, including spaces, carriage returns, etc. Everything must be represented somehow, otherwise the computer couldn’t do anything with it.
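The character-to-number mapping can be reproduced in Python with the built-in `ord` and `chr` functions. This is a small illustration, not part of the original slides; the variable names are arbitrary.

```python
text = "This is an example"

# ord() gives the numeric code of each character, spaces included.
codes = [ord(ch) for ch in text]
print(codes)

# chr() goes the other way, from number back to character.
decoded = "".join(chr(c) for c in codes)
print(decoded)
```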

Representation in Memory

Address   Value  Character
01101010  32     _
01101001  101    e
01101000  108    l
01100111  112    p
01100110  109    m
01100101  97     a
01100100  120    x
01100011  101    e
01100010  32     _
01100001  110    n
01100000  97     a
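A rough model of this layout: each character occupies one memory cell, and consecutive characters sit at consecutive addresses. The sketch below assumes the starting address 01100000 shown on the slide and uses a plain dictionary named `memory` as a stand-in for real memory.

```python
base = 0b01100000                 # starting address taken from the slide
data = "an example ".encode("ascii")

# Map each address to the byte stored there.
memory = {base + offset: byte for offset, byte in enumerate(data)}

# List the cells from the highest address down.
for address in sorted(memory, reverse=True):
    byte = memory[address]
    print(f"{address:08b}  {byte:3d}  {chr(byte)!r}")
```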

Features of ASCII

• 7-bit fixed-length code

– all codes have the same number of bits

• Sorting: A precedes B, B precedes C, etc.

• Caps + 32 = lower case (A + space = a)

• Word divisions, etc. must be parsed

ASCII is very widespread and almost universally supported.
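Two of these features are easy to try out. The sketch below uses a hypothetical helper `to_lower` that adds 32 to the code of each capital letter, and it shows that sorting by code places all capitals before all lower-case letters.

```python
def to_lower(text):
    """Lower-case ASCII capitals by adding 32 to their codes."""
    out = []
    for ch in text:
        if "A" <= ch <= "Z":
            out.append(chr(ord(ch) + 32))  # caps + 32 = lower case
        else:
            out.append(ch)
    return "".join(out)

print(to_lower("Hello World"))

# Sorting compares the numeric codes, so "C" (67) sorts before "a" (97).
print(sorted(["b", "A", "C", "a"]))
```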

Variable-Length Codes

• Some symbols (e.g. letters) have shorter codes than others

– E.g. Morse code:

e = dot, j = dot-dash-dash-dash

– Use frequency of symbols to assign code lengths

• Why? Space efficiency

– compression tools such as gzip and zip use variable-length codes (based on repeated strings, such as words)

Requirements

Starting and ending points of symbols must be clear

(simplistic) example: four symbols must be encoded:

0

10

110

1110

All symbols end with a zero

Any zero ends a symbol

Any one continues a symbol

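Average number of bits per symbol = (1 + 2 + 3 + 4) / 4 = 2.5 if all four symbols are equally likely; fewer if the shorter codes go to the more frequent symbols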
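The rule "any zero ends a symbol" is enough to write a decoder. The sketch below is illustrative only: the slide does not name the four symbols, so the labels a, b, c and d are assumptions, as are the function names.

```python
# The four codes from the slide; the symbol names are hypothetical.
CODES = {"a": "0", "b": "10", "c": "110", "d": "1110"}

def encode(symbols):
    return "".join(CODES[s] for s in symbols)

def decode(bits):
    lookup = {code: sym for sym, code in CODES.items()}
    out, current = [], ""
    for bit in bits:
        current += bit
        if bit == "0":              # any zero ends a symbol
            out.append(lookup[current])
            current = ""
    if current:
        raise ValueError("bit string ends in the middle of a symbol")
    return out

def average_length(probabilities):
    """Expected bits per symbol for the given symbol probabilities."""
    return sum(p * len(CODES[s]) for s, p in probabilities.items())

bits = encode("abcd")
print(bits, decode(bits))
print(average_length({"a": 0.25, "b": 0.25, "c": 0.25, "d": 0.25}))
print(average_length({"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}))
```

The average length depends on how often each symbol occurs: the more the short codes are used, the lower the average.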

Example

• 12 symbols

– digits 0-9

– decimal point and space (end of number)

[Figure: binary code tree; every branch is labeled 0 or 1, and the leaves are the symbols 0 1 2 3 4 5 6 7 8 9 _ .]

Symbol  Code
0       00
1       010
2       0110
3       01110
4       011110
5       011111
6       10
7       110
8       1110
9       11110
_       111110
.       111111
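Because no code in this example is the beginning of another, a bit string can be decoded by reading bits until they match a code. The following sketch assumes that the "_" symbol stands for a space; the names `CODE`, `encode_number` and `decode_number` are made up for illustration.

```python
CODE = {
    "0": "00", "1": "010", "2": "0110", "3": "01110",
    "4": "011110", "5": "011111", "6": "10", "7": "110",
    "8": "1110", "9": "11110", " ": "111110", ".": "111111",
}

def encode_number(text):
    return "".join(CODE[ch] for ch in text)

def decode_number(bits):
    lookup = {code: sym for sym, code in CODE.items()}
    out, current = [], ""
    for bit in bits:
        current += bit
        if current in lookup:       # a complete code has been read
            out.append(lookup[current])
            current = ""
    if current:
        raise ValueError("bit string ends in the middle of a symbol")
    return "".join(out)

bits = encode_number("3.14 ")
print(bits, len(bits))
print(repr(decode_number(bits)))
```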

Efficient Coding

Huffman coding (gzip)

1. count the number of times each symbol occurs

2. start with the two least frequent symbols

a) combine them using a tree

b) put 0 on one branch, 1 on the other

c) combine counts and treat as a single symbol

3. continue combining the two least frequent remaining items in the same way until every symbol is assigned a place in the tree

4. read the codes from the top of the tree down to each symbol
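The steps above can be sketched in Python using a priority queue from the standard `heapq` module. This is a minimal illustration, not the implementation used by gzip; the function name `huffman_codes` and the tie-breaking counter are choices made for this sketch, and it assumes every symbol is a single character.

```python
import heapq
from collections import Counter
from itertools import count

def huffman_codes(text):
    freq = Counter(text)                      # step 1: count symbols
    if not freq:
        return {}
    if len(freq) == 1:                        # a lone symbol still needs one bit
        return {sym: "0" for sym in freq}
    tiebreak = count()                        # keeps heap entries comparable
    heap = [(n, next(tiebreak), sym) for sym, n in freq.items()]
    heapq.heapify(heap)
    while len(heap) > 1:                      # steps 2 and 3
        n1, _, left = heapq.heappop(heap)     # the two least frequent items
        n2, _, right = heapq.heappop(heap)
        heapq.heappush(heap, (n1 + n2, next(tiebreak), (left, right)))
    _, _, tree = heap[0]

    codes = {}
    def walk(node, prefix):                   # step 4: read codes from the top
        if isinstance(node, tuple):
            walk(node[0], prefix + "0")
            walk(node[1], prefix + "1")
        else:
            codes[node] = prefix
    walk(tree, "")
    return codes

print(huffman_codes("aaaabbc"))
```

Frequent symbols end up near the top of the tree and so receive the shortest codes.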

Information Theory

• Mathematical theory of communication

– How many bits in an efficient variable-length encoding?

– How much information is in a chunk of data?

– How can the capacity of an information medium be measured?

• Probabilistic model of information

– “Noisy channel” model

– less frequent ≈ more surprising ≈ more informative

• Measures information using the notion of entropy

Noisy Channel

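[Figure: a source sending 1 or 0 to a destination receiving 1 or 0, with a path from each source symbol to each destination symbol]

We measure the probability of each possible path (correct reception and errors)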
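One way to picture the path probabilities is to simulate a channel and count what arrives. The sketch below makes assumptions the slide does not state: both bits are flipped with the same probability (`error_rate`), source bits are chosen at random, and all names are hypothetical.

```python
import random
from collections import Counter

def transmit(bit, error_rate, rng):
    """Send one bit; flip it with probability error_rate."""
    return bit ^ 1 if rng.random() < error_rate else bit

def path_probabilities(n, error_rate, seed=0):
    """Estimate P(received | sent) for each (sent, received) path."""
    rng = random.Random(seed)
    paths, sent_counts = Counter(), Counter()
    for _ in range(n):
        sent = rng.randint(0, 1)
        received = transmit(sent, error_rate, rng)
        paths[(sent, received)] += 1
        sent_counts[sent] += 1
    return {path: c / sent_counts[path[0]] for path, c in paths.items()}

for path, p in sorted(path_probabilities(100_000, 0.1).items()):
    print(path, round(p, 3))
```

Paths (0, 0) and (1, 1) are correct receptions; (0, 1) and (1, 0) are errors.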

Entropy

• Entropy of a symbol is calculated from its probability of occurrence

Number of bits required: $h_s = -\log_2 p_s$

Average entropy: $H(p) = -\sum_i p_i \log_2 p_i$

• Related to variance

• Measured in bits (log2)
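Both quantities are short to compute. The sketch below uses the standard `math.log2` function; the function names `surprisal` and `entropy` are chosen here for illustration.

```python
import math

def surprisal(p):
    """Bits required for a symbol that occurs with probability p."""
    return -math.log2(p)

def entropy(probs):
    """Average number of bits per symbol for a probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

print(surprisal(0.5))                     # a coin flip
print(entropy([0.25, 0.25, 0.25, 0.25]))  # four equally likely symbols
print(entropy([0.5, 0.25, 0.125, 0.125])) # four unequally likely symbols
```

The unequal distribution has lower entropy than the uniform one, which is why a variable-length code can beat a fixed-length code on it.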

Base 2 Logarithms

$2^{\log_2 x} = x$; e.g. $\log_2 2 = 1$, $\log_2 4 = 2$, $\log_2 8 = 3$, etc.

Often we round x up to the nearest power of two, so that the logarithm is a whole number (= min number of bits)
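As a quick check of the rounding rule, the minimum number of bits for a fixed-length code over a given number of symbols is the base 2 logarithm rounded up. The function name `bits_needed` is an assumption made for this sketch.

```python
import math

def bits_needed(n_symbols):
    """Minimum bits for a fixed-length code over n_symbols symbols."""
    return math.ceil(math.log2(n_symbols))

for n in (2, 4, 8, 12, 128):
    print(n, bits_needed(n))
```

Twelve symbols, for example, need 4 bits, the same as 16 symbols would.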

Unicode

• Administered by the Unicode Consortium

• Assigns a unique code to every written symbol (21 bits: 2,097,152 possible codes, of which 1,114,112 are valid code points, U+0000 to U+10FFFF)

– UTF-32: four-byte fixed-length code

– UTF-16: variable-length code, two or four bytes

– UTF-8: variable-length code, one to four bytes

• ASCII block (one byte) + rest of basic multilingual plane (2-3 bytes) + supplementary planes (4 bytes)
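The byte counts can be observed with Python's built-in string encoders. In this sketch the helper name `encoded_lengths` and the four sample characters are chosen for illustration; the big-endian codec variants are used so that no byte-order mark is added to the counts.

```python
def encoded_lengths(ch):
    """Return the byte length of one character in UTF-8, UTF-16, UTF-32."""
    return (
        len(ch.encode("utf-8")),
        len(ch.encode("utf-16-be")),
        len(ch.encode("utf-32-be")),
    )

samples = [
    "A",           # U+0041, ASCII block
    "\u00e9",      # U+00E9, e with acute accent
    "\u20ac",      # U+20AC, euro sign
    "\U0001F600",  # U+1F600, supplementary plane
]
for ch in samples:
    print(f"U+{ord(ch):04X}", encoded_lengths(ch))
```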