Document

advertisement

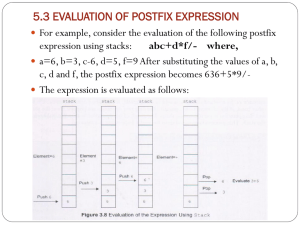

1

Recursion

Algorithm Analysis

Standard Algorithms

Chapter 7

2

Recursion

• Consider things that reference themselves

– Cat in the hat in the hat in the hat …

– A picture of a picture

– Having a dream in your dream!!

• Recursion has its base

in mathematical induction

• Recursion always has

– an anchor (or base or trivial) case

– an inductive case

3

Recursion

• A recursive function will call or reference itself.

• Consider

int R(int x)
{
    return 1 + R(x);
}

• What is wrong with this picture?

– The problem is that this function has no anchor.

– Nothing will stop repeated recursion

– Like an endless loop, but will eventually cause your program to run out of memory

4

Recursion

A proper recursive function will have

• An anchor or base case

– the function’s value is defined for one or more

values of the parameters

• An inductive or recursive step

– the function’s value (or action) for the current parameter values is defined in terms of previously defined function values (or actions) and/or parameter values.
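As a minimal sketch of these two parts, the runaway function R from the previous slide can be repaired by adding an anchor and shrinking the parameter on every call. The name SafeR and the choice of x <= 0 as the anchor are assumptions made for illustration; they do not come from the slides.

```cpp
// Hypothetical repair of R: an anchor plus an inductive step that
// moves the parameter toward the anchor on every call.
int SafeR(int x)
{
    if (x <= 0)               // anchor: value is defined directly
        return 0;
    return 1 + SafeR(x - 1);  // inductive step: uses a smaller argument
}
```

For a non-negative argument, SafeR(x) makes x + 1 calls and then stops, because each call is one step closer to the anchor.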

5

Recursive Example

int Factorial(int n)
{
    if (n == 0)
        return 1;
    else
        return n * Factorial(n - 1);
}

• Which is the anchor?

• Which is the inductive or recursive part?

• How does the anchor keep it from going forever?

6

A Bad Use of Recursion

• Fibonacci numbers

1, 1, 2, 3, 5, 8, 13, 21, 34

f_1 = 1, f_2 = 1, …, f_n = f_{n-2} + f_{n-1}

– A recursive function

double Fib(unsigned n)
{
    if (n <= 2)
        return 1;
    else
        return Fib(n - 1) + Fib(n - 2);
}

• Why is this inefficient?

– The same Fibonacci values are recomputed many times

– Note the recursion tree on pg 327
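One way to see the cost is to count the calls. The sketch below repeats Fib with a call counter and adds a loop-based version for comparison. The counter fibCalls and the function FibLoop are hypothetical additions for illustration, not part of the slides.

```cpp
#include <cstdint>

// Hypothetical instrumentation: total number of calls made to Fib.
static std::uint64_t fibCalls = 0;

// Recursive version from the slide, with the counter added.
double Fib(unsigned n)
{
    ++fibCalls;
    if (n <= 2)
        return 1;
    else
        return Fib(n - 1) + Fib(n - 2);
}

// Loop-based version: a single pass, one addition per step past f_2.
double FibLoop(unsigned n)
{
    double prev = 1, curr = 1;          // f_1 and f_2
    for (unsigned i = 3; i <= n; ++i) {
        double next = prev + curr;
        prev = curr;
        curr = next;
    }
    return curr;
}
```

Computing Fib(10) this way takes 109 calls to produce the value 55, while FibLoop(10) needs 8 additions. The call count grows roughly as fast as the Fibonacci numbers themselves.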

7

Uses of Recursion

• Easily understood recursive functions are not

always the most efficient algorithms

• "Tail recursive" functions

– When the last statement in the recursive function is a

recursive invocation.

– These are much more efficiently written with a loop

• Elegant recursive algorithms

– Binary search (see pg 328)

– Palindrome checker (pg 330)

– Towers of Hanoi solution (pg 336)

– Parsing expressions (pg 338)
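To illustrate the point about tail recursion, here is a small sketch using Euclid's greatest-common-divisor algorithm. This example is swapped in for illustration and does not appear in the slides; the names GcdRecursive and GcdLoop are assumptions.

```cpp
// Tail-recursive form: the recursive call is the last action performed.
unsigned GcdRecursive(unsigned a, unsigned b)
{
    if (b == 0)                      // anchor
        return a;
    return GcdRecursive(b, a % b);   // tail call
}

// Same algorithm with the tail call replaced by a loop:
// the parameters are simply updated in place.
unsigned GcdLoop(unsigned a, unsigned b)
{
    while (b != 0) {
        unsigned r = a % b;
        a = b;
        b = r;
    }
    return a;
}
```

Both versions do the same arithmetic, but the loop needs no additional stack frame per step.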

8

Comments on Recursion

• Many iterative tasks can be written

recursively

– but end up inefficient

However

• There are many problems with good

recursive solutions

• And their iterative solutions are

– not obvious

– difficult to develop

9

Algorithm Efficiency

• How do we measure efficiency?

– Space utilization – amount of memory required

– Time required to accomplish the task

• Time efficiency depends on:

– size of input

– speed of machine

– quality of source code

– quality of compiler

(the last three vary from one platform to another)

10

Algorithm Efficiency

• We can count the number of times

instructions are executed

– This gives us a measure of efficiency of an

algorithm

• So we measure computing time as:

T(n)= computing time of an algorithm for input of size n

= number of times the instructions are executed

11

Example: Calculating the Mean

Task                               # times executed
1. Initialize the sum to 0         1
2. Initialize index i to 0         1
3. While i < n do following        n + 1
4.    a) Add x[i] to sum           n
5.    b) Increment i by 1          n
6. Return mean = sum/n             1
Total                              3n + 4
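The count in the table can be checked with a short sketch. The function below follows the six steps and tallies each execution in a counter. The name Mean, its signature, and the count parameter are assumptions for illustration, and the sketch assumes n > 0.

```cpp
#include <cstddef>

// Hypothetical instrumented version of the mean algorithm.
// 'count' receives the number of times the tabulated steps execute.
double Mean(const double x[], std::size_t n, std::size_t &count)
{
    count = 0;
    double sum = 0;        ++count;   // step 1: executed once
    std::size_t i = 0;     ++count;   // step 2: executed once
    while (++count, i < n) {          // step 3: tested n + 1 times
        sum += x[i];       ++count;   // step 4: executed n times
        ++i;               ++count;   // step 5: executed n times
    }
    ++count;                          // step 6: executed once
    return sum / n;
}
```

For an array of 4 values the counter ends at 3(4) + 4 = 16.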

12

Computing Time Order of

Magnitude

• As the number of inputs increases, T(n) = 3n + 4 grows at a rate proportional to n

• Thus T(n) has the "order of magnitude" n

• The computing time of an algorithm on input of size n, T(n), is said to have order of magnitude f(n), written T(n) is O(f(n)), if there is some constant C such that

T(n) ≤ C·f(n) for all sufficiently large values of n
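As a worked instance of this definition for the mean algorithm, one possible choice of constant is C = 5 (other choices work as well):

```latex
\[
T(n) = 3n + 4 < 3n + 2n = 5n \quad \text{for all } n \ge 3,
\]
\[
\text{so } T(n) \text{ is } O(n) \text{ with } C = 5 \text{ and } f(n) = n.
\]
```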

13

Big Oh Notation

Another way of saying this:

• The complexity of the algorithm is

O(f(n)).

• Example: For the Mean-Calculation Algorithm: T(n) is O(n)

• Note that constants and multiplicative factors are ignored.

14

Big Oh Notation

• f(n) is usually simple:

n, n^2, n^3, ...

2^n

1, log2 n

n log2 n

log2 log2 n

15

Big-O Notation

• Cost function

– A numeric function that gives performance of

an algorithm in terms of one or more variables

– Typically the variable(s) capture number of

data items

• Actual cost functions are hard to develop

• Generally we use approximating functions

16

Function Dominance

• Asymptotic dominance

– g dominates f if there is a positive constant c such that

c·g(x) ≥ f(x)

for sufficiently large values of x

• Example: suppose the actual cost function is

T(n) = 1.01 n^2

• Both of these will dominate T(n)

T2(n) = 1.5 n^2

T3(n) = n^3

17

Estimating Functions

Characteristics for good estimating functions

• It asymptotically dominates the actual time

function

• It is simple to express and understand

• It is as close an estimate as possible

T_actual(n) = n^2 + 5n + 100

T_estimate(n) = n^2

Because any constant c > 1 will make c·n^2 larger than T_actual(n) for sufficiently large n
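A worked check, reading the actual cost function as n^2 + 5n + 100 and taking c = 1.5 as one sample constant:

```latex
\[
1.5\,n^2 - \left(n^2 + 5n + 100\right)
  = 0.5\,n^2 - 5n - 100
  = 0.5\,(n - 20)(n + 10) \ge 0
\quad \text{for all } n \ge 20 .
\]
```

So with c = 1.5 the estimate dominates the actual cost function from n = 20 onward. A smaller c > 1 also works, but only from a larger n.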

18

Estimating Functions

• Note how c·n^2 dominates

[Chart "Function Dominance": Time (0 to 80000) versus Number of data items (0 to 300); curves: Actual, n^2, 1.5n^2]

Thus we use n^2 as an estimate of the time required

19

Order of a Function

• To express time estimates concisely we

use the concept “order of a function”

• Definition:

Given two nonnegative functions f and g,

the order of f is g, iff g asymptotically

dominates f

• Stated

– “f is of order g”

– “f = O(g)” (big-O notation; O stands for “Order”)

20

Order of a Function

• Note the possible confusion

– The notation does NOT say “the order of g is f”, nor does it say “f equals the order of g”

– It does say “f is of order g”: f = O(g)

21

Big-O Arithmetic

• Given f and g functions, k a constant

1. O(k·f) = O(f)

2. O(f·g) = O(f)·O(g) and O(f/g) = O(f)/O(g)

3. O(f) ≥ O(g) ⇔ f dominates g

4. O(f + g) = Max[O(f), O(g)]
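As a worked example, the rules can be applied to the mean algorithm's count T(n) = 3n + 4, reading rule 4 as the rule for sums:

```latex
\begin{align*}
O(3n + 4) &= \mathrm{Max}[\,O(3n),\; O(4)\,] && \text{rule 4} \\
          &= \mathrm{Max}[\,O(n),\; O(1)\,]  && \text{rule 1} \\
          &= O(n)                            && \text{rule 3, since } n \text{ dominates } 1
\end{align*}
```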

22

Example: Calculating the Mean

Task                               # times executed
1. Initialize the sum to 0         1
2. Initialize index i to 0         1
3. While i < n do following        n + 1
4.    a) Add x[i] to sum           n
5.    b) Increment i by 1          n
6. Return mean = sum/n             1
Total                              3n + 4

Based on Big-O arithmetic, this algorithm is O(n)

23

Worst-Case Analysis

• The arrangement of the input items may affect

the computing time.

• How then to measure performance?

– best case: not very informative

– average: too difficult to calculate

– worst case: usual measure

• Consider Linear search of the list

a[0], . . . , a[n – 1].

24

Worst-Case Analysis

Algorithm: Linear search of a[0] … a[n-1]

1. found = false.
2. loc = 0.
3. While (loc < n && !found)
4.     If item = a[loc] found = true    // item found
5.     Else Increment loc by 1          // keep searching

• Worst case: Item not in the list: TL(n) is O(n)

• Average case (assume equal distribution of values) is O(n)
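A minimal C++ sketch of the linear search steps, with a counter added to make the worst case visible. The function name, the int element type, the -1 return value for "not found", and the comparisons parameter are assumptions for illustration.

```cpp
// Returns the index of item in a[0..n-1], or -1 if it is absent.
// 'comparisons' is hypothetical instrumentation: the number of
// elements examined during the search.
int LinearSearch(const int a[], int n, int item, int &comparisons)
{
    comparisons = 0;
    bool found = false;
    int loc = 0;
    while (loc < n && !found) {
        ++comparisons;
        if (item == a[loc])
            found = true;   // item found
        else
            ++loc;          // keep searching
    }
    return found ? loc : -1;
}
```

When the item is not in the list, every one of the n elements is examined, which is the O(n) worst case.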

25

Binary Search

Binary search of a[0] … a[n-1] (list must be sorted)

1. found = false.
2. first = 0.
3. last = n - 1.
4. While (first <= last && !found)
5.     Calculate loc = (first + last) / 2.
6.     If item < a[loc] then
7.         last = loc - 1.     // search first half
8.     Else if item > a[loc] then
9.         first = loc + 1.    // search last half
10.    Else found = true.      // item found

• Each pass cuts the list in half

• Worst case: item not in list, TB(n) = O(log2 n)
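A minimal C++ sketch of these steps for a sorted int array. The function name, the -1 return value, and the passes counter are assumptions for illustration. The loop runs while first <= last so that a range of one element is still examined.

```cpp
// Returns the index of item in sorted a[0..n-1], or -1 if it is absent.
// 'passes' is hypothetical instrumentation: the number of loop passes.
int BinarySearch(const int a[], int n, int item, int &passes)
{
    passes = 0;
    int first = 0;
    int last = n - 1;
    while (first <= last) {
        ++passes;
        int loc = first + (last - first) / 2;  // midpoint, avoids overflow
        if (item < a[loc])
            last = loc - 1;      // search first half
        else if (item > a[loc])
            first = loc + 1;     // search last half
        else
            return loc;          // item found
    }
    return -1;
}
```

For a list of 8 items no search takes more than 4 passes, in line with the log2 n growth stated above.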

26

Common Computing Time

Functions

log2 log2 n  log2 n  n        n log2 n  n^2      n^3       2^n
---          0       1        0         1        1         2
0.00         1       2        2         4        8         4
1.00         2       4        8         16       64        16
1.58         3       8        24        64       512       256
2.00         4       16       64        256      4096      65536
2.32         5       32       160       1024     32768     4294967296
2.58         6       64       384       4096     262144    1.84467E+19
3.00         8       256      2048      65536    16777216  1.15792E+77
3.32         10      1024     10240     1048576  1.07E+09  1.8E+308
4.32         20      1048576  20971520  1.1E+12  1.15E+18  6.7E+315652

The log2 n column is the growth rate for our binary search

27

Computing in Real Time

• Suppose each instruction can be done in 1

microsecond

• For n = 256 inputs how long for various f(n)

Function       Time
log2 log2 n    3 microseconds
log2 n         8 microseconds
n              .25 milliseconds
n log2 n       2 milliseconds
n^2            65 milliseconds
n^3            17 seconds
2^n            3.7E+61 centuries!!

28

Conclusion

• Algorithms with exponential complexity

– practical only for situations where number of

inputs is small

• Bubble sort has O(n^2)

– OK for n < 100

– Totally impractical for large n
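The quadratic growth can be seen by counting comparisons in a simple bubble sort. The slides do not show bubble sort code, so the version below is only a sketch of one basic variant; the function name and the returned counter are assumptions.

```cpp
#include <cstddef>
#include <utility>

// Sorts a[0..n-1] in ascending order.
// Returns the number of element comparisons made (hypothetical
// instrumentation, not part of the slides).
std::size_t BubbleSort(int a[], std::size_t n)
{
    std::size_t comparisons = 0;
    for (std::size_t pass = 1; pass < n; ++pass) {
        // After each pass the largest remaining item is in place,
        // so the scanned range shrinks by one.
        for (std::size_t i = 0; i + pass < n; ++i) {
            ++comparisons;
            if (a[i] > a[i + 1])
                std::swap(a[i], a[i + 1]);
        }
    }
    return comparisons;
}
```

This variant always makes n(n - 1)/2 comparisons: 10 for n = 5, but about 500 billion for n = 1,000,000.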

29

Computing Times Of Recursive Functions

// Towers of Hanoi
#include <iostream>
using namespace std;

void Move(int n, char source, char destination, char spare)
{
    if (n <= 1)                 // anchor (base) case
        cout << "Move the top disk from " << source
             << " to " << destination << endl;
    else
    {                           // inductive case
        Move(n - 1, source, spare, destination);
        Move(1, source, destination, spare);
        Move(n - 1, spare, destination, source);
    }
}

T(n) = O(2^n)
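The exponential bound can be checked by counting moves rather than printing them. The sketch below mirrors the recursive structure of Move; the name CountMoves is an assumption for illustration.

```cpp
#include <cstdint>

// Counts the disk moves made for n disks, following the same
// anchor and inductive case as Move.
std::uint64_t CountMoves(int n)
{
    if (n <= 1)
        return 1;                      // anchor: a single move
    return CountMoves(n - 1)           // move n-1 disks to the spare peg
         + 1                           // move the largest disk
         + CountMoves(n - 1);          // move n-1 disks onto it
}
```

The count satisfies T(n) = 2T(n - 1) + 1 with T(1) = 1, which gives 2^n - 1 moves: 7 for n = 3 and 1023 for n = 10.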