An Introduction To Matrix Decomposition

advertisement

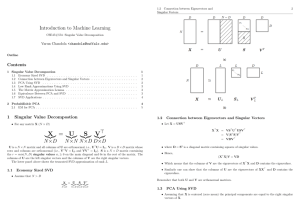

An Introduction To Matrix Decomposition Lei Zhang/Lead Researcher Microsoft Research Asia 2012-04-17 Outline • Matrix Decomposition – PCA, SVD, NMF – LDA, ICA, Sparse Coding, etc. What Is Matrix Decomposition? • We wish to decompose the matrix A by writing it as a product of two or more matrices: An×m = Bn×kCk×m • Suppose A, B, C are column matrices – An×m = (a1, a2, …, am), each ai is a n-dim data sample – Bn×k = (b1, b2, …, bk), each bj is a n-dim basis, and space B consists of k bases. – Ck×m = (c1, c2, …, cm), each ci is the k-dim coordinates of ai projected to space B Why We Need Matrix Decomposition? • Given one data sample: a1 = Bn×kc1 (a11, a12, …, a1n)T = (b1, b2, …, bk) (c11, c12, …, c1k)T • Another data sample: a2 = Bn×kc2 • More data sample: am = Bn×kcm • Together (m data samples): (a1, a2, …, am) = Bn×k (c1, c2, …, cm) An×m = Bn×kCk×m Why We Need Matrix Decomposition? (a1, a2, …, am) = Bn×k (c1, c2, …, cm) An×m = Bn×kCk×m • We wish to find a set of new basis B to represent data samples A, and A will become C in the new space. • In general, B captures the common features in A, while C carries specific characteristics of the original samples. • • • • In PCA: B is eigenvectors In SVD: B is right (column) eigenvectors In LDA: B is discriminant directions In NMF: B is local features PRINCIPLE COMPONENT ANALYSIS Definition – Eigenvalue & Eigenvector Given a m x m matrix C, for any λ and w, if Then λ is called eigenvalue, and w is called eigenvector. Definition – Principle Component Analysis – Principle Component Analysis (PCA) – Karhunen-Loeve transformation (KL transformation) • Let A be a n × m data matrix in which the rows represent data samples • Each row is a data vector, each column represents a variable • A is centered: the estimated mean is subtracted from each column, so each column has zero mean. • Covariance matrix C (m x m): Principle Component Analysis • C can be decomposed as follows: C=UΛUT • Λ is a diagonal matrix diag(λ1 λ2,…,λn), each λi is an eigenvalue • U is an orthogonal matrix, each column is an eigenvector UTU=I U-1=UT Maximizing Variance • The objective of the rotation transformation is to find the maximal variance • Projection of data along w is Aw. • Variance: σ2w= (Aw)T(Aw) = wTATAw = wTCw where C = ATA is the covariance matrix of the data (A is centered!) • Task: maximize variance subject to constraint wTw=1. Optimization Problem • Maximize λ is the Lagrange multiplier • Differentiating with respect to w yields • Eigenvalue equation: Cw = λw, where C = ATA. • Once the first principal component is found, we continue in the same fashion to look for the next one, which is orthogonal to (all) the principal component(s) already found. Property: Data Decomposition • PCA can be treated as data decomposition a=UUTa =(u1,u2,…,un) (u1,u2,…,un)T a =(u1,u2,…,un) (<u1,a>,<u2,a>…,<un,a>)T =(u1,u2,…,un) (b1, b2, …, bn)T = Σ bi·ui Face Recognition – Eigenface • Turk, M.A.; Pentland, A.P. Face recognition using eigenfaces, CVPR 1991 (Citation: 2654) • The eigenface approach – – – – images are points in a vector space use PCA to reduce dimensionality face space compare projections onto face space to recognize faces PageRank – Power Iteration • Column j has nonzero elements in positions corresponding to outlinks of j (Nj in total) • Row i has nonzero element in positions corresponding to inlinks Ii. Column-Stochastic & Irreducible • Column-Stochastic • where • Irreducible Iterative PageRank Calculation • For k=1,2,… • Equivalently (λ=1, A is a Markov chain transition matrix) • Why can we use power iteration to find the first eigenvector? Convergence of the power iteration • Expand the initial approximation r0 in terms of the eigenvectors SINGULAR VALUE DECOMPOSITION SVD - Definition • Any m x n matrix A, with m ≥ n, can be factorized • Singular Values And Singular Vectors • The diagonal elements σj of are the singular values of the matrix A. • The columns of U and V are the left singular vectors and right singular vectors respectively. • Equivalent form of SVD: Matrix approximation • Theorem: Let Uk = (u1 u2 … uk), Vk = (v1 v2 … vk) and Σk = diag(σ1, σ2, …, σk), and define • Then • It means, that the best approximation of rank k for the matrix A is SVD and PCA • We can write: • Remember that in PCA, we treat A as a row matrix • V is just eigenvectors for A – Each column in V is an eigenvector of row matrix A – we use V to approximate a row in A • Equivalently, we can write: • U is just eigenvectors for AT – Each column in U is an eigenvector of column matrix A – We use U to approximate a column in A Example - LSI • Build a term-by-document matrix A • Compute the SVD of A: • Approximate A by A = UΣVT – Uk: Orthogonal basis, that we use to approximate all the documents – Dk: Column j hold the coordinates of document j in the new basis – Dk is the projection of A onto the subspace spanned by Uk. SVD and PCA • For symmetric A, SVD is closely related to PCA • PCA: A = UΛUT – U and Λ are eigenvectors and eigenvalues. • SVD: A = UΛVT – U is left(column) eigenvectors – V is right(row) eigenvectors – Λ is the same eigenvalues • For symmetric A, column eigenvectors equal to row eigenvectors • Note the difference of A in PCA and SVD – SVD: A is directly the data, e.g. term-by-document matrix – PCA: A is covariance matrix, A=XTX, each row in X is a sample Latent Semantic Indexing (LSI) 1. Document file preparation/ preprocessing: – Indexing: collecting terms – Use stop list: eliminate ”meaningless” words – Stemming 2. Construction term-by-document matrix, sparse matrix storage. 3. Query matching: distance measures. 4. Data compression by low rank approximation: SVD 5. Ranking and relevance feedback. Latent Semantic Indexing • Assumption: there is some underlying latent semantic structure in the data. • E.g. car and automobile occur in similar documents, as do cows and sheep. • This structure can be enhanced by projecting the data (the term-by-document matrix and the queries) onto a lower dimensional space using SVD. Similarity Measures • Term to term AAT = UΣ2UT = (UΣ)(UΣ)T UΣ are the coordinates of A (rows) projected to space V • Document to document ATA = VΣ2VT = (VΣ)(VΣ)T VΣ are the coordinates of A (columns) projected to space U Similarity Measures • Term to document A = UΣVT = (UΣ½)(VΣ½)T UΣ½ are the coordinates of A (rows) projected to space V VΣ½ are the coordinates of A (columns) projected to space U HITS (Hyperlink Induced Topic Search) • Idea: Web includes two flavors of prominent pages: – authorities contain high-quality information, – hubs are comprehensive lists of links to authorities • A page is a good authority, if many hubs point to it. • A page is a good hub if it points to many authorities. • Good authorities are pointed to by good hubs and good hubs point to good authorities. Hubs Authorities Power Iteration • Each page i has both a hub score hi and an authority score ai. • HITS successively refines these scores by computing • Define the adjacency matrix L of the directed web graph: • Now HITS and SVD • L: rows are outlinks, columns are inlinks. • a will be the dominant eigenvector of the authority matrix LTL • h will be the dominant eigenvector of the hub matrix LLT • They are in fact the first left and right singular vectors of L!! • We are in fact running SVD on the adjacency matrix. HITS vs PageRank • PageRank may be computed once, HITS is computed per query. • HITS takes query into account, PageRank doesn’t. • PageRank has no concept of hubs • HITS is sensitive to local topology: insertion or deletion of a small number of nodes may change the scores a lot. • PageRank more stable, because of its random jump step. NMF – NON-NEGATIVE MATRIX FACTORIZATION Definition • Given a nonnegative matrix Vn×m, find non-negative matrix factors Wn×k and Hk×m such that Vn×m≈Wn×kHk×m • V: column matrix, each column is a data sample (n-dimension) • Wi: k-basis represents one base • H: coordinates of V projected to W vj ≈ Wn×khj Motivation • Non-negativity is natural in many applications... • Probability is also non-negative • Additive model to capture local structure Multiplicative Update Algorithm • Cost function Euclidean distance • Multiplicative Update Multiplicative Update Algorithm • Cost function Divergence – Reduce to Kullback-Leibler divergence when – A and B can be regarded as normalized probability distributions. • Multiplicative update • PLSA is NMF with KL divergence NMF vs PCA • n = 2429 faces, m = 19x19 pixels • Positive values are illustrated with black pixels and negative values with red pixels • NMF Parts-based representation • PCA Holistic representations Reference • D. D. Lee and H. S. Seung. Algorithms for non-negative matrix factorization. (pdf) NIPS, 2001. • D. D. Lee and H. S. Seung. Learning the parts of objects by non-negative matrix factorization. (pdf) Nature 401, 788-791 (1999). Major Reference • Saara Hyvönen, Linear Algebra Methods for Data Mining, Spring 2007, University of Helsinki (Highly recommend)