USC Viterbi School of Engineering

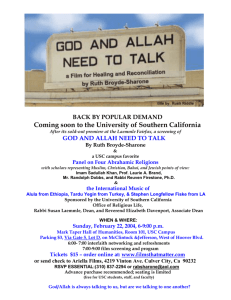

Resource Management
Ewa Deelman

Outline
• General resource management framework
• Managing a set of resources
  – Locally
  – Across the network
• Managing distributed resources

Types of resources
[Figure labels: local system, cluster, loose set of resources, headnode, Myrinet, InfiniBand, Ethernet, LAN, O(100GB-1TB)]

Resource managers/schedulers
[Diagram labels: Local Resource, Remote Resource, network, Local Scheduler, “Super” Scheduler, Remote Connectivity, Remote Submission, Workload Management, Workflow Management]

Basic Resource Management Functionality
• Accept requests
• Start jobs on the resource
• Log information about jobs
• Reply to queries about request status
• Prioritize among requests
• Provide fault tolerance
• Provide resource reservation
• Manage dependent jobs

Schedulers/Resource Managers
• Local system
  – OS, scheduling across multiple cores
• Clusters
  – Basic Scheduler
    • PBS, LSF, TORQUE, Condor
  – “Super” Scheduler
    • Maui
• Loose set of resources
  – Condor

Schedulers/Resource Managers
• Remote Connectivity
  – Globus GRAM
• Remote Submission to possibly many sites
  – Condor-G
• Workload management
  – Condor
• Workflow management
  – DAGMan
  – Pegasus

Resource managers/schedulers
[Diagram repeated, with added labels: headnode, PBS, O(100GB-1TB), cluster]

Cluster Scheduling: PBS
  – Aggregates all the computing resources (cluster nodes) into a single entity
  – Schedules and distributes applications
    • On a single node, across multiple nodes
  – Supports various types of authentication
    • User name based
    • X.509 certificates
  – Supports various types of authorization
    • User, group, system
  – Provides job monitoring
    • Collects real-time data on the state of the system and job status
    • Logs real-time data and other information on queued jobs, configuration information, etc.

Cluster Scheduling: PBS
• Performs data stage-in and stage-out onto the nodes
• Starts the computation on a node
• Uses backfilling for job scheduling
• Supports simple dependencies
  – Run Y after X
  – If X succeeds run Y, otherwise run Z
  – Run Y after X regardless of X's success
  – Run Y after a specified time
  – Run X anytime after a specified time

Cluster Scheduling: PBS
• Advanced features
  – Resource reservation
    • Specified time (start_t) and duration (delta)
    • Checks whether the reservation conflicts with running jobs and other confirmed reservations
    • If OK, sets up a queue for the reservation with a user-level ACL
    • Queue started at start_t
    • Jobs killed past the reservation time
  – Cycle harvesting
    • Can include idle resources into the mix
  – Peer scheduling
    • To other PBS systems
    • Pull-based

Cluster Scheduling: PBS
• Allocation properties
  – Administrator can revoke any allocation (running or queued)
  – Jobs can be preempted
    • Suspended
    • Checkpointed
    • Requeued
  – Jobs can be restarted X times

Single Queue Backfilling
• A job is allowed to jump in the queue ahead of jobs that are delayed due to lack of resources
• Non-preemptive
• Conservative backfilling
  – A job is allowed to jump ahead provided it does not delay any previous job in the queue
• Aggressive backfilling
  – A job is allowed to jump ahead if the first job is not affected
  – Performs better than conservative backfilling

Aggressive backfilling
• Job (arrival time, number of procs, expected runtime)
• Any job that executes longer than its expected runtime is killed
• Define the pivot: the first job in the queue
• If there are enough resources for the pivot, execute the pivot and define a new pivot
• Otherwise sort all currently executing jobs in order of their expected completion time
  – Determine the pivot time: when sufficient resources for the pivot are available

Aggressive backfilling
• At pivot time, any idle processors not required for the pivot job are defined as extra processors
• The scheduler searches for the first queued job that
  – Requires no more than the currently idle processors and will finish by the pivot time, or
  – Requires no more than the minimum of the currently idle processors and the extra processors
• Once a job becomes a pivot, it cannot be delayed
• It is possible that a job will end sooner and the pivot will start before the pivot time

Aggressive backfilling example
[Chart: processors vs. time; marks 10 and 8; Job A (10 procs, 40 mins)]
Queue: Job B (12 procs, 1 hour); Job C (20 procs, 2 hours); Job D (2 procs, 50 mins); Job E (6 procs, 1 hour); Job F (4 procs, 2 hours)
Running: Job A (10 procs, 40 mins)

Aggressive backfilling example
[Chart: processors vs. time; marks 10 and 8; Job A (10 procs, 40 mins); Job D; pivot: Job B]
Queue: Job B (12 procs, 1 hour); Job C (20 procs, 2 hours); Job E (6 procs, 1 hour); Job F (4 procs, 2 hours)
Running: Job A (10 procs, 40 mins); Job D (2 procs, 50 mins)

Aggressive backfilling example
[Chart: processors vs. time; marks 10 and 8; Job A (10 procs, 40 mins); Job D; Job F; pivot: Job B]
Queue: Job B (12 procs, 1 hour); Job C (20 procs, 2 hours); Job E (6 procs, 1 hour)
Running: Job A (10 procs, 40 mins); Job D (2 procs, 50 mins); Job F (4 procs, 2 hours)

Aggressive backfilling example
[Chart: processors vs. time; marks 10 and 8; Job A (10 procs, 40 mins); Job B; Job D; Job F]
Queue: Job C (20 procs, 2 hours); Job E (6 procs, 1 hour)
Running: Job D (2 procs, 50 mins); Job F (4 procs, 2 hours); Job B (12 procs, 1 hour)

Aggressive backfilling example
[Chart: processors vs. time; marks 10 and 8; Job A (10 procs, 40 mins); Job B; Job D; Job F; pivot: Job C (20 procs, 2 hours)]
Queue: Job C (20 procs, 2 hours); Job E (6 procs, 1 hour)
Running: Job D (2 procs, 50 mins); Job F (4 procs, 2 hours); Job B (12 procs, 1 hour)

Multiple-Queue Backfill
From “Self-adapting Backfilling Scheduling for Parallel Systems”, B.G. Lawson et al., Proceedings of the 2002 International Conference on Parallel Processing (ICPP'02), 2002

Resource managers/schedulers
[Diagram repeated, with added label: Maui]

“Super”/External Scheduler: Maui
• External scheduler
  – Does not mandate a specific resource manager
  – Fully controls the workload submitted through the local management system
  – Enhances resource manager capabilities
  – Supports advance reservations
    • <start-time, duration, acl>
• Irrevocable allocations
  – For time-critical tasks such as weather modeling
  – The reservation is guaranteed to start at a given time and last for a given duration regardless of future workload
• Revocable allocations
  – The reservation would be released if it precludes a higher-priority request from being granted

Maui
• Retry “X” times mechanism for finding resources and starting a job
  – If the job does not start, it can be put on hold
• Can be configured preemptive/nonpreemptive
• Exclusive allocations
  – No sharing of resources
• Malleable allocations
  – Changes can be made in time and space
    • Increases if possible
    • Decreases always

Maui
• Resource Querying
  – Request (account mapping, minimum duration, processors)
  – Maui returns a list of tuples
    • <feasible start_time, total available resources, duration, resource cost, quality of the information>
  – Can also return a single value which incorporates
    • Anticipated, non-submitted jobs
    • Workload profiles
• Provides event notifications
  – Solitary and threshold events
  – Job/reservation: creation, start, preemption, completion, various failures
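Looking back at the aggressive backfilling slides, the pivot and extra-processor rules can be sketched in a few lines of Python. This is a minimal illustration rather than the code of PBS or any other scheduler: the names `Job` and `jobs_to_start` are hypothetical, runtimes are in minutes, and the machine size of 18 processors is an assumption inferred from the chart marks 10 and 8 in the example (the slides do not state the total).

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    procs: int    # processors requested
    runtime: int  # expected runtime in minutes


def jobs_to_start(total_procs, running, queue, now=0):
    """Return names of queued jobs that may start at time `now`.

    running: list of (Job, start_time) pairs.
    queue:   list of Job in arrival order; queue[0] is the pivot.
    """
    idle = total_procs - sum(job.procs for job, _ in running)
    running = list(running)
    queue = list(queue)
    started = []

    # While the pivot fits, start it and define a new pivot.
    while queue and queue[0].procs <= idle:
        pivot = queue.pop(0)
        idle -= pivot.procs
        running.append((pivot, now))
        started.append(pivot.name)
    if not queue:
        return started

    pivot = queue[0]
    if pivot.procs > total_procs:
        raise ValueError(f"{pivot.name} can never fit on this machine")

    # Walk running jobs in order of expected completion to find the pivot time.
    available = idle
    pivot_time = now
    for job, start in sorted(running, key=lambda r: r[1] + r[0].runtime):
        available += job.procs
        pivot_time = start + job.runtime
        if available >= pivot.procs:
            break
    extra = available - pivot.procs  # idle at pivot time, not needed by the pivot

    # Backfill: scan the remaining queue in arrival order.
    for job in queue[1:]:
        if job.procs <= idle and now + job.runtime <= pivot_time:
            idle -= job.procs            # finishes before the pivot needs the procs
            started.append(job.name)
        elif job.procs <= min(idle, extra):
            idle -= job.procs            # runs past pivot time on extra procs only
            extra -= job.procs
            started.append(job.name)
    return started


queue = [Job("B", 12, 60), Job("C", 20, 120), Job("D", 2, 50),
         Job("E", 6, 60), Job("F", 4, 120)]
running = [(Job("A", 10, 40), 0)]
print(jobs_to_start(18, running, queue))  # ['D', 'F']
```

Under the 18-processor assumption this reproduces the example: Job B is the pivot with a pivot time of 40 minutes and 6 extra processors, so Job D and then Job F are backfilled while Job E is not.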
Maui
• Courtesy allocation
  – Placeholder which is released if confirmation is not received within a time limit
• Depending on the underlying scheduler, Maui can
  – Suspend/resume
  – Checkpoint/restart
  – Requeue/restart

Globus GRAM

Resource managers/schedulers
[Diagram repeated]

GRAM - Basic Job Submission and Control Service
• A uniform service interface for remote job submission and control
  – Includes file staging and I/O management
  – Includes reliability features
  – Supports basic Grid security mechanisms
  – Asynchronous monitoring
  – Interfaces with local resource managers, simplifies the job of metaschedulers/brokers
• GRAM is not a scheduler.
  – No scheduling
  – No metascheduling/brokering
(Slide courtesy of Stuart Martin)

Grid Job Management Goals
Provide a service to securely:
• Create an environment for a job
• Stage files to/from environment
• Cause execution of job process(es)
  – Via various local resource managers
• Monitor execution
• Signal important state changes to client
• Enable client access to output files
  – Streaming access during execution
(Slide courtesy of Stuart Martin)

Job Submission Model
• Create and manage one job on a resource
• Submit and wait
• Not with an interactive TTY
  – File based stdin/out/err
  – Supported by all batch schedulers
• More complex than RPC
  – Optional steps before and after submission message
  – Job has complex lifecycle
    • Staging, execution, and cleanup states
    • But not as general as Condor DAG, etc.
  – Asynchronous monitoring
(Slide courtesy of Stuart Martin)

Job Submission Options
• Optional file staging
  – Transfer files “in” before job execution
  – Transfer files “out” after job execution
• Optional file streaming
  – Monitors files during job execution
• Optional credential delegation
  – Create, refresh, and terminate delegations
  – For use by job process
  – For use by GRAM to do optional file staging
(Slide courtesy of Stuart Martin)

Job Submission Monitoring
• Monitor job lifecycle
  – GRAM and scheduler states for job
    • StageIn, Pending, Active, Suspended, StageOut, Cleanup, Done, Failed
  – Job execution status
    • Return codes
• Multiple monitoring methods
  – Simple query for current state
  – Asynchronous notifications to client
(Slide courtesy of Stuart Martin)

Secure Submission Model
• Secure submit protocol
  – PKI authentication
  – Authorization and mapping
    • Based on Grid ID
  – Further authorization by scheduler
    • Based on local user ID
• Secure control/cancel
  – Also PKI authenticated
  – Owner has rights to his jobs and not others’
(Slide courtesy of Stuart Martin)

Secure Execution Model
• After authorization…
• Execute job securely
  – User account “sandboxing” of processes
    • According to mapping policy and request details
  – Initialization of sandbox credentials
    • Client-delegated credentials
• Adapter scripts can be customized for site needs
  – AFS, Kerberos, etc.
(Slide courtesy of Stuart Martin)

Secure Staging Model
• Before and after sandboxed execution…
• Perform secure file transfers
  – Create RFT request
    • To local or remote RFT service
    • PKI authentication and delegation
• In turn, RFT controls GridFTP
  – Using delegated client credentials
  – GridFTP
    • PKI authentication
    • Authorization and mapping by local policy files
    • Further authorization by FTP/Unix permissions
(Slide courtesy of Stuart Martin)
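The two-stage authorization in the Secure Submission Model (mapping a Grid ID to a local user, then a further check by the scheduler on the local user ID) can be illustrated with a small Python sketch. Everything here is hypothetical: the mapping table, the example identity string, the allowed-user set, and the function names are placeholders and not GRAM's actual configuration or API. PKI authentication is assumed to have already succeeded.

```python
# Hypothetical site policy: Grid identity -> local account.
GRID_TO_LOCAL = {
    "/O=Grid/OU=Example/CN=Alice Example": "alice",
    "/O=Grid/OU=Example/CN=Bob Example": "bob",
}

# Hypothetical scheduler policy: local accounts allowed to submit jobs.
SCHEDULER_ALLOWED_USERS = {"alice"}


class AuthorizationError(Exception):
    """Raised when a submission or control request is not permitted."""


def authorize_submission(grid_id):
    """Map an authenticated Grid ID to a local user, then apply the scheduler check."""
    local_user = GRID_TO_LOCAL.get(grid_id)
    if local_user is None:
        raise AuthorizationError(f"no local mapping for {grid_id!r}")
    if local_user not in SCHEDULER_ALLOWED_USERS:
        raise AuthorizationError(f"scheduler rejects local user {local_user!r}")
    return local_user


def may_control(job_owner_grid_id, requester_grid_id):
    """An owner has rights to his own jobs and not to others'."""
    return job_owner_grid_id == requester_grid_id
```

For example, `authorize_submission("/O=Grid/OU=Example/CN=Alice Example")` returns `"alice"`, while the second identity is mapped but then rejected by the scheduler-level check.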
GRAM4
• Users/Applications: job brokers, portals, command-line tools, etc.
• GRAM WSDLs + Job Description Schema (executable, args, env, …)
• WS standard interfaces for subscription, notification, destruction
• Resource Managers: PBS, Condor, LSF, SGE, LoadLeveler, Fork
(Slide courtesy of Stuart Martin)

GRAM4 Approach
[Diagram labels: client, service host(s) and compute element(s), compute element, GRAM services, GRAM adapter, Delegation, sudo, local sched., user job, xfer request, RFT, GridFTP, FTP control, FTP data, remote storage element(s)]
(Slide courtesy of Stuart Martin)

File staging retry policy
• If a file staging operation fails, it may be nonfatal and a retry may be desired
  – Server defaults for all transfers can be configured
  – Defaults can be overridden for a specific transfer
• Output can be streamed
(Slide courtesy of Stuart Martin)

Throttle staging work
[Diagram labels: headnode, GRAM/PBS, O(100GB-1TB), cluster]
• A GRAM submission that specifies file staging imposes load on the service node executing the GRAM service.
  – GRAM is configured for a maximum number of “worker” threads and thus a maximum number of concurrent staging operations.

Local Resource Manager Interface
• The GRAM interface to the LRM to submit, monitor, and cancel jobs.
  – Perl scripts + SEG
    • Scheduler Event Generator (SEG) generated by local resource manager provides efficient monitoring between the GRAM service and the LRM for all jobs for all users
(Slide courtesy of Stuart Martin)

Fault tolerance
• GRAM can recover from a container or host crash. Upon restart, GRAM will resume processing of the users' job submissions
  – Processing resumes for all jobs once the service container has been restarted

Job cancellation
• Allow a job to be cancelled
  – WSRF standard “Destroy” operation
(Slide courtesy of Stuart Martin)

State Access: Push
• Allow clients to request notifications for state changes
  – WS Notifications
    • Clients can subscribe for notifications to the “job status” resource property

State Access: Pull
• Allow clients to get the state for a previously submitted job
  – The service defines a WSRF resource property that contains the value of the job state. A client can then use the standard WSRF getResourceProperty operation.
(Slide courtesy of Stuart Martin)
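
The difference between push and pull state access can be illustrated with a plain Python observer sketch. It does not use or model the real WSRF or WS-Notification interfaces: `JobResource`, `subscribe`, `get_state`, and `set_state` are hypothetical names, and the order of state changes in the usage lines is an assumption. Only the state names come from the Job Submission Monitoring slide.

```python
from enum import Enum


class JobState(Enum):
    STAGE_IN = "StageIn"
    PENDING = "Pending"
    ACTIVE = "Active"
    SUSPENDED = "Suspended"
    STAGE_OUT = "StageOut"
    CLEANUP = "Cleanup"
    DONE = "Done"
    FAILED = "Failed"


class JobResource:
    """Holds a "job status" property that clients can pull or subscribe to."""

    def __init__(self, initial_state):
        self._state = initial_state
        self._subscribers = []

    def get_state(self):
        """Pull: the client asks for the current state of a submitted job."""
        return self._state

    def subscribe(self, callback):
        """Push: the client registers to be notified of every state change."""
        self._subscribers.append(callback)

    def set_state(self, new_state):
        """Service side: record a state change and notify all subscribers."""
        if new_state is self._state:
            return
        self._state = new_state
        for callback in self._subscribers:
            callback(new_state)


job = JobResource(JobState.PENDING)
seen = []
job.subscribe(seen.append)        # push-style client
job.set_state(JobState.ACTIVE)
job.set_state(JobState.DONE)
current = job.get_state()         # pull-style client
```

A push client sees every intermediate change it was subscribed for, while a pull client only sees whatever state is current at the moment it asks.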