First Two Years Project, St. Lawrence University

advertisement

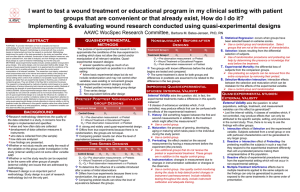

First Two Years Project
Cathy Crosby-Currie and Christine Zimmerman
Bringing Theory to Practice, March 2007

Modeling the Multiple Influences on Civic Development and Well-Being

Astin’s Theory of Involvement: I-E-O Model
• Input = who is learning (pretest attributes: demographics, abilities, views, etc.)
• Environment = how the output is influenced, i.e., how students learn (the forces behind the outcome; the program or intervention), e.g., an academic or co-curricular program, engaged learning pedagogy, or a community service program
• Output = what students are learning (goals and objectives; posttest)
  - Cognitive outcomes, e.g., critical thinking, academic ability
  - Affective outcomes, e.g., values, interests, satisfaction, attitudes and beliefs
  - Other outcomes of interest here: voting behavior, mental health
[Figure: I-E-O diagram linking Input, Environment, and Output through arrows labeled A, B, and C, with the environment’s effect marked “impact”]
Adapted from Astin, A. (1993). Assessment for Excellence: The Philosophy and Practice of Assessment and Evaluation in Higher Education. Phoenix: Oryx Press, p. 18.

Terenzini’s General Conceptual Model of College Influence on Student Learning
Terenzini, P., Springer, L., Pascarella, E., & Nora, A. (1995). Influences affecting the development of students’ critical thinking skills. Research in Higher Education, 36, 23-39.

Methodological Considerations

Experimental v. Quasi-Experimental Design
• Key difference: the researcher’s control over the “input” variable, i.e., over who receives the program or intervention
  - Experimental: yes, so cause-and-effect conclusions can be drawn
  - Quasi-experimental: no, so the design can only examine relationships
• Control v. comparison groups: experimental designs use a randomly assigned control group; quasi-experimental designs use a comparison group that is not randomly assigned
• Quasi-experimental power comes from:
  - The ability to detect change through the design, e.g., an interrupted time series design
  - The equivalence of the comparison group to the experimental group

Longitudinal v. Cross-Sectional Designs
• Cross-sectional: comparing groups of different ages at one point in time; convenient but lacks statistical and conceptual power
• Longitudinal: comparing individuals to themselves across time; multiple cohorts are ideal

St. Lawrence’s Quasi-Experimental, Longitudinal Design
Participants: two cohorts of selected students from the Classes of ’09 and ’10
• Experimental group: students in Brown College; a second experimental group was added in the second year
• Comparison group: a non-equivalent group matched on key variables of interest
Data collection:
• Pretest: 9/05 and 9/06
• Posttest: 2/06 and 2/07
• Follow-up: 4/07 and 4/08

Challenges of Quasi-Experimental and/or Longitudinal Designs
• Creating comparison group(s)
• Participant attrition; strategies include:
  - Communication, including a letter from the president
  - Personalized letters and email
  - Contacting students multiple times in multiple ways
  - Accommodating students’ schedules
• Institutional Review Board approval: reframe it as a positive contribution to your research, not a hurdle to overcome

Challenges of Measurement
• Valid and reliable measures
• Direct v. indirect measures
• Quantitative v. qualitative data
• Process v. outcomes measures

Reliability
Reliability is the consistency or repeatability of responses. Two kinds of error affect measurement:
• Random error (noise), which makes responses inconsistent and lowers reliability
• Systematic error (bias), which can leave responses consistent but consistently off target
Ways to increase data reliability:
• Clear directions
• Clear questions
• Consistent order of questions
• Clear survey layout
• Trained proctors and interviewers
• Consistent data entry and scoring
How to assess the reliability of your instrument:
• Pilot-test your study
• Test-retest your survey
• Run focus groups on your survey or interview questions
• Include similar questions in the same questionnaire

Validity
Validity is the extent to which the instrument truthfully measures what we want to measure.
• How well does the instrument content match what we want to measure?
• Do respondents interpret the questions correctly?
• Do respondents’ answers reflect what they think?
• Are the inferences we make from this study accurate? Can they be generalized?
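The reliability checks listed earlier, test-retest administration and including similar questions in the same questionnaire, are usually summarized with a statistic. The slides do not name one; the sketch below assumes two common choices: Cronbach’s alpha for internal consistency across similar items, and a Pearson correlation for test-retest reliability. The function names and the example Likert scores are hypothetical.

```python
from statistics import mean, pvariance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha (internal consistency) for a set of similar items.

    responses: one row per respondent, one column per item.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("need at least two items")
    # Variance of each item across respondents
    item_vars = [pvariance([row[j] for row in responses]) for j in range(k)]
    # Variance of respondents' total scores across all k items
    total_var = pvariance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores do not vary")
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


def retest_correlation(first: list[float], second: list[float]) -> float:
    """Pearson correlation between two administrations of the same survey."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need paired scores for at least two respondents")
    mx, my = mean(first), mean(second)
    cov = sum((x - mx) * (y - my) for x, y in zip(first, second))
    sx = sum((x - mx) ** 2 for x in first) ** 0.5
    sy = sum((y - my) ** 2 for y in second) ** 0.5
    if sx == 0 or sy == 0:
        raise ValueError("scores do not vary on one administration")
    return cov / (sx * sy)


# Hypothetical 1-5 Likert answers: 4 respondents x 3 similar items
scores = [[4, 5, 4], [2, 2, 3], [5, 5, 5], [3, 4, 3]]
print(round(cronbach_alpha(scores), 2))  # 0.94
```

Alpha is high when similar items rise and fall together across respondents; a low test-retest correlation points to random error (noise) between administrations.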
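For a pretest/posttest design with a matched comparison group, like the St. Lawrence design described above, one simple summary is the experimental group’s average gain minus the comparison group’s average gain. This is a minimal sketch, not the project’s own analysis: it assumes each wave is stored as a dictionary from a hypothetical student ID to a scale score, drops students missing either wave (attrition), and does no covariate adjustment. Because the comparison group is non-equivalent, the result describes a relationship rather than an established cause.

```python
from statistics import mean


def mean_gain(pre: dict[str, float], post: dict[str, float]) -> tuple[float, int]:
    """Average posttest-minus-pretest change for students present at both waves.

    Students missing either wave (attrition) are dropped; the number retained
    is returned so attrition can be reported alongside the gain.
    """
    ids = pre.keys() & post.keys()
    if not ids:
        raise ValueError("no students completed both waves")
    return mean(post[s] - pre[s] for s in ids), len(ids)


def difference_in_gains(exp_pre, exp_post, cmp_pre, cmp_post) -> float:
    """Experimental-group mean gain minus comparison-group mean gain."""
    exp_gain, _ = mean_gain(exp_pre, exp_post)
    cmp_gain, _ = mean_gain(cmp_pre, cmp_post)
    return exp_gain - cmp_gain


# Hypothetical scale scores keyed by made-up student IDs
exp_pre = {"s1": 3.0, "s2": 2.5, "s3": 4.0}
exp_post = {"s1": 3.5, "s2": 3.5}  # s3 did not return for the posttest
cmp_pre = {"c1": 3.0, "c2": 3.0}
cmp_post = {"c1": 3.25, "c2": 3.25}

print(mean_gain(exp_pre, exp_post))  # (0.75, 2)
print(difference_in_gains(exp_pre, exp_post, cmp_pre, cmp_post))  # 0.5
```

Reporting the retained count next to each gain keeps attrition visible, which matters when comparing cohorts across the follow-up waves.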
How to establish validity:
• Use multiple measures and multiple methods
• Derive measures from the literature review and existing research, or participate in national survey instruments and tests
• Expert review
• Pilot-test your own survey

Direct v. Indirect Measures
• Direct: tangible, actual evidence
• Indirect: a proxy for what we are trying to measure
Direct measures:
• Portfolio
• Essay/reflection
• Performance task/test
• Actual student behavior
Indirect measures:
• Self-reported behavior, attitudes, gains
• Grades
• Participation rates
• Time spent at task

Qualitative v. Quantitative Measures
• Qualitative: unit of data = words
• Quantitative: unit of data = numbers
Qualitative measures:
• Focus groups
• Structured interviews
• Self-reflections/diaries
• Open-ended survey questions
Quantitative measures:
• Surveys with closed questions (Likert scale, checklist, etc.)
• Grades
• Actuarial data such as participation rates, attendance, etc.

Process v. Outcomes Measures
• Process measures: What did we do? (data that demonstrate the implementation of an activity or program)
• Outcomes measures: What are the results? (data used to measure the achievement of an objective or goal), at the initial, intermediate, and long-term stages

Administrative Challenges and Best Practices
• Buy-in and support
• Form campus partnerships early on
• Build on existing data collections
• Institutional survey cycles and survey timing
• Copyrights of survey instruments
• Liability for use of certain measures
• Survey recruitment and retention

Select Survey Instruments and Literature

Sampling of Survey Instruments
Entering students:
• CIRP Freshman Survey (HERI, UCLA)
• College Student Expectations Questionnaire (CSXQ) (Indiana)
Enrolled undergraduate students/alumni:
• As a continuation of CIRP: Your First College Year / College Senior Survey
• As a continuation of CSXQ: College Student Experiences Questionnaire (CSEQ)
• National Survey of Student Engagement (NSSE)
• Consortia senior and alumni surveys (e.g., HEDS, COFHE)
Depression/mental health measures:
• Beck Depression Inventory (BDI-II)
• Brief Symptom Inventory (BSI)
Optimism/pessimism/happiness scales:
• Mehrabian Optimism/Pessimism Scale
• http://www.authentichappiness.sas.upenn.edu/
Alcohol/drugs/general wellness:
• Core Alcohol and Drug Survey
• ACHA-NCHA
Civic development (from Lynn Swaner)
Other national surveys:
• CASA Telephone Survey Instrument
• HERI Faculty Survey
Other in-house institutional surveys:
• Course evaluations
• Program evaluations
• Satisfaction studies

Select Literature
The National Center on Addiction and Substance Abuse at Columbia University (2003). Depression, Substance Abuse, and College Student Engagement: A Review of the Literature. Report to The Charles Engelhard Foundation and The Bringing Theory to Practice Planning Group. http://www.aacu.org/bringing_theory/research.cfm
The National Center on Addiction and Substance Abuse at Columbia University (2005). Substance Abuse, Mental Health and Engaged Learning: Summary of Findings from CASA’s Focus Groups and National Survey. Report to Sally Engelhard Pingree and The Charles Engelhard Foundation for the Bringing Theory to Practice Project, in partnership with the Association of American Colleges and Universities. http://www.aacu.org/bringing_theory/research.cfm
Swaner, L. E. (2005). Linking Engaged Learning, Student Mental Health and Well-being, and Civic Development: A Review of the Literature. Prepared for BTtoP. http://www.aacu.org/bringing_theory/research.cfm
Pascarella, E., & Terenzini, P. (1991). How College Affects Students: Findings and Insights from Twenty Years of Research. San Francisco: Jossey-Bass.
Bringle, R. G., Phillips, M. A., & Hudson, M. (2004). The Measure of Service Learning: Research Scales to Assess Student Experiences. Washington, DC: American Psychological Association.
Suskie, L. (1996). Questionnaire Survey Research: What Works. Tallahassee: Association for Institutional Research.