The Microarchitecture of FPGA-Based Soft Processors

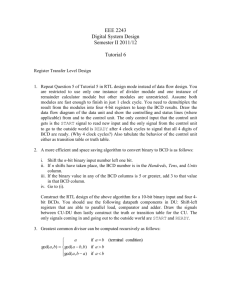

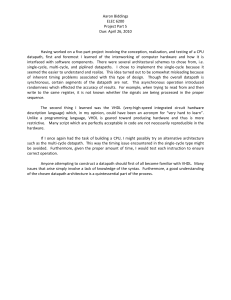

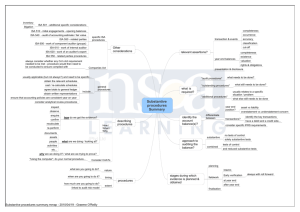

The Microarchitecture of FPGA-Based Soft Processors
Peter Yiannacouras, Jonathan Rose, Greg Steffan
University of Toronto, Electrical and Computer Engineering

Processors and FPGAs
■ Processors present in many digital systems
■ Soft processors: implemented in FPGA fabric
■ Our goal is to study the architecture of soft processors
[Diagram: an FPGA containing custom logic and a processor]

Motivation for understanding soft processor architecture
1. Soft processors are popular
  ■ 16% of FPGA designs use a soft processor (FPGA Journal, November 2003)
  ■ This number has increased and will continue to increase
2. Soft processors are end-user customizable
  ■ Application-specific architectural tradeoffs
  ■ Can be tuned by designers

Don’t we already understand processor architecture?
■ Not accurately/completely
  ■ Accurate cycle-to-cycle behaviour
  ■ Estimated area/power
  ■ No clock frequency impact
■ Not in FPGA domain
  ■ Lookup tables vs transistors
  ■ Dedicated RAMs and multipliers are fast
■ Must revisit processor architecture in FPGA context

Research Goals
1. Generate soft processor implementations
  ■ System for generating RTL
2. Develop measurement methodology
  ■ Metrics for comparing soft processors
3. Develop understanding of architectural tradeoffs
  ■ Analyze area/performance/power space
■ Explore soft processor architecture experimentally

Soft Processor Rapid Exploration Environment (SPREE)
[Diagram: an ISA description and a datapath description are the inputs to SPREE, which outputs RTL]

Input: Instruction Set Architecture (ISA) Description
■ Graph of Generic Operations (GENOPs)
■ Edges indicate flow of data
■ ISA currently fixed (subset of MIPS I)
[Diagram: GENOP graph for MIPS ADD (add rd, rs, rt): FETCH, RFREAD, RFREAD, ADD, RFWRITE]

Input: Datapath Description
■ Interconnection of hand-coded components
■ Allows efficient synthesis
■ Described using C++
■ Limited to simple in-order issue pipelines
[Diagram: a datapath assembled from the SPREE Component Library: Ifetch, Reg File, Mul, ALU, Shifter, Data Mem, Write Back]

Step 1. ISA vs Datapath Verification
■ Components described using GENOPs
[Diagram: the ISA's GENOP graph (FETCH, RFREAD, RFREAD, ADD, RFWRITE) is verified against the GENOPs provided by the datapath components (Ifetch, Reg File, Mul, ALU, Data Mem, Write Back)]

Step 2. Datapath Instantiation
■ Multiplexer insertion
■ Unused connection/component removal
[Diagram: datapath of Ifetch, Reg File, Mul, ALU, Data Mem, Write Back]

Step 3. Control Generation
■ Laborious step performed automatically
[Diagram: control logic attached to the datapath components]

Output: Verilog RTL Description
[Diagram: the generated datapath and control, emitted as Verilog RTL]

Back-end Infrastructure
■ Benchmarks: MiBench, Dhrystone 2.1, RATES, XiRisc
■ RTL simulator: Modelsim
■ CAD software: Quartus II 4.2, targeting a Stratix 1S40
■ Measured quantities:
1. Cycle Count
2. Resource Usage
3. Clock Frequency
4. Power
■ In this work we can measure each accurately!

Metrics for Measurement
■ Area: Equivalent Stratix Logic Elements (LEs)
  ■ Relative silicon areas used for RAMs/Multipliers
■ Performance: Wall clock time
  ■ Cycle count ÷ clock frequency
  ■ Arithmetic mean across benchmark set
■ Energy: Dynamic Energy (e.g. nJ/instr)
  ■ Excluding I/O

Trace-Based Verification
■ Ensure SPREE generates functional processors
■ All generated soft processors are verified this way
[Diagram: benchmark applications run both on the RTL in Modelsim (RTL simulator) and on MINT (instruction-set simulator); the two traces are compared]

Architectural Exploration Results

Architectural Features Explored
■ Hardware vs software multiplication
■ Shifter implementation
■ Pipelining
  ■ Depth
  ■ Organization
  ■ Forwarding

Validation of SPREE Through Comparison to Altera’s Nios II
■ Nios II has three variations:
  ■ Nios II/e: unpipelined, no HW multiplier
  ■ Nios II/s: 5-stage, with HW multiplier
  ■ Nios II/f: 6-stage, dynamic branch prediction
■ Caveats: not a completely fair comparison
  ■ Very similar but tweaked ISA
  ■ Nios II supports exceptions, OS, and caches
  ■ We do not, and save on the hardware costs
■ We believe the comparison is meaningful

SPREE vs Nios II
[Chart: Average Wall Clock Time (us) vs Area (Equivalent LEs); lower is faster, further left is smaller. Series: SPREE processors, Altera Nios II/e, Altera Nios II/s, Altera Nios II/f. Highlighted SPREE processor: 3-stage pipe, HW multiply, multiply-based shifter]
■ Competitive and can dominate (9% smaller, 11% faster)

Architectural Features Explored
■ Hardware vs software multiplication
■ Shifter implementation
■ Pipelining
  ■ Depth
  ■ Organization
  ■ Forwarding

Hardware vs Software Multiplication
■ Hardware multiply is fast but not always needed
■ Wastes area (220 LEs) and can waste energy
■ Total energy wasted if few multiply instructions, saved if many
[Chart: Energy/instruction (nJ/instr) for 2-stage, 3-stage, and 5-stage processors with hardware multiply and with software multiply. Bar labels: 6.90, 7.41, 2.81, 2.93, 3.18, 3.30; the pairing of labels to bars did not survive extraction]

Shifter Implementation
■ Shifters are expensive in FPGAs
■ We explore three implementations:
1. Serial shifter (shift register)
2. Multiplier-based barrel shifter (hard multiplier)
3. LUT-based barrel shifter (multiplexer tree)

Performance-Area of Different Shifter Implementations
[Chart: Average Wall Clock Time (us) vs Area (Equivalent LEs); lower is faster, further left is smaller. Serial, multiplier-based, and LUT-based shifters on 3-stage, 4-stage, and 5-stage pipelines]
■ Multiplier-based shifter is a good compromise

Pipeline Depth
■ Explored between 2 and 7 stages
■ 1-stage and 6-stage pipelines not interesting
■ Stage organizations, reconstructed from the diagram (a "(new)" label appears beside the 5-stage and 7-stage entries):
  ■ 2-stage: F/D/R/EX/M, WB
  ■ 3-stage: F/D, R/EX/M, WB
  ■ 4-stage: F, D, R/EX/M, WB
  ■ 5-stage: F, D, R/EX, EX/M, WB
  ■ 7-stage: F, D, R, EX, EX, EX/M, WB

Pipeline Depth and Performance
[Charts: Clock Frequency (MHz), Cycles Per Instruction, and Average Wall Clock Time (us), each plotted for the 2-stage, 3-stage, 4-stage, 5-stage, and 7-stage pipelines]
■ The 2-stage and 7-stage pipelines suffer from nuances
■ The 3-, 4-, and 5-stage pipelines perform the same

Pipeline Organization Tradeoff
■ 4-stage (A): F, D, R/EX/M, WB
■ 4-stage (B): F/D, R/EX, EX/M, WB
[Chart: Average Wall Clock Time (us) vs Area (Equivalent LEs) for 4-stage (A) and 4-stage (B)]
■ 4-stage (B) is 15% faster but requires up to 70 more LEs

Pipeline Forwarding
[Diagram: pipeline stages F, D/R, EX, M, WB]
■ Prevents stalls when data hazards occur
■ MIPS has two source operands (rs & rt)
■ Four forwarding configurations are possible:
  ■ No forwarding
  ■ Forward rs
  ■ Forward rt
  ■ Forward both rs and rt

Pipeline Forwarding
[Chart: Average Wall Clock Time (us) vs Area (Equivalent LEs) for the 3-stage, 4-stage, and 5-stage pipelines with no forwarding, forward rt, forward rs, and forward rs&rt; annotated improvements of 9% and 20%]
■ Up to 20% speed improvement when forwarding both operands
■ The rs operand benefits more than rt (9% faster)

Summary of Presented Architectural Conclusions
■ Hardware multiplication can be wasteful
■ Multiplier-based shifter is a sweet spot
■ 3-stage pipelines are attractive
■ Tradeoffs exist within pipeline organization
■ Forwarding
  ■ Improves performance by up to 20%
  ■ Favours the rs operand

Future Work
■ Explore other exciting architectural axes
■ Branch prediction, aggressive forwarding
■ ISA changes
■ VLIW datapaths
■ Caches and memory hierarchy
■ Compiler optimizations
■ Port to other devices
■ Explore aggressive customization
■ Add exceptions and OS support
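
The performance metric used throughout the results is wall clock time, defined in the slides as cycle count ÷ clock frequency and averaged arithmetically across the benchmark set. A minimal sketch of that calculation follows; the function names, benchmark names, and numbers are hypothetical placeholders, not measurements from this work.

```python
from statistics import mean


def wall_clock_time_us(cycle_count: int, clock_mhz: float) -> float:
    """Cycle count divided by clock frequency; cycles / MHz yields microseconds."""
    if clock_mhz <= 0:
        raise ValueError("clock frequency must be positive")
    return cycle_count / clock_mhz


def average_wall_clock_time_us(cycle_counts: dict[str, int], clock_mhz: float) -> float:
    """Arithmetic mean of per-benchmark wall clock times for one processor."""
    return mean(wall_clock_time_us(c, clock_mhz) for c in cycle_counts.values())


def energy_per_instruction_nj(dynamic_energy_nj: float, instruction_count: int) -> float:
    """Dynamic energy per executed instruction, in nJ/instr."""
    if instruction_count <= 0:
        raise ValueError("instruction count must be positive")
    return dynamic_energy_nj / instruction_count


if __name__ == "__main__":
    # Hypothetical cycle counts for two made-up benchmarks on one processor.
    cycles = {"bench_a": 120_000, "bench_b": 80_000}
    print(average_wall_clock_time_us(cycles, clock_mhz=100.0))
```

Because the metric divides by clock frequency, a deeper pipeline that raises frequency but also raises cycle count can end up with the same wall clock time, which is how the comparison across pipeline depths should be read.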