Chen Li

advertisement

and the

Big Data Landscape

Chen Li

Information Systems Group

CS Department

UC Irvine

0

Big Data / Web Warehousing

So what went on – and why?

What’s going on right now?

What’s going on

#AsterixDB

1

Big Data in the Database World

• Enterprises needed to store and query historical business data (data warehouses)
– 1980’s: Parallel database systems based on “shared-nothing” architectures (Gamma/GRACE, Teradata)
– 2000’s: Netezza, Aster Data, DATAllegro, Greenplum, Vertica, ParAccel (“Big $” acquisitions!)
• OLTP is another category (a source of Big Data)
– 1980’s: Tandem’s NonStop SQL system

Notes:
• Storage manager per node
• Upper layers orchestrate them
• One way in/out: via the SQL door

2

Big Data in the Systems World

• Late 1990’s brought a need to index and query the rapidly exploding content of the Web
– DB technology tried but failed (e.g., Inktomi)
– Google, Yahoo! et al. needed to do something
• Google responded by laying a new foundation
– Google File System (GFS)
  • OS-level byte stream files spanning 1000’s of machines
  • Three-way replication for fault-tolerance (availability)
– MapReduce (MR) programming model
  • User functions: Map and Reduce (and optionally Combine)
  • “Parallel programming for dummies” – MR runtime does the heavy lifting via partitioned parallelism

3

(MapReduce: Word Count Example)

[Figure: Input Splits (distributed) → Mapper Outputs → SHUFFLE PHASE (based on keys) → Reducer Inputs → Reducer Outputs (distributed). Callout: Partitioned Parallelism!]
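The word-count dataflow on this slide can be sketched in a few lines of single-process Python. This is only an illustration of the map, shuffle, and reduce steps, not Hadoop's or Google's implementation; the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) and the `num_reducers` parameter are made up for the sketch.

```python
from collections import defaultdict

def map_phase(split):
    """Mapper: emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in split.split()]

def shuffle_phase(mapper_outputs, num_reducers):
    """Shuffle: route every pair to a reducer partition chosen from its key."""
    partitions = [defaultdict(list) for _ in range(num_reducers)]
    for pairs in mapper_outputs:
        for key, value in pairs:
            partitions[hash(key) % num_reducers][key].append(value)
    return partitions

def reduce_phase(partition):
    """Reducer: sum the counts collected for each key in one partition."""
    return {key: sum(values) for key, values in partition.items()}

def word_count(splits, num_reducers=2):
    """Run the three phases in sequence (a real runtime runs them in parallel)."""
    mapper_outputs = [map_phase(split) for split in splits]
    partitions = shuffle_phase(mapper_outputs, num_reducers)
    counts = {}
    for partition in partitions:
        # Each key lives in exactly one partition, so the outputs never collide.
        counts.update(reduce_phase(partition))
    return counts
```

Because the shuffle sends all pairs with the same key to the same partition, each reducer can work independently of the others, which is the "partitioned parallelism" the slide calls out.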

4

Soon a Star Was Born…

• Yahoo!, Facebook, and friends read the papers
– HDFS and Hadoop MapReduce now in wide use for indexing, clickstream analysis, log analysis, …
• Higher-level languages subsequently developed
– Pig (Yahoo!), Hive (Facebook), Jaql (IBM)
• Key-value (“NoSQL”) stores are another category
– Used to power scalable social sites, online games, …
– BigTable → HBase, Dynamo → Cassandra, MongoDB, …

Notes:
• Giant byte sequence files at the bottom
• Map, sort, shuffle, reduce layer in middle
• Possible storage layer in middle as well
• Now at the top: HLL’s

5

Apache Pig (PigLatin)

• Scripting language inspired by the relational algebra
– Compiles down to a series of Hadoop MR jobs
– Relational operators include LOAD, FOREACH, FILTER, GROUP, COGROUP, JOIN, ORDER BY, LIMIT, ...

6

Apache Hive (HiveQL)

• Query language inspired by an old favorite: SQL
– Compiles down to a series of Hadoop MR jobs
– Supports various HDFS file formats (text, columnar, ...)
– Numerous contenders appearing that take a non-MR-based runtime approach (duh!) – these include Impala, Stinger, Spark SQL, ...

7

Other Up-and-Coming Platforms (I)

• Spark for in-memory cluster computing – for doing repetitive data analyses, iterative machine learning tasks, ...

[Figure, two panels. One-time processing: Input is loaded once into distributed memory, which then serves query 1, query 2, query 3, ... Iterative processing: Input feeds iter. 1, iter. 2, ...]

(Especially gaining traction for scaling Machine Learning)

8

Other Up-and-Coming Platforms (II)

• Bulk Synchronous Parallel (BSP) programming platforms, e.g., Pregel, Giraph, GraphLab, ..., for Big Graph analytics

“Think Like a Vertex”
– Receive messages
– Update state
– Send messages

(“Big” is the platform’s concern)

• Quite a few BSP-based platforms available
– Pregel (Google)
– Giraph (Facebook, LinkedIn, Twitter, Yahoo!, ...)
– Hama (Sogou, Korea Telecom, ...)
– Distributed GraphLab (CMU, Washington)
– GraphX (Berkeley)
– Pregelix (UCI)
– ...
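The “Think Like a Vertex” loop (receive messages, update state, send messages) can be sketched as a toy, single-process superstep loop. The example below propagates the maximum value through a graph, a common teaching example for this model; it is not the API of Pregel, Giraph, or Pregelix, and the function name `propagate_max` and its parameters are assumptions made for the sketch. Every neighbor must also appear as a key in `edges`.

```python
def propagate_max(edges, values, max_supersteps=100):
    """Toy BSP run: every vertex ends up with the largest value that can reach it.

    edges:  dict mapping each vertex to a list of its out-neighbors
    values: dict mapping each vertex to its initial numeric value
    """
    state = dict(values)

    # Superstep 0: every vertex announces its own value to its neighbors.
    inbox = {v: [] for v in edges}
    for v, neighbors in edges.items():
        for n in neighbors:
            inbox[n].append(state[v])

    for _ in range(max_supersteps):
        outbox = {v: [] for v in edges}
        any_sent = False
        for v in edges:
            messages = inbox[v]                      # 1. receive messages
            if messages and max(messages) > state[v]:
                state[v] = max(messages)             # 2. update state
                for n in edges[v]:                   # 3. send messages
                    outbox[n].append(state[v])
                    any_sent = True
        if not any_sent:
            break          # no messages in flight: the computation has converged
        inbox = outbox     # barrier: messages become visible in the next superstep
    return state
```

The vertex logic only ever looks at one vertex and its incoming messages; distributing vertices over machines and delivering messages between supersteps is what the platform takes care of.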

9

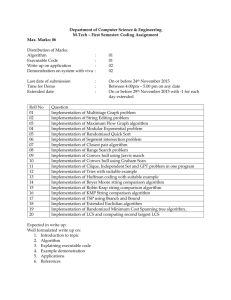

No Shortage of “NoSQL” Big Data Analysis Platforms...

[Figure: the software stacks of many Big Data analysis platforms, compared layer by layer.]

Layers: Query/Scripting Language; High-Level API; Compiler/Optimizer; Low-Level API; Execution Engine; Resource Management; Data Store

Components shown: SCOPE, Pig, PigLatin, Sopremo, Meteor, AQL, Algebricks, Cascading, Dremel SQL, FlumeJava, Java/Scala, Spark, Sawzall, Jaql, DryadLINQ, Hyracks, PACT, Nephele, Dryad, Tez, RDDs, Hadoop MapReduce, Google MapReduce, MapReduce, Dremel, Dataflow Processor, Mesos, YARN, Omega, Quincy, Cosmos, TidyFS, HBase, Bigtable, HDFS, GFS, LSM Storage, Relational Row/Column Storage

10

Also: Today’s Big Data Tangle

(Pig)

11

AsterixDB: “One Size Fits a Bunch”

[Figure labels: Semistructured Data Management; Parallel Database Systems; World of Hadoop & Friends]

BDMS Desiderata:
• Flexible data model
• Efficient runtime
• Full query capability
• Cost proportional to task at hand (!)
• Designed for continuous data ingestion
• Support today’s “Big Data data types”

12

ASTERIX Data Model (ADM)

create dataverse TinySocial;

use dataverse TinySocial;

create type EmploymentType as open {
  organization-name: string,
  start-date: date,
  end-date: date?
}

create type MugshotUserType as {
  id: int32,
  alias: string,
  name: string,
  user-since: datetime,
  address: {
    street: string,
    city: string,
    state: string,
    zip: string,
    country: string
  },
  friend-ids: {{ int32 }},
  employment: [EmploymentType]
}

create dataset MugshotUsers(MugshotUserType)

primary key id;

Highlights include:

• JSON++ based data model

• Rich type support (spatial, temporal, …)

• Records, lists, bags

• Open vs. closed types
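The difference between open and closed types can be illustrated with a small validation sketch. It is only a model of the idea (an open type tolerates extra fields, a closed type does not), not AsterixDB's type checker; the `conforms` function, the Python type mapping, and the extra `job-kind` field are hypothetical, and `date?` is treated here as "the field may be absent".

```python
from datetime import date

# Field specs mirroring EmploymentType on the slide: name -> (Python type, optional?)
EMPLOYMENT_FIELDS = {
    "organization-name": (str, False),
    "start-date": (date, False),
    "end-date": (date, True),   # declared as "date?" on the slide
}

def conforms(record, fields, is_open):
    """Check a record (a dict) against a declared type.

    Declared fields must be present (unless optional) and have the right type.
    Undeclared fields are accepted only when the type is open.
    """
    for name, (py_type, optional) in fields.items():
        if name not in record:
            if not optional:
                return False
        elif not isinstance(record[name], py_type):
            return False
    extra_fields = set(record) - set(fields)
    if extra_fields and not is_open:
        return False
    return True
```

For example, a record carrying an undeclared `job-kind` field passes when `EmploymentType` is treated as open and is rejected when the same declaration is treated as closed.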

13


ASTERIX Data Model (ADM)

create type MugshotMessageType as closed {
  message-id: int32,
  author-id: int32,
  timestamp: datetime,
  in-response-to: int32?,
  sender-location: point?,
  tags: {{ string }},
  message: string
}

create dataset MugshotMessages(MugshotMessageType)
  primary key message-id;

15

Ex: MugshotUsers Data

{ "id":1, "alias":"Margarita", "name":"MargaritaStoddard",
  "address":{ "street":"234 Thomas Ave", "city":"San Hugo", "zip":"98765", "state":"CA", "country":"USA" },
  "user-since":datetime("2012-08-20T10:10:00"),
  "friend-ids":{{ 2, 3, 6, 10 }},
  "employment":[{ "organization-name":"Codetechno", "start-date":date("2006-08-06") }] }

{ "id":2, "alias":"Isbel", "name":"IsbelDull",
  "address":{ "street":"345 James Ave", "city":"San Hugo", "zip":"98765", "state":"CA", "country":"USA" },
  "user-since":datetime("2011-01-22T10:10:00"),
  "friend-ids":{{ 1, 4 }},
  "employment":[{ "organization-name":"Hexviafind", "start-date":date("2010-04-27") }] }

{ "id":3, "alias":"Emory", "name":"EmoryUnk",
  "address":{ "street":"456 Jose Ave", "city":"San Hugo", "zip":"98765", "state":"CA", "country":"USA" },
  "user-since":datetime("2012-07-10T10:10:00"),
  "friend-ids":{{ 1, 5, 8, 9 }},
  "employment":[{ "organization-name":"geomedia", "start-date":date("2010-06-17"), "end-date":date("2010-01-26") }] }

...

16

Other DDL Features

create index msUserSinceIdx on MugshotUsers(user-since);

create index msTimestampIdx on MugshotMessages(timestamp);

create index msAuthorIdx on MugshotMessages(author-id) type btree;

create index msSenderLocIndex on MugshotMessages(sender-location) type rtree;

create index msMessageIdx on MugshotMessages(message) type keyword;

create type AccessLogType as closed {
  ip: string, time: string, user: string, verb: string, path: string, stat: int32, size: int32
};

create external dataset AccessLog(AccessLogType) using localfs
  (("path"="{hostname}://{path}"), ("format"="delimited-text"), ("delimiter"="|"));

create feed socket_feed using socket_adaptor
  (("sockets"="{address}:{port}"), ("addressType"="IP"),
   ("type-name"="MugshotMessageType"), ("format"="adm"));

connect feed socket_feed to dataset MugshotMessages;

External data highlights:
• Common HDFS file formats + indexing
• Feed adaptors for sources like Twitter

17

ASTERIX Query Language (AQL)

• Ex: List the user name and messages sent by those users who joined the Mugshot social network in a certain time window:

from $user in dataset MugshotUsers
where $user.user-since >= datetime('2010-07-22T00:00:00')
  and $user.user-since <= datetime('2012-07-29T23:59:59')
select {
  "uname" : $user.name,
  "messages" :
    from $message in dataset MugshotMessages
    where $message.author-id = $user.id
    select $message.message
};
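To make the shape of this query's result concrete, the same logic can be written over in-memory Python lists. This is a sketch of the query's semantics only (a filter on `user-since` plus a nested subquery per user); it says nothing about how AsterixDB actually evaluates it, and the function name `users_with_messages` is an assumption.

```python
from datetime import datetime

def users_with_messages(users, messages, start, end):
    """For each user who joined within [start, end], list the texts of their messages."""
    return [
        {
            "uname": user["name"],
            # Nested "subquery": all messages written by this user.
            "messages": [
                message["message"]
                for message in messages
                if message["author-id"] == user["id"]
            ],
        }
        for user in users
        if start <= user["user-since"] <= end
    ]
```

Note that a user with no messages still appears in the result, with an empty `messages` list, because the nesting happens inside the constructed record rather than through a join.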

18

AQL (cont.)

• Ex: Identify active users and group/count them by country:

with $end := current-datetime()
with $start := $end - duration("P30D")
from $user in dataset MugshotUsers
where some $logrecord in dataset AccessLog
  satisfies $user.alias = $logrecord.user
    and datetime($logrecord.time) >= $start
    and datetime($logrecord.time) <= $end
group by $country := $user.address.country with $user
select {
  "country" : $country,
  "active users" : count($user)
}

AQL highlights:
• Lots of other features (see website!)
• Spatial predicates and aggregation
• Set-similarity (fuzzy) matching
• And plans for more…

19

Fuzzy Queries in AQL

• Ex: Find Tweets with similar content:

for $tweet1 in dataset('TweetMessages')
for $tweet2 in dataset('TweetMessages')
where $tweet1.tweetid != $tweet2.tweetid
  and $tweet1.message-text ~= $tweet2.message-text
return {
  "tweet1-text": $tweet1.message-text,
  "tweet2-text": $tweet2.message-text
}

• Or: Find Tweets about similar topics (same query, with the similarity predicate changed to):

  and $tweet1.referred-topics ~= $tweet2.referred-topics
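One way to read the fuzzy self-join on `referred-topics` is as a set-similarity test between two bags of topics. The sketch below assumes that `~=` is evaluated as Jaccard similarity compared against a threshold; the threshold value, the helper names `jaccard` and `similar_pairs`, and the brute-force nested loop are all assumptions made for illustration, not a description of AsterixDB's fuzzy-join algorithm.

```python
def jaccard(a, b):
    """Jaccard similarity of two collections, treated as sets."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def similar_pairs(tweets, threshold=0.3):
    """Return (tweetid1, tweetid2) for every ordered pair with similar topics."""
    pairs = []
    for t1 in tweets:
        for t2 in tweets:
            if (t1["tweetid"] != t2["tweetid"]
                    and jaccard(t1["referred-topics"], t2["referred-topics"]) >= threshold):
                pairs.append((t1["tweetid"], t2["tweetid"]))
    return pairs
```

Like the AQL query, this returns each matching pair in both orders, since the only exclusion is a tweet paired with itself.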

20

Updates (and Transactions)

• Ex: Add a new user to Mugshot.com:

insert into dataset MugshotUsers
({
  "id":11, "alias":"John", "name":"JohnDoe",
  "address":{
    "street":"789 Jane St", "city":"San Harry",
    "zip":"98767", "state":"CA", "country":"USA"
  },
  "user-since":datetime("2010-08-15T08:10:00"),
  "friend-ids":{{ 5, 9, 11 }},
  "employment":[{
    "organization-name":"Kongreen",
    "start-date":date("2012-06-05")
  }]
});

• Key-value store-like transaction semantics
• Insert/delete ops with indexing
• Concurrency control (locking)
• Crash recovery
• Backup/restore

21

AsterixDB System Overview

22

ASTERIX Software Stack

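[Figure: the ASTERIX software stack, top to bottom]

Entry points: AQL (AsterixDB), HiveQL (Hivesterix), XQuery (Apache VXQuery), Pregel Job (Pregelix), Hadoop M/R Job (M/R Layer), Hyracks Job

Algebricks Algebra Layer (beneath AsterixDB, Hivesterix, and Apache VXQuery)

Hyracks Data-Parallel Platform (at the bottom of the stack)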

23

Native Storage Management

[Figure: storage management components on each node]

Datasets Manager
Memory: Working Memory, Buffer Cache, In-Memory Components
Transaction Sub-System: Transaction Manager, Lock Manager, Log Manager, Recovery Manager
IO Scheduler
Disk 1 ... Disk n

24

LSM-Based Storage + Indexing

Memory

Sequential writes to disk

Disk

Periodically merge disk trees
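The LSM idea on this slide (absorb writes in memory, flush them sequentially to disk, merge disk components periodically) can be sketched with a toy key-value index. This is a simplified model, not AsterixDB's storage layer: "disk" components are just sorted Python lists, there is no logging or deletion, and the class name `ToyLSMIndex` and the `memory_budget` parameter are assumptions.

```python
class ToyLSMIndex:
    """Toy LSM index: one in-memory component plus immutable 'disk' components."""

    def __init__(self, memory_budget=4):
        self.memory = {}                 # mutable in-memory component
        self.disk = []                   # sorted (key, value) lists, newest first
        self.memory_budget = memory_budget

    def put(self, key, value):
        """All writes go to memory; a full memory component is flushed."""
        self.memory[key] = value
        if len(self.memory) >= self.memory_budget:
            self.flush()

    def flush(self):
        """Write the memory component out as one sorted, immutable disk component."""
        if self.memory:
            self.disk.insert(0, sorted(self.memory.items()))
            self.memory = {}

    def get(self, key):
        """Search memory first, then disk components from newest to oldest."""
        if key in self.memory:
            return self.memory[key]
        for component in self.disk:
            for k, v in component:
                if k == key:
                    return v
        return None

    def merge(self):
        """Merge all disk components into one, keeping the newest value per key."""
        merged = {}
        for component in reversed(self.disk):   # oldest first, so newer entries win
            merged.update(component)
        self.disk = [sorted(merged.items())] if merged else []
```

Reads have to consult several components until a merge runs, which is the price paid for turning random writes into sequential ones.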

25

LSM-Based Filters

Intuition: Do NOT touch unneeded records

Idea: Utilize LSM partitioning to prune disk components

Q: Get all tweets > T14

Memory component: T16, T17

Disk components, newest to oldest ([min, max] filter, then contents):
[ T12, T15 ]: T12, T13, T14, T15
[ T7, T11 ]: T7, T8, T9, T10, T11
[ T1, T6 ]: T1, T2, T3, T4, T5, T6 (Oldest Component)
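The pruning on this slide can be replayed in a short sketch: each component carries a [min, max] filter, and a range query skips every component whose max cannot satisfy the predicate. Timestamps T1..T17 are modelled as the integers 1..17; the dictionary layout and the function name `query_greater_than` are assumptions made for the sketch.

```python
# Components from the slide, newest first, each with its [min, max] filter.
COMPONENTS = [
    {"min": 16, "max": 17, "records": [16, 17]},              # in-memory component
    {"min": 12, "max": 15, "records": [12, 13, 14, 15]},
    {"min": 7,  "max": 11, "records": [7, 8, 9, 10, 11]},
    {"min": 1,  "max": 6,  "records": [1, 2, 3, 4, 5, 6]},    # oldest component
]

def query_greater_than(components, bound):
    """Return (matching records, number of components actually scanned)."""
    results, scanned = [], 0
    for component in components:
        if component["max"] <= bound:
            continue                      # filter proves nothing here can match
        scanned += 1
        results.extend(t for t in component["records"] if t > bound)
    return sorted(results), scanned
```

For the slide's query (all tweets > T14), only the memory component and the newest disk component are scanned; the two older disk components are pruned without being touched.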

26

Some Example Use Cases

• Recent/projected use case areas include
– Behavioral science (at UCI)
– Social data analytics
– Cell phone event analytics
– Education (MOOC analytics)
– Power usage monitoring
– Public health (joint effort with UCLA)
– Cluster management log analytics

27

Behavioral Science (HCI)

• First study to use logging and biosensors to measure stress and ICT use of college students in their real-world environment (Gloria Mark, UCI Informatics)
– Focus: Multitasking and stress among “Millennials”
• Multiple data channels
– Computer logging
– Heart rate monitors
– Daily surveys
– General survey
– Exit interview

Learnings for AsterixDB:

• Nature of their analyses

• Extended binning support

• Data format(s) in and out

• Bugs and pain points

28

Social Data Analysis

(Based on 2 pilots)

The underlying AQL query is:

use dataverse twitter;
for $t in dataset TweetMessagesShifted
let $region := create-rectangle(create-point(…, …), create-point(…, …))
let $keyword := "mind-blowing"
where spatial-intersect($t.sender-location, $region)
  and $t.send-time > datetime("2012-01-02T00:00:00Z")
  and $t.send-time < datetime("2012-12-31T23:59:59Z")
  and contains($t.message-text, $keyword)
group by $c := spatial-cell($t.sender-location, create-point(…), 3.0, 3.0) with $t
return { "cell" : $c, "count": count($t) }

Learnings for AsterixDB:
• Nature of their analyses
• Real vs. synthetic data
• Parallelism (grouping)
• Avoiding materialization
• Bugs and pain points
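The grouping step of this query bins tweet locations into a grid and counts tweets per cell. A minimal sketch of that binning follows, under the assumption that `spatial-cell(point, origin, x-size, y-size)` yields the grid rectangle that contains the point; the Python function names, the tuple representation of points and rectangles, and the sample coordinates in the usage note are hypothetical.

```python
import math
from collections import Counter

def spatial_cell(point, origin, x_size, y_size):
    """Return the grid cell (x_min, y_min, x_max, y_max) that contains the point.

    The grid is anchored at `origin` and tiled with cells of x_size by y_size.
    """
    col = math.floor((point[0] - origin[0]) / x_size)
    row = math.floor((point[1] - origin[1]) / y_size)
    x_min = origin[0] + col * x_size
    y_min = origin[1] + row * y_size
    return (x_min, y_min, x_min + x_size, y_min + y_size)

def count_by_cell(points, origin, x_size, y_size):
    """Group points by their grid cell and count the points in each cell."""
    return Counter(spatial_cell(p, origin, x_size, y_size) for p in points)
```

With an origin of (0, 0) and 3.0 by 3.0 cells, the points (1, 1) and (2, 2) fall into the same cell and (4, 1) into its right-hand neighbor, which mirrors the `"cell"` and `"count"` pairs the query returns.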

29

#AsterixDB

Current Status

• 4-year initial NSF project (250+ KLOC @ UCI/UCR)
• AsterixDB BDMS is here! (Shared on June 6th, 2013)
– Semistructured “NoSQL” style data model
– Declarative (parallel) queries, inserts, deletes, …
– LSM-based storage/indexes (primary & secondary)
– Internal and external datasets both supported
– Rich set of data types (including text, time, location)
– Fuzzy and spatial query processing
– NoSQL-like transactions (for inserts/deletes)
– Data feeds and external indexes in next release
• Performance competitive (at least!) with a popular parallel RDBMS, MongoDB, and Hive (see papers)
• Now in Apache incubation mode!

30

For More Info

AsterixDB project page: http://asterixdb.ics.uci.edu

Open source code base:

• ASTERIX: http://code.google.com/p/asterixdb/

• Hyracks: http://code.google.com/p/hyracks

• (Pregelix: http://hyracks.org/projects/pregelix/)

31