Threats to IV

Data Evaluation

Objectives

►

Describe how and to what extent single-subject designs address major threats to internal validity

►

Summarize the issues concerning the standards for determining treatment effectiveness/effect size and evidence-based practices

►

Given a case description (study), evaluate the design and, if applicable, the evidence with respect to the extent it meets the single-case design standards for demonstrating a causal relation as proposed by Kratochwill et al. (2010).

Objectives

►

Calculate PND for a set of data and describe the advantages and limitations of that approach

►

Describe and evaluate arguments concerning the use of statistical approaches for meta-analysis in single-subject designs

Single Subject Design

Subject

►

The individual or “subject” is the…

Single Subject Design

Condition 2

►

Individual serves as his/her own

…

Single Subject Design

►

The outcome variable measured repeatedly within and across conditions

Evidence Standards

Meets Evidence Standards

Meets Evidence Standards with Reservations

Does Not Meet Evidence Standards

Most to Least Evidence

Meets Evidence Standards

Baseline Treatment

►

Systematic manipulation of the IV

Meets Evidence Standards

►

Systematic manipulation of the IV

Does Not Meet Evidence Standards

Meets Evidence Standards

►

Systematic measurement of the outcome variables over time

Does Not Meet Evidence Standards

Meets Evidence Standards

►

Must include 3 attempts to demonstrate an intervention effect at 3 different points in time

Meets Evidence Standards

Does NOT Meet Evidence Standards

Meets Evidence Standards: Reversal Design

►

ABAB design

4 phases

5 data points per phase

[Figure: ABAB graph with phases labeled 1 to 4]

► To meet standards with reservations:

4 phases

3 data points per phase
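The phase and data-point minimums above can be sketched as a simple check. This is a minimal illustration, not the full standard: the function name `classify_reversal_design` and its input format are assumptions made here, and it looks only at phase count and data points per phase, ignoring every other criterion (e.g., systematic manipulation of the IV).

```python
def classify_reversal_design(points_per_phase):
    """Classify a reversal (e.g., ABAB) data series using only the
    phase and data-point minimums listed on the slide.

    points_per_phase: list of ints, one entry per phase, in order.
    (Hypothetical helper; the input format is an assumption.)
    """
    # At least 4 phases are required for either rating.
    if len(points_per_phase) < 4:
        return "Does Not Meet Evidence Standards"
    fewest = min(points_per_phase)
    if fewest >= 5:
        return "Meets Evidence Standards"
    if fewest >= 3:
        return "Meets Evidence Standards with Reservations"
    return "Does Not Meet Evidence Standards"


print(classify_reversal_design([5, 5, 6, 5]))
print(classify_reversal_design([3, 4, 5, 5]))
```

The rating is driven by the shortest phase, so one thin phase lowers the rating for the whole design.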

Meets Evidence Standards: Multiple Baseline Design

►

Multiple Baseline

6 phases

5 data points per phase

[Figure: multiple baseline graph with phases labeled 1 to 6]

► To Meet standards with reservations:

6 phases

3 data points per phase
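A similar sketch for the multiple baseline minimums. It assumes each tier (participant, behavior, or setting) contributes one baseline phase and one treatment phase, so 6 phases correspond to 3 tiers; that reading, the function name `classify_multiple_baseline`, and the input format are assumptions made for illustration.

```python
def classify_multiple_baseline(tiers):
    """Classify a multiple baseline design using only the phase and
    data-point minimums listed on the slide.

    tiers: list of (baseline_points, treatment_points) tuples, one per
    tier. (Hypothetical input format; assumes two phases per tier.)
    """
    # Flatten the tiers into a single list of phase lengths.
    phases = [n for tier in tiers for n in tier]
    if len(phases) < 6:
        return "Does Not Meet Evidence Standards"
    fewest = min(phases)
    if fewest >= 5:
        return "Meets Evidence Standards"
    if fewest >= 3:
        return "Meets Evidence Standards with Reservations"
    return "Does Not Meet Evidence Standards"


print(classify_multiple_baseline([(5, 5), (6, 7), (8, 5)]))
```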

Meets Evidence Standards: Alternating Treatment Design

► Minimum of 5 repetitions of the alternating sequence

(e.g., ABABBABAABBA)

[Figure: Treatment 1 graph comparing Extinction alone and DRA across Sessions 1 to 10]

► To meet standards with reservations: Minimum of 4 repetitions of the alternating sequence (e.g., ABABBAAB)

Review Basic

Terms

&

Examine

Confounds

Terms

►

Independent Variables

Baseline Intervention

Terms

►

Independent Variables

Must take on at least 2 levels (e.g., treatment and control)

►

Dependent Variables (DVs)

►

Extraneous Variables (EVs)

Confounding variables (CVs)

EVs: Confounds

►

Confounding variable:

A variable that systematically varies with the levels of the IV

EVs

CVs Confounding Variable

[Figure: example of a confounding variable (CV): no glasses vs. glasses]

Internal Validity (IV)

What is IV?

Threats to IV?

How can we reduce IV threats?

What is Internal Validity (IV)?

The degree to which the results are attributable only to the independent variable

Internal Validity

►

Is the investigator’s conclusion correct?

►

Are the changes in the independent variable indeed responsible for the observed variation in the dependent variable?

►

Might the variation in the dependent variable be attributable to other causes?

Why is Internal Validity Important?

►

If a study shows a high degree of internal validity, then we can conclude that we have strong evidence of causality

►

If a study has low internal validity, then we must conclude that we have little or no evidence of causality

Threats to IV: Ambiguous Temporal Precedence

►

Cannot determine which variable is the cause and which is the effect

Threats to IV: Ambiguous Temporal Precedence

►

Threat is controlled by:

Actively manipulating the independent variable

Measuring the DV throughout the experiment

Threats to IV:

Selection

►

Systematic differences in participant characteristics that occur between and among conditions

Threats to IV: Selection

►

Not a big concern when using single-subject designs because:

The participant is exposed to all the conditions of the experiment

The participant serves as his/her own control

Threats to IV: Selection

►

Selection can be a problem if the:

Researcher is examining between-case intervention conditions using intact “units”

Threats to IV: Selection

Treatment A Treatment B

Threats to IV: History

►

An unplanned event that occurs during the course of a study that can affect the participants’ responses

Most important threat to single-subject designs

Threats to IV: History

History

Began in the late 1960s

Also began in the late 1960s

Threats to IV: History

►

History effects are lessened by multiple introductions of the treatment over time (e.g., ABAB)

Threats to IV: Maturation

►

Changes during an intervention are due to factors associated with the passage of time & not due to the intervention itself

Beginning of the semester

Maturation

Mid year

Threats to IV: Maturation

►

Threat is accounted for & minimized by:

Demonstrating 3 replications of the effect at 3 different points in time

Selecting an appropriate design

Using repeated measures over time

Control group

Threats to IV: Regression

►

When cases are selected because of unusually low (or high) scores, their scores on other measured variables will be less extreme

Threats to IV: Regression

►

Threat is minimized with repeated measurements
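A small simulation can make the regression artifact concrete. It is a sketch under stated assumptions: the score distributions, sample sizes, and the function name `simulate_regression` are invented for illustration, and no intervention is applied at any point.

```python
import random
import statistics


def simulate_regression(n_cases=2000, n_selected=200, seed=0):
    """Select the lowest scorers on a first measurement and compare
    their mean on a second measurement. All numbers are hypothetical."""
    rng = random.Random(seed)
    # Each case has a stable true score plus independent measurement noise.
    true_scores = [rng.gauss(50, 5) for _ in range(n_cases)]
    first = [t + rng.gauss(0, 10) for t in true_scores]
    second = [t + rng.gauss(0, 10) for t in true_scores]
    # Pick the cases with the unusually low scores on the first measurement.
    lowest = sorted(range(n_cases), key=lambda i: first[i])[:n_selected]
    mean_first = statistics.mean(first[i] for i in lowest)
    mean_second = statistics.mean(second[i] for i in lowest)
    return mean_first, mean_second


mean_first, mean_second = simulate_regression()
print(f"selected cases, first measurement:  {mean_first:.1f}")
print(f"selected cases, second measurement: {mean_second:.1f}")
```

The selected cases score higher the second time even though nothing was done to them. A single pre/post comparison could mistake that shift for a treatment effect, whereas repeated baseline measurement would show the scores settling before the intervention begins.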

Threats to IV: Attrition

►

A change in overall scores can be attributed to loss of participants

►

Attrition may be a huge threat when the loss is systematically related to the experimental conditions

Threats to IV: Attrition

►

Occurs when an:

Individual does not complete all required phases

Individual does not have enough data points in a phase

• Premature departure may render data series too short to examine variability, level, and trend
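As a rough sketch of what examining level, trend, and variability can mean numerically, the helper below summarizes one phase with the mean (level), the least-squares slope across sessions (trend), and the sample standard deviation (variability). These particular statistics, the name `describe_phase`, and the minimum of 3 points are assumptions for illustration; visual analysis may use other summaries.

```python
import statistics


def describe_phase(values):
    """Summarize one phase of session-by-session data.

    Returns level (mean), trend (least-squares slope per session), and
    variability (sample standard deviation). The 3-point minimum is an
    illustrative assumption, not a published rule.
    """
    n = len(values)
    if n < 3:
        raise ValueError("data series too short to examine level, trend, and variability")
    sessions = range(1, n + 1)
    mean_x = statistics.mean(sessions)
    mean_y = statistics.mean(values)
    # Ordinary least-squares slope of the values regressed on session number.
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in zip(sessions, values))
    denominator = sum((x - mean_x) ** 2 for x in sessions)
    return {
        "level": mean_y,
        "trend": numerator / denominator,
        "variability": statistics.stdev(values),
    }


print(describe_phase([2, 4, 6, 8, 10]))
```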

Threats to IV: Testing

►

Exposure to a test can affect scores on subsequent exposures to that test

►

Assessment process may influence study outcomes (e.g., reactivity)

Before observation During observation

Threats to IV: Testing

►

Threat is minimized with repeated measurement of the DV across phases

Threats to IV: Instrumentation

►

Any change that takes place in the measuring instrument or assessment procedure over time (e.g., observer drift/bias)

Threats to IV: Additive or Interactive

►

Threats to IV can combine to produce a bias in the study

Selection + History + Testing = Confound

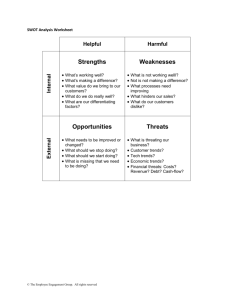

Threat: Description

Ambiguous Temporal Precedence: Cannot determine which variable is the cause and which the effect

Selection: Systematic differences in participant characteristics

History: Event other than the intervention that can influence results (e.g., changes in living arrangements, medication)

Maturation: Change that may result from maturing (e.g., growing older)

Statistical Regression: Reversion of scores toward the mean if scores are at the extremes during one assessment session

Attrition: Change in result based on participant drop out

Testing: Exposure to a test can affect scores on another test

Instrumentation: Changes based on the instrument, assessment, or observation procedure (e.g., observer drift/bias)

Additive or Interactive: A combination of the aforementioned threats

Effect Sizes

Effect Size

►

A measure of the difference between two groups

The estimated magnitude of a treatment effect

►

There are several measures (parametric and nonparametric) that can be used to calculate effect size

Effect Size: Group Design

The P value does not indicate the size of the treatment effect
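To illustrate what an effect size adds to a P value in a group design, here is a minimal sketch of Cohen's d, one common parametric measure: the difference between group means divided by the pooled standard deviation. The slides do not name a specific measure, so the choice of Cohen's d, the function name `cohens_d`, and the sample data are assumptions.

```python
import statistics


def cohens_d(treatment, control):
    """Standardized mean difference between two independent groups,
    using the pooled sample standard deviation."""
    n1, n2 = len(treatment), len(control)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_variance = (
        (n1 - 1) * statistics.variance(treatment)
        + (n2 - 1) * statistics.variance(control)
    ) / (n1 + n2 - 2)
    return (statistics.mean(treatment) - statistics.mean(control)) / pooled_variance ** 0.5


# Hypothetical scores for two small groups.
print(cohens_d([4, 5, 6], [2, 3, 4]))
```

Unlike a P value, d does not shrink or grow with sample size alone; it expresses how far apart the groups are in standard deviation units.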

Effect Size & SS Design

►

The effect size does not account for change over time

Did the effect occur in one week or two months?

►

In single-subject research, there are no agreed-upon standards for effect size estimation

►

Questions arise:

Should an effect size apply to…
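The objectives list PND (percentage of non-overlapping data) as one approach. As a hedged sketch, assuming the usual definition (the percentage of treatment-phase points that exceed the highest baseline point, or fall below the lowest when a decrease is the goal), it can be computed as follows. The function name `pnd` and the parameter `increase_expected` are illustrative, and the data are made up.

```python
def pnd(baseline, treatment, increase_expected=True):
    """Percentage of non-overlapping data between a baseline phase and
    a treatment phase.

    increase_expected: True if the intervention should raise the
    behavior, False if it should lower it.
    """
    if not baseline or not treatment:
        raise ValueError("both phases need at least one data point")
    if increase_expected:
        # Count treatment points above the most extreme (highest) baseline point.
        threshold = max(baseline)
        non_overlapping = sum(1 for y in treatment if y > threshold)
    else:
        # Count treatment points below the most extreme (lowest) baseline point.
        threshold = min(baseline)
        non_overlapping = sum(1 for y in treatment if y < threshold)
    return 100.0 * non_overlapping / len(treatment)


print(pnd([2, 3, 5, 4], [4, 6, 7, 8, 9]))   # 4 of 5 points exceed 5
print(pnd([2, 3, 10, 4], [6, 7, 8, 9]))     # one baseline outlier hides the change
```

The second call shows a known limitation: a single extreme baseline point sets the threshold, so PND can drop to 0 even when most of the treatment data sit well above the typical baseline level. PND also says nothing about how large the change is or how quickly it occurred.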

Effect Size & SS Design

Take Home Message…

There are assumptions in every statistical test

There is no agreement on how to apply these assumptions to SS designs

A few Statistical Assumptions

Data have equal variances

Independent observations

Data have a linear relationship

Populations must be normally distributed

Meta-analyses

►

Compare and contrast results from different studies to identify patterns among the results of the studies

►

Some literature addresses how best to meta-analyze single-subject designs

Meta-analyses

►

Researchers tend not to include SS research designs in their meta-analyses

Not included

What’s key here is that the methods for synthesizing these designs are diverse, and there is no consensus regarding the best method to use

Meta-analyses Limitations

►

Difficulties:

Different participants

Different dependent variables

Different (related) treatments

Different designs (e.g., AB, ABAB, ABC)

Meta-analyses Limitations

►

Existing methods are valid only if the assumptions are met

► Meta-analysis does not account for socially meaningful behavior change

►

Obtaining large sample sizes is difficult with low-incidence disabilities

Type 1 and Type 2 Errors: Group Design

►

Type 1: Say there is a difference when there is NOT

►

Type 2: Say there is no difference when there IS

Type 1 and Type 2 Errors

►

Type 1: Say there is a difference when there is NOT

Less likely

►

Type 2: Say there is no difference when there IS

More likely

Type 1 and Type 2 Errors

►

Type 1: Say there is a difference when there is NOT

►

There is a way to calculate Type 1 errors in SS design

Problem: repeated measures over time (sometimes under similar conditions, sometimes under different ones) violate the independence-of-data assumption
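One way to see the independence problem is to estimate the lag-1 autocorrelation of a series, that is, how strongly each session's score is related to the next session's score. The sketch below uses the standard sample estimator; the function name `lag1_autocorrelation` and the data are illustrative assumptions.

```python
def lag1_autocorrelation(series):
    """Sample lag-1 autocorrelation: covariance between consecutive
    observations divided by the overall sum of squared deviations."""
    n = len(series)
    if n < 2:
        raise ValueError("need at least two observations")
    mean = sum(series) / n
    denominator = sum((y - mean) ** 2 for y in series)
    if denominator == 0:
        raise ValueError("series has no variability")
    numerator = sum(
        (series[t] - mean) * (series[t + 1] - mean) for t in range(n - 1)
    )
    return numerator / denominator


# Hypothetical steadily rising series: neighboring sessions resemble each other.
print(lag1_autocorrelation([1, 2, 3, 4, 5]))
```

A value far from zero suggests that consecutive observations are not independent, which is the assumption many conventional statistical tests rely on.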