Coda

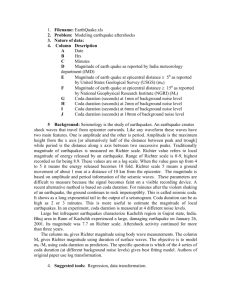


CODA: A HIGHLY AVAILABLE FILE SYSTEM FOR A DISTRIBUTED WORKSTATION ENVIRONMENT
M. Satyanarayanan, J. J. Kistler, P. Kumar, M. E. Okasaki, E. H. Siegel, D. C. Steere
Carnegie Mellon University

Paper highlights
• A decentralized distributed file system to be accessed from autonomous workstations
  – Most of these features were already present in AFS (Andrew File System)
• An optimistic mechanism to handle inconsistent updates
  – Coda does not prevent inconsistencies, it detects them

Introduction (I)
• AFS was a very successful DFS for a campus-sized user community
  – There were even plans to extend it nationwide, but the WWW took over instead
• Key ideas include
  – Close-to-open consistency
  – Callbacks

Introduction (II)
• Coda extends AFS by
  – Providing constant availability through replication
  – Introducing a disconnected mode for portable computers
    • Most lasting contribution

Hardware Model (I)
• Coda and AFS assume that client workstations are personal computers owned by their users
  – Fully autonomous
  – Cannot be trusted
• Coda allows owners of laptops to operate them in disconnected mode
  – Opposite of ubiquitous connectivity

Hardware Model (II)
• Coda later added a weak connectivity mode for portable computers linked to the Coda servers through slow links (like modems)
  – Allows for slow reintegration

Other Models
• Plan 9 and Amoeba
  – All computing is done by a pool of servers
  – Workstations are just display units
• NFS and XFS
  – Clients are trusted and always connected
• Farsite
  – Untrusted clients double as servers

Design Rationale
• Coda has three fundamental objectives
  – Scalability: to build a system that could grow without major problems
  – Fault-Tolerance: system should remain usable in the presence of server failures, communication failures and voluntary disconnections
  – Unix Emulation

Scalability
• AFS was scalable because
  – Clients cache entire files on their local disks
  – Server uses callbacks to maintain client cache coherence
    • Reduces server’s involvement at open time
• Coda keeps the same general philosophy
  – Accessibility and scalability are more important than data consistency

Accessibility
• Must handle two types of failures
  – Server failures
    • Data servers are replicated
  – Communication failures and voluntary disconnections
    • Coda uses optimistic replication and file hoarding

Optimistic replication (I)
• Pessimistic replication control protocols guarantee the consistency of replicated data in the presence of any non-Byzantine failures
  – Typically require a quorum of replicas to allow access to the replicated data
  – Would not support disconnected mode

Example
• Majority consensus voting
  – Every update must involve a majority of replicas
  – Every majority therefore contains at least one up-to-date replica
[Figure: three replicas with version numbers 2, 3 and 3]

Optimistic replication (II)
• Optimistic replication control protocols allow access in disconnected mode
  – Tolerate temporary inconsistencies
  – Promise to detect them later
  – Provide much higher data availability

UNIX sharing semantics
• Centralized UNIX file systems (and Sprite) provide one-copy semantics
  – Every modification to every byte of a file is immediately and permanently visible to all clients
• AFS uses a laxer model (close-to-open consistency)
• Coda uses an even laxer model

AFS-1 semantics
• First version of AFS
  – Revalidated the cached file on each open
  – Propagated modified files when they were closed
• If two users on two different workstations modify the same file at the same time, the user who closes the file last overwrites the changes made by the other user

Open-to-Close Semantics
• Example
[Figure: timeline. Starting from the same file F, the first client produces F’ and the second client produces F”; the second client closes last, so F” overwrites F’]

AFS-2 semantics (I)
• AFS-1 required each client to call the server every time it opened an AFS file
  – Most of these calls were unnecessary as user files are rarely shared
• AFS-2 introduces the callback mechanism
  – Do not call the server, it will call you!
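
The callback idea can be illustrated with a minimal Python sketch. It is a toy model, not AFS code: the `Server` and `Client` classes, their method names and the in-memory dictionaries are all hypothetical, and it assumes that a broken callback simply evicts the cached copy. Lost callbacks and periodic revalidation are not modelled.

```python
class Server:
    """Toy file server that remembers which clients hold a callback promise."""

    def __init__(self):
        self.files = {}      # file name -> contents
        self.callbacks = {}  # file name -> set of clients holding a promise

    def fetch(self, client, name):
        # Handing out a copy also registers a callback promise for the client.
        self.callbacks.setdefault(name, set()).add(client)
        return self.files.get(name, "")

    def store(self, writer, name, data):
        self.files[name] = data
        # Notify every other client that its cached copy is now stale.
        for client in self.callbacks.get(name, set()) - {writer}:
            client.break_callback(name)
        # Only the writer still holds a valid copy and a valid promise.
        self.callbacks[name] = {writer}


class Client:
    """Toy client that caches whole files and trusts them until notified."""

    def __init__(self, server):
        self.server = server
        self.cache = {}   # file name -> contents, valid while the promise holds
        self.fetches = 0  # number of times the server had to be contacted

    def open(self, name):
        # No server call is needed while a cached copy is still present.
        if name not in self.cache:
            self.cache[name] = self.server.fetch(self, name)
            self.fetches += 1
        return self.cache[name]

    def close(self, name, data):
        # Modified files are propagated to the server at close time.
        self.cache[name] = data
        self.server.store(self, name, data)

    def break_callback(self, name):
        # Assumption of this sketch: a broken callback evicts the cached copy.
        self.cache.pop(name, None)
```

In this model a client that opens the same unshared file repeatedly contacts the server only once, which is the workload reduction the callback mechanism aims for.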

AFS-2 semantics (II)
• When a client opens an AFS file for the first time, the server promises to notify it whenever it receives a new version of the file from any other client
  – Promise is called a callback
• Relieves the server from having to answer a call from the client every time the file is opened
  – Significant reduction of server workload

AFS-2 semantics (III)
• Callbacks can be lost!
  – Client will call the server every τ minutes to check whether it received all callbacks it should have received
  – Cached copy is only guaranteed to reflect the state of the server copy up to τ minutes before the time the client opened the file for the last time

Coda semantics (I)
• Client keeps track of the subset s of servers it was able to connect to the last time it tried
• Updates s at least every τ seconds
• At open time, client checks that it has the most recent copy of the file among all servers in s
  – Guarantee weakened by use of callbacks
  – Cached copy can be up to τ minutes behind the server copy

Server Replication
• Uses a read-one, write-all approach
• Each client has a preferred server
  – Holds all callbacks for the client
  – Answers all read requests from the client
• Client still checks with other servers to find which one has the latest version of a file
• Servers probe each other once every few seconds to detect server failures

Disconnected mode
• Client caches are managed using an LRU policy
• Coda allows the user to specify which files should always remain cached on her workstation and to assign priorities to these files
• When the workstation gets reconnected, Coda initiates a reintegration process
  – Found later that it required a fast link between workstation and servers

Conflict resolution
• Coda provides automatic resolution of simple directory update conflicts
• Other conflicts are to be resolved manually by the user

Coda semantics (II)
• Conflicts between the cached copy and the most recent copy in s can happen
  – Coda guarantees they will always be detected
• At close time, client propagates the new version of the file to all servers in s
  – If s is empty, the client marks the file for later propagation

Replica management (I)
• Emphasis here is on conflict detection
  – Coda does not prevent inconsistent updates but guarantees that inconsistent updates will always be detected
• Each client update is uniquely identified by a store ID

Replica management (II)
• Coda maintains for each replica
  – Its last store ID (LSID)
  – The length v of its update history (in other words, a version number)

Replica management (III)
• Each replication site also maintains its estimates of the version numbers vA, vB, vC of the replicas of the file held by other sites (current version vector or CVV)
• These estimates are always conservative

Example
• Three copies
  – A: LSID = 33345, v = 4, CVV = (4 4 3)
  – B: LSID = 33345, v = 4, CVV = (4 4 3)
  – C: LSID = 2235, v = 3, CVV = (3 3 3)

Replica management (IV)
• Coda compares the states of replicas by comparing their LSIDs and CVVs
• Four outcomes are possible
1. Strong equality: same LSIDs and same CVVs
  – Everything is fine

Replica management (V)
2. Weak equality: same LSIDs and different CVVs
  – Happens when one site was never notified that the other was updated
  – Must fix CVVs

Replica management (VI)
3. Dominance/Submission: LSIDs are different and every element of the CVV of one replica is greater than or equal to the corresponding element of the CVV of the other replica
• Example: two replicas A and B
  – CVVA = (4 3): A dominates B
  – CVVB = (3 3): B is dominated by A
  – A has the most recent version of the file

Replica management (VII)
4. Inconsistency: LSIDs are different, and some elements of the CVV of one replica are greater than the corresponding elements of the CVV of the other replica while others are smaller
• Example: two replicas A and B
  – CVVA = (4 2) and CVVB = (2 3): A and B are inconsistent
  – Must fix the inconsistency before allowing access to the file

Performance
• Coda is slightly slower than AFS
  – Server replication degrades performance by a few percent
  – Reliance on the Camelot transaction facility also impacts performance
  – Coda is not as well tuned as AFS
• Using multicast helps reduce this gap

Andrew Benchmark
[Figure: Andrew benchmark results]

CONCLUSION
• Coda project had an unusually long life
  – First work to introduce disconnected mode
• Coda/AFS introduced many new ideas
  – Close-to-open consistency
  – Optimistic concurrency control
  – Callbacks (partially superseded by leases)
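
Returning to the replica management slides, the four-way comparison of LSIDs and CVVs can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Replica` class and the `compare` function are hypothetical names, the LSID values used for the two-replica cases are made up, and the case of different LSIDs with identical CVVs, which the slides do not cover, is conservatively reported as an inconsistency.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Replica:
    lsid: int              # last store ID seen by this replica
    cvv: Tuple[int, ...]   # current version vector, one entry per site


def compare(a: Replica, b: Replica) -> str:
    """Classify the relative state of two replicas of the same file."""
    if a.lsid == b.lsid:
        # Same last update: only the version estimates may differ.
        return "strong equality" if a.cvv == b.cvv else "weak equality"
    a_ge_b = all(x >= y for x, y in zip(a.cvv, b.cvv))
    b_ge_a = all(y >= x for x, y in zip(a.cvv, b.cvv))
    if a_ge_b and not b_ge_a:
        return "a dominates b"
    if b_ge_a and not a_ge_b:
        return "b dominates a"
    # Either the vectors are incomparable, or (assumption of this sketch)
    # the LSIDs differ while the vectors are identical.
    return "inconsistency"


# Values taken from the three-copy example in the slides.
A = Replica(lsid=33345, cvv=(4, 4, 3))
B = Replica(lsid=33345, cvv=(4, 4, 3))
C = Replica(lsid=2235, cvv=(3, 3, 3))
```

With these values, `compare(A, B)` reports strong equality and `compare(A, C)` reports that A dominates C, so C would need to be brought up to date from A or B.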