Teaching Portfolio 1998Kevin D

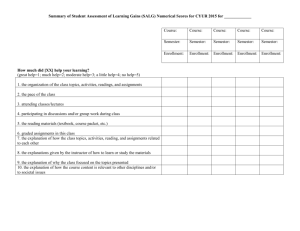

Teaching Portfolio 2010

Kevin D. Donohue
Professor
Electrical and Computer Engineering Department
University of Kentucky
Lexington, KY 40506-0046
http://www.engr.uky.edu/~donohue/

Table of Contents

Teaching Evaluation
  Changes to Portfolio since Last Review
  Philosophy and Goals
  Course Characteristics
  Teaching Performance and Outcome Assessment
    EE 101 Electrical Engineering Professions Seminar (outcome assessment)
    EE 211 Circuits I (outcome assessment)
    EE221 Circuits II (outcome assessment)
    EE222 Electrical Engineering Laboratory I
    EE307 Circuit Analysis with Applications
    EE380 Microcomputer Organization
    EE421 Signals and Systems I
    EE422 Signals and Systems II
    EE462G Electronic Circuits Laboratory (outcome assessment)
    EE 499 Senior Design (outcome assessment)
    EE 513 Audio Signals and Systems
    EE611 Deterministic Systems
    EE630 Digital Signal Processing
    EE 639 Adv. Topics in Com. and Signal Processing (outcome assessment)
Student Evaluation Summary
Advising Evaluation
Appendix A - Student Evaluations of Courses and Teaching

Teaching Evaluation

Changes to portfolio since last review: The feedback and improvement discussions have changed for EE221, EE422G, EE513, and EE611. These were the courses I taught in the last 2 years.

Philosophy and Goals: My teaching assignment consists of undergraduate and graduate courses in the electrical engineering department, with specialty courses in the signals and systems area (statistical signal processing, digital signal processing (DSP), and communication systems). These courses emphasize applying mathematical models for relating information to signals and systems, and using these models to solve engineering problems in control, communications, and signal processing. I believe my most significant activities are directed at generating excitement and genuine interest in the electrical engineering field, and instilling in students the confidence that they can contribute to the profession and change the world as others have in the past. This will hopefully motivate them to dream and set goals, work hard, and become self-learners as they deal with the challenges presented to them in the classroom. The confidence comes in meeting the challenges I create for the students. These challenges require the student to master and apply basic knowledge and skill sets as described in the course outcomes.
Projects are used to assess the more complex skills involving synthesis and to provide the student with the confidence that they can apply their skills to solve open-ended problems. I hope to introduce a history-based course on electrical engineering at some point in my career, or add it to one of the freshman courses. I think it can be made stimulating for all students, and provide knowledge of where the field has been, where it can go, and how they can be a part of it. But limitations on my own time and a crowded curriculum make this an unlikely prospect for the near future. For now I tend to intersperse my lectures with stories of some of the peculiar characters that have shaped our profession and changed the world (Faraday, Maxwell, Galvani, Franklin, Edison, Steinmetz, Tesla, Wiener, Shannon, Shockley, …).

Course Characteristics: My classroom activities are strongly influenced by student feedback, which I get directly from the students or through the teacher/course evaluations. As a result of student feedback over the years, the characteristics of my classroom are as follows. I give many quizzes throughout the course rather than a few midterms. The quizzes are graded by me and returned promptly with comments. I assign, collect, and grade homework (homework assignments are graded by the teaching assistant, if one is provided for the course). I give projects that involve students working in groups. I make class materials available on the web (see http://www.engr.uky.edu/~donohue/courses.html). I broadcast a lot of information using the class email lists that the College of Engineering computing services set up for me. I make students orally present project results or explain homework solutions to the rest of the class. In laboratory courses I emphasize experimental design skills and written explanations (how to write and communicate technical information). Therefore, I try not to provide a lot of detail in the lab assignment on how to make a measurement.
This frustrates some students; however, I am hopeful that through the struggle they will learn instrumentation and problem-solving skills applicable to broader settings. I encourage writing more concisely through the use of figures, graphs, and equations. I think by now most of my students see the value in developing their writing skills and appear to be improving at it.

Teaching Performance and Outcome Assessment

In order to determine how well students are achieving the course outcomes, I have started a system (since Spring 1999) for grading each assignment according to the outcomes listed for the course. If a single assignment has multiple outcomes, it is graded in separate components and recorded as separate components in the class spreadsheet, so each column relates to the performance of one outcome. Scores are then averaged over all students and a class grade is assigned for each outcome. This way I can track student outcome achievements against the changes I make in the classroom from semester to semester. The courses taught before 1999 just have a brief description of the course with a list of student evaluations of the teaching quality and course value for each time I taught the course.

EE 101 Electrical Engineering Professions Seminar

Subjects Covered: Professional practice, growth, conduct, ethics, computers in electrical engineering, the University computer system, careers in engineering, and professional societies. This is a one-hour seminar course (taken Pass/Fail) designed to help freshmen become familiar with the electrical engineering profession and learn basic skills for enhancing their time as undergraduates at the University of Kentucky.

Teacher/Course Evaluation (by student):

EE101 Student Ratings of Instructor and Course (Scale 1-4 with 4 being the best)

| Term | Enrollment | Value of Course | Quality of Teaching |
|---|---|---|---|
| Fall 2000 | 92 | 3.2 | 3.5 |
| Fall 2001 | 102 | 3.1 | 3.4 |
| Fall 2002 | 107 | 3.2 | 3.4 |

Course Outcome Assessment: Two evaluations of course outcomes are performed.
One is a self-evaluation that reflects the student confidence level, and the other is by the instructor, derived from the average score on course assignments related to each outcome.

Course Outcome List:

1. Know the academic requirements for an undergraduate degree in Electrical Engineering.
2. Understand ethical and professional issues associated with the electrical engineering profession.
3. Able to use word processing, spreadsheets, computer networks, literature search techniques, email, and very simple electric networks.

Student Self-Assessment: Students rated their own ability relative to each course outcome on a scale from 1 (no confidence) to 5 (most confident). The mean was taken over all students and converted to a percentage, 100*(score-1)/4, which is reported in the table below.

Table EE101 Student Confidence Level Percentage (student self-assessment)

| Semester | Respondents | Outcome 1 | Outcome 2 | Outcome 3 |
|---|---|---|---|---|
| Fall 00 | 52 | 87.5 | 85 | 90 |
| Fall 01 | 52 | - | 85 | 85 |
| Fall 02 | 35 | 82 | 80 | 77.5 |

The dropping trend in Outcome 3 is not good. I need to give more assignments associated with this outcome. I originally dropped some because I did not want the course to burden the freshmen over their more important academic subjects; however, I probably need to give smaller and possibly in-class assignments so students can gain more confidence in their abilities with these outcomes.

Assessment by Instructor: The following assessment tools were used throughout the class:

- Homework assignments
- In-class assignments

Table EE101 Student Performance Level Percentage (faculty assessed)

| Semester | Enrollments | Outcome 1 | Outcome 2 | Outcome 3 |
|---|---|---|---|---|
| Fall 00 | 91 | 50 | 75 | 75 |
| Fall 01 | 102 | - | 75 | 62.5 |
| Fall 02 | 107 | - | 87 | 87 |

Feedback and Improvement: While I felt their performance has improved, it is hard to report a finer scale with a pass/fail grading system. I can only go by the number of acceptable assignments turned in.
The greater weight of outcome assessment for this course has to be the student self-assessments rather than the faculty numbers. The increase in performance for outcome 3 in Fall 02 is most likely the result of giving fewer assignments. Student confidence level has held steady. For outcome 2, I am finding that my senior exit interviews reveal almost 100% of our students remember seeing the IEEE code of ethics. Most had seen this for the first time in EE101. From 1997 through 2000 many of our graduating seniors (more than 50%) did not recall seeing the IEEE code of ethics before. I also feed back information from the interviews I do with students on probation and from the senior interviews to help advise students on strategies for successfully completing the curriculum. I continue to provide this information to all faculty and especially those teaching EE101. Over the last 6 years we have seen over a 100% improvement in retention rates. While EE101 was not the only factor, I do think it played a significant part in this success.

EE 211 Circuits I

Subjects Covered: AC and DC analysis of linear circuits including transient and steady-state analysis. This is the students’ first technical course in electrical engineering. The focus is on fundamental circuit analysis and circuit simulation software.

Teacher/Course Evaluation (by student):

EE211 Student Ratings of Instructor and Course (Scale 1-4 with 4 being the best)

| Term | Enrollment | Value of Course | Quality of Teaching |
|---|---|---|---|
| Spring 02 | 33 | 3.6 | 3.8 |
| Fall 01 | 55 | 3.7 | 3.8 |
| Spring 01 | 27 | 3.8 | 3.9 |
| Fall 00 | 45 | 3.5 | 3.6 |
| Spring 00 | 29 | 3.6 | 3.7 |

Course Outcome Evaluation

Two evaluations of course outcomes are performed. One is a student self-evaluation that reflects the student confidence level, and the other is by the instructor, derived from the average score on course assignments related to each outcome.

Outcome list:

1. Analyze simple resistive circuits, including those containing operational amplifiers and controlled sources, with loop and nodal analysis
2. Analyze RLC circuits containing switches, independent sources, dependent sources, resistors, capacitors, inductors, and operational amplifiers for transient response using loop and nodal analysis
3. Analyze RLC circuits with sinusoidal excitation sources for steady-state response using loop and nodal analysis
4. Compute Thévenin and Norton equivalent circuits
5. Use SPICE (computer simulation package) to compute voltages, currents, transient responses, and sinusoidal steady-state responses

Student Self-Assessment: Students rated their own ability relative to each course outcome on a scale from 1 (no confidence) to 5 (most confident). The mean was taken over all students and converted to a percentage (out of 5), which is reported in the table below.

Table EE211 Student Confidence Level Percentage

| Semester | Respondents | Outcome 1 | Outcome 2 | Outcome 3 | Outcome 4 | Outcome 5 |
|---|---|---|---|---|---|---|
| Spring 00 | | | | | | |
| Fall 00 | 23 | 88 | 80 | 88 | 76 | 90 |
| Spring 01 | 17 | 90 | 76 | 82 | 82 | 90 |
| Fall 01 | 34 | 90 | 80 | 82 | 76 | 90 |
| Spring 02 | 25 | 84 | 76 | 78 | 72 | 84 |

Assessment by Instructor: The following assessment tools were used throughout the class:

- Homework assignments
- In-class and take-home quizzes
- Final exam

The complex assignments that involved multiple outcomes were graded and recorded in components. The average score was computed over all students and assessment tools related to each outcome. The percentage for each outcome is listed in the table below.

Table EE211 Student Performance Level Percentage

| Semester | Enrollment | Outcome 1 | Outcome 2 | Outcome 3 | Outcome 4 | Outcome 5 |
|---|---|---|---|---|---|---|
| Spring 00 | 29 | 81 | 74 | 75 | 48 | 81 |
| Fall 00 | 23 | 88 | 71 | 72 | 60 | 73 |
| Spring 01 | 27 | 82 | 84 | 84 | 74 | 87 |
| Fall 01 | 45 | 80 | 79 | 81 | 76 | 90 |
| Spring 02 | 29 | 85 | 80 | 71 | 83 | 78 |

Feedback and improvement: When I started to collect these statistics I realized that outcome 4 was in definite need of improvement, with a 48% average score on problems related to this outcome.
I began to focus on this component, giving more class time to it and more homework problems. As a result there has been a steady improvement in this outcome until it is now comparable to the other outcomes. Student confidence in this outcome is still low, however. This indicates that while I may have been successful in teaching the mechanics of the problem, the students lack an understanding of the purpose of the analysis, as well as confidence. I will work on this next time I teach the course. Outcomes 2 and 3 are now of my greatest concern. The next time I teach the course I will focus on better examples for the lecture. I will also work on special exercises to use during recitation.

EE221 Circuits II

Subjects covered: Transfer functions of circuits, singularity functions, differential equation representations and solutions for circuits, two-port parameter representations of circuits, and design project organization. My main addition to the course was a hearing aid design project, which I added in 1994 and which is still being used in this course to develop and evaluate student outcomes related to open-ended design and teamwork. The web pages of Drs. Gedney and Donohue describe various versions of this project. I also have a link so that students can evaluate their own hearing loss and develop the filters and amps to compensate for their own deviation from normal hearing. Recently I have been stressing the engineering notebook in preparation for senior design and for its utility in industry.
Teacher/Course Evaluation (by student):

EE221 Student Ratings of Instructor and Course (Scale 1-4 with 4 being the best)

| Term | Enrollment | Value of Course | Quality of Teaching |
|---|---|---|---|
| Fall 2010 | 34 | NAY | NAY |
| Spring 2010 | 34 | 3.5 | 3.1 |
| Fall 2008 | 19 | 3.5 | 3.4 |
| Spring 2007 | 33 | 3.2 | 3.2 |
| Spring 2005 | 43 | 3.3 | 3.1 |
| Fall 98 | 34 | 3.4 | 3.5 |
| Fall 97 (team taught, 50%) | 44 | 3.0 | 3.2 |
| Summer 97 | 19 | No Evaluation | No Evaluation |
| Fall 96 | 41 | 3.5 | 3.6 |
| Summer 96 | 19 | No Evaluation | No Evaluation |
| Spring 96 | 59 | 3.3 | 3.2 |
| Fall 95 | 61 | 3.2 | 3.3 |
| Fall 94 | 26 | 3.5 | 3.6 |
| Summer 94 | 10 | No Evaluation | No Evaluation |
| Spring 93 | 71 | 3.4 | 3.6 |
| Spring 92 | 58 | 3.5 | 3.5 |
| Fall 91 | 51 | 3.6 | 3.6 |

In 2001 the outcomes for this course changed, so materials on assessments prior to 2001 have been removed. Below are the current outcomes along with their assessments.

Course Outcome Evaluation

Two outcome evaluations are performed. One is a self-evaluation that reflects the student confidence level, and the other is by the instructor, derived from the average score on course assignments related to each outcome.

Outcome list:

1. Perform an AC steady-state power analysis on single-phase circuits.
2. Perform an AC steady-state power analysis on three-phase circuits.
3. Analyze circuits containing mutual inductance and ideal transformers.
4. Derive transfer functions (variable-frequency response) from circuits containing independent sources, dependent sources, resistors, capacitors, inductors, operational amplifiers, transformers, and mutual inductance elements.
5. Derive two-port parameters from circuits containing resistive and impedance elements.
6. Use SPICE to compute circuit voltages, currents, and transfer functions.
7. Describe a solution with functional block diagrams (top-down design approach).
8. Work as a team to formulate and solve an engineering problem.
9. Use computer programs (such as MATLAB and SPICE) for optimizing design parameters and verifying design performance.
Student Self-Assessment: Students rated their own ability relative to each course outcome on a scale from 1 (no confidence) to 5 (most confident). The mean was taken over all students and converted to a percentage (5 = 100%, 1 = 0%), which is reported in the table below.

Table EE221 Student Confidence Level Percentage (self-assessment)

| Semester | Respondents | Outcome 1 | Outcome 2 | Outcome 3 | Outcome 4 | Outcome 5 | Outcome 6 | Outcome 7 | Outcome 8 | Outcome 9 | Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Spring 05 | 34 | 82 | 77.5 | 77.5 | 77.5 | 77.5 | 77.5 | 77.5 | 77.5 | 75 | 77.72 |
| Spring 07 | 27 | 88 | 76 | 80 | 84 | 74 | 90 | 88 | 86 | 84 | 83.33 |
| Fall 08 | 19 | 90 | 67.5 | 82.5 | 87.5 | 85 | 77.5 | 85 | 87.5 | 77.5 | 82.22 |
| Spring 10 | 34 | 85 | 75 | 70 | 75 | 75 | 80 | 87.5 | 85 | 80 | 79.17 |
| Fall 10 | 34 | NAY | NAY | NAY | NAY | NAY | NAY | NAY | NAY | NAY | |
| Mean | | 86.25 | 74.00 | 77.50 | 81.00 | 77.88 | 81.25 | 84.50 | 84.00 | 79.13 | 80.61 |
| Standard Deviation | | 3.50 | 4.45 | 5.40 | 5.76 | 4.97 | 5.95 | 4.85 | 4.45 | 3.84 | 4.80 |

NAY => Not Available Yet, IP => In Progress

Assessment by Instructor: The following assessment tools were used throughout the class:

- Homework assignments
- Team-design project
- In-class and take-home quizzes
- Final exam

The complex assignments that involved multiple outcomes were graded and recorded in components. The average score was computed over all students and assessment tools related to each outcome. The percentage for each outcome is listed in the table below.
Table EE221 Student Performance Level Percentage (faculty-assessment)

| Semester | Enrollment | Outcome 1 | Outcome 2 | Outcome 3 | Outcome 4 | Outcome 5 | Outcome 6 | Outcome 7 | Outcome 8 | Outcome 9 | Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Spring 05 | 43 | 81.8 | 71.7 | 79.0 | 79.6 | 76.5 | 77.4 | 84.3 | 90.6 | 85.0 | 80.66 |
| Spring 07 | 33 | 81.4 | 76.6 | 74.3 | 80.4 | 78.8 | 78.7 | 91.1 | 94.7 | 96.3 | 83.59 |
| Fall 08 | 19 | 74.69 | 75.93 | 78.88 | 75.09 | 86.10 | 76.55 | 97.84 | 87.63 | 89.86 | 82.51 |
| Spring 10 | 34 | 73 | 75.6 | 80 | 75.6 | 80 | 87.6 | 90 | 96.5 | 90.3 | 83.18 |
| Fall 10 | 34 | 67.35 | 73.12 | 77.50 | 80.84 | 72.20 | 79.10 | 83.51 | 86.75 | 93.84 | 79.36 |
| Mean | | 78.73 | 74.63 | 77.77 | 78.53 | 78.43 | 81.23 | 88.47 | 93.93 | 90.53 | 82.16 |
| Standard Deviation | | 4.97 | 2.59 | 3.04 | 2.57 | 1.78 | 5.55 | 3.65 | 3.02 | 5.65 | 4.14 |

Feedback and Improvement: With tracking over 5 years we can identify some patterns of interest. First, it is interesting that the student assessments and faculty assessments of outcomes track closely, especially in the far right column showing the means over all the outcomes. I think this speaks to the fact that the students take a quiz every week and get weekly feedback on how they are performing before taking the final exam. The most recent faculty assessments of student outcomes show some drops in basic phasor analysis. This is for the most part review from Circuits I. Students complained that they did not cover it in Circuits I, but this was not the case. I will not make any changes based on that unless I see a trend. I have been emphasizing the project notebook more and grading more harshly, which is the reason the teamwork outcome (outcome 8) appears to be dropping. I think it was also partly due to an unusually low-level class (just the opposite of the Spring 07 class), so again I will not make changes based on that. Overall the outcome ratings remain positive. Much of the variation, I think, is explained by the natural variation in the level of the students in the classes. I am thinking of being less aggressive with the project next time so the students can focus more on the circuit-solving skills of outcomes 1-6.
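The arithmetic behind the two kinds of outcome numbers used in this portfolio can be sketched in a few lines. This is only an illustration under stated assumptions, not the actual class spreadsheet: the function names, the sample survey ratings, and the sample gradebook entries are hypothetical. The only values taken from this document are the 1-to-5 scale mapping (1 = 0%, 5 = 100%) and the EE221 outcome 1 self-assessment percentages for Spring 05 through Spring 10.

```python
from statistics import mean, stdev


def confidence_percent(ratings):
    """Map the class mean of 1-5 confidence ratings to 0-100 (1 -> 0 %, 5 -> 100 %)."""
    return 100.0 * (mean(ratings) - 1.0) / 4.0


def outcome_averages(gradebook):
    """Average the graded components recorded under each outcome.

    gradebook maps an outcome number to the percent scores of every
    assignment component (over all students) tagged with that outcome.
    """
    return {outcome: mean(scores) for outcome, scores in gradebook.items()}


# Hypothetical survey responses and gradebook entries (not real class data).
ratings_outcome_1 = [4, 5, 4, 5]
gradebook = {1: [80.0, 90.0], 4: [40.0, 56.0]}

print(confidence_percent(ratings_outcome_1))  # 87.5
print(outcome_averages(gradebook))            # {1: 85.0, 4: 48.0}

# Semester-to-semester summary for one outcome, using the EE221 outcome 1
# self-assessment percentages reported in this section.
outcome_1_by_semester = [82, 88, 90, 85]
print(mean(outcome_1_by_semester))   # 86.25
print(stdev(outcome_1_by_semester))  # 3.5 (sample standard deviation)
```

Using the sample standard deviation (dividing by n - 1) gives the same 86.25 and 3.50 that appear in the Mean and Standard Deviation rows for outcome 1 of the self-assessment table.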
EE222 Electrical Engineering Laboratory I

Subjects covered: Basic measurement and characterization of DC and AC voltages, currents, and power. Enrollment: 45 Students.

EE222 Student Ratings of Instructor and Course (Scale 1-4 with 4 being the best)

| Term | Enrollment | Value of Course | Quality of Teaching |
|---|---|---|---|
| Fall 96 | 44 | 3.2 | 3.6 |

EE307 Circuit Analysis with Applications

Subjects covered: Basic AC and DC circuit analysis techniques (mesh and nodal analysis with circuit elements comprising resistors, capacitors, inductors, op amps, and transformers). Examples in instrumentation, electro-mechanical, and power transfer circuits.

EE307 Student Ratings of Instructor and Course (Scale 1-4 with 4 being the best)

| Term | Enrollment | Value of Course | Quality of Teaching |
|---|---|---|---|
| Summer 96 | 40 | No Evaluation | No Evaluation |
| Summer 95 | 41 | No Evaluation | No Evaluation |

EE380 Microcomputer Organization

Subjects covered: General architecture of microcomputers, 8086 instruction set, and programming concepts.

EE380 Student Ratings of Instructor and Course (Scale 1-4 with 4 being the best)

| Term | Enrollment | Value of Course | Quality of Teaching |
|---|---|---|---|
| Fall 93 | 41 | 2.9 | 3.0 |
| Spring 93 | 47 | 3.3 | 3.1 |

EE421G Signals and Systems I

Subjects covered: Modeling and analysis of signals and systems using convolution, Fourier series, Fourier transform, bandwidth, basic filter design, modulation techniques, random variables and random processes, and spectral density.

EE421G Student Ratings of Instructor and Course (Scale 1-4 with 4 being the best)

| Term | Enrollment | Value of Course | Quality of Teaching |
|---|---|---|---|
| Spring 98 | 29 | 3.2 | 3.0 |
| Spring 97 | 50 | 3.1 | 3.4 |

EE422G Signals and Systems Laboratory

Prior to Fall 2007, this course was the second signals and systems course, which covered discrete-time system models, Laplace and z-transforms, system block diagrams, feedback analysis, and digital filter design.
However, after surveying employers, students, and engineers from industry in the signals and systems area, there was a general trend in the feedback for students to have more experience at translating theoretical knowledge to practical problems and implementing these concepts with programs and hardware. As a result we changed the curriculum to move probability theory out of the first signals and systems course so it could include the topics of the old EE422G course (now in EE421G), to make probability from the mathematics department a required course, and to change EE422G to a laboratory-based course where students solve problems using real and simulated data with programs such as Matlab, Simulink, and LabVIEW. This change also addressed the feedback from students expressing their desire for more experience with these software packages. The course is intended to run once a year and be a part of the elective laboratory set for electrical engineering students.

For the Fall 2007 and Spring 2008 semesters it was taught in a hybrid mode, where I lectured during lab periods for the first 4 weeks to cover the probability and discrete concepts so that students in transition (those that had the old-style EE421G) would not miss the basic materials. As a result I gave homework and quizzes on these subjects, which would not ordinarily be a part of the regular lab course. Spring 2009 will be the first time it is offered with a full set of labs. Therefore, no faculty assessments of student outcomes were performed, because the assignments and assessments were significantly different from the ones that will be implemented in the regular course. The teacher/course evaluations and student self-assessments are provided, along with the syllabus for the transition period.
EE422G Student Ratings of Instructor and Course (Scale 1-4 with 4 being the best)

| Term | Enrollment | Value of Course | Quality of Teaching |
|---|---|---|---|
| Fall 2007 | 14 | 3.4 | 3.6 |
| Spring 2008 | 14 | 3.3 | 3.4 |
| Spring 2009 | 25 | 3.2 | 3.3 |
| Fall 2009 | 17 | 3.6 | 4.0 |
| Fall 2010 | 23 | NAY | NAY |

Course Outcome Evaluation

Two evaluations for the student course outcomes are performed. One is a self-evaluation that reflects the student confidence level, and the other is by the instructor, derived from the average score on course assignments related to each outcome. The course outcomes are student abilities to:

1. Characterize random signals with correlation and probability density functions.
2. Analyze discrete-time signals with the (discrete) Fast Fourier transform.
3. Design FIR and IIR filters based on signal and noise specifications.
4. Characterize system dynamics using impulse responses, transfer functions, and state-variable representations.
5. Simulate signals and systems using modern computer software packages.
6. Design experiments to estimate signal and system model parameters from input and/or output data.
Table EE422G Student Confidence Level Percentage (student self-assessment)

| Semester | Enrollments | Outcome 1 | Outcome 2 | Outcome 3 | Outcome 4 | Outcome 5 | Outcome 6 | Mean |
|---|---|---|---|---|---|---|---|---|
| Fall 2007 | 14 | 75 | 72.5 | 72.5 | 75 | 72.5 | 72.5 | 73.33 |
| Spring 2008 | 14 | 85 | 77.5 | 77.5 | 47.5 | 82.5 | 72.5 | 73.75 |
| Spring 2009 | 25 | 62.5 | 70 | 57.5 | 57.5 | 62.5 | 57.5 | 61.25 |
| Fall 2009 | 17 | 77.5 | 77.5 | 77.5 | 72.5 | 80 | 77.5 | 77.08 |
| Fall 2010 | 23 | NAY | NAY | NAY | NAY | NAY | NAY | NAY |
| Mean | | 75.00 | 74.38 | 71.25 | 63.13 | 74.38 | 70.00 | 71.35 |
| Standard Deviation | | 9.35 | 3.75 | 9.46 | 12.97 | 8.98 | 8.66 | 8.86 |

NAY => Not Available Yet, IP => In Progress

Table EE422G Student Performance Level Percentage (faculty-assessment)

| Semester | Enrollments | Outcome 1 | Outcome 2 | Outcome 3 | Outcome 4 | Outcome 5 | Outcome 6 | Mean |
|---|---|---|---|---|---|---|---|---|
| Fall 2007 | 14 | 94.93 | 97.83 | 99.17 | 99.25 | 99.52 | 99.62 | 98.39 |
| Spring 2008 | 14 | 97.10 | 97.50 | 98.33 | 97.04 | 96.92 | 98.50 | 97.57 |
| Spring 2009 | 25 | 70.44 | 70.71 | 71.86 | 67.73 | 65.09 | 67.73 | 68.92 |
| Fall 2009 | 17 | 85.58 | 93.48 | 94.30 | 90.84 | 90.42 | 94.99 | 91.60 |
| Fall 2010 | 23 | 73.73 | 75.01 | 73.99 | 72.67 | 79.44 | 76.37 | 75.20 |
| Mean | | 76.58 | 79.73 | 80.05 | 77.08 | 78.31 | 79.70 | 78.58 |
| Standard Deviation | | 7.96 | 12.10 | 12.39 | 12.17 | 12.70 | 13.93 | 11.88 |

NAY => Not Available Yet, IP => In Progress

Feedback and Improvement: Years 2007 and 2008 were transition years, in which we changed this course to a lab course for the first time and moved the discrete and Laplace material into EE421G. So some of the lab times were devoted to lectures on the material students may have missed from having taken the “old” EE421G as a prerequisite. Also, several of the testing/evaluation assignments were geared to the EE421G outcomes in the transition time. For this reason, the means and standard deviations for each outcome of the faculty assessment (last rows of the table) start with Spring 2009. Note that starting with Spring 2009, the faculty assessments are much closer to the student self-assessments. This is good, and is primarily because assessments for the whole semester were more closely tied to the course outcomes. Spring 2009 also shows a drop in the self-assessment ratings.
This is true of the faculty assessments as well (relative to later semesters). I felt that this was because some students were not motivated to put time into the lab exercises to reinforce their knowledge. Especially with group efforts, there was a tendency for a member of a group to exploit the efforts of the others and not work as hard. This brought the group score down (with limited penalty to that member, since they were pulled up by the others). So I instituted 2 changes in Fall 2009. One was for each student to sign into the lab upon arrival. If a student was late or missing, they were penalized for not being in the lab. This motivated all the students in the group to be there. I also added a final exam to test the students' knowledge that should have been reinforced by engagement in the lab activities. This did boost both the self and faculty assessment scores. I will continue with these practices.

It is also noted that there is a large standard deviation from year to year in the faculty assessments. This is in part due to changing TAs and the grading scale they use in grading complex assignments, such as written lab reports. I could make the final worth more, but I do want the grade to reflect the significant writing effort, which is more of a team activity. Therefore, to get more uniformity on the lab exercises, I will keep a collection of sample assignments that I or past TAs have graded and give these to the new TAs so they can get a sense of a numerical scale for the grading.

EE 462G Electronic Circuits Laboratory

Subjects Covered: Experimental exercises in the design and analysis of electronic circuits incorporating transistors, zener diodes, integrated circuits, and operational amplifiers. This is now an elective lab in the electrical engineering curriculum. For students taking this lab, it is the second required lab on circuits. The first one emphasized linear circuit elements, and this one focuses on nonlinear circuit elements.
Writing and instrumentation skills are emphasized.

Teacher/Course Evaluation (by student):

EE462G Student Ratings of Instructor and Course (Scale 1-4 with 4 being the best)

| Term | Enrollment | Value of Course | Quality of Teaching |
|---|---|---|---|
| Fall 2002 | 18 | 3.5 | 3.7 |
| Spring 2003 | 45 | 3.5 | 3.8 |
| Fall 2003 | 23 | 3.5 | 3.6 |
| Summer 2004 | 15 | 3.7 | 3.9 |
| Fall 2004 | 39 | 3.5 | 3.6 |
| Summer 2005 | 8 | 4 | 4 |
| Fall 2005 | 51 | 3.4 | 3.5 |
| Spring 2006 | 26 | Lost | Lost |
| Fall 2006 | 14 | 3.7 | 3.9 |
| Spring 2007 | 30 | 3.6 | 3.7 |

Course Outcome Evaluation

Two evaluations for the student course outcomes are performed. One is a self-evaluation that reflects the student confidence level, and the other is by the instructor, derived from the average score on course assignments related to each outcome.

Outcome list:

1. Analyze circuits with nonlinear elements using semiconductor characteristics.
2. Measure relevant quantities and parameters in electronic circuits using oscilloscopes, multimeters, function generators, power supplies, and curve tracers.
3. Analyze electronic circuits with computer simulation programs (SPICE).
4. Describe an experimental procedure involving circuits with semiconductor devices.
5. Interpret experimental measurements involving circuits with semiconductor devices.

Student Self-Assessment: Students rated their own ability relative to each course outcome on a scale from 1 (no confidence) to 5 (most confident). The mean was taken over all students and converted to a percentage (5=100%, 1=0%), which is reported in the table below.
Table EE462 Student Confidence Level Percentage (student self-assessment)

| Semester | Respondents | Outcome 1 | Outcome 2 | Outcome 3 | Outcome 4 | Outcome 5 | Mean |
|---|---|---|---|---|---|---|---|
| Fall 2002 | 18 | 87.5 | 92.5 | 87.5 | 92.5 | 82.5 | 88.5 |
| Spring 2003 | 45 | 75 | 87.5 | 75 | 85 | 77.5 | 80 |
| Fall 2003 | 23 | 67.5 | 87.5 | 67.5 | 77.5 | 85 | 77 |
| Summer 2004 | 15 | 90 | 90 | 90 | 92.5 | 90 | 90.5 |
| Fall 2004 | 39 | 77.5 | 85 | 75 | 80 | 77.5 | 79 |
| Summer 2005 | 4 | 82.5 | 100 | 87.5 | 82.5 | 82.5 | 87 |
| Fall 2005 | 5 | 85 | 90 | 75 | 85 | 80 | 83 |
| Spring 2006 | Lost | | | | | | |
| Fall 2006 | 7 | 90 | 92.5 | 85 | 92.5 | 90 | 90 |
| Spring 2007 | 19 | 90 | 91.6 | 90 | 90 | 90 | 90.32 |
| Mean | | 82.78 | 90.73 | 81.39 | 86.39 | 83.89 | 85.04 |
| Standard Deviation | | 7.95 | 4.30 | 8.30 | 5.74 | 5.17 | 5.35 |

NAY => Not Available Yet, IP => In Progress

Student Self-Evaluation Data Summary

The outcome ratings range between 75 and 100 with an average rating of 85. The average class-to-class outcome ratings appear to be holding steady around the mean, with a significant dip for the Fall 2003 and Fall 2004 classes. The outcomes with the greatest fluctuations are 1 and 3, which are tied to the pre-lab assignments (circuit analysis and computer simulation). They have a standard deviation around 8, while the others have a standard deviation around 5. The last 2 times the course ran, the student confidence level was either at or above the average, within about 1 standard deviation. The Spring 2006 evaluation sheets disappeared, and it is disappointing that these data were lost. A staff member was responsible for bringing the evaluation sheets to the college office and claims they were delivered to the proper office on time.

Assessment by Instructor: The following assessment tools were used throughout the class:

- Pre-lab assignments
- In-lab data sheets
- Lab written reports
- Final lab demonstrations

The lab reports involving multiple outcomes were graded and recorded in components corresponding to each outcome. The average score was computed over all students and assessment tools related to each outcome. The percentage for each outcome is listed in the table below.
Table EE462 Student Performance Level Percentage (Faculty assessment)

                                   Course Outcome
Semester             Enrollments   1       2       3       4       5       Mean
Fall 2002            18            91      98.25   91      87.2    81.75   89.84
Spring 2003          45            74.5    96.5    80.25   97.5    88.5    87.45
Fall 2003            23            84.6    90.3    81.9    90.8    94.3    90.00
Summer 2004          15            75.5    85.75   76.25   86.25   83.9    81.53
Fall 2004            39            90.7    92.5    82.75   89      75      88.39
Summer 2005          8             88.7    87.7    93      84.4    85.8    87.92
Fall 2005            51            82.4    85      77.7    75.7    76      79.36
Spring 2006          26            84.4    91      93.1    94.9    85.1    89.70
Fall 2006            14            93.5    91.9    87.3    95.8    84.1    90.52
Spring 2007          30            86.4    89.7    95.4    95.7    81.8    89.80
Mean                               85.17   90.86   86.31   89.73   84.83   87.45
Standard Deviation                 6.36    4.25    7.26    6.71    4.79    3.86

Faculty Student Outcome Evaluation Data Summary

The outcome ratings range between 74.5 and 97.5 with an average rating of 87 and a standard deviation around 4. The average class-to-class outcome ratings appear to be holding steady around the mean, with a significant dip of more than one standard deviation for the Fall 2005 class. The outcomes with the greatest fluctuations are 3 and 4, where 3 is part of the pre-lab assignments (circuit analysis and computer simulation) and 4 deals with describing lab procedures.

Data Analysis: The average numbers for both the self and faculty performance ratings are surprisingly similar. In both cases the scores have been higher than average the last 2 times the course ran. This is encouraging and suggests that changes in instruction made along the way have made a difference. The outcomes with the greatest standard deviations (1 and 3 for self, 3 and 4 for faculty) show the most improvement. Overall there is no trend or significant difference in the student or faculty ratings that suggests a particular improvement at this time.

Feedback and improvement:

Lab infrastructure development: During my first 3 years of teaching this lab, I guided the upgrade of the oscilloscopes and curve tracer.
The curve tracer was over 18 years old and outdated, and the oscilloscopes were over 4 years old and were the oldest scopes in all our teaching labs, even having fewer features than the oscilloscopes used in the sophomore labs. In addition I managed to convince the department to put PCs at all lab stations with GPIB interfaces for automated instrumentation with a LabVIEW interface. The newer equipment has enabled new labs to be designed that involve the students more with programming and advanced data analysis. Future improvements will likely involve moving away from the GPIB interface to USB, and designing labs that require more LabVIEW programming assignments.

The outcomes started off high because the grading standard was not as rigorous the first year. The TAs do most of the grading for the lab reports, and my ability to train them is limited, due to time constraints and high variability in the TAs' backgrounds. My first priority was having students write procedures, which they seem to rank high even from the beginning. I have been working on being more general in the lab assignment instructions so the students are doing more experimental design and fewer cookbook lab assignments. These were changes to enforce a standard that I feel is important and in the students' and profession's best interest. My work to help students in their achievement of these outcomes was not all that successful at first, as indicated by the outcomes on both the instructor and student assessments in Fall 2005. Since then I have made several changes and stressed more of what I expect in the lab write-ups with the hope of improving performance, and it appears to have made a difference in improving Outcome 4.

I felt that many of the labs originally developed by Dr. Radun were high quality; however, they took more time than expected for a 2 credit hour lab for the students to complete and write up.
As a result students were skipping or doing substandard work on critical parts of the lab just to get the assignment done. Since the summer of 2004 I have reduced the number of labs and now require only one lab report and one pre-lab assignment from each team, so that students can put more effort into the writing process (one writes and the other edits or checks pre-lab work, and then they switch off for subsequent labs). I was hoping they would learn from each other better in this approach and take more time to do the assignments well. I also end the labs a couple of weeks early to have an open lab where students can come in and work on things they had questions about and prepare for the final lab practical exam. This unstructured time appears to be working, as indicated by the consistent outcome rating since then.

Another improvement over the years has been in the interpretation of the data. Students have always rated themselves relatively low. With the new equipment I have been lecturing more on computing statistics and relating that to the circuit and instrumentation properties. I shifted the experimental focus on some labs to collecting large data sets and analyzing the variability of an experimental measurement (using Matlab and LabVIEW). The quality of the measurement (procedure) can then be judged based on repeatability.

With the course in its current state, I will focus more on upgrading equipment (a new version of B2SPICE is greatly needed) and providing the students with opportunities for more unstructured time in the lab where they can pursue some of their own questions that arise from the standard experiments.

EE 499 Senior Design

Subjects Covered: A course for senior students in electrical engineering with an emphasis on the engineering design processes requiring the creative involvement of students in open-ended problems relating to actual designs that are appropriate to the profession of electrical engineering.
Student Ratings of Instructor and Course (Scale 1-4 with 4 being the best)

Term          Enrollment   Value of Course   Quality of Teaching
Spring 99     8            3.3               3.6

Spring 99 Project Description: The following design project requires a good understanding of the concepts presented in EE380, EE421, and EE422 (some of EE461). The skills most often required for typical project tasks include programming (in Matlab, C, and assembly language) and teamwork. This design project focuses on a system that processes a voiced melody and synthesizes sounds in real-time to create a harmony. The critical element for the design solution involves programming a TMS320C3x floating-point DSP chip. Subtasks include designing simple audio amps (microphone and speaker circuits), and analyzing sounds from various voices and instruments.

Course Outcomes:
1. Effectively work in groups to propose and develop engineering solutions.
2. Apply previously acquired engineering principles as well as learn new principles in solving a large engineering design problem.
3. Communicate and thoroughly document the results of an engineering design project to the engineering community using a variety of media (report, web page).

Student Self-Assessment (Spring 99):

Outcome   Students Responding   Expected Grade   Level of Confidence
1         6                     3.2              76.00%
2         6                     3.2              84.00%
3         6                     3.2              74.00%

Assessment tools: Optional questions on the teacher/course evaluation that asked the students to rate their own ability to perform each of the course outcomes.

Assessment by Instructor (Spring 99):

Outcome   Students Evaluated   Percent of total Grade   Assessment Score
1         8                    0.25                     90.37%
2         8                    0.3                      95.33%
3         8                    0.45                     88.50%

Assessment tools:
- Group written pre-proposals for solving the design problem that included a breakdown into subtasks, a timetable for completion, and division of effort among the team members
- Individual oral presentations where each person explains the overall objective of the team and their individual role in the project, including the individual tasks they need to accomplish (presentations are 50% peer rated)
- Individual engineering notebooks where the students log their work on the project
- Group presentation of the final product (demonstrate performance)
- Group written final report documenting the design and the design process

Feedback and improvement: This is the first time I am using this style of assessment and the first time I taught this course. The numbers above represent a baseline score, and more data are required to draw specific conclusions about what should be improved. Since this was my first time teaching the course, I do have quite a few ideas on how to make it better. Students liked learning about programming the DSP hardware and were frustrated that not all students got a chance to learn it (effort was divided up so that some group members were primarily working on the algorithms and supporting analog hardware). As a result I have developed a set of 5 lab experiments designed to introduce students to programming and debugging the C31 DSP chip. I will use these the next time I teach a DSP-related course using the C30.

EE 513 Audio Signals and Systems (A new course)

Subjects Covered: Audio system and signal models, analysis and design of audio systems.

I had the idea to offer a course like this for a long time. I finally followed through on it when a group of students presented me with a petition to offer a course in audio systems. With many interested students on hand, I decided to offer this course the following year in the summer so as not to burden the department with one more 500-level course in the regular year.
I also felt this course was a good idea because there are currently no senior-level courses offered in signal processing, and audio is a good application through which to teach several popular analysis and design approaches in signal processing. Signal processing makes up a significant part of the electrical engineering profession, and with the information age taking us to a place of "sensors everywhere," signal processing will continue to be an important component of the electrical engineering community.

The course was offered 3 times as an EE599 course and went through significant changes each time. I initially wanted to cover psychoacoustics and nonlinear processing, but soon realized that students were struggling with programming concepts as well as the linear filter theory that they were introduced to in the signals and systems junior-level courses. So I refocused the course on digital filter design and applied signal analysis techniques. I was able to fit in a very simple introduction to Bayesian design of classifiers and give a studio project on it that involved classifying recorded sounds or words.

In Fall 2007 the course was finally offered as an official senior elective course, EE513, but it was not listed until a week before the semester started and it conflicted with senior design, so only 4 students signed up. Because of the graduation status and schedule limitations of the students who had signed up, the department chair decided to let it run. In Spring 2008 it was offered again with 14 students registering. The faculty assessments at this point are just developing and are difficult even to use as a baseline. Below is a syllabus of the last time it was offered along with the teacher course evaluations.
Teacher/Course Evaluation (by student):

EE513 Student Ratings of Instructor and Course (Scale 1-4 with 4 being the best)

Term          Enrollment   Value of Course   Quality of Teaching
Fall 2007     4            3.7               4
Spring 2008   13           3.2               3.5
Spring 2009   9            3.9               3.8
Spring 2010   11           3.7               3.7

Course Outcome Evaluation

Two evaluations for the student course outcomes are performed. One is a self-evaluation that reflects the student confidence level and the other is by the instructor derived from the average score on course assignments related to each outcome.

Outcome list:
1. Characterize digital audio systems with difference equations and transfer functions.
2. Characterize digital audio signals with correlation functions and power spectra.
3. Design systems for processing audio data for applications such as filtering, audio effects, and signal classification.
4. Know the fundamental principles of acoustic energy generation and propagation.
5. Program with mathematics software to implement and evaluate designs.
6. Work as a team to solve multi-component problems.
Table EE513 Student Confidence Level Percentage (student self-assessment)

                                   Course Outcome
Semester             Enrollments   1       2       3       4       5       6       Mean
Fall 2007            4             75      75      87.5    37.5    75      87.5    72.92
Spring 2008          13            87.5    77.5    80      82.5    82.5    77.5    81.25
Spring 2009          9             85      77.5    77.5    72.5    85      90      81.25
Spring 2010          11            70      77.5    80      72.5    82.5    82.5    77.50
Mean                               79.38   76.88   81.25   66.25   81.25   84.38   78.23
Standard Deviation                 8.26    1.25    4.33    19.74   4.33    5.54    7.24

NAY => Not Available Yet, IP => In Progress

Table EE513 Student Confidence Level Percentage (faculty assessment)

                                   Course Outcome
Semester             Enrollments   1       2       3       4       5       6       Mean
Fall 2007            4             66.13   71.36   92.58   67.12   92.39   93.63   80.53
Spring 2008          13            85.14   88.21   84.55   83.12   83.69   84.87   84.93
Spring 2009          9             83.77   79.93   86.43   87.46   93.29   91.60   87.08
Spring 2010          11            91.70   89.51   87.81   90.76   92.37   92.78   90.82
Mean                               81.69   82.25   87.84   82.11   90.43   90.72   85.84
Standard Deviation                 10.93   8.41    3.43    10.48   4.52    3.99    4.29

NAY => Not Available Yet, IP => In Progress

Feedback and Improvement: There appears to be general agreement between the instructor's and student self-evaluations. There is a significant increase in the outcomes (with both evaluations) after the first offering. One big difference was that I required individual written reports from the group projects. I think this helped students better assimilate the knowledge and skills they learned from each studio project. Both evaluations give higher ratings to the software and teamwork outcomes (5 and 6). This is the emphasis of the course, so it does not surprise me. I probably need to give more short quizzes throughout the semester to keep them focused on their analytical skills; however, that would detract from the programming efforts. So I may try a modest increase (maybe 6 total quizzes) and see if that raises outcomes 1 through 4. In the most recent offering I did not use a textbook, since none of the books are a good fit for this course. The outcomes did go down a little after that, but it is within the standard deviations.
So I will try it one more semester to see if there is a negative result from that.

EE611 Deterministic Systems

Subjects covered: Linear system models and solutions for multiple-input-output systems, System model controllability, System model observability, State-feedback design.

Expected Student Outcomes: A student who has successfully completed this course should be able to:
1. Use transfer function and state-space representations to describe linear systems.
2. Classify systems based on their properties and descriptions, which include causality, linearity, time-invariance, continuous-time, controllability, observability, and stability.
3. Solve for system outputs given inputs, initial conditions/states, and state-space or transfer function descriptions.
4. Analyze and design state observer systems.
5. Analyze and design state feedback systems.
6. Create computer programs (such as MATLAB, Octave, or Python) to analyze and design systems.

Student Ratings of Instructor and Course (Scale 1-4 with 4 being the best)

Term          Enrollment   Value of Course   Quality of Teaching
Fall 92       37           3.5               3.7
Fall 2008     31           3.7               3.8
Fall 2009     17           3.8               3.7

EE630 Digital Signal Processing

Subjects covered: Z-transforms, Digital Filter Design, Analog-to-Digital and Digital-to-Analog Conversion, and a lab component on filter implementation on DSP hardware.

Student Ratings of Instructor and Course (Scale 1-4 with 4 being the best)

Term          Enrollment   Value of Course   Quality of Teaching
Fall 95       11           3.6               3.6
Fall 94       14           3.6               3.7
Fall 93       9            3.4               3.7

EE 639 Advanced Topics in Communications and Signal Processing

Subjects Covered: Advanced topics in signal processing and communications research and design topics of current interest. A review and extension of current literature and selected papers and reports.
Spring 2002 Subjects Covered: Statistical Modeling by Wavelets, Estimation Theory for Signal Processing and Communications, and DSP implementation
Fall 99 Subjects Covered: Statistical Modeling by Wavelets, Estimation Theory for Signal Processing and Communications
Spring 96 Subjects Covered: Modern Spectral Estimation and Optimal Filtering
Spring 95 Subjects Covered: Wavelets and Higher Order Statistics
Spring 94 Subjects Covered: Modern Spectral Estimation and Optimal Filtering

Student Ratings of Instructor and Course (Scale 1-4 with 4 being the best)

Term          Enrollment   Value of Course   Quality of Teaching
Spring 02     14           3.8               3.8
Fall 99       5            3.5               3.5
Spring 96     3            No Evaluation     No Evaluation
Spring 95     8            3.9               3.9
Spring 94     9            3.2               3.7

Course Outcomes:
1. Ability to read and critically evaluate research in selected areas of communications and signal processing
2. Ability to mathematically model communication and signal processing systems
3. Ability to design a Monte Carlo simulation for performance analysis of communications and signal processing systems
4. Ability to implement signal processing algorithms with modern hardware

Student Self-Assessment:

          Fall 99                                  Spring 02
Outcome   Students    Assessment   Percent of     Students    Assessment   Percent of
          Evaluated   Score        total Grade    Evaluated   Score        total Grade
1         5           80.66%       27.50%         7           4            10%
2         5           78.81%       45.00%         7           3.86         27.5%
3         5           79.93%       27.50%         7           4.36         27.5%
4         NA          NA           NA             7           4.14         35%

Assessment by Instructor:

          Fall 99                                  Spring 02
Outcome   Students    Assessment   Percent of     Students    Assessment   Percent of
          Evaluated   Score        total Grade    Evaluated   Score        total Grade
1         5           80.66%       27.50%         14          91%          10%
2         5           78.81%       45.00%         14          89%          27.5%
3         5           79.93%       27.50%         14          88%          27.5%
4         NA          NA           NA             14          99%          35%

Assessment tools:
- Literature review on designated topic of interest
- A Monte Carlo simulation design homework assignment
- Oral presentations explaining a result in the literature they want to challenge or confirm with a Monte Carlo simulation (presentations are peer and instructor rated)
- Written final report documenting the research and conclusions

Feedback and improvement: Between the two times I have taught this course, I added an additional outcome in response to an employer survey indicating that employers like to see graduates who know how to implement the signal processing algorithms whose theory they learn. Therefore, NA is present in the 4th outcome for the Fall 99 class. There appears to be an improvement in the Monte Carlo methods. I provided better examples and teaching material in the Spring 2002 class, since I was able to build on the material presented in previous classes. I also worked harder with the students to help them find reasonable projects to work on for the class. This may have been the reason for the increase. Overall I think that, as an advanced topics course, I try to cover too much, since we have a significant gap in our grad program for signal processing courses. It may be best to create an EE631 course as a sequel to EE630 and teach more fundamental concepts where students can focus on the intermediate theory required to tackle more advanced concepts.

Student Evaluation Summary: My student evaluations have been consistently positive over my career (ranging from 3.0 to 4.0 on a 4-point scale). This is typically equal to or greater than the college average. However, there was a relative dip in ratings between 1997 and 1999. This I believe was more a function of distractions from my increased administrative duties (Interim Chair) and decreased margins in my personal life, rather than something systematic in my teaching style.
Both sources of distraction have changed significantly, and my teaching ratings have gone back up. I take most student praise and criticism, as listed in their comments, seriously and have accommodated it when I felt it would benefit both the students and the profession in which they desire to work.

Advising Evaluation: While Director of Undergraduate Studies (DUGS) I was the advisor's advisor to the department. I advised all students who were transferring in and helped new faculty understand the curriculum and their advising responsibilities. I regularly trained new faculty and reminded existing faculty about the curriculum and their advising duties. I did not collect data to quantitatively assess my performance or the department's performance in this area. I was not aware of any consistent complaints from the faculty or the students on the way I performed my advising duties as DUGS. Since becoming a regular faculty member in 2006 I advise about 20 students every semester. I try to ask them questions on their progress and give them opportunities to ask questions that go beyond the curriculum and relate to the profession and their careers. I find it rewarding and have not performed an assessment of my job in this regard.

APPENDIX A

Student Evaluations of Courses and Teaching From Fall 2006 through Spring 2010

Following the summary table of course and teacher ratings since starting at the University of Kentucky in Fall 1991, the original student evaluations for the last 5 years are arranged in reverse chronological order (most recent first) with student comments first and then the numerical ratings.

Table 1.
Summary of courses taught with Course/Teaching Rating

EE101
Term                        Enrollment   Value of Course   Quality of Teaching
Fall 2000                   92           3.2               3.5
Fall 2001                   102          3.1               3.4
Fall 2002                   107          3.2               3.4

EE211
Term                        Enrollment   Value of Course   Quality of Teaching
Spring 02                   33           3.6               3.8
Fall 01                     55           3.7               3.8
Spring 01                   27           3.8               3.9
Fall 00                     45           3.5               3.6
Spring 00                   29           3.6               3.7

EE221
Term                        Enrollment   Value of Course   Quality of Teaching
Fall 2010                   34           IP                IP
Spring 2010                 34           3.5               3.1
Fall 2008                   19           3.5               3.4
Spring 2007                 33           3.2               3.2
Spring 2005                 43           3.3               3.1
Fall 98                     34           3.4               3.5
Fall 97 (Team Taught 50%)   44           3.0               3.2
Summer 97                   19           No Evaluation     No Evaluation
Fall 96                     41           3.5               3.6
Summer 96                   19           No Evaluation     No Evaluation
Spring 96                   59           3.3               3.2
Fall 95                     61           3.2               3.3
Fall 94                     26           3.5               3.6
Summer 94                   10           No Evaluation     No Evaluation
Spring 93                   71           3.4               3.6
Spring 92                   58           3.5               3.5
Fall 91                     51           3.6               3.6

EE222
Term                        Enrollment   Value of Course   Quality of Teaching
Fall 96                     44           3.2               3.6

EE307
Term                        Enrollment   Value of Course   Quality of Teaching
Summer 96                   40           No Evaluation     No Evaluation
Summer 95                   41           No Evaluation     No Evaluation

EE380
Term                        Enrollment   Value of Course   Quality of Teaching
Fall 93                     41           2.9               3.0
Spring 93                   47           3.3               3.1

EE421
Term                        Enrollment   Value of Course   Quality of Teaching
Spring 98                   29           3.2               3.0
Spring 97                   50           3.1               3.4

EE422
Term                        Enrollment   Value of Course   Quality of Teaching
Fall 2010                   23           IP                IP
Fall 2009                   17           3.6               4.0
Spring 2009                 25           3.5               3.3
Spring 2008                 14           3.3               3.4
Fall 2007                   14           3.4               3.6
Fall 92                     20           3.7               3.7

EE462G
Term                        Enrollment   Value of Course   Quality of Teaching
Spring 2007                 30           3.6               3.7
Fall 2006                   14           3.7               3.9
Spring 2006                 20           (lost records)    (lost records)
Fall 2005                   51           3.4               3.5
Summer 2005                 8            4                 4
Fall 2004                   39           3.5               3.6
Summer 2004                 15           3.7               3.9
Fall 2003                   23           3.5               3.6
Spring 2003                 45           3.5               3.8
Fall 2002                   18           3.5               3.7

EE499
Term                        Enrollment   Value of Course   Quality of Teaching
Spring 99                   8            3.3               3.6

EE513
Term                        Enrollment   Value of Course   Quality of Teaching
Spring 2010                 11           3.7               3.7
Spring 2009                 9            3.9               3.8
Spring 2008                 13           3.2               3.5
Fall 2007                   4            3.7               4

EE599
Term                        Enrollment   Value of Course   Quality of Teaching
Fall 2006                   15           3.8               3.9
Spring 2004                 7            3.3               3.5
Summer 2003                 14           3.7               3.9

EE611
Term                        Enrollment   Value of Course   Quality of Teaching
Fall 2009                   17           3.5               3.7
Fall 2008                   19           3.7               3.8
Fall 92                     37           3.5               3.7

EE630
Term                        Enrollment   Value of Course   Quality of Teaching
Fall 95                     11           3.6               3.6
Fall 94                     14           3.6               3.7
Fall 93                     9            3.4               3.7

EE 639
Term                        Enrollment   Value of Course   Quality of Teaching
Spring 02                   14           3.8               3.8
Fall 99                     5            3.5               3.5
Spring 96                   3            No Evaluation     No Evaluation
Spring 95                   8            3.9               3.9
Spring 94                   9            3.2               3.7