Cimple 1.0: A Community Information Management Workbench

Pedro DeRose

University of Wisconsin-Madison

Preliminary Examination

The CIM Problem

Numerous online communities

database researchers, movie fans, legal professionals, bioinformatics, enterprise intranets, tech support groups

Each community = many data sources + many members

Database community

home pages, project pages, DBworld, DBLP, conference pages...

Movie fan community

review sites, movie home pages, theatre listings...

Legal profession community

law firm home pages

2

The CIM Problem

Members often want to discover, query, and monitor information in the community

Database community

what is new in the past week in the database community?

any interesting connection between researchers X and Y?

find all citations of this paper on the Web in the past week

what are current hot topics? who has moved where?

Legal profession community

which lawyers have moved where?

which law firms have taken on which cases?

Solving this problem is becoming increasingly crucial

initial efforts at UW, Y!R, MSR, Washington, IBM Almaden

3

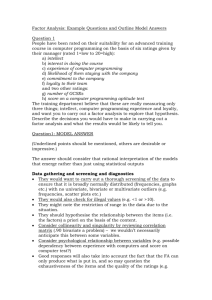

Planned Contributions of Thesis

1. Decompose the overall CIM problem

[IEEE 06, CIDR 07, VLDB 07]

[Figure: CIM decomposition. Data sources (web pages) are crawled each day (Day 1 … Day n) and turned into ER graphs, e.g., HV Jagadish served in SIGMOD-07. The graphs feed a community portal offering user services (keyword search, query, browse, mine …). Two further components are leveraging the community and incremental expansion.]

4

Planned Contributions of Thesis

2. Provide concrete solutions to key sub-problems

• creating ER graphs: key novelty is composing plans from operators [VLDB 07]

• facilitates developing, maintaining, and optimizing

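[Figure: example plans composed from operators. Entity plan operators: CreateE, MatchMStrict, MatchMbyName, ExtractMbyName (twice), and Union; inputs: {s1 … sn} \ DBLP and DBLP. Relationship plan operators: CreateR, c(person, label), and ExtractLabel; inputs: main pages, conference entities, and person entities.]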

• leveraging communities: wiki solution with key novelties [ICDE 08]

• combines contributions from both humans and machines

• combines both structured and text contributions

5

Planned Contributions of Thesis

3. Capture solutions in the Cimple 1.0 workbench [VLDB 07]

• empty portal shell, including basic services and admin tools

• set of general operators, and means to compose them

• simple implementation of operators

• end-to-end development methodology

• extraction/integration plan optimizers

• developers can employ Cimple tools to quickly build and maintain portals

• will release publicly to drive research and evaluation in CIM

6

Planned Contributions of Thesis

4. Evaluate solutions and workbench on several domains

• use Cimple to build portals for multiple communities

• evaluate ease of development, extensibility, and accuracy of portal

• have built DBLife, a portal for the database community [CIDR 07, VLDB 07]

• will build a second portal for a non-research domain (e.g., movies, NFL)

7

Planned Thesis Chapters

Selecting initial data sources

Creating the daily ER graph

Merging daily graphs into the global ER graph

Incrementally expanding sources and data

Leveraging community members

Developing the Cimple 1.0 workbench

Evaluating the solutions and workbench

8

Selecting Initial Sources

Current solutions often use a "shotgun" approach

select as many potentially relevant sources as possible

lots of noisy sources, which can lower accuracy

Communities often show an 80-20 phenomenon

small set of sources already covers 80% of interesting activity

Select these 20% of sources

e.g., for database community, sites of prominent researchers,

conferences, departments, etc.

Can incrementally expand later

semi-automatically or mass collaboration

Crawl sources periodically

e.g., DBLife crawls ~10,000 pages (+160 MB) daily

9

Creating the ER Graph – Current Solutions

Manual

e.g., DBLP

require a lot of human effort

Semi-automatic, but domain-specific

e.g., Yahoo! Finance, Citeseer

difficult to adapt to new domains

[Figure: example ER graph fragment, HV Jagadish served in SIGMOD-07]

Semi-automatic and general

many solutions from the database, WWW, and Semantic Web communities,

e.g., Rexa, Libra, Flink, Polyphonet, Cora, Deadliner

often use monolithic solutions, e.g., learning methods such as CRFs

require little human effort

can be difficult to tailor to individual communities

10

Proposed Solution: A Compositional Approach

[Figure: compositional approach in two stages. Discover Entities: web pages yield entities such as HV Jagadish and SIGMOD-07, using the operators CreateE, MatchMStrict, MatchMbyName, ExtractMbyName (twice), and Union over {s1 … sn} \ DBLP and DBLP. Discover Relationships: entities are linked, e.g., HV Jagadish served in SIGMOD-07, using CreateR, c(person, label), and ExtractLabel over main pages, conference entities, and person entities.]

11

Benefits of the Proposed Solution

Easier to develop, maintain, and extend

e.g., 2 students needed less than 1 week to create plans for initial DBLife

Provides opportunities for optimization

e.g., extraction and integration plans allow for plan rewriting

Can achieve high accuracy with relatively simple operators

by exploiting community properties

e.g., finding people names on seminar pages yields talks with 88% F1

12

Creating Plans to Discover Entities

[Figure: default entity plan. From bottom to top: Union over sources s1 … sn, then ExtractM, MatchM, and CreateE; example entity: Raghu Ramakrishnan.]

13

Creating Plans to Discover Entities (cont.)

These operators address well-known problems

mention recognition, entity disambiguation...

many sophisticated solutions

In DBLife, we find simple implementations can already work surprisingly well

often easy to collect entity names from community sources (e.g., DBLP)

ExtractMByName: finds variations of names

entity names within a community are often unique

MatchMByName: matches mentions by name

these simple methods work with 98% F1 in DBLife

[Figure: entity plan with CreateE, MatchM, ExtractM, and Union over s1 … sn]
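The two simple operators named above can be sketched as follows. This is a minimal illustration, not the DBLife implementation: the function names, the tuple layout, and the example page URL are assumptions, and only the two name-variation patterns are taken from the operator output shown elsewhere in this deck.

```python
import re
from collections import defaultdict

# Root name -> regex covering its name variations (dictionary layout is assumed).
NAME_VARIATIONS = {
    "Jeffrey F. Naughton": r"(Jeffrey|Jefferson|Jeff)\s+Naughton",
    "Guy M. Lohman": r"Guy\s+Lohman",
}

def extract_mentions_by_name(pages, variations):
    """Return (root_name, url, start, end, matched_text) for every variation hit.

    `end` is the index of the last matched character (inclusive).
    """
    mentions = []
    for url, text in pages.items():
        for root, pattern in variations.items():
            for m in re.finditer(pattern, text):
                mentions.append((root, url, m.start(), m.end() - 1, m.group(0)))
    return mentions

def match_mentions_by_name(mentions):
    """Group mentions by root name, assuming names are unique in the community."""
    groups = defaultdict(list)
    for mention in mentions:
        groups[mention[0]].append(mention)
    return dict(groups)

# Hypothetical crawled page.
pages = {"http://example.org/cfp.txt": "8. Jeff Naughton, U. Wisc. 9. Guy Lohman, IBM Almaden"}
groups = match_mentions_by_name(extract_mentions_by_name(pages, NAME_VARIATIONS))
```

Each resulting group would then be handed to an entity-creation step such as CreateE.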

14

Extending Plans to Handle Difficult Spots

[Figure: source-aware entity plan with operators CreateE, MatchMStrict, MatchMbyName, ExtractMbyName (twice), and Union, over inputs {s1 … sn} \ DBLP and DBLP.]

DBLP: Chen Li

···

41. Chen Li, Bin Wang, Xiaochun Yang. VGRAM. VLDB 2007.

···

38. Ping-Qi Pan, Jian-Feng Hu, Chen Li. Feasible region contraction. Applied Mathematics and Computation.

···

Can decide which operators to apply where

e.g., stricter operators over more ambiguous data

Provides optimization opportunities

similarly to relational query plans

see ICDE-07 for a way to optimize such plans
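One way to read "stricter operators over more ambiguous data" is sketched below. The slides do not define MatchMStrict, so the rule used here (same name and at least one shared co-author) is an assumption, as are the dictionary fields and the decision to keep the DBLP and non-DBLP groups separate. The grouping is a simple single pass, not a full transitive closure.

```python
from collections import defaultdict

def match_by_name(mentions):
    """Group mentions purely by name."""
    groups = defaultdict(list)
    for m in mentions:
        groups[m["name"]].append(m)
    return list(groups.values())

def match_strict(mentions):
    """Assumed stricter matcher: same name AND at least one shared co-author."""
    groups = []
    for m in mentions:
        for g in groups:
            if any(m["name"] == o["name"] and m["coauthors"] & o["coauthors"] for o in g):
                g.append(m)
                break
        else:
            groups.append([m])  # no compatible group found, start a new one
    return groups

def source_aware_plan(mentions):
    """Apply the strict matcher to DBLP mentions and the simple one elsewhere."""
    dblp = [m for m in mentions if m["source"] == "DBLP"]
    rest = [m for m in mentions if m["source"] != "DBLP"]
    return match_strict(dblp) + match_by_name(rest)

mentions = [
    {"name": "Chen Li", "source": "DBLP", "coauthors": {"Bin Wang", "Xiaochun Yang"}},
    {"name": "Chen Li", "source": "DBLP", "coauthors": {"Ping-Qi Pan", "Jian-Feng Hu"}},
    {"name": "Chen Li", "source": "homepage", "coauthors": set()},
    {"name": "Chen Li", "source": "homepage", "coauthors": set()},
]
entity_groups = source_aware_plan(mentions)
```

In this toy input the two DBLP entries for Chen Li stay apart because they share no co-author, while the homepage mentions are merged by name alone.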

15

Creating Plans to Discover Relationships

Categorize relations into general classes

e.g., co-occur, label, neighborhood…

Then provide operators for each class

e.g., ComputeCoStrength, ExtractLabels, neighborhood selection…

And compose them into a plan for each relation type

makes plans easier to develop

plans are relatively simple to understand

can easily add new plans for new relation types

16

Illustrating Example: Co-occur

To find affiliated(person, org) relationship...

e.g., affiliated(Raghu, UW Madison), affiliated(Raghu, Yahoo! Research)

categorized as a co-occur relationship

...compose a simple co-occur plan

[Figure: co-occur plan. From top to bottom: CreateR, Select (strength > θ), ComputeCoStrength, and × over person entities, org entities, and the Union of s1 … sn.]

This simple plan already finds affiliations with 80% F1
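The co-occur plan can be sketched in a few lines. The slides do not say how ComputeCoStrength scores a pair, so the measure below (the fraction of pages mentioning the person that also mention the organization) is an assumption, as are the function names, the sample pages, and the threshold value.

```python
from itertools import product

def compute_co_strength(person, org, pages):
    """Assumed strength: share of pages mentioning the person that also mention the org."""
    with_person = [p for p in pages if person in p]
    if not with_person:
        return 0.0
    return sum(org in p for p in with_person) / len(with_person)

def affiliated_plan(persons, orgs, pages, theta):
    """CreateR over Select(strength > theta) over ComputeCoStrength over persons x orgs."""
    return [
        ("affiliated", person, org)
        for person, org in product(persons, orgs)
        if compute_co_strength(person, org, pages) > theta
    ]

# Hypothetical page texts.
pages = [
    "Raghu Ramakrishnan, Yahoo! Research, gave a keynote",
    "Raghu Ramakrishnan (UW Madison) and Yahoo! Research",
    "Seminar at UW Madison",
]
relations = affiliated_plan(
    ["Raghu Ramakrishnan"], ["UW Madison", "Yahoo! Research", "Purdue"], pages, theta=0.4
)
```

Raising `theta` trades recall for precision, which is the knob the Select operator exposes.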

17

Illustrating Example: Label

Plan for served-in(person, conf)

[Figure: label plan with operators CreateR, c(person, label), and ExtractLabel, over main pages, conference entities, and person entities.]

Example main page: ICDE'07 Istanbul Turkey

General Chair

• Ling Liu

• Adnan Yazici

Program Committee Chairs

• Asuman Dogac

• Tamer Ozsu

• Timos Sellis

Program Committee Members

• Ashraf Aboulnaga

• Sibel Adali

…
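A label plan for served-in(person, conf) might look like the sketch below. It assumes that a person serves under the closest label line above their name on the conference main page; the slides do not spell out the ExtractLabel logic, and the function name and tuple layout are hypothetical.

```python
LABELS = ["General Chair", "Program Committee Chairs", "Program Committee Members"]

def extract_label_relations(conference, page_lines, persons, labels=LABELS):
    """Pair each known person name with the closest label line above it."""
    relations = []
    current_label = None
    for line in page_lines:
        text = line.strip().lstrip("•").strip()  # drop bullet and whitespace
        if text in labels:
            current_label = text
        elif text in persons and current_label is not None:
            relations.append(("served-in", text, conference, current_label))
    return relations

page_lines = [
    "General Chair", "• Ling Liu", "• Adnan Yazici",
    "Program Committee Chairs", "• Asuman Dogac", "• Tamer Ozsu", "• Timos Sellis",
    "Program Committee Members", "• Ashraf Aboulnaga", "• Sibel Adali",
]
persons = {"Ling Liu", "Adnan Yazici", "Asuman Dogac", "Tamer Ozsu",
           "Timos Sellis", "Ashraf Aboulnaga", "Sibel Adali"}
served_in = extract_label_relations("ICDE'07", page_lines, persons)
```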

18

Illustrating Example: Neighborhood

Plan for gave-talk(person, venue)

[Figure: neighborhood plan with operators CreateR and c(person, neighborhood), over seminar pages, org entities, and person entities.]

Example seminar page: UCLA Computer Science Seminars

Title: Clustering and Classification

Speaker: Yi Ma, UIUC

Contact: Rachelle Reamkitkarn

Title: Mobility-Assisted Routing

Speaker: Konstantinos Psounis, USC

Contact: Rachelle Reamkitkarn

…
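A neighborhood plan could be approximated as below. The cue word "Speaker:" and the character window are assumptions made for illustration; the slides only state that the relation is derived from the neighborhood of a person mention on seminar pages.

```python
import re

def gave_talk_plan(venue, page_text, persons, cue="Speaker:", window=25):
    """Relate a person to the venue if the full name occurs within `window`
    characters after a cue word (assumed heuristic)."""
    relations = []
    for cue_match in re.finditer(re.escape(cue), page_text):
        neighborhood = page_text[cue_match.end():cue_match.end() + window]
        for person in persons:
            if person in neighborhood:
                relations.append(("gave-talk", person, venue))
    return relations

page = (
    "Title: Clustering and Classification\n"
    "Speaker: Yi Ma, UIUC\n"
    "Contact: Rachelle Reamkitkarn\n"
    "Title: Mobility-Assisted Routing\n"
    "Speaker: Konstantinos Psounis, USC\n"
    "Contact: Rachelle Reamkitkarn\n"
)
persons = ["Yi Ma", "Konstantinos Psounis", "Rachelle Reamkitkarn"]
talks = gave_talk_plan("UCLA", page, persons)
```

The narrow window keeps the contact person out of the result even though her name appears on the same page, which is the kind of community property the plan exploits.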

19

Creating Daily ER Graphs

[Figure: pipeline overview highlighting the Daily ER Graph stage. Data sources (web pages) for Day 1 … Day n produce daily ER graphs, e.g., HV Jagadish served in SIGMOD-07, which are combined into the Global ER Graph.]

20

Merging Daily ER Graphs

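[Figure: merging. On Day n the global ER graph contains AnHai gave talk at UIUC. The daily ER graph for Day n+1 contains AnHai, UIUC, and AnHai gave talk at Stanford. A Match step aligns daily entities with global ones, and an Enrich step adds the new Stanford talk, so the resulting global ER graph holds both talks.]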
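The Match and Enrich steps can be sketched with plain sets. Matching entities by identical name is an assumption made for brevity; the graph layout (a dictionary of entity names and relation triples) is also hypothetical.

```python
def merge_daily_graph(global_graph, daily_graph):
    """Match daily entities to global ones by name, then enrich the global graph."""
    entities = set(global_graph["entities"])
    relations = set(global_graph["relations"])
    for entity in daily_graph["entities"]:
        # Match: an identical name is treated as the same entity (assumed rule);
        # unmatched entities are added as new ones.
        entities.add(entity)
    for relation in daily_graph["relations"]:
        # Enrich: keep relations that were not in the global graph yet.
        relations.add(relation)
    return {"entities": entities, "relations": relations}

global_graph = {
    "entities": {"AnHai", "UIUC"},
    "relations": {("gave talk", "AnHai", "UIUC")},
}
daily_graph = {
    "entities": {"AnHai", "UIUC", "Stanford"},
    "relations": {("gave talk", "AnHai", "UIUC"), ("gave talk", "AnHai", "Stanford")},
}
merged = merge_daily_graph(global_graph, daily_graph)
```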

21

Cimple Workflow

[Figure: Cimple workflow. Steps read and write files on a blackboard:]

datasources.xml (source URLs, http://…) → Crawler → index.xml, crawledPages/…

Discover Entities: one plan per entity type (Person, Publication, …), each composed of ExtractM, MatchM, and CreateE → entities.xml

Discover Relationships → relationships.xml

Merge ER Graphs → globalGraph.xml

Services: superhomepages, browsing, search

22

Example Plan Specification

discoverPeopleEnities.cfg

    # Look for names in pages and mark them as mentions
    EXTRACT_MENTIONS = tasks/getMentions/extractMentions
    $EXTRACT_MENTIONS PerlSearch $CRAWLER->index.xml $NAME_VARIATIONS

    # Match mentions
    MATCH_MENTIONS = tasks/getEntities/matchMentions
    $MATCH_ENTITIES RootNames $EXTRACT_MENTIONS->mentions.xml

    # Create entities
    CREATE_ENTITIES = tasks/getEntities/createEntities
    $CREATE_ENTITIES RootNames $MATCH_ENTITIES->mentionGroups.xml

ExtractMentions.pl

    #!/usr/bin/perl
    ###################################################################
    # Arguments: <moduleDir> <fileIndex> <variationsFile>
    #   moduleDir: the relative path to the module
    #   fileIndex: the file index of crawled files
    #   variationsFile: a file containing mention name variations
    #
    # Finds mentions in crawled files by searching for name variations
    ###################################################################
    use dblife::utils::CrawledDataAccess;
    use dblife::utils::OutputAccess;

    # First get arguments
    my ($moduleDir, $fileIndex, $variationsFile) = @ARGV;

    # Parse the crawled file index for info by URL
    my %urlToInfo;
    open(FILEINDEX, "< $fileIndex");
    while(<FILEINDEX>) {
        if(/^<file>/) { ... }
        elsif(/^<\/file>/) { ... }
        ...
    }
    close(FILEINDEX);

    # Output as we go
    open(OUT, "> $moduleDir/output/output.xml");
    ...
    # Search through crawled file for variations
    foreach my $url (keys %urlToInfo) { ... }
    ...
    close(OUT);

23

Example Operator APIs

extractMentions/output.xml

    <mention id="mention-537390">
      <mentionType>people</mentionType>
      <rootName>Jeffrey F. Naughton</rootName>
      <nameVariation>(Jeffrey|Jefferson|Jeff)\s+Naughton</nameVariation>
      <filepath>www.cse.uconn.edu/icde04/icde04cfp.txt</filepath>
      <url>http://www.cse.uconn.edu/icde04/icde04cfp.txt</url>
      <mentionLeftContextLength>100</mentionLeftContextLength>
      <mentionRightContextLength>100</mentionRightContextLength>
      <mentionStartPos>3786</mentionStartPos>
      <mentionEndPos>3798</mentionEndPos>
      <mentionMatch>Jeff Naughton</mentionMatch>
      <mentionWindow> PROGRAM CHAIR 6. Mary Fernandez, AT&T Gail Mitchell, BBN Technologies 7. Ling Liu, Georgia Tech 8. Jeff Naughton, U. Wisc. SEMINAR CHAIR 9. Guy Lohman, IBM Almaden Mitch Cherniack, Brandeis U. 10. Panos Chrysanth</mentionWindow>
    </mention>
    <mention id="mention-1143">
      <mentionType>people</mentionType>
      <rootName>Guy M. Lohman</rootName>
      <nameVariation>Guy\s+Lohman</nameVariation>
      <filepath>www.cse.uconn.edu/icde04/icde04cfp.txt</filepath>
      <url>http://www.cse.uconn.edu/icde04/icde04cfp.txt</url>
      <mentionLeftContextLength>100</mentionLeftContextLength>
      <mentionRightContextLength>100</mentionRightContextLength>
      <mentionStartPos>3850</mentionStartPos>
      <mentionEndPos>3859</mentionEndPos>
      <mentionMatch>Guy Lohman</mentionMatch>
      <mentionWindow>il Mitchell, BBN Technologies 7. Ling Liu, Georgia Tech 8. Jeff Naughton, U. Wisc. SEMINAR CHAIR 9. Guy Lohman, IBM Almaden Mitch Cherniack, Brandeis U. 10. Panos Chrysanthis, U. Pittsburgh 11. Aidong Zhang, SU</mentionWindow>
    </mention>

matchMentions/output.xml

    <mentionGroup>
      <mention>mention-545376</mention>
      <mention>mention-2510</mention>
      <mention>mention-2511</mention>
      <mention>mention-2512</mention>
    </mentionGroup>
    <mentionGroup>
      <mention>mention-537390</mention>
      <mention>mention-537678</mention>
      <mention>mention-538967</mention>
      <mention>mention-539718</mention>
      <mention>mention-539973</mention>
      <mention>mention-539974</mention>
      <mention>mention-540184</mention>
      <mention>mention-540265</mention>
      <mention>mention-554093</mention>
      <mention>mention-540732</mention>
      <mention>mention-540733</mention>
      <mention>mention-541072</mention>
      <mention>mention-541073</mention>
      <mention>mention-541964</mention>
      <mention>mention-542572</mention>
      <mention>mention-198996</mention>
      <mention>mention-543725</mention>
      <mention>mention-544495</mention>
      <mention>mention-545057</mention>
      ...
    </mentionGroup>

createEntities/output.xml

    <entity id="entity-1979">
      <entityName>Daniel Urieli</entityName>
      <entityType>people</entityType>
      <mention>mention-545376</mention>
      <mention>mention-2510</mention>
      <mention>mention-2511</mention>
      <mention>mention-2512</mention>
    </entity>
    <entity id="entity-1980">
      <entityName>Jeffrey F. Naughton</entityName>
      <entityType>people</entityType>
      <mention>mention-537390</mention>
      <mention>mention-537678</mention>
      <mention>mention-538967</mention>
      <mention>mention-539718</mention>
      <mention>mention-539973</mention>
      <mention>mention-539974</mention>
      <mention>mention-540184</mention>
      <mention>mention-540265</mention>
      <mention>mention-554093</mention>
      <mention>mention-540732</mention>
      <mention>mention-540733</mention>
      <mention>mention-541072</mention>
      <mention>mention-541073</mention>
      <mention>mention-541964</mention>
      <mention>mention-542572</mention>
      <mention>mention-198996</mention>
      <mention>mention-543725</mention>
      <mention>mention-544495</mention>
      <mention>mention-545057</mention>
      ...
    </entity>
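Because operators communicate through XML files like these, a downstream operator only needs a small reader. The sketch below loads mention records with Python's standard `xml.etree.ElementTree`; the `<mentions>` wrapper element and the function name are assumptions, since the slide shows only individual records.

```python
import xml.etree.ElementTree as ET

# Trimmed sample in the shape of extractMentions/output.xml; the root
# element <mentions> is assumed, as the slide shows only the records.
SAMPLE = """<mentions>
  <mention id="mention-1143">
    <mentionType>people</mentionType>
    <rootName>Guy M. Lohman</rootName>
    <mentionStartPos>3850</mentionStartPos>
    <mentionEndPos>3859</mentionEndPos>
    <mentionMatch>Guy Lohman</mentionMatch>
  </mention>
</mentions>"""

def load_mentions(xml_text):
    """Map each mention id to a dictionary of its child element texts."""
    root = ET.fromstring(xml_text)
    return {
        node.get("id"): {child.tag: child.text for child in node}
        for node in root.iter("mention")
    }

mentions = load_mentions(SAMPLE)
lohman = mentions["mention-1143"]
# Start and end positions are inclusive, so the span length equals the match length.
span_length = int(lohman["mentionEndPos"]) - int(lohman["mentionStartPos"]) + 1
```

Note that a real file containing a raw `&` (as in "AT&T") would need escaping before a strict XML parser accepts it.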

24

Experimental Evaluation: Building DBLife

Initial DBLife (May 31, 2005)

| Component | Details | Time |
|---|---|---|
| Data Sources (846) | researcher homepages (365), department/organization homepages (94), conference homepages (30), faculty hubs (63), group pages (48), project pages (187), colloquia pages (50), event pages (8), DBWorld (1), DBLP (1) | 2 days, 2 persons |
| Core Entities (489) | researchers (365), department/organizations (94), conferences (30) | 2 days, 2 persons |
| Operators | DBLife-specific implementation of MatchMStrict | 1 day, 1 person |
| Relation Plans (8) | authored, co-author, affiliated with, gave talk, gave tutorial, in panel, served in, related topic | 2 days, 2 persons |

Maintenance and Expansion

| Task | Details | Time |
|---|---|---|
| Data Source Maintenance | adding new sources, updating relocated pages, updating source metadata | 1 hour/month, 1 person |

Current DBLife (Mar 21, 2007)

| Component | Details |
|---|---|
| Data Sources (1,075) | researcher homepages (463), department/organization homepages (103), conference homepages (54), faculty hubs (99), group pages (56), project pages (203), colloquia pages (85), event pages (11), DBWorld (1), DBLP (1) |
| Mentions (324,188) | researchers (125,013), departments/organizations (30,742), conferences (723), publications (55,242), topics (112,468) |
| Entities (16,674) | researchers (5,767), departments/organizations (162), conferences (232), publications (9,837), topics (676) |
| Relation Instances (63,923) | authored (18,776), co-author (24,709), affiliated with (1,359), served in (5,922), gave talk (1,178), gave tutorial (119), in panel (135), related topic (11,725) |

25

Relatively Easy to Deploy, Extend, and Debug

DBLife has been deployed and extended by several developers

CS at IL, CS at WI, Biochemistry at WI, Yahoo! Research

development started after only a few hours of Q&A

Developers quickly grasped our compositional approach

easily zoomed in on target components

could quickly tune, debug, or replace individual components

e.g., a new student extended the ComputeCoStrength operator and added the "affiliated" plan in just a couple of days

26

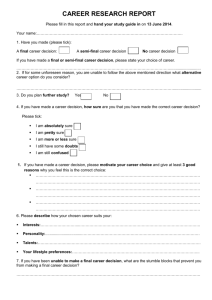

Accuracy in DBLife

Mean accuracy over 20 randomly chosen researchers

| Experiment | Mean Recall | Mean Precision | Mean F1 |
|---|---|---|---|
| Extracting mentions with ExtractMByName | 0.99 | 0.98 | 0.98 |
| Discovering entities with default plan | 1.00 | 0.96 | 0.98 |
| Discovering entities with source-aware plan | 0.97 | 0.99 | 0.98 |
| Finding "authored" relations (DBLP plan) | 0.76 | 0.98 | 0.84 |
| Finding "affiliated" relations (co-occurrence) | 0.85 | 0.83 | 0.80 |
| Finding "served in" relations (labels) | 0.84 | 0.81 | 0.77 |
| Finding "gave talk" relations (neighborhood) | 0.87 | 1.00 | 0.88 |
| Finding "gave tutorial" relations (labels) | 0.90 | 1.00 | 0.92 |
| Finding "on panel" relations (labels) | 0.95 | 0.92 | 0.89 |
| Matching entities across daily graphs | 1.00 | 1.00 | 1.00 |
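The slides do not state how the means were computed. Assuming each metric is computed per researcher and then averaged, the calculation would look like the sketch below; under that assumption the mean F1 need not equal the harmonic mean of the mean recall and mean precision. The function names and toy data are hypothetical.

```python
def precision_recall_f1(predicted, gold):
    """Standard set-based precision, recall, and F1 for one researcher."""
    predicted, gold = set(predicted), set(gold)
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)

def mean_scores(per_researcher):
    """Average precision, recall, and F1 over (predicted, gold) pairs."""
    scores = [precision_recall_f1(p, g) for p, g in per_researcher]
    return tuple(sum(column) / len(scores) for column in zip(*scores))

# Toy data: two researchers with different error profiles.
per_researcher = [({"a", "b"}, {"a"}), ({"c"}, {"c", "d"})]
mean_p, mean_r, mean_f1 = mean_scores(per_researcher)
```

Here the mean precision and mean recall are both 0.75, yet the mean F1 is about 0.67.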

27

Proposed Future Work: Data Model

Requirements

relatively easy to understand and manipulate for non-technical users

temporal dimension to represent changing data

Candidate: Temporal ER Graph

[Figure: temporal ER graph. On both Day n and Day n+1, HV Jagadish served in SIGMOD-07. Entities are linked by mentionOf edges to mentions such as "HV Jagadish" and "Jagadish, HV", and mentions are linked by extractedFrom edges to their source pages (http://cse.umich.edu/...).]

28

Data Model Implementation

Current implementation is ad hoc

combination of XML, RDBMS, and unstructured files

no clean separation between data and operators

Will explore a more principled implementation

efficient storage and processing

abstraction through an API

29

Proposed Future Work: Operator Model

Identify core set of efficient operators that encompass

many common CIM extraction/integration tasks

Data acquisition

query search engines

crawl sources (e.g., Crawler)

Data extraction

extract mentions (e.g., ExtractM)

discover relations (e.g., ComputeCoStrength, ExtractLabels)

Data integration

match mentions (e.g., MatchM)

match entities over time (e.g., Match operator in merge plan)

match entities across portals

Data manipulation

select subgraphs

join graphs

30

Operator Model Extensibility

Input parameters that can be tuned for each domain

e.g., MatchMByName

"HV Jagadish" = "Jagadish, HV"

input parameters: name permutation rules

e.g., MatchMByNeighborhood

"Cong Yu, HV Jagadish, Yun Chen" = "Yun Chen, HV Jagadish, Arnab Nandi"

input parameters: window size, overlapping tokens required
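The idea of tunable operator parameters can be illustrated with the two examples above. In this sketch the permutation rule ("Last, First" is rewritten as "First Last"), the comma-separated tokenization, and all function names are assumptions; `window_size` and `min_overlap` stand in for the "window size" and "overlapping tokens required" parameters.

```python
def last_first_rule(name):
    """Assumed permutation rule: rewrite 'Last, First' as 'First Last'."""
    if "," in name:
        last, first = name.split(",", 1)
        return f"{first.strip()} {last.strip()}"
    return name.strip()

def match_by_name(a, b, permutation_rule=last_first_rule):
    """Two mentions match if they are equal after applying the permutation rule."""
    return permutation_rule(a) == permutation_rule(b)

def match_by_neighborhood(window_a, window_b, window_size=3, min_overlap=2):
    """Two mentions match if their neighborhoods share enough tokens.

    Tokens are comma-separated names; only the first `window_size` tokens
    of each neighborhood are considered.
    """
    tokens_a = {t.strip() for t in window_a.split(",")[:window_size]}
    tokens_b = {t.strip() for t in window_b.split(",")[:window_size]}
    return len(tokens_a & tokens_b) >= min_overlap

same_name = match_by_name("HV Jagadish", "Jagadish, HV")
same_neighborhood = match_by_neighborhood(
    "Cong Yu, HV Jagadish, Yun Chen", "Yun Chen, HV Jagadish, Arnab Nandi"
)
too_strict = match_by_neighborhood(
    "Cong Yu, HV Jagadish, Yun Chen", "Yun Chen, HV Jagadish, Arnab Nandi", min_overlap=3
)
```

A domain with noisier neighborhoods could raise `min_overlap` without touching the operator itself.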

31

Proposed Future Work: Plan Model

Need language for developers to compose operators

Workflow language

specifies operator order, how they are composed

used by current Cimple implementation

Declarative language

use a Datalog-like language to compose operators

existing work [Shen, VLDB 07] seems promising

provides opportunities for data-specific optimizations

32

Planned Thesis Chapters

Selecting initial data sources

Creating the daily ER graph

Merging daily graphs into the global ER graph

Incrementally expanding sources and data

Leveraging community members

Developing the Cimple 1.0 workbench

Evaluating the solutions and workbench

33

Incrementally Expanding

Most prior work periodically re-runs source discovery step

Cimple leverages a common community property

important new sources and entities are often mentioned in certain

community sources (e.g., DBWorld)

Message type: conf. ann.

Subject: Call for Participation: VLDB Workshop on Management of Uncertain Data

Call for Participation

Workshop on

"Management of Uncertain Data"

in conjunction with VLDB 2007

http://mud.cs.utwente.nl

...

monitor these sources with simple extraction plans

34

Leveraging Community Members

Machine-only contributions

little human effort, reasonable initial portal, automatic updates

inaccuracies from imperfect methods, limited coverage

Human-only contributions

accurate, can provide data not in sources

significant human effort, difficult to solicit, typically unstructured

Combine contributions from both humans and machines

benefits of both

encourage human contributions with machine's initial portal

Combine both structured and text contributions

allows structured services (e.g., querying)

35

Illustrating Example

Wiki text, first version:

    <# person(id=1){name}=David J. DeWitt #>
    <# person(id=1){title}=Professor #>
    <strong>Interests:</strong>
    <# person(id=1).interests(id=3).topic(id=4){name}=Parallel DB #>

Rendered page:

    David J. DeWitt
    Professor
    Interests:
    Parallel DB

Wiki text, second version:

    <# person(id=1){name}=David J. DeWitt #>
    <# person(id=1){title}=John P. Morgridge Professor #>
    <# person(id=1){organization}=UW #> since 1976
    <strong>Interests:</strong>
    <# person(id=1).interests(id=3).topic(id=4){name}=Parallel DB #>

Wiki text, third version:

    <# person(id=1){name}=David J. DeWitt #>
    <# person(id=1){title}=John P. Morgridge Professor #>
    <# person(id=1){organization}=UW-Madison #> since 1976
    <strong>Interests:</strong>
    <# person(id=1).interests(id=3).topic(id=4){name}=Parallel DB #>
    <# person(id=1).interests(id=5).topic(id=6){name}=Privacy #>

Rendered page:

    David J. DeWitt
    John P. Morgridge Professor
    UW-Madison since 1976
    Interests:
    Parallel DB
    Privacy

Madwiki solution (Machine assisted development of wikipedias)

36

The Madwiki Architecture

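[Figure: Madwiki architecture, showing data sources, machine M, databases G and T, views V1 to V3 exported as wiki pages W1 to W3, and an edit by user u1 that turns W3 into W3' (with corresponding V3' and T3').]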

Community wikipedia, backed by a structured database

Challenges include

How to model underlying databases G and T

How to export a view Vi as a wiki page Wi

How to translate edits to a wiki page Wi into edits to G

How to propagate changes to G to affected wiki pages

37

Modeling the Underlying Database G

Use an entity-relationship graph

commonly employed by current community portals

familiar to ordinary, database-illiterate users

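[Figure: example ER graph. A person entity (id = 1, name = David J. DeWitt, organization = UW-Madison) has interests relationships (id = 3, id = 5, id = 8) to the topic entities (id = 4, name = Parallel DB), (id = 6, name = Privacy), and (id = 7, name = Statistics), and a services relationship (id = 11, as = general chair) to the conference entity (id = 12, name = SIGMOD 02).]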

38

Storing ER Graph G

Use an RDBMS for efficient querying and concurrency

Extend with temporal functionality for undo [Snodgrass 99]

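Entity_ID

| id | etype |
|---|---|
| 1 | person |
| 4 | topic |
| 12 | conf |
| … | … |

Relationship_ID

| id | rtype | eid1 | eid2 |
|---|---|---|---|
| 3 | interests | 1 | 4 |
| 11 | services | 1 | 12 |
| … | … | … | … |

Organization_m

| xid | value | start | stop | who |
|---|---|---|---|---|
| 1 | UW | 2007-04-01 … | 9999-12-31 … | M |
| 2 | MITRE | 2007-05-20 … | 9999-12-31 … | M |

Organization_u

| xid | value | start | stop | who |
|---|---|---|---|---|
| 2 | Purdue | 2007-05-02 … | 9999-12-31 … | U1 |
| 1 | UW-Madison | 2007-05-27 … | 9999-12-31 … | U2 |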

39

Reconciling Human and Machine Edits

When edits conflict, must reconcile to choose attribute values

Reconciliation policy encoded as view over attribute tables

e.g., "latest": current value is latest value, whether human or machine

e.g., "humans-first": current value is latest human value, or latest machine value if there are no human values

Organization_p

| xid | value | start | stop | who |
|---|---|---|---|---|
| 1 | UW | 2007-04-01 … | 2007-05-27 … | M |
| 2 | Purdue | 2007-05-02 … | 9999-12-31 … | U1 |
| 1 | UW-Madison | 2007-05-27 … | 9999-12-31 … | U2 |
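The two policies can be sketched as plain functions over the attribute rows. The row values come from the Organization_m and Organization_u tables; the tuple layout and function names are assumptions, and a real deployment would express the policy as a view in the RDBMS rather than in application code.

```python
# (xid, value, start, who): who is "M" for the machine, otherwise a user id.
ROWS = [
    (1, "UW", "2007-04-01", "M"),
    (2, "MITRE", "2007-05-20", "M"),
    (2, "Purdue", "2007-05-02", "U1"),
    (1, "UW-Madison", "2007-05-27", "U2"),
]

def latest(rows, xid):
    """'latest' policy: the most recent value wins, human or machine."""
    candidates = [r for r in rows if r[0] == xid]
    # ISO dates compare correctly as strings.
    return max(candidates, key=lambda r: r[2])[1] if candidates else None

def humans_first(rows, xid):
    """'humans-first' policy: latest human value, else latest machine value."""
    candidates = [r for r in rows if r[0] == xid]
    human = [r for r in candidates if r[3] != "M"]
    pool = human or candidates
    return max(pool, key=lambda r: r[2])[1] if pool else None

latest_value = latest(ROWS, 2)
humans_first_value = humans_first(ROWS, 2)
```

For xid 2 the policies disagree: "latest" picks the newer machine value MITRE, while "humans-first" keeps the user's Purdue, which matches the Organization_p table.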

40

Exporting Views over G as Wiki Pages

Views over ER graph G select sub-graphs

[Figure: selected sub-graph. The person entity (id = 1, name = David J. DeWitt, organization = UW-Madison) with interests relationship id = 3 to the topic (id = 4, name = Parallel DB) and interests relationship id = 5 to the topic (id = 6, name = Privacy).]

Use s-slots to represent sub-graph's data in wiki pages

Wiki text:

    <# person(id=1){name}=David J. DeWitt #>
    <# person(id=1){organization}=UW-Madison #>
    <strong>Interests:</strong>
    <# person(id=1).interests(id=3).topic(id=4){name}=Parallel DB #>
    <# person(id=1).interests(id=5).topic(id=6){name}=Privacy #>

Rendered page:

    David J. DeWitt
    UW-Madison
    Interests:
    Parallel DB
    Privacy
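A small parser shows how s-slots could be read and rendered. The regular expression below is inferred from the examples in this deck, not from a published grammar, so treat it and the function names as assumptions.

```python
import re

# <# path{attribute}=value #>, e.g. <# person(id=1){name}=David J. DeWitt #>
S_SLOT = re.compile(
    r"<#\s*(?P<path>.+?)\s*\{(?P<attr>\w+)\}\s*=\s*(?P<value>.*?)\s*#>", re.S
)

def parse_s_slots(wiki_text):
    """Return (path, attribute, value) for every s-slot in the text."""
    return [(m["path"], m["attr"], m["value"]) for m in S_SLOT.finditer(wiki_text)]

def render(wiki_text):
    """Replace each s-slot with its value, leaving ordinary wiki text untouched."""
    return S_SLOT.sub(lambda m: m["value"], wiki_text)

wiki = (
    "<# person(id=1){name}=David J. DeWitt #>\n"
    "<# person(id=1){organization}=UW-Madison#>\n"
    "<strong>Interests:</strong>\n"
    "<# person(id=1).interests(id=3).topic(id=4){name}=Parallel DB #>"
)
slots = parse_s_slots(wiki)
page = render(wiki)
```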

41

Translating Wiki Edits to Database Edits

Use wiki edits to infer ER edits to underlying view

Wiki text before the edit:

    <# person(id=1){name}=David J. DeWitt #>
    <# person(id=1){organization}=UW #>
    <strong>Interests:</strong>
    <# person(id=1).interests(id=3).topic(id=4){name}=Parallel DB #>

Wiki text after the edit:

    <# person(id=1){name}=David J. DeWitt #>
    <# person(id=1){organization}=UW-Madison #>
    <strong>Interests:</strong>
    <# person(id=1).interests(id=3).topic(id=4){name}=Parallel DB #>
    <# person(id=1).interests(id=5).topic(id=6){name}=Privacy #>

[Figure: view before the edit. The person entity (id = 1, name = David J. DeWitt, organization = UW) with interests relationship id = 3 to the topic (id = 4, name = Parallel DB).]

[Figure: view after the edit. The person entity (id = 1, name = David J. DeWitt, organization = UW-Madison) with interests relationship id = 3 to the topic (id = 4, name = Parallel DB) and a new interests relationship id = 5 to the topic (id = 6, name = Privacy).]

Map ER edits to RDBMS edits over G

See ICDE 08 for details of mapping
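Inferring ER edits from a wiki edit can be approximated by diffing the s-slots of the two page versions. This is only a sketch of the idea: the s-slot pattern is inferred from the examples in this deck, the edit tuples are a hypothetical format, and the actual mapping is described in the ICDE 08 paper.

```python
import re

S_SLOT = re.compile(
    r"<#\s*(?P<path>.+?)\s*\{(?P<attr>\w+)\}\s*=\s*(?P<value>.*?)\s*#>", re.S
)

def slot_map(wiki_text):
    """Map (path, attribute) to value for every s-slot in the text."""
    return {(m["path"], m["attr"]): m["value"] for m in S_SLOT.finditer(wiki_text)}

def infer_er_edits(old_text, new_text):
    """Diff two page versions into insert, delete, and update edits."""
    old, new = slot_map(old_text), slot_map(new_text)
    edits = []
    for key in sorted(new.keys() - old.keys()):
        edits.append(("insert", *key, new[key]))
    for key in sorted(old.keys() - new.keys()):
        edits.append(("delete", *key, old[key]))
    for key in sorted(old.keys() & new.keys()):
        if old[key] != new[key]:
            edits.append(("update", *key, new[key]))
    return edits

before = (
    "<# person(id=1){name}=David J. DeWitt #>\n"
    "<# person(id=1){organization}=UW #>\n"
    "<# person(id=1).interests(id=3).topic(id=4){name}=Parallel DB #>"
)
after = (
    "<# person(id=1){name}=David J. DeWitt #>\n"
    "<# person(id=1){organization}=UW-Madison #>\n"
    "<# person(id=1).interests(id=3).topic(id=4){name}=Parallel DB #>\n"
    "<# person(id=1).interests(id=5).topic(id=6){name}=Privacy #>"
)
edits = infer_er_edits(before, after)
```

Each resulting edit would then be mapped to inserts and updates on the temporal attribute tables that store G.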

42

Resolving Ambiguous Wiki Edits

Users can edit data, views, and schemas

change the attribute age of person X to 42

display the attribute homepage of conference entities on wiki pages

add the new attribute pages to the publication entity schema

Wiki edits can be ambiguous

change the attribute title of person X to NULL

do not display the attribute title of people entities on wiki pages

delete the attribute title from the people entity schema

Recognize ambiguous edits, then ask users to clarify intention

43

Propagating Database Edits to Wiki Pages

Update wiki pages when G changes

similar to view update

Eager propagation

pre-materialize wiki text, and immediately propagate all changes

raises complex concurrency issues

Simpler alternative: lazy propagation

materialize wiki text on-the-fly when requested by users

underlying RDBMS manages edit concurrency

preliminary evaluation indicates lazy propagation is tractable

44

Madwiki Evaluation

S-slot expressiveness

prototype shows s-slots can express all structured data in DBLife superhomepages except aggregation and top-k

Materializing and serving wiki pages

materializing and serving time increases linearly with page size

vast majority of current pages are small, hence served in < 1 second

45

Proposed Future Work

Structured data in wiki pages

s-slot tags can be confusing and cumbersome to users

propose to explore a structured data representation closer to natural text

key challenge: how to avoid requiring an intractable NLP solution

Reconciling conflicting edits

preliminary work identifies problem, does not provide satisfactory solution

propose to explore reconciling edits by learning editor trustworthiness

lots of existing work, but not in CIM settings

key challenge: edits are sparse, and not missing at random

46

Developing the Cimple 1.0 Workbench

Set of tools for compositional portal building

empty portal shell, including basic services and admin tools

browsing, keyword search…

set of general operators, and means to compose them

MatchM, ExtractM…

simple implementation of operators

MatchMbyName, ExtractMbyName…

end-to-end development methodology

1. select sources, 2. discover entities…

extraction/integration plan optimizers

see VLDB 07 for an optimization solution

47

Proposed Evaluation: A Second Domain

Use Cimple to build portal for non-research domain

Evaluate portal's ease of development, extensibility, and accuracy

Movie Domain

movie, actor, director, appeared in, directed…

sources include critic homepages, celebrity news sites, fan sites

existing portals (e.g., IMDB) arguably give an unfair advantage

NFL Domain

player, coach, team, played for, coached…

sources include homepages for players, teams, tournaments, stadiums

Digital Camera Domain

manufacturers, cameras, stores, reviewers, makes, sells, reviewed…

sources include manufacturer homepages, online stores, reviewer sites

48

Conclusions

CIM is an interesting and increasingly crucial problem

many online communities

initial efforts at UW, Y!R, MSR, Washington, IBM Almaden

Preliminary work on CIM seems promising

decomposed CIM and developed initial solutions

began work on the Cimple 1.0 workbench

developed the DBLife portal

Much work remains to realize the potential of this approach

formal data, operator, and plan model

more natural editing and robust reconciliation for Madwiki

Cimple 1.0 workbench

a second portal

49

Proposed Schedule

February

formal data, operator, and plan model for Cimple

March

solution for reconciling human and machine edits based on trust

June

more natural structured data representation for Madwiki wiki text

July

completion of Cimple 1.0 beta workbench

August

deployment of second portal built using the Cimple 1.0 workbench

public release of Cimple 1.0 workbench

50

Leveraging Communities – Prior Work

Automatic methods are imperfect

extraction/integration errors, and incomplete coverage

Some solutions build portals with human-only contributions

Wikipedia, Intellipedia, umasswiki.com, ecolicommunity.org...

given an active, trusted community, can achieve excellent accuracy

can require lots of effort to maintain, and typically unstructured

Recent work explores allowing structured user contributions

Semantic Wikipedia, WikiLens, MetaWeb...

focus on extending wiki language to express structured data

do not explore synergistic leveraging of human and automatic contributions

51