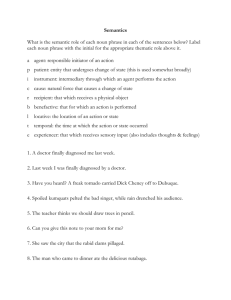

Natural Logic?

Lauri Karttunen, Cleo Condoravdi, Annie Zaenen
Palo Alto Research Center

Overview

Part 1
- Why Natural Logic
- MacCartney’s NatLog

Part 2
- PARC Bridge
- Discussion

Anna Szabolcsi on the semantic enterprise (2005)

On this view, model theory has primacy over proof theory. A language may be defined or described perfectly well without providing a calculus (thus, a logic) for it, but a calculus is of distinctly limited interest without a class of models with respect to which it is known to be sound and (to some degree) complete. It seems fair to say that (a large portion of) mainstream formal semantics as practiced by linguists is exclusively model theoretic. As I understand it, the main goal is to elucidate the meanings of expressions in a compositional fashion, and to do that in a way that offers an insight into natural language metaphysics (Bach 1989) and uncovers universals of the syntax/semantics interface.

Anna Szabolcsi

The idea that our way of doing semantics (model-theory) is both insightful and computationally (psychologically) unrealistic has failed to intrigue formal semanticists into action. Why? There are various, to my mind respectable, possibilities. (i) Given that the field is young and still in the process of identifying the main facts it should account for, we are going for the insight as opposed to the potential of computational / psychological reality. (ii) We don’t care about psychological reality and only study language in the abstract. (iii) We do care about potential psychological reality but are content to separate the elucidation of meaning (model theory) from the account of inferencing (proof theory). But if the machineries of model theory and proof theory are sufficiently different, option (iii) may end up with a picture where speakers cannot know what sentences mean, so to speak, only how to draw inferences from them. Is that the correct picture?
Why model theory is not in fashion in Computational Linguistics

- Computers don’t have realistic models up to now; everything is syntax.

Moss (2005)

“If one is seriously interested in entailment, why not study it axiomatically instead of building models? In particular, if one has a complete proof system, why not declare it to be the semantics? Indeed, why should semantics be founded on model theory rather than proof theory?”

Why full-fledged proof theory is not in fashion in Computational Linguistics

- Too big an enterprise to be undertaken in one go
- FOL is undecidable.
- Ambitious attempt: FraCaS (DRS)
- Need to work our way up through decidable logics: Moss’s hierarchy
- Unfortunately limited to the logical connectives

Natural Logic?

- Long tradition: Aristotle, scholastics, Quine(?), Wittgenstein(?), Davidson, Parsons, ...

Lakoff

(i) We want to understand the relationship between grammar and reasoning. (ii) We require that significant generalizations, especially linguistic ones, be stated. (iii) On the basis of (i) and (ii), we have been led tentatively to the generative semantics hypothesis. We assume that hypothesis to see where it leads. Given these aims, empirical linguistic considerations play a role in determining what the logical forms of sentences can be. Let us now consider certain other aims. (iv) We want a logic in which all the concepts expressible in natural language can be expressed unambiguously, that is, in which all nonsynonymous sentences (at least, all sentences with different truth conditions) have different logical forms. (v) We want a logic which is capable of accounting for all correct inferences made in natural language and which rules out incorrect ones. We will call any logic meeting the goals of (i)-(v) a 'natural logic'.

Basic idea

Some inferences can be made on the basis of linguistic form alone.

- John and Mary danced. John danced. Mary danced.
- The boys sang beautifully. The boys sang.
But:

- Often studied by philosophers interested in a limited number of phenomena
- Often ignoring the effect of lexical items

Problem 1: impact of lexical items is pervasive

- John and Mary carried the piano. ?? John carried the piano.
- The boys sang allegedly. ?? The boys sang.

There are no structural inferences without lexical items playing a role. When lexical items are taken into account, the domain of ‘natural logic’ goes beyond what has been studied under that name up to now.

Problem 2: Need for disambiguation

We cannot work on literal strings.

- The members of the royal family are visiting dignitaries.
- Visiting dignitaries can be boring.
- a. Therefore, the members of the royal family can be boring.
- b. Therefore, what the members of the royal family are doing can be boring.

Advantages of natural logic

- Lexico-syntactic
- Incremental

What is doable

- ‘Syntactic’ approaches geared to specific inferences
- Examples: MacCartney’s approach to Natural Logic; PARC’s Bridge
- Textual entailment (minimal world knowledge)
- Geared to existential claims (what happened, where, when)

Existential claims

What happened? Who did what to whom?

- Microsoft managed to buy Powerset. Microsoft acquired Powerset.
- Shackleton failed to get to the South Pole. Shackleton did not reach the South Pole.
- The destruction of the file was not illegal. The file was destroyed.
- The destruction of the file was averted. The file was not destroyed.

Monotonicity

What happened? Who did what to whom?

- Every boy managed to buy a small toy. Every small boy acquired a toy.
- Every explorer failed to get to the South Pole. No experienced explorer reached the South Pole.
- No file was destroyed. No sensitive file was destroyed.
- The destruction of a sensitive file was averted. A file was not destroyed.
- The creation of a new benefit was averted. A benefit was not created.
Recognizing Textual Inferences

MacCartney’s Natural Logic (NatLog)

Point of departure: Sanchez Valencia’s elaborations of Van Benthem’s Natural Logic

Seven relevant relations:

- x ≡ y: equivalence; couch ≡ sofa; x = y
- x ⊏ y: forward entailment; crow ⊏ bird; x ⊂ y
- x ⊐ y: reverse entailment; Asian ⊐ Thai; x ⊃ y
- x ^ y: negation; able ^ unable; x ∩ y = ∅ ∧ x ∪ y = U
- x | y: alternation; cat | dog; x ∩ y = ∅ ∧ x ∪ y ≠ U
- x ‿ y: cover; animal ‿ non-ape; x ∩ y ≠ ∅ ∧ x ∪ y = U
- x # y: independence; hungry # hippo

Table of joins for 7 basic entailment relations

Each row lists the join of the row relation with the column relations ≡, ⊏, ⊐, ^, |, ‿, #, in that order (cells separated by commas):

- ≡: ≡, ⊏, ⊐, ^, |, ‿, #
- ⊏: ⊏, ⊏, ≡⊏⊐|#, |, |, ⊏^|‿#, ⊏|#
- ⊐: ⊐, ≡⊏⊐‿#, ⊐, ‿, ⊐^|‿#, ‿, ⊐‿#
- ^: ^, ‿, |, ≡, ⊐, ⊏, #
- |: |, ⊏^|‿#, |, ⊏, ≡⊏⊐|#, ⊏, ⊏|#
- ‿: ‿, ‿, ⊐^|‿#, ⊐, ⊐, ≡⊏⊐‿#, ⊐‿#
- #: #, ⊏‿#, ⊐|#, #, ⊐|#, ⊏‿#, ≡⊏⊐^|‿#

Cases with more than one possibility indicate loss of information. The join of # and # is totally uninformative.

Entailment relations between expressions differing in atomic edits (substitution, insertion, deletion)

Substitutions: open classes (need to be of the same type)

- Synonyms: ≡ relation
- Hypernyms: ⊏ relation (crow ⊏ bird)
- Antonyms: | relation (hot | cold). Note: not ^ in most cases, no excluded middle.
- Other nouns: | (cat | dog)
- Other adjectives: # (weak # temporary)
- Verbs: ?? …
- Geographic meronyms: ⊏ (in Kyoto ⊏ in Japan), but note: not without the preposition. “Kyoto is beautiful ⊏ Japan is beautiful” does not hold.

Substitutions: closed classes, example quantifiers:

- all ≡ every
- every ⊏ some (non-vacuity assumed)
- some ^ no
- no | every (non-vacuity assumed)
- four or more ⊏ two or more
- exactly four | exactly two
- at most four ‿ at least two (overlap at 2, 3, 4)
- most # ten or more

Deletions and insertions:

- default: ⊏ (upward monotone contexts are prevalent), e.g. red car ⊏ car
- But doesn’t hold for negation, non-intersective adjectives, implicatives.
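The set-theoretic definitions of the seven relations can be checked mechanically. The sketch below is a minimal illustration under stated assumptions, not MacCartney’s implementation: it encodes subsets of a small finite universe as bit masks, classifies a pair of sets into one of the seven relations, and recovers join-table cells by enumerating triples (x, y, z). The names `classify` and `join_cells` and the universe size are hypothetical choices made for this example. Because the universe is small, a cell computed this way may miss relations that need more elements to witness, so each computed cell should be read as a lower bound on the corresponding table cell.

```python
from itertools import product

def classify(x, y, full):
    """Return the basic relation between two sets encoded as bit masks.

    Assumes x and y are neither empty nor equal to the universe `full`.
    """
    if x == y:
        return "≡"
    if x & y == x:          # x is a proper subset of y
        return "⊏"
    if x & y == y:          # y is a proper subset of x
        return "⊐"
    disjoint = (x & y) == 0
    exhaustive = (x | y) == full
    if disjoint and exhaustive:
        return "^"
    if disjoint:
        return "|"
    if exhaustive:
        return "‿"
    return "#"

def join_cells(n=5):
    """Enumerate triples of sets over an n-element universe (n is an assumption).

    cells[(R, S)] collects every relation T observed between x and z
    when x R y and y S z hold.
    """
    full = (1 << n) - 1
    sets = range(1, full)   # skip the empty set (0) and the universe (full)
    cells = {}
    for x, y, z in product(sets, repeat=3):
        key = (classify(x, y, full), classify(y, z, full))
        cells.setdefault(key, set()).add(classify(x, z, full))
    return cells

CELLS = join_cells()
```

For instance, `CELLS[("^", "^")]` contains only ≡, in line with the ^ row of the join table: the complement of a complement is the original set.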
Composition

Bottom up

- nobody can enter without a bottle of wine
- nobody can enter without a bottle of liquor
- (nobody (can (enter (without wine))))
- lexical entailment: wine ⊏ liquor
- without: downward monotone; without wine ⊐ without liquor
- can: upward monotone; nobody: downward monotone
- nobody can enter without wine ⊏ nobody can enter without liquor

Composition: projectivity of logical connectives

Each row gives the projections of the relations ≡, ⊏, ⊐, ^, |, ‿, #, in that order.

- Negation (not): ≡ ⊐ ⊏ ^ ‿ | #
- Conjunction (and) / intersection: ≡ ⊏ ⊐ | | # #
- Disjunction (or): ≡ ⊏ ⊐ ‿ # ‿ #
- Conditional antecedent: ≡ ⊐ ⊏ # # # #
- Conditional consequent: ≡ ⊏ ⊐ | | # #
- Biconditional: ≡ # # # # # #

Composition: projectivity of logical connectives

- Conjunction (and) / intersection: ≡ ⊏ ⊐ | | # #
- Lexical relations: happy ≡ glad; kiss ⊏ touch; human ^ nonhuman; French | German; metallic ‿ nonferrous; swimming # hungry
- kiss and hug ⊏ touch and hug
- living human | living nonhuman
- French wine | Spanish wine
- metallic pipe # nonferrous pipe

Composition: projectivity of logical connectives

- Disjunction (or): ≡ ⊏ ⊐ ‿ # ‿ #
- Lexical relations: happy ≡ glad; kiss ⊏ touch; human ^ nonhuman; French | German; more than 4 ‿ less than 6
- happy or rich ≡ glad or rich
- kiss or hug ⊏ touch or hug
- human or equine ‿ nonhuman or equine
- French or Spanish # German or Spanish
- 3 or more than 4 ‿ 3 or less than 6

Composition: projectivity of logical connectives

- Negation (not): ≡ ⊐ ⊏ ^ ‿ | #
- Lexical relations: happy ≡ glad; kiss ⊏ touch; human ^ nonhuman; French | German; more than 4 ‿ less than 6; swimming # hungry
- not happy ≡ not glad
- not kiss ⊐ not touch
- not human ^ not nonhuman
- not French ‿ not German
- not more than 4 | not less than 6
- not swimming # not hungry

Compositionality: projectivity of quantifiers

Each row gives the projections of ≡, ⊏, ⊐, ^, |, ‿, #, in that order, for the quantifier’s 1st and 2nd argument.

- some: 1st argument ≡ ⊏ ⊐ ‿ # ‿ #; 2nd argument ≡ ⊏ ⊐ ‿ # ‿ #
- no: 1st argument ≡ ⊐ ⊏ | # | #; 2nd argument ≡ ⊐ ⊏ | # | #
- every: 1st argument ≡ ⊐ ⊏ | # | #; 2nd argument ≡ ⊏ ⊐ | | # #
- not every: 1st argument ≡ ⊏ ⊐ ‿ # ‿ #; 2nd argument ≡ ⊐ ⊏ ‿ ‿ # #
- at least two: 1st argument ≡ ⊏ ⊐ # # # #; 2nd argument ≡ ⊏ ⊐ # # # #
- most: 1st argument ≡ # # # # # #; 2nd argument ≡ ⊏ ⊐ | | # #
- exactly one: 1st argument ≡ # # # # # #; 2nd argument ≡ # # # # # #
- all but one: 1st argument ≡ # # # # # #; 2nd argument ≡ # # # # # #

Compositionality: projectivity of quantifiers: some, first argument

- some: 1st argument ≡ ⊏ ⊐ ‿ # ‿ #; 2nd argument ≡ ⊏ ⊐ ‿ # ‿ #
- couch, sofa: some couches sag ≡ some sofas sag
- finch, bird: some finches sing ⊏ some birds sing
- boy, small boy: some boys sing ⊐ some small boys sing
- human, non-human: some humans sing ‿ some non-humans sing
- boy, girl: some boys sing # some girls sing
- animal, non-ape: some animals breathe ‿ some non-apes breathe

Compositionality: projectivity of quantifiers: some, second argument

- some: 1st argument ≡ ⊏ ⊐ ‿ # ‿ #; 2nd argument ≡ ⊏ ⊐ ‿ # ‿ #
- beautiful, pretty: some couches are pretty ≡ some couches are beautiful
- sing beautifully, sing: some finches sing beautifully ⊏ some finches sing
- sing, sing beautifully: some finches sing ⊐ some finches sing beautifully
- human, non-human: some humans sing ‿ some non-humans sing
- late | early: some people were early # some people were late

Compositionality: projectivity of quantifiers: no; first argument

- no: 1st argument ≡ ⊐ ⊏ | # | #; 2nd argument ≡ ⊐ ⊏ | # | #
- couch, sofa: no couches sag ≡ no sofas sag
- finch, bird: no finches sing ⊐ no birds sing
- boy, small boy: no boys sing ⊏ no small boys sing
- human, non-human: no humans sing | no non-humans sing
- boy, girl: no boys sing # no girls sing
- animal, non-ape: no animals breathe | no non-apes breathe

Compositionality: projectivity of quantifiers: every; first argument

- every: 1st argument ≡ ⊐ ⊏ | # | #; 2nd argument ≡ ⊏ ⊐ | | # #
- couch, sofa: every couch sags ≡ every sofa sags
- finch, bird: every finch sings ⊐ every bird sings
- boy, small boy: every boy sings ⊏ every small boy sings
- human, non-human: every human sings | every non-human sings
- boy, girl: every boy sings # every girl sings
- animal, non-ape: every animal breathes | every non-ape breathes

Compositionality: projectivity of quantifiers: not every; first argument

- not every: 1st argument ≡ ⊏ ⊐ ‿ # ‿ #; 2nd argument ≡ ⊐ ⊏ ‿ ‿ # #
- couch, sofa: not every couch sags ≡ not every sofa sags
- finch, bird: not every finch sings ⊏ not every bird sings
- boy, small boy: not every boy sings ⊐ not every small boy sings
- human, non-human: not every human sings ‿ not every non-human sings
- boy, girl: not every boy sings # not every girl sings
- animal, non-ape: not every animal breathes ‿ not every non-ape breathes

Projectivity of Verbs

- Most verbs are upward monotone and project ^, |, and ‿ as #.
- humans ^ nonhumans; eats humans # eats non-humans
- But there are a lot of exceptions.
- Verbs with sentential complements require special treatment: factives, counterfactives, implicatives… (PARC verb classes)

Factives

Class, signature, and inference pattern:

- Positive, ++/-+, forget that: forget that X ⇝ X, not forget that X ⇝ X
- Positive, ++/-+, is odd that: is odd that X ⇝ X, is not odd that X ⇝ X
- Negative, +-/--, pretend that: pretend that X ⇝ not X, not pretend that X ⇝ not X
- Negative, +-/--, pretend to: pretend to X ⇝ not X, not pretend to X ⇝ not X

[Diagram: host polarity and complement polarity for “forget that” and “pretend that”]

- Abraham pretended that Sarah was his sister. ⇝ Sarah was not his sister.
- Howard did not pretend that it did not happen. ⇝ It happened.
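The factive and counterfactive signatures can be read as small lookup tables from host polarity to complement polarity. The sketch below illustrates that reading only; it is not the PARC implementation. The names `SIGNATURES`, `parse_signature`, `complement_polarity`, and `embedded_polarity` are hypothetical, and treating “not” as a plain polarity flip is an assumption made for the example.

```python
# Signatures as listed on the Factives slide: each "hc" pair maps
# host polarity h to complement polarity c.
SIGNATURES = {
    "forget that": "++/-+",
    "is odd that": "++/-+",
    "pretend that": "+-/--",
    "pretend to": "+-/--",
}

def parse_signature(signature):
    """Turn '++/-+' into {'+': '+', '-': '+'}."""
    return {pair[0]: pair[1] for pair in signature.split("/")}

def complement_polarity(operator, host):
    """Polarity of the material embedded under `operator` in a `host` context."""
    if host is None:
        return None                      # nothing is known about the host
    if operator == "not":                # assumption: negation just flips polarity
        return "-" if host == "+" else "+"
    return parse_signature(SIGNATURES[operator]).get(host)

def embedded_polarity(operators):
    """Walk from the top of the sentence down to the innermost clause."""
    polarity = "+"                       # the sentence itself is asserted
    for operator in operators:
        polarity = complement_polarity(operator, polarity)
    return polarity

# "Howard did not pretend that it did not happen."
print(embedded_polarity(["not", "pretend that", "not"]))
```

Read this way, the Howard example walks through “not”, “pretend that”, and “not” in turn, and the innermost clause “it happened” ends up with positive polarity.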
Implicatives

Class, signature, and inference pattern:

- Two-way implicatives, ++/--, manage to: manage to X ⇝ X, not manage to X ⇝ not X
- Two-way implicatives, +-/-+, fail to: fail to X ⇝ not X, not fail to X ⇝ X
- One-way implicatives, ++, force to: force X to Y ⇝ Y
- One-way implicatives, +-, refuse to: refuse to X ⇝ not X
- One-way implicatives, --, be able to: not be able to X ⇝ not X
- One-way implicatives, -+, hesitate to: not hesitate to X ⇝ X

Translating PARC classes into the MacCartney approach

Columns: sign, del, ins, example.

Implicatives:

- ++/--: del ≡, ins ≡. He managed to escape ≡ he escaped
- ++: del ⊏, ins ⊐. He was forced to sell ⊏ he sold
- --: del ⊐, ins ⊏. He was permitted to live ⊐ he did live
- +-/-+: del ^, ins ^. He failed to pay ^ he paid
- +-: del |, ins |. He refused to fight | he fought
- -+: del ‿, ins ‿. He hesitated to ask ‿ he asked

Neutral:

- del #, ins #. He believed he had won / he had won

Factives:

- +-/+ +-/-

Does not take the presuppositions of the implicatives into account.

T. Ed didn’t forget to force Dave to leave
H. Dave left

Columns: i, x_i, edit e, f(e), g(x_{i-1}, e) (projections), h(x_0, x_i) (joins).

- 0: Ed didn’t fail to force Dave to leave
- 1: Ed didn’t force Dave to leave; DEL(fail); ^; context downward monotone: ^; ^
- 2: Ed forced Dave to leave; DEL(not); ^; context upward monotone: ^; join of ^, ^: ≡
- 3: Dave left; DEL(force); ⊏; context upward monotone: ⊏; join of ≡, ⊏: ⊏

t: We were not able to smoke
h: We smoked Cuban cigars

Columns: i, x_i, e_i, f(e_i), g(x_{i-1}, e_i), h(x_0, x_i).

- 0: We were not able to smoke
- 1: We did not smoke; DEL(permit); ⊐; downward monotone: ⊏; ⊏
- 2: We smoked; DEL(not); ^; upward monotone: ^; join of ⊏, ^: |
- 3: We smoked Cuban cigars; INS(C. cigars); ⊐; upward monotone: ⊐; join of |, ⊐: |

We end up with a contradiction.

Why do the factives not work?

- MacCartney’s system assumes that the implicatures switch when negation is inserted or deleted.
- But that is not the case with factives and counterfactives; they need a special treatment.

Other limitations

- De Morgan’s laws: Not all birds fly / some birds do not fly
- Buy/sell, win/lose
- Doesn’t work with atomic edits as defined. Needs larger units.

Bridge vs NatLog

NatLog

- Symmetrical between t and h
- Bottom up
- Local edits, more compositional
- Surface based
- Integrates monotonicity and implicatives tightly

Bridge

- Asymmetrical between t and h
- Top down
- Global rewrites possible
- Normalized input
- Monotonicity calculus and implicatives less tightly coupled

We need more power than NatLog allows for, but it needs to be deployed in a more constrained way than the current Bridge system demonstrates.

PARC’s BRIDGE System

Anna Szabolcsi 2005

Consider the model theoretic and the natural deduction treatments of the propositional connectives. The two ways of explicating conjunction and disjunction amount to the same thing indeed: if you know the one you can immediately guess the other. Not so with classical negation. The model theoretic definition is in one step: ¬p is true if and only if p is not true. In contrast, natural deduction obtains the same result in three steps. First, elimination and introduction rules for ¬ yield a notion of negation as in minimal logic. Then the rule Ex Falso Sequitur Quodlibet is added to obtain intuitionistic negation, and finally Double Negation Cancellation to obtain classical negation. While it may be a matter of debate which explication is more insightful, it seems clear that the two are intuitively not the same, even though eventually they deliver the same result.

Van Benthem

- “Dictum de Omni et Nullo”: admissible inferences of two kinds: downward monotonic (substituting stronger predicates for weaker ones), upward monotonic (substituting weaker predicates for stronger ones).
- Conservativity: Q A B iff Q A (B ∩ A)

Toward NL Understanding

Local Textual Inference

A measure of understanding a text is the ability to make inferences based on the information conveyed by it.

- Veridicality reasoning: Did an event mentioned in the text actually occur?
- Temporal reasoning: When did an event happen? How are events ordered in time?
- Spatial reasoning: Where are entities located and along which paths do they move?
- Causality reasoning: Enablement, causation, prevention relations between events

Knowledge about words for access to content

- The verb “acquire” is a hypernym of the verb “buy”
- The verbs “get to” and “reach” are synonyms
- Inferential properties of “manage”, “fail”, “avert”, “not”
- Monotonicity properties of “every”, “a”, “no”, “not”: Every (↓) (↑), A (↑) (↑), No (↓) (↓), Not (↓)
- Restrictive behavior of adjectival modifiers “small”, “experienced”, “sensitive”
- The type of temporal modifiers associated with prepositional phrases headed by “in”, “for”, “through”, or even nothing (e.g. “last week”, “every day”)
- Construction of intervals and qualitative relationships between intervals and events based on the meaning of temporal expressions

Local Textual Inference Initiatives

PASCAL RTE Challenge (Ido Dagan, Oren Glickman) 2005, 2006

- PREMISE: Rome is in Lazio province and Naples is in Campania. CONCLUSION: Rome is located in Lazio province. TRUE (= entailed by the premise)
- PREMISE: Romano Prodi will meet the US President George Bush in his capacity as the president of the European commission. CONCLUSION: George Bush is the president of the European commission. FALSE (= not entailed by the premise)

World knowledge intrusion

- PREMISE: Romano Prodi will meet the US President George Bush in his capacity as the president of the European commission. CONCLUSION: George Bush is the president of the European commission. FALSE
- PREMISE: Romano Prodi will meet the US President George Bush in his capacity as the president of the European commission. CONCLUSION: Romano Prodi is the president of the European commission. TRUE
- PREMISE: G. Karas will meet F. Rakas in his capacity as the president of the European commission. CONCLUSION: F. Rakas is the president of the European commission. TRUE

Inference under a particular construal

- PREMISE: Romano Prodi will meet the US President George Bush in his capacity as the president of the European commission. CONCLUSION: George Bush is the president of the European commission. FALSE (= not entailed by the premise on the correct anaphoric resolution)
- PREMISE: G. Karas will meet F. Rakas in his capacity as the president of the European commission. CONCLUSION: F. Rakas is the president of the European commission. TRUE (= entailed by the premise on one anaphoric resolution)

In the slides that follow, each row gives the projections of ≡, ⊏, ⊐, ^, |, ‿, #, in that order.

Compositionality: projectivity of quantifiers: not every; second argument

- not every: 1st argument ≡ ⊏ ⊐ ‿ # ‿ #; 2nd argument ≡ ⊐ ⊏ ‿ ‿ # #

Compositionality: projectivity of quantifiers

- at least two: 1st argument ≡ ⊏ ⊐ # # # #; 2nd argument ≡ ⊏ ⊐ # # # #

Compositionality: projectivity of quantifiers

- most: 1st argument ≡ # # # # # #; 2nd argument ≡ ⊏ ⊐ | | # #

Compositionality: projectivity of quantifiers

- exactly one: 1st argument ≡ # # # # # #; 2nd argument ≡ # # # # # #

Compositionality: projectivity of quantifiers

- all but one: 1st argument ≡ # # # # # #; 2nd argument ≡ # # # # # #

Compositionality: projectivity of quantifiers: no; second argument

- no: 1st argument ≡ ⊐ ⊏ | # | #; 2nd argument ≡ ⊐ ⊏ | # | #

Compositionality: projectivity of quantifiers: every; second argument

- every: 1st argument ≡ ⊐ ⊏ | # | #; 2nd argument ≡ ⊏ ⊐ | | # #

Composition: projectivity of logical connectives

- Biconditional: ≡ # # # # # #

Composition: projectivity of logical connectives

- Conditional antecedent: ≡ ⊐ ⊏ # # # #
- kiss ⊏ touch: If she kissed her, she likes her ⊐ if she touched her, she likes her
- human ^ nonhuman

Composition: projectivity of logical connectives

- Conditional consequent: ≡ ⊏ ⊐ | | # #
- kiss ⊏ touch: If he wins I’ll kiss him ⊏ if he wins I’ll touch him
- human ^ nonhuman: If it does this it shows that it is human | if it does this it shows that it is nonhuman
- French | German: If he wins he gets French wine | if he wins he gets German wine
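The projectivity rows for the logical connectives amount to a lookup table, and projecting a lexical relation through nested contexts is repeated lookup from the innermost context outward. The sketch below is a minimal illustration of that reading, not MacCartney’s code; the names `RELATIONS`, `PROJECTIVITY`, and `project` are hypothetical, and the rows are copied from the connective slides.

```python
# Column order used on the slides.
RELATIONS = ["≡", "⊏", "⊐", "^", "|", "‿", "#"]

# One row per connective: the projected relation for each input relation.
PROJECTIVITY = {
    "negation":               ["≡", "⊐", "⊏", "^", "‿", "|", "#"],
    "conjunction":            ["≡", "⊏", "⊐", "|", "|", "#", "#"],
    "disjunction":            ["≡", "⊏", "⊐", "‿", "#", "‿", "#"],
    "conditional antecedent": ["≡", "⊐", "⊏", "#", "#", "#", "#"],
    "conditional consequent": ["≡", "⊏", "⊐", "|", "|", "#", "#"],
    "biconditional":          ["≡", "#", "#", "#", "#", "#", "#"],
}

def project(relation, contexts):
    """Project a lexical relation through contexts, innermost first."""
    for context in contexts:
        relation = PROJECTIVITY[context][RELATIONS.index(relation)]
    return relation

# kiss ⊏ touch, placed in the antecedent of a conditional
print(project("⊏", ["conditional antecedent"]))
# human ^ nonhuman, placed in the consequent of a conditional
print(project("^", ["conditional consequent"]))
```

Keeping the table as plain data makes it easy to compare each row against the slides, and nesting is handled simply by passing a longer list of contexts.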