Conceptualization, Operationalization, and Measurement


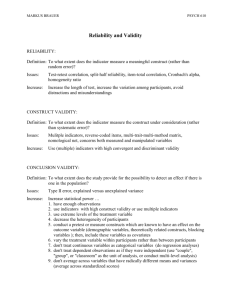

Independent and Dependent Variables
• The independent variable is what is manipulated
  – a treatment, program, or cause
  – also called a ‘factor’ or ‘explanatory variable’
• The dependent variable is what is affected by the independent variable
  – effects or outcomes
  – also called a ‘measure’ or ‘response variable’

Concept versus Construct
• Concept
  1. A term (nominal definition) that represents an idea that you wish to study
  2. Represents collections of seemingly related observations and/or experiences
• Concepts as constructs
  – We refer to concepts as constructs to recognize their social construction.

More on Constructs
• Three classes of things that social scientists measure:
  – Directly observable: number of people in a room
  – Indirectly observable: income
  – Constructs: creations based on observations; cannot themselves be directly or indirectly observed
• Example: you can treat gender as
  – directly observable (gender presentation)
  – indirectly observable (check box)
  – a construct for which you develop dimensions and indicators of gender (which then requires much more conceptualization)

Conceptualization
1. The process of conceptualization includes coming to some agreement about the meaning of the concept.
2. In practice, you often move back and forth between loose ideas of what you are trying to study and the search for a word that best describes it.
3. Sometimes you have to “make up” a name to encompass your concept. If you are interested in studying the extent to which people exhibit behaviors that bring groups together, you might come up with the nominal definition “bridge maker.”
4. As you form the aspects of a concept, you begin to see its dimensions: terms that define subgroups of a concept.
5. For each dimension, you must decide on indicators: signs of the presence or absence of that dimension. (Dimensions are usually concepts themselves.)

Operationalizing Choices
• Operationalization is the process of creating a definition (or definitions) for a concept that can be observed and measured
• It involves developing specific research procedures that will result in empirical observations
• Examples
  – SES is defined as a combination of income and education and I will measure each by…
  – The development of questions (or characteristics of data in qualitative work) that will indicate a concept
• See example about ‘looking for work’ on page 125

Variable Attribute Choices
• Variable attributes need to be exhaustive and mutually exclusive
• They should represent the full range of possible variation
• Degree of precision: selection depends on your research interest, but if you’re not sure, it’s better to include too much detail than too little
• Level of measurement

Level of Measurement
• Nominal measures
  – Only offer a name or a label for a variable
  – There is no ranking; the attributes are not numerically related
  – Examples: gender, race
• Ordinal measures
  – Variables with attributes that can be rank ordered
  – Can say one response is “more” or “less” than another
  – Distance between attributes does not have meaning
  – Scales and indexes are ordinal measures, but conventions for analysis allow us to assume equidistance between attributes. Thus, they are often treated like “interval” measures.
• Interval measures
  – Distance separating attributes has meaning and is standardized (equidistant)
  – A “0” value does not mean that the variable is absent
  – Examples: elevation and temperature
• Ratio measures
  – Attributes of a variable have a “true zero point” that means something
  – Examples: height and weight
  – Allows one to create ratios

Determining Quality of Measurement
• Reliability: The extent to which the same research technique applied again to the same object/subject will give you the same result
• Reliability does not ensure accuracy: a measure can be reliable but inaccurate (invalid) because of bias in the measure or in the data collector/coder

Example of Reliability Problems
• Intercoder reliability
• Coders make a subjective decision about the presence of violence in a series of ads: “present” or “not present”
• We have a reliability problem when more than one coder looks at the same ad and codes it differently.
• Solution: Operationalize specifically what “counts” as violence

Techniques for Confirming Reliability or Discovering Problems
a. Test-retest
b. Split-half method: Divide the indicators into 2 groups and use 2 surveys. If respondents are randomly selected and the indicators are reliable, then there should be no significant differences between the 2 groups from which data were collected.
c. Use established measures
d. Reliability of data collectors/coders: training, follow-up checks, intercoder reliability checks

• Validity: The extent to which our measures reflect what we think or want them to be measuring

Face Validity
• The measure seems to be related to what we are interested in finding out, even if it does not fully encompass the concept

Criterion-related Validity
• Predictive validity
  – The measure is predictive of some external criterion
• Example:
  – Criterion = success in college
  – Measure = ACT scores (high criterion validity?)

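Worked sketch: intercoder reliability. The intercoder reliability check described above (two coders marking violence as “present” or “not present” in the same ads) can be quantified. The sketch below computes simple percent agreement and Cohen's kappa, which corrects agreement for chance. Kappa is one common choice and is not named in the slides; the function names and the ten ad codes are hypothetical, made up only for illustration.

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Share of items that both coders labeled identically."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same non-empty set of items")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return matches / len(coder_a)


def cohen_kappa(coder_a, coder_b):
    """Observed agreement corrected for the agreement expected by chance."""
    n = len(coder_a)
    observed = percent_agreement(coder_a, coder_b)
    counts_a = Counter(coder_a)
    counts_b = Counter(coder_b)
    labels = set(counts_a) | set(counts_b)
    # Chance agreement: probability both coders pick the same label
    # if each labeled items independently at their own base rates.
    expected = sum((counts_a[lab] / n) * (counts_b[lab] / n) for lab in labels)
    if expected == 1.0:
        return 1.0  # both coders used one identical label for every item
    return (observed - expected) / (1 - expected)


# Hypothetical codes for ten ads (illustrative data, not from any study)
coder_a = ["present", "present", "not present", "present", "not present",
           "not present", "present", "not present", "present", "not present"]
coder_b = ["present", "not present", "not present", "present", "not present",
           "present", "present", "not present", "present", "not present"]

print(percent_agreement(coder_a, coder_b))       # 0.8
print(round(cohen_kappa(coder_a, coder_b), 2))   # 0.6
```

Here the coders agree on 8 of 10 ads, but half of that agreement would be expected by chance alone, so kappa is lower than the raw agreement. A low value would suggest tightening the operational definition of what “counts” as violence.
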
Construct Validity
• The measure is logically related to another variable as you had conceptualized it to be
• Example:
  – Construct = happiness
  – Measure = financial stability
  – (if not related to happiness, low construct validity)

Content Validity
• How much of the range of meanings does a measure cover? Did you cover the full range of dimensions related to a concept?
• Example: You think that you’re measuring prejudice, but you only ask questions about race. What about sex, religion, etc.?

Internal Validity
• Internal validity addresses the "true" causes of the outcomes that you observed in your study.
  – Strong internal validity means that you have not only reliable measures of your independent and dependent variables but also a strong justification that causally links your independent variables to your dependent variables.
  – At the same time, you are able to rule out extraneous variables, or alternative, often unanticipated, causes for your dependent variables.

External Validity
• External validity addresses the ability to generalize your study to other people and other situations.
  – To have strong external validity, you ideally need a probability sample of subjects or respondents drawn using "chance methods" from a clearly defined population.
  – Ideally, you will also have a good sample of groups and a sample of measurements and situations.
  – When you have strong external validity, you can generalize to other people and situations with confidence.
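
Worked sketch: drawing a probability sample. The "chance methods" mentioned under external validity can be illustrated with a simple random sample, the most basic kind of probability sample. This is a minimal sketch: the sampling frame of 500 student IDs, the sample size, and the function name are all hypothetical, and real studies often use stratified or cluster designs instead.

```python
import random


def simple_random_sample(population, n, seed=None):
    """Draw n distinct units, each with an equal chance of selection."""
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    return rng.sample(population, n)


# Hypothetical, clearly defined population: a list of 500 student IDs
population = [f"student_{i:03d}" for i in range(1, 501)]

# Draw 50 respondents without replacement
sample = simple_random_sample(population, 50, seed=42)
print(len(sample), len(set(sample)))  # 50 50
```

Because every unit in the list has the same chance of being drawn, results from the sample can be generalized to the listed population, but only to that population.
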