2-page proposal file


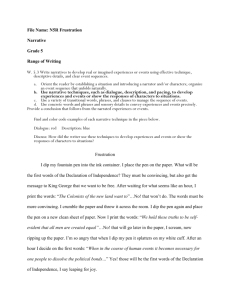

“Do You Want to Take a Survey?” Exploring Tools to Increase Undergraduate Student Response Rates

Danielle Smalls, Holly Matusovich, Rachel McCord, Department of Engineering Education, Virginia Tech

Abstract: Low survey response rates are a pervasive problem in research on undergraduate students. This study addresses the problem by seeking the data collection method that best meets researchers' needs while capturing students' attention. In particular, it focuses on identifying the most effective method for collecting data about students' experiences learning engineering in real time. For this study, real-time data collection means gathering information about experiences within the context of the current situation. Capturing data in the moment reduces the risk that students forget details of their experiences, and it clarifies the context of each question. Using focus groups, this study compares undergraduate students' perspectives on data collection tools drawn from popular social media, institutional tools, and traditional online survey software. The outcomes include ways of prompting students to take surveys (impetus) and suggestions for survey format, both aimed at increasing response rates. Surprisingly, students suggested pen and paper as a top choice over electronic methods, even though this approach was not among the options we presented. These outcomes can help researchers develop effective strategies for real-time data collection.

Literature Review

Students, particularly in higher education, are constantly bombarded with surveys soliciting their opinions on specific issues. Response rates remain low in higher education and across many other research contexts. Researchers have investigated low response rates from many perspectives. One study, for example, found that the pervasiveness of computers on a campus contributes positively to response rates (Porter & Umbach, 2006).
Therefore, we anticipated that using electronic means to collect real-time data would increase response rates. However, given the general trend toward low survey response rates, we thought it prudent to investigate students' perspectives before investing further resources in survey development tools. Response rates are particularly important in our study because it involves real-time data collection. Grounded in the Experience Sampling Method (ESM) framework (Hektner, Schmidt, & Csikszentmihalyi, 2006), real-time data collection means gathering information about experiences within the context of the current situation. In our study, we want to gather information about engineering students as they learn in engineering classrooms. Many instruments have been developed to measure academic success under different learning strategies. However, very few (if any) collect data in real time while students are learning in class. This study addresses that gap by seeking the data collection method that best meets researchers' needs while capturing students' attention.

Methodology

We used focus groups with semi-structured questions (Patton, 2002) to gather students' views on a series of proposed survey tools. These tools included social media such as Facebook and Twitter, standard survey software such as Qualtrics, and classroom technologies such as clickers or interactive software. Our intention was to determine which survey tool would be most effective at eliciting student responses, and why. Participants were students enrolled in either a summer pre-college engineering preparatory program or a summer research program in engineering education. Two focus group sessions were conducted over the course of the summer. The two groups differed in size: one had a single participant, and the other had eight.
Despite this size difference, themes were consistent across both groups, which leads us to believe the samples are representative of the larger population. A study co-investigator recruited participants in person, and two researchers conducted each focus group together. Questions focused on three topics: students' prior experiences with surveys, the types of survey tools they are most likely to respond to, and their willingness to take part in surveys administered during class. A presentation showed examples of several real-time survey tools. The focus group interviews were recorded, transcribed verbatim, and analyzed using open coding (Miles & Huberman, 1994). The audio recordings and transcripts were reviewed until a finalized set of codes emerged. The researchers then discussed the coding and found that the final codes grouped into two main categories: Format and Impetus. Format describes the proposed survey tool. Impetus describes a student's reasons for completing the survey in class.

Results

Surprisingly, our results indicate that students prefer pen and paper for real-time data collection in classroom settings. This method emerged in both focus groups even though it was not one of the options in the example presentation. Students gave three reasons for choosing pen and paper:

- It frees them from online distractions.
- They can add comments in the margins when the questions miss key points, much like free-response boxes on quantitative surveys.
- The paper itself, together with the researcher who distributes and collects it, serves as a physical reminder to complete the survey.

These reasons span both the Format and Impetus categories. We also found that students considered it important to be able to give feedback to instructors for course improvement. Some, however, wanted more anonymity in such responses than others.
Table 1: Final Codes, Sub-codes and Definitions

| Code | Sub-code | Definition |
|---|---|---|
| Format | Pen and Paper | Participants mention a preference for or against pen and paper |
| Format | Short and Simple | Preferences on length and complexity (all mentions favored short and simple) |
| Format | Free Response Box | Mentions of liking or not liking free response boxes on surveys |
| Format | Electronic Formats | Mentions of liking or not liking any of the proposed electronic formats |
| Impetus | Anonymity | Preferences for anonymity |
| Impetus | Opinion Matters | Reasons for taking or not taking a survey that relate to the participant's opinion being important to a person, to research, as feedback, etc. |
| Impetus | Pen and Paper | Mentions that pen and paper serves as a physical reminder to take the survey; a person is also often present, evoking a desire to help that person |
| Impetus | Incentives | Mentions of benefits of tangible incentives |

Discussion and Conclusions

We believe these outcomes will help researchers increase survey response rates, particularly for real-time data collection. We had assumed that students would choose a technology-based survey tool because technology is so thoroughly integrated into the current generation's lives. We therefore set out to find the right technology to appeal to students while minimizing classroom disruption for both students and the instructor. Contrary to this assumption, we found that students prefer pen and paper. Participants believed that pen-and-paper surveys would be the most effective at getting students to respond, in terms of both format and impetus. They also believed this method would be the least disruptive to learning. With paper, students would not be tempted to use their devices during class for social networking or internet searching beyond the survey itself. Even though the pen-and-paper method is low-tech, implementing it in real time will be complex.
For example, deciding when to distribute the surveys during class, and how to signal students when to answer, will depend on the lecture structure of the class, the professor's teaching style, and the layout of the classroom.

References

Hektner, J. M., Schmidt, J. A., & Csikszentmihalyi, M. (Eds.). (2006). Experience Sampling Method: Measuring the Quality of Everyday Life. Thousand Oaks, CA: Sage.

Miles, M. B., & Huberman, A. M. (1994). Qualitative Data Analysis: An Expanded Sourcebook (2nd ed.). Thousand Oaks, CA: Sage.

Patton, M. Q. (2002). Qualitative Research & Evaluation Methods (3rd ed.). Thousand Oaks, CA: Sage.

Porter, S. R., & Umbach, P. D. (2006). Student Survey Response Rates across Institutions: Why Do They Vary? Research in Higher Education, 47(2), 229-247. doi: 10.2307/40197408