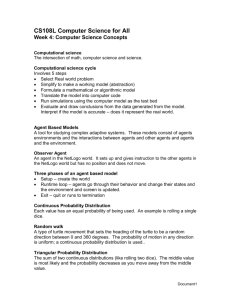

What is Computer Architecture? - Computer Science Department

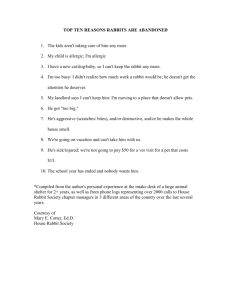


CES 524 Computer Architecture

Fall 2006

W 6 to 9 PM

Salazar Hall 2008

B. Ravikumar (Ravi)

Office: Darwin Hall, 116 I

Office hours: TBA

Text-book

Computer Architecture: A Quantitative Approach

Hennessy and Patterson, 3rd edition

Other references:

• Patterson and Hennessy, Computer Organization and Design: The Hardware/Software Interface. (undergraduate text by the same authors)

• Jordan and Alaghband, Fundamentals of Parallel Processing.

• Hwang, Advanced Computer Architecture. (excellent source on

parallel and pipeline computing)

• I. Koren, Computer Arithmetic Algorithms.

• Survey articles, on-line lecture notes from several sources.

• http://www.cs.utexas.edu/users/dburger/teaching/cs382mf03/homework/papers.html

• http://www.cs.wisc.edu/arch/www/

Class notes and lecture format

• PowerPoint slides, sometimes PDF, PostScript, etc.

• Tablet PC will be used to

• add comments

• draw sketches

• write code

• make corrections etc.

• lecture notes prepared with generous help provided

through the web sites of many instructors:

• Patterson, Berkeley

• Susan Eggers, University of Washington

• Mark Hill, Wisconsin

• Breecher, Clark U

• DeHon, Caltech, etc.

Background expected

• Logic Design

• Combinational logic, sequential logic

• logic design and minimization of circuits

• finite state machines

• Discrete Components-multiplexers, memory units, ALU

• Basic Machine structure

• processor (Data path, control), memory, I/O

• Number representations, computer arithmetic

• Assembly language programming

But if you have not studied some of these topics or don’t

remember, don’t worry! These topics will be reviewed.

Coursework and grading

• Home Work Sets (about 5 sets) 25 points

• Most problems will be assigned from the text. Text has many problems

marked * with solutions at the back. These will be helpful in solving the HW

problems.

• Additional problems at similar level

• Some implementation exercises (mostly C programming)

• Policy on collaboration

• Mid-semester Tests 25 points

• One will be in-class, open book/notes, 75 minutes long

• The other can be take-home or in-class. (discussed later)

• dates will be announced (at least) one week in advance

• all topics discussed until the previous lecture

• Project 15 points

• Semester-long work. Each one chooses one problem to work on.

• Design, implementation, testing etc.

• Report and a presentation

• Final Exam 35 points (take-home?)

Project Examples

• hardware design

e.g. circuit design for dedicated application such as image

processing

• hardware testing

• cache-efficient algorithm design and implementation

• wireless sensor networks

• embedded system design (for those who are doing CES 520)

• Tablet PC hardware study

Overview of the course

• Review of Computer Organization

• Digital logic design

• Computer Arithmetic

• machine/assembly language

• Data and control path design

• Cost performance trade-offs

• Instruction Set Design Principles

• classification of instruction set architectures

• addressing modes

• encoding instructions

• trade-offs/examples/historical overview

• Instruction level parallelism

• overview of pipelining

• superscalar execution

• branch prediction

• dynamic scheduling

• Cache Memory

• cache performance modeling

• main memory technology

• virtual memory

• cache-efficient algorithm design

• external memory data structures, algorithms, applications

• Shared-memory multiprocessor system

• symmetric multiprocessors

• distributed shared-memory machines

• Storage systems

• RAID architecture

• I/O performance measures

• Advanced Topics

• Computer Arithmetic

• alternatives to floating-point

•Design to minimize size, delay etc.

• circuit complexity trade-offs (area, time, energy etc.)

• hardware testing

• Fault-models, fault testing

• model checking

• external memory computation problems

• external memory algorithms

• data structures, applications

• Cache-efficient algorithms

• Nonstandard models

• quantum computing

• bio-computing and neural computing

• Sensor networks

Overview of the course

• Review of Computer Organization

• Digital logic design

[Figure: three panels with inputs w, x, y, z, a decoder, and outputs. (a) Programmable OR gates; (b) Logic equivalent of part a; (c) Programmable read-only memory (PROM).]

Overview of the course

• Review of Computer Organization

• Digital logic design

[Figure: three panels. (a) General programmable combinational logic: inputs feed an AND array (AND plane), followed by an OR array (OR plane) that drives the outputs; (b) PAL: programmable AND array, fixed OR array; (c) PLA: programmable AND and OR arrays. Panel labels include 8-input ANDs, 6-input ANDs, and 4-input ORs.]

Overview of the course

• Review of Computer Organization

• Digital logic design – sequential circuits

State table (each entry is the next state for the given input):

| Current state | Dime | Quarter | Reset |
|---|---|---|---|
| S 00 | S 10 | S 25 | S 00 |
| S 10 | S 20 | S 35 | S 00 |
| S 20 | S 30 | S 35 | S 00 |
| S 25 | S 35 | S 35 | S 00 |
| S 30 | S 35 | S 35 | S 00 |
| S 35 | S 35 | S 35 | S 00 |

S 00 is the initial state

S 35 is the final state

[Figure: state diagram of the same machine, with states S 00, S 10, S 20, S 25, S 30, S 35, a Start arrow into S 00, and transitions labeled Dime, Quarter, and Reset.]

Example of sequential circuit design
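The state table on this slide can be exercised in software. The following is a minimal sketch, not a circuit design: the dictionary layout, the lowercase input names, and the function name `run` are assumptions made for illustration; only the transitions themselves come from the slide.

```python
# Transition table transcribed from the slide: state -> {input: next state}.
TRANSITIONS = {
    "S00": {"dime": "S10", "quarter": "S25", "reset": "S00"},
    "S10": {"dime": "S20", "quarter": "S35", "reset": "S00"},
    "S20": {"dime": "S30", "quarter": "S35", "reset": "S00"},
    "S25": {"dime": "S35", "quarter": "S35", "reset": "S00"},
    "S30": {"dime": "S35", "quarter": "S35", "reset": "S00"},
    "S35": {"dime": "S35", "quarter": "S35", "reset": "S00"},
}


def run(inputs, state="S00"):
    """Apply a sequence of inputs, starting from the initial state S00."""
    for symbol in inputs:
        state = TRANSITIONS[state][symbol]
    return state


if __name__ == "__main__":
    # A dime followed by a quarter reaches the final state S35.
    print(run(["dime", "quarter"]))
```

A table-driven form keeps the software version close to the hardware view: the dictionary plays the role of the next-state logic, and the `state` variable plays the role of the state register.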

Overview of the course

• Review of Computer Organization

• Computer Arithmetic

Overview of the course

• Review of Computer Organization

• machine/assembly language

MIPS instruction set

Overview of the course

• Review of Computer Organization

• Data and control path design

Overview of the course

• Review of Computer Organization

• Cost performance trade-offs

A processor spends 30% of its time on floating-point (flp) addition, 25% on flp multiplication, and 10% on flp division. Evaluate the following enhancements, each costing the same to implement:

a. Redesign of the flp adder to make it twice as fast.

b. Redesign of the flp multiplier to make it three times as fast.

c. Redesign of the flp divider to make it 10 times as fast.
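One way to compare the three options is Amdahl's law, assuming the percentages are fractions of the original execution time and that each enhancement affects only its own operation. The function name `overall_speedup` and the dictionary of options are illustrative choices, not part of the slide.

```python
def overall_speedup(fraction, factor):
    """Amdahl's law: speedup when `fraction` of the time runs `factor` times faster."""
    return 1.0 / ((1.0 - fraction) + fraction / factor)


# (fraction of time affected, speedup of that part) for each option
OPTIONS = {
    "a. adder 2x": (0.30, 2.0),
    "b. multiplier 3x": (0.25, 3.0),
    "c. divider 10x": (0.10, 10.0),
}

if __name__ == "__main__":
    for name, (fraction, factor) in OPTIONS.items():
        print(f"{name}: overall speedup {overall_speedup(fraction, factor):.3f}")
```

Under these assumptions the multiplier redesign gives the largest overall speedup (about 1.20), the adder is close behind (about 1.18), and the divider trails (about 1.10) even though it has the largest local improvement, because division accounts for only 10% of the time.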

Overview of the course

• Instruction Set Design Principles

• classification of instruction set architectures

Overview of the course

• addressing modes

Overview of the course

• Instruction level parallelism

• overview of pipelining

Overview of the course

• overview of pipelining

Hazards

When the same resource is required by two successive instructions executing in different cycles of the pipeline, a hazard occurs.

• read after write

x=y+z

w=x+t

The second instruction’s “read x” part of the cycle has to

wait for the first instruction’s “write x” part of the cycle.
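The read-after-write case can be checked mechanically. The sketch below assumes each instruction is written as a (destination, sources) pair and only looks at adjacent instructions; the representation and the name `raw_hazards` are hypothetical, chosen just to mirror the two-line example above.

```python
def raw_hazards(instructions):
    """Return (producer index, consumer index, name) for each adjacent
    pair where the second instruction reads what the first one writes."""
    hazards = []
    for i in range(len(instructions) - 1):
        dest, _ = instructions[i]
        _, next_sources = instructions[i + 1]
        if dest in next_sources:
            hazards.append((i, i + 1, dest))
    return hazards


# x = y + z ; w = x + t
PROGRAM = [("x", ("y", "z")), ("w", ("x", "t"))]

if __name__ == "__main__":
    print(raw_hazards(PROGRAM))
```

A real pipeline would compare register numbers across several stages rather than only adjacent instructions; this version is limited to the situation the slide describes.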

Spring 2003

CSE P548


• branch prediction

x = y + z;

if (x > 0)

y = y + 1;

else z = z +1;

The instruction after x = y + z can only be determined after x has

been computed.

x = 1;

while (x < 100) do

{

sum = sum + x;

x = x + 1;

}

The instruction after x = x + 1 will be almost always sum = sum + x

(99 out of 100 times), so if we know that the instruction is in a loop,

we predict that the loop is executed and we will be correct most of the

time.
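The loop example can be simulated to count how often a "loop continues" prediction is right. This is a sketch under one stated assumption: the branch being predicted is the loop test `x < 100`, evaluated once per pass including the final failing test. The function names are illustrative.

```python
def loop_test_outcomes(limit=100):
    """Record the outcome of the test 'x < limit' each time it is evaluated."""
    outcomes = []
    x = 1
    while True:
        continues = x < limit
        outcomes.append(continues)
        if not continues:
            break
        x += 1
    return outcomes


def predict_loop_continues(outcomes):
    """Static prediction 'the loop continues': return (correct, total)."""
    correct = sum(1 for continues in outcomes if continues)
    return correct, len(outcomes)


if __name__ == "__main__":
    correct, total = predict_loop_continues(loop_test_outcomes())
    print(f"correct predictions: {correct} of {total}")
```

With the loop bounds from the slide, the test is evaluated 100 times and the prediction is wrong only on the last one, which matches the 99 out of 100 figure quoted above.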

• Cache Memory

• cache performance modeling

• main memory technology

• virtual memory

• cache-efficient algorithm design

• external memory data structures, algorithms, applications


Hitting the Memory Wall

[Figure: relative performance (log scale, 1 to 10^6) of processor and memory versus calendar year, 1980 to 2010.]

Fig. 17.8 Memory density and capacity have grown along with the

CPU power and complexity, but memory speed has not kept pace.


Focus areas

• instruction set architecture, review of computer organization

• instruction-level parallelism (chapters 3 and 4)

• computer arithmetic (Appendix)

• cache-performance analysis and modeling (chapter 5)

• multiprocessor architecture (chapter 7)

• interconnection networks (chapter 8)

• cache-efficient algorithm design

• code optimization and advanced compilation techniques

• FPGA and reconfigurable computing

History of Computer Architecture

The abacus is probably more than 3000 years old.

The abacus is prepared for use by placing it flat on a table or one's lap and pushing all the

beads on both the upper and lower decks away from the beam. The beads are manipulated

with either the index finger or the thumb of one hand.

The abacus is still in use today by shopkeepers in Asia. The use of the abacus is still taught

in Asian schools, and some schools in the West. Visually impaired children are taught to

use the abacus where their sighted counterparts would be taught to use paper and pencil to

perform calculations. One particular use for the abacus is teaching children simple

mathematics and especially multiplication; the abacus is an excellent substitute for rote

memorization of multiplication tables, a particularly detestable task for young children.

History of Computer Architecture

• Mechanical devices for controlling complex operations have been in existence since at least the 1500s. (The first ones were rotating pegged cylinders in musical boxes.)

The medieval development of camshafts has proven to be of immense technological significance. It

allowed the budding medieval industry to transform the rotating movement of waterwheels and

windmills into the movements that could be used for the hammering of ore, the sawing of wood and

the manufacturing of paper.

• Pascal developed a mechanical calculator to help in tax work.

• Pascal's calculator contains eight dials that connect to a drum, with a

linkage that causes a dial to rotate one notch when a carry is produced

from a dial in a lower position.

• Some of Pascal's adding machines which he started to build in 1642,

still exist today.

Pascal began work on his calculator in 1642, when he was only 19 years old.

He had been assisting his father, who worked as a tax commissioner, and

sought to produce a device which could reduce some of his workload. By 1652

Pascal had produced fifty prototypes and sold just over a dozen machines, but

the cost and complexity of the Pascaline – combined with the fact that it

could only add and subtract, and the latter with difficulty – was a barrier to

further sales, and production ceased in that year. By that time Pascal had

moved on to other pursuits, initially the study of atmospheric pressure, and

later philosophy.

The Pascaline was a decimal machine. This proved to be a liability, however, as the contemporary French

currency system was not decimal. It was instead similar to the Imperial pounds ("livres"), shillings ("sols")

and pence ("deniers") in use in Britain until the 1970s, and necessitated that the user perform further

calculations if the Pascaline was to be used for its intended purposes, as a currency calculator.

In 1799 France changed to a metric system, by which time Pascal's basic design had inspired other

craftsmen, although with a similar lack of commercial success. Child prodigy Gottfried Wilhelm von Leibniz

produced a competing design, the Stepped Reckoner, in 1672 which could perform addition, subtraction,

multiplication and division, but calculating machines did not become commercially viable until the early

19th century, when Charles Xavier Thomas de Colmar's Arithmometer, itself based on Von Leibniz's design,

was commercially successful.

• In the 1800s Babbage built a computational device called the difference engine.

• This machine had features seen in modern computers- means for reading input

data, storing data, performing calculations, producing output and automatically

controlling the operations of the machine.

Difference engines were forgotten and then rediscovered in 1822 by Charles

Babbage, who proposed it in a paper to the Royal Astronomical Society

entitled "Note on the application of machinery to the computation of very

big mathematical tables." This machine used the decimal number system

and was powered by cranking a handle. The British government initially

financed the project, but withdrew funding when Babbage repeatedly asked

for more money whilst making no apparent progress on building the

machine. Babbage went on to design his much more general analytical

engine but later produced an improved difference engine design (his

"Difference Engine No. 2") between 1847 and 1849. Inspired by Babbage's

difference engine plans, Per Georg Scheutz built several difference engines

from 1855 onwards; one was sold to the British government in 1859. Martin

Wiberg improved Scheutz's construction but used his device only for

producing and publishing printed logarithmic tables.

The principle of a difference engine is Newton's method of differences. It may be illustrated with a small example. Consider the quadratic polynomial $p(x) = 2x^2 - 3x + 2$, tabulated in steps of 0.1.

To calculate p(0.5) we use the values from the lowest diagonal. We start with the rightmost column value of 0.04. Then we continue the second column by subtracting 0.04 from 0.16 to get 0.12. Next we continue the first column by taking its previous value, 1.12, and subtracting the 0.12 from the second column. Thus p(0.5) is 1.12 − 0.12 = 1.0. In order to compute p(0.6), we iterate the same algorithm on the p(0.5) values: take 0.04 from the third column, subtract that from the second column's value 0.12 to get 0.08, then subtract that from the first column's value 1.0 to get 0.92, which is p(0.6).
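The method of differences is easy to try in software. The sketch below is an illustration, not a model of Babbage's mechanism: it uses signed forward differences, so every step is an addition (the text above subtracts positive differences, which is equivalent for this decreasing stretch of the polynomial). Exact fractions are used to avoid floating-point rounding; the function names are assumptions.

```python
from fractions import Fraction


def p(x):
    """The example polynomial p(x) = 2x^2 - 3x + 2."""
    return 2 * x * x - 3 * x + 2


def initial_differences(f, x0, step, degree):
    """Return [f(x0), first difference, second difference, ...] at x0."""
    values = [f(x0 + i * step) for i in range(degree + 1)]
    row = []
    while values:
        row.append(values[0])
        values = [b - a for a, b in zip(values, values[1:])]
    return row


def tabulate(f, x0, step, degree, count):
    """Produce `count` values of f using only additions after setup."""
    row = initial_differences(f, x0, step, degree)
    results = []
    for _ in range(count):
        results.append(row[0])
        # Each column absorbs the column to its right, left to right.
        for i in range(degree):
            row[i] += row[i + 1]
    return results


if __name__ == "__main__":
    table = tabulate(p, Fraction(0), Fraction(1, 10), degree=2, count=7)
    print([float(v) for v in table])
```

For a polynomial of degree 2 the second difference is constant (here 0.04), which is why the engine never needs multiplication once the first row has been set up.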

Babbage’s analytical engine

• Babbage also designed a more sophisticated

machine known as analytical engine which had a

mechanism for branching and a means for

programming using punched cards.

Portion of the mill of the Analytical

Engine with printing mechanism, under

construction at the time of Babbage’s

death.

The designs for the Analytical Engine include almost all the

essential logical features of a modern electronic digital computer.

The engine was programmable using punched cards. It had a ‘store’

where numbers and intermediate results could be held and a

separate ‘mill’ where the arithmetic processing was performed. The

separation of the ‘store’ (memory) and ‘mill’ (central processor) is a

fundamental feature of the internal organisation of modern

computers.

The Analytical Engine could have ‘looped’ (repeated the same sequence of operations a predetermined number of times) and was capable of conditional branching (IF… THEN… statements), i.e., automatically taking alternative courses of action depending on the result of a calculation.

Had it been built it would have needed to be operated by a steam

engine of some kind. Babbage made little attempt to raise funds to

build the Analytical Engine. Instead he continued to work on

simpler and cheaper methods of manufacturing parts and built a

small trial model which was under construction at the time of his

death.

• Analytical engine was never built because technology was not

developed to meet the required standards.

• Ada Lovelace (daughter of the poet Byron) worked with Babbage

to write the earliest computer programs to solve problems on the

analytical engine.

• A version of Babbage's difference engine was actually built by the

Science Museum in London in 1991 and can still be viewed today.

• More than a century passed, until the start of World War II, before the next major development in computing machinery took place.

• German U-boat submarines were causing heavy damage to Allied shipping bound for England.

• The U-boats received communications using a secret code which was implemented by a machine known as ENIGMA.

• The process of encryption used by Enigma had been known for a long time, but decoding was a much harder task.

• Alan Turing, a British mathematician, and others in England built an electromechanical machine to decode the messages sent by ENIGMA.

• The Colossus was a successful code-breaking machine that came out of the same British wartime code-breaking effort.

• Colossus had all the features of an electronic computer.

• Vacuum tubes to store the contents of a paper tape that is fed into the machine.

• Computations took place among the vacuum tubes and programming was performed with plug boards.

• Around the same time as Turing’s efforts, Eckert and Mauchly set out

to create a machine to compute tables of ballistic trajectories for the U.

S. Army.

• The result of their effort was the Electronic Numerical Integrator and

Computer (ENIAC) at Moore School of Engineering at the University of

Pennsylvania.

• ENIAC consisted of 18,000 vacuum tubes, which made up the computing section of the machine. Programming and data entry were performed by setting switches.

• There was no concept of stored program, and there was no central

memory unit.

• But these were not serious limitations since ENIAC was intended

to do special-purpose calculations.

• ENIAC was not ready until the war was over, but it was successfully used for nine years after the war (1946-1955).

• After ENIAC was completed, von Neumann joined Eckert and Mauchly. Together, they worked on a model for a stored-program computer called EDVAC.

• Eckert and Mauchly and von Neumann and Goldstine split up after disputes over credit and differences of opinion.

• But the concept of stored program computer came out of this

collaboration. The term von Neumann architecture is used to denote a

stored program computer.

• Wilkes at Cambridge University built a stored-program computer (EDSAC) that was completed in 1949.

• Atanasoff at Iowa State built a small-scale electronic computer.

His work came to light as part of a lawsuit.

• Another early machine that deserves credit is Konrad Zuse’s machine

in Germany.

• Historical papers (e.g. by Knuth) have been written on the earliest

computer programs ever written on these machines:

• sorting

• generate perfect squares, primes

Comparison of early computers

ENIAC - details

• Decimal (not binary)

• 20 accumulators of 10 digits

• ENIAC used ten-position ring counters to store

digits; each digit used 36 tubes, 10 of which were the

dual triodes making up the flip-flops of the ring counter.

Arithmetic was performed by "counting" pulses with the

ring counters and generating carry pulses if the counter

"wrapped around", the idea being to emulate in

electronics the operation of the digit wheels of a mechanical adding machine.

• Programmed manually by switches

• 18,000 vacuum tubes

• 30 tons

• 15,000 square feet

• 140 kW power consumption

• 5,000 additions per second

ENIAC – ALU, Reliability

• The basic clock cycle was 200 microseconds, or 5,000 cycles per second for operations on

the 10-digit numbers. In one of these cycles, ENIAC could write a number to a register, read

a number from a register, or add/subtract two numbers. A multiplication of a 10-digit

number by a d-digit number (for d up to 10) took d+4 cycles, so a 10- by 10-digit

multiplication took 14 cycles, or 2800 microseconds—a rate of 357 per second. If one of the

numbers had fewer than 10 digits, the operation was faster. Division and square roots took

13(d+1) cycles, where d is the number of digits in the result (quotient or square root). So a

division or square root took up to 143 cycles, or 28,600 microseconds—a rate of 35 per

second. If the result had fewer than ten digits, it was obtained faster.

• By the simple (if expensive) expedient of never turning the machine off, the engineers

reduced ENIAC's tube failures to the acceptable rate of one tube every two days. According to

a 1989 interview with Eckert the continuously failing tubes story was therefore mostly a

myth: "We had a tube fail about every two days and we could locate the problem within 15

minutes."

• In 1954, the longest continuous period of operation without a failure was 116 hours (close to

five days). This failure rate was remarkably low, and stands as a tribute to the precise

engineering of ENIAC.
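The timing rules quoted above for ENIAC (200 microseconds per cycle, d+4 cycles for a multiplication, 13(d+1) cycles for a division or square root) can be turned into a small calculator. This is a sketch that only restates those rules; the function names are illustrative.

```python
CYCLE_MICROSECONDS = 200  # basic ENIAC cycle, as quoted on the slide


def multiply_cycles(d):
    """Cycles to multiply a 10-digit number by a d-digit number (d up to 10)."""
    return d + 4


def divide_cycles(d):
    """Cycles for a division or square root with a d-digit result."""
    return 13 * (d + 1)


def operations_per_second(cycles):
    """Rate for an operation that takes the given number of cycles."""
    return 1_000_000 / (cycles * CYCLE_MICROSECONDS)


if __name__ == "__main__":
    print("additions/s:", operations_per_second(1))
    print("10x10-digit multiplications/s:", operations_per_second(multiply_cycles(10)))
    print("10-digit divisions/s:", operations_per_second(divide_cycles(10)))
```

The same functions show how much faster short operands were: a multiplication by a 2-digit number, for example, needs 6 cycles instead of 14.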

von Neumann machine

• Stored Program concept

• Main memory storing programs and data

• ALU operating on binary data

• Control unit interpreting instructions from memory and executing

• Input and output equipment operated by control unit

• Princeton Institute for Advanced Studies

• IAS

• Completed 1952

Commercial Computers

• 1947 - Eckert-Mauchly Computer Corporation

• BINAC

• UNIVAC I (Universal Automatic Computer)

• Sold for $1 Million.

• A total of 48 machines were built

• US Bureau of Census 1950 calculations

• Became part of Sperry-Rand Corporation

• Late 1950s - UNIVAC II

• Faster

• More memory

IBM and DEC

• Punched-card processing equipment

• Office automation, electric type-writers etc.

• 1953 - the 701

• IBM’s first stored program computer

• Scientific calculations

• 1955 - the 702

• Business applications

• Led to 700/7000 series

• In 1964, IBM invested $5 B to build IBM 360 series

of machines. (main-frame)

• DEC developed PDP series (minicomputers)

Transistors

• Replaced vacuum tubes

• Smaller

• Cheaper

• Less heat dissipation

• Solid State device

• Made from Silicon (Sand)

• Invented 1947 at Bell Labs

• William Shockley, Bardeen and Brattain

Transistor Based Computers

• Second generation machines

• NCR & RCA produced small transistor machines

• IBM 7000

• DEC - 1957

• Produced PDP-1

Microelectronics

• Literally - “small electronics”

• A computer is made up of gates, memory cells and

interconnections

• These can be manufactured on a semiconductor

• e.g. silicon wafer

Generations of Computers

• Vacuum tube - 1946-1957

• Transistor - 1958-1964

• Small scale integration - 1965 on: up to 100 devices on a chip

• Medium scale integration - to 1971: 100-3,000 devices on a chip

• Large scale integration - 1971-1977: 3,000 - 100,000 devices on a chip

• Very large scale integration - 1978 to date: 100,000 - 100,000,000 devices on a chip

• Ultra large scale integration: over 100,000,000 devices on a chip

Introduction

1.1 Introduction

1.2 The Task of a Computer Designer

1.3 Technology and Computer Usage Trends

1.4 Cost and Trends in Cost

1.5 Measuring and Reporting Performance

1.6 Quantitative Principles of Computer Design

1.7 Putting It All Together: The Concept of Memory Hierarchy

What’s Computer Architecture?

The attributes of a [computing] system as seen by the programmer, i.e., the conceptual structure and functional behavior, as distinct from the organization of the data flows and controls, the logic design, and the physical implementation.

Amdahl, Blaauw, and Brooks, 1964


What’s Computer Architecture?

• 1950s to 1960s: the computer architecture course focused mainly on computer arithmetic.

• 1970s to mid 1980s: the computer architecture course focused on instruction set design, especially ISAs appropriate for compilers. (covered in Chapter 2)

• 1990s and later: the computer architecture course covers the design of the CPU, memory system, I/O system, multiprocessors, instruction-level parallelism, etc.

The Task of a Computer Designer

[Figure: the computer designer's cycle, with the stages Evaluate Existing Systems for Bottlenecks, Simulate New Designs and Organizations, and Implement Next Generation System. Labeled inputs: Benchmarks, Workloads, Technology Trends, Implementation Complexity.]

Technology and Computer Usage Trends


When building a Cathedral numerous

very practical considerations need

to be taken into account:

• available materials

• worker skills

• willingness of the client to pay the

price.

Similarly, Computer Architecture is

about working within constraints:

• What will the market buy?

• Cost/Performance

• Tradeoffs in materials and

processes

Trends

Gordon Moore (co-founder of Intel) observed in 1965 that the number of transistors that could be crammed on a chip doubles every year. In 1975 he revised the rate to a doubling about every two years, and growth has CONTINUED at roughly that pace since then. (Moore’s law)

[Figure: Transistors Per Chip (log scale, 1.E+03 to 1.E+08) versus year, 1970 to 2005. Chips plotted: 4004, 8086, 80286, 386, 486, Pentium, Power PC 601, Pentium Pro, Power PC G3, Pentium II, Pentium 3.]

Trends

Processor performance, as measured by the SPEC benchmark has also risen

dramatically.

[Figure: SPEC performance (0 to 5000) versus year, 1987 to 2000. Machines plotted: Sun-4/260, MIPS M/2000, IBM RS/6000, DEC AXP/500, DEC Alpha 4/266, DEC Alpha 5/500, DEC Alpha 21264/600, Alpha 6/833.]

Trends

Memory Capacity (and Cost) have changed dramatically in the last 20 years.

[Figure: memory chip size in bits (log scale, 1000 to 1000000000) versus year, 1970 to 2000.]

| Year | Size (Mb) | Cycle time |
|---|---|---|
| 1980 | 0.0625 | 250 ns |
| 1983 | 0.25 | 220 ns |
| 1986 | 1 | 190 ns |
| 1989 | 4 | 165 ns |
| 1992 | 16 | 145 ns |
| 1996 | 64 | 120 ns |
| 2000 | 256 | 100 ns |

Trends

Based on SPEED, the CPU has increased dramatically, but memory and

disk have increased only a little. This has led to dramatic changes in

architecture, Operating Systems, and Programming practices.

| | Capacity | Speed (latency) |
|---|---|---|
| Logic | 2x in 3 years | 2x in 3 years |
| DRAM | 4x in 3 years | 2x in 10 years |
| Disk | 4x in 3 years | 2x in 10 years |

Growth in Microprocessor performance

• Growth in microprocessor performance since the mid-1980s has been substantially higher than before the 1980s.

• Figure 1.1 shows this trend. Processor performance has increased by a factor of about 1600 in 16 years.

• There are two graphs in this figure. The graph showing a growth rate of about 1.58 per year is the real one; the other, showing a rate of 1.35 per year, is an extrapolation from the time prior to 1980.

• Prior to the 1980s, performance growth was largely technology driven.

• The increase in growth since 1980 is attributable to architectural and organizational ideas.
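The two growth rates can be compared by compounding them over the same 16-year span. This is a back-of-the-envelope sketch; it assumes a constant annual rate, and the function name `compound_growth` is illustrative.

```python
def compound_growth(rate_per_year, years):
    """Total improvement factor after `years` at a constant annual rate."""
    return rate_per_year ** years


if __name__ == "__main__":
    actual = compound_growth(1.58, 16)
    extrapolated = compound_growth(1.35, 16)
    print(f"1.58 per year over 16 years: about {actual:.0f}x")
    print(f"1.35 per year over 16 years: about {extrapolated:.0f}x")
    print(f"ratio between the two curves: about {actual / extrapolated:.1f}x")
```

A rate of 1.58 per year compounds to roughly 1500 over 16 years, the same order as the factor quoted above, while 1.35 per year compounds to only a little over 100. The gap of about an order of magnitude is what the slide attributes to architectural and organizational ideas.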

Performance Trends

Current taxonomy of computer systems

The early computers were just main-frame systems.

Current classification of computer systems is broadly:

• Desktop computing

• Low-end PC’s

• High-end work-stations

• Servers

• High throughput

• Greater reliability (with back-up)

• Embedded computers

• Hand-held devices (video-games, PDA, cell-phones)

• Sensor networks

• appliances

Summary of the three computing classes


Cost of IC

• The wafer is manufactured (typically circular; see Figure 1.8) and the dies are cut.

• tested, packaged and shipped. (all dies are identical.)

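$$\text{Cost of IC} = \frac{\text{Cost of die} + \text{Cost of testing} + \text{Cost of packaging and final test}}{\text{Final test yield}}$$

$$\text{Cost of die} = \frac{\text{Cost of wafer}}{\text{Dies per wafer} \times \text{Die yield}}$$

$$\text{Dies per wafer} = \frac{\pi \times (\text{Wafer radius})^2}{\text{Die area}} - \frac{\pi \times \text{Wafer diameter}}{(2 \times \text{Die area})^{0.5}}$$

Can you explain the correction factor?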
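The cost relations above can be sketched as three small functions. All numbers in the usage section (a 30 cm wafer, a 0.49 cm² die, a $5000 wafer, and the yields and test costs) are made-up inputs for illustration, and the function names are assumptions.

```python
import math


def dies_per_wafer(wafer_diameter, die_area):
    """Wafer area over die area, minus a term for the circular edge."""
    radius = wafer_diameter / 2
    gross = math.pi * radius ** 2 / die_area
    edge_correction = math.pi * wafer_diameter / math.sqrt(2 * die_area)
    return gross - edge_correction


def cost_of_die(wafer_cost, wafer_diameter, die_area, die_yield):
    """Wafer cost spread over the good dies only."""
    return wafer_cost / (dies_per_wafer(wafer_diameter, die_area) * die_yield)


def cost_of_ic(die_cost, testing_cost, packaging_cost, final_test_yield):
    """Die, test, and packaging cost, divided by the final test yield."""
    return (die_cost + testing_cost + packaging_cost) / final_test_yield


if __name__ == "__main__":
    # Illustrative inputs only: lengths in cm, areas in cm^2, costs in dollars.
    die = cost_of_die(wafer_cost=5000, wafer_diameter=30, die_area=0.49, die_yield=0.5)
    print(f"dies per wafer: {dies_per_wafer(30, 0.49):.0f}")
    print(f"cost per good die: ${die:.2f}")
    print(f"cost per IC: ${cost_of_ic(die, 1.0, 2.0, 0.95):.2f}")
```

Varying `die_area` in this sketch is a quick way to see how the second term grows in importance as dies get larger relative to the wafer, which is a useful starting point for the question about the correction factor.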

Distribution of cost in a system

Measuring And Reporting Performance


This section talks about:

1. Metrics: how do we describe in a numerical way the performance of a computer?

2. What tools do we use to find those metrics?

Metrics

| Plane | DC to Paris | Speed | Passengers | Throughput (pmph) |
|---|---|---|---|---|
| Boeing 747 | 6.5 hours | 610 mph | 470 | 286,700 |
| Concorde | 3 hours | 1350 mph | 132 | 178,200 |

• Time to run the task (Exec Time)

• Execution time, response time, latency

• Tasks per day, hour, week, sec, ns … (Performance)

• Throughput, bandwidth

Metrics - Comparisons

"X is n times faster than Y" means

$$\frac{\text{ExTime}(Y)}{\text{ExTime}(X)} = \frac{\text{Performance}(X)}{\text{Performance}(Y)} = n$$

Speed of Concorde vs. Boeing 747

Throughput of Boeing 747 vs. Concorde
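The two comparisons named above can be worked out from the plane table with the "n times faster" definition. This is a small sketch; the helper name `times_faster` and the constant names are illustrative, and the numbers are the ones given in the table.

```python
def times_faster(performance_x, performance_y):
    """n such that X is n times faster than Y: Performance(X) / Performance(Y)."""
    return performance_x / performance_y


# Values from the plane table: speed in mph, throughput in passenger-miles per hour.
BOEING_SPEED, CONCORDE_SPEED = 610, 1350
BOEING_THROUGHPUT, CONCORDE_THROUGHPUT = 286_700, 178_200

if __name__ == "__main__":
    speed_ratio = times_faster(CONCORDE_SPEED, BOEING_SPEED)
    throughput_ratio = times_faster(BOEING_THROUGHPUT, CONCORDE_THROUGHPUT)
    print(f"Concorde is {speed_ratio:.2f} times faster in speed")
    print(f"Boeing 747 has {throughput_ratio:.2f} times the throughput")
```

The Concorde wins on latency (about 2.2 times the speed) while the 747 wins on throughput (about 1.6 times the passenger-miles per hour), which is why the metric has to be stated before declaring one system "faster".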

Metrics - Comparisons

Pat has developed a new product, "rabbit" about which she wishes to determine

performance. There is special interest in comparing the new product, rabbit to

the old product, turtle, since the product was rewritten for performance reasons.

(Pat had used Performance Engineering techniques and thus knew that rabbit

was "about twice as fast" as turtle.) The measurements showed:

Performance Comparisons

| Product | Transactions / second | Seconds / transaction | Seconds to process transaction |
|---|---|---|---|
| Turtle | 30 | 0.0333 | 3 |
| Rabbit | 60 | 0.0166 | 1 |

Which of the following statements reflect the performance comparison of rabbit and turtle?

o Rabbit is 100% faster than turtle.

o Rabbit is twice as fast as turtle.

o Rabbit takes 1/2 as long as turtle.

o Rabbit takes 1/3 as long as turtle.

o Rabbit takes 100% less time than turtle.

o Rabbit takes 200% less time than turtle.

o Turtle is 50% as fast as rabbit.

o Turtle is 50% slower than rabbit.

o Turtle takes 200% longer than rabbit.

o Turtle takes 300% longer than rabbit.

Metrics - Throughput

[Figure: system layers, top to bottom: Application; Programming Language; Compiler; ISA; Datapath; Control; Function Units; Transistors, Wires, Pins.]

Throughput metrics shown beside the layers, top to bottom:

• Answers per month

• Operations per second

• (millions) of Instructions per second: MIPS

• (millions) of (FP) operations per second: MFLOP/s

• Megabytes per second

• Cycles per second (clock rate)

Methods For Predicting Performance

• Benchmarks

• Hardware: Cost, delay, area, power estimation

• Simulation (many levels)

• ISA, RT, Gate, Circuit

• Queuing Theory

• Rules of Thumb

• Fundamental “Laws”/Principles

• Trade-offs

Execution time

Execution time can be defined in different ways:

• wall-clock time, response time or elapsed time: latency to

complete the task. (includes disk accesses, memory accesses,

input/output activities etc.), including all possible overheads.

• CPU time: does not include the I/O, and other overheads.

• user CPU time: execution of application tasks

• system CPU time: execution of system tasks

Throughput vs. efficiency

Benchmarks

SPEC: System Performance Evaluation Cooperative


First Round 1989

• 10 programs yielding a single number (“SPECmarks”)

Second Round 1992

• SPECint92 (6 integer programs) and SPECfp92 (14 floating point programs)

• Compiler flags unlimited. Flags reported in March 93 for the DEC 4000 Model 610:

spice: unix.c:/def=(sysv,has_bcopy,"bcopy(a,b,c)=memcpy(b,a,c)"

wave5: /ali=(all,dcom=nat)/ag=a/ur=4/ur=200

nasa7: /norecu/ag=a/ur=4/ur2=200/lc=blas

Third Round 1995

• New set of programs: SPECint95 (8 integer programs) and SPECfp95 (10 floating point programs)

• “benchmarks useful for 3 years”

• Single flag setting for all programs: SPECint_base95, SPECfp_base95

Benchmarks

CINT2000 (Integer Component of SPEC CPU2000):

Program        Language   What Is It
164.gzip       C          Compression
175.vpr        C          FPGA Circuit Placement and Routing
176.gcc        C          C Programming Language Compiler
181.mcf        C          Combinatorial Optimization
186.crafty     C          Game Playing: Chess
197.parser     C          Word Processing
252.eon        C++        Computer Visualization
253.perlbmk    C          PERL Programming Language
254.gap        C          Group Theory, Interpreter
255.vortex     C          Object-oriented Database
256.bzip2      C          Compression
300.twolf      C          Place and Route Simulator

http://www.spec.org/osg/cpu2000/CINT2000/

Benchmarks

CFP2000 (Floating Point Component of SPEC CPU2000):

Program        Language     What Is It
168.wupwise    Fortran 77   Physics / Quantum Chromodynamics
171.swim       Fortran 77   Shallow Water Modeling
172.mgrid      Fortran 77   Multi-grid Solver: 3D Potential Field
173.applu      Fortran 77   Parabolic / Elliptic Differential Equations
177.mesa       C            3-D Graphics Library
178.galgel     Fortran 90   Computational Fluid Dynamics
179.art        C            Image Recognition / Neural Networks
183.equake     C            Seismic Wave Propagation Simulation
187.facerec    Fortran 90   Image Processing: Face Recognition
188.ammp       C            Computational Chemistry
189.lucas      Fortran 90   Number Theory / Primality Testing
191.fma3d      Fortran 90   Finite-element Crash Simulation
200.sixtrack   Fortran 77   High Energy Physics Accelerator Design
301.apsi       Fortran 77   Meteorology: Pollutant Distribution

Benchmarks

Sample Results For SPECint2000

http://www.spec.org/osg/cpu2000/results/res2000q3/cpu2000-20000718-00168.asc

Intel OR840 (1 GHz Pentium III processor)

                    Base       Base       Base     Peak       Peak       Peak
Benchmarks          Ref Time   Run Time   Ratio    Ref Time   Run Time   Ratio
164.gzip            1400       277        505*     1400       270        518*
175.vpr             1400       419        334*     1400       417        336*
176.gcc             1100       275        399*     1100       272        405*
181.mcf             1800       621        290*     1800       619        291*
186.crafty          1000       191        522*     1000       191        523*
197.parser          1800       500        360*     1800       499        361*
252.eon             1300       267        486*     1300       267        486*
253.perlbmk         1800       302        596*     1800       302        596*
254.gap             1100       249        442*     1100       248        443*
255.vortex          1900       268        710*     1900       264        719*
256.bzip2           1500       389        386*     1500       375        400*
300.twolf           3000       784        382*     3000       776        387*
SPECint_base2000                          438
SPECint2000                                                              442
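The two summary figures in these results (438 base, 442 peak) are geometric means of the twelve per-benchmark ratios, and each ratio is the reference time divided by the run time, scaled by 100. The sketch below recomputes them from the numbers listed above; the function names are chosen for this example. Because the listed run times and ratios are rounded, the recomputed values agree with the reported ones only approximately.

```python
import math

# Ratios copied from the sample results above (asterisks dropped).
base_ratios = [505, 334, 399, 290, 522, 360, 486, 596, 442, 710, 386, 382]
peak_ratios = [518, 336, 405, 291, 523, 361, 486, 596, 443, 719, 400, 387]

def spec_ratio(ref_time, run_time):
    """Per-benchmark ratio: reference time over measured time, times 100."""
    return 100.0 * ref_time / run_time

def geometric_mean(values):
    """n-th root of the product, computed via logarithms for stability."""
    return math.exp(sum(math.log(v) for v in values) / len(values))

print(f"164.gzip base ratio: {spec_ratio(1400, 277):.1f}")
print(f"base geometric mean: {geometric_mean(base_ratios):.1f}")
print(f"peak geometric mean: {geometric_mean(peak_ratios):.1f}")
```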

Benchmarks

Performance Evaluation

• “For better or worse, benchmarks shape a field”

• Good products are created when we have:

• Good benchmarks

• Good ways to summarize performance

• Since sales are in part a function of performance relative to the competition, companies invest in improving the product as reported by the performance summary

• If the benchmarks or the summary are inadequate, then the choice is between improving the product for real programs and improving the product to get more sales; sales almost always wins!

• Execution time is the measure of computer performance!

Benchmarks

How to Summarize Performance

Management would like to have one number.

Technical people want more:

1. They want to have evidence of reproducibility: there should be enough information so that you or someone else can repeat the experiment.

2. There should be consistency when doing the measurements multiple times.

How would you report these results?

                     Computer A   Computer B   Computer C
Program P1 (secs)    1            10           20
Program P2 (secs)    1000         100          20
Total Time (secs)    1001         110          40
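One way to explore the question is to compute several candidate summaries and see where they disagree. The sketch below is an illustration under assumptions, not the answer the slides intend: it compares total time with the geometric mean of times normalized to a reference machine, and all names in it are invented for this example.

```python
import math

# Execution times in seconds, copied from the table above.
times = {
    "A": {"P1": 1.0, "P2": 1000.0},
    "B": {"P1": 10.0, "P2": 100.0},
    "C": {"P1": 20.0, "P2": 20.0},
}

def total_time(machine):
    """Sum of execution times: tracks what a user running both programs waits."""
    return sum(times[machine].values())

def normalized_geometric_mean(machine, reference):
    """Geometric mean of per-program times relative to a reference machine."""
    ratios = [times[machine][p] / times[reference][p] for p in times[machine]]
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))

for machine in times:
    print(
        machine,
        "total:", total_time(machine),
        "geo. mean vs A:", round(normalized_geometric_mean(machine, "A"), 3),
    )
```

With A as the reference, the geometric mean rates A and B as equal, while total time shows B finishing the two programs roughly nine times sooner than A. The choice of summary changes the conclusion, which is why the raw times should be reported alongside any single number.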

Quantitative Principles of Computer Design

1.1 Introduction

1.2 The Task of a Computer Designer

1.3 Technology and Computer Usage Trends

1.4 Cost and Trends in Cost

1.5 Measuring and Reporting Performance

1.6 Quantitative Principles of Computer Design

1.7 Putting It All Together: The Concept of Memory Hierarchy

Make the common case fast.

Amdahl’s Law: Relates total speedup of a system to the speedup of some portion of that system.

Quantitative Design

Amdahl's Law

Speedup due to enhancement E:

\[
\mathrm{Speedup}(E) = \frac{\text{Execution Time Without Enhancement}}{\text{Execution Time With Enhancement}} = \frac{\text{Performance With Enhancement}}{\text{Performance Without Enhancement}}
\]

[Figure: task execution time with the enhanced fraction marked "This fraction enhanced"]

Suppose that enhancement E accelerates a fraction F of the task by a factor S, and the remainder of the task is unaffected.

Quantitative Design - Amdahl’s law

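\[
\mathrm{ExTime}_{new} = \mathrm{ExTime}_{old} \times \left[ (1 - \mathrm{Fraction}_{enhanced}) + \frac{\mathrm{Fraction}_{enhanced}}{\mathrm{Speedup}_{enhanced}} \right]
\]

\[
\mathrm{Speedup}_{overall} = \frac{\mathrm{ExTime}_{old}}{\mathrm{ExTime}_{new}} = \frac{1}{(1 - \mathrm{Fraction}_{enhanced}) + \dfrac{\mathrm{Fraction}_{enhanced}}{\mathrm{Speedup}_{enhanced}}}
\]

[Figure: ExTime_old and ExTime_new bars with the enhanced fraction marked "This fraction enhanced"]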

Quantitative Design -Amdahl's Law

• Floating point instructions are improved to run 2X faster, but only 10% of actual instructions are FP

\[
\mathrm{ExTime}_{new} = \mathrm{ExTime}_{old} \times (0.9 + 0.1/2) = 0.95 \times \mathrm{ExTime}_{old}
\]

\[
\mathrm{Speedup}_{overall} = \frac{1}{0.95} = 1.053
\]
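The calculation above is easy to wrap in a small function. This is a minimal sketch of Amdahl's Law as stated on the preceding slides; the function name `amdahl_speedup` is chosen for this example. Like the slide, it treats the 10% as the fraction of execution time spent in floating point.

```python
def amdahl_speedup(fraction_enhanced, speedup_enhanced):
    """Overall speedup when a fraction of the time is sped up by a given factor."""
    if not 0.0 <= fraction_enhanced <= 1.0:
        raise ValueError("fraction_enhanced must be between 0 and 1")
    if speedup_enhanced <= 0.0:
        raise ValueError("speedup_enhanced must be positive")
    # New time relative to old: untouched part plus the accelerated part.
    relative_new_time = (1.0 - fraction_enhanced) + fraction_enhanced / speedup_enhanced
    return 1.0 / relative_new_time

# The slide's example: 10% of the time is FP, and FP runs 2X faster.
fp_example = amdahl_speedup(0.10, 2.0)

# Even an enormous FP speedup is capped near 1 / (1 - 0.10).
fp_limit = amdahl_speedup(0.10, 1e12)

print(f"FP 2X faster:          {fp_example:.3f}")
print(f"FP almost free:        {fp_limit:.3f}")
```

The second call illustrates the usual reading of the law: the unenhanced 90% bounds the overall speedup at about 1.11 no matter how fast the floating point unit becomes.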

Quantitative Design

Cycles Per Instruction

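\[
\mathrm{CPI} = \frac{\text{CPU Time} \times \text{Clock Rate}}{\text{Instruction Count}} = \frac{\text{Cycles}}{\text{Instruction Count}}
\]

\[
\text{CPU Time} = \text{Cycle Time} \times \sum_{i=1}^{n} \mathrm{CPI}_i \times I_i
\]

“Instruction Frequency”

\[
\mathrm{CPI} = \sum_{i=1}^{n} \mathrm{CPI}_i \times F_i \quad \text{where} \quad F_i = \frac{I_i}{\text{Instruction Count}}
\]

Here I_i is the number of instructions of type i.

Invest Resources where time is Spent!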

Quantitative Design

Suppose we have a machine where we can count the frequency with which instructions are executed. We also know how many cycles it takes for each instruction type.

Base Machine (Reg / Reg)

Op          Freq    Cycles    CPI(i)    (% Time)
ALU         50%     1         .5        (33%)
Load        20%     2         .4        (27%)
Store       10%     2         .2        (13%)
Branch      20%     2         .4        (27%)
Total CPI                     1.5

How do we get CPI(i)?

How do we get %time?
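One way to answer the two questions: each CPI(i) entry is the instruction frequency times its cycle count, the total CPI is the sum of those entries, and each %time is an entry divided by the total. The sketch below reproduces the table under that reading; the names `instruction_mix` and `cpi_breakdown` are invented for this example.

```python
# (frequency, cycles) per instruction class, copied from the table above.
instruction_mix = {
    "ALU": (0.50, 1),
    "Load": (0.20, 2),
    "Store": (0.10, 2),
    "Branch": (0.20, 2),
}

def cpi_breakdown(mix):
    """Return per-class CPI contributions, the total CPI, and percent of time."""
    contributions = {op: freq * cycles for op, (freq, cycles) in mix.items()}
    total_cpi = sum(contributions.values())
    percent_time = {op: 100.0 * c / total_cpi for op, c in contributions.items()}
    return contributions, total_cpi, percent_time

contributions, total_cpi, percent_time = cpi_breakdown(instruction_mix)

for op in instruction_mix:
    print(f"{op:<7} CPI(i) = {contributions[op]:.1f}   time = {percent_time[op]:.0f}%")
print(f"Total CPI = {total_cpi:.1f}")
```

The %time column is what "invest resources where time is spent" refers to: loads and branches together account for more than half of the cycles even though they are only 40% of the instructions.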

Quantitative Design

Locality of Reference

Programs access a relatively small portion of the address space at any instant of time.

There are two different types of locality:

Temporal Locality (locality in time): If an item is referenced, it will tend to be referenced again soon (loops, reuse, etc.)

Spatial Locality (locality in space/location): If an item is referenced, items whose addresses are close by tend to be referenced soon (straight line code, array access, etc.)
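Spatial locality can be made concrete by counting how often consecutive array accesses fall in different memory blocks. The sketch below is a toy model under stated assumptions, not a cache simulator: it assumes a two-dimensional array stored row by row, 8-byte elements, and 64-byte blocks, and the function name `block_switches` is invented for this example.

```python
def block_switches(rows, cols, order, elem_size=8, block_size=64):
    """Count consecutive accesses that land in a different block.

    The array is assumed to be laid out row by row starting at address 0.
    order is "row" (walk along each row) or "column" (walk down each column).
    """
    if order == "row":
        indices = [(r, c) for r in range(rows) for c in range(cols)]
    elif order == "column":
        indices = [(r, c) for c in range(cols) for r in range(rows)]
    else:
        raise ValueError("order must be 'row' or 'column'")

    switches = 0
    previous_block = None
    for r, c in indices:
        address = (r * cols + c) * elem_size   # byte address of element (r, c)
        block = address // block_size          # block that holds that address
        if previous_block is not None and block != previous_block:
            switches += 1
        previous_block = block
    return switches

row_walk = block_switches(8, 8, "row")
column_walk = block_switches(8, 8, "column")
print("row-order block switches:   ", row_walk)
print("column-order block switches:", column_walk)
```

Walking along rows touches neighboring addresses and stays within a block for eight accesses at a time; walking down columns jumps a full row each step and changes block on every access.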

The Concept of Memory Hierarchy


Fast memory is expensive.

Slow memory is cheap.

The goal is to minimize the price/performance for a particular price point.

Memory Hierarchy

                        Registers         Level 1 cache   Level 2 Cache   Memory          Disk
Typical Size            4 - 64            <16K bytes      <2 Mbytes       <16 Gigabytes   >5 Gigabytes
Access Time             1 nsec            3 nsec          15 nsec         150 nsec        5,000,000 nsec
Bandwidth (in MB/sec)   10,000 - 50,000   2000 - 5000     500 - 1000      500 - 1000      100
Managed By              Compiler          Hardware        Hardware        OS              OS/User

Memory Hierarchy

• Hit: data appears in some block in the upper level (example: Block X)

• Hit Rate: the fraction of memory accesses found in the upper level

• Hit Time: Time to access the upper level, which consists of RAM access time + Time to determine hit/miss

• Miss: data needs to be retrieved from a block in the lower level (Block Y)

• Miss Rate = 1 - (Hit Rate)

• Miss Penalty: Time to replace a block in the upper level + Time to deliver the block to the processor

• Hit Time << Miss Penalty (500 instructions on 21264!)
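These terms are commonly combined into an average memory access time: hit time plus miss rate times miss penalty. The slides do not state that formula at this point, so the sketch below is offered as a standard way of tying the definitions together; the function name and the 95% hit rate are illustrative assumptions, while the 3 nsec and 150 nsec figures are taken from the hierarchy table earlier in these notes.

```python
def average_access_time(hit_time, hit_rate, miss_penalty):
    """Average access time = hit time + miss rate * miss penalty."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    miss_rate = 1.0 - hit_rate          # Miss Rate = 1 - (Hit Rate)
    return hit_time + miss_rate * miss_penalty

# Illustrative only: 3 nsec hit time, assumed 95% hit rate, 150 nsec penalty.
example_nsec = average_access_time(hit_time=3.0, hit_rate=0.95, miss_penalty=150.0)
print(f"average access time: {example_nsec:.1f} nsec")
```

Because the miss penalty is so much larger than the hit time, even a 5% miss rate in this example more than triples the average access time.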

Memory Hierarchy

[Figure: memory hierarchy: Registers, Level 1 cache, Level 2 Cache, Memory, Disk]

What is the cost of executing a program if:

• Stores are free (there’s a write pipe)

• Loads are 20% of all instructions

• 80% of loads hit (are found) in the Level 1 cache

• 97% of loads hit in the Level 2 cache.
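The question leaves several things open, so the sketch below is one possible reading rather than the intended answer. It assumes that the last figure means 97% of all loads are satisfied by Level 1 or Level 2 (so 17% are served by Level 2 and 3% go to memory), that a load costs only the access time of the level that serves it, and that the access times are the 3, 15 and 150 nsec figures from the hierarchy table earlier in these notes. All names are invented for this example.

```python
# Access times in nsec, taken from the memory hierarchy table (assumed to apply here).
L1_TIME_NSEC = 3.0
L2_TIME_NSEC = 15.0
MEMORY_TIME_NSEC = 150.0

LOAD_FRACTION = 0.20   # loads are 20% of all instructions; stores are free

def average_load_time_nsec(l1_hit=0.80, l2_cumulative_hit=0.97):
    """Average time per load, assuming the L2 figure is cumulative over all loads."""
    served_by_l1 = l1_hit
    served_by_l2 = l2_cumulative_hit - l1_hit
    served_by_memory = 1.0 - l2_cumulative_hit
    return (
        served_by_l1 * L1_TIME_NSEC
        + served_by_l2 * L2_TIME_NSEC
        + served_by_memory * MEMORY_TIME_NSEC
    )

avg_load_nsec = average_load_time_nsec()
load_time_per_instruction_nsec = LOAD_FRACTION * avg_load_nsec

print(f"average time per load:        {avg_load_nsec:.2f} nsec")
print(f"load time per instruction:    {load_time_per_instruction_nsec:.2f} nsec")
```

Under these assumptions the 3% of loads that reach memory contribute almost half of the average load time, which is the usual motivation for adding cache levels.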

Wrap Up

1.1 Introduction

1.2 The Task of a Computer Designer

1.3 Technology and Computer Usage Trends

1.4 Cost and Trends in Cost

1.5 Measuring and Reporting Performance

1.6 Quantitative Principles of Computer Design

1.7 Putting It All Together: The Concept of Memory Hierarchy