Defining SoTL: Scholarship of Teaching and Learning

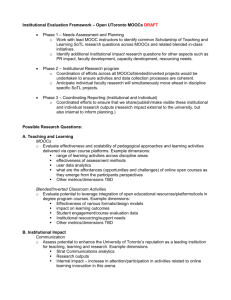

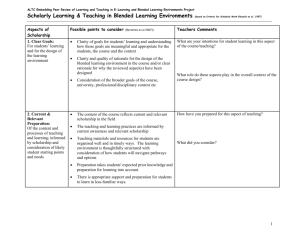

MAINTAINING QUALITY IN BLENDED LEARNING: FROM CLASSROOM ASSESSMENT TO IMPACT EVALUATION
PART II: IMPACT EVALUATION
Patsy Moskal
(407) 823-0283
pdmoskal@mail.ucf.edu
http://rite.ucf.edu

DEFINING SOTL

SCHOLARSHIP OF TEACHING AND LEARNING (SOTL)
- Scholarly research on effective teaching and student learning
- Ernest Boyer, 1990, Scholarship Reconsidered
- Carnegie Academy for the Scholarship of Teaching and Learning
- Scholarship Assessed (1997), Charles Glassick, Mary Taylor Huber, and Gene Maeroff

NATIONAL ORGANIZATIONS DEVOTED TO THE SCHOLARSHIP OF TEACHING & LEARNING
- International Society for the Scholarship of Teaching & Learning
- American Association for Higher Education & Accreditation
- Carnegie Academy for the Scholarship of Teaching & Learning
- The Carnegie Foundation for the Advancement of Teaching

MOTIVATION FOR SOTL
- Research new instructional methods or classroom changes for improvement
- Provides opportunities for publication and presentation
- Tenure and promotion
- Supporting data for accreditation, grant proposals, etc…

JOURNALS DEVOTED TO SOTL
- Journal of Scholarship of Teaching & Learning
- Teaching in Higher Education
- New Directions for Teaching & Learning
- Journal on Excellence for Teaching and Learning
- Achieving Learning in Higher Education

DISCIPLINE SPECIFIC JOURNALS
- Journal of Education for Business
- American Biology Teacher
- Journal of Research in Science Teaching
- Studies in Art Education
- Teaching and Learning in Medicine
- Journal of Nursing Information
- Arts and Humanities in Higher Education

HOW TO ACCOMPLISH SOTL

CHALLENGES IN COMPLETING SOTL RESEARCH
- Faculty lack of expertise in research/stats
- Lack of time, resources
- Minimize class disruption
- Challenges to designing research

THE ALICE IN WONDERLAND APPROACH TO ASSESSMENT
“Would you tell me, please, which way I ought to go from here?” said Alice.
“That depends a good deal on where you want to get to,” said the Cat.
“I don’t much care where—” said Alice.
“Then it doesn’t matter which way you go,” said the Cat.
“—so long as I get somewhere,” Alice added.
“Oh, you’re sure to do that,” said the Cat, “if you only walk around long enough.”
--Lewis Carroll

PRINCIPLES THAT GUIDE OUR EVALUATION
- Evaluation must be objective.
- Evaluation must conform to the culture.
- Uncollected data cannot be analyzed.
- Data do not equal information.
- Qualitative and quantitative approaches must complement each other.
- Evaluation must show an impact.
- Results may not be generalized.

THE KEY TO SUCCESSFULLY ACCOMPLISHING SOTL…
1. Clear Goals
2. Adequate Preparation
3. Appropriate Methods
4. Significant Results
5. Effective Presentation
6. Reflective Critique

(Glassick, Huber, Maeroff, 1997)

CLEAR GOALS
- Do you have a clear goal?
- Is your goal doable?

THE K.I.S.S. PRINCIPLE OF ASSESSMENT DESIGN
- Keep It Simple and Straightforward!
- A simple, doable design is better than a complex, impossible design that is never completed!

HITTING THE TARGET…
- What do you want to know?
- You must clearly define your questions.
- And, if your data doesn’t answer your questions… what’s the point?!

ADEQUATE PREPARATION
- Have you looked at the literature?
- Can you do your study?
- If you need help, can you get support?

FINDING SOURCES OF HELP
- Faculty development center
- Institutional research
- Office of Assessment
- Statistics or research folks
- Content experts
- Other researchers

APPROPRIATE METHODS
- Do you have data or can you get it?
- Do your methods “fit” your goal and objectives?
- Be prepared to rewind and repeat!

SOME ASSESSMENT/EVALUATION TOOLS
- Surveys
- Focus groups
- Course-based performance
- Observations
- Tests/exams
- Pre-collected data
- E-portfolios
- Rubrics

SIGNIFICANT RESULTS
- Did you achieve your objectives?
- Does this work inform and add to the field?

STATISTICALLY OR PRACTICALLY SIGNIFICANT??
- Don’t let the statistics run your design!
- Statistically significant may not be practically significant.
- What about the random sample?
- Quantitative and qualitative approaches must complement each other

SOME EXAMPLES: SURVEYS

SURVEY PROS AND CONS
PROS
- Easy to administer
- Electronic is possible
- Researcher’s questions
- Can tell you “what”
- Open ended can tell you more
- Can look at demographics

CONS
- Student opinions
- Low response rate
- Timing can impact results
- Wording of questions is important
- “Over surveyed” students

STUDENT SATISFACTION IN BLENDED COURSES
Percent (N = 36,801)
- Very Satisfied: 49%
- Satisfied: 28%
- Neutral: 17%
- Unsatisfied: 6%
- Very Unsatisfied: 2%

STUDENTS’ POSITIVE PERCEPTIONS ABOUT BLENDED LEARNING
- Convenience
- Reduced Logistic Demands
- Increased Learning Flexibility
- Technology Enhanced Learning
- Reduced Opportunity Costs for Education

LESS POSITIVES WITH BLENDED LEARNING
- Reduced Face-to-Face Time
- Technology Problems
- Reduced Instructor Assistance
- Overwhelming
- Increased Workload
- Increased Opportunity Costs for Education

STUDENT SATISFACTION IN FULLY ONLINE AND BLENDED COURSES

| Percent | Fully online (N = 67,433) | Blended (N = 36,801) |
| --- | --- | --- |
| Very Satisfied | 47% | 49% |
| Satisfied | 28% | 28% |
| Neutral | 16% | 17% |
| Unsatisfied | 6% | 6% |
| Very Unsatisfied | 3% | 2% |

SOME EXAMPLES: PRE-EXISTING DATA

PRE-EXISTING DATA PROS AND CONS
PROS
- Already collected
- May have longitudinal data
- Often in electronic spreadsheet

CONS
- Someone else decided what to collect
- May not be in a form of your choosing
- Requires permission and obtaining from others

STUDENT SUCCESS AND WITHDRAWAL

SUCCESS RATES BY MODALITY, SPRING 09 THROUGH SPRING 10
Chart (percent): F2F (n = 456,125), Blended (n = 30,361), Fully Online (n = 83,274)

WITHDRAWAL RATES BY MODALITY, SPRING 09 THROUGH SPRING 10
Chart (percent): F2F (n = 456,125), Blended (n = 30,361), Fully Online (n = 83,274)

STUDENT EVALUATION OF INSTRUCTION (SEI)

A decision rule for the probability of a faculty member receiving an overall rating of Excellent (n = 1,280,890)
If... (items rated on the scale Excellent, Very Good, Good, Fair, Poor)
- Facilitation of learning
- Communication of ideas
- Respect and concern for students

Then...
- The probability of an overall rating of Excellent = .97
- The probability of an overall rating of Fair or Poor = .00

A COMPARISON OF EXCELLENT RATINGS BY COURSE MODALITY--UNADJUSTED AND ADJUSTED FOR INSTRUCTORS SATISFYING RULE 1 (N = 1,171,664)

| Course Modality | Overall % Excellent | If Rule 1 % Excellent |
| --- | --- | --- |
| Blended | 48.9 | 97.2 |
| Online | 47.6 | 97.3 |
| Enhanced | 46.8 | 97.5 |
| F2F | 45.7 | 97.2 |
| ITV | 34.2 | 96.6 |

EFFECTIVE PRESENTATION
- Did you remember your objectives?
- Did you remember your audience?
- Did you present your message in a clear, understandable manner?

DATA DO NOT EQUAL INFORMATION…
- Data, by itself, answers no questions and is nothing more than a bunch of numbers and/or letters.
- How you interpret the data for others can determine how well they understand.
- Visuals are good!
- Ongoing assessment is best.

REFLECTIVE CRITIQUE
- Did you critique your own work?
- What worked well and what didn’t work?
- Where do you go from here?

EVALUATION CYCLE
1. Define your question(s).
2. Determine methods that can answer question(s).
3. Implement methods, gather, and analyze data.
4. Interpret results – did you answer your question?
5. Make decisions based on results.

ADDITIONAL THINGS TO CONSIDER

SOME ISSUES TO PONDER WITH DATA
- Uncollected data cannot be analyzed!
- Data is not always “clean” and can require work
- Look for data you already have

TACKLING IRB
- What is it?
- Training may be required
- A MUST if you want to publish or present
- Don’t be intimidated!

UNEXPECTED ISSUES
- Technology challenges
- People challenges
- No response
- Dirty data

MAKE AN IMPACT WITH YOUR ASSESSMENT…
The final step in assessment (or evaluation) should be determining how your results can impact decisions for the future.

EXTENDING SOTL TO PROGRAM RESEARCH AND BEYOND

IMPACT EVALUATION AS A MATTER OF SCALE
Figure labels: Level (Institution, College, Department, Program, Individual); Opportunity Costs (A little, A lot); Few, Many
There is added value at every level

RESEARCH INITIATIVE FOR TEACHING EFFECTIVENESS
What services do we provide?
- Research design
- Survey construction & administration
- Data analysis & interpretation
- Results provided in “charts and graphs” format
- Publication and presentation assistance

CONTACT INFORMATION
Patsy Moskal, Ed.D.
(407) 823-0283
pdmoskal@mail.ucf.edu
http://rite.ucf.edu
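
WORKED ILLUSTRATION: STATISTICALLY OR PRACTICALLY SIGNIFICANT?
The "Very Satisfied" figures reported in the deck (49% of 36,801 blended respondents, 47% of 67,433 fully online respondents) give a concrete case of the point that statistical significance may not be practical significance. The sketch below is not part of the original presentation. It assumes the rounded slide percentages are close enough to the true proportions and treats the two groups as independent random samples, which the deck itself flags as questionable. The two-proportion z-test and Cohen's h effect size are conventional choices made here for illustration, and the function names are hypothetical.

```python
import math


def two_proportion_z(p1, n1, p2, n2):
    """Two-sided z-test for the difference between two independent proportions."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided tail area under the standard normal curve.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def cohens_h(p1, p2):
    """Cohen's h effect size for two proportions (about 0.2 is conventionally 'small')."""
    return 2 * math.asin(math.sqrt(p1)) - 2 * math.asin(math.sqrt(p2))


# "Very Satisfied" shares as reported on the slides (rounded, so approximate).
blended_p, blended_n = 0.49, 36_801
online_p, online_n = 0.47, 67_433

z, p_value = two_proportion_z(blended_p, blended_n, online_p, online_n)
h = cohens_h(blended_p, online_p)

print(f"z = {z:.2f}, two-sided p = {p_value:.2g}, Cohen's h = {h:.3f}")
```

With samples this large, a two-point gap clears the usual significance threshold easily, while the effect size stays far below the conventional cutoff for even a small effect. Whether a two-point difference matters for a decision is a judgment the statistics cannot make.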