Packing and Unpacking Sources of Validity Evidence


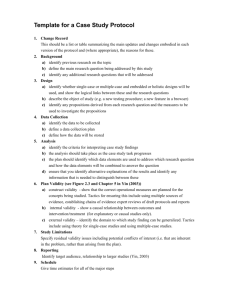

Packing and Unpacking Sources of Validity Evidence: History Repeats Itself Again
Stephen G. Sireci, University of Massachusetts Amherst
Presentation for the conference “The Concept of Validity: Revisions, New Directions, & Applications,” October 9, 2008, University of Maryland, College Park

Validity
– A concept that has evolved and is still evolving
– The most important consideration in educational and psychological testing
– Simple, but complex: it can be misunderstood, and there are disagreements regarding what it is and what is important

Purposes of this presentation
– Provide some historical context on the concept of validity in testing
– Present current, consensus definitions of validity
– Describe the validation framework implied in the Standards
– Discuss limitations of the current framework
– Suggest new directions for validity research and practice

Packing and unpacking: A prelude
Packing
– Does the test measure what it purports to measure?
– A test is valid for anything with which it correlates.
– Validity is a unitary concept.
Unpacking
– Predictive, status, content, congruent validity
– Clarity, coherence, plausibility of assumptions (validity argument)
– 5 sources of validity evidence

Validity defined
What is validity? How have psychometricians come to define it?

What does valid mean? Truth? According to Webster’s, valid means:
1. having legal force; properly executed and binding under the law
2. sound; well grounded on principles or evidence; able to withstand criticism or rejection
3. effective, effectual, cogent
4. robust, strong, healthy (rare)

What is validity? According to Webster’s, validity means:
1. the state or quality of being valid; specifically, (a) strength or force from being supported by fact; justness; soundness; (b) legal strength or force
2. strength or power in general
3. value (rare)

How have psychometricians defined validity?
Some History

In the beginning
Modern measurement started at the turn of the 20th century.
– 1905: Binet-Simon scale, a 30-item scale designed to ensure that no child could be denied instruction in the Paris school system without formal examination
  • Binet died in 1911 at age 54.
– Note: The College Board was established in 1900 and began essay testing in 1901.

What else was happening around the turn of the century?
1896: Karl Pearson (later the first Galton Professor of Eugenics at University College London) published the formula for the correlation coefficient.
Given the predictive purpose of Binet’s test, the interest in heredity and individual differences, and a new statistical formula relating variables to one another, validity was initially defined in terms of correlation.

Earliest definitions of validity
– “Valid scale” (Thorndike, 1913)
– A test is valid for anything with which it correlates (Kelley, 1927; Thurstone, 1932; Bingham, 1937; Guilford, 1946; others)
– Validity coefficients: correlations of test scores with grades, supervisor ratings, etc.

Validation started with group tests
– 1917: Army Alpha and Army Beta (Yerkes), used to classify 1.5 million recruits
– Borrowed items and ideas from the Otis tests; Otis was one of Terman’s graduate students

Military testing
– Tests were added to or subtracted from batteries based solely on correlational evidence (e.g., increase in R²).
– How well does the test predict a pass/fail criterion several weeks later?

Critiques by Jenkins (1946) and others emerged in response to problems with the notion that validity = correlation (see also Pressey, 1920).

Problems with the notion that validity = correlation
– Finding criterion data
– Establishing the reliability of the criterion
– Establishing the validity of the criterion
– If valid, measurable criteria exist, why do we need the test?

What did critics of correlational evidence of validity suggest for validating tests?
Professional judgment
“...it is proper for the test developer to use his individual judgment in this matter though he should hardly accept it as being on par with, or as worthy of credence as, experimentally established facts showing validity.” (Kelley, 1927, pp. 30-31)

What did critics of correlational evidence of validity suggest for validating tests? (cont.)
– Appraisal of test content with respect to the purpose of testing (Rulon, 1946): a rational relationship
– Sound familiar?

Early notions of content validity
– Kelley, Mosier, Rulon, Thorndike, and others
– But notice Kelley’s hesitation in endorsing this evidence, or in going against the popular notion.

Other precursors to content validity
– Guilford (1946): validity by inspection?
– Gulliksen (1950): “Intrinsic Validity”
  • pre/post instruction test score change
  • consensus of expert judgment regarding test content
  • examination of the relationship of the test to other tests measuring the same objectives
– Herring (1918): 6 experts evaluated the “fitness of items”

Development of Validity Theory
By the 1950s, there was consensus that correlational evidence was not enough and that judgmental data on the adequacy of test content should be gathered. This fed a growing idea of multiple lines of “validity evidence.”

Emergence of Professional Standards
Cureton (1951) wrote the first “Validity” chapter, in the first edition of “Educational Measurement” (edited by Lindquist). He described two aspects of validity:
– Relevance (what we would call criterion-related)
– Reliability

Cureton (1951) defined validity as “the correlation between actual test scores and true criterion scores,” but noted that “curricular relevance or content validity” may be appropriate in some situations.
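The correlational definitions traced above (validity coefficients, test batteries assembled on gains in R², and Cureton’s “correlation between actual test scores and true criterion scores”) can be made concrete with a small sketch. This is a minimal Python illustration under stated assumptions, not a procedure from the presentation: the score lists and the criterion reliability of .64 are hypothetical, the function names are illustrative, and reading Cureton’s definition as the classical correction for attenuation applied to the criterion only (dividing by the square root of criterion reliability) is an assumed interpretation.

```python
import math

def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length score lists."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def r_squared_two_predictors(r1y, r2y, r12):
    """Squared multiple correlation of a criterion on two predictors,
    computed from the three pairwise correlations (requires |r12| < 1)."""
    return (r1y ** 2 + r2y ** 2 - 2 * r1y * r2y * r12) / (1 - r12 ** 2)

def corr_with_true_criterion(r_xy, r_yy):
    """Assumed reading of Cureton (1951): correlation of observed test scores
    with TRUE criterion scores under classical test theory, r_xy / sqrt(r_yy),
    where r_yy is the criterion's reliability."""
    if not 0 < r_yy <= 1:
        raise ValueError("criterion reliability must be in (0, 1]")
    return r_xy / math.sqrt(r_yy)

# Hypothetical scores for eight examinees (illustrative only, not real data).
test_a = [12, 15, 9, 20, 17, 11, 14, 18]
test_b = [30, 24, 27, 31, 35, 22, 33, 26]
grades = [2.6, 2.2, 2.4, 3.1, 3.4, 1.9, 3.0, 2.7]

r_ay = pearson_r(test_a, grades)  # validity coefficient of test A
r_by = pearson_r(test_b, grades)  # validity coefficient of test B
r_ab = pearson_r(test_a, test_b)  # intercorrelation of the two tests

# Gain in R^2 from adding test B to a battery that already contains test A.
delta_r2 = r_squared_two_predictors(r_ay, r_by, r_ab) - r_ay ** 2

print(f"validity coefficient (A): {r_ay:.3f}")
print(f"validity coefficient (B): {r_by:.3f}")
print(f"R^2 gain from adding B:   {delta_r2:.3f}")
# Hypothetical observed validity of .40 against a criterion with reliability .64.
print(f"vs. true criterion:       {corr_with_true_criterion(0.40, 0.64):.3f}")
```

With an observed validity coefficient of .40 and a criterion reliability of .64, the last line prints about 0.500. The need for a criterion reliability estimate echoes one of the problems listed above: any correlation with “true” criterion scores is only as trustworthy as the evidence about the criterion itself.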
Emergence of Professional Standards (cont.)
1952: APA Committee on Test Standards, Technical Recommendations for Psychological Tests and Diagnostic Techniques: A Preliminary Proposal
– Four “categories of validity”: predictive, status, content, congruent

1954: APA, AERA, & NCMUE produced Technical Recommendations for Psychological Tests and Diagnostic Techniques
– Four “types” or “attributes” of validity:
  • construct validity (instead of congruent)
  • concurrent validity (instead of status)
  • predictive
  • content

1954 Standards
The chair was Cronbach, and guess who else was on the committee? Hint: a philosopher.
Promoted the idea of:
– different types of validity
– multiple types of evidence being preferred
– some types being preferable in some situations

Subsequent Developments
– 1955: Cronbach and Meehl formally defined and elaborated the concept of construct validity, and introduced the term “criterion-related validity.”
– 1956: Lennon formally defined and elaborated the concept of content validity.
– Loevinger (1957): big promoter of the construct validity idea
– Ebel (1961…): big antagonist of unified validity theory; preferred “meaningfulness”

Evolution of Professional Standards
1966: AERA, APA, NCME Standards for Educational and Psychological Tests and Manuals
– Three “aspects” of validity:
  • Criterion-related (concurrent + predictive)
  • Construct
  • Content

1966 Standards
– Introduced the notion that test users are also responsible for test validity
– Specific testing purposes called for specific types of validity evidence.
– Three “aims of testing”:
  • present performance
  • future performance
  • standing on trait of interest
– Important developments in content validation

Evolution of Professional Standards (cont.)
1974: AERA, APA, NCME Standards for Educational and Psychological Tests
– Validity descriptions borrowed heavily from Cronbach (1971), the validity chapter in the 2nd edition of “Educational Measurement” (edited by R.L. Thorndike)

1974 Standards
– Defined content validity in operational, rather than theoretical, terms
– Beginning of the notion that construct validity is much cooler than content or criterion-related validity
– Early consensus on a “unitary” conceptualization of validity

Evolution of Professional Standards (cont.)
1985: AERA, APA, NCME Standards for Educational and Psychological Testing (note the “ing”)
– Described validity as a unitary concept
– Notion of validating score-based inferences
– Very Messick-influenced

1985 Standards
– More responsibility on test users
– More standards on applications and equity issues
– Separate chapters for:
  • Validity
  • Reliability
  • Test development
  • Scaling, norming, equating
  • Technical manuals

1985 Standards (cont.)
New chapters on specific testing situations:
– Clinical
– Educational
– Counseling
– Employment
– Licensure & Certification
– Program Evaluation
– Linguistic Minorities
– “People who have handicapping conditions”

1985 Standards (cont.)
– New chapters on administration, scoring, and reporting; protecting the rights of test takers; and general principles of test use
– Listed standards as primary, secondary, or conditional

1999 Standards
– New “Fairness in Testing” section
– No more “primary,” “secondary,” “conditional”
– 3-part organizational structure:
  1. Test construction, evaluation, & documentation
  2. Fairness in testing
  3. Testing applications

1999 Standards (2)
– Incorporated the “argument-based approach to validity”
– Five “Sources of Validity Evidence”:
  1. Test content
  2. Response processes
  3. Internal structure
  4. Relations to other variables
  5. Testing consequences
We’ll return to these sources later.

Comparing the Standards: Packing & Unpacking Validity Evidence
Edition: Validity
1954: Construct, concurrent, predictive, content
1966: Criterion-related, construct, content
1974: Criterion-related, construct, content
1985: Unitary (but content-related evidence, etc.)
1999: Unitary: 5 sources of evidence

What are the current and influential definitions of validity?
– Cronbach (1971…): influential, but not current
– Messick (1989…)
– Shepard (1993)
– Standards (1999)
– Kane (1992, 2006)

Messick (1989): first sentence
“Validity is an integrated evaluative judgment of the degree to which empirical evidence and theoretical rationales support the adequacy and appropriateness of inferences and actions based on test scores and other modes of assessment.” (p. 13)

This “integrated” judgment led Messick, and others, to conclude that all validity is construct validity.

It is outside my purpose today to debate the unitary conceptualization of validity, but like all theories, it has strengths and limitations. Two quick points, though…

Unitary conceptualization of validity
– Focuses on inferences derived from test scores
– Assumes measurement of a construct motivates test development and purpose
– The focus on analysis of scores may undermine attention to content validity.
– Removal of the term “content validity” may have had a negative effect on validation practices.

Consider Ebel (1956)
“The degree of construct validity of a test is the extent to which a system of hypothetical relationships can be verified on the basis of measures of the construct…but this system of relationships always involves measures of observed behaviors which must be defended on the basis of their content validity” (p. 274).
“Statistical validation is not an alternative to subjective evaluation, but an extension of it. All statistical procedures for validating tests are based ultimately upon common sense agreement concerning what is being measured by a particular measurement process” (p. 274).

The 1999 Standards accepted the unitary conceptualization, but also took a practical stance. The practical stance stems from the use of an argument-based approach to validity.
– Cronbach (1971, 1988)
– Kane (1992, 2006)

The Standards (1999) succinctly defined validity:
“Validity refers to the degree to which evidence and theory support the interpretations of test scores entailed by proposed uses of tests.” (p. 9)

Why do I say the Standards incorporated the argument-based approach to validation?
“Validation can be viewed as developing a scientifically sound validity argument to support the intended interpretation of test scores and their relevance to the proposed use.” (AERA et al., 1999, p. 9)

Kane (1992)
“it is not possible to verify the interpretive argument in any absolute sense. The best that can be done is to show that the interpretive argument is highly plausible, given all available evidence” (p. 527).

Kane: Argument-based approach
a) Decide on the statements and decisions to be based on the test scores.
b) Specify the inferences and assumptions leading from test scores to statements and decisions.
c) Identify competing interpretations.
d) Seek evidence supporting the inferences and assumptions and refuting counterarguments.

Philosophy of Validity
Messick (1989):
“if construct validity is considered to be dependent on a singular philosophical base such as logical positivism and that basis is seen to be deficient or faulty, then construct validity might be dismissed out of hand as being fundamentally flawed” (p. 22).
“nomological networks are viewed as an illuminating way of speaking systematically about the role of constructs in psychological theory and measurement, but not as the only way” (p. 23).

Three perspectives on the relationship between a test and other indicators of a construct
1. Test and nontest consistencies are manifestations of real traits.
2. Test and nontest consistencies are defined by relationships among constructs in a theoretical framework.
3. Test and nontest consistencies are attributable to real entities but are understood in terms of constructs.
See Messick (1989), Figures 2.1-2.3.

Messick on test validation
“test validation is a process of inquiry” (p. 31)
Five systems of inquiry:
– Leibnizian
– Lockean
– Kantian
– Hegelian
– Singerian

Systems of inquiry: main points
– Validation can seek to confirm.
– Validation can seek consensus (Leibniz, Locke).
– Validation can seek alternative hypotheses.
– Validation can seek to disconfirm (Kant, Hegel, Singer).

Two other important points by Messick (1989)
– “the major limitation is shortsightedness with respect to other possibilities” (p. 33)
– “The very variety of methodological approaches in the validational armamentarium, in the absence of specific criteria for choosing among them, makes it possible to select evidence opportunistically and to ignore negative findings” (p. 33)

If you look at the seminal papers and textbooks, and the various editions of the Standards, there are several fundamental, consensus tenets about validity theory and test validation.

Fundamental Validity Tenets
– Validity is NOT a property of a test. A test cannot be valid or invalid.
– What we seek to validate are the inferences from, and uses of, test scores.
– Validity is not all or none.
– Test validity must be evaluated with respect to a specific testing purpose. Thus, a test may be appropriate for one purpose, but not for another.

Fundamental Validity Tenets (cont.)
– Evaluating the validity of inferences derived from test scores requires multiple lines of evidence (i.e., different types of evidence for validity).
– Test validation never ends; it is an ongoing process.

I believe these tenets can be considered “consensus” due to their incorporation in the Standards and their predominance in the literature. But of course, not everyone need agree with the consensus, and we will hear important points from detractors over the next two days.

Criticisms of this perspective
– Tests are never truly validated (we are never done).
– No prescription or guidance regarding specific types of evidence to gather and how to gather it.
– Ideal goals with no guidance lead to inaction.

The argument-based approach is a compromise between sophisticated validity theory and the reality that at some point, we must make a judgment about the defensibility and suitability of using a test for a particular purpose.

What guidance do the Standards give us?
Five “sources of evidence that might be used in evaluating a proposed interpretation of test scores for particular purposes” (AERA et al., 1999).

“Validation is a matter of making the most reasonable case to guide both current use of the test and current research to advance understanding of what the test scores mean… To validate an interpretive inference is to ascertain the degree to which multiple lines of evidence are consonant with the inference, while establishing that alternative inferences are less well supported.” (Messick, 1989, p. 13)

The current Standards
– Provide a useful framework for evaluating the use of a test for a particular purpose, and for documenting validity evidence
– Allow us to use multiple lines of evidence to support the use of a test for a particular purpose
– But are not prescriptive, and do not provide examples or references to “adequate” validity arguments

Standards’ Validation Framework
Validity evidence based on:
1. Test content
2. Response processes
3. Internal structure
4. Relations to other variables
5. Testing consequences

What is helpful in the Standards framework?
– It provides a system for categorizing validity evidence so that a coherent set of evidence can be put forward.
– It provides a way of standardizing the reporting of validity evidence.
– It focuses on both test construction and test score validation activities.
– It emphasizes the importance of evaluating consequences.

What are the limitations in the Standards framework?
– Not all types of validity evidence fit into the 5 source categories.
– No examples of good validation studies, or of when sufficient evidence has been put forth
– No statistical guidance
– No references
– Vagueness in some areas

Suggestions for revising the Standards (1)
– Need to refine sources of validity evidence to accommodate:
  • Analysis of group differences
  • Alignment research
  • Differential item functioning
  • Statistical analysis of test bias
– Need more clarity on validity evidence for accountability testing (groups, rather than individuals)

Suggestions for revising the Standards (2)
Need to define “score comparability”:
– Across subgroups of examinees taking a single assessment
– Across accommodations to standardized assessments
– Across different language versions of an assessment
– Across different tests in CAT/MST
– Across different modes of assessment

Suggestions for revising the Standards (3)
Include specific examples of laudable test validation analyses and references to studies that exemplify sound validity arguments.

Closing remarks
– There are different perspectives on validity theory.
– Whether a test is valid for a particular purpose will always be a question of judgment.
– A sound validity argument makes the judgment an easy one to make.

Closing remarks (2)
For educational tests, validity evidence based on test content is fundamental. Without confirming that the content tested is consistent with curricular goals, that the test adequately represents the intended domain, and that the test is free of construct-irrelevant material, the utility of the test for making educational decisions will be undermined.

Why are there different perspectives on validity?
It’s philosophy. It’s okay to disagree, but we need consensus with respect to nomenclature, and that is where differences can hurt us as a profession. For over 50 years, the Standards have provided consensus definitions.
Remember, threats to validity boil down to:
– Construct underrepresentation
– Construct-irrelevant variance
“Tests are imperfect measures of constructs because they either leave out something that should be included…or else include something that should be left out, or both” (Messick, 1989, p. 34)

Adhering to and Improving the Standards
I don’t agree with everything in the Standards, but I find it easier to work within the framework than against it.

Advice for the remainder of the conference, and for your future validity endeavors
– If you criticize, have specific improvements to contribute (e.g., evidence-centered design).
– Consider different perspectives on validity when evaluating the use of a test for a particular purpose.
  • If one statistical analysis is offered as “validation,” be suspicious.
  • Look for evidence in test construction.

Thank you for your attention
And thanks to Bob Lissitz and UMD for the invitation and for holding this conference. I look forward to continuing the conversation. There is certainly a lot more to hear, and to say.
Sireci@acad.umass.edu