ANALYZING AND VISUALIZING INTERACTIONS IN SAS 9.4

Andy Lin

IDRE Statistical Consulting

Background

Regression models the effects of independent variables (IVs) on dependent variables (DVs).

E.g. does amount of time exercising predict weight loss?

Can also model effect of IV modified by another IV

moderating variable (MV)

E.g. is the effect of exercise time on weight loss modified by the type of exercise?

Effect modification = interaction

Background

Interactions are products of IVs

Typically entered with the IVs into regression

All we get out of regression is a coefficient

Not enough to understand interaction

What are the conditional effects?

Simple effects and slopes

Conditional interactions

Purpose of seminar

Demonstrate methods to estimate, test and graph effects within an interaction

Specifically we will use PROC PLM to:

Calculate and estimate simple effects

Compare simple effects

Graph simple effects

$$weight = \beta_0 + \beta_s SEX + \beta_h HEIGHT$$

Main effects vs interaction models

Main effects models

IV effects constrained to be the same across levels of all other IVs in the model

Main effect of height is constrained to be the same across sexes

Average of male and female height effect

$$weight = \beta_0 + \beta_s SEX + \beta_h HEIGHT + \beta_{sh} SEX \times HEIGHT$$

Main effects vs interaction models

Interaction models

Allow effect of an IV to vary with levels of another IV

Formed as product of 2 IVs

Now the effect of height may vary between sexes

And effect of sex may vary at different heights

Simple effects and slopes

From this equation

We can derive sex-specific regression equations

Males (sex=0)

Females (sex=1)
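As a sketch of the derivation, assume the interaction model adds a product term to the main effects model; the coefficient name $\beta_{sh}$ is chosen here for illustration. Substituting SEX=0 and SEX=1 gives one equation per sex:

```latex
\begin{align*}
% interaction model; \beta_{sh} is an assumed name for the product-term coefficient
weight &= \beta_0 + \beta_s SEX + \beta_h HEIGHT + \beta_{sh}\, SEX \times HEIGHT \\
% males (SEX = 0): every term multiplied by SEX drops out
weight_{males} &= \beta_0 + \beta_h HEIGHT \\
% females (SEX = 1)
weight_{females} &= (\beta_0 + \beta_s) + (\beta_h + \beta_{sh})\, HEIGHT
\end{align*}
```

Under this coding the simple slope of height is $\beta_h$ for males and $\beta_h + \beta_{sh}$ for females, so the two slopes differ by exactly $\beta_{sh}$.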

Simple effects and slopes

Each sex has its own height effect

Males (sex=0)

Females (sex=1)

These are the simple slopes of height within each group

Interaction coefficient is difference in simple slopes

PROC PLM

We use proc plm for most of our analyses

Proc plm performs post-estimation analyses and graphing

Uses an “item store” as input

Contains model information (coefficients and covariance matrices)

Item store created in other procs

Including glm, genmod, logistic, phreg, mixed, glimmix, and more

PROC PLM

Important proc plm statements used in this seminar

Estimate statement

Forms linear combinations of coefficients and tests them against 0

Very flexible – linear combinations can be means, effects, contrasts, etc.

We use it to estimate and compare simple slopes

Syntax is a bit more difficult

PROC PLM

Important proc plm statements used in this seminar

Slice statement

Specifically analyzes simple effects

Very simple syntax

Lsmestimate statement

Compare estimated marginal means, i.e. calculate simple effects

More versatile than slice

PROC PLM

Lsmeans statement

Estimates marginal means and can calculate differences between them

Effectplot

Plots predicted values of the outcome across range of values on 1 or more predictors

Can visualize interactions

Many types of plots

WHY PROC PLM

Many of these statements found in regression procs

Why use PROC PLM?

Do not have to rerun model as we run code for interaction analysis

These statements sometimes have more functionality in PROC PLM

Dataset used in seminar

Study of average weekly weight loss achieved by subjects in 3 exercise programs

900 subjects

Important variables:

Loss – continuous, normal outcome – average weekly weight loss

Hours – continuous predictor – average weekly hours of exercise

Effort – continuous predictor – average weekly rating of exertion when exercising, ranging from 0 to 50

Dataset used in the seminar

Important variables cont:

Prog – 3-category predictor - which exercise program the subject followed, 1=jogging, 2=swimming, 3=reading (control)

Female – binary predictor - gender, 0=male, 1=female

Satisfied - binary outcome - subject’s overall satisfaction with weight loss due to participation in exercise program, 0=unsatisfied, 1=satisfied

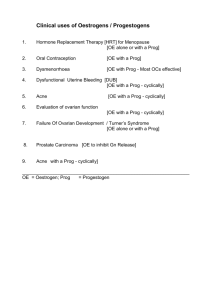

Continuous-by-continuous: the model

We first model the interaction of 2 continuous IVs

The effect of a continuous IV on the outcome is called a slope

Expresses change in outcome per unit increase in IV

With the interaction of 2 continuous variables, the slope of each IV is allowed to vary with the other IV

Simple slopes

Continuous-by-continuous: the model

Let us look at model where Y is predicted by continuous X, continuous Z, and their interaction:

Be careful when interpreting $\beta_x$ and $\beta_z$

They are simple effects (when interacting variable=0), not main effects
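Written out as a sketch, with coefficient names following the bullets above (the intercept name $\beta_0$ is an assumption):

```latex
\begin{align*}
Y &= \beta_0 + \beta_x X + \beta_z Z + \beta_{xz} XZ \\
% with Z = 0 the Z and XZ terms vanish, so \beta_x is the slope of X only at Z = 0
Y \mid (Z = 0) &= \beta_0 + \beta_x X
\end{align*}
```

The second line shows why $\beta_x$ is a simple effect: it describes the slope of X only for observations where Z=0.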

Continuous-by-continuous: the model

The coefficient $\beta_{xz}$ is interpreted as the change in the simple slope of X per unit-increase in Z

Equation for simple slope of X:
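One way to obtain it, assuming the model $Y = \beta_0 + \beta_x X + \beta_z Z + \beta_{xz} XZ$ (with $\beta_0$ an assumed name for the intercept), is to differentiate with respect to X:

```latex
\begin{align*}
% simple slope of X at a given value of Z
slope_X = \frac{\partial Y}{\partial X} &= \beta_x + \beta_{xz} Z \\
% by symmetry, simple slope of Z at a given value of X
slope_Z = \frac{\partial Y}{\partial Z} &= \beta_z + \beta_{xz} X
\end{align*}
```

Each unit increase in Z therefore shifts the slope of X by $\beta_{xz}$.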

Continuous-by-continuous: example model

We regress loss on hours, effort, and their interaction

Is the effect of hours modified by the effort that the subject exerts?

And the converse – is effect of effort modified by hours?

Continuous-by-continuous: example model

    proc glm data=exercise;
      model loss = hours|effort / solution;
      store contcont;
    run;

The “|” requests main effects and interactions

solution requests table of regression coefficients

store contcont creates an item store of the model for proc plm

Continuous-by-continuous: example model

Interaction is significant

Remember that hours and effort terms are simple slopes

Continuous-by-continuous: calculating simple slopes

Estimate statement used to form linear combinations of regression coefficients

Including simple slopes (and effects)

Very flexible

Understanding the regression equation very helpful in coding estimate statements

Estimate statement syntax

Estimate ‘label’ coefficient values / e

E.g. to estimate expected loss when hours=2 and effort=30:

    proc plm restore=contcont;
      estimate 'pred loss, hours=2, effort=30'
               intercept 1 hours 2 effort 30 hours*effort 60 / e;
    run;

The regression coefficients are multiplied by their values and summed to form the estimate, which is tested against 0

We see that the values are correct

And a test against 0 (not interesting here)
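As a worked illustration of that linear combination (the subscripted coefficient names are labels chosen here, not SAS output), the values in the estimate statement multiply the coefficients as follows:

```latex
\begin{align*}
\widehat{loss} &= 1 \cdot \beta_0 + 2 \cdot \beta_{hours} + 30 \cdot \beta_{effort}
                 + 60 \cdot \beta_{hours \times effort} \\
% the interaction value is the product of the two predictor values
60 &= 2 \times 30
\end{align*}
```

The value placed after an interaction coefficient is always the product of the values of the variables that form it.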

Continuous-by-continuous: calculating simple slopes

Let’s revisit the formula for the simple slope of X moderated by Z

In the estimate statement, we will put a 1 after $\beta_x$ and the value of Z after $\beta_{xz}$

In our model, X = hours and Z=effort

Continuous-by-continuous: calculating simple slopes

What values of effort should we choose to evaluate the simple slopes of hours?

Two common choices:

Substantively important values (education=12yrs, BMI=18, temperature = 98.6, etc.)

Data-driven values (mean, mean+sd, mean-sd)

There are no a priori important values of effort, so we choose (mean-sd, mean, mean+sd) = (24.52, 29.66, 34.8)
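A minimal sketch of how such data-driven values could be obtained, assuming the seminar dataset is available as a dataset named exercise (the name used in the proc glm calls):

```sas
/* Sketch: mean and standard deviation of effort.
   Assumes the dataset is available as WORK.EXERCISE. */
proc means data=exercise mean std;
  var effort;
run;
```

The mean-sd and mean+sd values are then computed by hand from the two statistics that proc means reports.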

Continuous-by-continuous: calculating simple slopes

    proc plm restore=contcont;
      estimate 'hours, effort=mean-sd' hours 1 hours*effort 24.52,
               'hours, effort=mean'    hours 1 hours*effort 29.66,
               'hours, effort=mean+sd' hours 1 hours*effort 34.8 / e;
    run;

Continuous-by-continuous: calculating simple slopes

We might be interested in whether those simple slopes are different, but we don’t need to test it

Why?

If the moderator is continuous and interaction is significant then simple slopes will always be different

We demonstrate a difference to show this

Continuous-by-continuous: calculating simple slopes

To get the difference between simple slopes, take the difference between values across coefficients in the estimate statement:

      hours 1 hours*effort 29.66
    - hours 1 hours*effort 24.52
    = hours 0 hours*effort 5.14

Continuous-by-continuous: calculating simple slopes

Coefficients with 0 values can be omitted:

    proc plm restore=contcont;
      estimate 'diff slopes, mean+sd - mean' hours*effort 5.14;
    run;

Same t-value and p-value as interaction coefficient
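A short sketch of why the test is identical, writing $b_{xz}$ for the estimated interaction coefficient and $SE(b_{xz})$ for its standard error (both symbols introduced here for illustration):

```latex
\begin{align*}
% difference between two simple slopes of hours that are 5.14 effort units apart
(\beta_x + 34.8\,\beta_{xz}) - (\beta_x + 29.66\,\beta_{xz}) &= 5.14\,\beta_{xz} \\
% multiplying an estimate by a constant multiplies its standard error by the same constant
t = \frac{5.14\, b_{xz}}{5.14\, SE(b_{xz})} &= \frac{b_{xz}}{SE(b_{xz})}
\end{align*}
```

The constant cancels, so any pair of simple slopes gives the same t-value and p-value as the interaction coefficient itself.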

Continuous-by-continuous: graphing simple slopes

We use effectplot statement in proc plm

Plot predicted outcome across range of values of predictors

We will plot across range of 2 predictors to depict an interaction

Simple slopes as contour plots

    proc plm restore=contcont;
      effectplot contour (x=hours y=effort);
    run;

Simple slopes as contour plots

Contour plots uncommon

Nice that both continuous variables are represented continuously

Simple slopes of hours are horizontal lines across graph

The more the color changes, the steeper the slope

Simple slopes as a fit plot

    proc plm restore=contcont;
      effectplot fit (x=hours) / at(effort=24.52 29.66 34.8);
    run;

Effort will not be represented continuously, so we must specify the values we want

A separate graph will be plotted for each effort

Simple slopes as a fit plot

More easily understood

But why not all 3 on one graph?

Creating a custom graph through scoring

We can make the graph ourselves by getting predicted loss values across a range of hours at the 3 selected effort values (24.52, 29.66, 34.8) by:

Creating a dataset of hours and effort values at which to predict the outcome loss

Use the score statement in proc plm to predict the outcome and its 95% confidence interval

Use the scored dataset in proc sgplot to create a plot

Creating a custom graph through scoring

    /* grid of hours values at each of the 3 effort values */
    data scoredata;
      do effort = 24.52, 29.66, 34.8;
        do hours = 0 to 4 by 0.1;
          output;
        end;
      end;
    run;

    /* predicted loss and its 95% confidence limits */
    proc plm restore=contcont;
      score data=scoredata out=plotdata predicted=pred lclm=lower uclm=upper;
    run;

    /* one line and confidence band per effort value */
    proc sgplot data=plotdata;
      band x=hours upper=upper lower=lower / group=effort transparency=0.5;
      series x=hours y=pred / group=effort;
      yaxis label="predicted loss";
    run;

Creating a custom graph through scoring

Purty!

Quadratic effect: the model

Special case of a continuous-by-continuous interaction

Interaction of IV with itself

Allows the (linear) effect of the IV to vary depending on the level of the IV itself

Models a curvilinear relationship between DV and IV

Quadratic effect: the model

The regression equation with linear and quadratic effects of continuous predictor X:

$\beta_x$ is still interpreted as slope of X when X=0

$\beta_{xx}$ interpretation slightly different

Represents ½ the change in the slope of X when X increases by 1 unit
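As a sketch, with $\beta_0$ an assumed name for the intercept, the equation is:

```latex
\begin{align*}
% X interacted with itself gives the squared term
Y = \beta_0 + \beta_x X + \beta_{xx} X^2
\end{align*}
```

The squared term is the product of X with itself, which is why the quadratic effect can be treated as a continuous-by-continuous interaction.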

Quadratic effect: the model

To get formula for simple slope of X, we must use partial derivative:

Here we see that the slope of X changes by $2\beta_{xx}$ per unit-increase in X
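Assuming the model $Y = \beta_0 + \beta_x X + \beta_{xx} X^2$ (with $\beta_0$ an assumed intercept name), the derivative can be sketched as:

```latex
\begin{align*}
slope_X = \frac{\partial Y}{\partial X} &= \beta_x + 2\beta_{xx} X \\
% evaluated at three illustrative values of X
slope_X \mid (X = 1.5) &= \beta_x + 3\beta_{xx} \\
slope_X \mid (X = 2)   &= \beta_x + 4\beta_{xx} \\
slope_X \mid (X = 2.5) &= \beta_x + 5\beta_{xx}
\end{align*}
```

The multiplier on $\beta_{xx}$ is always twice the value of X at which the slope is evaluated.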

Quadratic effect: example model

We regress loss on the linear and quadratic effect of hours

    proc glm data=exercise order=internal;
      model loss = hours|hours / solution;
      store quad;
    run;

Quadratic effect: example model

Quadratic effect is significant

Negative sign indicates that the slope of hours decreases as hours increases (inverted U-shaped curve)

Diminishing returns on increasing hours

Quadratic effect: calculating simple slopes

We construct estimate statements for simple slopes in the same way as before

BUT, we must be careful to multiply the value after the quadratic effect by 2

We will put a 1 after $\beta_x$ and the value of 2*x after $\beta_{xx}$

No a priori important values of hours, so we choose mean=2, mean+sd=2.5, and mean-sd=1.5

Quadratic effect: calculating simple slopes

    proc plm restore=quad;
      estimate 'hours, hours=mean-sd(1.5)' hours 1 hours*hours 3,
               'hours, hours=mean(2)'      hours 1 hours*hours 4,
               'hours, hours=mean+sd(2.5)' hours 1 hours*hours 5 / e;
    run;

Slopes decrease as hours increase, eventually non-significant

Quadratic effect: comparing simple slopes

Do not need to compare

Significance always same as interaction coefficient

Quadratic effect: graphing the quadratic effect

The “fit” type of effectplot is made for plotting the outcome vs a single continuous predictor

    proc plm restore=quad;
      effectplot fit (x=hours);
    run;

Quadratic effect: graphing the quadratic effect

• Diminishing returns apparent

• Too many hours of exercise may lead to weight gain

Continuous-by-categorical: the model

We can also estimate the simple slopes in a continuous-by-categorical interaction

We will estimate the slope of the continuous variable within each category of the categorical variable

We could also look at the simple effects of the categorical variable across levels of the continuous

First, how do categorical variables enter regression models?

Categorical predictors and dummy variables

A categorical predictor with k categories can be represented by k dummy variables

Each dummy codes for membership to a category, where 0=non-membership and 1=membership

However, typically only k-1 dummies are entered into the regression model. Why?

Each dummy is a linear combination of the intercept and the other dummies -- collinearity

Regression model cannot estimate coefficient for a collinear predictor

Categorical predictors and dummy variables

Omitted category known as the reference category

All effects of a categorical variable in the regression table are comparisons with reference group

SAS by default will use the last category as the reference

Categorical predictors and dummy variables

Interaction of dummy variables and continuous variable

To interact the dummy variables with a continuous predictor, multiply each one by the continuous variable

Any interaction involving an omitted dummy will be omitted as well

Continuous-by-categorical: the model

Here is the regression equation for a continuous variable, X, interacted with a 3-category categorical predictor, M

$\beta_x$ is simple slope of X for M=3

$\beta_{m1}$ and $\beta_{m2}$ are simple effects of M when X=0

$\beta_{xm1}$ and $\beta_{xm2}$ represent differences in slopes of X when M=1 and M=2 (each compared with M=3), and differences in simple effects of M per unit change in X
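A sketch of the equation using the coefficient names from the bullets above, with $(M=1)$ and $(M=2)$ standing for 0/1 dummy variables and M=3 as the omitted reference category ($\beta_0$ is an assumed name for the intercept):

```latex
\begin{align*}
Y = \beta_0 + \beta_x X
    &+ \beta_{m1}(M{=}1) + \beta_{m2}(M{=}2) \\
    % products of X with each retained dummy
    &+ \beta_{xm1} X (M{=}1) + \beta_{xm2} X (M{=}2)
\end{align*}
```

For a subject in the reference category both dummies are 0, leaving $Y = \beta_0 + \beta_x X$.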

Continuous-by-categorical: the model

Formulas for simple slopes
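Assuming a model with X, dummies for M=1 and M=2 (M=3 as reference), and their products, the simple slopes can be sketched by collecting every term that multiplies X within each category:

```latex
\begin{align*}
slope_X \mid (M = 1) &= \beta_x + \beta_{xm1} \\
slope_X \mid (M = 2) &= \beta_x + \beta_{xm2} \\
% reference category: no interaction term contributes
slope_X \mid (M = 3) &= \beta_x
\end{align*}
```

The difference between the slopes for M=1 and M=2 is $\beta_{xm1} - \beta_{xm2}$, which does not appear directly in the regression table.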

Continuous-by-categorical: example model

We regress loss on hours, prog (3-category) and their interaction

    proc glm data=exercise order=internal;
      class prog;
      model loss = hours|prog / solution;
      store catcont;
    run;

Put prog on class statement to declare it categorical

Use order=internal to order prog by numeric value rather than formats

Continuous-by-categorical: example model

Interaction is significant overall

Notice the 0 coefficients for reference groups

Continuous-by-categorical: calculating simple slopes

Here are the formulas for our simple slopes again:

SAS will accept the first two formulas for estimates of the simple slopes in estimate statements

But the estimate statement for the slope of X when (M=3) REQUIRES the inclusion of the coefficient for the interaction X and (M=3), even though it is constrained to 0

We don’t normally need to calculate the slope in the reference group, nor compare to other slopes, so not usually a huge problem

Continuous-by-categorical: calculating simple slopes

    proc plm restore=catcont;
      estimate 'hours slope, prog=1 jogging'  hours 1 hours*prog 1 0 0,
               'hours slope, prog=2 swimming' hours 1 hours*prog 0 1 0,
               'hours slope, prog=3 reading'  hours 1 hours*prog 0 0 1 / e;
    run;

Notice the inclusion of the zero coefficient in the estimate of the slope when M=3

Continuous-by-categorical: calculating simple slopes

Increasing hours increases weight loss in jogging and swimming, lessens loss in reading program

Notice that last estimate appears in regression table as hours coefficient

Potential pitfall

If calculating a simple slope or effect, do not omit interaction coefficients

Otherwise, SAS will average over those coefficients

Let’s pretend we forgot to include the 0 interaction coefficient in the estimation of the hours slope when M=3

    proc plm restore=catcont;
      estimate 'hours slope, prog=3 reading (wrong)' hours 1 / e;
    run;

Potential pitfall

The e option gives us the estimate coefficients

SAS applied values of .333 to all 3 interaction coefficients, averaging their effects
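A sketch of what that linear combination amounts to, writing $\beta_{h}$ for the hours coefficient and $\beta_{hp1}, \beta_{hp2}, \beta_{hp3}$ for the three hours*prog coefficients (labels chosen here for illustration):

```latex
\begin{align*}
% values applied when the interaction coefficients are omitted
1 \cdot \beta_h + \tfrac{1}{3}\left(\beta_{hp1} + \beta_{hp2} + \beta_{hp3}\right)
  % the same quantity regrouped as the mean of the three simple slopes
  &= \tfrac{1}{3}\left[(\beta_h + \beta_{hp1}) + (\beta_h + \beta_{hp2}) + (\beta_h + \beta_{hp3})\right]
\end{align*}
```

The result is the average of the three program-specific slopes of hours, not the slope in the reading program.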

Continuous-by-categorical: calculating simple slopes

We again take differences in values across coefficients to test differences in simple slopes:

      hours 1 hours*prog 1 0 0
    - hours 1 hours*prog 0 1 0
    = hours 0 hours*prog 1 -1 0

Continuous-by-categorical: calculating simple slopes

    proc plm restore=catcont;
      estimate 'diff slopes, prog=1 vs prog=2' hours*prog 1 -1 0,
               'diff slopes, prog=1 vs prog=3' hours*prog 1 0 -1,
               'diff slopes, prog=2 vs prog=3' hours*prog 0 1 -1 / e;
    run;

Slopes in prog=1 and prog=2 do not differ

Other 2 comparisons are regression coefficients

Continuous-by-categorical: graphing slopes

The slicefit type of effectplot plots the outcome against a continuous predictor on the X-axis, with separate lines by a second predictor (typically categorical, but it can be continuous)

    proc plm restore=catcont;
      effectplot slicefit (x=hours sliceby=prog) / clm;
    run;

The option clm adds confidence limits

Continuous-by-categorical: graphing slopes

Easy to see direction of effects, and that slopes in jogging and swimming do not differ

Categorical-by-categorical: the model

The interaction of a categorical variable X with 2 categories and a categorical variable M with 3 categories produces 6 interaction dummies

Any interaction dummy formed from an omitted dummy will be omitted as well

4 of the 6 will be omitted because of collinearity

Categorical-by-categorical: the model

Regression equation modeling the interaction of X and M

$\beta_x$ is simple effect of X (X=0 vs X=1) for M=3

$\beta_{m1}$ and $\beta_{m2}$ are simple effects of M when X=1

$\beta_{x0m1}$ and $\beta_{x0m2}$ represent differences in effects of X when M=1 and M=2 (each compared with M=3), or differences in effects of M when X=0 (compared with X=1)
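A sketch of the equation using the coefficient names from the bullets above. It assumes the reference categories are X=1 and M=3, so that $(X=0)$, $(M=1)$ and $(M=2)$ are the retained 0/1 dummies; $\beta_0$ is an assumed name for the intercept:

```latex
\begin{align*}
Y = \beta_0 &+ \beta_x (X{=}0) + \beta_{m1}(M{=}1) + \beta_{m2}(M{=}2) \\
            % the 2 interaction dummies that are not omitted
            &+ \beta_{x0m1}(X{=}0)(M{=}1) + \beta_{x0m2}(X{=}0)(M{=}2)
\end{align*}
```

With all dummies equal to 0, $\beta_0$ is the expected outcome in the cell X=1, M=3.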

Think of simple effects as differences in expected means

Simple effects represent differences between the mean outcome of 2 groups that belong to different categories on one predictor

For instance, the simple effect of X when M=1 is the difference between the mean outcome when X=0,M=1 and the mean outcome when X=1,M=1

Simple effects expressed as differences in means
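As a sketch, let $\mu_{x,m}$ denote the expected outcome in the cell with X=x and M=m (notation introduced here), and assume dummy coding with X=1 and M=3 as reference categories. The simple effects of X are then:

```latex
\begin{align*}
% cell means under the assumed coding: \mu_{0,1} = \beta_0 + \beta_x + \beta_{m1} + \beta_{x0m1},
% and \mu_{1,1} = \beta_0 + \beta_{m1}
\mu_{0,1} - \mu_{1,1} &= \beta_x + \beta_{x0m1} \\
\mu_{0,2} - \mu_{1,2} &= \beta_x + \beta_{x0m2} \\
% reference category of M: only the X coefficient remains
\mu_{0,3} - \mu_{1,3} &= \beta_x
\end{align*}
```

Only the last of these appears directly in the regression table; the other two must be built as linear combinations.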

Categorical-by-categorical: example model

    proc glm data=exercise order=internal;
      class female prog;
      model loss = female|prog / solution;
      store catcat;
    run;

Categorical-by-categorical: example model

Interaction is overall significant

Lots of omitted coefficients

Categorical-by-categorical: estimating simple effects with the slice statement

Slice statement designed for simple effect estimation

Syntax:

slice interaction_effect / sliceby= diff

interaction_effect is interaction to be decomposed

Sliceby= specifies variable at whose distinct levels the simple effects of the other variable will be estimated

Diff produces numerical estimates of the simple effect, instead of just a test of significance (default)

Categorical-by-categorical: estimating simple effects with the slice statement

    proc plm restore=catcat;
      slice female*prog / sliceby=prog diff adj=bon plots=none nof e means;
      slice female*prog / sliceby=female diff adj=bon plots=none nof e means;
    run;

Estimates both sets of simple effects

Bonferroni adjustment due to multiple comparisons (adj=bon)

No plotting (hard to interpret and slow)

We suppress the somewhat redundant F-test “nof”

The means of each cell will be output with “means”

Categorical-by-categorical: estimating simple effects with the slice statement

All simple effects are significant except males vs females in reading program

So genders differ in other 2 programs

And programs differ within each gender

Estimating simple effects with the lsmestimate statement

The lsmestimate statement combines lsmeans and estimate statements

Used to estimate linear combinations of estimated (marginal) means

From a balanced population

Simple effects can be estimated through linear combinations of marginal means

Estimating simple effects with the lsmestimate statement

Syntax:

lsmestimate effect [value, level_x level_m]...

Effect is effect made up of only categorical predictors

Value is value to apply to mean in linear combination

level_x and level_m are the ORDINAL levels of the categorical predictors defining target mean

For X=0 and X=1, specify 1 for X=0 and 2 for X=1

Estimating simple effects with the lsmestimate statement

    proc plm restore=catcat;
      lsmestimate female*prog
        'male-female, prog = jogging(1)'       [1, 1 1] [-1, 2 1],
        'male-female, prog = swimming(2)'      [1, 1 2] [-1, 2 2],
        'male-female, prog = reading(3)'       [1, 1 3] [-1, 2 3],
        'jogging-reading, female = male(0)'    [1, 1 1] [-1, 1 3],
        'jogging-reading, female = female(1)'  [1, 2 1] [-1, 2 3],
        'swimming-reading, female = male(0)'   [1, 1 2] [-1, 1 3],
        'swimming-reading, female = female(1)' [1, 2 2] [-1, 2 3],
        'jogging-swimming, female = male(0)'   [1, 1 1] [-1, 1 2],
        'jogging-swimming, female = female(1)' [1, 2 1] [-1, 2 2] / e adj=bon;
    run;

Estimating simple effects with the lsmestimate statement

Same estimates as slice statement

Comparing simple effects with the lsmestimate statement

Only the lsmestimate and not the slice statement can compare simple effects

To compare, place 2 simple effects on the same row and reverse the signs of the values for the second one:

      [1, 1 1] [-1, 2 1]
    - [1, 1 2] [-1, 2 2]
    = [1, 1 1] [-1, 2 1] [-1, 1 2] [1, 2 2]
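In terms of cell means, writing $\mu_{f,p}$ for the marginal mean at ordinal level f of female and ordinal level p of prog (notation introduced here for illustration), that row is a difference of differences:

```latex
\begin{align*}
% male-female effect in jogging minus male-female effect in swimming
(\mu_{1,1} - \mu_{2,1}) - (\mu_{1,2} - \mu_{2,2})
  &= \mu_{1,1} - \mu_{2,1} - \mu_{1,2} + \mu_{2,2}
\end{align*}
```

The four values 1, -1, -1, 1 in the row are the multipliers of the four means on the right-hand side.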

Comparing simple effects with the lsmestimate statement

    proc plm restore=catcat;
      lsmestimate female*prog
        'diff m-f, jog-swim'    [1, 1 1] [-1, 2 1] [-1, 1 2] [1, 2 2],
        'diff m-f, jog-read'    [1, 1 1] [-1, 2 1] [-1, 1 3] [1, 2 3],
        'diff m-f, swim-read'   [1, 1 2] [-1, 2 2] [-1, 1 3] [1, 2 3],
        'diff jog-read, m - f'  [1, 1 1] [-1, 1 3] [-1, 2 1] [1, 2 3],
        'diff swim-read, m - f' [1, 1 2] [-1, 1 3] [-1, 2 2] [1, 2 3],
        'diff jog-swim, m - f'  [1, 1 1] [-1, 1 2] [-1, 2 1] [1, 2 2] / e adj=bon;
    run;

Comparing simple effects with the lsmestimate statement

All differences are significant, although only one of them tells us something we did not already know

Categorical-by-categorical: graphing simple effects

The interaction type effectplot is used to plot the outcome vs two categorical predictors.

The connect option is used to connect the points

    proc plm restore=catcat;
      effectplot interaction (x=female sliceby=prog) / clm connect;
      effectplot interaction (x=prog sliceby=female) / clm connect;
    run;

Graph of simple gender effects

No effect of gender in the reading program

Graph of simple program effects

Effect of program seems stronger for females

3-way interactions: categorical-by-categorical-by-continuous

Interaction of 3 predictors can be decomposed in many more ways than the interaction of 2.

Imagine we interact 2-category X with 3-category M and continuous Z

How can we decompose this interaction?

3-way interactions, categorical-by-categorical-by-continuous: the model

We can estimate the conditional interaction of X and Z across levels of M

Do X and Z interact at each level of M?

Are the X and Z interactions different across levels of M?

We can further decompose the conditional interactions of X and Z

What are the simple slopes of Z across X and simple effects of X across Z?

3-way interactions, categorical-by-categorical-by-continuous: the model

We could then look at interaction of X and M across levels of Z

Do X and M interact at various values of Z?

Are these interactions different?

Within each conditional interaction of X and M, what are the simple effects of X and M?

We can also look at the interaction of M and Z across X

3-way interactions, categorical-by-categorical-by-continuous: the model

Regression equation can be intimidating

Single variable coefficients are still simple effects and slopes (but now for 2 reference levels each)

2-way interaction coefficients are conditional interactions (at reference level of 3rd variable)
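A sketch of what the equation could look like for 2-category X, 3-category M and continuous Z. It assumes dummy coding with X=1 and M=3 as reference categories, and all coefficient names are chosen here for illustration:

```latex
\begin{align*}
Y = \beta_0 &+ \beta_{x0}(X{=}0) + \beta_{m1}(M{=}1) + \beta_{m2}(M{=}2) + \beta_z Z \\
            % 2-way interactions
            &+ \beta_{x0m1}(X{=}0)(M{=}1) + \beta_{x0m2}(X{=}0)(M{=}2) \\
            &+ \beta_{x0z}(X{=}0)Z + \beta_{m1z}(M{=}1)Z + \beta_{m2z}(M{=}2)Z \\
            % 3-way interactions
            &+ \beta_{x0m1z}(X{=}0)(M{=}1)Z + \beta_{x0m2z}(X{=}0)(M{=}2)Z
\end{align*}
```

Under this coding $\beta_z$ is the slope of Z only in the cell X=1, M=3, and $\beta_{x0z}$ is the X-by-Z interaction only within M=3.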

3-way interactions: example model

We regress loss on female (2-category), prog (3-category) and hours (continuous)

    proc glm data=exercise order=internal;
      class female prog;
      model loss = female|prog|hours / solution;
      store catcatcon;
    run;

3-way interactions: example model

3-way interaction is significant

3-way interactions: example model

Not very easy to interpret!

3-way interactions: simple slope focused analysis

Imagine our focus is estimating which groups benefit the most from increasing the weekly number of hours of exercise.

This analysis is focused on the simple slopes of hours

We approach this section by addressing questions the researcher might ask, starting with the lowest level and building up

What are the simple slopes of Z across levels of X and M?

There are a total of 6 groups made up by X and M, and we can estimate the slope of hours in each

We use estimate statements again

Place a 1 after the coefficient for the slope variable by itself (e.g. hours)

Place a 1 after each 2-way interaction coefficient involving the slope variable and either of the 2 factor groups (e.g. hours*(female=0) and hours*(prog=1))

Place a 1 after the 3-way interaction coefficient involving the slope variable and both of the factor groups (e.g. hours*(female=0,prog=1))
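As a sketch of these three rules for one group (males in the jogging program), with subscripted labels chosen here to name the SAS coefficients:

```latex
\begin{align*}
slope_{hours} \mid (female{=}0,\ prog{=}1)
  = \beta_{hours}
  % 2-way interactions involving hours and each factor level of the group
  &+ \beta_{hours \times (female=0)} + \beta_{hours \times (prog=1)} \\
  % 3-way interaction involving hours and both factor levels
  &+ \beta_{hours \times (female=0) \times (prog=1)}
\end{align*}
```

Every coefficient that multiplies hours for a subject in that group receives a value of 1; all other hours-related coefficients receive 0.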

3-way interaction: estimating simple slopes using estimate statement

    proc plm restore=catcatcon;
      estimate 'hours slope, male prog=jogging'
                 hours 1 hours*female 1 0 hours*prog 1 0 0 hours*female*prog 1 0 0 0 0 0,
               'hours slope, male prog=swimming'
                 hours 1 hours*female 1 0 hours*prog 0 1 0 hours*female*prog 0 1 0 0 0 0,
               'hours slope, male prog=reading'
                 hours 1 hours*female 1 0 hours*prog 0 0 1 hours*female*prog 0 0 1 0 0 0,
               'hours slope, female prog=jogging'
                 hours 1 hours*female 0 1 hours*prog 1 0 0 hours*female*prog 0 0 0 1 0 0,
               'hours slope, female prog=swimming'
                 hours 1 hours*female 0 1 hours*prog 0 1 0 hours*female*prog 0 0 0 0 1 0,
               'hours slope, female prog=reading'
                 hours 1 hours*female 0 1 hours*prog 0 0 1 hours*female*prog 0 0 0 0 0 1 / e adj=bon;
    run;

3-way interaction: estimating simple slopes using estimate statement

Increasing number of weekly hours of exercise significantly increases weight loss in all groups except those in the reading program, where it decreases weight loss (not significantly for females in the reading program after Bonferroni adjustment)

Are the (X*Z) conditional interactions significant?

We can now compare the simple slopes of hours between genders within each program

This is a test of whether hours and gender interact within each program

As always, we test differences in effects by subtracting values across coefficients

Are the (X*Z) conditional interactions significant?

    proc plm restore=catcatcon;
      estimate 'diff hours slope, male-female prog=1'
                 hours*female 1 -1 hours*female*prog 1 0 0 -1 0 0,
               'diff hours slope, male-female prog=2'
                 hours*female 1 -1 hours*female*prog 0 1 0 0 -1 0,
               'diff hours slope, male-female prog=3'
                 hours*female 1 -1 hours*female*prog 0 0 1 0 0 -1 / e adj=bon;
    run;

Males and females benefit differently from increasing the number of hours in jogging and reading programs.

One of these interactions appears in the regression table. Which one?

Are the (X*Z) conditional interactions different?

We can test if the conditional interactions are different from one another

Does the way males and females benefit differently by increasing hours of exercise VARY between programs?

Take differences between conditional interactions

Notice only the 3-way interaction coefficient is left
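A sketch of why the other terms cancel, writing $\gamma_{f}$ for the hours*female coefficients and $\delta_{f,p}$ for the hours*female*prog coefficients (symbols introduced here for illustration):

```latex
\begin{align*}
% male-female difference in hours slope within program p (a conditional interaction)
D_p &= (\gamma_0 - \gamma_1) + (\delta_{0,p} - \delta_{1,p}) \\
% difference between two conditional interactions: the 2-way terms cancel
D_1 - D_2 &= (\delta_{0,1} - \delta_{1,1}) - (\delta_{0,2} - \delta_{1,2})
\end{align*}
```

The hours*female terms are common to every program and drop out, leaving values of 1 -1 0 -1 1 0 on the six 3-way coefficients for the prog=1 vs prog=2 comparison.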

Are the (X*Z) conditional interactions different?

    proc plm restore=catcatcon;
      estimate 'diff diff hours slope, male-female prog=1-prog=2'
                 hours*female*prog 1 -1 0 -1 1 0,
               'diff diff hours slope, male-female prog=1-prog=3'
                 hours*female*prog 1 0 -1 -1 0 1,
               'diff diff hours slope, male-female prog=2-prog=3'
                 hours*female*prog 0 1 -1 0 -1 1 / e;
    run;

All of the comparisons are significant. The differential benefit from increasing exercise hours between genders differs between all 3 programs.

3-way interaction: graphing simple slopes

We need to partition our graphs by a third variable now.

We can use the plotby= option to plot separate graphs across levels of a variable

    proc plm restore=catcatcon;
      effectplot slicefit (x=hours sliceby=female plotby=prog) / clm;
    run;

3-way interaction: graphing simple slopes

Easy to see slopes, differences between slopes, and interactions

3-way interaction, simple effects focused analysis

Imagine instead we are more interested in gender differences across programs and at different hours of weekly exercise.

Similar questions can be posed

What are the simple effects of X across M and Z?

We use lsmestimate statements to estimate simple effects of female at each level of prog at the mean, mean-sd and mean+sd of hours

The “at” option allows us to specify hours

For this question we could use slice or lsmestimate

Estimating the simple effects of X across M and Z using lsmestimate

    proc plm restore=catcatcon;
      lsmestimate female*prog
        'male-female, prog=jogging(1) hours=1.51'  [1, 1 1] [-1, 2 1],
        'male-female, prog=swimming(2) hours=1.51' [1, 1 2] [-1, 2 2],
        'male-female, prog=reading(3) hours=1.51'  [1, 1 3] [-1, 2 3] / e adj=bon at hours=1.51;
      lsmestimate female*prog
        'male-female, prog=jogging(1) hours=2'  [1, 1 1] [-1, 2 1],
        'male-female, prog=swimming(2) hours=2' [1, 1 2] [-1, 2 2],
        'male-female, prog=reading(3) hours=2'  [1, 1 3] [-1, 2 3] / e adj=bon at hours=2;
      lsmestimate female*prog
        'male-female, prog=jogging(1) hours=2.5'  [1, 1 1] [-1, 2 1],
        'male-female, prog=swimming(2) hours=2.5' [1, 1 2] [-1, 2 2],
        'male-female, prog=reading(3) hours=2.5'  [1, 1 3] [-1, 2 3] / e adj=bon at hours=2.5;
    run;

Estimating the simple effects of X across M and Z using lsmestimate

Are the conditional interactions significant?

The overall test of each conditional interaction of female and program (at a fixed number of hours) involves tests of 2 coefficients (which are differences in simple effects), so must be tested with a joint F-test

The “joint” option on lsmestimate performs a joint F-test

Are the conditional interactions significant?

    proc plm restore=catcatcon;
      lsmestimate female*prog
        'diff male-female, prog=1 - prog=2, hours=1.51' [1, 1 1] [-1, 2 1] [-1, 1 2] [1, 2 2],
        'diff male-female, prog=1 - prog=3, hours=1.51' [1, 1 1] [-1, 2 1] [-1, 1 3] [1, 2 3],
        'diff male-female, prog=2 - prog=3, hours=1.51' [1, 1 2] [-1, 2 2] [-1, 1 3] [1, 2 3] / e at hours=1.51 joint;
      lsmestimate female*prog
        'diff male-female, prog=1 - prog=2, hours=2' [1, 1 1] [-1, 2 1] [-1, 1 2] [1, 2 2],
        'diff male-female, prog=1 - prog=3, hours=2' [1, 1 1] [-1, 2 1] [-1, 1 3] [1, 2 3],
        'diff male-female, prog=2 - prog=3, hours=2' [1, 1 2] [-1, 2 2] [-1, 1 3] [1, 2 3] / e at hours=2 joint;
      lsmestimate female*prog
        'diff male-female, prog=1 - prog=2, hours=2.5' [1, 1 1] [-1, 2 1] [-1, 1 2] [1, 2 2],
        'diff male-female, prog=1 - prog=3, hours=2.5' [1, 1 1] [-1, 2 1] [-1, 1 3] [1, 2 3],
        'diff male-female, prog=2 - prog=3, hours=2.5' [1, 1 2] [-1, 2 2] [-1, 1 3] [1, 2 3] / e at hours=2.5 joint;
    run;

Are the conditional interactions significant?

Female and prog significantly interact at hours = 1.51, 2 and 2.5

3-way interaction: graphing simple effects

We add the at option to an interaction plot; a separate graph is plotted for each value of hours

    proc plm restore=catcatcon;
      effectplot interaction (x=female sliceby=prog) / at(hours=1.51 2 2.5) clm connect;
    run;

3-way interaction: graphing simple effects

Interaction more pronounced at lower numbers of hours

Logistic Regression

Binary (0/1) outcome

Often defined as success and failure

Models how predictors affect probability of the outcome

Probability, p, is transformed to logit in logistic regression

Logit transformation

Logit transforms probability to log-odds metric

Can take on any value (instead of restricted to 0 through 1)
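For reference, the standard definition of the logit and its inverse, with $\eta$ used here as a generic name for the log-odds:

```latex
\begin{align*}
% probability -> log-odds
logit(p) = \log\left(\frac{p}{1 - p}\right) &= \eta \\
% log-odds -> probability
p &= \frac{e^{\eta}}{1 + e^{\eta}}
\end{align*}
```

As p approaches 0 the logit goes to $-\infty$, and as p approaches 1 it goes to $+\infty$.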

Logistic regression

Logit of p (not p itself) is modeled as having a linear relationship with predictors

Non-linear relationship between p and predictors

Imagine a simple logit model where we estimate the log odds of p when X=0 and X=1:

The difference between log odds estimate is:

Remembering our logarithmic identity and the definition of odds:

Non-linear relationship between p and predictors

We substitute and get:

Which we then exponentiate:
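The steps on these two slides can be sketched as follows, assuming a model $logit(p) = \beta_0 + \beta_1 X$ and writing $p_0$ and $p_1$ for the probabilities at X=0 and X=1 (notation chosen here for illustration):

```latex
\begin{align*}
% log odds at X = 0 and X = 1
logit(p_0) &= \beta_0, \qquad logit(p_1) = \beta_0 + \beta_1 \\
% difference between the two log odds
logit(p_1) - logit(p_0) &= \beta_1 \\
% identity \log a - \log b = \log(a/b), and odds = p / (1 - p)
\beta_1 &= \log\left(\frac{odds_1}{odds_0}\right) \\
% exponentiate both sides
e^{\beta_1} &= \frac{odds_1}{odds_0} = \frac{p_1 / (1 - p_1)}{p_0 / (1 - p_0)}
\end{align*}
```

The exponentiated coefficient is therefore the ratio of the odds at X=1 to the odds at X=0.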

Odds ratios

Exponentiated logistic regression coefficients are interpreted as odds ratios (ORs)

By what factor do the odds change per unit increase in the predictor?

Or, what is the percent change in the odds per unit increase in the predictor?

Odds ratios are constant across the range of the predictor

Differences in probabilities are not

But ORs can be misleading without knowing the underlying probabilities
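
A small numeric sketch may help here. The intercept and slope below are hypothetical values chosen only for illustration (they are not estimates from the exercise data). The odds ratio per unit of x is identical everywhere, while the change in probability depends on where along x we look.

```python
import math

def inv_logit(x):
    """Convert log-odds to a probability."""
    return 1 / (1 + math.exp(-x))

def odds(p):
    """Convert a probability to odds."""
    return p / (1 - p)

# Hypothetical intercept and slope on the logit scale (illustration only).
b0, b1 = -4.0, 1.0

odds_ratio = math.exp(b1)                 # factor change in odds per unit of x
percent_change = (odds_ratio - 1) * 100   # the same effect as a percent change

rows = []
for x in range(8):
    p_lo = inv_logit(b0 + b1 * x)
    p_hi = inv_logit(b0 + b1 * (x + 1))
    rows.append((x, odds(p_hi) / odds(p_lo), p_hi - p_lo))

for x, or_x, diff in rows:
    print(f"x={x}: OR={or_x:.3f}, change in p={diff:.3f}")
```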

Logistic regression, categorical-by-continuous interaction: example model

We model how the odds (probability) of satisfaction is predicted by hours of exercise, program and their interaction

We can create an item store in proc logistic for proc plm

Logistic regression, categorical-by-continuous interaction: example model

proc logistic data = exercise descending;
class prog / param=glm order=internal;
model satisfied = prog|hours / expb;
store logit;
run;

descending tells SAS to model the probability of 1 instead of 0, the default

param=glm ensures we use dummy coding (rather than effect coding, the default)

expb exponentiates the regression coefficients, although not all are interpreted as odds ratios

Logistic regression, categorical-by-continuous interaction: example model

Interaction is significant

Logistic regression cat-by-cont, calculating and graphing simple ORs

The simple slope of hours in each program yields an odds ratio when exponentiated

We use the oddsratio statement within proc logistic to estimate these simple odds ratios

A nice odds ratio plot is produced by default

Logistic regression cat-by-cont, calculating and graphing simple ORs

proc logistic data = exercise descending;
class prog / param=glm order=internal;
model satisfied = prog|hours / expb;
oddsratio hours / at(prog=all);
store logit;
run;

The at(prog=all) option requests that oddsratio for hours be calculated at each level of prog

Logistic regression cat-by-cont, calculating and graphing simple ORs

Increasing weekly hours of exercise increases odds of satisfaction in jogging and swimming groups

Simple odds ratios can be compared in estimate statements

This code produces the simple odds ratios in an estimate statement

proc plm restore=logit;
estimate 'hours OR, prog=1' hours 1 hours*prog 1 0 0,
         'hours OR, prog=2' hours 1 hours*prog 0 1 0,
         'hours OR, prog=3' hours 1 hours*prog 0 0 1 / e exp cl;
run;

This code compares them

proc plm restore=logit;
estimate 'ratio hours OR, prog=1/prog=2' hours*prog 1 -1 0,
         'ratio hours OR, prog=1/prog=3' hours*prog 1 0 -1,
         'ratio hours OR, prog=2/prog=3' hours*prog 0 1 -1 / e exp cl;
run;

Simple odds ratios can be compared in estimate statements

The exponentiated difference between two simple slopes (an exponentiated interaction coefficient) yields a ratio of odds ratios

ORjog/ORswim = Ratio of ORs

4.109/5.079 = .809
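
The arithmetic above can be checked with a short sketch. It uses only the two odds ratios quoted on this slide; the variable names are illustrative. It also shows that exponentiating the difference in log odds ratios (the difference in simple slopes) gives the same ratio.

```python
import math

or_jog = 4.109   # simple OR of hours for jogging, from the slide
or_swim = 5.079  # simple OR of hours for swimming, from the slide

# Ratio of odds ratios computed directly
ratio = or_jog / or_swim

# Same quantity via the difference in simple slopes on the log-odds scale
slope_diff = math.log(or_jog) - math.log(or_swim)
ratio_from_slopes = math.exp(slope_diff)

print(round(ratio, 3))  # 0.809
```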

Predicted probabilities

Odds ratios summarize the effects of predictors in 1 number, but can be misleading because we don’t know the underlying probabilities

E.g. the OR comparing p=.003 to p=.001 is about the same (roughly 3) as the OR comparing p=.5 to p=.25
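
Worked out with the definition odds = p/(1-p), the two comparisons give nearly identical odds ratios even though the differences in probability are .002 and .25:

```latex
\begin{align*}
\mathrm{OR}_{.003 \text{ vs } .001}
  &= \frac{.003/.997}{.001/.999}
   = \frac{.003 \times .999}{.001 \times .997}
   \approx 3.006 \\
\mathrm{OR}_{.5 \text{ vs } .25}
  &= \frac{.5/.5}{.25/.75}
   = \frac{1}{1/3}
   = 3
\end{align*}
```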

Good idea to get sense of probabilities of outcome across groups

The lsmeans statement for predicted probabilities

The lsmeans statement is used to estimate marginal means

The ilink option allows transformation back to the original response metric (here probabilities)

The at option allows specification of continuous covariates for estimation of means

The lsmeans statement for predicted probabilities

proc plm source = logit;
lsmeans prog / at hours=1.5 ilink plots=none;
lsmeans prog / at hours=2 ilink plots=none;
lsmeans prog / at hours=2.5 ilink plots=none;
run;

The lsmeans statement for predicted probabilities

Predicted probabilities are in the column “Mean”

Graphs of predicted probabilities

The effectplot statement by default plots the outcome in its original metric

We can get an idea of the simple effects and simple slopes in the probability metric with 2 effectplot statements

proc plm restore=logit;
effectplot interaction (x=prog) / at(hours = 1.5 2 2.5) clm;
effectplot slicefit (x=hours sliceby=prog) / clm;
run;

Graphs of predicted probabilities


Concluding guidelines

Guidelines for using estimate statement to estimate simple slopes

Always put a 1 after the coefficient for slope variable

If interacted with continuous IV (not quadratic), put value of continuous IV after interaction coefficient

If interacted with categorical, put a 1 after relevant interaction dummy

If the slope variable is part of a 3-way interaction, make sure to include:

The coefficient alone

Both 2-way coefficients involving the slope variable and either interactor

The 3-way coefficient involving all interactors

Follow the second rule above if the interaction involves a continuous interactor (unless both interactors are continuous, in which case apply the product of the 2 continuous interactors)

Follow the third rule if the interaction involves only dummy variables

To estimate differences, subtract values across coefficients

Use “e” to check values and coefficients

Use “joint” to perform a joint F-test

Use adj= to correct for multiple comparisons

Use exp to exponentiate estimates (for logistic and other non-linear models)
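
The logic behind these guidelines is that each row of an estimate statement is a weighted sum of model coefficients. The sketch below mimics that arithmetic. The coefficient values are hypothetical (they are not output from the seminar's models), and the helper name estimate is only an illustration, not a SAS or library function.

```python
import math

# Hypothetical coefficients under GLM-style dummy coding, where the last
# level of prog is the reference and its interaction coefficient is 0.
beta = {
    "hours": 0.2,
    "hours*prog1": 0.6,
    "hours*prog2": 0.9,
    "hours*prog3": 0.0,
}

def estimate(weights, exponentiate=False):
    """Weighted sum of coefficients, like one row of an estimate statement."""
    value = sum(w * beta[name] for name, w in weights.items())
    return math.exp(value) if exponentiate else value

# Simple slopes: a 1 on the slope variable and a 1 on the relevant dummy
slope_prog1 = estimate({"hours": 1, "hours*prog1": 1})
slope_prog2 = estimate({"hours": 1, "hours*prog2": 1})

# Difference of simple slopes: subtract the weights; "hours" cancels out
diff_1_vs_2 = estimate({"hours*prog1": 1, "hours*prog2": -1})

# With exponentiation the difference becomes a ratio of odds ratios
ratio_of_ors = estimate({"hours*prog1": 1, "hours*prog2": -1}, exponentiate=True)

print(slope_prog1, slope_prog2, diff_1_vs_2, ratio_of_ors)
```

Subtracting the weight rows is why the slope variable's own coefficient drops out of the comparison.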

Concluding guidelines

Guidelines for using lsmestimate statement to estimate simple effects

Think of simple effects as differences between means

Assign one mean the value 1 and the other -1

Remember to use ordinal values for categorical predictors, not the actual numeric values

To compare simple effects, put two effects on the same row and reverse the values for one of them

Use joint for joint F-tests

Use adj= for multiple comparisons
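
The same idea applies to lsmestimate, except that the weights go on cell means rather than on coefficients. A minimal sketch follows, using hypothetical cell means keyed by the ordinal positions of the levels (first level of female, first level of prog, and so on); the helper name lsm_estimate is illustrative only.

```python
# Hypothetical cell means keyed by ordinal level: (female level, prog level)
cell_means = {
    (1, 1): 10.0,
    (2, 1): 7.0,
    (1, 2): 12.0,
    (2, 2): 11.0,
}

def lsm_estimate(weights):
    """Weighted sum of cell means, like one row of an lsmestimate statement."""
    return sum(w * cell_means[cell] for cell, w in weights.items())

# Simple effect of female within each prog: one mean gets 1, the other -1
effect_prog1 = lsm_estimate({(1, 1): 1, (2, 1): -1})
effect_prog2 = lsm_estimate({(1, 2): 1, (2, 2): -1})

# Comparing the two simple effects: both on one row, signs reversed for one
diff_of_effects = lsm_estimate({(1, 1): 1, (2, 1): -1, (1, 2): -1, (2, 2): 1})

print(effect_prog1, effect_prog2, diff_of_effects)
```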

It’s over!

Thank you for attending!