771Lec14-RelationExtraction - CSE

CSCE 771

Natural Language Processing

Lecture 14

Relation Extraction

Topics

Relation Extraction

Readings: Chapter 22

March 4, 2013

NLTK 7.4-7.5

Overview

Last Time

NER

NLTK

Chunking Example 7.4 (code_chunker1.py),

chinking Example 7.5 (code_chinker.py)

Evaluation Example 7.8 (code_unigram_chunker.py)

Example 7.9 (code_classifier_chunker.py)

Today

Relation extraction

ACE, Freebase, DBpedia

Ontological relations

Rules for extracting IS-A

Supervised relation extraction

Relation Bootstrapping

Unsupervised relation extraction

NLTK 7.5 Named Entity Recognition

Readings

NLTK Ch 7.4 - 7.5

CSCE 771 Spring 2013

Dear Dr. Mathews,

I have the following questions:

1. (c) Do you need the regular expression that will

capture the link inside href="..."?

(d) What kind of description you want? It is a python

function with no argument. Do you want answer like

that?

3. (f-g) Do you mean top 100 in terms of count?

4.(e-f) You did not show how to use nltk for HMM and

Brill tagging. Can you please give an example?

-Thanks

Relation Extraction

What is relation extraction?

Founded in 1801 as South Carolina College, USC is the

flagship institution of the University of South Carolina

System and offers more than 350 programs of study

leading to bachelor's, master's, and doctoral degrees

from fourteen degree-granting colleges and schools to

an enrollment of approximately 45,251 students,

30,967 on the main Columbia campus. … [wiki]

complex relation = summarization

focus on binary relation predicate(subject, object) or

triples <subj predicate obj>

Wiki Info Box –

structured data

template

• standard things about

Universities

• Established

• type

• faculty

• students

• location

• mascot

Focus on extracting binary relations

• predicate(subject, object) from predicate logic

• triples <subj relation object>

• Directed graphs
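A minimal Python 3 sketch of the three views above (predicate, triple, directed graph). The relation names (`founded_in`, `flagship_of`, `located_in`) are illustrative labels made up for this example; the facts themselves come from the USC paragraph quoted earlier.

```python
from collections import defaultdict, namedtuple

# A binary relation as a triple <subj relation obj>.
Triple = namedtuple("Triple", ["subj", "relation", "obj"])

# Relation names are hypothetical labels, not taken from any real schema.
triples = [
    Triple("USC", "founded_in", "1801"),
    Triple("USC", "flagship_of", "University of South Carolina System"),
    Triple("USC", "located_in", "Columbia"),
]


def to_graph(triples):
    """Build a directed graph: subject -> list of (relation, object) edges."""
    graph = defaultdict(list)
    for t in triples:
        graph[t.subj].append((t.relation, t.obj))
    return dict(graph)


graph = to_graph(triples)
for relation, obj in graph["USC"]:
    print("USC --%s--> %s" % (relation, obj))
```

Each triple becomes one labeled edge, so a set of extracted relations can be stored and queried as a graph.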

Why relation extraction?

create new structured KB

Augment existing KBs: words -> WordNet, facts -> Freebase or DBpedia

Support question answering: Jeopardy

Which relations

Automated Content Extraction (ACE) http://www.itl.nist.gov/iad/mig//tests/ace/

17 relations

ACE examples

Unified Medical Language System

(UMLS)

UMLS: 134 entity types, 54 relations

http://www.nlm.nih.gov/research/umls/

UMLS semantic network

Current Relations in the UMLS

Semantic Network

isa

associated_with

physically_related_to

part_of

consists_of

contains

connected_to

interconnects

branch_of

tributary_of

ingredient_of

spatially_related_to

location_of

adjacent_to

surrounds

traverses

functionally_related_to

affects

…

…

temporally_related_to

co-occurs_with

precedes

conceptually_related_to

evaluation_of

degree_of

analyzes

assesses_effect_of

measurement_of

measures

diagnoses

property_of

derivative_of

developmental_form_of

method_of

…

Databases of Wikipedia Relations

• DBpedia is a crowd-sourced community effort

• to extract structured information from Wikipedia

• and to make this information readily available

• DBpedia allows you to make sophisticated queries

http://dbpedia.org/About

English version of the DBpedia knowledge base

• 3.77 million things

• 2.35 million are classified in an ontology, including:

• 764,000 persons

• 573,000 places (including 387,000 populated places)

• 333,000 creative works (including 112,000 music albums, 72,000 films and 18,000 video games)

• 192,000 organizations (including 45,000 companies and 42,000 educational institutions)

• 202,000 species

• 5,500 diseases

Freebase

google (freebase wiki)

http://wiki.freebase.com/wiki/Main_Page

Ontological relations

• IS-A hypernym

• Instance-of

• has-Part

• hyponym (opposite of hypernym)

How to build extractors

Extracting IS_A relation

(Hearst 1992) Automatic Acquisition of Hyponyms from Large Text Corpora

Naproxen sodium is a nonsteroidal anti-inflammatory

drug (NSAID). [wiki]

Hearst's Patterns for Extracting IS-A

Patterns for <X IS-A Y>

“Y such as X”

“X or other Y”

“Y including X”

“Y, especially X”
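The four patterns above can be sketched as regular expressions. This is a simplified Python 3 illustration, not Hearst's implementation: it assumes X and Y are single words, whereas a real extractor would match noun-phrase chunks. The example sentences are made up for the demonstration.

```python
import re

# Simplifying assumption: each of X and Y is a single word.
WORD = r"([A-Za-z-]+)"

# Each entry: (compiled pattern, True if X comes first in the match).
PATTERNS = [
    (re.compile(WORD + r" such as " + WORD), False),      # Y such as X
    (re.compile(WORD + r" or other " + WORD), True),      # X or other Y
    (re.compile(WORD + r",? including " + WORD), False),  # Y including X
    (re.compile(WORD + r",? especially " + WORD), False), # Y, especially X
]


def extract_isa(text):
    """Return (X, Y) pairs such that the text suggests X IS-A Y."""
    pairs = []
    for regex, x_first in PATTERNS:
        for m in regex.finditer(text):
            first, second = m.group(1), m.group(2)
            x, y = (first, second) if x_first else (second, first)
            pairs.append((x, y))
    return pairs


text = ("He prescribes drugs such as naproxen. "
        "She treats bruises or other injuries. "
        "They visited countries including Spain. "
        "He speaks languages, especially French.")
pairs = extract_isa(text)
for x, y in pairs:
    print("%s IS-A %s" % (x, y))
```

The patterns give high precision on text that happens to use these constructions, but miss IS-A facts stated any other way.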


Extracting Richer Relations Using Specific Rules

Intuition: relations that commonly hold: located-in, cures, owns

What relations hold between two entities?

Fig 22.16 Pattern and Bootstrapping

Hand-built patterns for relations

Pros

Cons

– 20 –

CSCE 771 Spring 2013

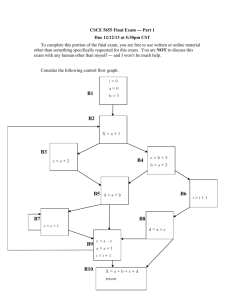

Supervised Relation Extraction

How to do classification in supervised relation extraction

1. Find all pairs of named entities

2. Decide if they are related

3,
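A small Python 3 sketch of the first two steps. The mention list and the `is_related` rule are assumptions for illustration: in a real system step 2 is a trained binary classifier, and the token-distance threshold here only stands in for it.

```python
from itertools import combinations

# Each mention: (text, entity type, token index). Values are illustrative.
mentions = [
    ("USC", "ORG", 0),
    ("South Carolina College", "ORG", 5),
    ("Columbia", "LOC", 12),
]


def candidate_pairs(mentions):
    """Step 1: enumerate every pair of entity mentions in the sentence."""
    return list(combinations(mentions, 2))


def is_related(m1, m2, max_gap=8):
    """Step 2 placeholder: stands in for a trained yes/no classifier.

    Hypothetical rule: call two mentions related if they are close together.
    """
    return abs(m1[2] - m2[2]) <= max_gap


pairs = candidate_pairs(mentions)
related = [(a[0], b[0]) for a, b in pairs if is_related(a, b)]
print(len(pairs), "candidate pairs;", len(related), "kept")
```

Filtering unrelated pairs first keeps the later, more expensive relation classifier from seeing mostly negative examples.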

ACE: Automated Content Extraction

• http://projects.ldc.upenn.edu/ace/

• Linguistic Data Consortium

• Entity Detection and Tracking (EDT)

• Relation Detection and Characterization (RDC)

• Event Detection and Characterization (EDC)

• 6 classes of relations, 17 overall

Word features for relation Extraction

Headwords of M1 and M2

• Named entity types of M1 and M2

• Mention levels of M1 and M2: name, pronoun, nominal
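The word-level features listed above can be collected into a feature dictionary for a classifier. This Python 3 sketch makes several assumptions: mentions are given as token spans with a type and a mention level, the head word is approximated by the last token of the mention, and the feature names are invented for the example.

```python
def head_word(tokens):
    """Crude assumption: the head of a mention is its last token."""
    return tokens[-1]


def word_features(tokens, m1, m2):
    """Features for a mention pair; m1 must precede m2 in the sentence.

    m1, m2: dicts with 'start', 'end' (token offsets, end exclusive),
    'type' (entity type) and 'level' (name, pronoun or nominal).
    """
    return {
        "head_m1": head_word(tokens[m1["start"]:m1["end"]]),
        "head_m2": head_word(tokens[m2["start"]:m2["end"]]),
        "type_m1": m1["type"],
        "type_m2": m2["type"],
        "types": m1["type"] + "-" + m2["type"],
        "level_m1": m1["level"],
        "level_m2": m2["level"],
        "words_between": tokens[m1["end"]:m2["start"]],
    }


tokens = ("USC is the flagship institution of the "
          "University of South Carolina System").split()
m1 = {"start": 0, "end": 1, "type": "ORG", "level": "name"}
m2 = {"start": 7, "end": 12, "type": "ORG", "level": "name"}
feats = word_features(tokens, m1, m2)
print(feats)
```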

Parse Features for Relation Extraction

Base syntactic chunk sequence from one entity to the other

constituent path

Dependency path

Gazetteer and trigger word features for relation extraction

Trigger list for kinship relations

Gazetteer: name list
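A short Python 3 sketch of how these two resources might become features. The trigger words and gazetteer entries below are small made-up samples, not the lists used in any particular system.

```python
# Illustrative samples only; real trigger lists and gazetteers are much larger.
KINSHIP_TRIGGERS = {"parent", "wife", "husband", "brother",
                    "sister", "son", "daughter"}
GAZETTEER = {"Columbia", "South Carolina"}


def trigger_features(tokens_between, m2_text):
    """Binary features: a kinship trigger between the mentions,
    and whether the second mention appears in the name list."""
    lowered = [t.lower() for t in tokens_between]
    return {
        "has_kinship_trigger": any(t in KINSHIP_TRIGGERS for t in lowered),
        "m2_in_gazetteer": m2_text in GAZETTEER,
    }


example = trigger_features(["and", "his", "wife"], "Columbia")
print(example)
```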

Evaluation of Supervised Relation Extraction

• P/R/F
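Precision, recall and F-measure over extracted relations can be computed by comparing predicted triples with gold triples. A minimal Python 3 sketch; the gold and predicted sets are toy data invented for the example.

```python
def prf(predicted, gold):
    """Precision, recall and F1 of predicted triples against gold triples."""
    predicted, gold = set(predicted), set(gold)
    tp = len(predicted & gold)  # correctly extracted triples
    precision = tp / len(predicted) if predicted else 0.0
    recall = tp / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Toy data: 4 gold triples, 3 predictions of which 2 are correct.
gold = [("a", "r", "b"), ("c", "r", "d"), ("e", "r", "f"), ("g", "r", "h")]
predicted = [("a", "r", "b"), ("c", "r", "d"), ("x", "r", "y")]

p, r, f = prf(predicted, gold)
print("P=%.3f R=%.3f F1=%.3f" % (p, r, f))
```

Here P = 2/3, R = 2/4 and F1 = 4/7.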

Summary

+ high accuracies

- requires a labeled training set

- models are brittle, don't generalize well

Semi-Supervised Relation Extraction

Seed-based or bootstrapping approaches to RE

No training set

Can you … do anything?

Bootstrapping

Relation Bootstrapping (Hearst 1992)

Gather seed pairs of relation R

iterate

1. find sentences with pairs,

2. look at context...

3. use patterns to search for more pairs
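The loop above can be sketched on a toy corpus in Python 3. This is an illustration under strong assumptions, not a published algorithm: the "context" is just the literal string between the two entities, entities are approximated as runs of capitalized words, and the four sentences are made up.

```python
import re

# Assumption: an entity is a run of capitalized words.
ENTITY = r"([A-Z][\w-]*(?: [A-Z][\w-]*)*)"


def find_patterns(corpus, pairs):
    """Steps 1-2: find sentences with a known pair, keep the text between."""
    patterns = set()
    for author, book in pairs:
        for sent in corpus:
            a, b = sent.find(author), sent.find(book)
            if a == -1 or b == -1:
                continue
            if a < b:
                patterns.add(("author_first", sent[a + len(author):b]))
            else:
                patterns.add(("book_first", sent[b + len(book):a]))
    return patterns


def apply_patterns(corpus, patterns):
    """Step 3: use the patterns to search for more (author, book) pairs."""
    found = set()
    for order, middle in patterns:
        regex = re.compile(ENTITY + re.escape(middle) + ENTITY)
        for sent in corpus:
            for m in regex.finditer(sent):
                first, second = m.group(1), m.group(2)
                if order == "author_first":
                    found.add((first, second))
                else:
                    found.add((second, first))
    return found


def bootstrap(corpus, seeds, iterations=2):
    pairs = set(seeds)
    for _ in range(iterations):
        pairs |= apply_patterns(corpus, find_patterns(corpus, pairs))
    return pairs


corpus = [
    "Great Expectations, by Charles Dickens, is a classic.",
    "Emma, by Jane Austen, is a classic.",
    "Charles Dickens wrote Great Expectations.",
    "Herman Melville wrote Moby-Dick.",
]
seeds = {("Charles Dickens", "Great Expectations")}
result = bootstrap(corpus, seeds)
print(sorted(result))
```

One seed pair yields two patterns (", by " and " wrote "), which in turn find two new pairs. Without any check on pattern quality, a single bad pattern can pull in wrong pairs that then generate more bad patterns.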

Bootstrapping Example

Extract <author, book> pairs

DIPRE: start with seeds

Find instances

Extract patterns

Now iterate

Snowball Algorithm (Agichtein and Gravano 2000)

Distant supervision paradigm

Like classified

Unsupervised relation extraction

Banko et al. 2007, “Open information extraction from the Web”

Extracting relations from the web with

• no training data

• no predetermined list of relations

• The Open Approach

1. Use parsed data to train a “trustworthy” classifier

2. Extract trustworthy relations among NPs

3. Rank relations based on text redundancy
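Step 3 can be illustrated by counting how often the same tuple is extracted from different sentences. This Python 3 sketch uses raw counts as the score, which is a simplification of redundancy-based ranking; the extractions are toy data.

```python
from collections import Counter


def rank_by_redundancy(extractions):
    """Rank (NP1, relation, NP2) tuples by how many times each was extracted."""
    return Counter(extractions).most_common()


# Toy extractions, one per sentence in which the tuple was found.
extractions = [
    ("USC", "is located in", "Columbia"),
    ("USC", "was founded in", "1801"),
    ("USC", "is located in", "Columbia"),
    ("USC", "is located in", "Columbia"),
    ("USC", "was founded in", "1801"),
    ("USC", "is", "flagship"),
]

ranked = rank_by_redundancy(extractions)
for tup, count in ranked:
    print(count, tup)
```

A tuple seen in many independent sentences is more likely to be a real fact than one seen once.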

Evaluation of Semi-supervised and Unsupervised RE

No gold standard ... the web is not tagged

• no way to compute precision or recall

Instead only estimate precision

• draw a sample and check precision manually

• alternatively, choose several levels of recall and check the precision there

No way to check the recall?

• randomly select a text sample and check manually
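Both estimates can be sketched in Python 3. The `judge` function below stands in for a human annotator and is an assumption of the example, as are the toy extractions; "several levels" is approximated here by precision over the top-k ranked extractions.

```python
import random


def estimate_precision(extractions, judge, sample_size, seed=0):
    """Estimate precision from a random sample judged by hand.

    judge(extraction) -> bool is a stand-in for manual checking.
    """
    rng = random.Random(seed)
    sample = rng.sample(extractions, min(sample_size, len(extractions)))
    if not sample:
        return 0.0
    return sum(1 for e in sample if judge(e)) / len(sample)


def precision_at_k(ranked_extractions, judge, k):
    """Precision over the top-k extractions of a ranked list."""
    top = ranked_extractions[:k]
    if not top:
        return 0.0
    return sum(1 for e in top if judge(e)) / len(top)


# Toy setup: extractions are integers, and every fourth one is "wrong".
extractions = list(range(100))


def judge(e):
    return e % 4 != 0


full = estimate_precision(extractions, judge, sample_size=100)
sampled = estimate_precision(extractions, judge, sample_size=20)
top8 = precision_at_k(extractions, judge, k=8)
print(full, sampled, top8)
```

Sampling all 100 items recovers the true precision of 0.75; a sample of 20 gives only an estimate of it.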

NLTK Info. Extraction

NLTK Review

NLTK 7.1-7.3

Chunking Example 7.4 (code_chunker1.py),

chinking Example 7.5 (code_chinker.py)

simple re_chunker

Evaluation Example 7.8 (code_unigram_chunker.py)

Example 7.9 (code_classifier_chunker.py)

Review 7.4: Simple Noun Phrase Chunker

grammar = r"""
  NP: {<DT|PP\$>?<JJ>*<NN>}   # chunk determiner/possessive, adjectives and nouns
      {<NNP>+}                # chunk sequences of proper nouns
"""
cp = nltk.RegexpParser(grammar)
sentence = [("Rapunzel", "NNP"), ("let", "VBD"), ("down", "RP"),
            ("her", "PP$"), ("long", "JJ"), ("golden", "JJ"), ("hair", "NN")]
print cp.parse(sentence)

(S
  (NP Rapunzel/NNP)
  let/VBD
  down/RP
  (NP her/PP$ long/JJ golden/JJ hair/NN))

Review 7.5: Simple Noun Phrase Chinker

grammar = r"""
  NP:
    {<.*>+}          # Chunk everything
    }<VBD|IN>+{      # Chink sequences of VBD and IN
"""
sentence = [("the", "DT"), ("little", "JJ"), ("yellow", "JJ"), ("dog", "NN"),
            ("barked", "VBD"), ("at", "IN"), ("the", "DT"), ("cat", "NN")]
cp = nltk.RegexpParser(grammar)
print cp.parse(sentence)

(S
  (NP the/DT little/JJ yellow/JJ dog/NN)
  barked/VBD
  at/IN
  (NP the/DT cat/NN))

RegExp Chunker – conll2000

import nltk

from nltk.corpus import conll2000

cp = nltk.RegexpParser("")

test_sents = conll2000.chunked_sents('test.txt', chunk_types=['NP'])

print cp.evaluate(test_sents)

grammar = r"NP: {<[CDJNP].*>+}"

cp = nltk.RegexpParser(grammar)

print cp.evaluate(test_sents)

ChunkParse score:
    IOB Accuracy:  43.4%
    Precision:      0.0%
    Recall:         0.0%
    F-Measure:      0.0%

ChunkParse score:
    IOB Accuracy:  87.7%
    Precision:     70.6%
    Recall:        67.8%
    F-Measure:     69.2%
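As a quick check, the F-measure reported for the second chunker is the harmonic mean of its precision and recall. A short Python 3 sketch using the numbers from the score above:

```python
def f_measure(precision, recall):
    """Balanced F-measure: harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


f = f_measure(70.6, 67.8)
print(round(f, 1))  # 69.2
```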

Conference on Computational Natural Language Learning (CoNLL-2000)

http://www.cnts.ua.ac.be/conll2000/chunking/

CoNLL 2013: Seventeenth Conference on Computational Natural Language Learning

Evaluation Example 7.8 (code_unigram_chunker.py)

AttributeError: 'module' object has no attribute 'conlltags2tree'

code_classifier_chunker.py

NLTK was unable to find the megam file!

Use software specific configuration paramaters or set

the MEGAM environment variable.

For more information, on megam, see:

<http://www.cs.utah.edu/~hal/megam/>

7.4 Recursion in Linguistic Structure

code_cascaded_chunker

grammar = r"""
  NP: {<DT|JJ|NN.*>+}           # Chunk sequences of DT, JJ, NN
  PP: {<IN><NP>}                # Chunk prepositions followed by NP
  VP: {<VB.*><NP|PP|CLAUSE>+$}  # Chunk verbs and their arguments
  CLAUSE: {<NP><VP>}            # Chunk NP, VP
"""
cp = nltk.RegexpParser(grammar)
sentence = [("Mary", "NN"), ("saw", "VBD"), ("the", "DT"), ("cat", "NN"),
            ("sit", "VB"), ("on", "IN"), ("the", "DT"), ("mat", "NN")]
print cp.parse(sentence)

(S
  (NP Mary/NN)
  saw/VBD
  (CLAUSE
    (NP the/DT cat/NN)
    (VP sit/VB (PP on/IN (NP the/DT mat/NN)))))

A sentence having deeper nesting

sentence = [("John", "NNP"), ("thinks", "VBZ"), ("Mary", "NN"),
            ("saw", "VBD"), ("the", "DT"), ("cat", "NN"), ("sit", "VB"),
            ("on", "IN"), ("the", "DT"), ("mat", "NN")]
print cp.parse(sentence)

(S
  (NP John/NNP)
  thinks/VBZ
  (NP Mary/NN)
  saw/VBD
  (CLAUSE
    (NP the/DT cat/NN)
    (VP sit/VB (PP on/IN (NP the/DT mat/NN)))))

Trees

print tree4[1]
(VP chased (NP the rabbit))

tree4[1].node
'VP'

tree4.leaves()
['Alice', 'chased', 'the', 'rabbit']

tree4[1][1][1]
'rabbit'

tree4.draw()

Trees - code_traverse.py

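# Python 2 / NLTK 2 syntax, as used in this lecture.
# In NLTK 3, t.node becomes t.label() and nltk.Tree(s) becomes nltk.Tree.fromstring(s).
def traverse(t):
    try:
        t.node
    except AttributeError:
        # t is a leaf (a plain string)
        print t,
    else:
        # Now we know that t.node is defined
        print '(', t.node,
        for child in t:
            traverse(child)
        print ')',

t = nltk.Tree('(S (NP Alice) (VP chased (NP the rabbit)))')
traverse(t)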

NLTK 7.5 Named Entity Recognition

sent = nltk.corpus.treebank.tagged_sents()[22]

print nltk.ne_chunk(sent, binary=True)
