Research Design Choices and Causal Inferences


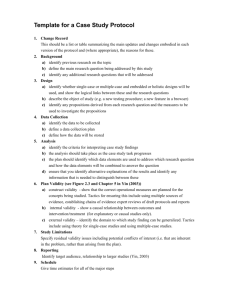

Professor Alexander Settles

Approaches and Strategies of Research Design
• Quantitative strategies
  – Exploratory studies: in-depth interviews; focus group studies
  – Descriptive studies: survey research; relationship studies
  – Causal studies: experimental; comparative
• Qualitative strategies
  – Explanatory studies: case studies; ethnographic studies
  – Interpretive studies: grounded theory
  – Critical studies: action research

Gathering Qualitative Data
• Observation studies
• Participation studies
• Interviewing studies
• Archival studies
• Media analysis

What Tools Are Used in Designing Research?
• Success of research rests on the language of research:
  – Clear conceptualization of concepts
  – Shared understanding of concepts
[Figure: MindWriter project plan in Gantt chart format]

What Is Research Design?
• Blueprint
• Plan
• Guide
• Framework

Design in the Research Process
[Figure]

Descriptors of Research Design
• Perceptual awareness
• Purpose of study
• Question crystallization
• Data collection method
• Experimental effects
• Time dimension
• Research environment
• Topical scope

Degree of Question Crystallization
• Exploratory study
  – Loose structure
  – Expand understanding
  – Provide insight
  – Develop hypotheses
• Formal study
  – Precise procedures
  – Begins with hypotheses
  – Answers research questions

Approaches for Exploratory Investigations
• Participant observation
• Film, photographs
• Psychological testing
• Case studies
• Ethnography
• Expert interviews
• Document analysis

Desired Outcomes of Exploratory Studies
• Established the range and scope of possible management decisions
• Established the major dimensions of the research task
• Defined a set of subsidiary questions that can guide research design
• Developed hypotheses about possible causes of the management dilemma
• Learned which hypotheses can be safely ignored
• Concluded that additional research is not needed or not feasible

Commonly Used Exploratory Techniques
• Secondary data analysis
• Experience surveys
• Focus groups

Experience Surveys
• What is being done?
• What has been tried in the past with or without success?
• How have things changed?
• Who is involved in the decisions?
• What problem areas can be seen?
• Whom can we count on to assist or participate in the research?

Data Collection Method
• Monitoring
• Communication

The Time Dimension
• Cross-sectional
• Longitudinal

The Topical Scope
• Statistical study
  – Breadth
  – Population inferences
  – Quantitative
  – Generalizable findings
• Case study
  – Depth
  – Detail
  – Qualitative
  – Multiple sources of information

The Research Environment
• Field conditions
• Lab conditions
• Simulations

Purpose of the Study
• Reporting
• Descriptive
• Causal-explanatory
• Causal-predictive

Descriptive Studies
• Who?
• What?
• How much?
• When?
• Where?
• Description of population characteristics
• Estimates of frequency of characteristics
• Discovery of associations among variables

Experimental Effects
• Ex post facto study: an after-the-fact report on what happened to the measured variable
• Experiment: a study involving the manipulation or control of one or more variables to determine the effect on another variable

Causation and Experimental Design
• Control/matching
• Random assignment

Causal Studies
• Symmetrical
• Reciprocal
• Asymmetrical

Understanding Causal Relationships
[Figure: relationships among property, disposition, behavior, stimulus, and response]

Asymmetrical Causal Relationships
• Stimulus-response
• Property-disposition
• Property-behavior
• Disposition-behavior

Types of Asymmetrical Causal Relationships
• Stimulus-response: an event or change results in a response from some object.
  – A change in work rules leads to a higher level of worker output.
  – A change in government economic policy restricts corporate financial decisions.
  – A price increase results in fewer unit sales.
• Property-disposition: an existing property causes a disposition.
  – Age and attitudes about saving.
  – Gender and attitudes toward social issues.
  – Social class and opinions about taxation.
• Disposition-behavior: a disposition causes a specific behavior.
  – Opinions about a brand and its purchase.
  – Job satisfaction and work output.
  – Moral values and tax cheating.
• Property-behavior: an existing property causes a specific behavior.
  – Stage of the family life cycle and purchases of furniture.
  – Social class and family savings patterns.
  – Age and sports participation.
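To make random assignment (listed above under causation and experimental design) concrete, here is a minimal Python simulation built on made-up assumptions: a hypothetical confounder, `motivation`, raises the outcome and, when participants self-select, also raises the chance of taking the treatment. The function names, coefficients, and probabilities are illustrative only and do not come from the course. Self-selection folds the effect of motivation into the group difference, while a coin-flip assignment breaks the link between motivation and treatment, so the difference in means lands near the true effect.

```python
import random
import statistics


def outcome(motivation, treated, noise, effect):
    # Hypothetical outcome model: the confounder (motivation) and the
    # treatment both raise the outcome; noise is individual variation.
    return 3.0 * motivation + (effect if treated else 0.0) + noise


def compare_designs(n=20_000, effect=2.0, seed=1):
    """Estimate the treatment effect under self-selection and under random assignment."""
    rng = random.Random(seed)
    self_t, self_c, rand_t, rand_c = [], [], [], []
    for _ in range(n):
        motivation = rng.gauss(0.0, 1.0)
        noise = rng.gauss(0.0, 1.0)
        # Self-selection: motivated people opt into the treatment more often.
        opted_in = rng.random() < (0.8 if motivation > 0 else 0.2)
        # Random assignment: a fair coin flip, independent of motivation.
        assigned = rng.random() < 0.5
        (self_t if opted_in else self_c).append(outcome(motivation, opted_in, noise, effect))
        (rand_t if assigned else rand_c).append(outcome(motivation, assigned, noise, effect))
    self_selected_estimate = statistics.mean(self_t) - statistics.mean(self_c)
    randomized_estimate = statistics.mean(rand_t) - statistics.mean(rand_c)
    return self_selected_estimate, randomized_estimate


if __name__ == "__main__":
    naive, randomized = compare_designs()
    print(f"self-selected: {naive:.2f}  randomized: {randomized:.2f}  (true effect 2.0)")
```

Both designs are applied to the same simulated people; only the assignment rule differs, which isolates selection as the source of bias and illustrates why ruling out other possible causes of B is hard without randomization.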

Evidence of Causality
• Covariance between A and B
• Time order of events
• No other possible causes of B

Participants' Perceptual Awareness
• No deviations perceived
• Deviations perceived as unrelated
• Deviations perceived as researcher-induced

Descriptors of Research Design: Categories and Options
• The degree to which the research question has been crystallized: exploratory study; formal study
• The method of data collection: monitoring; communication study
• The power of the researcher to produce effects in the variables under study: experimental; ex post facto
• The purpose of the study: reporting; descriptive; causal-explanatory; causal-predictive
• The time dimension: cross-sectional; longitudinal
• The topical scope (breadth and depth) of the study: case; statistical study
• The research environment: field setting; laboratory research; simulation
• The participants' perceptual awareness of the research activity: actual routine; modified routine

Readings Review
• Buchanan, D. A. & Bryman, A. (2007). Contextualizing methods choice in organizational research.
  1. Choice of methods does not depend exclusively on links to research aims.
  2. A method is not merely a technique for snapping reality into focus; choices of method frame the data windows through which phenomena are observed, influencing interpretative schemas and theoretical development.
  3. Research competence thus involves coherently addressing the organizational, historical, political, ethical, evidential, and personal factors relevant to an investigation.

Organizational Properties
• Negotiated objectives
• Layered permissions
• Partisan conclusions
• Politics of publishing

Conclusions: choice of method is shaped by
• attributes of the organizational research setting or context,
• the research tradition or history relevant to a particular study,
• the inevitable politicization of the organizational researcher's role,
• constraints imposed by a growing concern with research ethics,
• theoretical and audience-related issues in translating evidence into practice, and
• personal preferences and biases with regard to choice of method.

• Bergh, D. D. & Fairbank, J. F. (2002). Measuring and testing change in strategic management research.
  – Examined the reliability assumptions of change variables, correlations between the change variable and its initial measure, and the selection of unbiased measurement alternatives.
  – Found that the typical approach to measuring and testing change (a simple difference between two measures of the same variable) is usually inappropriate and could lead to inaccurate findings and flawed conclusions.

Recommendations
• Researchers should screen their data in terms of:
  – reliability of the component measures
  – correlation between the component variables
  – equality of component variable variances
  – correlation with the initial component variable

Validity and Reliability Part 2

Validity
• Whether the research truly measures what it was intended to measure, or how truthful the research results are.

Reliability
• The extent to which results are consistent over time and accurately represent the total population under study. If the results of a study can be reproduced under a similar methodology, the research instrument is considered reliable.

Methods to Ensure Validity
• Face validity: an assessment of whether a measure appears, on the face of it, to measure the concept it is intended to measure.
• Content validity: the extent to which a measure adequately represents all facets of a concept.
• Criterion-related validity: applies to instruments that have been developed for usefulness as an indicator of a specific trait or behavior, either now or in the future.
• Construct validity: the extent to which a measure is related to other measures as specified by theory or previous research. Does a measure stack up with other variables the way we expect it to?

Reliability
• Test-retest reliability: the consistency of scores when the same measure is given to the same subjects at two different times.
• Inter-item reliability: the use of multiple items to measure a single concept.
• Inter-observer reliability: the extent to which different interviewers or observers using the same measure get equivalent results.

Graphic comparison
[Figure: target diagrams comparing reliability and validity; panel labels include "Valid, Reliable", "Not Valid, Not Reliable", and "Not Valid"]

How can validity be established?
• Quantitative studies:
  – measurements, scores, instruments used, research design
• Qualitative studies:
  – ways that researchers have devised to establish credibility: member checking, triangulation, thick description, peer reviews, external audits

How can reliability be established?
• Quantitative studies:
  – assumption of repeatability
• Qualitative studies:
  – reframe as dependability and confirmability

Methods to Ensure Validity and Reliability
• Triangulation: multiple methods or sources
• Controlling for bias
  – Selection bias
  – Observer bias
  – Questionnaire bias
  – Omitted-variable bias
  – Confirmation bias

Internal Validity
• High internal validity means that changes in the dependent variable were caused by (not merely related to or correlated with) the treatment.
• High internal validity can be attained only in an ideal true experimental design, or when steps have been taken to eliminate confounding factors or influences.
• Internal validity can be increased by:
  – repeating an experiment many times and changing or adding independent variables;
  – switching the control and experimental groups;
  – controlling confounding influences.
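The reliability types listed earlier are usually quantified with standard coefficients. The slides do not name any, so the choices below are common conventions rather than the course's method: a Pearson correlation for test-retest reliability, Cronbach's alpha for inter-item reliability, and Cohen's kappa for agreement between two observers. The last function applies the classical test-theory formula for the reliability of a simple difference score, which connects to Bergh and Fairbank's advice to screen component reliabilities, their correlation, and their variances. All function names are hypothetical; this is a stdlib-only sketch, not a validated psychometrics package.

```python
import math
import statistics
from collections import Counter


def pearson_r(x, y):
    """Test-retest reliability: correlation between two administrations of one measure."""
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def cronbach_alpha(items):
    """Inter-item reliability. `items` holds k lists, one per item, each with one score per respondent."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's summed scale score
    item_variance = sum(statistics.variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance / statistics.variance(totals))


def cohens_kappa(rater_a, rater_b):
    """Inter-observer reliability for two raters coding the same cases into categories."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected by chance, from each rater's marginal category frequencies.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    return (observed - expected) / (1 - expected)


def difference_score_reliability(r_xx, r_yy, r_xy, sd_x=1.0, sd_y=1.0):
    """Classical reliability of a change score D = Y - X, given the reliabilities
    of X and Y, their correlation, and their standard deviations."""
    num = sd_x**2 * r_xx + sd_y**2 * r_yy - 2 * r_xy * sd_x * sd_y
    den = sd_x**2 + sd_y**2 - 2 * r_xy * sd_x * sd_y
    return num / den


if __name__ == "__main__":
    print(pearson_r([1, 2, 3, 4, 5], [2, 4, 6, 8, 10]))
    print(cohens_kappa(["yes", "yes", "no", "no"], ["yes", "no", "no", "no"]))
    print(difference_score_reliability(0.8, 0.8, 0.6))
```

The last line shows why change scores deserve screening: two measures that are each reliable at 0.8 and correlate at 0.6 yield a difference score with a reliability of only about 0.5.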

Controlled experiments have the virtue of producing results with high internal validity, but they can carry the liability of low external validity.

External Validity
• External validity is the extent to which the cause-and-effect relationship can be generalized to other groups that were not part of the experiment.
• External validity is critically important in policy and program evaluation research because we would like our research findings to be generalizable. If external validity is low, the results are not generalizable.

Threats to Validity
• History confound: any independent variable other than the treatment variable that occurs between the pre-test and the post-test.
• Maturation confound: as the experiment is conducted, people grow older and become more experienced (a particular problem in longitudinal studies).
• Testing confound: different responses that an experimental subject may give to questions asked over and over again.
• Instrumentation confound: results from changing measurement instruments. For example, if different people collect the data and there is no inter-rater reliability, the measurement instrument has effectively changed.
• Regression to the mean: groups selected because of extreme scores tend to score closer to the mean when measured again, even without any treatment.
• Selection bias confound: when individuals can self-select into groups, selection bias can result.
• Diffusion of treatment confound: occurs when the control group cannot be prevented from receiving the treatment.
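Regression to the mean and Bergh and Fairbank's warning about simple change scores (both discussed above) can be seen in one small Python simulation. It assumes, purely for illustration, that each case has a fixed true score that is measured twice with independent random error and no treatment in between; the function names and parameters are hypothetical. Cases picked for extreme pre-test scores still drift back toward the mean at post-test, and the difference score ends up negatively correlated with the initial measure even though nothing actually changed.

```python
import random
import statistics


def pearson_r(x, y):
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def simulate_retest(n=10_000, error_sd=1.0, top_fraction=0.1, seed=7):
    """Measure an unchanging true score twice with independent error; no treatment is applied."""
    rng = random.Random(seed)
    true_scores = [rng.gauss(0.0, 1.0) for _ in range(n)]
    pre = [t + rng.gauss(0.0, error_sd) for t in true_scores]
    post = [t + rng.gauss(0.0, error_sd) for t in true_scores]

    # Select the cases with the most extreme (highest) pre-test scores.
    k = int(n * top_fraction)
    extreme = sorted(range(n), key=lambda i: pre[i], reverse=True)[:k]
    pre_mean = statistics.mean([pre[i] for i in extreme])
    post_mean = statistics.mean([post[i] for i in extreme])

    # Simple difference (change) scores and their correlation with the initial measure.
    change = [b - a for a, b in zip(pre, post)]
    return pre_mean, post_mean, pearson_r(pre, change)


if __name__ == "__main__":
    pre_mean, post_mean, r = simulate_retest()
    print(f"extreme group: pre {pre_mean:.2f}, post {post_mean:.2f}; r(pre, change) = {r:.2f}")
```

With true-score and error variances both set to 1, the pre-test reliability is 0.5, so the expected correlation between the initial score and the change score is about -0.5: the measurement error in the pre-test enters the change score with the opposite sign.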