N06-Variance and Regression

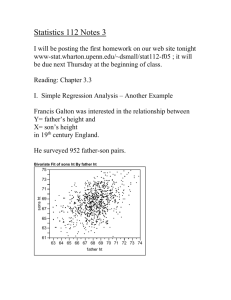

BIOINF 2118 N06-Variance and Regression

Properties of Variance

$\mathrm{var}(X) = E\big[(X - E X)^2\big] = E(X^2) - (E X)^2$. (You don’t have to “center” X first; center it later by subtracting the squared mean.)

Example: Bernoulli variance. $X^2 = X$ (why?), so $E(X^2) = E(X) = p$, and therefore $\mathrm{var}(X) = p - p^2 = p(1-p) = pq$.

var(X) = 0 if and only if X is constant (with probability 1). (Why?)

For constants a and b, $\mathrm{var}(aX + b) = a^2\,\mathrm{var}(X)$.

If $X_1, \dots, X_n$ are independent RVs, then $\mathrm{var}\big(\sum_{i=1}^n X_i\big) = \sum_{i=1}^n \mathrm{var}(X_i)$.

Example: the $X_1, \dots, X_n$ are i.i.d. Bernoullis. Their sum is binomial. The variance of a Bernoulli is pq, so the variance of a binomial is npq.

Properties of Covariance and Correlation

cov(X,Y) = E(XY) - E(X)E(Y) and $\rho = \mathrm{corr}(X,Y) = \dfrac{\mathrm{cov}(X,Y)}{\sqrt{\mathrm{var}(X)\,\mathrm{var}(Y)}}$.

If $|\rho| = 1$, then X and Y are linearly related. If $Y = aX + b$ with $a \ne 0$, then $\rho = \pm 1$, depending on the sign of a.

Correlation only measures linear relationship.

If X and Y are independent, then cov(X,Y) = 0. (Why?) The converse is NOT true. Example: $Y = X^2$, where X is symmetric about 0. This is a quadratic relationship: Y is completely determined by X, yet cov(X,Y) = 0.

Connections between variance and covariance

var(X+Y) = var(X) + var(Y) + 2 cov(X,Y).

If $X_1, \dots, X_n$ are random variables, then $\mathrm{var}\big(\sum_{i=1}^n X_i\big) = \sum_{i=1}^n \mathrm{var}(X_i) + 2 \sum_{i<j} \mathrm{cov}(X_i, X_j)$.

Example: Suppose $X_1, \dots, X_n$ are i.i.d., and you know only that they add up to S. First, what’s the sign of the correlation between any two of them? Now, what’s the correlation? HINT: the variance of the sum is zero.

Marginal expectations and variances

The marginal expectations and variances, in terms of the conditional expectations and variances (IMPORTANT FORMULAS!):

$E(Y) = E\big[E(Y \mid X)\big]$

$\mathrm{var}(Y) = E\big[\mathrm{var}(Y \mid X)\big] + \mathrm{var}\big[E(Y \mid X)\big]$

Example: Suppose that $Y = X + \varepsilon$, where X has mean $\mu_X$ and variance $V_X$, $\varepsilon$ has mean 0 and variance $V_\varepsilon$, and X and $\varepsilon$ are independent. What is the conditional mean of Y? Conditional variance? Marginal mean and variance?

More generally, for a function $g$, consider $Y = g(X) + \varepsilon$.

What is “regression”?

Situation: X and Y have a joint distribution.

Goal: to predict the value of Y based upon the value of X.

Examples: Predict a son’s height Y using the father’s height X. Predict the result Y of an expensive medical test using a cheaper test result X.

Notation: our prediction, or decision, is d(X). How well does d perform?
Criterion: We might want to minimize the mean squared error (MSE), $E\big[(Y - d(X))^2\big]$. Then the function d that minimizes that expected value is the regression of Y on X, $d(X) = E[Y \mid X]$. So the conditional expectation (regression) is optimal (for this criterion).

THEOREM The predictive function d that minimizes MSE (“expected loss”) is the regression of Y on X, $d(X) = E[Y \mid X]$.

The key trick: add and subtract $E[Y \mid X]$.

Proof: Write $Y - d(X) = (Y - E[Y \mid X]) + (E[Y \mid X] - d(X))$ and expand the square:

$$E\big[(Y - d(X))^2\big] = E\big[(Y - E[Y \mid X])^2\big] + E\big[(E[Y \mid X] - d(X))^2\big] + 2\,E\big[(Y - E[Y \mid X])(E[Y \mid X] - d(X))\big].$$

The cross term is zero: given X, the factor $E[Y \mid X] - d(X)$ is a constant, and $E\big[Y - E[Y \mid X] \mid X\big] = 0$. The first term does not depend on d, and the second term is nonnegative and equals zero exactly when $d(X) = E[Y \mid X]$.

Regression to the mean: Take a look at the plot on the next page, fathers' heights with their sons' heights. If a father is 59 inches tall, what is the best guess for the son’s height? The answer is NOT 59 inches (the diagonal, the blue line). Instead we “regress”, compromising towards the grand mean, 70 inches. The green line is the “regression line”, E(son’s height | father’s height). Likewise, for a father who is 80 inches tall, the best guess is the conditional expectation E(son’s height | father’s height = 80) = 77.

Many important applications:

Research reproducibility problems

- X-axis: a z-score for a study result.
- Y-axis: a z-score for a follow-up study result.
- Better accuracy through larger sample sizes decreases the effect.
- Failing to publish non-significant results hides (but does not lessen) the decline.
- See the articles "The Truth Wears Off", "Unpublished results hide the decline effect" and "Assessing regression to the mean effects in health care initiatives" on the website.

Serial measurements of patient clinical tests

- Today's abnormal result may often be followed tomorrow by a normal result.
- Better accuracy through better standards of practice decreases the effect.
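The 77-inch figure for an 80-inch father can be checked by hand. The sketch below assumes that father and son heights are bivariate normal with common mean 70, common standard deviation 5, and correlation 0.7; these values are taken from the plotting code in these notes, and the normality assumption is what makes the conditional mean exactly linear.

```latex
\begin{align*}
E[Y \mid X = x] &= \mu_Y + \rho\,\frac{\sigma_Y}{\sigma_X}\,(x - \mu_X)
                 = 70 + 0.7\,(x - 70), \\
E[Y \mid X = 80] &= 70 + 0.7 \times 10 = 77, \qquad
E[Y \mid X = 60] = 70 - 0.7 \times 10 = 63, \\
\mathrm{var}(Y \mid X) &= \sigma_Y^2\,(1 - \rho^2) = 25 \times 0.51 = 12.75 .
\end{align*}
% In z-score units (as in the reproducibility example):
% E[Z_Y | Z_X = z] = rho * z, so the follow-up z-score is expected
% to shrink toward 0 by the factor rho.
```

Under these assumptions the prediction always lies between the observed value and the grand mean, and the shrinkage is stronger the smaller the correlation is.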
Code for the diagram

    require(mvtnorm)   ###### Install package first!

    fs.mean <- 70      ### Mean height for sons & fathers
    fs.sd   <- 5       ### Standard deviation of heights
    fs.cor  <- 0.7     ### Correlation between heights
    fathers.and.sons.covariance <-
      fs.sd^2 * rbind(c(1, fs.cor), c(fs.cor, 1))

    #### Generate the data
    fathers.and.sons <-
      rmvnorm(500, mean=c(fs.mean, fs.mean), sigma=fathers.and.sons.covariance)
    colnames(fathers.and.sons) <- c("father", "son")
    head(fathers.and.sons)
    cor(fathers.and.sons); var(fathers.and.sons)
    plot(fathers.and.sons)
    title("Height of fathers and sons")

    ####### Major axis = diagonal:
    abline(a=0, b=1, col="blue", lwd=3)
    #### Note the symmetry around the diagonal.

    ####### Regression line: conditional expectation:
    ### (correlation times the ratio of standard deviations; the ratio is 1 here)
    fs.slope <- fathers.and.sons.covariance[1,2] /
      sqrt(fathers.and.sons.covariance[1,1] * fathers.and.sons.covariance[2,2])
    fs.intercept <- fs.mean + (0-fs.mean)*fs.slope
    abline(a=fs.intercept, b=fs.slope, col="darkgreen", lwd=3)

    ### Matrix conversion between a circle and an ellipse.
    ### t(v) gives the symmetric square root of the covariance matrix.
    fathers.and.sons.svd <- svd(fathers.and.sons.covariance)
    fathers.and.sons.scaling <-
      fathers.and.sons.svd$u %*%
      diag(sqrt(fathers.and.sons.svd$d)) %*%
      t(fathers.and.sons.svd$v)

    ### Which points are inside the 50% contour?
    fs.scaledDistance <- apply(
      ((fathers.and.sons - fs.mean) %*% solve(fathers.and.sons.scaling))^2,
      MARGIN=1, FUN=sum)
    fs.isInside <- (fs.scaledDistance < qchisq(0.5, 2))
    points(fathers.and.sons[fs.isInside, ], col="red")
    ### Check: should be ~ 50% of points inside:
    mean(fs.isInside)
    ### OK!!!

    #### Now let's plot the 50% contour:
    angles <- seq(0, 2*pi, length.out=400)
    fathers.and.sons.contour.50 <- fs.mean +
      sqrt(qchisq(0.5, 2)) *
      cbind(cos(angles), sin(angles)) %*% fathers.and.sons.scaling
    lines(fathers.and.sons.contour.50, col="red")

    #### Add a legend to the plot:
    legend(55, 85,
      c("regression line", "major axis", "50% contour", "50% highest density"),
      lty=c(1,1,1,NA), pch=c(NA,NA,NA,1), lwd=c(3,3,2,NA),
      col=c("darkgreen", "blue", "red", "red"))

    #### Arrows from the diagonal to the regression line:
    regression.to.the.mean.arrow <- function(X)
      arrows(x0=X, x1=X, y0=X, y1=fs.intercept+fs.slope*X, lwd=7)
    regression.to.the.mean.arrow(60)
    regression.to.the.mean.arrow(80)
    legend("bottomright", "regression to the mean", lwd=6)
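The threshold `qchisq(0.5, 2)` used for the 50% contour can be motivated as follows. This is a brief sketch, assuming the data are exactly bivariate normal with mean vector $\mu$ and covariance $\Sigma$; the symbols $Q$, $c$, $S$ and $z$ are introduced here for illustration and do not appear in the code.

```latex
\begin{align*}
Q &= (X - \mu)^{\top} \Sigma^{-1} (X - \mu) \sim \chi^2_2,
  \qquad P(Q \le c) = 1 - e^{-c/2}, \\
P(Q \le c) &= 0.5 \;\Longrightarrow\; c = 2 \ln 2 \approx 1.386, \\
\{x : Q(x) = c\} &= \{\mu + \sqrt{c}\, S z : \lVert z \rVert = 1\},
  \qquad \text{where } S \text{ is symmetric with } S^2 = \Sigma .
\end{align*}
```

So the contour is the unit circle stretched by a square root of the covariance matrix and scaled by $\sqrt{c}$, and about half of the simulated points should fall inside it, which is what `mean(fs.isInside)` checks.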