ppt

advertisement

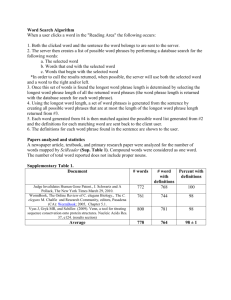

An Effective Approach to Document Retrieval via Utilizing WordNet and Recognizing Phrases

Shuang Liu, Fang Liu, Clement Yu (University of Illinois at Chicago)
Weiyi Meng (Binghamton University)

Abstract

Noun phrases in queries are identified and classified into four types. A document has a phrase if all content words in the phrase are within a window of a certain size. Each phrase type has its own window size, determined using a decision tree. Documents in response to a query are ranked with matching phrases given a higher priority. We utilize WordNet to disambiguate the word senses of query terms and to expand the query.

Introduction

Techniques involving phrases, general dictionaries (e.g., WordNet) and word sense disambiguation (WSD) have been investigated with mixed results in the past.

Noun phrases are classified into four types:
1. Proper names
2. Dictionary phrases
3. Simple phrases, which do not have any embedded phrases
4. Complex phrases, which contain embedded phrases or have a more complicated structure

A document has a phrase if all the content words in the phrase are within a window of a certain size, which depends on the type of the phrase (e.g., the window size for a simple phrase is smaller than that for a complex phrase).

Introduction (cont.)

A phrase is considered significant if
1. it is a proper name or a dictionary phrase, or
2. it is a simple or complex phrase whose content words are highly positively correlated.

The similarity measure between a query and a document has two components, (phrase-sim, term-sim). Documents are ranked in descending order of (phrase-sim, term-sim).

Introduction (cont.)

For adjacent query words, the following information from WordNet is used for WSD: synonym sets, hyponym sets, and their definitions. Once the sense of a query word is determined, its synonyms, hyponyms, words or phrases from its definition, and its compound words are considered for possible addition to the query.
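The significance rule and the (phrase-sim, term-sim) ranking can be sketched in a few lines of Python. This is a hypothetical illustration, not the authors' code: the function names, the phrase-type labels and the input dictionaries are assumptions, and the correlation is assumed to take the form (P(phrase) − ∏P(ti)) / ∏P(ti) with the threshold of 5 used in the significance slide. term-sim is treated as an already-computed Okapi score.

```python
from math import prod
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical sketch: names, labels and inputs are illustrative assumptions.


def correlation(p_phrase: float, p_terms: List[float]) -> float:
    """Assumed form: (P(phrase) - prod P(t_i)) / prod P(t_i)."""
    independent = prod(p_terms)  # co-occurrence probability if words were independent
    return (p_phrase - independent) / independent


def is_significant(phrase_type: str, corr: Optional[float] = None,
                   threshold: float = 5.0) -> bool:
    # Proper names and dictionary phrases are always treated as significant.
    if phrase_type in ("proper_name", "dictionary"):
        return True
    # Simple/complex phrases need highly positively correlated content words.
    return corr is not None and corr > threshold


def phrase_sim(matched: Set[str], significant: Set[str],
               idf: Dict[str, float]) -> float:
    # Sum of idfs of the distinct significant phrases found in the document.
    # A matched complex phrase and its embedded significant phrases each contribute.
    return sum(idf[p] for p in matched & significant)


def rank(scores: Dict[str, Tuple[float, float]]) -> List[str]:
    # Lexicographic order on (phrase-sim, term-sim): term-sim only breaks ties.
    return sorted(scores, key=lambda d: scores[d], reverse=True)


if __name__ == "__main__":
    print(correlation(0.125, [0.125, 0.125]))  # 7.0
    print(is_significant("simple", correlation(0.125, [0.125, 0.125])))  # True
    idf = {"school uniform": 3.2}  # hypothetical idf value
    scores = {
        "d1": (phrase_sim({"school uniform"}, {"school uniform"}, idf), 10.5),
        "d2": (phrase_sim({"school uniform"}, {"school uniform"}, idf), 12.0),
        "d3": (0.0, 25.0),  # best term-sim, but no significant phrase
    }
    print(rank(scores))  # ['d2', 'd1', 'd3']
```

Sorting on the tuple gives exactly the stated priority: a document with any significant-phrase match outranks every document without one, no matter how large the latter's term-sim is.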
We also apply pseudo-relevance feedback by considering terms that are positively and globally correlated with a query term or phrase.

Phrase Identification

Noun phrases in a query are classified into one of the four types mentioned above. Brill's tagger is used to assign part-of-speech (POS) tags to the words in a query. A document has a phrase if the content words in the phrase appear within a window of a certain size. The window sizes are learned from training data.

Determining Phrase Types

Proper names include names of people, places and organizations, and are recognized by the named entity recognizer Minipar [Lin94].

Dictionary phrases are phrases that can be found in dictionaries such as WordNet.

A simple phrase has no embedded noun phrase and has two to four words, of which at most two are content words. Example: “school uniform”.

A complex phrase either has one or more dictionary phrases and/or simple phrases embedded in it, or contains a noun that is involved in some complicated way with other words in the phrase. Example: “instruments of forecast weather”.

Determining Window Sizes

The required window size varies across the four phrase types. Intuitively, the content words of a dictionary phrase should be close together (e.g., within 3 words of each other), but this may not hold in practice. For example, the query “drugs for mental illness” contains the phrase “mental illness”, yet a relevant document in a TREC collection contains the fragment “… was hospitalized for mental problems… and had been on lithium for his illness until recently”. Suitable distances between the content words of each phrase type should therefore be learned.

Determining Window Sizes (cont.)

For a set of training queries, we identify the types of phrases and measure the distances between the content words of the phrases, both in all of the relevant documents and in the irrelevant documents that have high similarity with the queries. This information is fed into a decision tree (C4.5), which produces a suitable distance for each phrase type.
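Given one window size per phrase type, deciding whether a document has a phrase reduces to finding the tightest span that covers one occurrence of every content word. The Python sketch below is a hypothetical illustration: the function names are assumptions, the window is assumed to count the non-phrase tokens inside that span (so a window of 0 means the content words are adjacent), word order is ignored, and the learned sizes reported in the learning results are used as the per-type windows. The slides do not specify how distance is measured.

```python
from collections import Counter
from typing import Dict, List, Optional

# Learned window sizes as reported on the slides (TREC9 queries, WT10G).
# How a "window" is measured is an assumption in this sketch.
WINDOW = {"proper_name": 0, "dictionary": 16, "simple": 48, "complex": 78}


def positions_of(tokens: List[str], words: List[str]) -> Dict[str, List[int]]:
    # Token positions of each content word in the document.
    return {w: [i for i, t in enumerate(tokens) if t == w] for w in words}


def min_cover_span(positions: Dict[str, List[int]]) -> Optional[int]:
    """Smallest (last - first) position span containing every content word."""
    k = len(positions)
    if k == 0 or any(not ps for ps in positions.values()):
        return None  # some content word never occurs
    events = sorted((p, w) for w, ps in positions.items() for p in ps)
    counts: Counter = Counter()
    covered, left, best = 0, 0, None
    for pos_r, w_r in events:  # extend the window to the right
        counts[w_r] += 1
        if counts[w_r] == 1:
            covered += 1
        while covered == k:  # shrink from the left while all words stay covered
            pos_l, w_l = events[left]
            span = pos_r - pos_l
            best = span if best is None else min(best, span)
            counts[w_l] -= 1
            if counts[w_l] == 0:
                covered -= 1
            left += 1
    return best


def has_phrase(positions: Dict[str, List[int]], phrase_type: str) -> bool:
    span = min_cover_span(positions)
    if span is None:
        return False
    gap = span - (len(positions) - 1)  # intervening non-phrase tokens (assumption)
    return gap <= WINDOW[phrase_type]


if __name__ == "__main__":
    # Toy tokenization of the slide's fragment with the "…" parts simply dropped,
    # so the real distance in the TREC document is unknown.
    tokens = ("was hospitalized for mental problems and had been "
              "on lithium for his illness until recently").split()
    pos = positions_of(tokens, ["mental", "illness"])
    print(min_cover_span(pos))             # 9
    print(has_phrase(pos, "dictionary"))   # True
    print(has_phrase(pos, "proper_name"))  # False
```

The sliding window visits each occurrence at most twice, so the check is linear in the number of content-word occurrences after sorting.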
Learning results (window sizes):
- proper name: 0
- simple phrase: 48
- dictionary phrase: 16
- complex phrase: 78

These window sizes were obtained by training with the TREC9 queries on the TREC WT10G data collection.

Determining Significant Phrases

Proper names and dictionary phrases are assumed to be significant. A simple or complex phrase is significant if the content words within the phrase are highly positively correlated:

$$\mathrm{correlation}(t_1, t_2, \ldots, t_n) = \frac{P(\mathrm{phrase}) - \prod_{t_i \in \mathrm{phrase}} P(t_i)}{\prod_{t_i \in \mathrm{phrase}} P(t_i)}$$

If correlation(t1, t2, …, tn) > 5, the phrase is significant.

Phrase Similarity Computation

The similarity of a document with a query has two components, (phrase-sim, term-sim), and phrase-sim is more important than term-sim. Each query term in the document contributes to term-sim, which is computed by the Okapi formula. The phrase-sim of a document is the sum of the idfs of the distinct significant phrases that occur in the document. For a complex phrase, the phrase-sim is the idf of the complex phrase plus the idfs of its embedded significant phrases.

Word Sense Disambiguation Using WordNet

Suppose two adjacent terms t1 and t2 in a query form a phrase. The sense of t1 can be determined by executing the following steps:
1. If t2 or a synonym of t2 is found in the definition of a synset S of t1, S is determined to be the sense of t1.
2. The definition of each synset of t1 is compared against the definition of each synset of t2, and the combination that has the most content words in common yields the sense for t1 and the sense for t2.
3. If t2 or one of its synonyms appears in the definition of a synset S containing a hyponym of t1, then the sense of t1 is the synset S1 of which S is a descendant.

Word Sense Disambiguation Using WordNet (cont.)

4. If a synset S1 of t1 has a hyponym synset U that contains a term h, which appears in the definition of a synset S2 of t2, then the senses of t1 and t2 are S1 and S2, respectively.
5. If all the preceding steps fail, consider the surrounding terms in the query.
6. If t1 has a dominant synset S, then the sense of t1 is S.

Query Expansion Using WordNet

Once the sense of query term t1 has been determined to be synset S, the following four cases are considered for query expansion (QE):
1. Add synonyms: for any term t' in S, t' is added to the query if either (a) S is a dominant synset of t' or (b) t' is highly correlated with t2. The weight of t' is W(t') = f(t', S) / F(t').
2. Add definition words: if t1 is a single-sense word, the first shortest noun phrase from its definition is added if it is highly globally correlated with t1.
3. Add hyponyms: the conditions are similar to those for adding synonyms.

Query Expansion Using WordNet (cont.)

4. Add compound words: suppose c is a compound word of a query term t1 and c has a dominant synset V. The word c can be added to the query if:
   (a) the definition of V contains t1 as well as all terms that form a phrase with t1 in the query;
   (b) the definition of V contains the term t1, and c relates to t1 through a “member of” relation.

Pseudo Relevance Feedback

Two methods are used:
1. Using global correlations and WordNet: global_correlation(ti, s) = idf(s) × log(correlation(ti, s)), where s is a query concept and ti is a concept in the documents. A term among the top 10 most highly globally correlated terms is added to the query if (1) it has a single sense and (2) its definition contains some other query terms.
2. Combining local and global correlations: a term is brought in if it correlates highly with the query in the top-ranked documents and globally with a query concept in the collection.

Modification of the Query and the Similarity Function

Query expansion results in a final Boolean query. For example, if the original query phrase is (t1 AND t2), and t1 brings in t1' while t2 brings in t2', the expanded query is

(t1 AND t2) OR (t1' AND t2) OR (t1' AND t2') OR (t1 AND t2')

When computing the phrase-sim of a document, any occurrence of an expanded phrase is equivalent to an occurrence of the original phrase.
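The Boolean rewrite in the example above is a disjunction over every combination of an original term or its expansion. The Python sketch below is a hypothetical illustration: the function names and the `occurs` callback (which could, for instance, be the window test for the phrase's type) are assumptions, and term weighting is omitted.

```python
from itertools import product
from typing import Callable, List, Tuple


def expand_phrase(t1_variants: List[str],
                  t2_variants: List[str]) -> List[Tuple[str, str]]:
    # One conjunct per (variant of t1, variant of t2) pair.
    return list(product(t1_variants, t2_variants))


def to_boolean(conjuncts: List[Tuple[str, str]]) -> str:
    # Render the disjunction of conjunctions as a query string.
    return " OR ".join(f"({a} AND {b})" for a, b in conjuncts)


def phrase_matched(conjuncts: List[Tuple[str, str]],
                   occurs: Callable[[str, str], bool]) -> bool:
    # For phrase-sim, any expanded variant counts as an occurrence
    # of the original phrase.
    return any(occurs(a, b) for a, b in conjuncts)


if __name__ == "__main__":
    conj = expand_phrase(["t1", "t1'"], ["t2", "t2'"])
    print(to_boolean(conj))
    # (t1 AND t2) OR (t1 AND t2') OR (t1' AND t2) OR (t1' AND t2')
    doc_terms = {"t1'", "t2"}  # hypothetical document containing only t1' and t2
    print(phrase_matched(conj, lambda a, b: a in doc_terms and b in doc_terms))  # True
```

Using `itertools.product` keeps the rewrite general: with k variants of t1 and m variants of t2 it produces all k × m conjuncts, which for one expansion each gives the four clauses of the example.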
The term-sim is computed based on the occurrences of t1, t2, t1', and t2'.

Experiment Setup

100 queries from the TREC9 and TREC10 ad hoc query sets and 100 queries from the TREC12 robust query set are used. Only the title portion of each query is used.

Algorithms used:
- SO: the standard Okapi formula for passage retrieval
- NO: the Okapi formula modified by replacing pl and avgpl with Norm and AvgNorm, respectively
- NO+P: phrase-sim is also computed
- NO+P+D: WSD techniques are also used to expand the query
- NO+P+D+F: pseudo-feedback techniques are also employed

Experiment Results

The best-known results:
- 0.2078 in TREC9 [OMNH00]
- 0.2225 in TREC10 [AR02]
- In TREC12:
  - 0.2900 using Web data and the descriptions [Kwok03]
  - 0.2692 using Web data and titles [Yeung03]
  - 0.2052 without Web data [ZhaiTao03]

Conclusions

We provide an effective approach to processing typical short queries and demonstrate that it yields significant improvements over existing algorithms in three TREC collections under the same experimental conditions. We plan to make use of Web data to achieve further improvement.