Schedules of Reinforcement

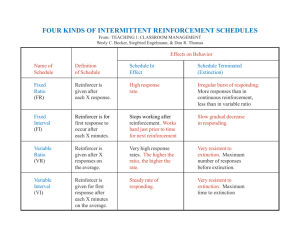

Schedules of Reinforcement:
• Continuous reinforcement:
  – Reinforce every single time the animal performs the response
  – Use for teaching the animal the contingency
  – Problem: satiation
• Solution: only reinforce occasionally
  – Partial reinforcement
  – Can reinforce occasionally based on time
  – Can reinforce occasionally based on amount (number of responses)
  – Can make it predictable or unpredictable

Partial Reinforcement Schedules
• Fixed ratio: every nth response is reinforced
• Fixed interval: the first response after x amount of time is reinforced
• Variable ratio: on average, every nth response is reinforced
• Variable interval: the first response after an average of x amount of time is reinforced

Differential Reinforcement Schedules
• Only reinforce some responses
• There is a criterion regarding the rate or type of the response
• Several examples:
  – DRO
  – DRL
  – DRH

DRO: Differential Reinforcement of Other Behavior (responses)
• Use when you want to decrease a target behavior and increase anything BUT that response
• Reinforce any response BUT the target response
• Often used as an alternative to extinction
  – E.g., self-injurious behavior (SIB)
  – Reinforce anything EXCEPT hitting self

DRH: Differential Reinforcement of High Rates of Responding
• Use when you want to maintain a high rate of responding
• Reinforce as long as the rate of responding remains at or above a set rate for X amount of time
• Often used to maintain on-task behavior
  – E.g., data entry: must maintain so many keystrokes/min or begin to lose pay
  – Use in clinical settings for attention: as long as the client engages in X academic behavior at or above a certain rate, they get a reinforcer

DRL: Differential Reinforcement of Low Rates of Responding
• Use when you want to maintain a low rate of responding
• Reinforce as long as the rate of responding remains at or below a set rate for X amount of time
• Often used to control inappropriate behavior
  – E.g., talking out: as long as the student has no more than 3 talk-outs per school day, they earn points on a behavior chart
  – Use when it is virtually impossible to extinguish the behavior, but it can be controlled at the lowest rate possible

Variations of Reinforcement: Limited Hold
• There is a limited time when the reinforcer is available:
  – Like a “fast pass”: the reinforcer is earned, but it must be picked up within 5 seconds or it is lost
• Applied when a faster rate of responding is desired with a fixed interval schedule
• By limiting how long the reinforcer is available following the end of the interval, responding can be sped up

Time-based Schedules
• Unlike typical schedules, NO response contingency
• Passage of time provides reinforcement
• Fixed Time or Variable Time schedules
  – FT 60 sec: every 60 seconds a reinforcer is delivered independent of responding
  – VT 60 sec: on average of every 60 seconds….
• Often used to study superstitious behavior
• Or: used as a convenience once responding is established (organism may not pick up that the contingency is gone)

Contingency-Shaped vs. Rule-Governed Behaviors
• Contingency-shaped behaviors: behavior that is controlled by the schedule of reinforcement or punishment.
• Rule-governed behaviors: behavior that is controlled by a verbal or mental rule about how to behave.

Operant Behavior Can Involve BOTH
• Obviously, reinforcement schedules can control responding
• So can “rules”:
  – heuristics
  – algorithms
  – concepts and concept formation
• Operant conditioning can have rules, for example, the factors affecting reinforcement.

Comparison of Ratio and Interval Schedules: Why different patterns?
• Similarities:
  – Both show fixed vs. variable effects
  – More pausing with fixed schedules: greater postreinforcement pause
  – Variable schedules produce faster, steadier responding
• But: important differences
  – Reynolds (1975)
    • Compared pecking rate of pigeons on VI vs. VR schedules
    • FASTER responding for VR schedule

Why faster VR than VI responding?
• Second part of Reynolds (1975)
  – Used a yoked schedule:
    • One bird on VR, one on VI
    • Yoked the rate of reinforcement
  – When the bird on the VR schedule was 1 response shy of a reinforcer, the waiting time ended for the bird on the VI schedule
  – Thus, both birds got the same number of reinforcers
• Even with this, the bird on the VI schedule pecked more slowly
  – Replications support this finding
    • In pigeons, rats, college students
    • Appears to be a strong phenomenon

Explanation 1: IRT reinforcement
• IRTs: inter-response times
  – If a subject is reinforced for responding that occurs shortly after the preceding response, then a short IRT is reinforced and a long IRT is not
  – And vice versa: if reinforced for long IRTs, then the subject makes more long IRTs
• Compare VR and VI schedules:
  – Short IRTs are reinforced on VR schedules
  – Long IRTs are more likely reinforced on VI schedules
  – Even when the rate of reinforcement is controlled!

Explanation 2: Feedback functions
• Molar vs. molecular explanations of behavior
  – Molar:
    • Global assessment
    • Animal compares behavior across a long time horizon
    • Whole-session or even across-session assessment
  – Molecular:
    • Momentary assessment
    • Animal compares next response to last response
    • Moment-to-moment assessment of setting
• But which does the animal do?
  – Answer is, as usual, both
  – We momentarily maximize
  – But we also engage in molar maximizing!
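
The molecular IRT account (Explanation 1) can be illustrated with a small calculation. This is only a sketch under stated assumptions, not the analysis used in the studies cited: the VR schedule is approximated as a random-ratio schedule (each response pays off with probability 1/n), and the VI schedule is assumed to set up reinforcers at exponentially distributed intervals. The function names are hypothetical.

```python
import math


def p_reinforced_vr(irt_s: float, mean_ratio: float) -> float:
    """Chance that a response is reinforced on a (random-ratio) VR schedule.

    Assumption: every response pays off with probability 1/n, so the
    time since the last response (irt_s) has no effect at all.
    """
    return 1.0 / mean_ratio


def p_reinforced_vi(irt_s: float, mean_interval_s: float) -> float:
    """Chance that a response is reinforced on a VI schedule.

    Assumption: reinforcers are set up at exponentially distributed
    intervals, so the chance that one became available while the animal
    waited irt_s seconds is 1 - exp(-irt_s / mean interval).
    """
    return 1.0 - math.exp(-irt_s / mean_interval_s)


if __name__ == "__main__":
    for irt in (0.5, 2.0, 10.0):
        vr = p_reinforced_vr(irt, mean_ratio=20)
        vi = p_reinforced_vi(irt, mean_interval_s=60)
        print(f"IRT {irt:>4} s   VR 20: {vr:.3f}   VI 60: {vi:.3f}")
```

Under these assumptions, waiting longer never raises the payoff probability on the VR schedule, but it does on the VI schedule, which is the asymmetry the IRT explanation relies on.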
Explanation 2: Feedback functions (cont’d)
• Organisms do not base rate of responding only on the rate of reinforcement directly tied to that responding
• Instead, organisms compare within and across settings
• Use CONTEXT to compare response rate
  – Again, momentary in some situations
  – More molar in others

Explanation 2: Feedback functions (cont’d)
• Feedback functions:
  – Reinforcement strengthens the relationship between the response and the reinforcer
  – Does this by providing information regarding this relationship
• Feedback functions of reward and punishment are critical for developing these contingency rules and more molar patterns of responding

Feedback on VR vs VI schedules
• Relationship between responding and reinforcement on a VR schedule:
  – More responses = more reinforcers
  – The way to increase reinforcement rate is to increase response rate
  – In a sense, organism “is in charge” of its own payoff rate
  – Faster responding = more reinforcers

Feedback on VR vs VI schedules (cont’d)
• Relationship between responding and reinforcement on a VI schedule:
  – Passage of time = reinforcer
  – No way to “speed up” the reinforcement rate
  – In a sense, time “is in charge” of payoff rate
  – Faster responding does not “pay”; it is not optimizing

What happens when schedules of reinforcement are combined?
• Concurrent schedules
• Conjunctive schedules
• Chained schedules
• And so on…..
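
The VR and VI feedback relationships described above can be sketched numerically. This is a simplified illustration, not a formula taken from the slides: the VI function assumes the timer restarts when a reinforcer is collected and that responses are spread randomly in time, so the average delay to collect a waiting reinforcer is one mean inter-response time. Function names are hypothetical.

```python
def vr_reinforcers_per_min(responses_per_min: float, mean_ratio: float) -> float:
    """VR feedback: payoff rate is proportional to response rate."""
    return responses_per_min / mean_ratio


def vi_reinforcers_per_min(responses_per_min: float, mean_interval_s: float) -> float:
    """VI feedback under the assumptions stated in the lead-in.

    Mean time between reinforcers = scheduled interval + average delay
    until the next response collects the reinforcer (1 / response rate).
    """
    if responses_per_min <= 0:
        return 0.0
    mean_interval_min = mean_interval_s / 60.0
    mean_collect_delay_min = 1.0 / responses_per_min
    return 1.0 / (mean_interval_min + mean_collect_delay_min)


if __name__ == "__main__":
    for rate in (15, 30, 60, 120):
        vr = vr_reinforcers_per_min(rate, mean_ratio=20)
        vi = vi_reinforcers_per_min(rate, mean_interval_s=60)
        print(f"{rate:>3} resp/min   VR 20: {vr:.2f}/min   VI 60: {vi:.2f}/min")
```

In this sketch, doubling the response rate doubles the VR payoff, while the VI payoff creeps toward its ceiling of one reinforcer per minute and never exceeds it.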
Concurrent Schedules
• Two or more basic schedules operating independently at the same time for two or more different behaviors
  – Organism has a choice of behaviors and schedules
  – You can take notes or daydream (but not really do both at the same time)
• Provides a better analog for real-life situations

Concurrent Schedules (cont’d)
• When similar reinforcement is scheduled for each of the concurrent responses:
  – The response receiving the higher frequency of reinforcement will increase in rate
  – The response requiring the least effort will increase in rate
  – The response providing the most immediate reinforcement will increase in rate
• Important in applied situations!

Multiple Schedules
• Two or more basic schedules operating independently and ALTERNATING such that one is in effect when the other is not
  – Organism is presented with first one schedule and then the other
  – You can go to Psy 463 or you can attend P462, but you can’t go to both at the same time
• Organism makes comparisons ACROSS the schedules
  – Which is more reinforcing?
  – More responding for the richer schedule
• Again, provides a better analog for real-life situations

Chained Schedules
• Two or more basic schedule requirements are in place,
  – one schedule occurring at a time
  – but in a specified sequence
• Usually a cue is presented to signal the specific schedule
  – Present as long as the schedule is in effect
• Reinforcement for responding in the 1st component is the presentation of the 2nd
• The final reinforcer is not delivered until the final component is performed

Conjunctive Schedules
• The requirements for two or more schedules must be met simultaneously
  – E.g., FI and FR schedule
  – Must complete the scheduled time to reinforcer, then must complete the FR requirement before getting the reinforcer
• Task/interval interactions
  – When the task requirements are high and the interval is short, steady work throughout the interval will be the result
  – When task requirements are low and the interval is long, many nontask behaviors will be observed

Organism now “compares” across settings
• With 2 or more schedules of reinforcement in effect, the animal will compare the schedules
  – Assume that the organism will maximize
    • Get the most reinforcement it can out of the situation
    • Smart organisms will split their time between the various schedules or form an exclusive choice

Organism now “compares” across settings (cont’d)
• Conc VI VI schedules:
  – Two VI schedules in effect at the same time
  – One is better than the other: conc VI 60 VI 15
    • VI 60 pays off 1 time per minute
    • VI 15 pays off 4 times per minute
• What is the MAX amount of reinforcers (on average) an organism can earn per minute?
• How should the organism split its time?
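
One way to approach the conc VI 60 VI 15 question is to compute the ceiling on each schedule. The sketch below is an upper bound only, assuming the organism switches often enough to collect every reinforcer soon after it is set up and that switching costs nothing; the variable and function names are hypothetical.

```python
def vi_ceiling_per_min(mean_interval_s: float) -> float:
    """Most reinforcers per minute (on average) a VI schedule can deliver."""
    return 60.0 / mean_interval_s


# Mean intervals in seconds for the two concurrent schedules on the slide.
schedules = {"VI 60": 60.0, "VI 15": 15.0}
ceilings = {name: vi_ceiling_per_min(t) for name, t in schedules.items()}

# Sampling both keys can approach the sum of the two ceilings;
# staying on one key can at best earn that key's ceiling.
max_if_sampling_both = sum(ceilings.values())
max_if_exclusive = max(ceilings.values())

if __name__ == "__main__":
    for name, rate in ceilings.items():
        print(f"{name}: up to {rate:.0f} reinforcer(s) per minute")
    print(f"Sampling both keys: up to {max_if_sampling_both:.0f} per minute")
    print(f"Staying on the richer key only: up to {max_if_exclusive:.0f} per minute")
```

Under these assumptions the ceiling is 1 + 4 = 5 reinforcers per minute, which can only be approached by visiting both keys; staying exclusively on VI 15 caps the organism at 4 per minute.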
Organism now “compares” across settings (cont’d)
• Conc VR VR schedules:
  – Two VR schedules in effect at the same time
  – One is better (richer) than the other: conc VR 10 VR 5
    • VR 10 pays off after an average of 10 responses
    • VR 5 pays off after an average of 5 responses
• What is the MAX amount of reinforcers (on average) an organism can earn per minute?
• How should the organism split its time?

Interesting phenomenon: Behavioral Contrast
• Behavioral contrast: change in the strength of one response that occurs when the rate of reward of a second response, or of the first response under different conditions, is changed.
• Reynolds, 1966: Pigeon in an operant chamber pecks a key for food reward.
• Equivalent multiple schedule:
  – VI 60 second schedule when key is red
  – VI 60 second schedule when key is green
  – Food comes with equal frequency in either case.
• Then: schedules change:
  – RED light predicts same VI 60 sec schedule
  – GREEN light predicts EXT in one phase
  – GREEN light predicts VI 15 sec schedule in next phase

Behavior change in Behavioral Contrast
• Positive contrast: occurs when the rate of responding to the red key goes UP, even though the frequency of reward in the red component remains unchanged.
  – Remember: Phase 1: mult VI 60 (red) VI 60 (green), then mult VI 60 (red) EXT (green)
  – VI 60 for red key did NOT change, only the green key schedule changed
• Negative contrast: occurs when the rate of responding to the red key goes DOWN, even though the frequency of reward in the red component remains unchanged.
  – Remember: Phase 1: mult VI 60 (red) VI 60 (green), then mult VI 60 (red) VI 15 (green)
  – VI 60 for red key did NOT change, only the green key schedule changed

Robust phenomenon
• Contrast effect may occur following changes in the
  – amount,
  – frequency, or
  – nature of the reward
• Occurs with concurrent as well as multiple schedules
• Shown to occur with various experimental designs and response measures (e.g., response rate, running speed)
• Shown to occur across many species (can’t say all because not all have been tested!)

Pullman Effect
(Map labels: Spokane, WA; Seattle; Pullman, WA)

Reinforcement options in Pullman:
• In Boston:
  – Go out to bars (many, many options)
  – Take a warm bath (CONSTANT component)
• In Pullman:
  – Go out to bar (1 bar, only 1 bar)
  – Take a warm bath
• Remember: Pullman is:
  – 100 miles from Spokane
  – 500 miles from Seattle
  – Next “other” city over 100,000:
    • Minneapolis
    • Las Vegas
• What happens to rate of warm bath taking in Pullman compared to Boston?

Why behavioral contrast?
• Why does the animal change its response rate to the unchanged/constant component?
• Is this optimizing?
  – Remember, this is a VI schedule, not a VR schedule
  – If you use VR schedules, you get exclusive choice of the easier/faster schedule.
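
The point about exclusive choice on VR schedules can be checked with simple arithmetic for the conc VR 10 VR 5 case. The sketch below treats expected reinforcers as responses divided by the mean ratio and ignores any cost of switching keys; the function name and the 100-response budget are hypothetical choices for illustration.

```python
def conc_vr_expected_reinforcers(
    total_responses: float,
    share_to_rich: float,
    rich_ratio: float = 5.0,   # VR 5, the richer schedule
    lean_ratio: float = 10.0,  # VR 10, the leaner schedule
) -> float:
    """Expected reinforcers when a fixed response budget is split across two VR keys."""
    rich_responses = total_responses * share_to_rich
    lean_responses = total_responses * (1.0 - share_to_rich)
    return rich_responses / rich_ratio + lean_responses / lean_ratio


if __name__ == "__main__":
    for share in (0.0, 0.5, 0.8, 1.0):
        earned = conc_vr_expected_reinforcers(100, share)
        print(f"{share:.0%} of responses on VR 5 -> {earned:.1f} expected reinforcers")
```

Every response moved from VR 10 to VR 5 raises the expected payoff, so under these assumptions the best split is all responses on VR 5. That differs from the concurrent VI case, where time on the leaner key still collects reinforcers that would otherwise go unclaimed.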