ASSESSING WRITING

advertisement

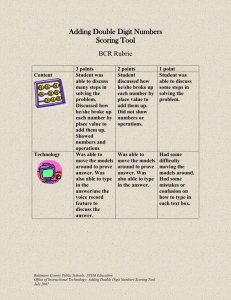

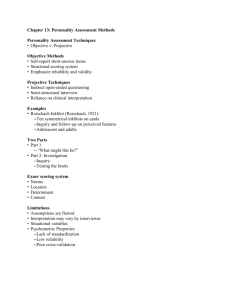

Lecture 8: Teaching Writing in EFL/ESL, Part 1
Joy Robbins

TODAY’S SESSION

- Your own experiences of assessment
- The purposes of assessment
- The concepts of reliability and validity in assessment
- 3 different approaches to scoring writing tests:
  1. Holistic scoring
  2. Analytic scoring
  3. Primary and multiple trait scoring

ASSESSMENT: INTRODUCTORY DISCUSSION

- What’s the point of assessing writing?
- How have your teachers at school and university assessed your writing in your first and second languages? Do you think there was any point in assessing you? Why (not)?
- In what ways have the scores and grades you have received on your writing (in L1 and L2) helped you improve your writing?
- If you are an experienced language teacher, what do you feel are your greatest challenges in evaluating student writing? If you aren’t an experienced teacher, what makes you nervous about assessing student writing? Why?

(Based on questions in Ferris & Hedgcock 1998: 227)

WHAT’S THE POINT OF ASSESSMENT?

Brindley (2001) lists the following purposes of assessment:

- selection: e.g. to determine whether learners have sufficient language proficiency to be able to undertake tertiary study;
- certification: e.g. to provide people with a statement of their language ability for employment purposes;
- accountability: e.g. to provide educational funding authorities with evidence that intended learning outcomes have been achieved and to justify expenditure;
- diagnosis: e.g. to identify learners’ strengths and weaknesses;
- instructional decision-making: e.g. to decide what material to present next or what to revise;
- motivation: e.g. to encourage learners to study harder. (p. 138)

2 KEY TERMS

Two key terms in the literature on testing and assessment are reliability and validity.
Let’s have a closer look at what each of these means…

RELIABILITY

‘reliability refers to the consistency with which a sample of student writing is assigned the same rank or score after multiple ratings by trained evaluators’ (Ferris & Hedgcock 1998: 230)

For example, if we’re marking an essay out of 20, the test will be far more reliable if 2 markers award the essay the same grade (or more or less the same grade), say 16 and 17. However, if 1 marker awards 10 and the other awards 15, the test isn’t reliable.

The obvious way to try to achieve reliability is by designing criteria (e.g. for content, organization, grammar, etc.) which the markers refer to when they’re marking the essay.

VALIDITY

Validity refers to whether the test actually measures what it is supposed to measure. Researchers have talked about several types of validity, for example:

- face validity
- content validity

FACE VALIDITY

Face validity refers to how acceptable and credible a test is to its users (Alderson et al. 1995). So if a test has high face validity, teachers and learners believe it tests what it is supposed to test.

A test would have low face validity among learners if they had been told that a writing test mainly assessed the quality of their ideas, but believed that teachers actually marked it according to how good their grammar was.

CONTENT VALIDITY

If a test has content validity, it gives us a large and representative enough sample of language to make a judgement about the student’s ability.
So if a writing test is to have content validity, we need to be confident that we have asked the student to do enough writing to display their writing skills.

2 APPROACHES TO SCORING WRITING

There are 2 main ways of scoring writing tests: the holistic approach and the analytic approach. Let’s look at each of these in turn…

HOLISTIC SCORING

Holistic scoring means that the assessor assesses the text as a whole, rather than focusing on 2 or 3 specific aspects. The idea is that the assessor reads through a text quickly, forms a global impression, and awards a grade accordingly.

The holistic approach is supposed to respond to the writing positively, rather than focusing negatively on the things the writer has failed to do. Let’s look at an example of holistic grading criteria…

HOLISTIC WRITING ASSESSMENT: AN EXAMPLE

Have a look at the example of a holistic marking scheme I’ve given you on the handout, and discuss the questions… Afterwards, based on this example, make a list of the pros and cons of using a holistic approach to assessing writing.

HOLISTIC SCORING: ADVANTAGES

- Quick and easy, because there are few categories for the teacher to choose from.

HOLISTIC SCORING: DISADVANTAGES

- Holistic scoring can’t provide the writing teacher with diagnostic information about students’ writing, because it doesn’t focus on tangible aspects of writing (e.g. organization, grammar, etc.).
- The holistic approach produces only a single score, so it’s less reliable than the analytic approach, which produces several scores (e.g. for content, organization, grammar, etc.)… unless more than 1 assessor marks the tests.
- A single score can be difficult for both teachers and students to interpret (‘What does 70% actually mean?’ ‘What did I do well?’ ‘What did I do badly?’).

HOLISTIC SCORING: DISADVANTAGES (CONTD.)

‘…the same score assigned to two different texts may represent entirely distinct sets of characteristics even if raters’ scores reflect a strict and consistent application of the rubric.
This can happen because a holistic score compresses a range of interconnected evaluations about all levels of the texts in question (i.e., content, form, style, etc.)’ (Ferris & Hedgcock 1998: 234).

Even though assessors are supposed to assess a range of features in holistic scoring (e.g. style, content, organization, grammar, spelling, punctuation, etc.), this isn’t easy to do. So some assessors may (consciously or unconsciously) treat 1 or 2 of these criteria as more important than the others, and give them more weight in their scores (Lumley & McNamara 1995; McNamara 1996).

ANALYTIC SCORING

Analytic scoring separates different aspects of writing (e.g. organization, ideas, spelling) and grades each of them separately. Let’s look at an example of analytic grading criteria…

ANALYTIC WRITING ASSESSMENT: AN EXAMPLE

Have a look at the example of an analytic marking scheme I’ve given you on the handout, and discuss the questions… Afterwards, based on this example, make a list of the pros and cons of using an analytic approach to assessing writing.

ANALYTIC SCORING: ADVANTAGES

- Analytic schemes provide learners with much more meaningful feedback than holistic schemes. Teachers can hand essays back with the mark awarded for each criterion (e.g. marks out of 10 for organization, spelling, etc.) circled.
- Analytic schemes can be designed to reflect the priorities of the writing course. So, for instance, if you have stressed the value of good organization on your course, you can weight the analytic criteria so that organization is worth 60% of the marks.
- Because assessors are assessing specific criteria, it’s easier to train them than assessors who are using holistic schemes (Cohen 1994; McNamara 1996; Omaggio Hadley 1993; Weir 1990).
- Analytic assessment is more dependable than holistic assessment (Jonsson & Svingby 2007: 135).

ANALYTIC SCORING: DISADVANTAGES

- Surely a piece of good writing can’t be judged on just 3 or 4 criteria?
- Assessors may not use each scale independently (even though they should). So, for instance, if an assessor gives a student a very high mark on the ‘ideas’ scale, this may influence the marks they award the student on the other scales.
- Descriptors for each scale may be difficult to use (e.g. ‘What does “adequate organization” mean?’).

PRIMARY AND MULTIPLE TRAIT SCORING

We’ve seen how the analytic approach can be criticized for trying to assess a piece of writing on just 3 or 4 criteria… Although primary and multiple trait scoring also use specific criteria to assess writing, the advantage of this approach is that the criteria depend on what kind of writing the student is doing. So primary and multiple trait scoring involves ‘devising and deploying a scoring guide that is unique to each prompt and the student writing that it generates’ (Ferris & Hedgcock 1998: 241).

PRIMARY AND MULTIPLE TRAIT SCORING: EXAMPLES

- If the writing exam consisted of persuasive writing (e.g. ‘Justify the case for the legalization of drugs’), we might design a scoring scheme based exclusively on the ability to develop an argument.
- If we were using primary trait scoring, just 1 trait would be assessed; if we were using multiple trait scoring, 2 or more traits would be assessed.
- So for the persuasive writing exam described above, a multiple trait scheme might assess not only the student’s ability to develop an argument, but also their use of counterargument, the credibility of the sources they use to support their own argument, etc.

SAMPLE MULTIPLE TRAIT SCORING GUIDE (FERRIS & HEDGCOCK 2005: 317)

Timed writing #3 – Comparative Analysis

In their respective essays, Chang (2004) and Hunter (2004) express conflicting perspectives on how technology has influenced the education and training of the modern workforce. You will have 90 minutes in which to explain which author presents the most persuasive argument and why.
On the basis of a brief summary of each author’s point of view, compare the two essays and determine which argument is the strongest for you. State your position clearly, giving each essay adequate coverage in your discussion.

MULTIPLE TRAIT SCORING: ADVANTAGES

- Multiple trait scoring doesn’t treat all writing as the same: it assesses (or should assess) the really important skills involved in different types of writing.
- Provided the teacher has discussed the scoring criteria with the class before the exam, the students know exactly what they are being assessed on.

MULTIPLE TRAIT SCORING: DISADVANTAGES

- It can be extremely time-consuming to design specific assessment criteria for each type of writing (Perkins 1983).
- Scoring criteria need to be extensively piloted to ensure they really are assessing the writing fairly.

Having discussed the holistic, analytic, and primary/multiple trait approaches, we’re now going to try scoring an assignment using the holistic approach…

APPLICATION AND DISCUSSION: HOLISTIC SCORING

Use Ferris & Hedgcock’s holistic marking scheme to assess a paper written by a student on a pre-master’s academic English course at a UK university. You need to do 2 things:

1. Give the paper a score based on the holistic criteria;
2. Write on the paper, making specific comments on the writing.

APPLICATION AND DISCUSSION (CONTD.)

In pairs or groups, compare your score and comments with those of your colleagues. On what points did you agree or disagree? Why? If you disagreed, try to arrive at a consensus evaluation of the essay. After identifying the sources of your agreement and disagreement, formulate a list of future suggestions for using holistic scoring rubrics. (Ferris & Hedgcock 1998: 261)

REFERENCES

Alderson JC et al. (1995) Language Test Construction and Evaluation. Cambridge: Cambridge University Press.

Brindley G (2001) Assessment. In R. Carter & D. Nunan (eds.), The Cambridge Guide to Teaching English to Speakers of Other Languages. Cambridge: Cambridge University Press, pp. 137-143.

Cohen A (1994) Assessing Language Ability in the Classroom (2nd ed.). Boston: Heinle & Heinle.

Ferris D & Hedgcock JS (1998) Teaching ESL Composition: Purpose, Process, and Practice. Mahwah: Lawrence Erlbaum.

Jonsson A & Svingby G (2007) The use of scoring rubrics: Reliability, validity and educational consequences. Educational Research Review 2(2): 130-144.

Lumley T & McNamara T (1995) Rater characteristics and rater bias: implications for training. Language Testing 12: 54-71.

McNamara T (1996) Measuring Second Language Performance. London: Longman.

Omaggio Hadley A (1993) Teaching Language in Context (2nd ed.). Boston: Heinle & Heinle.

Perkins K (1983) On the use of composition scoring techniques, objective measures, and objective tests to evaluate ESL writing ability. TESOL Quarterly 17: 651-671.

Weir CJ (1990) Communicative Language Testing. New York: Prentice Hall.

THIS WEEK’S READING

Chapters 5 and 6 of: Ferris D & Hedgcock JS (2005) Teaching ESL Composition: Purpose, Process, and Practice. Mahwah: Lawrence Erlbaum.

Min H-T (2005) Training students to become successful peer reviewers. System 33: 293-308.

HOMEWORK TASK

Use the analytic scoring scale to grade the pre-sessional piece of writing you graded holistically earlier today… Then work through the following questions:

- How well do your analytic ratings match your holistic ratings? Where do the two sets of scores and comments differ? Why?
- Given the nature of the writing tasks you evaluated, which of the two scales do you feel is more appropriate? Why?
- How might you modify one or both of the scales to suit the students you teach?

(Adapted from Ferris & Hedgcock 1998: 261-2)
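The reliability slide describes 2 markers awarding “more or less the same grade” (16 and 17 out of 20) versus clearly different grades (10 and 15). If you want to put a number on that kind of consistency, for example when comparing your scores with colleagues’ in the application task, one simple option is to count exact and adjacent agreement between two markers. This is an addition to the lecture, not a procedure from Ferris & Hedgcock. The sketch assumes both markers use the same scale, and it treats scores within 1 mark of each other as “adjacent”. The function name `agreement_rates` and the 1-mark tolerance are illustrative assumptions.

```python
from typing import Sequence


def agreement_rates(rater_a: Sequence[float], rater_b: Sequence[float],
                    tolerance: float = 1.0) -> tuple[float, float]:
    """Return (exact, adjacent) agreement rates for two markers' scores.

    exact:    share of essays that both markers gave the same score
    adjacent: share of essays whose two scores differ by at most `tolerance`
              (the 1-mark default is an assumption, not a standard)
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equal-length, non-empty lists of scores")
    pairs = list(zip(rater_a, rater_b))
    exact = sum(a == b for a, b in pairs) / len(pairs)
    adjacent = sum(abs(a - b) <= tolerance for a, b in pairs) / len(pairs)
    return exact, adjacent


# Marks out of 20 for four essays: the first two pairs are the slide's
# examples; the last two (14 and 18) are hypothetical.
marker_1 = [16, 10, 14, 18]
marker_2 = [17, 15, 14, 18]
print(agreement_rates(marker_1, marker_2))  # (0.5, 0.75)
```

Here the 16/17 pair counts as adjacent but not exact agreement, and the 10/15 pair counts as neither. Simple percentage agreement is easy to compute and explain. However, it does not correct for agreement that would occur by chance, which statistics such as Cohen’s kappa are designed to handle.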