Emotional Intelligence in the Workplace

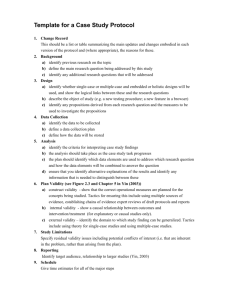

Psychological Measurement in Industry

What Do Industrial-Organizational Psychologists Do?
Industrial-organizational psychologists study organizations and seek ways to improve the functioning and human benefits of business.

Psychology at Work
I/O psychologists improve organizational functioning by:
• Recruiting people who best fit your organization
• Selecting the best people
• Retaining the best people
• Developing fair, legal, and efficient hiring procedures
• Improving the skills of the people
• Creating a diverse, qualified workforce

Selection Process
• Measure applicants’ qualifications
• Select the best applicant to hire
• For each selection method:
 –Describe the selection method
 –Rate the validity of the selection method:
  » Poor: validity coefficient r ≈ .00
  » Moderate: validity coefficient r ≈ .25
  » Good: validity coefficient r ≈ .50
  » Great: validity coefficient r ≈ .75
• Evaluation of process

Workplace Testing Settings
• The government & the military
 –90% tested for federal jobs
 –80% tested for state, county, local government
 –Largest amount of testing in military
 –Over 1 million per year take the ASVAB
 –Military: emphasis on placement, not selection

Workplace Testing Settings (cont.)
• Testing for professional licensing
 –Over 2,000 occupations require licensing
 –In some states 25% of workforce is licensed
 –Require written & oral examinations
 –Tests prepared by licensing boards (local & national)
 –National exams better than local
 –“State of near chaos”
 –For the same job: different exams, different requirements
 –Little overlap between states

Workplace Testing Settings (cont.)
• Private organizations
 –Frequency of testing varies
 –Growing interest in testing with the ease of web-based testing
  »Applicants are administered tests online
  »Info goes directly to hiring manager, no feedback to test-taker
  »E.g., Target, Blockbuster

Approach for Examining Selection Methods
• Describe the selection device and the information that can be collected about applicants
• Describe how to develop and use the device appropriately
• Describe the frequency of use, reliability, validity, costs, adverse impact, and face validity (applicant reactions) of each device
• Depth and breadth of selection plan
 –Costs, who is involved, time frame, which jobs
 –Internal vs. external selection

Selection Methods
• Ability Tests
• Job Knowledge Tests
• Performance Tests and Work Samples
• Personality Tests
• Integrity Tests
• Structured Interviews
• Assessment Center

Application Blanks & Résumés
• Routinely used
• Focus on basic factual information:
 –Education, training, work history, skills, accomplishments, etc.
• Used to screen out applicants who don’t meet minimum qualifications in terms of education, experience, etc.

Application Blanks
• Validity: poor (typically r < .20)
 –Why?
• Problems:
 –Lack of agreement on what to look for
 –Possible discrimination
 –Need to cross-validate
 –Keys are not stable over time, need to update

Biodata Questionnaires
• Questionnaire on applicant’s life experiences
 –Example questions:
  »Did you ever build a model airplane that flew?
  »When you were a child, did you collect stamps?
  »Do you ever repair mechanical things in your home?
 –Answers are scored according to a scoring key
• Validity: moderate (r ≈ .30)

Experience & Accomplishments Questionnaires
• Questionnaire focuses on applicant’s job-related experiences & accomplishments
 – Example questions:
  » For an Information Systems Analyst position:
   • Describe the types of IT systems problems you have encountered.
   • Describe your experience in testing hardware, software, or systems.
 – Validity: moderate (typically content validity)

Employment Interviews
• Universal selection procedure
• Strong effect on selection decisions
• Preferred by managers
• Psychometric problems:
 –No consistency of questions
 –Questions unrelated to jobs
 –No objective scoring system
 –No interviewer training
• Overall, the more standardized the interview, the better

INTERVIEWS CAN...
• Assess Certain Characteristics
• Assess Organisational & Team Fit
• Satisfy Social Exchange Function
• Have High Face Validity

Employment Interview
• Validity as typically done: poor (r < .20)
• Types of employment interviews:
 –Unstructured: few (if any) pre-planned questions; commonly used; poor validity
 –Semi-structured: some pre-planned questions, but with flexibility to pursue lots of follow-ups; moderate validity
 –Structured: all questions are pre-planned; every applicant gets the same interview; some follow-up probes; answers are evaluated using numeric rating scales; good validity

Structured Interviews
• Standardized method of asking the same job-related questions of all applicants
• Carefully planned and constructed based on job analysis
• Responses are numerically evaluated
• Detailed notes are taken

Example of Structured Interviews
– Situational:
 » Hypothetical scenarios
 » A sign of how they will behave
 » How would the interviewee behave in a critical situation?
– Behavioral:
 » Past incidents
 » A sample of work behavior (better predictor)
 » How did the interviewee behave in a specific job situation in the past?
– Multiple raters
– Composite ratings used to make decisions

PROBLEMS WITH INTERVIEWS
• Unstructured & Unplanned
• Untrained & Biased Interviewers
• Same Sex Bias
• Structured Means Standardised or Artificial & Inflexible

Why Interviews are Often not Valid Assessments
• Poor wording of questions
 –No systematic scoring system used by interviewers; very subjective
 –Applicants have been trained to give the appropriate responses to such open-ended questions
 –Interviewer has no way to verify this information in the short time of the interview

Attempts to Improve the Interview
• Training Interviewers
• Development of Appropriate Techniques:
 –Situational Interview
 –Behavior Description Interview

Central Issues in Interview Training Programs
• Creating an open-communication atmosphere
• Delivering questions consistently
• Maintaining control of the interview
• Developing good speech behavior
• Learning listening skills
• Taking appropriate notes
• Keeping the conversation flowing and avoiding leading or intimidating the interviewee
• Interpreting, ignoring, or controlling the nonverbal cues of the interview

Evaluation of the Interview
• Unstructured interviews used frequently; structured ones used less frequently
• More structured, more reliable and valid
• Structured interviews are highly correlated with cognitive ability tests
• Mixed adverse impact
• Structured interviews are costly to develop and use
• Might be appropriate for measuring person/organization fit

Ability Tests
• Measure what a person has learned up to that point in time (achievement)
• Measure one’s innate potential capacity (aptitude)
• Up to 50% of companies use some ability testing

Ability Tests
• Mental (Cognitive) Ability Tests
• Mechanical Ability Tests
• Clerical Ability Tests
• Physical Ability Tests

Cognitive Ability Tests
- Main purpose: to determine one’s level of “g” or aptitudes, depending on setting
- Measure aptitudes relevant to the job
- Short, group administration
- Excellent predictor
of job and training performance

Typical Cognitive Abilities
• Memory Span
• Numerical Fluency
• Verbal Comprehension
• Spatial Orientation
• Visualization
• Figural Identification
• Mechanical Ability
• Conceptual Classification
• Semantic Relations
• General Reasoning
• Intuitive Reasoning
• Logical Evaluation
• Ordering

Example of Ability Tests
- Wonderlic Personnel Test (measures “g”)
- 50 items, 12 minutes
- Multiple choice
- Items cover verbal, math, pictorial, analytical material
- Highly reliable (alternate forms > .90)
- Correlated with job performance measures
- Correlated with WAIS

Examples of Other Frequently Used Mental Ability Tests
• Otis-Lennon Mental Ability Test
• General Aptitude Test Battery (GATB), used by the US Employment Service
• Employee Aptitude Survey (EAS)

Advantages of Cognitive Ability Tests
• Efficient
• Useful across all jobs
• Excellent levels of reliability and validity (.40 - .50)
 –Higher levels than any other tests
 –Estimated validity:
  ».58 for professional/managerial jobs
  ».56 for technical jobs
  ».40 for semi-skilled jobs
  ».23 for unskilled jobs
 –More complex job = higher validity

Disadvantages of Cognitive Ability Tests
• Lead to more adverse impact
• May lack face validity
 –Questions aren’t necessarily related to job
• May predict short-term performance better than long-term
 –Can do vs. will do

Frequently Used General Mechanical Ability Tests
• Bennett Mechanical Comprehension Tests
• MacQuarrie Test for Mechanical Ability
• What they generally measure:
 –Spatial visualization
 –Perceptual speed and accuracy
 –Mechanical information

Tests of Mechanical Comprehension
- Better than “g” for blue-collar jobs
- Good face validity
- Criterion validity with mechanical
job performance

E.g., Bennett Mechanical Comprehension Test
- 68 items
- 30 minutes
- Principles of physics & mechanics
- Operations of common machines, tools, & vehicles
- High internal consistency
- Good criterion validity with job proficiency & training

Clerical Ability Tests
• Predominantly measure perceptual speed and accuracy in processing verbal and numerical data
• Examples:
 –Minnesota Clerical Test
 –Office Skills Test

Clerical Tests
- 2/3 of companies use written tests to hire & promote
- 60-80% of tests are clerical
- Specific vs. general

E.g., Minnesota Clerical Test
- 2 subtests: number comparison & name comparison
- Long lists of pairs of numbers/names (decide if same)
- Strict time limit
- Reliable & valid for perceptual speed & accuracy
- Good face validity

Physical Ability Tests
• Most measure muscular strength, cardiovascular endurance, and movement quality
• Areas of concern:
 –Female applicants
 –Disabled applicants
 –Reduction of work-related injuries

Ability Tests and Discrimination
• Differential Validity
 –Are employment tests less valid for minority group members than non-minorities?
 –Research has found that differential validity does not exist

Comparison of Mental Ability Tests and Other Selection Instruments
• Mental ability tests have high validity and low costs compared to other methods
• Biodata, structured interviews, trainability tests, work samples, and assessment centers have equal validity, less adverse impact, and more fairness to the applicant, but cost more

Work Sample Tests
• How do you perform job-relevant tasks?
• 2 characteristics:
 –Puts applicant in a situation similar to a work situation and measures performance on tasks similar to real job tasks
 –Is it a test of maximal vs. typical performance?
• Range from simple to complex

Work Sample Tests
–Examples:
 »For a telephone sales job, have applicants make simulated cold calls
 »For a construction job, have applicants locate errors in blueprints

Work Sample Tests
• Advantages:
 –Highest validity levels (r = .50s)
 –High face validity
 –Easy to demonstrate job-relatedness
• Disadvantages:
 –Not appropriate for all jobs
 –Time-consuming to set up and administer
 –More predictive in short-term
 –Cannot use if applicant is not expected to know job before being hired

Measuring Personality
- Early research showed no validity
- Recent research: 3 of Big 5 are predictive
- Criterion validity: .15 - .25 (less than “g”)
- Susceptible to faking, but faking does not affect validity in predicting
- Useful when dependability, integrity, responsibility are determinants of job success

Myers-Briggs Type Indicator (MBTI)
• Dimensions of personality:
 » Introversion vs. Extroversion: source of energy
 » Intuition vs. Sensation: innovation vs. practical
 » Thinking vs. Feeling: impersonal principles vs. personal relationships
 » Judging vs. Perceiving: closure vs.
open options
–Validity: poor for selection; might be okay, if carefully used, to help a team work better together

The Big 5 Personality Dimensions
–Validity: typically moderate for selection (r ≈ .25 with measures of overall job performance)
–But validity of personality inventories is hard to generalize
 »Some dimensions of personality may correlate more strongly with particular aspects of a particular job
 » Extraversion → success in sales
 » High conscientiousness & high openness to experience → success in job training
 » Low agreeableness, low conscientiousness, & low adjustment → more likely to engage in counterproductive work behaviors (e.g., abuse sick leave, break rules, drug abuse, workplace violence)

Advantages of Personality Inventories
• Intuitively appealing to managers (e.g., MBTI)
• No adverse impact
 –Don’t show rates of differential selection
• Efficient
• Moderate reliability and validity
 –Validity = .20 - .30

Disadvantages of Personality Inventories
• Response sets
 –Lie or socially desirable responding
• All traits not equally valid for all jobs

Integrity Testing
• Why do it?
 –Employee theft estimated between $15 and $50 billion in the 1990s
 –Employee theft rate by industry: 5 to 58%
 –2% to 5% of each sales dollar charged to customers to offset theft losses

Integrity Testing
– Purpose:
 - Theft is expensive
 - Also want to avoid laziness, violence, gossip
– Honesty may not be a stable trait
 - May depend on the situation (perceived unfairness)
– Honesty testing is controversial
 - Viewed as coercive and inaccurate
 - Honesty is a strong value in our society

Honesty & Integrity Tests
• Employee Polygraph Protection Act (1988) prohibits (with some exceptions) the use of polygraph tests of applicants or employees
• Replaced by paper-and-pencil tests:
 –Overt integrity tests: measure attitudes about dishonest behavior
  »Question: “Everyone will steal if given the chance.”
  »Examples:
   • Pearson Reid London House: Personnel Selection Inventory (PSI)

Disadvantages of Honesty & Integrity Tests
• Fakable
• High rates of false positives
• Some states (e.g., Massachusetts) ban them

Evaluation of Integrity Tests
• False positives: 40 to 70%, especially if cutoff scores are set high
• Validity: difficult to determine criteria to validate against (estimates ~.13 to .55)
• Usefulness depends on the base rate of theft occurring in particular industries (estimates range from 5% to 58%)
• Faking: not a major issue, but probably more so for overt tests
• Applicant reactions are usually negative

Assessment Center
A procedure for measuring performance with a group of individuals (usually 12 to 24) that uses a series of devices, many of which are verbal performance tests

Behavioral Dimensions Frequently Measured in Assessment Centers
• Oral Communication
• Planning and Organizing
• Delegation
• Control and Monitoring
• Decisiveness
• Initiative
• Tolerance for stress
• Adaptability
• Tenacity

ASSESSMENT CENTRES
• Work-sample test for manager positions
 –Measures: leadership, communication, decisiveness, organizing & planning, etc.
• Developed for WW2 Officer Selection Board
• Simulation Exercises Measure Competencies
• Various Techniques, Candidates, Assessors & Competencies

Frequently Used Performance Tests in Assessment Centers
• In-Basket
• Role Plays
• Leaderless Group Discussion
• Case Analysis

Evaluation of Assessment Centers
• Adverse Impact: not much
• Validity: .36 and .54 (performance tests)
• Acceptance to applicants: High
• Costly to develop and run

DISADVANTAGES OF ASSESSMENT CENTRES
• Poorly Defined Competencies & Exercises
• Poor Training & Selection of Assessors
• Poor Selection & Briefing of Candidates

360 Degree Instruments
When a 360 makes sense
• It is the best method to measure external behaviors: things that are best observed and judged by others.

Problems With 360 Degree Instruments
Problems with using a 360:
 –Honesty
 –Knowledge:
  »Observer’s biases and perceptions influence ratings
  »Abilities can be hidden

The Problem of Observer Reports

THE IMPORTANCE OF VALIDITY
• Face Validity
 –The method looks plausible
• Criterion Validity
 –The method predicts performance
• Content Validity
 –The method looks plausible to experts
• Construct Validity
 –The method measures something meaningful

Personnel Selection: Which are Most Predictive?
AVERAGE VALIDITY
A. WORK SAMPLE TESTS: .38 to .54
B. INTELLIGENCE TESTS: .38 to .51
C. ASSESSMENT CENTERS: .41 to .50
D. PEER/SUPERVISORY RATINGS: .41 to .49
E. WORK HISTORY: .24 to .35
F. UNSTRUCTURED INTERVIEWS: .15 to .38
G. PERSONALITY INVENTORIES: .15 to .31
H. REFERENCE CHECKS: .14 to .26
I. TRAINING RATINGS: .13 to .15
J. SELF RATINGS: .10 to .15
K. EDUCATION / GPA: .00 to .10
L. INTERESTS / VALUES: .00 to .10
M. AGE: -.01 to .00

PREDICTIVE VALIDITY & SELECTION TESTS
–Combinations of methods
 »“g” + work samples = .63
 »“g” + structured interview = .63
 »“g” + integrity tests = .65
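
How combined validities arise: a hedged sketch
Combining two selection methods can predict performance better than either alone, as long as the two methods are not highly correlated with each other. The sketch below uses the standard two-predictor multiple correlation formula, R² = (r1² + r2² − 2·r1·r2·r12) / (1 − r12²). The function name `multiple_r` and all three input values are illustrative assumptions, not figures reported in these slides; in particular, the correlation between the two predictors (r12) is assumed.

```python
import math


def multiple_r(r1: float, r2: float, r12: float) -> float:
    """Multiple correlation of a criterion with two predictors.

    r1, r2 -- validity of each predictor on its own (correlation with job performance)
    r12    -- correlation between the two predictors
    """
    if abs(r12) >= 1.0:
        raise ValueError("r12 must lie strictly between -1 and 1")
    r_squared = (r1 ** 2 + r2 ** 2 - 2 * r1 * r2 * r12) / (1 - r12 ** 2)
    return math.sqrt(r_squared)


# Assumed, illustrative inputs: a "g" test with validity .51, a work sample
# with validity .54, and an assumed correlation of .39 between the two.
combined = multiple_r(0.51, 0.54, 0.39)
print(f"combined validity: {combined:.2f}")

# The less the two predictors overlap, the more the second one adds.
for overlap in (0.0, 0.39, 0.8):
    print(overlap, round(multiple_r(0.51, 0.54, overlap), 2))
```

Under these assumed inputs the combined validity is higher than either single validity, and the gain shrinks as the overlap between the predictors grows. This is one reason a second method adds the most when it measures something the first one does not.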