EU Meeting, Greece, June 2001

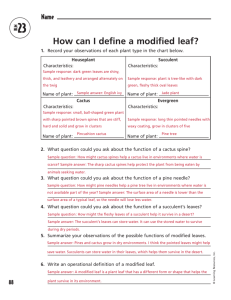


Cactus: A Framework for Numerical Relativity

Gabrielle Allen

Max Planck Institute for Gravitational Physics (Albert Einstein Institute)

What is Cactus?

CACTUS is a freely available, modular, portable and manageable environment for collaboratively developing parallel, high-performance multi-dimensional simulations.

[Diagram: Cactus, a Core “Flesh” (ANSI C) with Plug-In “Thorns” (modules) written in Fortran/C/C++. Labels include remote steering, extensible APIs, parameters, driver, scheduling, input/output, error handling, interpolation, SOR solver, equations of state, black holes, make system, grid variables, wave evolvers, multigrid, boundary conditions, coordinates.]

Cactus in a Nutshell

Cactus acts as the “main” routine of your code. It takes care of, e.g., parallelism, IO, checkpointing and parameter file parsing for you (if you want), and provides different computational infrastructure such as reduction operators, interpolators, coordinates, elliptic solvers, …

Everything Cactus “does” is contained in thorns (modules), which you need to compile in. If you need to use interpolation, you need to find and add a thorn which does interpolation.

It is very extensible: you can add your own interpolators, IO methods, etc.

Not all the computational infrastructure you need is necessarily there, but hopefully all of the APIs etc. are there to allow you to add anything which is missing.

We’re trying to provide an easy-to-use environment for collaborative, high-performance computing, from easy compilation on any machine to easy visualization of your output data.
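The “find and add a thorn” model can be pictured as a registry: thorns that are compiled in register the operators they provide, and code that needs one asks for it by name and gets nothing back if no such thorn was built. The C sketch below illustrates only that idea; `register_operator`, `find_operator`, the `interp_fn` signature and the operator name "linear" are hypothetical and are not the Cactus API.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical 1D interpolator signature: value at x from samples f on a
   uniform grid with spacing dx starting at 0. */
typedef double (*interp_fn)(const double *f, size_t n, double dx, double x);

#define MAX_OPERATORS 8

static struct {
  const char *name;
  interp_fn fn;
} registry[MAX_OPERATORS];
static size_t n_registered = 0;

/* Called by a "thorn" at startup to make its operator available.
   Returns 0 on success, -1 if the table is full. */
int register_operator(const char *name, interp_fn fn) {
  if (n_registered == MAX_OPERATORS) return -1;
  registry[n_registered].name = name;
  registry[n_registered].fn = fn;
  n_registered++;
  return 0;
}

/* Called by user code; NULL means no compiled-in thorn provides it. */
interp_fn find_operator(const char *name) {
  for (size_t i = 0; i < n_registered; i++)
    if (strcmp(registry[i].name, name) == 0) return registry[i].fn;
  return NULL;
}

/* Example "thorn": piecewise-linear interpolation, x clamped to the grid. */
static double linear_interp(const double *f, size_t n, double dx, double x) {
  double s = x / dx;
  if (s <= 0.0) return f[0];
  if (s >= (double)(n - 1)) return f[n - 1];
  size_t i = (size_t)s;
  double w = s - (double)i;
  return (1.0 - w) * f[i] + w * f[i + 1];
}

/* The hypothetical thorn's startup routine. */
void linear_interp_thorn_startup(void) {
  register_operator("linear", linear_interp);
}
```

User code calls `find_operator("linear")` and must handle a `NULL` result, which is this sketch's version of “you need to find and add a thorn which does interpolation”.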

Modularity: “Plug-and-play” Executables

[Diagram: Computational Thorns and Numerical Relativity Thorns combined into different executables. Thorns shown: PUGH, PAGH, ADMConstraint, IDAxiBrillBH, Carpet, HLL, PsiKadelia, Zorro, CartGrid3D, Cartoon2D, AHFinder, Extract, Time, Boundary, Maximal, ADM, EllSOR, EllBase, SimpleExcision, ADM_BSSN, IOFlexIO, IOASCII, FishEye, ConfHyp, IOHDF5, IOJpeg, IDAnalyticBH, BAM_Elliptic, IOUtil, IOBasic, LegoExcision, IDLinearWaves, HTTPD, HTTPDExtra, TGRPETSc, IDBrillWaves. Executables shown: “ISCO with AMR ??”, “FasterISCO”, “elliptic”, “Runsolver”, “ISCO?? with Excision”.]

Einstein Toolkit: CactusEinstein

Infrastructure: ADMBase, StaticConformal, SpaceMask, ADMCoupling, ADMMacros, CoordGauge

Initial Data: IDSimple, IDAnalyticBH, IDAxiBrillBH, IDBrillData, IDLinearWaves

Evolution: ADM, EvolSimple, Maximal

Analysis: ADMConstraints, ADMAnalysis, Extract, AHFinder, TimeGeodesic, PsiKadelia, IOAHFinderHDF

Other thorns available from other groups/individuals, e.g. a few from AEI …

Excision: LegoExcision, SimpleExcision

AEIThorns: ADM_BSSN, BAM_Elliptic, BAM_VecLap

PerturbedBH: DistortedBHIVP, IDAxiOddBrillBH, RotatingDBHIVP

Computational Toolkit

CactusBase: Boundary, IOUtil, IOBasic, CartGrid3D, IOASCII, Time

CactusIO: IOJpeg

CactusConnect: HTTPD, HTTPDExtra

CactusExternal: FlexIO, jpeg6b

CactusElliptic: EllBase, EllPETSc, EllSOR

CactusUtils: NaNChecker

CactusPUGH: PUGH, PUGHInterp, PUGHSlab, PUGHReduce

CactusPUGHIO: IOFlexIO, IOHDF5Util, IOHDF5, IOStreamedHDF5, IsoSurfacer, IOPanda

Computational Toolkit (2)

CactusBench: BenchADM

CactusTest: TestArrays, TestComplex, TestCoordinates, TestInclude1, TestInclude2, TestInterp, TestReduce, TestStrings, TestTimers

CactusWave: IDScalarWave, IDScalarWaveC, IDScalarWaveCXX, WaveBinarySource, WaveToyC, WaveToyCXX, WaveToyF77, WaveToyF90, WaveToyFreeF90

CactusExamples: HelloWorld, WaveToy1DF77, WaveToy2DF77, FleshInfo, TimerInfo

What Numerical Relativists Need From Their Software …

Primarily, it should enable the physics they want to do, and that means it must be:

Collaborative

Portable

Large scale !

High throughput

Easy to understand and interpret results

Supported and developed

Produce believable results

Flexible

Reproducible

Have generic computational toolkits

Incorporate other packages/technologies

Easy to use/program

Large Scale

Typical run (but we want bigger!) needs 45GB of memory: 171 Grid Functions on a 400x400x200 grid

Requirement: Parallelism

Typical run makes 3000 iterations with 6000 Flops per grid point: 600 TeraFlops !!

Requirement: Optimization

Output of just one Grid Function at just one time step: 256 MB (320 GB for 10GF every 50 time steps)

Requirements: Parallel/Fast IO, Data Management, Visualization

One simulation takes longer than queue times: need 10-50 hours

Requirement: Checkpointing

Computing time is a valuable resource: one simulation takes 2500 to 12500 SUs, so we need to make each simulation count

Requirements: Interactive monitoring, steering, visualization, portals
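The numbers on this slide follow from simple arithmetic. The C sketch below reproduces them, assuming 8-byte (double precision) values, decimal units (1 MB = 10^6 bytes) and one SU = one processor-hour; these are our assumptions, not statements from the slide. The 250-processor count used for the SU check is hypothetical, picked because it matches both ends of the quoted ranges (10 and 50 hours, 2500 and 12500 SUs).

```c
#include <assert.h>

/* Sketch of the "Large Scale" arithmetic, assuming double precision
   (8 bytes per value) and one SU = one processor-hour. */
static const double BYTES_PER_VALUE = 8.0;

/* Number of grid points in an nx * ny * nz grid. */
double grid_points(double nx, double ny, double nz) {
  return nx * ny * nz;
}

/* Memory needed to hold n_gf grid functions on the grid, in bytes. */
double memory_bytes(double points, double n_gf) {
  return points * n_gf * BYTES_PER_VALUE;
}

/* Total floating-point operations for a whole run. */
double total_flops(double points, double iterations, double flops_per_point) {
  return points * iterations * flops_per_point;
}

/* SUs consumed, assuming one SU per processor-hour. */
double service_units(double processors, double hours) {
  return processors * hours;
}
```

With these assumptions, 171 grid functions on 32 million points give about 43.8 GB (the slide's 45GB), one grid function at one time step is 256 MB, and the run totals 5.76e14 flops (the slide's 600 TeraFlops). The 320 GB output figure depends on output details the slide does not give, so the sketch does not try to reproduce it.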

Produce Believable Results

Continually test with known/validated solutions: code changes, using new thorns, different machines, different numbers of processors

Open community: the more people using your code, the better tested it will be

Open Source … not black boxes: source code validates physical results which anyone can reproduce

Diverse applications: modular structure helps construct generic thorns for Black Holes, Neutron Stars, Waves, …, and other applications, …

Portability

Develop and run on many different architectures (laptop, workstations, supercomputers)

Set up and get going quickly (new computer, visits, new job, wherever you get SUs)

Use/Buy the most economical resource (e.g. our new supercomputer)

Make immediate use of free (friendly user) resources (baldur, loslobos, tscini, posic)

Tools and infrastructure also important

Portability crucial for “Grid Computing”

RECENT GROUP RESOURCES

Origin 2000 (NCSA)

Linux Cluster (NCSA)

Compaq Alpha (PSC)

Linux Cluster (AHPCC)

Origin 2000 (AEI)

Hitachi SR-8000 (LRZ)

IBM SP2 (NERSC)

Institute Workstations

Linux Laptops

Ed’s Mac

Very different architectures, operating systems, compilers and MPI implementations

Easy to Use and Program

Program in favorite language (C,C++,F90,F77)

Hidden parallelism

Computational Toolkits

Good error, warning, info reporting

Modularity !! Transparent interfaces with other modules

Extensive parameter checking

Work in the same way on different machines

Interface with favorite visualization package

Documentation

Cactus User Community

Using and Developing Physics Thorns

Numerical Relativity

AEI

Southampton

Goddard

Tuebingen

Wash U

Penn State

TAC

EU Astrophysics Network

Other Applications

RIKEN

Thessaloniki

SISSA

Chemical Engineering (U.Kansas)

Portsmouth

Climate Modeling (NASA,+)

NASA Neutron Star Grand Challenge

Many others who mail us at cactusmaint

Bio-Informatics (Canada)

Geophysics (Stanford)

Plasma Physics (Princeton)

Early Universe (LBL)

Astrophysics (Zeus)

Using Cactus

    program YourCode
      call ReadParameterFile
      call SetUpCoordSystem
      call SetUpInitialData
      call OutputInitialData
      do it = 1, niterations
        call EvolveMyData
        call AnalyseData
        call OutputData
      end do
    end

If your existing code has this kind of structure (split into subroutines, with clear argument lists), it should be relatively straightforward to put into Cactus.

Cactus will take care of the parameter file, and (hopefully) the coord system and IO, and if you’re lucky you can take someone else’s Analysis, Evolution, Initial Data, … modules.
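To make the inversion of control concrete, here is a hypothetical C sketch: the user's routines from `YourCode` are registered into ordered phases, and a framework-owned driver loop calls them. The phase names, `schedule_routine` and `run_simulation` are invented for illustration; in Cactus the ordering is declared in each thorn's schedule.ccl and the flesh does the calling.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical phases, loosely mirroring the structure of YourCode. */
enum phase { PHASE_STARTUP, PHASE_INITIAL, PHASE_EVOL, PHASE_ANALYSIS,
             PHASE_OUTPUT, N_PHASES };

typedef void (*routine)(int iteration);

#define MAX_PER_PHASE 4
static routine schedule[N_PHASES][MAX_PER_PHASE];
static size_t n_in_phase[N_PHASES];

/* A thorn asks for one of its routines to be called in a given phase. */
int schedule_routine(enum phase p, routine r) {
  if (n_in_phase[p] == MAX_PER_PHASE) return -1;
  schedule[p][n_in_phase[p]++] = r;
  return 0;
}

static void run_phase(enum phase p, int iteration) {
  for (size_t i = 0; i < n_in_phase[p]; i++) schedule[p][i](iteration);
}

/* The framework's "main": the user no longer writes this loop. */
void run_simulation(int niterations) {
  run_phase(PHASE_STARTUP, 0);
  run_phase(PHASE_INITIAL, 0);
  for (int it = 1; it <= niterations; it++) {
    run_phase(PHASE_EVOL, it);
    run_phase(PHASE_ANALYSIS, it);
    run_phase(PHASE_OUTPUT, it);
  }
}

/* Stand-ins for the user's subroutines; they only record call order. */
static char call_log[64];
static size_t log_len;
static void log_call(char c) {
  if (log_len + 1 < sizeof call_log) {
    call_log[log_len++] = c;
    call_log[log_len] = '\0';
  }
}
void evolve_my_data(int it) { (void)it; log_call('E'); }
void analyse_data(int it)   { (void)it; log_call('A'); }
void output_data(int it)    { (void)it; log_call('O'); }
```

The order in which routines are registered does not matter: the driver's phase order alone makes each iteration run evolution, then analysis, then output.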

Thorn Architecture

Main question: best way to divide up into thorns?

[Diagram: Thorn EvolveMyData, made up of Configuration Files, Source Code (Fortran Routines, C Routines, C++ Routines), Make Information, Parameter Files and Test Suites, and Documentation!]

ADMConstraints: interface.ccl

    # Interface definition for thorn ADMConstraints
    implements: admconstraints
    inherits: ADMBase, StaticConformal, SpaceMask, grid

    USES INCLUDE: CalcTmunu.inc
    USES INCLUDE: CalcTmunu_temps.inc
    USES INCLUDE: CalcTmunu_rfr.inc

    private:

    real hamiltonian type=GF
    {
      ham
    } "Hamiltonian constraint"

    real momentum type=GF
    {
      momx, momy, momz
    } "Momentum constraints"

ADMConstraints: schedule.ccl

    schedule ADMConstraints_ParamCheck at CCTK_PARAMCHECK
    {
      LANG: C
    } "Check that we can deal with this metric_type and have enough conformal derivatives"

    schedule ADMConstraint_InitSymBound at CCTK_BASEGRID
    {
      LANG: Fortran
    } "Register GF symmetries for ADM Constraints"

    schedule ADMConstraints at CCTK_ANALYSIS
    {
      LANG: Fortran
      STORAGE: hamiltonian,momentum
      TRIGGERS: hamiltonian,momentum
    } "Evaluate ADM constraints"

ADMConstraints: param.ccl

    # Parameter definitions for thorn ADMConstraints

    shares: ADMBase
    USES KEYWORD metric_type

    shares: StaticConformal
    USES KEYWORD conformal_storage

    private:

    BOOLEAN constraints_persist "Keep storage of ham and mom* around for use in special tricks?"
    {} "no"

    BOOLEAN constraint_communication "If yes synchronise constraints"
    {} "no"

    KEYWORD bound "Which boundary condition to apply"
    {
      "flat"   :: "Flat (copy) boundary condition"
      "static" :: "Static (don't do anything) boundary condition"
    } "flat"
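The `KEYWORD` declaration above gives both the allowed values and the default, which is what lets the framework reject a bad parameter file before a run starts. A minimal C sketch of that check, assuming exact string matching; `keyword_param` and `check_keyword` are hypothetical names, not the Cactus API.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical description of a KEYWORD parameter, mirroring
   KEYWORD bound { "flat" ... "static" ... } "flat" from param.ccl. */
struct keyword_param {
  const char *name;
  const char *const *allowed;   /* allowed values */
  size_t n_allowed;
  const char *default_value;
};

static const char *const bound_values[] = { "flat", "static" };
static const struct keyword_param bound_param = {
  "bound", bound_values, 2, "flat"
};

/* Returns the value to use: the default if the parameter file did not set
   it (requested == NULL), the requested value if it is allowed, and NULL
   if it is out of range, in which case the run should stop. */
const char *check_keyword(const struct keyword_param *p, const char *requested) {
  if (requested == NULL) return p->default_value;
  for (size_t i = 0; i < p->n_allowed; i++)
    if (strcmp(p->allowed[i], requested) == 0) return p->allowed[i];
  return NULL;
}
```

Doing this check for every parameter at startup is cheap, and it turns a typo in a parameter file into an immediate error instead of a wasted queue slot.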

ADMConstraints: Using it

MyRun.par:

    ActiveThorns = " … … ADMConstraints … …"
    IOASCII::out3d_every = 10
    IOASCII::out3d_vars = " … ADMConstraints::hamiltonian…"
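With `TRIGGERS: hamiltonian,momentum` in schedule.ccl, the analysis routine only needs to run at iterations where some IO method is about to output one of those groups; with `out3d_every = 10` that is every tenth iteration. The C sketch below models that decision. `output_request`, `output_due` and `should_run_analysis` are hypothetical names, and the model assumes output happens on iterations that are multiples of `out_every`.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical model of one output request from a parameter file,
   e.g. IOASCII::out3d_every and IOASCII::out3d_vars. */
struct output_request {
  int out_every;            /* <= 0 disables output */
  const char *const *vars;  /* variables/groups to output */
  size_t n_vars;
};

/* Is output of `var` due at this iteration? */
static bool output_due(const struct output_request *req, const char *var,
                       int iteration) {
  if (req->out_every <= 0 || iteration % req->out_every != 0) return false;
  for (size_t i = 0; i < req->n_vars; i++)
    if (strcmp(req->vars[i], var) == 0) return true;
  return false;
}

/* An ANALYSIS routine with TRIGGERS runs only if output is due for at
   least one of its trigger variables. */
bool should_run_analysis(const struct output_request *req,
                         const char *const *triggers, size_t n_triggers,
                         int iteration) {
  for (size_t i = 0; i < n_triggers; i++)
    if (output_due(req, triggers[i], iteration)) return true;
  return false;
}
```

The point of the design is that the constraints, which are expensive to compute, are only evaluated when someone will actually see them.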

What do you get with Cactus?

Parameters: file parser, parameter ranges and checking

Configurable make system

Parallelization: communications, reductions, IO, etc.

Checkpointing: checkpoint/restore on different machines, processor numbers

IO: different, highly configurable IO methods (ASCII, HDF5, FlexIO, JPG) in 1D/2D/3D + geometrical objects

AMR when it is ready (PAGH/Carpet/FTT/Paramesh/Chombo)

New computational technologies as they arrive, e.g. new machines, Grid computing, I/O

Use our CVS server
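Checkpointing works because the complete state of a run, the grid variables plus the iteration counter, can be written out and read back, so a run stopped when its queue time is over continues as if it had never stopped. A hypothetical C sketch using standard `stdio` binary I/O; the `state` layout and the `evolve`, `checkpoint` and `recover` functions are invented for illustration. Unlike the Cactus checkpoints described above, which can be restored on different machines and processor numbers, this raw binary dump only works between machines with the same byte order and `double` format.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define NPOINTS 8

/* Hypothetical complete state of a toy run. */
struct state {
  int iteration;
  double u[NPOINTS];
};

/* Toy evolution step: average each point with its right neighbour. */
void evolve(struct state *s) {
  double next[NPOINTS];
  for (int i = 0; i < NPOINTS; i++)
    next[i] = 0.5 * (s->u[i] + s->u[(i + 1) % NPOINTS]);
  memcpy(s->u, next, sizeof next);
  s->iteration++;
}

/* Write the whole state; returns 0 on success. */
int checkpoint(const struct state *s, FILE *f) {
  if (fwrite(&s->iteration, sizeof s->iteration, 1, f) != 1) return -1;
  if (fwrite(s->u, sizeof s->u[0], NPOINTS, f) != NPOINTS) return -1;
  return 0;
}

/* Read a state back; returns 0 on success. */
int recover(struct state *s, FILE *f) {
  if (fread(&s->iteration, sizeof s->iteration, 1, f) != 1) return -1;
  if (fread(s->u, sizeof s->u[0], NPOINTS, f) != NPOINTS) return -1;
  return 0;
}
```

A run of 10 steps and a run of 4 steps, checkpoint, recover, then 6 more steps should produce bit-identical data, since the same operations are applied in the same order.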

Myths

If you’re not sure, just ask: email cactusmaint@cactuscode.org, users@cactuscode.org

Myth: Cactus doesn’t have periodic boundary conditions. Answer: It has always had periodic boundary conditions.

Myth: Cactus gives different results on different numbers of processors. Answer: It shouldn’t, check your code!!

Myth: If you use Cactus you have to let everyone have and use your code. Answer: Of course not!

Myth: To compile Cactus you need to edit this file, tweak that file, comment out these lines, etc., etc. Answer: You shouldn’t need to do this! Please tell us.

Myth: Cactus doesn’t run on a **?** machine. Answer: Cactus can run on anything with an ANSI C compiler (e.g. Windows, Mac, PlayStation2). You need MPI for parallelisation, and F90 for many of our numrel thorns.

Myth: Cactus makes your computers explode
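Why results should not depend on the number of processors: if ghost zones are filled from neighbouring processors before every update, each processor applies exactly the same arithmetic to each grid point as a serial run would. The C sketch below is a hypothetical 1D illustration (a three-point averaging stencil with periodic boundaries, with "processors" simulated as contiguous blocks in one address space), not the PUGH driver; it checks that the decomposed update reproduces the serial one bit for bit.

```c
#include <assert.h>
#include <string.h>

#define N 12   /* global number of grid points (periodic) */

/* Serial reference: u_new[i] = (u[i-1] + u[i] + u[i+1]) / 3, periodic. */
void step_serial(const double *u, double *u_new) {
  for (int i = 0; i < N; i++) {
    double left = u[(i + N - 1) % N], right = u[(i + 1) % N];
    u_new[i] = (left + u[i] + right) / 3.0;
  }
}

/* Decomposed version: nprocs blocks, each with one ghost zone per side.
   Each "processor" first fills its ghost zones from its neighbours (the
   synchronisation step), then updates only its own points.
   Assumes N is divisible by nprocs. */
void step_decomposed(const double *u, double *u_new, int nprocs) {
  int local_n = N / nprocs;
  double local[N + 2];   /* own points plus two ghost zones */
  for (int p = 0; p < nprocs; p++) {
    int start = p * local_n;
    local[0] = u[(start + N - 1) % N];             /* left ghost */
    local[local_n + 1] = u[(start + local_n) % N]; /* right ghost */
    memcpy(&local[1], &u[start], (size_t)local_n * sizeof(double));
    for (int i = 1; i <= local_n; i++)
      u_new[start + i - 1] = (local[i - 1] + local[i] + local[i + 1]) / 3.0;
  }
}
```

Local stencil updates like this one match exactly; global reductions (sums, norms) can still differ in the last bits between processor counts, because the order of floating-point additions changes.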

Cactus Support

Users Guide, Thorn Guides on web pages

FAQ

Pages for different architectures, configuration details for different machines.

Web pages describing different viz tools etc.

Different mailing lists (interface on web pages) … cactusmaint@cactuscode.org or users@cactuscode.org or developers@cactuscode.org or cactuseinstein@cactuscode.org

Cactus (Infrastructure) Team at AEI

General:

GridLab:

Thomas Radke, Annabelle Roentgen, Ralf Kaehler

PhD Students:

Tom Goodale, Ian Kelley, Oliver Wehrens, Michael Russell, Jason Novotny, Kashif Rasul, Susana Calica, Kelly Davis

GriKSL:

Gabrielle Allen, David Rideout

Thomas Dramlitsch (Distributed Computing), Gerd Lanfermann (Grid Computing), Werner Benger (Visualization)

Extended Family:

John Shalf, Mark Miller, Greg Daues, Malcolm Tobias, Erik Schnetter, Jonathan Thornburg, Ruxandra Bonderescu

Development Plans

Currently working on 4.0 Beta 12 (release end of May); see www.cactuscode.org/Development/Current.html

New Einstein thorns

New IO thorns, standardize all IO parameters

Release of Cactus 4.0 planned for July (2002)

We then want to add (4.1):

Support for unstructured meshes (plasma physics)

Support for multi-model (climate modeling)

Dynamic scheduler

Cactus Communication Infrastructure

Better elliptic solvers/infrastructure

Cactus Developer Community

Developing Computational Infrastructure

AEI Cactus Group

Argonne National Laboratory

Grants and Projects

The Users

NCSA

Konrad-Zuse-Zentrum

Global Grid Forum

U. Chicago

EGrid

Compaq

Sun

Microsoft

SGI

Clemson

DFN TiKSL

TAC

U. Kansas

Wash U

Lawrence Berkeley Laboratory

Intel

DFN GriKSL

EU GridLab

NSF KDI ASC

NSF GrADS

Direct Benefits

Visualization

Parallel I/O

Remote Computing

Portal

Optimization

Experts

Other Development Projects

AMR/FMR

Grid Portal

Grid Computing

Visualization (inc. AMR Viz)

Parallel I/O

Data description and management

Generic optimization

Unstructured meshes

Multi-model

What is the Grid? …

… infrastructure enabling the integrated, collaborative use of high-end computers, networks, databases, and scientific instruments owned and managed by multiple organizations …

… applications often involve large amounts of data and/or computing, secure resource sharing across organizational boundaries, not easily handled by today’s Internet and Web infrastructures …

… and Why Bother With It?

AEI Numerical Relativity Group has access to high-end resources in over ten centers in Europe/USA

They want:

Easier use of these resources

Bigger simulations, more simulations and faster throughput

Intuitive IO and analysis at local workstation

No new systems/techniques to master!!

How to make best use of these resources?

Provide easier access … no one can remember ten usernames, passwords, batch systems, file systems, … great start!!!

Combine resources for larger production runs (more resolution badly needed!)

Dynamic scenarios … automatically use what is available

Better working practices: remote/collaborative visualization, steering, monitoring

Many other motivations for Grid computing ... Opens up possibilities for a whole new way of thinking about applications and the environment that they live in (seti@home, terascale desktops, etc)

Remote Monitoring/Steering:

Thorn which allows any simulation to act as its own web server

Connect to simulation from any browser anywhere … collaborate

Monitor run: parameters, basic visualization, ...

Change steerable parameters

Running example at www.CactusCode.org

Wireless remote viz, monitoring and steering
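Changing a parameter in a running simulation is only safe for parameters declared steerable, and the new value should take effect between iterations rather than part-way through one. A hypothetical C sketch of that rule; `steer_request`, `apply_pending` and the parameter names `out_every` and `grid_size` are invented and are not the HTTPD thorn's interface.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical parameter table entry. */
struct param {
  const char *name;
  double value;
  bool steerable;     /* may be changed while the run is going */
  bool has_pending;
  double pending;     /* value requested from the web interface */
};

static struct param params[] = {
  { "out_every", 10.0, true, false, 0.0 },
  { "grid_size", 400.0, false, false, 0.0 },
};
static const size_t n_params = sizeof params / sizeof params[0];

/* Called when a request arrives (e.g. from a browser). Returns 0 if the
   change was queued, -1 if the parameter is unknown or not steerable. */
int steer_request(const char *name, double value) {
  for (size_t i = 0; i < n_params; i++) {
    if (strcmp(params[i].name, name) != 0) continue;
    if (!params[i].steerable) return -1;
    params[i].pending = value;
    params[i].has_pending = true;
    return 0;
  }
  return -1;
}

/* Called by the driver between iterations, so no routine ever sees a
   parameter change value in the middle of a step. */
void apply_pending(void) {
  for (size_t i = 0; i < n_params; i++) {
    if (params[i].has_pending) {
      params[i].value = params[i].pending;
      params[i].has_pending = false;
    }
  }
}
```

Queuing the request and applying it at a safe point keeps the web server and the evolution loop decoupled: the browser never touches data the simulation is using.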

VizLauncher

From a web browser connected to the simulation, output data (remote files/streamed data) is automatically launched into the appropriate local visualization client

Application-specific networks … shift vector fields, apparent horizons, particle geodesics, …

Cactus ASC Portal

Part of NSF KDI Project: Astrophysics Simulation Collaboratory

Use any Web Browser !!

Portal (will) provide:

Single access to all resources

Locate/build executables

Central/collaborative parameter files, thorn lists etc

Job submission/tracking

Access to new Grid Technologies

www.ascportal.org

Remote Visualization

[Diagram: remote visualization of a running simulation with OpenDX, Amira and LCA Vision: IsoSurfaces and Geodesics, Contour plots (download), Grid Functions via Streaming HDF5]

Remote Offline Visualization

[Diagram: a visualization client (Amira) for viz in Berlin reads remote data through the HDF5 VFD, using DataGrid (Globus), DPSS, HTTP or FTP, from a remote data server (DPSS Server, FTP Server, Web Server; 4TB at NCSA). Downsampling, hyperslabs: only what is needed is transferred.]
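“Downsampling, hyperslabs ... only what is needed” means the client asks the remote server for a strided sub-box of a 3D dataset instead of the whole file. The hypothetical C sketch below shows the index arithmetic of such a request on an in-memory array stored with x varying fastest. The `hyperslab` struct and `extract_hyperslab` are invented here; they follow the same start/stride/count idea as HDF5's `H5Sselect_hyperslab` but are not that interface.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical request for a strided sub-box of an nx*ny*nz dataset stored
   with x varying fastest: index = (z * ny + y) * nx + x. */
struct hyperslab {
  size_t start[3];   /* first point in x, y, z */
  size_t stride[3];  /* take every stride-th point (downsampling) */
  size_t count[3];   /* number of points to take in each direction */
};

/* Copies the selected points into out (count[0]*count[1]*count[2] values).
   Returns the number of values copied, or 0 if the slab leaves the grid. */
size_t extract_hyperslab(const double *data, const size_t dims[3],
                         const struct hyperslab *h, double *out) {
  for (int d = 0; d < 3; d++) {
    if (h->count[d] == 0 || h->stride[d] == 0) return 0;
    if (h->start[d] + (h->count[d] - 1) * h->stride[d] >= dims[d]) return 0;
  }
  size_t n = 0;
  for (size_t k = 0; k < h->count[2]; k++)
    for (size_t j = 0; j < h->count[1]; j++)
      for (size_t i = 0; i < h->count[0]; i++) {
        size_t x = h->start[0] + i * h->stride[0];
        size_t y = h->start[1] + j * h->stride[1];
        size_t z = h->start[2] + k * h->stride[2];
        out[n++] = data[(z * dims[1] + y) * dims[0] + x];
      }
  return n;
}
```

For the 400x400x200 grid quoted earlier, a stride of 4 in every direction turns one 256 MB grid function into 100x100x50 points, 4 MB, so 64 times less data crosses the network.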

Dynamic Adaptive Distributed Computation

(T.Dramlitsch, with Argonne/U.Chicago)

[Diagram: SDSC IBM SP (1024 procs; 5x12x17 = 1020 used) and NCSA Origin Array (256+128+128 procs; 5x12x(4+2+2) = 480 used), connected by GigE (100MB/sec) and an OC-12 line (but only 2.5MB/sec)]

These experiments: Einstein Equations (but could be any Cactus application)

Achieved:

First runs: 15% scaling

With new techniques: 70-85% scaling, ~ 250GF

Dynamic Adaptation: Number of ghostzones, compression, …

Paper describing this is a finalist for the “Gordon Bell Prize” (Supercomputing 2001, Denver)
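One reason the number of ghost zones is worth adapting: with a stencil that reaches one point per step, a ghost zone g points wide stays valid for g steps, so neighbours only need to exchange data every g steps, trading some redundant computation near the boundary for fewer messages across the slow OC-12 link. The C sketch below is a simplified, hypothetical cost model of that trade-off (it ignores message size, compression and overlap of communication with computation), together with a check of the processor counts quoted above.

```c
#include <assert.h>

/* Processors used in a px * py * pz decomposition of the 3D grid. */
int procs_in_topology(int px, int py, int pz) {
  return px * py * pz;
}

/* Ghost-zone exchanges needed over nsteps time steps, assuming a stencil
   that reaches one point per step: ghost zones `ghosts` points wide stay
   valid for `ghosts` steps, so one exchange serves that many steps. */
int exchanges_needed(int nsteps, int ghosts) {
  return (nsteps + ghosts - 1) / ghosts;   /* ceiling division */
}

/* The price: after an exchange the still-valid ghost region shrinks by one
   point per step, so within one exchange cycle each boundary-face point
   has (ghosts-1) + (ghosts-2) + ... + 0 redundant updates behind it. */
int redundant_updates_per_cycle(int ghosts) {
  return ghosts * (ghosts - 1) / 2;
}
```

With 3 ghost zones instead of 1, the slow link is crossed a third as often, at the cost of 3 redundant updates per boundary-face point every 3 steps. Choosing the width at run time from measured link performance is one way to read the “Dynamic Adaptation” bullet.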

Dynamic Grid Computing

[Diagram (Users View): a Brill wave simulation (S) moving between machines at SDSC, RZG, LRZ and NCSA. Events shown: find best resources; free CPUs!! add more resources; queue time over, find new machine; look for horizon; found a horizon, try out excision; physicist has new idea! clone job with steered parameter (S1/P1, S2/P2); calculate/output invariants; calculate/output grav. waves; archive data; archive to LIGO public database]

GridLab: Enabling Dynamic Grid Applications

EU Project (under final negotiation with EC)

AEI, ZIB, PSNC, Lecce, Athens, Cardiff, Amsterdam, SZTAKI, Brno, ISI, Argonne, Wisconsin, Sun, Compaq

Grid Application Toolkit for application developers and infrastructure (APIs/Tools)

Develop new grid scenarios for 2 main apps:

Numerical relativity

Grav wave data analysis

www.gridlab.org

GriKSL

www.griksl.org

Development of Grid Based Simulation and Visualization Tools

German DFN Funded

AEI and ZIB

Follow-on to TiKSL

Grid awareness of applications

Description/management of large scale distributed data sets

Tools for remote and distributed data visualization