Author-Topic Models - Student Information

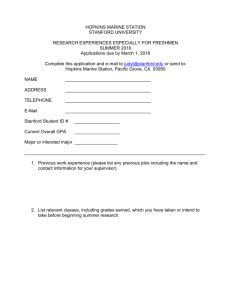


Modeling Documents
Amruta Joshi
Department of Computer Science, Stanford University
6th June 2005
Research in Algorithms for the InterNet

Outline
- Topic Models
  - Topic Extraction
  - Author Information
  - Modeling Topics
  - Modeling Authors
  - Author-Topic Model
  - Inference
- Integrating Topics and Syntax
  - Probabilistic Models
  - Composite Model
  - Inference

Motivation
- Identifying the content of a document
- Identifying its latent structure
- More specifically: given a collection of documents, we want to build a model that captures information about
  - Authors
  - Topics
  - Syntactic constructs

Topics & Authors
- Why model topics?
  - Observing topic trends
  - Seeing how documents relate to one another
  - Tagging abstracts
- Why model authors' interests?
  - Identifying what an author writes about
  - Identifying authors with similar interests
  - Authorship attribution
  - Creating reviewer lists
  - Finding unusual work by an author

Topic Extraction: Overview
- Supervised learning techniques
  - Learn from a labeled document collection
  - But: documents are often unlabeled, and fields change rapidly (Yang 1998)
[Figure: a document, "In floods, the banks of a river overflow", labeled with the topic "rivers"]

Topic Extraction: Overview
- Dimensionality reduction
  - Represent documents in a vector space of terms
  - Map them to a low-dimensional space
  - Non-linear dimensionality reduction: WEBSOM (Lagus et al. 1999)
  - Linear projection: LSI (Berry, Dumais, O'Brien 1995)
  - Regions of the reduced space represent topics

Topic Extraction: Overview
- Cluster documents on semantic content
  - Typically, each cluster has just one topic
- Aspect model
  - A topic is modeled as a distribution over words
  - Documents are generated from multiple topics
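The aspect-model bullets can be made concrete with the mixture $P(w \mid d) = \sum_z P(w \mid z)\,P(z \mid d)$. The Python below is only an illustrative sketch: the two topic-word distributions reuse the values recovered in the river/bank Gibbs-sampling example later in the talk, and the 50/50 document mixture is a made-up input.

```python
# Aspect-model sketch: a document's word distribution is a mixture of
# topic-word distributions, P(w | d) = sum_z P(w | z) * P(z | d).

# Topic-word distributions P(w | z); values borrowed from the river/bank
# example for illustration only.
TOPICS = {
    "topic1": {"stream": 0.40, "bank": 0.35, "river": 0.25},
    "topic2": {"bank": 0.39, "money": 0.32, "loan": 0.29},
}


def word_prob(word: str, doc_mix: dict[str, float]) -> float:
    """Return P(word | d) for a document whose topic weights are doc_mix = P(z | d)."""
    return sum(weight * TOPICS[z].get(word, 0.0) for z, weight in doc_mix.items())


def doc_word_distribution(doc_mix: dict[str, float]) -> dict[str, float]:
    """Return P(w | d) for every word in the vocabulary shared by the topics."""
    vocab = sorted({w for dist in TOPICS.values() for w in dist})
    return {w: word_prob(w, doc_mix) for w in vocab}


if __name__ == "__main__":
    # Hypothetical document: half "rivers", half "finance".
    mix = {"topic1": 0.5, "topic2": 0.5}
    for w, p in doc_word_distribution(mix).items():
        print(f"{w:>6}: {p:.3f}")
```

With equal weights, "bank" receives the highest probability (0.37), because both topics put mass on it.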
Author Information: Overview
- Analyzing text using
  - Stylometry: statistical analysis of literary style, frequency of word usage, etc.
  - Semantics: the content of the document
- Example text: "As doth the lion in the Capitol, / A man no mightier than thyself or me …"

Author Information: Overview
- Graph-based models
  - Build an interactive ReferralWeb using citations (Kautz, Selman, Shah 1997)
  - Build co-author graphs (White & Smith) and use PageRank for analysis
[Figure: graph linking documents D1-D4]

The Big Idea
- Topic model: model topics as distributions over words
- Author model: model authors as distributions over words
- Author-topic model: a probabilistic model for both
  - Model topics as distributions over words
  - Model authors as distributions over topics

Bayesian Networks
[Figure: network over Pneumonia, Tuberculosis, Lung Infiltrates, XRay, and Sputum Smear]
- Nodes = random variables
- Edges = direct probabilistic influence
- Topology captures independence: XRay is conditionally independent of Pneumonia given Infiltrates
Slide Credit: Lisa Getoor, UMD College Park

Bayesian Networks
- Associated with each node X_i is a conditional probability distribution P(X_i | Pa_i): a distribution over X_i for each assignment to its parents Pa_i
- Example: P(I | P, T) for Lung Infiltrates (I) given Pneumonia (P) and Tuberculosis (T)

| P  | T  | i    | ¬i   |
|----|----|------|------|
| p  | t  | 0.7  | 0.3  |
| p  | ¬t | 0.6  | 0.4  |
| ¬p | t  | 0.2  | 0.8  |
| ¬p | ¬t | 0.01 | 0.99 |

- If the variables are discrete, P is usually multinomial
- P can also be linear Gaussian, a mixture of Gaussians, …
Slide Credit: Lisa Getoor, UMD College Park

BN Learning
[Figure: Data → Inducer → network over P, T, I, X, S]
- BN models can be learned from empirical data
  - Parameter estimation via numerical optimization
  - Structure learning via combinatorial search
Slide Credit: Lisa Getoor, UMD College Park

Generative Model
- Probabilistic generative process: mixture components and mixture weights produce documents
- Statistical inference, Bayesian approach: use priors
  - Mixture weights ~ Dirichlet(α)
  - Mixture components ~ Dirichlet(β)

Bayesian Network for Modeling Document Generation
[Figure: a document node linked to topic nodes T1, T2, …, T_T (z), which are linked to word nodes w1, w2, …, w_W (w)]

Topic Model: Plate Notation
[Figure: plate diagram]
- θ: document-specific distribution over topics (plate D)
- z: topic assigned to each word
- φ: topic distribution over words (plate T)
- w: word (N_d words per document)

Topic Model: Geometric Representation

Modeling Authors with Words
[Figure: plate diagram]
- a_d: the authors of document d
- x: an author chosen from a uniform distribution over a_d
- Each author has a distribution over words (plate A)
- w: word drawn from author x's distribution (N_d words per document, D documents)

Author-Topic Model
[Figure: plate diagram]
- a_d: the authors of document d
- x: an author chosen from a uniform distribution over a_d
- θ: each author's distribution over topics (plate A)
- z: topic drawn from author x's distribution
- φ: each topic's distribution over words (plate T)
- w: word drawn from topic z's distribution (N_d words per document, D documents)

Inference
- Expectation maximization: poor results (local maxima)
- Gibbs sampling
  - Parameters: θ, φ
  - Start with an initial random assignment
  - Update each variable conditioned on the current values of all the others
  - Converges after n iterations (the burn-in time)

Inference and Learning for Documents
The probability that the i-th word token is assigned to topic j, keeping all other topic assignments unchanged, is

$$P(z_i = j \mid \mathbf{z}_{-i}, w_i = m, \mathbf{w}) \propto \frac{C^{WT}_{mj} + \beta}{\sum_{m'} C^{WT}_{m'j} + V\beta} \cdot \frac{C^{DT}_{dj} + \alpha}{\sum_{j'} C^{DT}_{dj'} + T\alpha}$$

where $C^{WT}_{mj}$ is the number of times word m is assigned to topic j, $C^{DT}_{dj}$ is the number of times topic j occurs in document d (both counts exclude token i), V is the vocabulary size, and T is the number of topics.

Matrix Factorization
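The topic-model update on the Inference and Learning slide has an author-topic counterpart (Rosen-Zvi et al. 2004): for each token the sampler draws an author $x_i = k \in a_d$ and a topic $z_i = j$ jointly, with

$$P(x_i = k, z_i = j \mid w_i = m, \mathbf{z}_{-i}, \mathbf{x}_{-i}) \propto \frac{C^{WT}_{mj} + \beta}{\sum_{m'} C^{WT}_{m'j} + V\beta} \cdot \frac{C^{AT}_{kj} + \alpha}{\sum_{j'} C^{AT}_{kj'} + T\alpha}$$

where $C^{AT}_{kj}$ counts tokens assigned to author k and topic j. The Python below is a minimal sketch of that collapsed Gibbs sampler, not the authors' implementation; the hyperparameter defaults (alpha=0.5, beta=0.01), the fixed iteration count, and the toy corpus are assumptions for illustration. Giving every document a single, unique author turns $C^{AT}$ into document-topic counts, so the same loop then runs the plain topic-model sampler.

```python
import random


def author_topic_gibbs(docs, authors, n_authors, vocab_size, n_topics,
                       alpha=0.5, beta=0.01, n_iters=200, seed=0):
    """Collapsed Gibbs sampler for the author-topic model (illustrative sketch).

    docs:    list of documents, each a list of word ids in range(vocab_size)
    authors: list of distinct author-id lists a_d, one per document
    Returns (cwt, cat): cwt[m][j] = C^WT (word m assigned to topic j),
                        cat[k][j] = C^AT (author k assigned to topic j).
    """
    rng = random.Random(seed)
    cwt = [[0] * n_topics for _ in range(vocab_size)]
    cat = [[0] * n_topics for _ in range(n_authors)]
    ct = [0] * n_topics   # tokens per topic:  sum over m of cwt[m][j]
    ca = [0] * n_authors  # tokens per author: sum over j of cat[k][j]

    def update(m, k, j, delta):
        # Add (delta=+1) or remove (delta=-1) one token from every count table.
        cwt[m][j] += delta
        cat[k][j] += delta
        ct[j] += delta
        ca[k] += delta

    # Random initial assignment: an author from a_d and a topic for every token.
    assign = []
    for doc, a_d in zip(docs, authors):
        doc_assign = []
        for m in doc:
            k, j = rng.choice(a_d), rng.randrange(n_topics)
            update(m, k, j, +1)
            doc_assign.append((k, j))
        assign.append(doc_assign)

    for _ in range(n_iters):  # fixed number of sweeps; no burn-in diagnostics
        for d, (doc, a_d) in enumerate(zip(docs, authors)):
            for i, m in enumerate(doc):
                k_old, j_old = assign[d][i]
                update(m, k_old, j_old, -1)  # counts now exclude token i
                pairs = [(k, j) for k in a_d for j in range(n_topics)]
                weights = [
                    (cwt[m][j] + beta) / (ct[j] + vocab_size * beta)
                    * (cat[k][j] + alpha) / (ca[k] + n_topics * alpha)
                    for k, j in pairs
                ]
                k, j = rng.choices(pairs, weights=weights)[0]
                update(m, k, j, +1)
                assign[d][i] = (k, j)
    return cwt, cat


def estimates(cwt, cat, alpha, beta):
    """Point estimates phi[j][m] = P(word m | topic j), theta[k][j] = P(topic j | author k)."""
    vocab_size, n_topics = len(cwt), len(cwt[0])
    ct = [sum(cwt[m][j] for m in range(vocab_size)) for j in range(n_topics)]
    phi = [[(cwt[m][j] + beta) / (ct[j] + vocab_size * beta) for m in range(vocab_size)]
           for j in range(n_topics)]
    theta = [[(row[j] + alpha) / (sum(row) + n_topics * alpha) for j in range(n_topics)]
             for row in cat]
    return phi, theta


if __name__ == "__main__":
    vocab = ["river", "stream", "bank", "money", "loan"]
    ids = {w: i for i, w in enumerate(vocab)}
    # Hypothetical toy corpus in the spirit of the river/bank example.
    texts = [["river", "stream", "bank", "river"], ["stream", "bank", "river", "bank"],
             ["money", "loan", "bank", "money"], ["loan", "bank", "money", "loan"]]
    docs = [[ids[w] for w in t] for t in texts]
    authors = [[d] for d in range(len(docs))]  # one unique author per doc: plain topic model
    cwt, cat = author_topic_gibbs(docs, authors, n_authors=len(docs),
                                  vocab_size=len(vocab), n_topics=2)
    phi, _ = estimates(cwt, cat, alpha=0.5, beta=0.01)
    for j, row in enumerate(phi):
        top = sorted(range(len(vocab)), key=lambda m: -row[m])[:3]
        print(f"topic {j}:", ", ".join(f"{vocab[m]} {row[m]:.2f}" for m in top))
```

The sketch returns only the final sample; averaging estimates over several samples taken after burn-in is a common refinement.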
Topic Model: Inference
[Figure: 16 toy documents built from the words River, Stream, Bank, Money, and Loan]
- Can we recover the original topics and topic mixtures from this data?
Slide Credit: Padhraic Smyth, UC Irvine

Example of Gibbs Sampling
- Assign word tokens randomly to topics (token colors distinguish topic 1 from topic 2)
[Figure: the 16 documents with randomly assigned tokens]
Slide Credit: Padhraic Smyth, UC Irvine

After 1 iteration
- Apply the sampling equation to each word token
[Figure: token assignments after 1 iteration]
Slide Credit: Padhraic Smyth, UC Irvine

After 4 iterations
[Figure: token assignments after 4 iterations]
Slide Credit: Padhraic Smyth, UC Irvine

After 32 iterations
[Figure: token assignments after 32 iterations]
- Topic 1: stream 0.40, bank 0.35, river 0.25
- Topic 2: bank 0.39, money 0.32, loan 0.29
Slide Credit: Padhraic Smyth, UC Irvine

Results
Tested on scientific papers:

| Dataset  | V (vocabulary) | D (documents) | K (authors) | Topics | Tokens     |
|----------|----------------|---------------|-------------|--------|------------|
| NIPS     | 13,649         | 1,740         | 2,037       | 100    | 2,301,375  |
| CiteSeer | 30,799         | 162,489       | 85,465      | 300    | 11,685,514 |

Evaluating Predictive Power
- Perplexity measures a model's ability to predict the words of new, unseen documents
- Lower is better

Results: Perplexity
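Held-out perplexity is usually computed as

$$\text{perplexity} = \exp\left(-\frac{\sum_d \log p(\mathbf{w}_d)}{\sum_d N_d}\right), \qquad p(\mathbf{w}_d) = \prod_i \sum_j \theta_{dj}\,\phi_{j w_i}.$$

The Python below is a minimal sketch, assuming point estimates of φ and of each test document's topic mixture θ_d are already available; how θ_d is obtained for unseen documents is left open, and the evaluation behind the talk's results may differ. Under the author-topic model with point estimates, p(w | d) averages over the document's authors, which equals using the uniform average of their topic distributions; `author_mixture` is a hypothetical helper for that step.

```python
import math


def perplexity(test_docs, doc_topic, phi):
    """Held-out perplexity: exp(-(total log-likelihood) / (number of tokens)).

    test_docs: list of documents, each a list of word ids
    doc_topic: doc_topic[d][j] = P(topic j | test document d)
    phi:       phi[j][m] = P(word m | topic j)
    Assumes every word gets nonzero probability (a smoothed phi ensures this).
    """
    log_lik, n_tokens = 0.0, 0
    for doc, theta in zip(test_docs, doc_topic):
        for m in doc:
            p = sum(theta[j] * phi[j][m] for j in range(len(phi)))
            log_lik += math.log(p)
            n_tokens += 1
    return math.exp(-log_lik / n_tokens)


def author_mixture(author_ids, theta_authors):
    """Hypothetical helper: uniform average of the authors' topic distributions,
    i.e. the document's topic mixture under the author-topic model."""
    n_topics = len(theta_authors[0])
    return [sum(theta_authors[k][j] for k in author_ids) / len(author_ids)
            for j in range(n_topics)]


if __name__ == "__main__":
    # Sanity check: uniform topics over a 4-word vocabulary give every token p = 1/4.
    phi_uniform = [[0.25] * 4, [0.25] * 4]
    print(perplexity([[0, 1, 2, 3]], [[0.5, 0.5]], phi_uniform))  # approximately 4.0

    # A two-author document whose authors each favour one topic.
    theta_d = author_mixture([0, 1], [[0.9, 0.1], [0.2, 0.8]])
    print(theta_d)
```

A model that gives every token probability 1/V has perplexity V, which is one way to read the number: lower perplexity means the model is, on average, less uncertain about each word.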
Recap
- First: author model, topic model
- Then: author-topic model
- Next: integrating topics and syntax

Integrating Topics & Syntax
- Probabilistic models
  - Short-range dependencies: syntactic constraints, represented as distinct syntactic classes (HMM, probabilistic CFGs)
  - Long-range dependencies: semantic constraints, represented as probability distributions (Bayes model, topic model)
- New idea: use both

How to Integrate These?
- Mixture of models: each word exhibits either short-range or long-range dependencies
- Product of models: each word exhibits both short-range and long-range dependencies
- Composite model (asymmetric)
  - All words exhibit short-range dependencies
  - A subset of words also exhibits long-range dependencies

The Composite Model 1
- Capturing asymmetry
  - Replace the probability distribution over words of one syntactic class with the semantic model
  - The syntactic model chooses when to emit a content word
  - The semantic model chooses which word to emit
- Methods
  - Syntactic component: HMM
  - Semantic component: topic model

Generating Phrases
[Figure: HMM with transition probabilities between classes; classes include prepositions (in, with, for, on, ...), verbs (used, trained, obtained, described, ...), and a semantic class whose topics include (network, neural, output, networks, ...), (image, images, object, objects, ...), and (kernel, support, svm, vector, ...)]
- Sample phrases: "network used for images", "image obtained with kernel", "output described with objects", "neural network trained with svm images"

The Composite Model 2 (Graphical)
[Figure: the document's distribution over topics generates topics z1-z4; each word w1-w4 depends on its topic and its class; classes c1-c4 form a chain]

The Composite Model 3
- θ^(d): document d's distribution over topics
- Transitions between classes c_{i-1} and c_i follow the distribution π^(c_{i-1})
- A document is generated as follows. For each word w_i in the document:
  1. Draw z_i from θ^(d)
  2. Draw c_i from π^(c_{i-1})
  3. If c_i = 1, draw w_i from φ^(z_i); otherwise draw w_i from φ^(c_i)

Results
- Tested on the Brown corpus (tagged with word types) and on the concatenated Brown and TASA corpus
- HMM and topic model with 20 classes (a start/end marker class plus 19 classes) and T = 200 topics

Results
- Identifying syntactic classes and semantic topics: clean separation observed
- Identifying function words and content words: e.g., "control" can be a plain verb (syntax) or a semantic word
- Part-of-speech tagging: identifying the syntactic class
- Document classification: Brown corpus, 500 documents in 15 groups; results similar to the plain topic model

Extensions to Topic Model
- Integrating link information (Cohn, Hofmann 2001)
- Learning topic hierarchies
- Integrating syntax and topics
- Integrating authorship information with content (author-topic model)
- Grade-of-membership models
- Random sentence generation

Conclusion
- Identifying a document's latent structure
- Aspects of a document that are modeled:
  - Content: topic model
  - Authorship: author-topic model
  - Syntactic constructs: HMM
  - Semantic associations

Acknowledgements
- Prof. Rajeev Motwani: advice and guidance regarding topic selection
- T. K. Satish Kumar: help on probabilistic models

Thank you!

References
Primary
- Steyvers, M., Smyth, P., Rosen-Zvi, M., & Griffiths, T. (2004). Probabilistic Author-Topic Models for Information Discovery. The Tenth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Seattle, Washington.
- Steyvers, M., & Griffiths, T. Probabilistic Topic Models. http://psiexp.ss.uci.edu/research/papers/SteyversGriffithsLSABookFormatted.pdf
- Rosen-Zvi, M., Griffiths, T., Steyvers, M., & Smyth, P. (2004). The Author-Topic Model for Authors and Documents. 20th Conference on Uncertainty in Artificial Intelligence, Banff, Canada.
- Griffiths, T. L., Steyvers, M., Blei, D. M., & Tenenbaum, J. B. (in press). Integrating Topics and Syntax. Advances in Neural Information Processing Systems 17.
- Griffiths, T., & Steyvers, M. (2004). Finding Scientific Topics. Proceedings of the National Academy of Sciences, 101 (suppl. 1), 5228-5235.