Introduction to Design

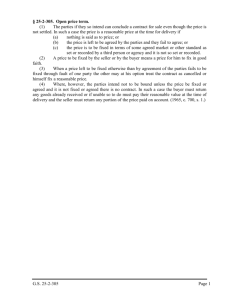

IT Safety and Reliability
Professor Matt Thatcher

Agenda for Today
Brief review of the Case of the Killer Robot
Overview of the:
  – Therac-25 accidents
  – Denver International Airport Baggage System
Discussion of “How Good Is Good Enough?”
  – what are our social responsibilities?

Killer Robot Summary
The general problems
  – simple programming error
  – inadequate safety engineering and testing
  – poor HCI design
  – lax culture of safety in Silicon Techtronics
What would change if you replaced one of the characters with an “ethical” person?
  – would any of these problems have been solved?

Matt’s Humble Opinions
Source of the problems
  – economic incentives
    » time pressures
        exclusive focus on meeting unrealistic deadlines
        there was no payoff to the development team based on usability or safety measures
        valuing stock price over operator safety
    » cut corners and keep your job; challenge decisions and get fired
  – company culture
    » poor communication all along the company hierarchy
    » lots of unproductive, unresolved, and unaddressed conflict
    » inability to consider alternatives
    » choice of waterfall model instead of prototyping model as development methodology
  – inexperience and lack of critical skills in key positions
    » Johnson (hardware guy), Reynolds (data processing guy), Samuels (no experience in physics)
Who is most responsible for:
  – setting appropriate economic incentives
  – creating an appropriate culture
  – putting people with the right skills into the right jobs

Therac-25 Accidents (Basis of the Killer Robot Case)
What was Therac-25?
  – released in 1983
  – computerized radiation therapy machine used to treat cancer patients
Who developed it?
  – Atomic Energy of Canada, Ltd and CGR (French-based company)
What were the key advances of it over its predecessors (Therac-6 and Therac-20)?
  – move to more complete software-based control
    » faster set-up
    » safety checks were now controlled by software (instead of mechanical interlocks)

Therac-25 Accidents (What Happened?)
Massively overdosed patients at least 6 times (3 died, 3 seriously disabled)
  – June 1985
    » Marietta, Ga (Linda Knight, 61)
  – July 1985
    » Hamilton, Ont (Donna Gartner, 40)
  – December 1985
    » Yakima, Wash (Janis Tilman)
  – March 1986
    » Tyler, Tx (Isaac Dahl, 33)
  – April 1986
    » Tyler, Tx (Daniel McCarthy)
  – January 1987
    » Yakima, Wash (Anders Engman)

Therac-25 Accidents (Example of Contributing UI Problems)
The technician got the patient set up on the table and went down the hall to start the treatment. She sat down at the terminal:
    » hit “x” to start the process
        she realized she had made a mistake, since she needed to treat the patient with the electron beam, not the X-ray beam
    » hit the “Up” arrow,
    » selected the “Edit” command,
    » hit “e” for electron beam, and
    » hit “enter” (signifying she was ready to start treatment)
    » the system showed a “beam ready” prompt
    » she hit “b” to turn the beam therapy on
    » the system gave her an error message (Malfunction 54)
    » she overrode the error message
It turns out that the UI showed electron mode, but the machine was actually in a “hybrid” mode
  – it delivered more than 125 times the normal dose to the patient

Therac-25 Accidents (What Were the Problems?)
The Problems
  – simple programming errors
  – inadequate safety engineering
    » ignored the software risks (almost no unit or integration testing at all)
    » operators were told it was impossible to overdose a patient
  – poor HCI design
  – lax culture of safety in the manufacturing company
  – problems were not reported quickly to the manufacturer or the FDA
    » prompted a 1990 federal law

Friendly-Fire Tragedy (Afghanistan)

Denver International Airport’s Baggage System

Other System Failures
Problems for individuals
  – billing inaccuracies (electricity, auto insurance)
  – database inaccuracies (sex crimes DB, 2000 Florida election)
System failures
  – AT&T (1990)
  – Galaxy 4 satellite (1998)
  – London Stock Exchange (2000)
  – Warehouse Manager
  – New York School District

Increasing Reliability and Safety
Overconfidence
Software reuse
Professional techniques
UI and human factors
Redundancy and self-checking
Warranties
Regulation of safety applications
Self-regulation
Take responsibility!!!

Critical Observation
If it is true that:
  – information technology affects society
AND
  – some choices in computing design are not completely constrained by mathematics, physics, chemistry, etc.
THEN
  – designers, implementers, teachers, and managers of technology make choices that affect society

Principal Actors in Software Design
Software Provider
  – person or organization that creates the software
Software Buyer
  – person or organization responsible for obtaining the software for its intended use
Software User
  – person actually using the software
Society
  – people, other than providers, buyers, or users, who can be affected by the software

Obligations of the Software Provider
The Provider (itself)
  – profit and good reputation
The Buyer
  – help buyer make an informed decision
  – set testing goals and meet them
  – provide warnings about untested areas of the software
  – inform user about testing results and shortcomings
  – provide a reasonable warranty on functionality and safety
The User
  – education/training about use and limitations of software
  – provide technical support and maintenance
  – provide reasonable protections
  – provide clear instructions and user manuals
Biggest Responsibility
  – answer the question “How Good Is Good Enough?”

Obligations of the Software Buyer
The Provider
  – respect copyrights and don’t steal
  – pay a fair price for the product
  – use the product for the correct purpose
  – don’t blame the provider for incorrect use
  – make users available for observations, testing, and evaluation
The Buyer (itself)
  – learn limitations of the software
  – perform systems acceptance testing (and audit testing)
The User
  – ensure proper education/training is provided
  – ensure proper technical support and maintenance is provided
  – represent the user during development (be a communication link between user and developer)
  – make sure users are included in the design process
  – provide reasonable protections (and a safe working environment)
Biggest Responsibility
  – make sure software is used for intended purpose (think about biometrics)

Obligations of the Software User
The Provider
  – respect copyrights and don’t steal
  – read documentation
The Buyer
  – communicate problems and provide feedback (about training, UI, functionality, etc.)
  – ensure appropriate use of software
  – make a good faith effort to learn the software and its limitations
The User (herself)
  – help in the training process
Biggest Responsibility
  – ensure that the software continues to perform as intended and report problems

Final Observation
Software is treated like other commercial products
Software differs in many critical respects from many other products
  – serious software errors can remain after rigorous testing because of the logical complexity of software
  – it is difficult to construct uniform software standards that can be subjected to regulation and inspection
  – software affects an increasingly large number of people due to the proliferation and flexibility of computers
  – any group can provide software since set-up costs are low

Summary
Computer professionals must stop thinking of themselves as technicians
Just like medical doctors, computer professionals are agents of change in people’s lives
Computer professionals have some ethical responsibility for the changes they are creating in society
But this responsibility is complicated because our position as workers in industry diffuses accountability
We must recognize that part of our job includes larger issues of social responsibility
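
Illustration: redundancy and self-checking
The Therac-25 slides describe safety checks that moved from mechanical interlocks into software, a screen that showed one beam mode while the machine was in another, and an error message the operator could override. As a minimal sketch of the “redundancy and self-checking” item listed under Increasing Reliability and Safety, the Python below compares the displayed mode with an independently read hardware mode and raises an error that cannot be dismissed. Every name here (`MachineState`, `check_before_beam`, `fire_beam`, the dose fields) is hypothetical and chosen for illustration; it is not a reconstruction of the Therac-25 software.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    XRAY = "x"
    ELECTRON = "e"


@dataclass
class MachineState:
    """Hypothetical snapshot of a treatment machine (illustrative fields only)."""
    displayed_mode: Mode   # what the operator's screen shows
    hardware_mode: Mode    # what an independent sensor reads back
    dose: float            # requested dose, arbitrary units
    max_dose: float        # hard upper limit for the selected mode


class InterlockError(Exception):
    """Raised when a safety check fails; there is no operator override path."""


def check_before_beam(state: MachineState) -> None:
    """Refuse to proceed unless the redundant readings agree and the dose is in range."""
    if state.displayed_mode is not state.hardware_mode:
        raise InterlockError("screen and hardware disagree on beam mode")
    if not (0 < state.dose <= state.max_dose):
        raise InterlockError("requested dose is outside the allowed range")


def fire_beam(state: MachineState) -> str:
    # The check raises an exception instead of showing a dismissible message.
    check_before_beam(state)
    return f"beam on: {state.hardware_mode.name} at dose {state.dose}"
```

Calling `fire_beam` with matching modes returns a status string, while a mismatch between `displayed_mode` and `hardware_mode` stops the call with `InterlockError`. A software check like this is only one layer; the slides' point about mechanical interlocks suggests it should complement, not replace, independent hardware protection.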