CARTA Assessment Retreat - Office of Academic Planning

advertisement

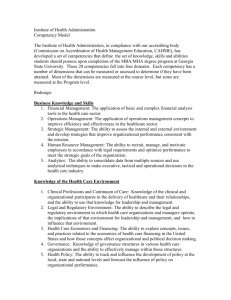

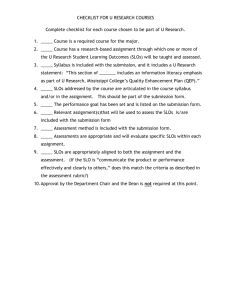

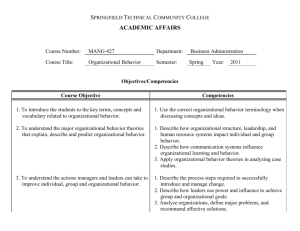

CARTA Assessment Retreat
May 4, 2012
Office of Academic Planning and Accountability (APA)
Florida International University

Introduction
• Susan Himburg, Director of Accreditation, APA
• Mercedes Ponce, Director of Assessment, APA
• Katherine Perez, Associate Director of Assessment, APA
• Barbara Anderson & Claudia Grigorescu, GAs, APA

Assessment in FIU

Goal Setting Activity
• Retreat Goals: What would you like to learn and accomplish in this retreat?
• Unit Goals: What would you like to accomplish within your unit to enhance your current assessment processes?

Retreat Agenda
9:00 a.m. – 9:15 a.m. Introduction
• Continental Breakfast
• Welcome
9:15 a.m. – 10:00 a.m. Assessment in FIU
• Activity: Retreat Goal Setting
• Overview of Assessment
• SLOs, POs, ALCs, CCOs, Global Learning
• TracDat Basics
10:00 a.m. – 11:00 a.m. Matrixes I: Effective Outcomes
• Streamlining Outcomes with Program Goals
• Tying Outcomes to Curriculum
• Activity: Writing SLOs and POs
11:00 a.m. – 12:00 p.m. Matrixes II: Effective Methods
• Choosing Instruments
• Introduction to Rubrics
• Activity: Creating a Rubric
• Using Results for Improvements
• Activity: Developing Improvement Strategies
12:00 p.m. – 1:00 p.m.
• Q & A Session
• Lunch

Overview of Assessment: Structure
• Assessment Cycles
• Assessment Review Process
• Types of Assessments
  – Programs
  – Colleges/Schools
  – Administrative Units
• Continuous Improvement
  – Assessment-based improvement strategies
  – Documenting improvement strategies
  – Coding and analyzing improvement strategies

Overview of Assessment: Cycle
(Cycle diagram: Outcomes → Assessment Methods → Data Collection → Analysis of Results → Improvement Strategies)
Step 1: Identify Specific Outcomes
• Use the program mission and goals to help identify outcomes
• Use the SMARTER criteria to write outcomes
Step 2: Determine Assessment Methods
• Determine how to assess the learning outcomes within the curriculum (by curriculum mapping)
Step 3: Gather Evidence
• Collect evidence
Step 4: Review & Interpret Results
• Organize and process data
• Discuss the significance of the data and report it
Step 5: Recommend Improvement Actions
• Collaborate with faculty to develop improvement strategies based on results
Step 6: Implement Actions and Reassess
• Follow up on improvement strategies, implement them, and report progress
• Restart the cycle to assess the impact of actions

Overview of Assessment: Timeline
Cycle A | Deadline | Task Due
Fall 2012 | Oct 15 | 2010–2012 Complete Reports; 2012–2014 Plans
Summer 2013 | May 15 | 2012–13 Interim Reports (results only)
Fall 2014 | Oct 15 | 2012–2014 Complete Reports; 2014–2016 Plans

Overview of Assessment: Assessment Committee
• The University Assessment Committee is composed of two branches:
  – Academic
  – Administrative
• Role
  – Represent their academic and administrative units
  – Provide their units with assessment guidance:
    o Best practices
    o Deadlines
    o Connecting faculty & staff to appropriate assessment resources
  – Enhance the culture of assessment at FIU
  – Engage in dialogue with fellow experts to improve assessment practices

Overview of Assessment: Types of Assessment
• Academic Programs
  – Student Learning Outcomes (SLOs)
    o All academic programs
    o Miami-based programs
    o On-site, off-shore, and distance learning programs
  – Academic Learning Compacts (ALCs)
  – Program Outcomes (POs)
    o All academic programs
    o Miami-based programs
    o On-site, off-shore, and distance learning programs
  – Core Curriculum Outcomes (CCOs)
  – Global Learning (GL)
• Administrative Assessments for Academic Units
  – Assessment units included:
    o Dean’s Offices
    o Centers/Institutes

Institutional Assessment of Learning
Program-Level Outcomes
• Student Learning Outcomes (SLOs): skills assessed include content knowledge, critical thinking, communication skills, and technology
• Academic Learning Compacts (ALCs): required by the Florida Board of Governors (FLBOG) for each baccalaureate degree program
Curriculum Maps
Course-Level Outcomes
• Core Curriculum Outcomes (CCOs): outcomes aligned to one of the core standards
• Global Learning Outcomes (GL): specialized GL-approved courses, with outcomes aligned to each of the 3 GL learning outcomes

Student Learning Outcomes (SLOs)
• Student Learning Outcomes (SLOs) are program-related outcomes.
• SLOs focus on the knowledge and skills students are expected to have upon completing an academic degree program.
• “A learning outcome is a stated expectation of what someone will have learned” (Driscoll & Wood, 2007, p. 5)
• “A learning outcome statement describes what students should be able to demonstrate, represent, or produce based on their learning histories” (Maki, 2004, p. 60)
• “A learning outcome describes our intentions about what students should know, understand, and be able to do with their knowledge when they graduate” (Huba & Freed, 2000, pp. 9-10)
What should my students know or be able to do at the time of graduation?
Global Learning Outcomes (GL)
Global Learning for Global Citizenship = FIU's Quality Enhancement Plan (QEP)
• A multi-year initiative that enables students to act as engaged global citizens
• An integrated global learning curriculum and co-curriculum
• A minimum of two GL-designated courses for undergraduate students
The GL outcomes are:
• Global Awareness: Knowledge of the interrelatedness of local, global, international, and intercultural issues, trends, and systems
• Global Perspective: Ability to develop a multi-perspective analysis of local, global, international, and intercultural problems
• Global Engagement: Willingness to engage in local, global, international, and intercultural problem solving

Program Outcomes (POs)
• Program Outcomes (POs) focus on expected programmatic changes that will improve overall program quality for all stakeholders (students, faculty, staff).
• Program outcomes illustrate what you want your program to do. These outcomes differ from learning outcomes in that you discuss what it is that you want your program to accomplish. (Bresciani, n.d., p. 3)
• Program outcomes help determine whether the services, activities, and experiences of and within a program positively impact the individuals it seeks to serve.
• POs emphasize areas such as recruitment, professional development, advising, hiring processes, and/or satisfaction rates.
How can I make this program more efficient?

Administrative Assessment (AAs)
• Administrative areas:
  – Dean’s Office
  – Centers/Institutes
• Outcomes are aligned to:
  – Unit mission/vision
  – Annual goals
  – University mission/vision
  – Strategic plan
• Outcomes focus on each of the following areas (all 4 are required for the Dean’s Office):
  – Administrative Support Services
  – Educational Support Services
  – Research
  – Community Service
• Student learning is also assessed for units that provide learning services to students (e.g., workshops, seminars).
Assessment Tracking
• Microsoft Word forms
• TracDat: http://intranet.fiu.edu/tracdat/

Matrixes I: Effective Outcomes

Student Learning Outcomes (SLOs): SMARTER Criteria
• Specific – Is the expected behavior and skill clearly indicated?
• Measurable – Can the knowledge/skill/attitude be measured?
• Attainable – Is it viable given the program's courses and resources?
• Relevant – Does it pertain to the major goals of the program?
• Timely – Can graduates achieve the outcome before graduation?
• Evaluate – Is there an evaluation plan?
• Reevaluate – Can it be evaluated again after improvement strategies have been implemented?

Student Learning Outcomes (SLOs)
Student learning outcomes (cognitive, practical, or affective):
1. Can be observed and measured
2. Relate to student learning toward the end of the program (the graduating student)
3. Reflect an important higher-order concept
Formula: Who + Action Verb + What
Example: Theater majors will analyze and compare the relationships among the elements of theatrical performance: writing, directing, acting, design, and the audience function.

Student Learning Outcomes (SLOs)
Strong Examples
• Theater majors will analyze and compare the relationships among the elements of theatrical performance: writing, directing, acting, design, and the audience function.
• Students will be able to calculate equivalent exposures, using F-stop and shutter speeds.
• Students will critically analyze building designs and conduct post-occupancy evaluation studies.
• Graduates will understand and evaluate interpretations of any of a variety of man-made forms in Western civilization from pre-history to Imperial Rome.
Weak Examples
• Students will be able to demonstrate skills from their art form.
• Musicians tend to be creative, in tune with their minds, bodies, and emotions.
• Appreciate the social, political, religious, and philosophical contexts of art objects.
• Graduates will demonstrate a basic knowledge of artistic media and performance styles from both western and non-western traditions.
• Develop an awareness of the cultural and historical dimensions of the wide variety of man-made forms in the period covered by this class.

Program Outcomes (POs)
Program outcomes (efficiency measures):
1. Can be observed and measured
2. Relate to program-level goals that do not concern student learning (e.g., student services, graduation, retention, faculty productivity, and other similar areas)
Formula: Who + Action Verb + What
Example: Full-time students will graduate from the Art History program within 6 years of program admission.

Program Outcomes (POs)
Strong Examples
• The department’s advising office will schedule student appointments within 2 weeks of initial contact.
• Students will be satisfied with services provided by the career placement office in the Department of Architecture.
• Faculty in Music will be involved with a minimum of 2 public events per semester.
Weak Examples
• Graduation rates will increase.
• Surveys will be used to assess student satisfaction.
• Career services will work with student placements.

Activity: Writing SLOs and POs

Streamlining Outcomes with Program Goals
Program Mission and Goals
• Question: Do the mission and goals match the knowledge/skills expected of graduates?
• Task: Break down the mission and goals; verify that they are reflected in the outcomes.
Accreditation Principles
• Question: What are the competencies required for assessment, and how do they match my program's mission/goals?
• Task: Review the competencies required for accreditation or by other constituencies; streamline requirements and outcomes.
Course Outcomes
• Question: How are the program’s learning outcomes reflected in the courses?
• Task: Review course syllabi and outcomes to check for alignment; develop a curriculum map.
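As a rough illustration of the Who + Action Verb + What formula from the SLO and PO slides, the Python sketch below assembles an outcome statement from its three parts and reports which parts are blank. The `OutcomeStatement` class is hypothetical (it is not a TracDat or FIU form layout), and the check only catches a missing part, such as the absent "Who" in the weak example "Appreciate the social, political, religious, and philosophical contexts of art objects"; it cannot judge whether an outcome meets the SMARTER criteria.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OutcomeStatement:
    """An outcome written as Who + Action Verb + What (hypothetical structure)."""
    who: str          # e.g., "Theater majors", "Full-time students"
    action_verb: str  # an observable verb, e.g., "analyze", "calculate"
    what: str         # the knowledge, skill, or program result

    def missing_parts(self) -> list[str]:
        """Return the names of formula parts that are empty or whitespace."""
        parts = {"who": self.who, "action verb": self.action_verb, "what": self.what}
        return [name for name, text in parts.items() if not text.strip()]

    def render(self) -> str:
        """Join the parts into one sentence, refusing incomplete outcomes."""
        missing = self.missing_parts()
        if missing:
            raise ValueError("outcome is missing: " + ", ".join(missing))
        return f"{self.who} will {self.action_verb} {self.what}."


slo = OutcomeStatement("Students", "calculate",
                       "equivalent exposures, using F-stop and shutter speeds")
po = OutcomeStatement("Full-time students", "graduate",
                      "from the Art History program within 6 years of program admission")
weak = OutcomeStatement("", "appreciate",
                        "the social, political, religious, and philosophical contexts of art objects")

print(slo.render())
print(po.render())
print(weak.missing_parts())
```

Keeping the three parts as separate fields makes it easy to review each one on its own, for example whether the verb is observable, before the sentence is entered into a report.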
Tying Outcomes to Curriculum: Curriculum Maps
Curriculum maps help identify where in the curriculum learning outcomes are addressed and provide a means to determine whether the elements of the curriculum are aligned.
(Diagram: Planning Curriculum, Learning Outcomes, Identifying Gaps, Improvement Areas, Measures)

Tying Outcomes to Curriculum: Curriculum Maps
1. Collect all relevant or required information (e.g., course syllabi, curriculum requirements, and major learning competencies).
2. Collaborate with faculty and staff members: delineate where the learning outcomes are taught, reviewed, reinforced, and/or evaluated within each of the required courses.
3. Identify major assignments within courses: discuss how accurately they measure the learning outcomes.
4. Create a curriculum map: courses on one axis and learning outcomes on the other.
5. Make changes as appropriate if there are any gaps in teaching or assessing learning outcomes.

Tying Outcomes to Curriculum: Curriculum Maps
• Introduced = students are introduced to a particular outcome
• Reinforced = the outcome is reinforced, and certain courses allow students to practice it more
• Mastered = students have mastered a particular outcome
• Assessed = evidence/data are collected, analyzed, and evaluated for program-level assessment

Example curriculum map*
Columns (courses): Introductory Course, Methods Course, Required Course 1, Required Course 2, Required Course 3, Required Course 4, Capstone Course
Rows (competency/skill): Content SLO 1–3, Critical Thinking SLO 1–2, Communication SLO 1–2, Integrity/Values SLO 1–2
Cells: Introduced, Reinforced, or Mastery/Assessed
*Adapted from University of West Florida, Writing Behavioral, Measurable Student Learning Outcomes, CUTLA Workshop, May 16, 2007.

Matrixes II: Effective Methods

Choosing Assessment Measures/Instruments
1. Identify Assessment Needs
• What are you trying to measure or understand? This can be anything from student learning artifacts to program efficiency to administrative objectives.
• Is this skill or proficiency a cornerstone of what every graduate in my field should know or be able to do?
2. Match Purpose with Tools
• What type of tool would best measure the outcome (e.g., assignment, exam, project, or survey)?
• Do you already have access to such a tool? If so, where and when is it collected?
3. Define Use of Assessment Tool
• When and where do you distribute the tool (e.g., in a capstone course right before graduation)?
• Who uses the tool (e.g., students, alumni)?
• Where will the participants complete the assessment?
• How often do you or will you use the tool (e.g., every semester or annually)?
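The curriculum-map procedure under Tying Outcomes to Curriculum ends with looking for gaps in teaching or assessing outcomes. A minimal Python sketch of that check follows, assuming a map stored as course -> outcome -> set of codes (I, R, M, A from the map key) and reading "gap" as an outcome that no course marks Introduced or no course marks Assessed. The function name `find_gaps` and the course placements in `example_map` are hypothetical and do not reproduce the retreat's example map.

```python
# Key from the curriculum-map slide: I = Introduced, R = Reinforced,
# M = Mastered, A = Assessed.
VALID_CODES = {"I", "R", "M", "A"}


def find_gaps(curriculum_map: dict[str, dict[str, set[str]]],
              outcomes: list[str]) -> dict[str, list[str]]:
    """Flag outcomes that no course introduces or no course assesses.

    curriculum_map maps course -> {outcome -> set of codes}; a course that
    does not address an outcome simply omits it.
    """
    gaps: dict[str, list[str]] = {}
    for outcome in outcomes:
        codes_seen: set[str] = set()
        for course, cells in curriculum_map.items():
            codes = cells.get(outcome, set())
            unknown = codes - VALID_CODES
            if unknown:
                raise ValueError(f"{course}/{outcome}: unknown codes {sorted(unknown)}")
            codes_seen |= codes
        problems = []
        if "I" not in codes_seen:
            problems.append("never introduced")
        if "A" not in codes_seen:
            problems.append("never assessed")
        if problems:
            gaps[outcome] = problems
    return gaps


# Hypothetical placements for illustration only; not the retreat's example map.
example_map = {
    "Introductory Course": {"Content SLO 1": {"I"}, "Critical Thinking SLO 1": {"I"}},
    "Required Course 1": {"Content SLO 1": {"R"}, "Communication SLO 1": {"I"}},
    "Capstone Course": {"Content SLO 1": {"M", "A"}, "Critical Thinking SLO 1": {"R"}},
}
outcomes = ["Content SLO 1", "Critical Thinking SLO 1",
            "Communication SLO 1", "Integrity/Values SLO 1"]

for outcome, problems in find_gaps(example_map, outcomes).items():
    print(f"{outcome}: {', '.join(problems)}")
```

Passing the full outcome list separately matters: an outcome that appears in no course at all (Integrity/Values SLO 1 here) would otherwise never show up in the map and could not be flagged.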
Understanding Types of Measurements
Direct versus Indirect Measures
• Direct Measure: Learning is assessed with tools that capture direct observations of learning, such as assignments, exams, and portfolios. These are precise and effective at determining whether students have learned the competencies defined in the outcomes.
• Indirect Measure: Learning is assessed with tools that capture perspectives and opinions about learning, such as surveys, interviews, and evaluations. These provide supplemental details that may help a program/department understand how students think about learning and the program's strengths and weaknesses.
Program Measures versus Course Measures
• Program Measure: Provides data at the program level and enables the department to understand the overall learning experience; includes data from exit exams and graduation surveys.
• Course Measure: Provides data at the course level and enables professors to determine the competencies achieved by the end of a course; includes data from final projects/presentations and pre-post exams.
Formative versus Summative Measures
• Formative Measures: Assess learning over a specific timeline, generally throughout the academic semester or year.
• Summative Measures: Assess learning at the end of a semester or year, or at graduation.

Examples of Measures/Instruments
Direct Measures
• Standardized exams
• Exit examinations
• Portfolios
• Pre-tests and post-tests
• Locally developed exams
• Papers
• Oral presentations
• Behavioral observations
• Thesis/dissertation
• Simulations/case studies
• Videotaped/audiotaped assignments
Course Level
• Essays
• Presentations
• Minute papers
• Embedded questions
• Pre-post tests
Indirect Measures
• Surveys or questionnaires
  – Student perception
  – Alumni perception
  – Employer perception
• Focus groups
• Interviews
• Student records
Program Level
• Portfolios
• Exit exams
• Graduation surveys
• Discipline-specific national exams

Institution-Level Assessments
• National Survey of Student Engagement (NSSE)
• Faculty Survey of Student Engagement (FSSE)
• Alumni Survey
• Graduating Master’s and Doctoral Student Survey
• Graduating Senior Survey
• Student Satisfaction Survey
• Global Learning Perspectives Inventory
• Proficiency Profile
• Case Response Assessment
(Kuh & Ikenberry, 2009, p. 10)

Introduction to Rubrics
Definition
• Rubrics are tools used to score or assess student work using well-defined criteria and standards.
Common Uses
• Evaluating essays, short-answer responses, portfolios, projects, presentations, and other similar artifacts
Benefits
• Clear learning expectations for current and future faculty teaching the course
• Transparent expectations for students
• Meaningful contextual data, as opposed to only grades or scores
• Clearer feedback to students on their performance (if scored rubrics are handed back to students)
• Usefulness for measuring creativity, critical thinking, and other competencies requiring deep, multidimensional skills/knowledge
• Increased inter-rater reliability through clear guidelines for assessing student learning
• Easy, repeated use over time
• Inexpensive development and implementation

Steps for Developing Rubrics
1. Identify Competencies
• Narrow down the most important learning competencies you are trying to measure. Ask yourself what you want students to learn and why you created the assignment.
• List the main ideas or areas that would specifically address the learning competencies you identified.
2. Develop a Scale
• Think of the types of scores that would best measure the competencies (e.g., a 5-point scale from (1) Beginning to (5) Exemplary).
• The choice of scale depends on the assignment, the competencies addressed, and the instructor's expectations.
3. Produce a Matrix
• Using the information gathered in the previous two steps, create a matrix to organize the information.
• Optional: Describe the proficiencies, behaviors, or skills each student will demonstrate for each criterion at each performance-scale ranking or score.

Rubric Template
List all of the competencies measured | Performance scale: 1 Unacceptable | 2 Acceptable | 3 Excellent | Points
Criterion 1 | Competency not demonstrated | Competency demonstrated | Competency demonstrated at an advanced level |
Criterion 2 | Competency not demonstrated | Competency demonstrated | Competency demonstrated at an advanced level |
Criterion 3 | Competency not demonstrated | Competency demonstrated | Competency demonstrated at an advanced level |
Average Points | | | |

Reporting Results
Summary of Results
• Format: narrative, tables, or charts
Analysis/Interpretation of Results
• Explain results in narrative form by interpreting them or by using qualitative analysis of the data.
Every student learning outcome must have at least:
• One set of results
• One student learning improvement strategy (use of results)

Reporting Results
Non-Examples
1. Our students passed the dissertation defense on the first attempt.
2. All the students passed the national exam.
3. Criteria met.
Examples
1. 75% of the students (n=15) achieved a 3 or better on the 5 rubric categories for the capstone course research paper. The average score was 3.45.
2. Overall, 60% of students met the criteria (n=20), with a 2.65 total average. The rubric’s 4 criteria scores were as follows:
   o Grammar: 3.10 (80% met minimum criteria)
   o Research Questions: 2.55 (65% met minimum criteria)
   o Knowledge of Topic: 2.50 (55% met minimum criteria)
   o Application of Content Theories: 2.45 (60% met minimum criteria)

Reporting Results
Frequency of Student Results for All Four Categories of the Research Paper (N=20 Students)
Criterion | 1 Novice | 2 Apprentice | 3 Practitioner | 4 Expert | Total | Meeting Criteria
Grammar | N=2 (10%) | N=2 (10%) | N=8 (40%) | N=8 (40%) | 3.10 average (62 points) | 80% (n=16) met criteria
Essay Structure | N=4 (20%) | N=3 (15%) | N=11 (55%) | N=2 (10%) | 2.55 average (51 points) | 65% (n=13) met criteria
Coherence of Argument | N=2 (10%) | N=7 (35%) | N=10 (50%) | N=1 (5%) | 2.50 average (50 points) | 55% (n=11) met criteria
Research Based Evidence | N=3 (15%) | N=5 (25%) | N=12 (60%) | N=0 (0%) | 2.45 average (49 points) | 60% (n=12) met criteria
Average Total | | | | | 2.65 average score | 65% (n=13) met criteria

Reporting Results: Formulas (N = 20 students)
Percentage at a level = count / N, times 100 (e.g., 2/20 = .10; .10(100) = 10%)
Grammar
• Levels 1–4: 2/20 = 10%; 2/20 = 10%; 8/20 = 40%; 8/20 = 40%
• Points: 2(1) + 2(2) + 8(3) + 8(4) = 62; average 62/20 = 3.10
• Met criteria (levels 3 and 4): 40% + 40% = 80% (8 + 8 = 16)
Essay Structure
• Levels 1–4: 4/20 = 20%; 3/20 = 15%; 11/20 = 55%; 2/20 = 10%
• Points: 4(1) + 3(2) + 11(3) + 2(4) = 51; average 51/20 = 2.55
• Met criteria (levels 3 and 4): 55% + 10% = 65% (11 + 2 = 13)
Coherence of Argument
• Levels 1–4: 2/20 = 10%; 7/20 = 35%; 10/20 = 50%; 1/20 = 5%
• Points: 2(1) + 7(2) + 10(3) + 1(4) = 50; average 50/20 = 2.50
• Met criteria (levels 3 and 4): 50% + 5% = 55% (10 + 1 = 11)
Research Based Evidence
• Levels 1–4: 3/20 = 15%; 5/20 = 25%; 12/20 = 60%; 0/20 = 0%
• Points: 3(1) + 5(2) + 12(3) + 0(4) = 49; average 49/20 = 2.45
• Met criteria (levels 3 and 4): 60% + 0% = 60% (12 + 0 = 12)
Average Total
• Average score: (3.10 + 2.55 + 2.50 + 2.45) / 4 = 10.60 / 4 = 2.65
• Percentage meeting criteria: (80% + 65% + 55% + 60%) / 4 = 260% / 4 = 65%
• Students meeting criteria: (16 + 13 + 11 + 12) / 4 = 52 / 4 = 13
• Total meeting criteria: 2.65 average score; 65% (n=13) met criteria

Using Results for Improvements: DO / DON'T
• DO focus on making specific improvements based on faculty consensus.
  DON'T focus on simply planning improvements or on making improvements without faculty feedback.
• DO focus on improvements that will affect the associated outcome.
  DON'T focus on improvements that are unrelated to the outcome.
• DO use concrete ideas (e.g., include specific timelines, courses, activities).
  DON'T write vague ideas or plan to plan.
• DO state strategies that are sustainable and feasible.
  DON'T use strategies that are impossible to complete within two years given your resources.
• DO use strategies that can improve the curriculum and help students learn outside of courses.
  DON'T simply focus on making changes to the assessment measures used.

Using Results for Improvements: Student Learning
Curriculum Changes · Course Objectives · Within-Course Activities
• Mandate or create new courses
• Eliminate/merge course(s)
• Change degree requirements
• Change course descriptions
• Change syllabi to address specific learning outcomes
• Add new assignments to emphasize specific competencies
• Increase time spent teaching certain content
• Change themes, topics, or units

Using Results for Improvements: Student Learning
University Resources · Faculty-Student Interaction · Resources for Students
• Use outside resources to enhance student learning (e.g., refer students to the Center for Academic Excellence)
• Publish or present joint papers
• Provide feedback on student work, advising, and office hours
• Disseminate information (e.g., distribute newsletters, share publications)
• Create/maintain resource libraries (e.g., books, publications)
• Offer professional support or tutoring
• Provide computer labs or software

Using Results for Improvements: Program Outcomes
• Obtain financial resources: funding, grants, etc.
• Hire new faculty/staff
• Reduce spending
• Change recruitment efforts/tactics
• Increase enrollment
• Change policies, values, missions, or conceptual frameworks of a program or unit
• Add or expand services to improve quality
• Add or expand processes to improve efficiency

Using Results for Improvements: Program Outcomes
• Conduct research
• Gather and/or disseminate information
• Produce publications or presentations
• Create professional development opportunities
• Attend professional conferences or workshops
• Establish collaborations across stakeholders or disciplines
• Provide services or establish links to the community
• Acquire new equipment, software, etc.
• Provide resources to specific groups

Activity: Developing Improvement Strategies

Q & A Session

Thank you for attending.
Contact Us:
Katherine Perez, kathpere@fiu.edu, 305-348-1418
Departmental Information: ie@fiu.edu, 305-348-1796, PC 112
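The arithmetic behind the Reporting Results frequency table (research paper, N=20 students) can be reproduced with a short script. This is a sketch under two assumptions read off that table: every criterion is scored by the same 20 students on a 1–4 scale, and a student meets criteria at level 3 (Practitioner) or level 4 (Expert). The names `summarize`, `RESULTS`, and `MEETS_THRESHOLD` are hypothetical.

```python
from dataclasses import dataclass

# Assumption read off the frequency table: levels 3 (Practitioner) and
# 4 (Expert) are the ones counted as meeting criteria.
MEETS_THRESHOLD = 3


@dataclass(frozen=True)
class CriterionSummary:
    name: str
    points: int          # sum of level * count
    average: float       # points / N
    met: int             # students at or above MEETS_THRESHOLD
    percent_met: float   # 100 * met / N


def summarize(name: str, counts: list[int]) -> CriterionSummary:
    """counts[i] is the number of students scored at level i + 1 (1-4)."""
    n = sum(counts)
    points = sum(level * c for level, c in enumerate(counts, start=1))
    met = sum(c for level, c in enumerate(counts, start=1) if level >= MEETS_THRESHOLD)
    return CriterionSummary(name, points, points / n, met, 100 * met / n)


# Frequencies from the research-paper example (N = 20 students per criterion).
RESULTS = {
    "Grammar": [2, 2, 8, 8],
    "Essay Structure": [4, 3, 11, 2],
    "Coherence of Argument": [2, 7, 10, 1],
    "Research Based Evidence": [3, 5, 12, 0],
}

rows = [summarize(name, counts) for name, counts in RESULTS.items()]
for r in rows:
    print(f"{r.name}: {r.average:.2f} average ({r.points} points), "
          f"{r.percent_met:.0f}% (n={r.met}) met criteria")

# The "Average Total" row averages the four criterion rows.
k = len(rows)
overall_average = sum(r.average for r in rows) / k
overall_percent = sum(r.percent_met for r in rows) / k
overall_met = sum(r.met for r in rows) / k
print(f"Average total: {overall_average:.2f} average score, "
      f"{overall_percent:.0f}% (n={overall_met:.0f}) met criteria")
```

Averaging the four met counts gives 52 / 4 = 13 students, which agrees with 65% of 20. Because every criterion has the same N, averaging the criterion averages also equals total points divided by total ratings (212 / 80 = 2.65); if criteria had different Ns, the two approaches would diverge and the choice would need to be stated in the report.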