Architectural Trends - Ryerson Electrical & Computer Engineering


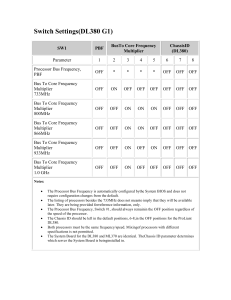

Overview of Parallel Architecture and Programming Models

What is a Parallel Computer?
A collection of processing elements that cooperate to solve large problems fast
Some broad issues that distinguish parallel computers:
• Resource Allocation:
  – how large a collection?
  – how powerful are the elements?
  – how much memory?
• Data access, Communication and Synchronization
  – how do the elements cooperate and communicate?
  – how are data transmitted between processors?
  – what are the abstractions and primitives for cooperation?
• Performance and Scalability
  – how does it all translate into performance?
  – how does it scale?

Why Parallelism?
• Provides alternative to faster clock for performance
  – Assuming a doubling of effective per-node performance every 2 years, a 1024-CPU system delivers today the performance that a single-CPU system would take 20 years to reach (1024 = 2^10, i.e. ten doublings)
• Applies at all levels of system design
• Is increasingly central in information processing
  – Scientific computing: simulation, data analysis, data storage and management, etc.
  – Commercial computing: transaction processing, databases
  – Internet applications: search; Google operates at least 50,000 CPUs, many as part of large parallel systems

How to Study Parallel Systems
History: diverse and innovative organizational structures, often tied to novel programming models
Rapidly matured under strong technological constraints
• The microprocessor is ubiquitous
• Laptops and supercomputers are fundamentally similar!
• Technological trends cause diverse approaches to converge
Technological trends make parallel computing inevitable
• In the mainstream
Need to understand fundamental principles and design tradeoffs, not just taxonomies
• Naming, Ordering, Replication, Communication performance

Outline
• Drivers of Parallel Computing
• Trends in “Supercomputers” for Scientific Computing
• Evolution and Convergence of Parallel Architectures
• Fundamental Issues in Programming Models and Architecture

Drivers of Parallel Computing
Application Needs: our insatiable need for computing cycles
• Scientific computing: CFD, Biology, Chemistry, Physics, ...
• General-purpose computing: Video, Graphics, CAD, Databases, TP...
• Internet applications: Search, e-Commerce, Clustering ...
Technology Trends
Architecture Trends
Economics
Current trends:
• All microprocessors have support for external multiprocessing
• Servers and workstations are MP: Sun, SGI, Dell, COMPAQ...
• Microprocessors are multiprocessors. Multicore: SMP on a chip

Application Trends
Demand for cycles fuels advances in hardware, and vice-versa
• This cycle drives exponential increase in microprocessor performance
• Drives parallel architecture harder: most demanding applications
Range of performance demands
• Need range of system performance with progressively increasing cost
• Platform pyramid
Goal of applications in using parallel machines: Speedup
  Speedup (p processors) = Performance (p processors) / Performance (1 processor)
For a fixed problem size (input data set), performance = 1/time
  Speedup fixed problem (p processors) = Time (1 processor) / Time (p processors)

Scientific Computing Demand
• Ever increasing demand due to need for more accuracy, higher-level modeling and knowledge, and analysis of exploding amounts of data
• Example area 1: Climate and Ecological Modeling goals
  – By 2010 or so:
    • Resolution, simulated time, and improved physics alone increase requirements by factors of 10^4 to 10^7.
    Then …
    • Reliable global warming, natural disaster and weather prediction
  – By 2015 or so:
    • Predictive models of rainforest destruction, forest sustainability, effects of climate change on ecosystems and on foodwebs, global health trends
  – By 2020 or so:
    • Verifiable global ecosystem and epidemic models
    • Integration of macro-effects with localized and then micro-effects
    • Predictive effects of human activities on earth’s life support systems
    • Understanding earth’s life support systems

Scientific Computing Demand
• Example area 2: Biology goals
• By 2010 or so:
  – Ex vivo and then in vivo molecular-computer diagnosis
• By 2015 or so:
  – Modeling based vaccines
  – Individualized medicine
  – Comprehensive biological data integration (most data co-analyzable)
  – Full model of a single cell
• By 2020 or so:
  – Full model of a multi-cellular tissue/organism
  – Purely in-silico developed drugs; personalized smart drugs
  – Understanding complex biological systems: cells and organisms to ecosystems
  – Verifiable predictive models of biological systems

Engineering Computing Demand
Large parallel machines a mainstay in many industries
• Petroleum (reservoir analysis)
• Automotive (crash simulation, drag analysis, combustion efficiency)
• Aeronautics (airflow analysis, engine efficiency, structural mechanics, electromagnetism)
• Computer-aided design
• Pharmaceuticals (molecular modeling)
• Visualization
  – in all of the above
  – entertainment (movies), architecture (walk-throughs, rendering)
• Financial modeling (yield and derivative analysis)
• etc.

Learning Curve for Parallel Applications
AMBER molecular dynamics simulation program
• Starting point was vector code for Cray-1
• 145 MFLOPS on Cray90, 406 for final version on 128-processor Paragon, 891 on 128-processor Cray T3D

Commercial Computing
Also relies on parallelism for high end
• Scale not so large, but use much more wide-spread
• Computational power determines scale of business that can be handled
Databases, online-transaction processing, decision support, data mining, data warehousing ...
TPC benchmarks (TPC-C order entry, TPC-D decision support)
• Explicit scaling criteria provided: size of enterprise scales with system
• Problem size no longer fixed as p increases, so throughput is used as a performance measure (transactions per minute or tpm)
E-commerce, search and other scalable internet services
• Parallel applications running on clusters
• Developing new parallel software models and primitives
Insight from automated analysis of large disparate data

TPC-C Results for Wintel Systems
[Figure: performance (tpmC) and price-performance ($/tpmC) by year, 1996 to 2002]
• 1996: 4-way Cpq PL 5000, Pentium Pro 200 MHz; 6,751 tpmC; $89.62/tpmC; avail. 12-1-96; TPC-C v3.2 (withdrawn)
• 1997: 6-way Unisys AQ HS6, Pentium Pro 200 MHz; 12,026 tpmC; $39.38/tpmC; avail. 11-30-97; TPC-C v3.3 (withdrawn)
• 1998: 4-way IBM NF 7000, PII Xeon 400 MHz; 18,893 tpmC; $29.09/tpmC; avail. 12-29-98; TPC-C v3.3 (withdrawn)
• 1999: 8-way Cpq PL 8500, PIII Xeon 550 MHz; 40,369 tpmC; $18.46/tpmC; avail. 12-31-99; TPC-C v3.5 (withdrawn)
• 2000: 8-way Dell PE 8450, PIII Xeon 700 MHz; 57,015 tpmC; $14.99/tpmC; avail. 1-15-01; TPC-C v3.5 (withdrawn)
• 2001: 32-way Unisys ES7000, PIII Xeon 900 MHz; 165,218 tpmC; $21.33/tpmC; avail. 3-10-02; TPC-C v5.0
• 2002: 32-way Unisys ES7000, Xeon MP 2 GHz; 234,325 tpmC; $11.59/tpmC; avail. 3-31-03; TPC-C v5.0
• 2002: 32-way NEC Express5800, Itanium2 1 GHz; 342,746 tpmC; $12.86/tpmC; avail. 3-31-03; TPC-C v5.0
Parallelism is pervasive
• Small to moderate scale parallelism very important
• Difficult to obtain snapshot to compare across vendor platforms

Summary of Application Trends
Transition to parallel computing has occurred for scientific and engineering computing
Also occurred in commercial computing
• Database and transactions as well as financial
• Scalable internet services (at least coarse-grained parallelism)
Desktop also uses multithreaded programs, which are a lot like parallel programs
Demand for improving throughput on sequential workloads
• Greatest use of small-scale multiprocessors
Solid application demand, keeps increasing with time
Key challenge throughout is making parallel programming easier
• Taking advantage of pervasive parallelism with multi-core systems

Drivers of Parallel Computing
Application Needs
Technology Trends
Architecture Trends
Economics

Technology Trends: Rise of the Micro
[Figure: relative performance (log scale, 0.1 to 100) of supercomputers, mainframes, minicomputers and microprocessors, 1965 to 1995]
The natural building block for multiprocessors is now also about the fastest!

General Technology Trends
• Microprocessor performance increases 50% - 100% per year
• Clock frequency doubles every 3 years
• Transistor count quadruples every 3 years
• Moore’s law: xtors per chip = 1.59^(year-1959) (originally 2^(year-1959))
• Huge investment per generation is carried by huge commodity market
• With every feature size scaling of n
  – we get O(n^2) transistors
  – we get O(n) increase in possible clock frequency
• We should get O(n^3) increase in processor performance.
• Do we?
• See architecture trends

Die and Feature Size Scaling
Die size growing at 7% per year; feature size shrinking 25-30%

Clock Frequency Growth Rate (Intel family)
• 30% per year

Transistor Count Growth Rate (Intel family)
• Transistor count grows much faster than clock rate: 40% per year, order of magnitude more contribution in 2 decades
• Width/space has greater potential than per-unit speed

How to Use More Transistors
• Improve single threaded performance via architecture:
  – Not keeping up with potential given by technology (next)
• Use transistors for memory structures to improve data locality
  – Doesn’t give as high returns (2x for 4x cache size, to a point)
• Use parallelism
  – Instruction-level
  – Thread level
• Bottom line: Not that single-threaded performance has plateaued, but that parallelism is natural way to stay on a better curve

Similar Story for Storage (Transistor Count) [figure]

Similar Story for Storage (DRAM Capacity) [figure]

Similar Story for Storage
Divergence between memory capacity and speed more pronounced
• Capacity increased by 1000x from 1980-95, and increases 50% per yr
• Latency reduces only 3% per year (only 2x from 1980-95)
• Bandwidth per memory chip increases 2x as fast as latency reduces
Larger memories are slower, while processors get faster
• Need to transfer more data in parallel
• Need deeper cache hierarchies
• How to organize caches?
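
The capacity and latency figures on the storage slide compound very differently over time. As a rough check, the sketch below converts the quoted 1980-95 totals (1000x capacity, 2x latency) into implied average annual rates. The helper names `annual_rate` and `growth_factor` are illustrative and not from the slides, and the slide's own per-year figures (50% and 3%) may be rounded or describe a different period.

```python
def annual_rate(total_factor: float, years: float) -> float:
    """Average yearly growth rate that compounds to total_factor over years."""
    return total_factor ** (1.0 / years) - 1.0


def growth_factor(rate_per_year: float, years: float) -> float:
    """Total growth after compounding rate_per_year for the given number of years."""
    return (1.0 + rate_per_year) ** years


YEARS = 15  # 1980 to 1995, the span quoted on the slide

capacity_rate = annual_rate(1000.0, YEARS)  # implied by "1000x from 1980-95"
latency_rate = annual_rate(2.0, YEARS)      # implied by "only 2x from 1980-95"

# How far capacity pulls ahead of latency improvement over the same period.
gap = growth_factor(capacity_rate, YEARS) / growth_factor(latency_rate, YEARS)

if __name__ == "__main__":
    print(f"implied capacity growth per year: {capacity_rate:.1%}")
    print(f"implied latency improvement per year: {latency_rate:.1%}")
    print(f"capacity/latency divergence over {YEARS} years: {gap:.0f}x")
```

Under these assumptions capacity grows by roughly 58% per year against under 5% per year for latency, which is the divergence the slide uses to argue for transferring more data in parallel and for deeper cache hierarchies.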

Similar Story for Storage
Parallelism increases effective size of each level of hierarchy, without increasing access time
Parallelism and locality within memory systems too
• New designs fetch many bits within memory chip; follow with fast pipelined transfer across narrower interface
• Buffer caches most recently accessed data
• Disks too: Parallel disks plus caching
Overall, dramatic growth of processor speed, storage capacity and bandwidths relative to latency (especially) and clock speed point toward parallelism as the desirable architectural direction

Drivers of Parallel Computing
Application Needs
Technology Trends
Architecture Trends
Economics

Architectural Trends
Architecture translates technology’s gifts to performance and capability
Resolves the tradeoff between parallelism and locality
• Recent microprocessors: 1/3 compute, 1/3 cache, 1/3 off-chip connect
• Tradeoffs may change with scale and technology advances
Four generations of architectural history: tube, transistor, IC, VLSI
• Here focus only on VLSI generation
Greatest delineation in VLSI has been in scale and type of parallelism exploited

Architectural Trends in Parallelism
Up to 1985: bit level parallelism: 4-bit -> 8-bit -> 16-bit
• slows after 32 bit
• adoption of 64-bit well under way, 128-bit is far (not performance issue)
• great inflection point when 32-bit micro and cache fit on a chip
• Basic pipelining, hardware support for complex operations like FP multiply etc. led to O(N^3) growth in performance.
• Intel: 4004 to 386

Architectural Trends in Parallelism
Mid 80s to mid 90s: instruction level parallelism
• Pipelining and simple instruction sets, + compiler advances (RISC)
• Larger on-chip caches
  – But only halve miss rate on quadrupling cache size
• More functional units => superscalar execution
  – But limited performance scaling
• N^2 growth in performance
• Intel: 486 to Pentium III/IV

Architectural Trends in Parallelism
After mid-90s
• Greater sophistication: out of order execution, speculation, prediction
  – to deal with control transfer and latency problems
• Very wide issue processors
  – Don’t help many applications very much
  – Need multiple threads (SMT) to exploit
• Increased complexity and size leads to slowdown
  – Long global wires
  – Increased access times to data
  – Time to market
Next step: thread level parallelism

Can Instruction-Level get us there?
• Reported speedups for superscalar processors
  – Horst, Harris, and Jardine [1990]: 1.37
  – Wang and Wu [1988]: 1.70
  – Smith, Johnson, and Horowitz [1989]: 2.30
  – Murakami et al. [1989]: 2.55
  – Chang et al. [1991]: 2.90
  – Jouppi and Wall [1989]: 3.20
  – Lee, Kwok, and Briggs [1991]: 3.50
  – Wall [1991]: 5
  – Melvin and Patt [1991]: 8
  – Butler et al. [1991]: 17+
• Large variance due to difference in
  – application domain investigated (numerical versus non-numerical)
  – capabilities of processor modeled

ILP Ideal Potential
[Figure: left panel, fraction of total cycles (%) vs. number of instructions issued (0 to 6+); right panel, speedup vs. instructions issued per cycle (0 to 15)]
• Infinite resources and fetch bandwidth, perfect branch prediction and renaming
  – real caches and non-zero miss latencies

Results of ILP Studies
• Concentrate on parallelism for 4-issue machines
• Realistic studies show only 2-fold speedup
• More recent work examines ILP that looks across threads for parallelism

Architectural Trends: Bus-based MPs
• Micro on a chip makes it natural to connect many to shared memory
  – dominates server and enterprise market, moving down to desktop
• Faster processors began to saturate bus, then bus technology advanced
  – today, range of sizes for bus-based systems, desktop to large servers
[Figure: number of processors in fully configured commercial shared-memory systems, 1984 to 1998]

Bus Bandwidth
[Figure: shared bus bandwidth (MB/s, log scale from 10 to 100,000) of commercial shared-memory systems, 1984 to 1998]

Bus Bandwidth: Intel Systems [figure]

Do Buses Scale?
Buses are a convenient way to extend architecture to parallelism, but they do not scale
• bandwidth doesn’t grow as CPUs are added
Scalable systems use physically distributed memory
[Figure: node with processor (P), cache ($), memory, and memory controller with network interface (NI), attached to external I/O and an X/Y/Z switch]

Drivers of Parallel Computing
Application Needs
Technology Trends
Architecture Trends
Economics

Finally, Economics
• Fabrication cost roughly O(1/feature-size)
  – 90nm fabs cost about 1-2 billion dollars
  – So fabrication of processors is expensive
• Number of designers also O(1/feature-size)
  – 10 micron 4004 processor had 3 designers
  – Recent 90 nm processors had 300
  – New designs very expensive
• Push toward consolidation of processor types
  – Processor complexity increasingly expensive
  – Cores reused, but tweaks expensive too

Design Complexity and Productivity
Design complexity outstrips human productivity

Economics
Commodity microprocessors not only fast but CHEAP
• Development cost is tens of millions of dollars
• BUT, many more are sold compared to supercomputers
• Crucial to take advantage of the investment, and use the commodity building block
• Exotic parallel architectures no more than special-purpose
Multiprocessors being pushed by software vendors (e.g. database) as well as hardware vendors
Standardization by Intel makes small, bus-based SMPs commodity
What about on-chip processor design?

What’s on a processing chip?
• Recap:
  – Number of transistors growing fast
  – Methods to use for single-thread performance running out of steam
  – Memory issues argue for parallelism too
  – Instruction-level parallelism limited, need thread-level
  – Consolidation is a powerful force
• All seems to point to many simpler cores rather than single bigger complex core
• Additional key arguments: wires, power, cost

Wire Delay
Gate delay shrinks, global interconnect delay grows: short local wires

Power
Power dissipation in Intel processors over time [figure]

Power and Performance [figure]

Power
Power grows with number of transistors and clock frequency
Power grows with voltage; P = C V^2 f
Going from 12V to 1.1V reduced power consumption by 120x in 20 yr (power scales with V^2, and (12/1.1)^2 is about 119)
Voltage projected to go down to 0.7V in 2018, so only another 2.5x ((1.1/0.7)^2 is about 2.5)
Power per chip peaking in designs:
• Itanium II was 130W, Montecito 100W
• Power is first-class design constraint
Circuit-level power techniques quite far along
• clock gating, multiple thresholds, sleep transistors

Power versus Clock Frequency
Two processor generations; two feature sizes

Architectural Implication of Power
• Fewer transistors per core a lot more power efficient
  – Narrower issue, shorter pipelines, smaller OOO window
  – Get per-processor performance back on O(n^3) curve
  – But lower single thread performance.
• What complexity to eliminate?
  – Speculation, multithreading, … ?
  – All good for some things, but need to be careful about power/benefit

ITRS Projections [figure]

ITRS Projections (contd.)
#Procs on chip will outstrip individual processor performance

Cost of Chip Development
Non-recurring engineering costs increasing greatly as complexity outstrips productivity

Recurring Costs Per Die (1994) [figure]

Summary: What’s on a Chip
• Beyond arguments for parallelism based on commodity processors in general:
  – Wire delay, power and economics all argue for multiple simpler cores on a chip rather than increasingly complex single cores
• Challenge: SOFTWARE.
How to program parallel machines

Summary: Why Parallel Architecture?
Increasingly attractive
• Economics, technology, architecture, application demand
Increasingly central and mainstream
Parallelism exploited at many levels
• Instruction-level parallelism
• Thread level parallelism and on-chip multiprocessing
• Multiprocessor servers
• Large-scale multiprocessors (“MPPs”)
• Focus of this class: multiprocessor level of parallelism
Same story from memory (and storage) system perspective
• Increase bandwidth, reduce average latency with many local memories
Wide range of parallel architectures make sense
• Different cost, performance and scalability

Outline
• Drivers of Parallel Computing
• Trends in “Supercomputers” for Scientific Computing
• Evolution and Convergence of Parallel Architectures
• Fundamental Issues in Programming Models and Architecture

Scientific Supercomputing
Proving ground and driver for innovative architecture and techniques
• Market smaller relative to commercial as MPs become mainstream
• Dominated by vector machines starting in 70s
• Microprocessors have made huge gains in floating-point performance
  – high clock rates
  – pipelined floating point units (e.g. mult-add)
  – instruction-level parallelism
  – effective use of caches
• Plus economics
Large-scale multiprocessors replace vector supercomputers

Raw Uniprocessor Performance: LINPACK
[Figure: LINPACK (MFLOPS, log scale) vs. year, 1975 to 2000, for CRAY and microprocessor systems at n = 100 and n = 1,000]

Raw Parallel Performance: LINPACK
[Figure: LINPACK (GFLOPS, log scale) vs. year, 1985 to 1996, MPP peak vs. CRAY peak]
• Even vector Crays became parallel: X-MP (2-4), Y-MP (8), C-90 (16), T94 (32)
• Since 1993, Cray produces MPPs too (T3D, T3E)

Another View [figure]

Top 10 Fastest Computers (Linpack)
| Rank | Site | Computer | Processors | Year | Rmax |
|---|---|---|---|---|---|
| 1 | DOE/NNSA/LLNL, USA | IBM BlueGene | 131072 | 2005 | 280600 |
| 2 | NNSA/Sandia Labs, USA | Cray Red Storm, Opteron | 26544 | 2006 | 101400 |
| 3 | IBM Research, USA | IBM Blue Gene Solution | 40960 | 2005 | 91290 |
| 4 | DOE/NNSA/LLNL, USA | ASCI Purple - IBM eServer p5 | 12208 | 2006 | 75760 |
| 5 | Barcelona Center, Spain | IBM JS21 Cluster, PPC 970 | 10240 | 2006 | 62630 |
| 6 | NNSA/Sandia Labs, USA | Dell Thunderbird Cluster | 9024 | 2006 | 53000 |
| 7 | CEA, France | Bull Tera-10 Itanium2 Cluster | 9968 | 2006 | 52840 |
| 8 | NASA/Ames, USA | SGI Altix 1.5 GHz, Infiniband | 10160 | 2004 | 51870 |
| 9 | GSIC Center, Japan | NEC/Sun Grid Cluster (Opteron) | 11088 | 2006 | 47380 |
| 10 | Oak Ridge Lab, USA | Cray Jaguar XT3, 2.6 GHz dual | 10424 | 2006 | 43480 |
• NEC Earth Simulator (top for 5 lists) moves down to #14
• #10 system has doubled in performance since last year

Top 500: Architectural Styles [figure]

Top 500: Processor Type [figure]

Top 500: Installation Type [figure]

Top 500 as of Nov 2006: Highlights
• The system ranked #359 six months ago is #500 in this list
• Total performance of top 500 up from 2.3 Pflops a year ago to 3.5 Pflops
• Clusters are dominant at this scale: 359 of top 500 labeled as clusters
• Dual core processors growing in popularity: 75 use Opteron dual core, and 31 Intel Woodcrest
• IBM is top vendor with almost 50% of systems, HP is second
• IBM and HP have 237 out of the 244 commercial and industrial installations
• US has 360 of the top 500 installations, UK 32, Japan 30, Germany 19, China 18

Top 500 Linpack Performance over Time [figure]

Another View of Performance Growth [four figure slides]

Processor Types in Top 500 (2002) [figure]

Parallel and Distributed Systems [figure]

Outline
• Drivers of Parallel Computing
• Trends in “Supercomputers” for Scientific Computing
• Evolution and Convergence of Parallel Architectures
• Fundamental Issues in Programming Models and Architecture

History
Historically, parallel architectures tied to programming models
• Divergent architectures, with no predictable pattern of growth.
[Figure: application software and system software layered over divergent architectures: Systolic Arrays, Dataflow, SIMD, Message Passing, Shared Memory]
• Uncertainty of direction paralyzed parallel software development!

Today
Extension of “computer architecture” to support communication and cooperation
• OLD: Instruction Set Architecture
• NEW: Communication Architecture
Defines
• Critical abstractions, boundaries, and primitives (interfaces)
• Organizational structures that implement interfaces (hw or sw)
Compilers, libraries and OS are important bridges between application and architecture today

Modern Layered Framework
[Figure: layers from top to bottom]
• Parallel applications: CAD, Database, Scientific modeling
• Programming models: Multiprogramming, Shared address, Message passing, Data parallel
• Communication abstraction (user/system boundary): Compilation or library, Operating systems support
• Hardware/software boundary: Communication hardware
• Physical communication medium

Parallel Programming Model
What the programmer uses in writing applications
Specifies communication and synchronization
Examples:
• Multiprogramming: no communication or synch. at program level
• Shared address space: like bulletin board
• Message passing: like letters or phone calls, explicit point to point
• Data parallel: more regimented, global actions on data
  – Implemented with shared address space or message passing

Communication Abstraction
User level communication primitives provided by system
• Realizes the programming model
• Mapping exists between language primitives of programming model and these primitives
Supported directly by hw, or via OS, or via user sw
Lot of debate about what to support in sw and gap between layers
Today:
• Hw/sw interface tends to be flat, i.e. complexity roughly uniform
• Compilers and software play important roles as bridges today
• Technology trends exert strong influence
Result is convergence in organizational structure
• Relatively simple, general purpose communication primitives

Communication Architecture
= User/System Interface + Implementation
User/System Interface:
• Comm. primitives exposed to user-level by hw and system-level sw
• (May be additional user-level software between this and prog model)
Implementation:
• Organizational structures that implement the primitives: hw or OS
• How optimized are they? How integrated into processing node?
• Structure of network
Goals:
• Performance
• Broad applicability
• Programmability
• Scalability
• Low Cost

Evolution of Architectural Models
Historically, machines were tailored to programming models
• Programming model, communication abstraction, and machine organization lumped together as the “architecture”
Understanding their evolution helps understand convergence
• Identify core concepts
Evolution of Architectural Models:
• Shared Address Space (SAS)
• Message Passing
• Data Parallel
• Others (won’t discuss): Dataflow, Systolic Arrays
Examine programming model, motivation, and convergence

Shared Address Space Architectures
Any processor can directly reference any memory location
• Communication occurs implicitly as result of loads and stores
Convenient:
• Location transparency
• Similar programming model to time-sharing on uniprocessors
  – Except processes run on different processors
  – Good throughput on multiprogrammed workloads
Naturally provided on wide range of platforms
• History dates at least to precursors of mainframes in early 60s
• Wide range of scale: few to hundreds of processors
Popularly known as shared memory machines or model
• Ambiguous: memory may be physically distributed among processors

Shared Address Space Model
Process: virtual address space plus one or more threads of control
Portions of address spaces of processes are shared
[Figure: virtual address spaces for a collection of processes (P0 to Pn) communicating via shared addresses; each has a private portion and a shared portion, and loads and stores to the shared portion map to common physical addresses in the machine physical address space]
• Writes to shared address visible to other threads (in other processes too)
• Natural extension of uniprocessor model: conventional memory operations for comm.; special atomic operations for synchronization
• OS uses shared memory to coordinate processes

Communication Hardware for SAS
• Also natural extension of uniprocessor
• Already have processor, one or more memory modules and I/O controllers connected by hardware interconnect of some sort
• Memory capacity increased by adding modules, I/O by controllers
[Figure: memory modules and I/O controllers (with I/O devices) connected to processors through an interconnect]
Add processors for processing!

History of SAS Architecture
“Mainframe” approach
• Motivated by multiprogramming
• Extends crossbar used for mem bw and I/O
• Originally processor cost limited to small
  – later, cost of crossbar
• Bandwidth scales with p
• High incremental cost; use multistage instead
“Minicomputer” approach
• Almost all microprocessor systems have bus
• Motivated by multiprogramming, TP
• Used heavily for parallel computing
• Called symmetric multiprocessor (SMP)
• Latency larger than for uniprocessor
• Bus is bandwidth bottleneck
  – caching is key: coherence problem
• Low incremental cost
[Figures: processors, memories and I/O controllers connected by a crossbar (mainframe) and by a shared bus with per-processor caches (minicomputer)]

Example: Intel Pentium Pro Quad
[Figure: P-Pro modules (each with CPU, interrupt controller, 256-KB L2 $ and bus interface) on the P-Pro bus (64-bit data, 36-bit address, 66 MHz), with PCI bridges to PCI buses and PCI I/O cards, and a memory controller and MIU to 1-, 2-, or 4-way interleaved DRAM]
• All coherence and multiprocessing glue integrated in processor module
• Highly integrated, targeted at high volume
• Low latency and bandwidth

Example: SUN Enterprise
[Figure: CPU/mem cards (two processors with caches, memory controller) and I/O cards (SBUS, 2 FiberChannel, 100bT, SCSI) attached through bus interfaces/switches to the Gigaplane bus (256 data, 41 address, 83 MHz)]
• Memory on processor cards themselves
  – 16 cards of either type: processors + memory, or I/O
• But all memory accessed over bus, so symmetric
Higher bandwidth, higher latency bus • 86 Scaling Up M M M Network $ $ P P Network “Dance hall” $ M $ M $ P P P M $ P Distributed memory • Problem is interconnect: cost (crossbar) or bandwidth (bus) • Dance-hall: bandwidth still scalable, but lower cost than crossbar – • Distributed memory or non-uniform memory access (NUMA) – • latencies to memory uniform, but uniformly large Construct shared address space out of simple message transactions across a general-purpose network (e.g. read-request, read-response) Caching shared (particularly nonlocal) data? 87 Example: Cray T3E External I/O P $ Mem Mem ctrl and NI XY Sw itch Z Scale up to 1024 processors, 480MB/s links • Memory controller generates comm. request for nonlocal references • – • Communication architecture tightly integrated into node No hardware mechanism for coherence (SGI Origin etc. provide this) 88 Caches and Cache Coherence Caches play key role in all cases • Reduce average data access time • Reduce bandwidth demands placed on shared interconnect But private processor caches create a problem • Copies of a variable can be present in multiple caches • A write by one processor may not become visible to others – They’ll keep accessing stale value in their caches • Cache coherence problem • Need to take actions to ensure visibility 89 Example Cache Coherence Problem P2 P1 u=? $ P3 u=? 4 $ u=7 $ 5 3 u:5 u:5 1 I/O devices u:5 2 Memory Processors see different values for u after event 3 • With write back caches, value written back to memory depends on happenstance of which cache flushes or writes back value when • – • Processes accessing main memory may see very stale value Unacceptable to programs, and frequent! 90 Cache Coherence Reading a location should return latest value written (by any process) Easy in uniprocessors • Except for I/O: coherence between I/O devices and processors • But infrequent, so software solutions work Would like same to hold when processes run on different processors • E.g. 
as if the processes were interleaved on a uniprocessor But coherence problem much more critical in multiprocessors • Pervasive and performance-critical • A very basic design issue in supporting the prog. model effectively It’s worse than that: what is the “latest” value with indept. processes? • Memory consistency models 91 SGI Origin2000 P P P P L2 cache (1-4 MB) L2 cache (1-4 MB) L2 cache (1-4 MB) L2 cache (1-4 MB) Sy sAD bus Sy sAD bus Hub Hub Directory Main Memory (1-4 GB) Directory Main Memory (1-4 GB) Interconnection Netw ork Hub chip provides memory control, communication and cache coherence support • Plus I/O communication etc 92 Shared Address Space Machines Today • Bus-based, cache coherent at small scale • Distributed memory, cache-coherent at larger scale • • Without cache coherence, are essentially (fast) message passing systems Clusters of these at even larger scale 93 Message-Passing Programming Model Match ReceiveY, P, t AddressY Send X, Q, t AddressX Local process address space Local process address space ProcessP Process Q Send specifies data buffer to be transmitted and receiving process • Recv specifies sending process and application storage to receive into • – Optional tag on send and matching rule on receive Memory to memory copy, but need to name processes • User process names only local data and entities in process/tag space • In simplest form, the send/recv match achieves pairwise synch event • – • Other variants too Many overheads: copying, buffer management, protection 94 Message Passing Architectures Complete computer as building block, including I/O • Communication via explicit I/O operations Programming model: directly access only private address space (local memory), comm. via explicit messages (send/receive) High-level block diagram similar to distributed-memory SAS But comm. needn’t be integrated into memory system, only I/O • History of tighter integration, evolving to spectrum incl. 
clusters
• Easier to build than scalable SAS
• Can use clusters of PCs or SMPs on a LAN
Programming model more removed from basic hardware operations
• Library or OS intervention

Evolution of Message-Passing Machines
[Figure: hypercube with nodes labeled 000 through 111]
Early machines: FIFO on each link
• Hw close to prog. model; synchronous ops
• Replaced by DMA, enabling non-blocking ops
  – Buffered by system at destination until recv
Diminishing role of topology
• Store&forward routing: topology important
• Introduction of pipelined routing made it less so
• Cost is in node-network interface
• Simplifies programming

Example: IBM SP-2
[Figure: IBM SP-2 node: Power 2 CPU, L2 cache ($), memory bus, memory controller, 4-way interleaved DRAM, MicroChannel bus with I/O, DMA and a NIC (i860, NI, DRAM); general interconnection network formed from 8-port switches]
• Made out of essentially complete RS6000 workstations
• Network interface integrated in I/O bus (bw limited by I/O bus)
  – Doesn’t need to see memory references

Example: Intel Paragon
[Figure: Intel Paragon node: two i860 processors with L1 caches ($), memory bus (64-bit, 50 MHz), memory controller, 4-way interleaved DRAM, DMA, driver and NI; 2D grid network (8 bits, 175 MHz, bidirectional) with a processing node attached to every switch; photo of Sandia’s Intel Paragon XP/S-based supercomputer]
• Network interface integrated in memory bus, for performance

Toward Architectural Convergence
Evolution and role of software have blurred boundary
• Send/recv supported on SAS machines via buffers
• Can construct global address space on MP using hashing
• Software shared memory (e.g. using pages as units of comm.)
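The first point in the list above, send/recv supported on a shared-address-space machine via buffers, can be illustrated with a minimal sketch. It assumes threads stand in for processes and an ordinary Python object stands in for a receive buffer in shared memory. The names `Mailbox`, `send`, `recv` and `demo` are hypothetical and not taken from the slides or from any real message-passing library.

```python
import threading
from collections import deque


class Mailbox:
    """Hypothetical per-process receive buffer kept in shared memory."""

    def __init__(self):
        self._messages = deque()
        self._cond = threading.Condition()

    def deposit(self, src, tag, data):
        # Sender side: append the message and wake any waiting receiver.
        with self._cond:
            self._messages.append((src, tag, data))
            self._cond.notify_all()

    def take(self, src, tag):
        # Receiver side: block until a message matching (src, tag) is buffered.
        with self._cond:
            while True:
                for i, (s, t, d) in enumerate(self._messages):
                    if s == src and t == tag:
                        del self._messages[i]
                        return d
                self._cond.wait()


mailboxes = {}  # process name -> Mailbox (the "process/tag space")


def send(data, dest, tag, me):
    # Memory-to-memory copy: the receiver gets its own copy of the buffer.
    mailboxes[dest].deposit(me, tag, list(data))


def recv(src, tag, me):
    return mailboxes[me].take(src, tag)


def demo():
    mailboxes["P"], mailboxes["Q"] = Mailbox(), Mailbox()
    received = []

    def process_p():
        x = [1, 2, 3]
        send(x, dest="Q", tag=1, me="P")
        x[0] = 99  # does not affect the message, which was copied
        send([4, 5, 6], dest="Q", tag=2, me="P")

    def process_q():
        # Receives are matched by (source, tag), not by arrival order.
        received.append(recv(src="P", tag=2, me="Q"))
        received.append(recv(src="P", tag=1, me="Q"))

    threads = [threading.Thread(target=f) for f in (process_q, process_p)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return received


if __name__ == "__main__":
    print(demo())
```

The copy in `send` and the lock-protected queue in `Mailbox` correspond to the copying and buffer-management overheads listed on the programming-model slide. A real implementation would also need protection between processes, which this sketch omits.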
Hardware organization converging too
• Tighter NI integration even for MP (low-latency, high-bandwidth)
• At lower level, even hardware SAS passes hardware messages
  – Hw support for fine-grained comm makes software MP faster as well
Even clusters of workstations/SMPs are parallel systems
• Fast system area networks (SAN)
Programming models distinct, but organizations converged
• Nodes connected by general network and communication assists
• Assists range in degree of integration, all the way to clusters

Data Parallel Systems
Programming model
• Operations performed in parallel on each element of data structure
• Logically single thread of control, performs sequential or parallel steps
• Conceptually, a processor associated with each data element
Architectural model
• Array of many simple, cheap processors with little memory each
  – Processors don’t sequence through instructions
• Attached to a control processor that issues instructions
• Specialized and general communication, cheap global synchronization
Original motivations
• Matches simple differential equation solvers
• Centralize high cost of instruction fetch/sequencing
[Figure: control processor driving a grid of processing elements (PEs)]

Application of Data Parallelism
• Each PE contains an employee record with his/her salary
    If salary > 100K then
        salary = salary * 1.05
    else
        salary = salary * 1.10
• Logically, the whole operation is a single step
• Some processors enabled for arithmetic operation, others disabled
Other examples:
• Finite differences, linear algebra, ...
• Document searching, graphics, image processing, ...
Some recent machines:
• Thinking Machines CM-1, CM-2 (and CM-5)
• Maspar MP-1 and MP-2

Evolution and Convergence
Rigid control structure (SIMD in Flynn taxonomy)
• SISD = uniprocessor, MIMD = multiprocessor
Popular when cost savings of centralized sequencer high
• 60s when CPU was a cabinet
• Replaced by vectors in mid-70s
  – More flexible w.r.t.
memory layout and easier to manage
• Revived in mid-80s when 32-bit datapath slices just fit on chip
• No longer true with modern microprocessors
Other reasons for demise
• Simple, regular applications have good locality, can do well anyway
• Loss of applicability due to hardwiring data parallelism
  – MIMD machines as effective for data parallelism and more general
Prog. model converges with SPMD (single program multiple data)
• Contributes need for fast global synchronization
• Structured global address space, implemented with either SAS or MP

Convergence: Generic Parallel Architecture
A generic modern multiprocessor
[Figure: nodes attached to a network; each node contains processor (P), cache ($), memory (Mem) and communication assist (CA)]
• Node: processor(s), memory system, plus communication assist
• Network interface and communication controller
• Scalable network
• Communication assist provides primitives with perf profile
• Build your programming model on this
• Convergence allows lots of innovation, now within framework
• Integration of assist with node, what operations, how efficiently...

Outline
• Drivers of Parallel Computing
• Trends in “Supercomputers” for Scientific Computing
• Evolution and Convergence of Parallel Architectures
• Fundamental Issues in Programming Models and Architecture

The Model/System Contract
Model specifies an interface (contract) to the programmer
• Naming: How are logically shared data and/or processes referenced?
• Operations: What operations are provided on these data?
• Ordering: How are accesses to data ordered and coordinated?
• Replication: How are data replicated to reduce communication?
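To make the four contract questions concrete, here is a minimal sketch of the salary update from the data-parallel slides, written in SPMD style over a shared list. It assumes threads stand in for processors. The function name `spmd_raise`, the block partitioning and the payroll total are illustrative choices, not something the slides specify.

```python
import threading


def spmd_raise(salaries, num_workers=4):
    """Every worker runs the same code on its own block of the shared data."""
    salaries = list(salaries)        # shared array (naming: by index)
    n = len(salaries)
    partial = [0.0] * num_workers    # one slot per worker, no write sharing
    result = {}
    barrier = threading.Barrier(num_workers)

    def worker(rank):
        lo = rank * n // num_workers
        hi = (rank + 1) * n // num_workers
        # Operations: plain reads and writes of shared elements.
        for i in range(lo, hi):
            if salaries[i] > 100_000:
                salaries[i] *= 1.05
            else:
                salaries[i] *= 1.10
        partial[rank] = sum(salaries[lo:hi])
        # Ordering: global synchronization before anyone reads others' results.
        barrier.wait()
        if rank == 0:
            result["total"] = sum(partial)

    threads = [threading.Thread(target=worker, args=(r,))
               for r in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return salaries, result["total"]


if __name__ == "__main__":
    updated, total = spmd_raise([50_000.0, 120_000.0, 200_000.0], num_workers=2)
    print(updated, total)
```

Read against the list above: data are named by index into one shared list, the operations are ordinary loads and stores, ordering is imposed only by the barrier, and nothing is replicated. A message-passing version would answer the same four questions differently, for example by giving each worker a private copy of its block.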
Underlying implementation addresses performance issues
• Communication Cost: Latency, bandwidth, overhead, occupancy
We’ll look at the aspects of the contract through examples

Supporting the Contract
[Figure: layers of abstraction, top to bottom: parallel applications (CAD, database, scientific modeling); programming models (multiprogramming, shared address, message passing, data parallel); communication abstraction at the user/system boundary (compilation or library, operating systems support); hardware/software boundary; communication hardware; physical communication medium]
Given prog. model can be supported in various ways at various layers
In fact, each layer takes a position on all issues (naming, ops, performance etc.), and any set of positions can be mapped to another by software
Key issues for supporting programming models are:
• What primitives are provided at comm. abstraction layer
• How efficiently are they supported (hw/sw)
• How are programming models mapped to them

Recap of Parallel Architecture
Parallel architecture is important thread in evolution of architecture
• At all levels
• Multiple processor level now in mainstream of computing
Exotic designs have contributed much, but given way to convergence
• Push of technology, cost and application performance
• Basic processor-memory architecture is the same
• Key architectural issue is in communication architecture
  – How communication is integrated into memory and I/O system on node
Fundamental design issues
• Functional: naming, operations, ordering
• Performance: organization, replication, performance characteristics
Design decisions driven by workload-driven evaluation
• Integral part of the engineering focus
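As a closing illustration of the replication issue named in the recap, the stale-value scenario from the example cache coherence problem can be replayed with a toy model. This is a sketch under stated assumptions: `WriteBackCache` and `replay_example` are hypothetical names, the caches deliberately implement no coherence protocol, and the assignment of events 1 to 5 to particular processors is assumed, since only the values u:5 and u=7 survive from the figure.

```python
class WriteBackCache:
    """Toy private write-back cache with no coherence protocol."""

    def __init__(self, memory):
        self.memory = memory   # shared main memory (a dict)
        self.lines = {}        # address -> locally cached value
        self.dirty = set()     # addresses written but not yet written back

    def read(self, addr):
        if addr not in self.lines:       # miss: fetch from main memory
            self.lines[addr] = self.memory[addr]
        return self.lines[addr]          # hit: returns the local copy

    def write(self, addr, value):
        self.lines[addr] = value         # write-back: memory is not updated
        self.dirty.add(addr)

    def flush(self):
        for addr in self.dirty:
            self.memory[addr] = self.lines[addr]
        self.dirty.clear()


def replay_example():
    memory = {"u": 5}
    p1, p2, p3 = (WriteBackCache(memory) for _ in range(3))
    seen = {}
    seen["event1_p1_read"] = p1.read("u")   # assumed event 1: P1 loads u
    seen["event2_p3_read"] = p3.read("u")   # assumed event 2: P3 loads u
    p3.write("u", 7)                        # event 3: write u=7 into P3's cache
    seen["event4_p1_read"] = p1.read("u")   # hit on P1's stale copy
    seen["event5_p2_read"] = p2.read("u")   # miss, but memory is stale too
    seen["memory_before_flush"] = memory["u"]
    p3.flush()                              # write-back happens "whenever"
    seen["memory_after_flush"] = memory["u"]
    seen["p1_after_flush"] = p1.read("u")   # still the old cached copy
    return seen


if __name__ == "__main__":
    for name, value in replay_example().items():
        print(name, value)
```

Even after the write-back reaches memory, P1 keeps returning its old copy, which is why some action (invalidating or updating other copies) is needed to ensure visibility.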