
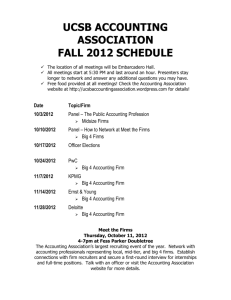


Expert mission on the Evaluation of SMEs and Entrepreneurship Policies and Programmes

Resources pack

Jerusalem and Neve Ilan, Israel, 18-20 March 2014

Stephen Roper – stephen.roper@wbs.ac.uk

Christian Rammer – rammer@zew.de

Types of evaluation of SME policy

Types of SME policy evaluation

| Type | Title | Comments | Data needed |
|------|-------|----------|-------------|
| 1 | Measuring take-up | Provides an indication of popularity but no real idea of outcomes or impact | Scheme management data |
| 2 | Recipient evaluation | Popularity and recipients' idea of the usefulness or value of the scheme. Very often informal or unstructured. | Recipient data |
| 3 | Subjective assessment | Subjective assessments of the scheme by recipients. Often categorical, less frequently numeric | Recipient data |
| 4 | Control group | Impacts measured relative to a 'typical' control group of potential recipients of the scheme | Recipient and control group data |
| 5 | Matched control group | Impacts measured relative to a 'matched' control group similar to recipients in terms of some characteristics (e.g. size, sector) | Recipient and control group data |
| 6 | Econometric studies | Impacts estimated using multivariate econometric or statistical approaches and allowing for sample selection | Survey data – recipients and non-recipients |
| 7 | Experimental approaches | Impacts estimated using random allocation to treatment and control groups or alternative treatment groups | Control and treatment group data |

Examples of SME policy evaluation

Level 5 evaluation: Danish Growth Houses – matched control groups

• Focus – Danish Growth Houses provide advisory and brokering services for high growth firms. Regionally distributed in Denmark. Publicly funded initiative with some EU support in less developed areas. Key competency is business advice and support.
• Aim – estimate value for money of the Danish Growth House network, covering all companies using the network from Sept 2008 to March 2011. Assessment April 2013. To include displacement and multiplier effects and identify NPV.
• Data – 2,650 assisted firms (admin data) matched against control firms from business registry data (matching done by Statistics Denmark) to get the growth differential.
• Methodology – direct effect using matched control group approach. Matching on size, sector, area, ownership and growth track record; adjusted for selection bias (50 per cent). Estimated displacement and multiplier effects.
• Key results – estimated effect was an NPV of around 2.6 over two years, so very effective; job creation was also estimated.
• Source: Fact sheet from the Iris Group (2013) ‘Calculation of the socio-economic yield of investments in Vaeksthuset’, Copenhagen. Provided by the Danish Business Administration.

Level 5 evaluation example: EXIST – University Start-up Initiative

• Focus – EXIST facilitates entrepreneurship at universities by funding centres (= staff), events/promotion and start-up support (financial + consulting); 1999 to ongoing; several phases (I to IV); Phase III: 48 (regional) projects involving 143 universities/research centres.
• Aim – impact analysis after completion of Phase III (supplementing an ongoing evaluation that monitors activities and progress made).
• Approach – qualitative (interviews) and quantitative (control group).
• Data (1) – interviews with various stakeholders; detailed analysis of the centres' characteristics and history.
• Data (2) – survey of a representative sample of start-ups at the beginning of the programme (firms founded 1996-2000; n=20,100; 1,346 surveyed spin-offs surveyed again in 2007) and during Phase III (start-ups founded 2001-2006; n=10,300).
• Methodology – identifying start-ups with university background in regions with EXIST centres and in other university regions over time (controlling for university/regional characteristics).
• Key results – institutional setting (“scientific culture”) drives entrepreneurship orientation, little impact of the initiative; no impact on the number of start-ups, but impact on the type of start-ups (larger, stronger R&D focus).
• Source – Egeln, J. et al. (2009), Evaluation des Existenzgründungsprogramms EXIST III, ZEW-Wirtschaftsanalysen 95, Baden-Baden: Nomos.

Level 6 evaluation: Business Links consultancy and advice – econometric evaluation

• Focus – Business Links was a UK national government service providing either intensive consultancy/advice support or light-touch support. Support period 2003-04. Impact period 2005-06. Surveys 2007.
• Aim – assess cost effectiveness of business support. Econometric estimates of impact. Survey-based estimates of displacement, multipliers etc. to get NPV.
• Data – evaluation survey-based: 1000 firms intensive, 1000 firms light-touch and 1000 firms matched control.
• Methodology – 2-stage Heckman selection models (using information sources as identifying factor)
  – Probit models for membership of intensive v control group
  – Regression models for log growth support
• Key results – intensive support raised employment growth rate significantly; no sales growth impact.
• Source: Mole, K.F.; M. Hart; S. Roper; and D. Saal. 2008. Differential Gains from Business Link Support and Advice: A Treatment Effects Approach. Environment and Planning C 26:316-334.

Business Links evaluation – long term follow-up using administrative data

• Not an evaluation as such but a follow-up of groups using business register data
• Able to monitor growth over a longer period – 2003 assisted, 2010 study
• Evidence: ‘Intensive Assist’ firms have clearly grown rapidly and in a more sustained way
• Conclusion – support had significant long-term effects (but not controlled properly, so care needed in interpretation!)

Level 6 evaluation: Interim evaluation of Knowledge Connect – innovation voucher scheme (2010)

• Focus – Impact Evaluation Framework (IEF) compliant evaluation of a local innovation voucher programme in London intended to increase collaboration between SMEs and higher education. Study period April 2008 to Sept 2009. Survey first quarter 2010.
• Aim – interim and formative impact and value for money evaluation, including assessment of displacement and multiplier effects, combined into a final NPV calculation. Key aims to test rationale for continuation and suggest developmental areas. Included assessment of Strategic Added Value.
• Data – telephone interviews with 175 recipients and 151 matched non-recipients and around 10 in-depth case studies. Mixed methods approach.
• Methodology – 2-stage modelling of impact as well as subjective assessment of additionality and partial additionality. Survey-based estimates of displacement and multiplier effects to give NPV. Modelling of behavioural effects.
• Key results – positive effects but under-performing against target and low NPV. Some behavioural effects. Short time line perhaps responsible and may under-estimate benefits. Poor admin data made following up clients difficult.
• Source: The Evaluation Partnership/Warwick Business School (2010) ‘Interim Evaluation of Knowledge Connect – Final report’.

Level 7 evaluation: Growth Accelerator Pilot Programme (on-going)

• Focus – evaluation of leadership and management (LM) support and coaching for high growth small firms through the UK Growth Accelerator programme. Intervention 2014. Survey follow-up 2015 and 2016 (probably). Impact periods 12 and 24 months.
• Aim – impact evaluation (no assessment of displacement or multiplier effects).
• Data – administrative and baseline survey data with benchmarking material from the coaching process. 300 in each arm of the trial initially.
• Methodology – RCT with stratified randomisation (sector, size, geography). Firms either receive (incentivised) LM training and coaching at second stage or nothing. (Initial LM training is provided free.)
• Key results – n/a
• Source: programme documents, UK Growth Accelerator information at: http://www.growthaccelerator.com/

[Figure: trial flow diagram. Box labels: Firms targeted and offered L&M; Suitable and in size band; Take up L&M; Randomisation; Treatment Group (incentives applied, take up L&M and coaching); 'Control' Group (not offered any more from GA)]

Level 7 evaluation: Creative Credits – an experimental evaluation

• Focus – experiment to: (a) evaluate effectiveness of a new voucher to link SMEs and creative enterprises (a novel instrument); and (b) test the value of the RCT+ approach (qual and quant elements).
• Aim – impact and value for money evaluation (no assessment of displacement or multiplier effects).
• Data – survey data for treatment and control group. 4 surveys over 2 years. 150 treatment and 470 control group firms (applicants who did not get a voucher).
• Methodology – RCT with simple randomisation. Firms either receive a voucher or not. Also 2-stage Heckman modelling to check results. Needed extensive chasing to maintain participation in the study.
• Key results – positive additionality (innovation and growth) after 6 months but no discernible effect after 12 months.
• Source: An Experimental Approach to Industrial Policy Evaluation: the case of Creative Credits, Hasan Bakhshi et al., June 2013, ERC Research Paper 4.
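The two Level 7 examples differ in how firms are allocated: Growth Accelerator stratifies by sector, size and geography, while Creative Credits uses simple randomisation. The sketch below shows one way stratified allocation could work, under stated assumptions: the firm records, field names (`sector`, `size`, `region`) and the 50/50 split within each stratum are all hypothetical and are not taken from either programme's documentation.

```python
import random
from collections import defaultdict


def stratified_assignment(firms, strata_keys, seed=0):
    """Randomly split firms into treatment/control within each stratum.

    Firms sharing the same values for all strata_keys form one stratum.
    Within a stratum, half the firms (rounded down) go to treatment and
    the rest to control, so odd-sized strata give control the extra firm.
    """
    rng = random.Random(seed)  # fixed seed makes the allocation reproducible
    strata = defaultdict(list)
    for firm in firms:
        strata[tuple(firm[k] for k in strata_keys)].append(firm)

    assignment = {}
    for members in strata.values():
        rng.shuffle(members)
        half = len(members) // 2
        for i, firm in enumerate(members):
            assignment[firm["id"]] = "treatment" if i < half else "control"
    return assignment


# Hypothetical sample: 8 strata (2 sectors x 2 sizes x 2 regions), 4 firms each.
combos = [
    (s, z, r)
    for s in ("manufacturing", "services")
    for z in ("small", "medium")
    for r in ("north", "south")
    for _ in range(4)
]
firms = [
    {"id": i, "sector": s, "size": z, "region": r}
    for i, (s, z, r) in enumerate(combos)
]

arms = stratified_assignment(firms, ("sector", "size", "region"), seed=42)
```

Passing an empty tuple as `strata_keys` puts every firm in a single stratum, which reduces to simple randomisation with equal-sized arms. Stratifying instead guarantees that treatment and control are balanced on the chosen characteristics by construction rather than only on average.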
Evaluating the Consultancy Programme

• Develop Logic Models for each initiative:
  – output of funding activities
  – short-term, medium-term, long-term outcome
  – contingency factors
• Establish an integrated monitoring system
  – firms approaching MAOF: motivation, source of information
  – results of diagnosis
  – surveying firms on attitudes, behaviour, output at three points in time: at diagnosis, post-consultancy, after 2 years (potentially as part of another diagnosis)
  – put all into a database
• Early, formative evaluation
  – sustainability effects of the new 2-year approach
  – effects on attitudes and behaviour of different types of consultancy
• Impact evaluation
  – establish a control group: match several “twins” for each funded firm (e.g. from D&B, business register)
  – short surveys of control group at beginning and end of 2-year period (past performance, information about MAOF)
  – displacement and multiplier effects (through second control group survey)
  – net effect: NPV based on employment differences to control group (survival and growth)

Now something speculative ...

• Randomisation of allocating consulting support
• Firms can choose between
  a) a consulting service based on diagnosis (and having to pay a part of the consulting costs)
  b) getting a consulting service assigned randomly (and not having to pay)
  alternatively b) choosing a consulting service they think is most appropriate for them and paying less than for a)
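The impact evaluation step proposed above (matching several “twins” to each funded firm and comparing employment) could be sketched roughly as follows. This is a minimal illustration under assumptions, not the proposed method in full: the field names (`sector`, `emp_start`, `emp_end`), the matching rule (same sector, nearest baseline employment, with replacement) and the sample figures are all hypothetical, and the sketch leaves out survival, displacement, multiplier effects and the NPV calculation.

```python
from statistics import mean


def match_twins(funded, pool, n_twins=3):
    """Pick, for each funded firm, the n_twins pool firms in the same
    sector whose baseline employment is closest (matching with replacement)."""
    matches = {}
    for firm in funded:
        candidates = [c for c in pool if c["sector"] == firm["sector"]]
        candidates.sort(key=lambda c: abs(c["emp_start"] - firm["emp_start"]))
        matches[firm["id"]] = candidates[:n_twins]
    return matches


def net_employment_effect(funded, matches):
    """Average of (funded firm's job change minus mean job change of its twins).

    Funded firms without any twin are skipped.
    """
    diffs = []
    for firm in funded:
        twins = matches[firm["id"]]
        if not twins:
            continue
        own_change = firm["emp_end"] - firm["emp_start"]
        twin_change = mean(t["emp_end"] - t["emp_start"] for t in twins)
        diffs.append(own_change - twin_change)
    return mean(diffs)


# Hypothetical data: employment at the start and end of the 2-year period.
funded = [
    {"id": "F1", "sector": "food", "emp_start": 10, "emp_end": 14},
    {"id": "F2", "sector": "tech", "emp_start": 20, "emp_end": 23},
]
pool = [
    {"id": "C1", "sector": "food", "emp_start": 9, "emp_end": 10},
    {"id": "C2", "sector": "food", "emp_start": 11, "emp_end": 12},
    {"id": "C3", "sector": "food", "emp_start": 30, "emp_end": 31},
    {"id": "C4", "sector": "tech", "emp_start": 19, "emp_end": 20},
    {"id": "C5", "sector": "tech", "emp_start": 22, "emp_end": 22},
    {"id": "C6", "sector": "tech", "emp_start": 50, "emp_end": 60},
]

twins = match_twins(funded, pool, n_twins=2)
effect = net_employment_effect(funded, twins)
print(f"Net employment effect per funded firm: {effect:.2f} jobs")
```

In practice the matching would likely use more characteristics (for example area, ownership and growth track record, as in the Danish Growth Houses example), and the gross job difference would still need adjusting for selection bias before feeding into an NPV.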