GG 313 Lecture 6

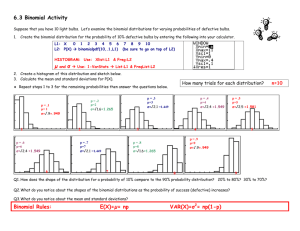

Probability Distributions

When we sample many phenomena, we find that the probability of occurrence of an event is distributed in a way that is easily described by one of several well-known functions called "probability distributions". We will discuss these functions and give examples of them.

UNIFORM DISTRIBUTION

The uniform distribution is simple: it is the probability distribution found when all probabilities are equal. For example, consider the probability of throwing an "x" with a 6-sided die: P(x), x = 1, 2, 3, 4, 5, 6. If the die is "fair", then P(x) = 1/6 for x = 1, 2, 3, 4, 5, 6. This is a discrete probability distribution, since x can only take integer or other fixed values. P(x) is always ≥ 0 and always ≤ 1. If we add up the probabilities for all n possible values $x_i$, the sum is 1:

$$\sum_{i=1}^{n} P(x_i) = 1$$

In most cases the probability distribution is not uniform, and some events are more likely than others. For example, we may want to know the probability of hitting a target x times in n tries. We can't get the solution unless we know the probability of hitting the target in one try: P(hit) = p. Once we know P(hit), we can calculate the probability distribution. The probability of any one particular sequence of x hits and n − x misses is $p^x q^{n-x}$, where q = 1 − p. That expression describes a single ordering of the hits, which is not what we want: the x hits can fall on any x of the n tries, and the number of ways to choose them is the number of combinations of n things taken x at a time, nCx. Multiplying the two gives:

$$P(x) = \binom{n}{x} p^x q^{n-x} = \binom{n}{x} p^x (1-p)^{n-x}, \qquad x = 0, 1, 2, \ldots, n$$

Recall that the binomial coefficients are:

$$\binom{n}{x} = \frac{n!}{x!\,(n-x)!}$$

This is known as the binomial probability distribution, used to predict the probability of x successes out of n tries. Using our example above, what is the probability of hitting a target x times in n tries if p = 0.1 and n = 10?

$$P(x) = \binom{10}{x}\, 0.1^x\, 0.9^{10-x} = \frac{10!}{x!\,(10-x)!}\, 0.1^x\, 0.9^{10-x}$$

We can write an easy m-file for this:

```matlab
% binomial distribution
n=10
p=0.1
xx=[0:n];
px=zeros(1,n+1);
for ii=0:n
    % n!/(x!(n-x)!) * p^x * (1-p)^(n-x)
    px(ii+1)=(factorial(n)/(factorial(xx(ii+1))*factorial(n-xx(ii+1)))) ...
             *p^xx(ii+1)*(1-p)^(n-xx(ii+1));
end
plot(xx,px)
sum(px)   % check to be sure that the sum = 1
```

[Figure: binomial distribution, n = 10, p = 0.1]

The probability of exactly 1 hit is about 0.39 (0.387) with p = 0.1.

What if we change the probability of hitting the target in any one shot to 0.3? What is the most likely number of hits in 10 shots?

[Figure: binomial distribution, n = 10, p = 0.3]

Continuous Probability Distributions

Continuous populations, such as the temperature of the atmosphere, the depth of the ocean, or the concentration of pollutants, can take on any value within their range. We may only sample them at particular values, but the underlying distribution is continuous. Rather than the SUM of the distribution equaling 1.0, for continuous distributions the integral of the distribution (the area under the curve) over all possible values must equal 1:

$$\int_{-\infty}^{\infty} P(x)\,dx = 1$$

P(x) is called a probability density function, or PDF. Because the variable is continuous, the probability of observing any one exact value is zero. We discuss instead the probability of a value lying between two limits, a − δ and a + δ:

$$P(a \pm \delta) = \int_{a-\delta}^{a+\delta} P(x)\,dx$$

As δ → 0, this probability also approaches zero. We also define the cumulative probability distribution, giving the probability that an observation will have a value less than or equal to a:

$$P_c(a) = \int_{-\infty}^{a} P(x)\,dx$$

This distribution is bounded by $0 \le P_c(a) \le 1$, and as a → ∞, $P_c(a) \to 1$.

The normal distribution

While any non-negative function with an area under the curve of 1 can be a probability distribution, in reality some functions are far more common than others. The Normal Distribution, or Gaussian Distribution, is the most common and most valued. This is the classic "bell-shaped curve". Its distribution is defined by:

$$p(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2}$$

where µ and σ are the mean and standard deviation defined earlier.
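As a cross-check of the two normalization rules above (probabilities summing to 1 in the discrete case, area equal to 1 in the continuous case), here is a short Python sketch. The lecture itself uses MATLAB; the function names `binomial_pmf` and `normal_pdf` are our own illustrative choices. The sketch also answers the p = 0.3 question by picking the x with the largest P(x).

```python
import math


def binomial_pmf(x: int, n: int, p: float) -> float:
    """Probability of exactly x hits in n tries: nCx * p^x * (1 - p)^(n - x)."""
    return math.comb(n, x) * p**x * (1 - p) ** (n - x)


def normal_pdf(x: float, mu: float, sigma: float) -> float:
    """Normal (Gaussian) probability density with mean mu and std. deviation sigma."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


n = 10
pmf_p01 = [binomial_pmf(x, n, 0.1) for x in range(n + 1)]  # p = 0.1
pmf_p03 = [binomial_pmf(x, n, 0.3) for x in range(n + 1)]  # p = 0.3

# Discrete case: the probabilities over x = 0..n must sum to 1.
print(f"sum of P(x) for p = 0.1: {sum(pmf_p01):.6f}")
print(f"P(1 hit) for p = 0.1:     {pmf_p01[1]:.4f}")

# Most likely number of hits (the x with the largest P(x)) for p = 0.3.
mode_p03 = max(range(n + 1), key=lambda x: pmf_p03[x])
print(f"most likely hits for p = 0.3: {mode_p03}")

# Continuous case: approximate the area under the PDF with a Riemann sum
# (sum of heights times spacing dx) over x = 0..15 for mu = 7, sigma = 1.
mu, sigma, dx = 7.0, 1.0, 0.01
xs = [i * dx for i in range(int(round(15.0 / dx)) + 1)]
area = sum(normal_pdf(x, mu, sigma) for x in xs) * dx
print(f"area under the normal PDF on [0, 15]: {area:.6f}")
```

`math.comb` (Python 3.8+) replaces the explicit factorial ratio in the m-file; both compute the same binomial coefficient.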
We can make a Matlab m-file to generate the normal distribution:

```matlab
% Normal distribution
xsigma=1.;
xmean=7;
xx=[0:.1:15];                 % x values, spacing 0.1
zz=(xx-xmean)/xsigma;         % normal scores
px=(1/(xsigma*sqrt(2*pi)))*exp(-0.5*zz.^2);
xmax=max(px)
xsum=sum(px)/10.              % sum times spacing 0.1 approximates the area (~1)
plot(xx,px)
```

Or, make an Excel spreadsheet plot:

[Figure: normal distributions with (σ, µ) = (1, 7), (1.5, 8), (2.25, 9), (3.375, 10), (5.1, 11), (7.6, 12), (11.4, 13)]

Note that smaller values of σ give sharper, narrower peaks. This is a difference in spread, not in kurtosis: every normal distribution has the same kurtosis.

We can define a new variable that will normalize the distribution to σ = 1 and µ = 0:

$$z_i = \frac{x_i - \mu}{\sigma}$$

And the defining equation reduces to:

$$p(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2} \quad\Longrightarrow\quad p(z) = \frac{1}{\sqrt{2\pi}}\, e^{-z^2/2}$$

The values on the z axis are now in units of standard deviations: z = ±1 is ±1 standard deviation, etc. This distribution is very handy. We expect 68.27% of our results to be within 1 standard deviation of the mean, 95.45% to be within 2 standard deviations, and 99.73% to be within 3 standard deviations. This is why we can feel reasonably confident about eliminating points that are more than 3 standard deviations away from the mean.

We also define the cumulative normal distribution:

$$P(z \le a) = \int_{-\infty}^{a} p(z)\,dz = \int_{-\infty}^{0} p(z)\,dz + \int_{0}^{a} p(z)\,dz = \frac{1}{2} + \frac{1}{\sqrt{2\pi}}\int_{0}^{a} e^{-z^2/2}\,dz$$

Let $u^2 = z^2/2$, so that $z = \sqrt{2}\,u$ and $dz = \sqrt{2}\,du$. Then:

$$P(z \le a) = \frac{1}{2} + \frac{1}{\sqrt{\pi}}\int_{0}^{a/\sqrt{2}} e^{-u^2}\,du = \frac{1}{2} + \frac{1}{2}\,\mathrm{erf}\!\left(\frac{a}{\sqrt{2}}\right) = \frac{1}{2}\left[1 + \mathrm{erf}\!\left(\frac{a}{\sqrt{2}}\right)\right]$$

Erf(x) is called the error function. For any value z:

$$P_c(z) = \frac{1}{2}\left[1 + \mathrm{erf}\!\left(\frac{z}{\sqrt{2}}\right)\right]$$

[Figure: cumulative normal distribution $P_c(z)$ for −4 ≤ z ≤ 4]

We easily see that the probability of a result being between a and b is:

$$P_c(a \le z \le b) = P_c(b) - P_c(a) = \frac{1}{2}\left[\mathrm{erf}\!\left(\frac{b}{\sqrt{2}}\right) - \mathrm{erf}\!\left(\frac{a}{\sqrt{2}}\right)\right]$$

Example: Estimates of the strength of olivine yield a normal distribution given by µ = 1.0 × 10^11 Nm and σ = 1.0 × 10^10 Nm. What is the probability that a sample estimate will be between 9.8 × 10^10 Nm and 1.1 × 10^11 Nm? First convert to normal scores:

$$z_i = \frac{x_i - \mu}{\sigma}$$

and calculate with the formula above.
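To check your Excel or Matlab answer, here is a minimal Python sketch of the cumulative-normal formula $P_c(z) = \frac{1}{2}[1 + \mathrm{erf}(z/\sqrt{2})]$ and the "between a and b" rule. It uses the standard library's `math.erf`; the helper names `normal_cdf` and `prob_between` are hypothetical, chosen for illustration. It first reproduces the 68.27 / 95.45 / 99.73% figures, then applies the normal-score conversion to the olivine bounds.

```python
import math


def normal_cdf(z: float) -> float:
    """Cumulative normal distribution Pc(z) = 1/2 [1 + erf(z / sqrt(2))]."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def prob_between(a: float, b: float, mu: float, sigma: float) -> float:
    """P(a <= x <= b) for a normal variable: convert to normal scores, then Pc(zb) - Pc(za)."""
    za = (a - mu) / sigma
    zb = (b - mu) / sigma
    return normal_cdf(zb) - normal_cdf(za)


# Fraction of results within 1, 2 and 3 standard deviations of the mean.
for k in (1, 2, 3):
    print(f"within {k} sigma: {100 * prob_between(-k, k, 0.0, 1.0):.2f}%")

# Olivine example: mu = 1.0e11, sigma = 1.0e10, bounds 9.8e10 and 1.1e11.
p_olivine = prob_between(9.8e10, 1.1e11, 1.0e11, 1.0e10)
print(f"P(9.8e10 <= x <= 1.1e11) = {p_olivine:.4f}")
```

The normal scores here are z = −0.2 and z = 1.0, and the result comes out near 0.42.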
DO THIS NOW, either in Excel or Matlab.

The normal distribution is a good approximation to the binomial distribution for large n (in practice, when np and n(1 − p) are both > 5). The approximating normal distribution uses the mean and standard deviation of the binomial distribution:

$$\mu = np \qquad\text{and}\qquad \sigma = \sqrt{np(1-p)}$$

So that:

$$P_b(x) \approx \frac{1}{\sqrt{2\pi np(1-p)}}\,\exp\!\left[-\frac{(x-np)^2}{2np(1-p)}\right]$$

I had a difficult time getting this to work in Excel because the term −(x − np)^2 is evaluated as (−(x − np))^2.

[Figure: normal vs. binomial distribution for N = 20, p = 0.5, µ = np = 10, σ = √(np(1 − p)) = 2.236]

Poisson distribution

One way to think of a Poisson distribution is that it is like a normal distribution that gets pushed close to zero but cannot go below zero. For large means, the two are virtually the same. The Poisson distribution is a good approximation to the binomial distribution when the probability of a single event is small but n is large:

$$P(x) = \frac{(np)^x e^{-np}}{x!} = \frac{\lambda^x e^{-\lambda}}{x!}, \qquad x = 0, 1, 2, 3, \ldots, n$$

where λ = np is the rate of occurrence. This is used to evaluate the probability of rare events.

[Figure: Poisson distribution for λ = 1, 2, 4, 8, 12, 16, 20]

Note that the Poisson distribution approaches the normal distribution for large λ.

Example: The number of floods in a 50-year period on a particular river has been shown to follow a Poisson distribution with λ = 2.2. That is, the average number of floods in a 50-year period is 2.2. What is the probability of having at least 1 flood in the next 50 years? The probability of having NO floods (x = 0) is $e^{-2.2}$, or 0.11. The probability of having at least 1 flood is 1 − P(0) = 0.89.
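The comparison in the normal-vs-binomial figure and the flood arithmetic can be reproduced with the sketch below. It is a Python illustration under our own naming (`binomial_pmf`, `normal_approx`, `poisson_pmf` are hypothetical helpers, not course code). Note that Python, unlike Excel, applies `**` before unary minus, so the exponent behaves as the formula intends.

```python
import math


def binomial_pmf(x: int, n: int, p: float) -> float:
    """Exact binomial probability of x successes in n tries."""
    return math.comb(n, x) * p**x * (1 - p) ** (n - x)


def normal_approx(x: float, n: int, p: float) -> float:
    """Normal approximation to the binomial, with mean np and variance np(1 - p)."""
    var = n * p * (1 - p)
    # In Python, ** binds tighter than unary minus, so -(x - n*p) ** 2 would already
    # mean -((x - n*p)^2); the extra parentheses just make that explicit.
    return math.exp(-((x - n * p) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def poisson_pmf(x: int, lam: float) -> float:
    """Poisson probability of x events when the mean number of events is lam."""
    return lam**x * math.exp(-lam) / math.factorial(x)


# Normal vs. binomial for N = 20, p = 0.5 (np = n(1 - p) = 10 > 5).
n, p = 20, 0.5
print(f"sigma = sqrt(np(1-p)) = {math.sqrt(n * p * (1 - p)):.3f}")
for x in range(6, 15, 2):
    print(f"x = {x:2d}: binomial {binomial_pmf(x, n, p):.4f}, normal {normal_approx(x, n, p):.4f}")

# Flood example: Poisson with lambda = 2.2 floods per 50 years.
lam = 2.2
p_none = poisson_pmf(0, lam)
print(f"P(no floods) = {p_none:.3f}, P(at least one) = {1 - p_none:.3f}")
```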
The Exponential Distribution

$$P(x) = \lambda e^{-\lambda x}, \qquad x \ge 0$$

And:

$$P_c(x) = 1 - e^{-\lambda x}$$

```matlab
% exponential distribution
lambda=.5   % lambda = rate of occurrence
xx=[0:1:20];
px=lambda*exp(-1*xx*lambda);
plot(xx,px)
hold on
cum=1-exp(-1*xx*lambda);
plot(xx,cum)
```

[Figure: exponential distribution P(x) and cumulative distribution $P_c(x)$]

Example: The height of Pacific seamounts has approximately an exponential distribution with $P_c(h) = 1 - e^{-h/340}$, where h is in meters, which predicts the probability of a height less than h meters. What is the probability of a seamount with a height greater than 4 km?

$P_c(4000) = 1 - e^{-4000/340} \approx 0.999992$, so the probability of a seamount taller than 4 km is about 0.00001 (more precisely, about 8 × 10^-6). (Which I don't believe...)

Log-Normal Distribution

In some situations distributions are greatly skewed, as seen in grain size distributions and when errors are large and propagate as products rather than sums. In such cases, taking the log of the values may result in a normal distribution. The statistics of the normal distribution can then be obtained and exponentiated to obtain the actual values of uncertainty.
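Both the seamount arithmetic and the log-normal recipe (take logs, compute normal statistics, exponentiate) can be checked with a short Python sketch. The helper name `exponential_cdf` is illustrative, and the grain sizes are made-up numbers chosen only to show the mechanics; they are not measurements from the lecture.

```python
import math
import statistics


def exponential_cdf(x: float, lam: float) -> float:
    """Cumulative exponential distribution Pc(x) = 1 - exp(-lam * x), for x >= 0."""
    return 1.0 - math.exp(-lam * x)


# Seamount heights: Pc(h) = 1 - exp(-h / 340), h in meters, so lam = 1/340 per meter.
lam = 1.0 / 340.0
p_below = exponential_cdf(4000.0, lam)
# Tail probability computed directly, avoiding the cancellation in 1 - p_below.
p_above = math.exp(-lam * 4000.0)
print(f"Pc(4000) = {p_below:.6f}, P(h > 4000 m) = {p_above:.1e}")

# Log-normal sketch with HYPOTHETICAL grain sizes (mm), not data from the lecture.
sizes = [0.1, 0.2, 0.4, 0.8, 1.6]
logs = [math.log(s) for s in sizes]
mean_log = statistics.mean(logs)
std_log = statistics.stdev(logs)          # sample standard deviation of the logs
geometric_mean = math.exp(mean_log)       # back-transformed "typical" value
spread_factor = math.exp(std_log)         # multiplicative, not additive, uncertainty
print(f"geometric mean = {geometric_mean:.3f} mm")
print(f"1-sigma range: {geometric_mean / spread_factor:.3f} to {geometric_mean * spread_factor:.3f} mm")
```

Because the back-transformed spread is applied by dividing and multiplying, the resulting range is not symmetric about the geometric mean, which is the behavior expected for skewed, log-normal data.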