GPU Computing

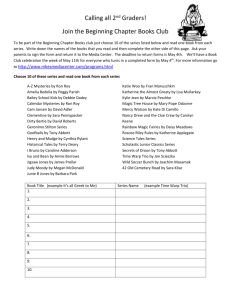

Outline

• GPU Computing

• GPGPU-Sim / Manycore Accelerators

• (Micro)Architecture Challenges:

– Branch Divergence (DWF, TBC)

– On-Chip Interconnect

What do these have in common?


Source: AMD Hotchips 19


GPU Computing

• Technology trends => want “simpler” cores (less power).

• GPUs represent an extreme in terms of computation per unit area.

• Current GPUs tend to work well for applications with regular parallelism (e.g., dense matrix multiply).

• Research Questions: Can we make GPUs better for a wider class of parallel applications? Can we make them even more efficient?

• Split problem between CPU and GPU

– GPU (most computation here)

– CPU (sequential code “accelerator”)

Heterogeneous Computing

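[Figure: execution timeline. The CPU spawns work onto the GPU, continues once the GPU reports done, and later spawns again; time runs left to right.]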

CUDA Thread Hierarchy

• Kernel = grid of blocks of warps of threads

• scalar threads

CUDA Example [Luebke]

Standard C Code

    void saxpy_serial(int n, float a, float *x, float *y)
    {
        for (int i = 0; i < n; ++i)
            y[i] = a*x[i] + y[i];
    }

    // Invoke serial SAXPY kernel
    main() { … saxpy_serial(n, 2.0, x, y); }

CUDA Example [Luebke]

CUDA code

    __global__ void saxpy_parallel(int n, float a, float *x, float *y)
    {
        int i = blockIdx.x*blockDim.x + threadIdx.x;
        if (i < n)
            y[i] = a*x[i] + y[i];
    }

    main() {
        // omitted: allocate and initialize memory
        // Invoke parallel SAXPY kernel with 256 threads/block
        int nblocks = (n + 255) / 256;
        saxpy_parallel<<<nblocks, 256>>>(n, 2.0, x, y);
        // omitted: transfer results from GPU to CPU
    }
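The index arithmetic in the launch above can be checked on the CPU. The following is a minimal sketch in plain C, not CUDA: two nested loops stand in for blockIdx.x and threadIdx.x, which a GPU would run in parallel. The names blocks_needed and saxpy_emulated are hypothetical and exist only for this illustration.

```c
#include <assert.h>

/* Blocks needed so that nblocks * block_dim >= n (ceiling division),
   the same arithmetic as (n + 255) / 256 in the slide. */
int blocks_needed(int n, int block_dim)
{
    assert(n >= 0 && block_dim > 0);
    return (n + block_dim - 1) / block_dim;
}

/* CPU emulation of the saxpy_parallel launch. The outer loop plays the
   role of blockIdx.x and the inner loop the role of threadIdx.x. */
void saxpy_emulated(int n, float a, const float *x, float *y, int block_dim)
{
    int nblocks = blocks_needed(n, block_dim);
    for (int block = 0; block < nblocks; ++block) {
        for (int thread = 0; thread < block_dim; ++thread) {
            int i = block * block_dim + thread;
            if (i < n)              /* guard: the last block may be partly empty */
                y[i] = a * x[i] + y[i];
        }
    }
}
```

With n = 1000 and 256 threads per block, blocks_needed returns 4, so 1024 threads are launched and the `i < n` guard masks off the last 24.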

GPU Microarchitecture Overview

(10,000’)

[Figure: GPU block diagram. Several shader cores connect through an interconnection network to memory controllers, each attached to off-chip GDDR DRAM.]

Single Instruction, Multiple Thread (SIMT)

All threads in a kernel grid run the same “code”. A given block in the kernel grid runs on a single “shader core”.

A warp in a block is a set of threads grouped to execute in SIMD lockstep.

Stack hardware and/or predication can support different branch outcomes per thread in a warp.

[Figure: a thread warp is a group of scalar threads (W, X, Y, Z) sharing a common PC. Thread warps 3, 8, and 7 feed the SIMD pipeline.]

“Shader Core” Microarchitecture

Heavily multithreaded: 32 “warps” each representing 32 scalar threads

Designed to tolerate long latency operations rather than avoid them.


“GPGPU-Sim” (ISPASS 2009)

• GPGPU simulator developed by my group at UBC

• Goal: platform for architecture research on manycore accelerators running massively parallel applications.

• Support CUDA’s “virtual instruction set” (PTX).

• Provide a timing model with “good enough” accuracy for architecture research.

GPGPU-Sim Usage

Input: Unmodified CUDA or OpenCL application

Output: Clock cycles required to execute + statistics that can be used to determine where cycles were lost due to “microarchitecture level” inefficiency.

Accuracy vs. hardware (GPGPU-Sim 2.1.1b)

[Figure: HW - GPGPU-Sim Comparison. Scatter plot of GPGPU-Sim IPC (y axis, 0 to 250) against Quadro FX 5800 IPC (x axis, 0 to 250). Correlation ~0.90.]

(Architecture simulators give up accuracy to enable flexibility: they can explore more of the design space.)

GPGPU-Sim Visualizer (ISPASS 2010)


GPGPU-Sim w/ SASS (decuda) + uArch Tuning

(under development)

~0.976 correlation on subset of CUDA SDK that currently runs.

Currently adding in support for Fermi uArch.

Don’t ask when it will be available.

[Figure: HW - GPGPU-Sim Comparison. Scatter plot of GPGPU-Sim IPC (y axis, 0.00 to 250.00) against Quadro FX5800 IPC (x axis, 0.00 to 250.00). Plot label: Correlation ~0.95.]

First Problem: Control flow

Group scalar threads into warps

Branch divergence when threads inside warps want to follow different execution paths.

[Figure: a branch splits the threads of a warp between Path A and Path B.]

Current GPUs: Stack-Based Reconvergence

(Building upon Levinthal & Porter, SIGGRAPH’84)

Our version: Immediate postdominator reconvergence

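[Figure: reconvergence stack example for one warp of four threads (Thread 1 to 4, common PC). Basic blocks with active masks: A/1111, B/1111, C/1001, D/0110, E/1111, G/1111. Each stack entry holds Reconv. PC, Next PC, and Active Mask; TOS marks the top of stack as entries for C and D (reconverging at E) are pushed and popped. Execution order over time: A, B, C, D, E, G, A.]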
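The stack mechanism can be traced in software. The sketch below is a toy model in C, not GPGPU-Sim code: it hard-codes the path A, B, {C or D}, E, G from the example, treats E as the immediate postdominator of the branch in B, and uses a hypothetical parameter taken_mask to say which threads go to C. The type and function names are invented for this illustration.

```c
#include <assert.h>

enum { A, B, C, D, E, G, EXIT };   /* basic blocks of the example */

typedef struct {
    int rpc;         /* reconvergence PC: pop when pc reaches it */
    int pc;          /* next block to execute for this entry     */
    unsigned mask;   /* active mask, one bit per thread          */
} StackEntry;

/* Runs one 4-thread warp. Records each executed block and its active
   mask in trace_pc / trace_mask and returns the number of records. */
int run_warp(unsigned taken_mask, int *trace_pc, unsigned *trace_mask, int max)
{
    StackEntry stack[8];
    int top = 0, n = 0;
    assert(max > 0);
    stack[0] = (StackEntry){ EXIT, A, 0xF };

    while (top >= 0 && n < max) {
        StackEntry *tos = &stack[top];
        if (tos->pc == tos->rpc) { --top; continue; }   /* reconverged: pop */
        trace_pc[n] = tos->pc;
        trace_mask[n] = tos->mask;
        ++n;
        switch (tos->pc) {
        case A: tos->pc = B; break;
        case B: {
            unsigned to_c = tos->mask & taken_mask;
            unsigned to_d = tos->mask & ~taken_mask;
            if (to_c && to_d) {
                tos->pc = E;                                /* resume here after both paths */
                stack[++top] = (StackEntry){ E, D, to_d };
                stack[++top] = (StackEntry){ E, C, to_c };  /* top of stack runs first */
            } else {
                tos->pc = to_c ? C : D;                     /* no divergence */
            }
            break;
        }
        case C: case D: tos->pc = E; break;
        case E: tos->pc = G; break;
        case G: tos->pc = EXIT; break;
        }
    }
    return n;
}
```

Calling run_warp with taken_mask 0x9 (binary 1001) records A/1111, B/1111, C/1001, D/0110, E/1111, G/1111, the same order as the figure. With taken_mask 0xF nothing is pushed and D is never executed.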

Dynamic Warp Formation

(MICRO’07 / TACO’09)

Consider multiple warps

Opportunity?

[Figure: several warps reach the same branch and split between Path A and Path B.]

Dynamic Warp Formation

Idea: Form new warp at divergence

Enough threads branching to each path to create full new warps


Dynamic Warp Formation: Example

[Figure: control flow graph for two warps, x and y, with per-block active masks: A x/1111 y/1111; B x/1110 y/0011; C x/1000 y/0010; D x/0110 y/0001; E x/1110 y/0011; F x/0001 y/1100; G x/1111 y/1111. Legend: “Execution of Warp x at Basic Block A”, “Execution of Warp y at Basic Block A”, and “A new warp created from scalar threads of both Warp x and y executing at Basic Block D”.]

Baseline, over time: A A B B C C D D E E F F G G A A

Dynamic Warp Formation, over time: A A B B C D E E F G G A A
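The two timelines can be reproduced from the active masks alone. The sketch below, in C, assumes a simplified merge rule: two warps at the same basic block merge into one only if no lane is active in both. The array and function names are hypothetical, and real DWF hardware does more than this.

```c
#include <assert.h>

#define NUM_BLOCKS 7   /* basic blocks A, B, C, D, E, F, G */

/* Active masks per basic block, copied from the example figure
   (4 lanes; a mask such as 1110 is written here as 0xE). */
static const unsigned X_MASK[NUM_BLOCKS] = { 0xF, 0xE, 0x8, 0x6, 0xE, 0x1, 0xF };
static const unsigned Y_MASK[NUM_BLOCKS] = { 0xF, 0x3, 0x2, 0x1, 0x3, 0xC, 0xF };

/* Issue slots needed to run one basic block for both warps. */
int issue_slots(unsigned x, unsigned y, int dynamic)
{
    assert((x | y) <= 0xF);
    if (x == 0 || y == 0)
        return (x | y) != 0;      /* at most one warp has work */
    if (dynamic && (x & y) == 0)
        return 1;                 /* no lane used twice: one merged warp */
    return 2;
}

/* Total issue slots for one pass through blocks A to G. */
int total_issue_slots(int dynamic)
{
    int total = 0;
    for (int b = 0; b < NUM_BLOCKS; ++b)
        total += issue_slots(X_MASK[b], Y_MASK[b], dynamic);
    return total;
}
```

total_issue_slots(0) gives 14 and total_issue_slots(1) gives 11, matching the A to G portion of the baseline and DWF timelines. Blocks B and E still need two slots because both warps have the same lane active there.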

Dynamic Warp Formation: Implementation

[Figure: implementation diagram. Callouts mark the new logic and the modified register file.]

Thread Block Compaction (HPCA 2011)

DWF Pathologies: Starvation

• Majority Scheduling

– Best Performing in Prev. Work

– Prioritize largest group of threads with same PC

• Starvation, Poor Reconvergence

– LOWER SIMD Efficiency!

• Key obstacle: Variable Memory Latency

    B: if (K > 10)
    C:     K = 10;
       else
    D:     K = 0;
    E: B = C[tid.x] + K;

[Figure: timeline of dynamic warps for threads 1 to 12. The majority warps at C (1 2 7 8 and 5 -- 11 12) proceed to E, while the minority warps at D (9 6 3 4 and -- 10 -- --) wait and reach E 1000s of cycles later.]

DWF Pathologies: Extra Uncoalesced Accesses

• Coalesced Memory Access = Memory SIMD

– 1st Order CUDA Programmer Optimization

• Not preserved by DWF

    E: B = C[tid.x] + K;

[Figure: memory accesses at E for segments 0x100, 0x140, and 0x180. No DWF: warps (1 2 3 4), (5 6 7 8), (9 10 11 12) give #Acc = 3. With DWF: warps (1 2 7 12), (9 6 3 8), (5 10 11 4) give #Acc = 9. Callouts: “L1 Cache Absorbs Redundant Memory Traffic” and “L1$ Port Conflict”.]
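The access counts in the figure can be checked with a few lines of C. This is a sketch under one assumption taken from the drawing: each memory segment (0x100, 0x140, 0x180) holds the elements of C[] for four consecutive thread IDs. The names warp_accesses and total_accesses are hypothetical.

```c
#include <assert.h>

#define WARP_SIZE 4
#define THREADS_PER_SEGMENT 4   /* assumption read off the figure */

/* Distinct memory segments touched by one warp executing C[tid.x].
   tids are 1-based as in the figure; 0 marks an inactive lane. */
int warp_accesses(const int tids[WARP_SIZE])
{
    unsigned seen = 0;
    int count = 0;
    for (int lane = 0; lane < WARP_SIZE; ++lane) {
        if (tids[lane] <= 0)
            continue;
        int segment = (tids[lane] - 1) / THREADS_PER_SEGMENT;
        assert(segment < 32);
        if (!(seen & (1u << segment))) {
            seen |= 1u << segment;
            ++count;
        }
    }
    return count;
}

/* Sum of per-warp accesses for a whole arrangement of warps. */
int total_accesses(int warps[][WARP_SIZE], int nwarps)
{
    int total = 0;
    for (int w = 0; w < nwarps; ++w)
        total += warp_accesses(warps[w]);
    return total;
}
```

The static arrangement {1,2,3,4}, {5,6,7,8}, {9,10,11,12} yields 3 accesses. The DWF arrangement {1,2,7,12}, {9,6,3,8}, {5,10,11,4} yields 9, because every dynamic warp touches all three segments.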

DWF Pathologies: Implicit Warp Sync.

• Some CUDA applications depend on the lockstep execution of “static warps”

[Figure: Warp 0 = Thread 0 ... 31, Warp 1 = Thread 32 ... 63, Warp 2 = Thread 64 ... 95.]

– E.g. Task Queue in Ray Tracing

    int wid = tid.x / 32;
    if (tid.x % 32 == 0) {
        sharedTaskID[wid] = atomicAdd(g_TaskID, 32);
    }
    // Implicit warp sync: the other threads of the warp read
    // sharedTaskID[wid] with no explicit barrier.
    my_TaskID = sharedTaskID[wid] + tid.x % 32;
    ProcessTask(my_TaskID);

Observation

• Compute kernels usually contain divergent and non-divergent (coherent) code segments

• Coalesced memory access usually in coherent code segments

– DWF no benefit there

[Figure: execution alternates between coherent segments, run as static warps with coalesced LD/ST, and divergent segments, run as dynamic warps. Dynamic warps start at the divergence and are reset to static warps at the reconvergence point.]

Thread Block Compaction

• Block-wide Reconvergence Stack

– Regroup threads within a block

• Better Reconv. Stack: Likely Convergence

– Converge before Immediate Post-Dominator

• Robust

– Avg. 22% speedup on divergent CUDA apps

– No penalty on others

[Figure: the per-warp stacks of Warp 0, Warp 1, and Warp 2 (columns PC, RPC, AMask) are combined into one block-wide stack (columns PC, RPC, Active Mask) with entries E / -- / 1111 1111 1111, D / E / 0011 0100 1100, and C / E / 1100 1011 0011. The active masks of the D and C entries are compacted into dynamic warps, labeled T, U, X, and Y.]

Thread Block Compaction

• Run a thread block like a warp

– Whole block moves between coherent/divergent code

– Block-wide stack to track exec. paths reconvg.

• Barrier at branch/reconverge pt.

– All avail. threads arrive at branch

– Insensitive to warp scheduling

• Warp compaction

– Regrouping with all avail. threads

– If no divergence, gives static warp arrangement

Callouts on the slide (the DWF pathologies): Implicit Warp Sync., Starvation, Extra Uncoalesced Memory Access

Thread Block Compaction

Block-wide stack:

    PC   RPC   Active Threads
    E    --    1  2  3  4  5  6  7  8  9 10 11 12    (PC is B before the branch)
    D    E     -- --  3  4 --  6 -- --  9 10 -- --
    C    E     1  2 -- --  5 --  7  8 -- -- 11 12

    A: K = A[tid.x];
    B: if (K > 10)
    C:     K = 10;
       else
    D:     K = 0;
    E: B = C[tid.x] + K;

[Figure: two timelines for threads 1 to 12 in three warps of four. Per-warp stacks: B runs as (1 2 3 4), (5 6 7 8), (9 10 11 12); C as (1 2 -- --), (5 -- 7 8), (-- -- 11 12); D as (-- -- 3 4), (-- 6 -- --), (9 10 -- --); then E with all three warps. Thread block compaction: B runs as the same three warps; C as two compacted warps (1 2 7 8), (5 -- 11 12); D as two compacted warps (9 6 3 4), (-- 10 -- --); then E with the three static warps.]
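The compacted warps in this example keep every thread in its home lane (thread 7 stays in lane 2, thread 10 in lane 1). A lane-preserving compactor that reproduces them can be sketched in C as below. It illustrates the regrouping only and says nothing about how the hardware unit is built; the function name compact and the constants are hypothetical.

```c
#include <assert.h>

#define WARP_SIZE 4
#define MAX_WARPS 8

/* active[t] is nonzero if thread t+1 is active in the block-wide mask.
   Fills out[w][lane] with 1-based thread IDs (0 = idle lane) and returns
   the number of compacted warps. Each thread stays in lane t % WARP_SIZE. */
int compact(const int *active, int nthreads, int out[MAX_WARPS][WARP_SIZE])
{
    int filled[WARP_SIZE] = {0};   /* warps already used per lane */
    int nwarps = 0;

    assert(nthreads >= 0 && nthreads <= MAX_WARPS * WARP_SIZE);
    for (int w = 0; w < MAX_WARPS; ++w)
        for (int lane = 0; lane < WARP_SIZE; ++lane)
            out[w][lane] = 0;

    for (int t = 0; t < nthreads; ++t) {
        if (!active[t])
            continue;
        int lane = t % WARP_SIZE;
        int w = filled[lane]++;    /* next free warp slot in this lane */
        out[w][lane] = t + 1;
        if (w + 1 > nwarps)
            nwarps = w + 1;
    }
    return nwarps;
}
```

For the C entry (threads 1, 2, 5, 7, 8, 11, 12) this returns 2 warps, (1 2 7 8) and (5 -- 11 12). For the D entry it returns (9 6 3 4) and (-- 10 -- --). With all twelve threads active it returns the three static warps unchanged.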

Thread Block Compaction

• Barrier every basic block?! (Idle pipeline)

• Switch to warps from other thread blocks

– Multiple thread blocks run on a core

– Already done in most CUDA applications

[Figure: timeline for Block 0, Block 1, and Block 2. While one block waits at a branch for warp compaction, warps from the other blocks execute.]

Microarchitecture Modifications

• Per-Warp Stack → Block-Wide Stack

• I-Buffer + TIDs → Warp Buffer

– Store the dynamic warps

• New Unit: Thread Compactor

– Translate active mask to compact dynamic warps

[Figure: modified shader core pipeline: Fetch, I-Cache, Decode, Warp Buffer, Issue, ScoreBoard, RegFile, four ALUs, and MEM, plus the Block-Wide Stack and the Thread Compactor. Labeled signals: Branch Target PC, Valid[1:N], Pred., Active Mask, Done (WID).]

Likely-Convergence

• Immediate Post-Dominator: Conservative

– All paths from divergent branch must merge there

• Convergence can happen earlier

– When any two of the paths merge

       while (i < K) {
           X = data[i];
    A:     if ( X == 0 )
    B:         result[i] = Y;
    C:     else if ( X == 1 )
    D:         break;
    E:     i++;
       }
    F: return result[i];    // iPDom of A

[Figure: control flow graph over basic blocks A to F, with one edge marked “Rarely Taken”.]

• Extended Recvg. Stack to exploit this

– TBC: 30% speedup for Ray Tracing

Experimental Results

• 2 Benchmark Groups:

– COHE = Non-Divergent CUDA applications

– DIVG = Divergent CUDA applications

[Figure: bar chart of IPC relative to baseline (per-warp stack), axis 0.6 to 1.3, for DWF and TBC on the COHE and DIVG groups. Callouts: “Serious Slowdown from pathologies”, “No Penalty for COHE”, “22% Speedup on DIVG”.]

Next:

How should on-chip interconnect be designed?

(MICRO 2010)


Throughput-Effective Design

Two approaches:

• Reduce Area

• Increase performance

Look at properties of bulk-synchronous parallel (aka “CUDA”) workloads

[Figure: Throughput vs inverse of Area. X axis: Average Throughput [IPC], 190 to 290 (higher throughput to the right). Y axis: (Total Chip Area)^-1 [1/mm2], 0.0012 to 0.0021 (less area upward). Points: Baseline Mesh (Balanced Bisection Bandwidth), 2x BW, Checkerboard, Thr. Eff., and Ideal NoC, with a curve of constant IPC/mm2.]

Many-to-Few-to-Many Traffic Pattern

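[Figure: cores C0, C1, C2, ..., Cn send requests over the request network to memory controllers MC0, MC1, ..., MCm, which send replies over the reply network back to cores C0 ... Cn. Labeled quantities: core injection bandwidth, MC input bandwidth, MC output bandwidth.]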

Exploit Traffic Pattern Somehow?

• Keep bisection bandwidth same, reduce router area…

• Half-Router:

– Limited connectivity

• No turns allowed

– Might save ~50% of router crossbar area

[Figure: Half-Router Connectivity.]

Checkerboard Routing, Example

• Routing from a half-router to a half-router

– even # of columns away

– not in the same row

• Solution: needs two turns

– (1) route to an intermediate full-router using YX

– (2) then route to the destination using XY
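Why this case needs two turns can be seen from a parity argument. The sketch below, in C, rests on two assumptions that are not stated on the slide: half-routers sit at mesh coordinates where x + y is even, and a packet may turn only at a full-router. The enum, is_half_router, and choose_route are hypothetical names.

```c
#include <assert.h>

typedef enum { ROUTE_XY, ROUTE_YX, ROUTE_TWO_TURN, ROUTE_NONE } Route;

/* Assumption: checkerboard placement, half-routers where x + y is even. */
int is_half_router(int x, int y)
{
    return (x + y) % 2 == 0;
}

/* Picks a minimal route from (sx, sy) to (dx, dy) that turns only at
   full-routers. XY routing turns at (dx, sy), YX routing at (sx, dy). */
Route choose_route(int sx, int sy, int dx, int dy)
{
    assert(sx >= 0 && sy >= 0 && dx >= 0 && dy >= 0);
    if (sx == dx || sy == dy)
        return ROUTE_XY;                  /* straight line: no turn needed */
    if (!is_half_router(dx, sy))
        return ROUTE_XY;
    if (!is_half_router(sx, dy))
        return ROUTE_YX;
    if (is_half_router(sx, sy) && is_half_router(dx, dy))
        return ROUTE_TWO_TURN;            /* the case on the slide */
    return ROUTE_NONE;                    /* not covered by this sketch */
}
```

From half-router (0,0) to half-router (2,2), both candidate turn points, (2,0) and (0,2), are half-routers, so choose_route returns ROUTE_TWO_TURN. From (0,0) to (1,1) the XY turn point (1,0) is a full-router, so plain XY works.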

Multi-port routers at MCs

• Increase the injection ports of Memory Controller routers

– Only increase terminal BW of the few nodes

– No change in Bisection BW

– Minimal area overhead (~1% in NoC area)

– Speedups of up to 25%

• Reduces the bottleneck at the few nodes

[Figure: two router diagrams with North, South, East, and West ports. The memory controller router has an ejection port and more than one injection port from the memory controller.]

Results

Speedup

• HM speedup 13% across 24 benchmarks

• Total router area reduction of 14.2%

[Figure: per-benchmark speedup, axis -10% to 70%. Benchmarks: AES BIN HSP NE NDL HW LE HIS LU SLA BP CON NNC BLK MM LPS RAY DG SS TRA SR WP MUM LIB FWT SCP STC KM CFD BFS RD, followed by HM.]
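Assuming “HM” on this slide stands for harmonic mean, the summary figure is computed from the per-benchmark ratios as n divided by the sum of reciprocals. A minimal C sketch follows; the function name is hypothetical and the values in the note below are illustrative, not data from the talk.

```c
#include <assert.h>

/* Harmonic mean of n positive values: n / (1/v0 + 1/v1 + ...). */
double harmonic_mean(const double *values, int n)
{
    double sum_inv = 0.0;
    assert(n > 0);
    for (int i = 0; i < n; ++i) {
        assert(values[i] > 0.0);
        sum_inv += 1.0 / values[i];
    }
    return n / sum_inv;
}
```

For the illustrative pair {1.0, 2.0} the harmonic mean is 4/3, below the arithmetic mean of 1.5. A single large speedup therefore moves the harmonic mean less than it would move an arithmetic average.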

Next:

GPU Off-chip Memory Bandwidth Problem

(MICRO’09)


Background: DRAM

Row Access: Activate a row of DRAM bank and load into row buffer (slow)

Column Access: Read and write data in row buffer (fast)

Precharge: Write row buffer data back into row (slow)

[Figure: DRAM organization. The memory controller drives the row decoder and column decoder; a row of the memory array is loaded into the row buffer.]

Background: DRAM Row Access Locality

Definition: Number of accesses to a row between row switches

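[Figure: timeline for one bank. Four accesses to Row A (RA RA RA RA), then a “row switch” (Precharge Row A for tRP, Activate Row B for tRCD), then two accesses to Row B (RB RB), followed by the next precharge and activate. tRC is marked on the timeline.]

(GDDR uses multiple banks to hide latency)

tRC = row cycle time

tRP = row precharge time

tRCD = row activate time

Higher row access locality → higher achievable DRAM bandwidth → higher performance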
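The definition can be turned into a number for a given request stream. The C sketch below treats row access locality as an average, total accesses divided by the number of runs of consecutive same-row accesses. Taking the average is an assumption made for this illustration, and the function name is hypothetical.

```c
#include <assert.h>

/* rows[i] is the DRAM row of the i-th access to one bank, in service order.
   Returns accesses per row run; a new run starts at every row switch. */
double row_access_locality(const int *rows, int n)
{
    int runs = 0;
    assert(n >= 0);
    for (int i = 0; i < n; ++i)
        if (i == 0 || rows[i] != rows[i - 1])
            ++runs;
    return runs ? (double)n / runs : 0.0;
}
```

The stream from the timeline (four accesses to Row A, then two to Row B) gives 6 / 2 = 3. The same six accesses fully interleaved as A B A B A B give 1, the worst case, where every access pays for a precharge and an activate.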

Interconnect Arbitration Policy: Round-Robin

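[Figure: a router with N, W, E, and S ports forwards memory requests, each labeled with its DRAM row (Row A, Row B, Row C, Row X, Row Y), to Memory Controller 0 and Memory Controller 1. Under round-robin arbitration, requests to the same row that arrive together on one input are interleaved with requests to other rows on the way to the controllers.]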

The Trend: DRAM Access Locality in Many-Core

[Figure: DRAM access locality (y axis, from bad to good) against number of cores (x axis: 8, 16, 32, 64), with one series for pre-interconnect access locality (before the interconnect) and one for post-interconnect access locality (after the interconnect).]

Inside the interconnect, interleaving of memory request streams reduces the DRAM access locality seen by the memory controller.

Today’s Solution: Out-of-Order Scheduling

[Figure: request queue in front of the DRAM, from youngest to oldest: Row A, Row B, Row A, Row A, Row B, Row A. The opened row is A; requests to Row A are served out of order before the opened row switches to B.]

Queue size needs to increase as the number of cores increases

Requires fully-associative logic

Circuit issues:

o Cycle time

o Area

o Power
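The benefit of out-of-order scheduling on this queue can be counted directly. The C sketch below uses a first-ready, first-come-first-served rule as commonly described: serve the oldest request that hits the open row, otherwise the oldest request. It ignores banks and timing, and the function name and the 64-entry limit are choices made for this illustration.

```c
#include <assert.h>

#define MAX_QUEUE 64

/* rows[0] is the oldest request. Serves all n requests and returns the
   number of row switches. out_of_order = 0 gives plain FIFO order;
   out_of_order = 1 prefers requests that hit the currently open row. */
int count_row_switches(const int *rows, int n, int open_row, int out_of_order)
{
    int served[MAX_QUEUE] = {0};
    int switches = 0;
    assert(n >= 0 && n <= MAX_QUEUE);

    for (int k = 0; k < n; ++k) {
        int pick = -1;
        if (out_of_order)                       /* oldest row hit first */
            for (int i = 0; i < n && pick < 0; ++i)
                if (!served[i] && rows[i] == open_row)
                    pick = i;
        for (int i = 0; i < n && pick < 0; ++i) /* otherwise oldest overall */
            if (!served[i])
                pick = i;
        served[pick] = 1;
        if (rows[pick] != open_row) {
            open_row = rows[pick];
            ++switches;
        }
    }
    return switches;
}
```

For the queue in the figure (oldest first: A, B, A, A, B, A, with Row A open), in-order service switches rows 4 times, while the out-of-order rule serves all four Row A requests first and switches once. The search over every queue entry in each step is the software analogue of the fully-associative logic the slide mentions.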

Interconnect Arbitration Policy: HG

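[Figure: the same router and request streams as in the round-robin example, forwarding to Memory Controller 0 and Memory Controller 1 under the HG policy. Requests to the same row (for example Row B, Row B and Row A, Row A) now stay adjacent on the way to the controllers.]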
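The difference between the two arbitration policies can be modeled with a two-input arbiter. The C sketch below reads HG as a hold-grant rule in a deliberately simplified form: the input that won the previous cycle keeps the grant for as long as it has a request pending. That reading, the two-input setup, and the names arbitrate and count_row_runs are assumptions for this illustration, not a description of the evaluated hardware.

```c
#include <assert.h>

/* Merges two input queues of row IDs onto one output.
   hold_grant = 0: round-robin, alternate between inputs.
   hold_grant = 1: the last winner keeps the grant while it has requests.
   Writes the output order to out and returns its length. */
int arbitrate(const int *in0, int n0, const int *in1, int n1,
              int hold_grant, int *out)
{
    int i0 = 0, i1 = 0, n = 0;
    int last = 1;                    /* so that input 0 is served first */
    assert(n0 >= 0 && n1 >= 0);

    while (i0 < n0 || i1 < n1) {
        int grant = (hold_grant && n > 0) ? last : 1 - last;
        if (grant == 0 && i0 >= n0) grant = 1;   /* chosen input is empty */
        if (grant == 1 && i1 >= n1) grant = 0;
        out[n++] = (grant == 0) ? in0[i0++] : in1[i1++];
        last = grant;
    }
    return n;
}

/* Number of runs of consecutive same-row requests in a stream. */
int count_row_runs(const int *rows, int n)
{
    int runs = 0;
    for (int i = 0; i < n; ++i)
        if (i == 0 || rows[i] != rows[i - 1])
            ++runs;
    return runs;
}
```

With one input holding Row A, Row A and the other Row B, Row B, round-robin emits A B A B (4 row runs) and the hold-grant rule emits A A B B (2 row runs). The row locality of each input stream survives the interconnect, so a simpler in-order queue at the memory controller sees fewer row switches.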

Results – IPC Normalized to FR-FCFS

[Figure: bar chart of IPC normalized to FR-FCFS (0% to 100%) for FIFO, BFIFO, BFIFO+HG, BFIFO+HMHG4, and FR-FCFS on benchmarks fwt, lib, mum, neu, nn, ray, red, sp, and wp, plus HM.]

Crossbar network, 28 shader cores, 8 DRAM controllers, 8-entry DRAM queues:

BFIFO: 14% speedup over regular FIFO

BFIFO+HG: 18% speedup over BFIFO, within 91% of FR-FCFS

Thank you. Questions?

aamodt@ece.ubc.ca

http://www.gpgpu-sim.org