Introduction, Review of Linear Algebra

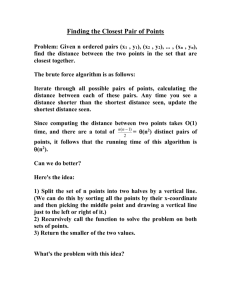

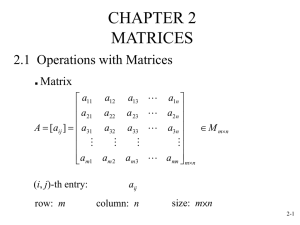

Stats 346.3 / Stats 848.3
Multivariate Data Analysis

Instructor: W.H. Laverty
Office: 235 McLean Hall
Phone: 966-6096
Lectures: MWF 12:30pm - 1:20pm, Biol 123

Evaluation:
• Assignments, Term tests - 40%
• Final Examination - 60%

Dates for midterm tests:
1. Friday, February 06
2. Friday, March 20

Each test and the Final Exam are open book. Students are allowed to take in notes, texts, formula sheets, and calculators (laptop computers).

Text: Stat 346 - Multivariate Statistical Methods, Donald Morrison. Not required; I will give a list of other useful texts that will be in the library.

Bibliography
1. Cooley, W.W., and Lohnes, P.R. (1962). Multivariate Procedures for the Behavioural Sciences, Wiley, New York.
2. Fienberg, S. (1980). Analysis of Cross-Classified Data, MIT Press, Cambridge, Mass.
3. Fingleton, B. (1984). Models for Category Counts, Cambridge University Press.
4. Johnson, R.A., and Wichern, D.W. Applied Multivariate Statistical Analysis, Prentice Hall.
5. Morrison, D.F. (1976). Multivariate Statistical Methods, McGraw-Hill, New York.
6. Seal, H. (1968). Multivariate Statistical Analysis for Biologists, Methuen, London.
7. Agresti, A. (1990). Categorical Data Analysis, Wiley, New York.
• The lectures will be given in PowerPoint
• They are now posted on the Stats 346 web page

Course Outline

• Introduction
• Review of Linear Algebra and Matrix Analysis (Chapter 2)
• Review of Linear Statistical Theory (Chapter 1)
• Multivariate Normal distribution (Chapter 3)
  • Multivariate Data plots
  • Correlation - sample estimates and tests
  • Canonical Correlation
• Mean Vectors and Covariance matrices (Chapter 4)
  • Single sample procedures
  • Two sample procedures
  • Profile Analysis
• Multivariate Analysis of Variance (MANOVA) (Chapter 5)
• Classification and Discrimination (Chapters 6)
  • Discriminant Analysis
  • Logistic Regression (if time permits)
  • Cluster Analysis
• The structure of correlation (Chapter 9)
  • Principal Components Analysis (PCA)
  • Factor Analysis
• Multivariate Multiple Regression (if time permits) (References: TBA)
• Discrete Multivariate Analysis (if time permits) (References: TBA)

Introduction

Multivariate Data

• We have collected data for each case in the sample or population on not just one variable but on several variables: X1, X2, …, Xp.
• This is likely the situation; very rarely do you collect data on a single variable.
• The variables may be
  1. Discrete (Categorical)
  2. Continuous (Numerical)
• The variables may be
  1. Dependent (Response variables)
  2. Independent (Predictor variables)

A chart illustrating Statistical Procedures

| Dependent variables | Independent: Categorical | Independent: Continuous | Independent: Continuous & Categorical |
|---|---|---|---|
| Categorical | Multiway frequency analysis (Log Linear Model) | Discriminant Analysis | Discriminant Analysis |
| Continuous | ANOVA (single dep. var.), MANOVA (mult. dep. var.) | Multiple Regression (single dep. variable), Multivariate Multiple Regression (multiple dep. variables) | ANACOVA (single dep. var.), MANACOVA (mult. dep. var.) |
| Continuous & Categorical | ?? | ?? | ?? |

Multivariate Techniques

Multivariate Techniques can be classified as follows:

1. Techniques that are direct analogues of univariate procedures.
• There are univariate techniques that are then generalized to the multivariate situation, e.g.
  • The two independent sample t test, generalized to Hotelling's T² test
  • ANOVA (Analysis of Variance), generalized to MANOVA (Multivariate Analysis of Variance)

2. Techniques that are purely multivariate procedures.

• Correlation, Partial correlation, Multiple correlation, Canonical Correlation
• Principal Component Analysis, Factor Analysis
  - These are techniques for studying complicated correlation structure amongst a collection of variables.

3. Techniques for which a univariate procedure could exist, but which become much more interesting in the multivariate setting.

• Cluster Analysis and Classification
  - Here we try to identify subpopulations from the data.
• Discriminant Analysis
  - In Discriminant Analysis, we attempt to use a collection of variables to identify the unknown population of which a case is a member.

An Example:

A survey was given to 132 students
• Male = 35
• Female = 97

They rated, on a Likert scale of 1 to 5, their agreement with each of 40 statements. All statements are related to the Meaning of Life.

Questions and Statements

1. How religious/spiritual would you say you are?
2. To have trustworthy and intimate friend(s).
3. To have a fulfilling career.
4. To be closely connected to family.
5. To share values/beliefs with others in your close circle or community.
6. To have and raise children.
7. To continually set short and long-term, achievable goals for yourself.
8. To feel satisfied with yourself (feel good about yourself).
9. To live up to the expectations of family and close friends.
10. To contribute to world peace.
11. To be involved in an intimate relationship with a significant person.
12. To give of yourself to others.
13. To be able to plan and take time for leisure.
14. To act on your own personal beliefs, despite outside pressure.
15. To be seen as physically attractive.
16. To feel confident in choosing new experiences to better yourself.
17. To care about the state of the physical/natural environment.
18. To take responsibility for your mistakes.
19. To make restitution for your mistakes, if necessary.
20. To be involved with social or political causes.
21. To keep up with media and popular-culture trends.
22. To adhere to religious practices based on tradition or rituals.
23. To use your own creativity in a way that you believe is worthwhile.
24. The meaning of life is found in understanding one's ultimate purpose for life.
25. The meaning of life can be discovered through intentionally living a life that glorifies a Spiritual being.
26. There is a reason for everything that happens.
27. Obtaining things in life that are material and tangible is only part of discovering the meaning of life.
28. People unearth the same basic values when attempting to find the meaning of life.
29. It is more important to cultivate character than to be consumed with outward rewards or awards.
30. Some aims or goals in life are more valuable than other goals.
31. The purpose of life lies in promoting the ends of truth, beauty, and goodness.
32. A meaningful life is one that contributes to the well-being of others.
33. The meaning of life is the same as a happy life.
34. The meaning of life is found in realizing my potential.
35. Life has purpose only in the everyday details of living.
36. There is no one, universal way of obtaining a meaningful life for all people.
37. People passionately desire different things. Obtaining these things contributes to making life more meaningful for them.
38. What contributes to a meaningful life varies according to each person (or group).
39. Lives can be meaningful even without the existence of a God or spiritual realm.
40. Our lives have no significance, but we must live as if they do.
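Cluster Analysis of n = 132 university students using responses from Meaning of Life questionnaire (40 questions)

[Figure: Fig. 1. Dendrogram showing clustering using Ward's method of Euclidean distances. Horizontal axis: Cases; vertical axis: Linkage Distance.]

Discriminant Analysis of n = 132 university students into the three identified populations

[Figure: Fig. 4. Cluster map. Horizontal axis: F1 (Discriminant function 1); vertical axis: F2 (Discriminant function 2). Group labels appearing in the plot: Religious, Semi-Religious, Humanistic, Optimistic, Pessimistic, Non-religious.]

A Review of Linear Algebra
With some Additions

Matrix Algebra

Definition
An n × m matrix, A, is a rectangular array of elements

$$A = (a_{ij}) = \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1m} \\ a_{21} & a_{22} & \cdots & a_{2m} \\ \vdots & \vdots & & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nm} \end{pmatrix}$$

n = # of rows, m = # of columns, dimensions = n × m.

Definition
A vector, v, of dimension n is an n × 1 matrix (a rectangular array of elements)

$$\mathbf{v} = \begin{pmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{pmatrix}$$

Vectors will be column vectors (they may also be row vectors).

A vector, v, of dimension n can be thought of as a point in n-dimensional space.

[Figure: the vector v = (v1, v2, v3)' drawn as a point in 3-dimensional space with axes v1, v2, v3.]

Matrix Operations

Addition
Let A = (a_ij) and B = (b_ij) denote two n × m matrices. Then the sum, A + B, is the matrix

$$A + B = (a_{ij} + b_{ij}) = \begin{pmatrix} a_{11}+b_{11} & a_{12}+b_{12} & \cdots & a_{1m}+b_{1m} \\ a_{21}+b_{21} & a_{22}+b_{22} & \cdots & a_{2m}+b_{2m} \\ \vdots & \vdots & & \vdots \\ a_{n1}+b_{n1} & a_{n2}+b_{n2} & \cdots & a_{nm}+b_{nm} \end{pmatrix}$$

The dimensions of A and B are required to be both n × m.

Scalar Multiplication
Let A = (a_ij) denote an n × m matrix and let c be any scalar. Then cA is the matrix

$$cA = (c\,a_{ij}) = \begin{pmatrix} ca_{11} & ca_{12} & \cdots & ca_{1m} \\ ca_{21} & ca_{22} & \cdots & ca_{2m} \\ \vdots & \vdots & & \vdots \\ ca_{n1} & ca_{n2} & \cdots & ca_{nm} \end{pmatrix}$$

Addition for vectors

$$\mathbf{v} + \mathbf{w} = \begin{pmatrix} v_1 + w_1 \\ v_2 + w_2 \\ v_3 + w_3 \end{pmatrix}, \qquad \mathbf{v} = \begin{pmatrix} v_1 \\ v_2 \\ v_3 \end{pmatrix}, \quad \mathbf{w} = \begin{pmatrix} w_1 \\ w_2 \\ w_3 \end{pmatrix}$$

[Figure: the vectors v, w and v + w in 3-dimensional space.]

Scalar Multiplication for vectors

$$c\mathbf{v} = \begin{pmatrix} cv_1 \\ cv_2 \\ cv_3 \end{pmatrix}$$

[Figure: the vectors v and cv in 3-dimensional space.]

Matrix multiplication
Let A = (a_ij) denote an n × m matrix and B = (b_jl) denote an m × k matrix. Then the n × k matrix C = (c_il), where

$$c_{il} = \sum_{j=1}^{m} a_{ij} b_{jl},$$

is called the product of A and B and is denoted by A∙B.

In the case that A = (a_ij) is an n × m matrix and B = v = (v_j) is an m × 1 vector, w = A∙v = (w_i), where

$$w_i = \sum_{j=1}^{m} a_{ij} v_j,$$

is an n × 1 vector.

[Figure: the vector v and its image w = Av in 3-dimensional space.]

Definition
An n × n identity matrix, I, is the square matrix

$$I = I_n = \begin{pmatrix} 1 & 0 & \cdots & 0 \\ 0 & 1 & \cdots & 0 \\ \vdots & \vdots & & \vdots \\ 0 & 0 & \cdots & 1 \end{pmatrix}$$

Note:
1. AI = A
2. IA = A

Definition (The inverse of an n × n matrix)
Let A denote the n × n matrix

$$A = (a_{ij}) = \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nn} \end{pmatrix}$$

Let B denote an n × n matrix such that AB = BA = I. If the matrix B exists then A is called invertible. Also B is called the inverse of A and is denoted by A⁻¹.

The Woodbury Theorem

$$(A + BCD)^{-1} = A^{-1} - A^{-1}B\left(C^{-1} + DA^{-1}B\right)^{-1}DA^{-1}$$

where the inverses $A^{-1}$, $C^{-1}$ and $\left(C^{-1} + DA^{-1}B\right)^{-1}$ exist.

Proof:
Let

$$H = A^{-1} - A^{-1}B\left(C^{-1} + DA^{-1}B\right)^{-1}DA^{-1}$$

Then all we need to show is that H(A + BCD) = (A + BCD)H = I.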
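Before working through the algebra, the statement of the Woodbury theorem can be sanity-checked on a small numerical case. The sketch below is only an illustration under stated assumptions: the matrices A, B, C, D are arbitrary values chosen here (they do not come from the notes), and the helper functions `matmul`, `add`, `subtract` and `inverse` are hypothetical names written for this example using only the Python standard library. Exact rational arithmetic is used so that both sides can be compared with `==`.

```python
from fractions import Fraction


def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def add(X, Y):
    """Element-wise sum X + Y."""
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def subtract(X, Y):
    """Element-wise difference X - Y."""
    return [[x - y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def inverse(M):
    """Gauss-Jordan inverse in exact arithmetic (M assumed nonsingular)."""
    n = len(M)
    # Augment M with the identity matrix: [M | I]
    aug = [[Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(M)]
    for col in range(n):
        # Find a row with a nonzero pivot and swap it into place
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        # Eliminate the pivot column from every other row
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


# Illustrative matrices (assumed values, not taken from the notes)
A = [[2, 0, 0], [0, 3, 0], [0, 0, 4]]   # 3 x 3
B = [[1, 0], [0, 1], [1, 1]]            # 3 x 2
C = [[1, 0], [0, 2]]                    # 2 x 2
D = [[1, 2, 0], [0, 1, 1]]              # 2 x 3

A_inv = inverse(A)
M = add(A, matmul(matmul(B, C), D))     # A + BCD

# Left-hand side: direct inverse of A + BCD
lhs = inverse(M)

# Right-hand side: A^-1 - A^-1 B (C^-1 + D A^-1 B)^-1 D A^-1
middle = inverse(add(inverse(C), matmul(matmul(D, A_inv), B)))
rhs = subtract(A_inv, matmul(matmul(matmul(matmul(A_inv, B), middle), D), A_inv))

woodbury_holds = lhs == rhs
print(woodbury_holds)
```

A check like this confirms the identity for one particular choice of matrices; it does not replace the proof. What it does illustrate is the practical point of the theorem: the right-hand side only needs the inverse of the small 2 × 2 matrix C⁻¹ + DA⁻¹B once A⁻¹ is known.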
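$$\begin{aligned}
H(A + BCD) &= \left[A^{-1} - A^{-1}B\left(C^{-1} + DA^{-1}B\right)^{-1}DA^{-1}\right](A + BCD) \\
&= A^{-1}A - A^{-1}B\left(C^{-1} + DA^{-1}B\right)^{-1}DA^{-1}A + A^{-1}BCD - A^{-1}B\left(C^{-1} + DA^{-1}B\right)^{-1}DA^{-1}BCD \\
&= I - A^{-1}B\left(C^{-1} + DA^{-1}B\right)^{-1}D + A^{-1}BCD - A^{-1}B\left(C^{-1} + DA^{-1}B\right)^{-1}DA^{-1}BCD \\
&= I + A^{-1}BCD - A^{-1}B\left(C^{-1} + DA^{-1}B\right)^{-1}\left[I + DA^{-1}BC\right]D \\
&= I + A^{-1}BCD - A^{-1}B\left(C^{-1} + DA^{-1}B\right)^{-1}\left[C^{-1} + DA^{-1}B\right]CD \\
&= I + A^{-1}BCD - A^{-1}BCD = I
\end{aligned}$$

Since H and A + BCD are square, H(A + BCD) = I also gives (A + BCD)H = I.

The Woodbury theorem can be used to find the inverse of some pattern matrices:

Example: Find the inverse of the n × n matrix

$$\begin{pmatrix} b & a & \cdots & a \\ a & b & \cdots & a \\ \vdots & \vdots & & \vdots \\ a & a & \cdots & b \end{pmatrix} = (b - a)I + a\,\mathbf{1}\mathbf{1}' = A + BCD$$

where $\mathbf{1}$ is the n × 1 vector of ones and

$$A = (b - a)I, \quad B = \mathbf{1}, \quad C = a \ (1 \times 1), \quad D = \mathbf{1}'$$

hence

$$A^{-1} = \frac{1}{b-a}I, \qquad C^{-1} = \frac{1}{a}$$

and

$$C^{-1} + DA^{-1}B = \frac{1}{a} + \frac{1}{b-a}\mathbf{1}'\mathbf{1} = \frac{1}{a} + \frac{n}{b-a} = \frac{b - a + an}{a(b-a)} = \frac{b + a(n-1)}{a(b-a)}$$

Thus

$$\left(C^{-1} + DA^{-1}B\right)^{-1} = \frac{a(b-a)}{b + a(n-1)}$$

Now using the Woodbury theorem

$$\begin{aligned}
(A + BCD)^{-1} &= A^{-1} - A^{-1}B\left(C^{-1} + DA^{-1}B\right)^{-1}DA^{-1} \\
&= \frac{1}{b-a}I - \frac{1}{b-a}\mathbf{1}\,\frac{a(b-a)}{b + a(n-1)}\,\mathbf{1}'\frac{1}{b-a} \\
&= \frac{1}{b-a}I - \frac{a}{(b-a)\left(b + a(n-1)\right)}\mathbf{1}\mathbf{1}'
\end{aligned}$$

Thus

$$\begin{pmatrix} b & a & \cdots & a \\ a & b & \cdots & a \\ \vdots & \vdots & & \vdots \\ a & a & \cdots & b \end{pmatrix}^{-1} = \begin{pmatrix} c & d & \cdots & d \\ d & c & \cdots & d \\ \vdots & \vdots & & \vdots \\ d & d & \cdots & c \end{pmatrix}$$

where

$$d = -\frac{a}{(b-a)\left(b + a(n-1)\right)}$$

and

$$c = \frac{1}{b-a} - \frac{a}{(b-a)\left(b + a(n-1)\right)} = \frac{b + a(n-2)}{(b-a)\left(b + a(n-1)\right)}$$

Note: for n = 2

$$d = -\frac{a}{(b-a)(b+a)} = -\frac{a}{b^2 - a^2} \quad \text{and} \quad c = \frac{b}{(b-a)(b+a)} = \frac{b}{b^2 - a^2}$$

Thus

$$\begin{pmatrix} b & a \\ a & b \end{pmatrix}^{-1} = \frac{1}{b^2 - a^2}\begin{pmatrix} b & -a \\ -a & b \end{pmatrix}$$

Also

$$\begin{pmatrix} b & a & \cdots & a \\ a & b & \cdots & a \\ \vdots & \vdots & & \vdots \\ a & a & \cdots & b \end{pmatrix}\begin{pmatrix} c & d & \cdots & d \\ d & c & \cdots & d \\ \vdots & \vdots & & \vdots \\ d & d & \cdots & c \end{pmatrix}$$

has diagonal elements $bc + (n-1)ad$ and off-diagonal elements $bd + ac + (n-2)ad$.

Now, with c and d as above,

$$bc + (n-1)ad = \frac{b\left(b + a(n-2)\right) - (n-1)a^2}{(b-a)\left(b + a(n-1)\right)} = \frac{b^2 + ab(n-2) - (n-1)a^2}{b^2 + ab(n-2) - (n-1)a^2} = 1$$

and

$$bd + ac + (n-2)ad = \frac{-a\left(b + (n-2)a\right) + a\left(b + a(n-2)\right)}{(b-a)\left(b + a(n-1)\right)} = 0$$

This verifies that we have calculated the inverse.

Block Matrices

Let the n × m matrix

$$A = \begin{pmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{pmatrix}$$

be partitioned into sub-matrices A11, A12, A21, A22, where the row blocks have q and n − q rows and the column blocks have p and m − p columns.

Similarly partition the m × k matrix

$$B = \begin{pmatrix} B_{11} & B_{12} \\ B_{21} & B_{22} \end{pmatrix}$$

where the row blocks have p and m − p rows and the column blocks have l and k − l columns.

Product of Blocked Matrices

Then

$$A \cdot B = \begin{pmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{pmatrix}\begin{pmatrix} B_{11} & B_{12} \\ B_{21} & B_{22} \end{pmatrix} = \begin{pmatrix} A_{11}B_{11} + A_{12}B_{21} & A_{11}B_{12} + A_{12}B_{22} \\ A_{21}B_{11} + A_{22}B_{21} & A_{21}B_{12} + A_{22}B_{22} \end{pmatrix}$$

The Inverse of Blocked Matrices

Let the n × n matrix

$$A = \begin{pmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{pmatrix}$$

be partitioned into sub-matrices A11, A12, A21, A22, where the row blocks and the column blocks both have sizes p and n − p.

Similarly partition the n × n matrix

$$B = \begin{pmatrix} B_{11} & B_{12} \\ B_{21} & B_{22} \end{pmatrix}$$

Suppose that B = A⁻¹.

Then

$$A \cdot B = \begin{pmatrix} A_{11}B_{11} + A_{12}B_{21} & A_{11}B_{12} + A_{12}B_{22} \\ A_{21}B_{11} + A_{22}B_{21} & A_{21}B_{12} + A_{22}B_{22} \end{pmatrix} = \begin{pmatrix} I_p & 0 \\ 0 & I_{n-p} \end{pmatrix}$$

Hence

$$\begin{aligned}
A_{11}B_{11} + A_{12}B_{21} &= I & (1) \\
A_{11}B_{12} + A_{12}B_{22} &= 0 & (2) \\
A_{21}B_{11} + A_{22}B_{21} &= 0 & (3) \\
A_{21}B_{12} + A_{22}B_{22} &= I & (4)
\end{aligned}$$

From (1)

$$A_{11} + A_{12}B_{21}B_{11}^{-1} = B_{11}^{-1}$$

From (3)

$$A_{21} + A_{22}B_{21}B_{11}^{-1} = 0 \quad \text{or} \quad B_{21}B_{11}^{-1} = -A_{22}^{-1}A_{21}$$

Hence

$$A_{11} - A_{12}A_{22}^{-1}A_{21} = B_{11}^{-1}$$

or

$$B_{11} = \left(A_{11} - A_{12}A_{22}^{-1}A_{21}\right)^{-1} = A_{11}^{-1} + A_{11}^{-1}A_{12}\left(A_{22} - A_{21}A_{11}^{-1}A_{12}\right)^{-1}A_{21}A_{11}^{-1}$$

using the Woodbury Theorem.

Similarly

$$B_{22} = \left(A_{22} - A_{21}A_{11}^{-1}A_{12}\right)^{-1} = A_{22}^{-1} + A_{22}^{-1}A_{21}\left(A_{11} - A_{12}A_{22}^{-1}A_{21}\right)^{-1}A_{12}A_{22}^{-1}$$

From (3), $A_{21}B_{11} + A_{22}B_{21} = 0$, so

$$A_{22}^{-1}A_{21}B_{11} + B_{21} = 0$$

and

$$B_{21} = -A_{22}^{-1}A_{21}B_{11} = -A_{22}^{-1}A_{21}\left(A_{11} - A_{12}A_{22}^{-1}A_{21}\right)^{-1}$$

similarly

$$B_{12} = -A_{11}^{-1}A_{12}B_{22} = -A_{11}^{-1}A_{12}\left(A_{22} - A_{21}A_{11}^{-1}A_{12}\right)^{-1}$$

Summarizing

Let

$$A = \begin{pmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{pmatrix} \quad \text{and suppose that} \quad A^{-1} = B = \begin{pmatrix} B_{11} & B_{12} \\ B_{21} & B_{22} \end{pmatrix}$$

then

$$\begin{aligned}
B_{11} &= \left(A_{11} - A_{12}A_{22}^{-1}A_{21}\right)^{-1} = A_{11}^{-1} + A_{11}^{-1}A_{12}\left(A_{22} - A_{21}A_{11}^{-1}A_{12}\right)^{-1}A_{21}A_{11}^{-1} \\
B_{22} &= \left(A_{22} - A_{21}A_{11}^{-1}A_{12}\right)^{-1} = A_{22}^{-1} + A_{22}^{-1}A_{21}\left(A_{11} - A_{12}A_{22}^{-1}A_{21}\right)^{-1}A_{12}A_{22}^{-1} \\
B_{12} &= -A_{11}^{-1}A_{12}B_{22} = -A_{11}^{-1}A_{12}\left(A_{22} - A_{21}A_{11}^{-1}A_{12}\right)^{-1} \\
B_{21} &= -A_{22}^{-1}A_{21}B_{11} = -A_{22}^{-1}A_{21}\left(A_{11} - A_{12}A_{22}^{-1}A_{21}\right)^{-1}
\end{aligned}$$

Example

Let

$$A = \begin{pmatrix} aI & bI \\ cI & dI \end{pmatrix}$$

where each block is p × p. Find

$$A^{-1} = B = \begin{pmatrix} B_{11} & B_{12} \\ B_{21} & B_{22} \end{pmatrix}$$

Here $A_{11} = aI$, $A_{12} = bI$, $A_{21} = cI$, $A_{22} = dI$, so

$$\begin{aligned}
B_{11} &= \left(aI - bI\,\tfrac{1}{d}I\,cI\right)^{-1} = \left(a - \tfrac{bc}{d}\right)^{-1}I = \frac{d}{ad - bc}I \\
B_{22} &= \left(dI - cI\,\tfrac{1}{a}I\,bI\right)^{-1} = \left(d - \tfrac{bc}{a}\right)^{-1}I = \frac{a}{ad - bc}I \\
B_{12} &= -A_{11}^{-1}A_{12}B_{22} = -\tfrac{1}{a}I\,(bI)\,\frac{a}{ad - bc}I = -\frac{b}{ad - bc}I \\
B_{21} &= -A_{22}^{-1}A_{21}B_{11} = -\tfrac{1}{d}I\,(cI)\,\frac{d}{ad - bc}I = -\frac{c}{ad - bc}I
\end{aligned}$$

hence

$$A^{-1} = \begin{pmatrix} \dfrac{d}{ad - bc}I & -\dfrac{b}{ad - bc}I \\ -\dfrac{c}{ad - bc}I & \dfrac{a}{ad - bc}I \end{pmatrix}$$

The transpose of a matrix

Consider the n × m matrix, A,

$$A = (a_{ij}) = \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1m} \\ a_{21} & a_{22} & \cdots & a_{2m} \\ \vdots & \vdots & & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nm} \end{pmatrix}$$

then the m × n matrix, $A'$ (also denoted by $A^T$),

$$A' = (a_{ji}) = \begin{pmatrix} a_{11} & a_{21} & \cdots & a_{n1} \\ a_{12} & a_{22} & \cdots & a_{n2} \\ \vdots & \vdots & & \vdots \\ a_{1m} & a_{2m} & \cdots & a_{nm} \end{pmatrix}$$

is called the transpose of A.

Symmetric Matrices

• An n × n matrix, A, is said to be symmetric if $A' = A$.

Note:

$$(AB)' = B'A', \qquad (AB)^{-1} = B^{-1}A^{-1}, \qquad \left(A'\right)^{-1} = \left(A^{-1}\right)'$$

The trace and the determinant of a square matrix

Let A denote the n × n matrix

$$A = (a_{ij}) = \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nn} \end{pmatrix}$$

Then

$$\operatorname{tr}(A) = \sum_{i=1}^{n} a_{ii}$$

also

$$|A| = \det\begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nn} \end{pmatrix} = \text{the determinant of } A = \sum_{j=1}^{n} a_{ij}A_{ij}$$

where $A_{ij}$ = the cofactor of $a_{ij}$ = $(-1)^{i+j}$ × the determinant of the matrix after deleting the i-th row and j-th column.

$$\det\begin{pmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{pmatrix} = a_{11}a_{22} - a_{12}a_{21}$$

Some properties

1. $|I| = 1$, $\operatorname{tr}(I) = n$
2. $|AB| = |A|\,|B|$, $\operatorname{tr}(AB) = \operatorname{tr}(BA)$
3. $\left|A^{-1}\right| = \dfrac{1}{|A|}$
4. $|A| = \begin{vmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{vmatrix} = |A_{22}|\,\left|A_{11} - A_{12}A_{22}^{-1}A_{21}\right| = |A_{11}|\,\left|A_{22} - A_{21}A_{11}^{-1}A_{12}\right|$, and $|A| = |A_{22}|\,|A_{11}|$ if $A_{12} = 0$ or $A_{21} = 0$.

Some additional Linear Algebra

Inner product of vectors

Let $\mathbf{x}$ and $\mathbf{y}$ denote two p × 1 vectors. Then

$$\mathbf{x}'\mathbf{y} = (x_1, \dots, x_p)\begin{pmatrix} y_1 \\ \vdots \\ y_p \end{pmatrix} = x_1y_1 + \dots + x_py_p = \sum_{i=1}^{p} x_iy_i$$

Note:

$$\sqrt{\mathbf{x}'\mathbf{x}} = \sqrt{x_1^2 + \dots + x_p^2} = \text{the length of } \mathbf{x}$$

Let $\mathbf{x}$ and $\mathbf{y}$ denote two p × 1 vectors. Then

$$\cos\theta = \frac{\mathbf{x}'\mathbf{y}}{\sqrt{\mathbf{x}'\mathbf{x}}\sqrt{\mathbf{y}'\mathbf{y}}}, \qquad \theta = \text{angle between } \mathbf{x} \text{ and } \mathbf{y}$$

[Figure: the vectors x and y with the angle θ between them.]

Note: $\cos\theta = 0$ if $\mathbf{x}'\mathbf{y} = 0$, and then $\theta = \pi/2$. Thus if $\mathbf{x}'\mathbf{y} = 0$, then $\mathbf{x}$ and $\mathbf{y}$ are orthogonal.

Special Types of Matrices

1. Orthogonal matrices
   - A matrix is orthogonal if P'P = PP' = I.
   - In this case P⁻¹ = P'.
   - Also the rows (columns) of P have length 1 and are orthogonal to each other.

Suppose P is an orthogonal matrix; then $P'P = PP' = I$. Let $\mathbf{x}$ and $\mathbf{y}$ denote p × 1 vectors, and let $\mathbf{u} = P\mathbf{x}$ and $\mathbf{v} = P\mathbf{y}$. Then

$$\mathbf{u}'\mathbf{v} = (P\mathbf{x})'(P\mathbf{y}) = \mathbf{x}'P'P\mathbf{y} = \mathbf{x}'\mathbf{y}$$

and

$$\mathbf{u}'\mathbf{u} = (P\mathbf{x})'(P\mathbf{x}) = \mathbf{x}'P'P\mathbf{x} = \mathbf{x}'\mathbf{x}$$

Orthogonal transformations preserve lengths and angles (rotations about the origin, reflections).

Example
The following matrix P is orthogonal

$$P = \begin{pmatrix} \tfrac{1}{\sqrt{3}} & \tfrac{1}{\sqrt{2}} & \tfrac{1}{\sqrt{6}} \\ \tfrac{1}{\sqrt{3}} & -\tfrac{1}{\sqrt{2}} & \tfrac{1}{\sqrt{6}} \\ \tfrac{1}{\sqrt{3}} & 0 & -\tfrac{2}{\sqrt{6}} \end{pmatrix}$$

Special Types of Matrices (continued)

2. Positive definite matrices
   - A symmetric matrix, A, is called positive definite if

$$\mathbf{x}'A\mathbf{x} = a_{11}x_1^2 + \dots + a_{nn}x_n^2 + 2a_{12}x_1x_2 + \dots + 2a_{n-1,n}x_{n-1}x_n > 0 \quad \text{for all } \mathbf{x} \ne \mathbf{0}$$

   - A symmetric matrix, A, is called positive semi definite if

$$\mathbf{x}'A\mathbf{x} \ge 0 \quad \text{for all } \mathbf{x} \ne \mathbf{0}$$

If the matrix A is positive definite then the set of points, $\mathbf{x}$, that satisfy $\mathbf{x}'A\mathbf{x} = c$, where $c > 0$, are on the surface of an n-dimensional ellipsoid centered at the origin, $\mathbf{0}$.

Theorem
The symmetric matrix A is positive definite if

$$|A_1| > 0,\ |A_2| > 0,\ |A_3| > 0,\ \dots,\ |A_n| > 0$$

where

$$A_1 = (a_{11}), \quad A_2 = \begin{pmatrix} a_{11} & a_{12} \\ a_{12} & a_{22} \end{pmatrix}, \quad A_3 = \begin{pmatrix} a_{11} & a_{12} & a_{13} \\ a_{12} & a_{22} & a_{23} \\ a_{13} & a_{23} & a_{33} \end{pmatrix}, \ \dots$$

and

$$A_n = A = \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{12} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{1n} & a_{2n} & \cdots & a_{nn} \end{pmatrix}$$

Special Types of Matrices (continued)

3. Idempotent matrices
   - A symmetric matrix, E, is called idempotent if EE = E.
   - Idempotent matrices project vectors onto a linear subspace: $E(E\mathbf{x}) = E\mathbf{x}$.

[Figure: a vector x and its projection Ex onto a linear subspace.]

Definition
Let A be an n × n matrix. Let $\mathbf{x}$ and $\lambda$ be such that

$$A\mathbf{x} = \lambda\mathbf{x} \quad \text{with } \mathbf{x} \ne \mathbf{0}$$

then $\lambda$ is called an eigenvalue of A and $\mathbf{x}$ is called an eigenvector of A.

Note:

$$(A - \lambda I)\mathbf{x} = \mathbf{0}$$

If $|A - \lambda I| \ne 0$ then $\mathbf{x} = (A - \lambda I)^{-1}\mathbf{0} = \mathbf{0}$; thus $|A - \lambda I| = 0$ is the condition for an eigenvalue.

$$|A - \lambda I| = \det\begin{pmatrix} a_{11} - \lambda & \cdots & a_{1n} \\ \vdots & & \vdots \\ a_{n1} & \cdots & a_{nn} - \lambda \end{pmatrix} = 0$$

is a polynomial of degree n in $\lambda$. Hence there are n possible eigenvalues $\lambda_1, \dots, \lambda_n$.

Theorem
If the matrix A is symmetric then the eigenvalues of A, $\lambda_1, \dots, \lambda_n$, are real.

Theorem
If the matrix A is positive definite then the eigenvalues of A, $\lambda_1, \dots, \lambda_n$, are positive.

Proof: A is positive definite if $\mathbf{x}'A\mathbf{x} > 0$ for $\mathbf{x} \ne \mathbf{0}$. Let $\lambda$ and $\mathbf{x}$ be an eigenvalue and corresponding eigenvector of A. Then $A\mathbf{x} = \lambda\mathbf{x}$ and $\mathbf{x}'A\mathbf{x} = \lambda\mathbf{x}'\mathbf{x}$, or

$$\lambda = \frac{\mathbf{x}'A\mathbf{x}}{\mathbf{x}'\mathbf{x}} > 0$$

Theorem
If the matrix A is symmetric and the eigenvalues of A are $\lambda_1, \dots, \lambda_n$, with corresponding eigenvectors $\mathbf{x}_1, \dots, \mathbf{x}_n$, i.e. $A\mathbf{x}_i = \lambda_i\mathbf{x}_i$, then if $\lambda_i \ne \lambda_j$ we have $\mathbf{x}_i'\mathbf{x}_j = 0$.

Proof: Note

$$\mathbf{x}_j'A\mathbf{x}_i = \lambda_i\mathbf{x}_j'\mathbf{x}_i \quad \text{and} \quad \mathbf{x}_i'A\mathbf{x}_j = \lambda_j\mathbf{x}_i'\mathbf{x}_j$$

Because A is symmetric the two left-hand sides are equal, so

$$0 = (\lambda_i - \lambda_j)\,\mathbf{x}_i'\mathbf{x}_j$$

hence $\mathbf{x}_i'\mathbf{x}_j = 0$.

Theorem
If the matrix A is symmetric with distinct eigenvalues, $\lambda_1, \dots, \lambda_n$, with corresponding eigenvectors $\mathbf{x}_1, \dots, \mathbf{x}_n$, and we assume $\mathbf{x}_i'\mathbf{x}_i = 1$, then

$$A = \lambda_1\mathbf{x}_1\mathbf{x}_1' + \dots + \lambda_n\mathbf{x}_n\mathbf{x}_n' = \left[\mathbf{x}_1, \dots, \mathbf{x}_n\right]\begin{pmatrix} \lambda_1 & & 0 \\ & \ddots & \\ 0 & & \lambda_n \end{pmatrix}\begin{pmatrix} \mathbf{x}_1' \\ \vdots \\ \mathbf{x}_n' \end{pmatrix} = PDP'$$

Proof: Note $\mathbf{x}_i'\mathbf{x}_i = 1$ and $\mathbf{x}_i'\mathbf{x}_j = 0$ if $i \ne j$. With $P = \left[\mathbf{x}_1, \dots, \mathbf{x}_n\right]$,

$$P'P = \begin{pmatrix} \mathbf{x}_1' \\ \vdots \\ \mathbf{x}_n' \end{pmatrix}\left[\mathbf{x}_1, \dots, \mathbf{x}_n\right] = \begin{pmatrix} \mathbf{x}_1'\mathbf{x}_1 & \cdots & \mathbf{x}_1'\mathbf{x}_n \\ \vdots & & \vdots \\ \mathbf{x}_n'\mathbf{x}_1 & \cdots & \mathbf{x}_n'\mathbf{x}_n \end{pmatrix} = \begin{pmatrix} 1 & & 0 \\ & \ddots & \\ 0 & & 1 \end{pmatrix} = I$$

P is called an orthogonal matrix; therefore $P' = P^{-1}$ and $PP' = P'P = I$. Thus

$$I = PP' = \left[\mathbf{x}_1, \dots, \mathbf{x}_n\right]\begin{pmatrix} \mathbf{x}_1' \\ \vdots \\ \mathbf{x}_n' \end{pmatrix} = \mathbf{x}_1\mathbf{x}_1' + \dots + \mathbf{x}_n\mathbf{x}_n'$$

Now $A\mathbf{x}_i = \lambda_i\mathbf{x}_i$ and $A\mathbf{x}_i\mathbf{x}_i' = \lambda_i\mathbf{x}_i\mathbf{x}_i'$, so

$$A\mathbf{x}_1\mathbf{x}_1' + \dots + A\mathbf{x}_n\mathbf{x}_n' = A\left(\mathbf{x}_1\mathbf{x}_1' + \dots + \mathbf{x}_n\mathbf{x}_n'\right) = \lambda_1\mathbf{x}_1\mathbf{x}_1' + \dots + \lambda_n\mathbf{x}_n\mathbf{x}_n'$$

and therefore

$$A = \lambda_1\mathbf{x}_1\mathbf{x}_1' + \dots + \lambda_n\mathbf{x}_n\mathbf{x}_n'$$

Comment
The previous result is also true if the eigenvalues are not distinct. Namely, if the matrix A is symmetric with eigenvalues $\lambda_1, \dots, \lambda_n$ and corresponding orthogonal eigenvectors of unit length $\mathbf{x}_1, \dots, \mathbf{x}_n$, then

$$A = \lambda_1\mathbf{x}_1\mathbf{x}_1' + \dots + \lambda_n\mathbf{x}_n\mathbf{x}_n' = PDP'$$

An algorithm for computing eigenvectors, eigenvalues of positive definite matrices

• Generally, to compute eigenvalues of a matrix we need to first solve the equation $|A - \lambda I| = 0$ (a polynomial of degree n in $\lambda$) for all values of $\lambda$.
• Then solve the equation $A\mathbf{x} = \lambda\mathbf{x}$ for the eigenvector $\mathbf{x}$.

Recall that if A is positive definite then

$$A = \lambda_1\mathbf{x}_1\mathbf{x}_1' + \dots + \lambda_n\mathbf{x}_n\mathbf{x}_n'$$

where $\mathbf{x}_1, \mathbf{x}_2, \dots, \mathbf{x}_n$ are the orthogonal eigenvectors of length 1, i.e. $\mathbf{x}_i'\mathbf{x}_i = 1$ and $\mathbf{x}_i'\mathbf{x}_j = 0$ if $i \ne j$, and $\lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_n > 0$ are the eigenvalues.

It can be shown that

$$A^2 = \lambda_1^2\mathbf{x}_1\mathbf{x}_1' + \lambda_2^2\mathbf{x}_2\mathbf{x}_2' + \dots + \lambda_n^2\mathbf{x}_n\mathbf{x}_n'$$

and that

$$A^m = \lambda_1^m\mathbf{x}_1\mathbf{x}_1' + \lambda_2^m\mathbf{x}_2\mathbf{x}_2' + \dots + \lambda_n^m\mathbf{x}_n\mathbf{x}_n' = \lambda_1^m\left[\mathbf{x}_1\mathbf{x}_1' + \left(\frac{\lambda_2}{\lambda_1}\right)^m\mathbf{x}_2\mathbf{x}_2' + \dots + \left(\frac{\lambda_n}{\lambda_1}\right)^m\mathbf{x}_n\mathbf{x}_n'\right] \approx \lambda_1^m\mathbf{x}_1\mathbf{x}_1'$$

Thus for large values of m (and provided $\lambda_1 > \lambda_2$)

$$A^m \approx (\text{a constant})\ \mathbf{x}_1\mathbf{x}_1'$$

The algorithm

1. Compute powers of A: $A^2, A^4, A^8, A^{16}, \dots$
2. Rescale (so that the largest element is 1, say).
3. Continue until there is no change. The resulting matrix will be $A^m = c\,\mathbf{x}_1\mathbf{x}_1'$.
4. Find $\mathbf{b}$ so that $A^m = \mathbf{b}\mathbf{b}' = c\,\mathbf{x}_1\mathbf{x}_1'$.
5. Find $\mathbf{x}_1 = \dfrac{1}{\sqrt{\mathbf{b}'\mathbf{b}}}\mathbf{b}$ and $\lambda_1$ using $A\mathbf{x}_1 = \lambda_1\mathbf{x}_1$.

To find $\mathbf{x}_2$ and $\lambda_2$, note:

$$A - \lambda_1\mathbf{x}_1\mathbf{x}_1' = \lambda_2\mathbf{x}_2\mathbf{x}_2' + \dots + \lambda_n\mathbf{x}_n\mathbf{x}_n'$$

6. Repeat steps 1 to 5 with the above matrix to find $\mathbf{x}_2$ and $\lambda_2$.
7. Continue to find $\mathbf{x}_3$ and $\lambda_3$, $\mathbf{x}_4$ and $\lambda_4$, …, $\mathbf{x}_n$ and $\lambda_n$.

Example

$$A = \begin{pmatrix} 5 & 4 & 2 \\ 4 & 10 & 1 \\ 2 & 1 & 2 \end{pmatrix}$$

| | eigenvalue | eigenvector | | |
|---|---|---|---|---|
| 1 | 12.54461 | 0.496986 | 0.849957 | 0.174869 |
| 2 | 3.589204 | 0.677344 | -0.50594 | 0.534074 |
| 3 | 0.866182 | 0.542412 | -0.14698 | -0.82716 |

Differentiation with respect to a vector, matrix

Differentiation with respect to a vector

Let $\mathbf{x}$ denote a p × 1 vector. Let $f(\mathbf{x})$ denote a function of the components of $\mathbf{x}$.

$$\frac{df(\mathbf{x})}{d\mathbf{x}} = \begin{pmatrix} \dfrac{\partial f(\mathbf{x})}{\partial x_1} \\ \vdots \\ \dfrac{\partial f(\mathbf{x})}{\partial x_p} \end{pmatrix}$$

Rules

1. Suppose $f(\mathbf{x}) = \mathbf{a}'\mathbf{x} = a_1x_1 + \dots + a_px_p$. Then

$$\frac{df(\mathbf{x})}{d\mathbf{x}} = \begin{pmatrix} \dfrac{\partial f(\mathbf{x})}{\partial x_1} \\ \vdots \\ \dfrac{\partial f(\mathbf{x})}{\partial x_p} \end{pmatrix} = \begin{pmatrix} a_1 \\ \vdots \\ a_p \end{pmatrix} = \mathbf{a}$$

2. Suppose A is symmetric and

$$f(\mathbf{x}) = \mathbf{x}'A\mathbf{x} = a_{11}x_1^2 + \dots + a_{pp}x_p^2 + 2a_{12}x_1x_2 + 2a_{13}x_1x_3 + \dots + 2a_{p-1,p}x_{p-1}x_p$$

Then

$$\frac{df(\mathbf{x})}{d\mathbf{x}} = 2A\mathbf{x}$$

i.e.

$$\frac{\partial f(\mathbf{x})}{\partial x_i} = 2a_{i1}x_1 + \dots + 2a_{ii}x_i + \dots + 2a_{ip}x_p$$

Example

1. Determine when $f(\mathbf{x}) = \mathbf{x}'A\mathbf{x} + \mathbf{b}'\mathbf{x} + c$ is a maximum or minimum.

Solution:

$$\frac{df(\mathbf{x})}{d\mathbf{x}} = 2A\mathbf{x} + \mathbf{b} = \mathbf{0} \quad \text{or} \quad \mathbf{x} = -\tfrac{1}{2}A^{-1}\mathbf{b}$$

2. Determine when $f(\mathbf{x}) = \mathbf{x}'A\mathbf{x}$ is a maximum if $\mathbf{x}'\mathbf{x} = 1$. Assume A is a positive definite matrix.

Solution: let

$$g(\mathbf{x}) = \mathbf{x}'A\mathbf{x} + \lambda\left(1 - \mathbf{x}'\mathbf{x}\right)$$

where $\lambda$ is the Lagrange multiplier. Then

$$\frac{dg(\mathbf{x})}{d\mathbf{x}} = 2A\mathbf{x} - 2\lambda\mathbf{x} = \mathbf{0} \quad \text{or} \quad A\mathbf{x} = \lambda\mathbf{x}$$

This shows that $\mathbf{x}$ is an eigenvector of A, and

$$f(\mathbf{x}) = \mathbf{x}'A\mathbf{x} = \lambda\mathbf{x}'\mathbf{x} = \lambda$$

Thus $\mathbf{x}$ is the eigenvector of A associated with the largest eigenvalue, $\lambda$.

Differentiation with respect to a matrix

Let X denote a q × p matrix.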
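Let f(X) denote a function of the components of X; then:

$$\frac{df(X)}{dX} = \left(\frac{\partial f(X)}{\partial x_{ij}}\right) = \begin{pmatrix} \dfrac{\partial f(X)}{\partial x_{11}} & \cdots & \dfrac{\partial f(X)}{\partial x_{1p}} \\ \vdots & & \vdots \\ \dfrac{\partial f(X)}{\partial x_{q1}} & \cdots & \dfrac{\partial f(X)}{\partial x_{qp}} \end{pmatrix}$$

Example
Let X denote a p × p matrix. Let f(X) = ln |X|; then

$$\frac{d\ln|X|}{dX} = \left(X^{-1}\right)'$$

Solution: expanding along row i,

$$|X| = x_{i1}X_{i1} + \dots + x_{ij}X_{ij} + \dots + x_{ip}X_{ip}$$

Note: the $X_{ij}$ are cofactors, and they do not involve $x_{ij}$. Hence

$$\frac{\partial \ln|X|}{\partial x_{ij}} = \frac{1}{|X|}X_{ij} = \text{the } (j,i)\text{th element of } X^{-1}$$

Example
Let X and A denote p × p matrices. Let f(X) = tr(AX); then

$$\frac{d\operatorname{tr}(AX)}{dX} = A'$$

Solution:

$$\operatorname{tr}(AX) = \sum_{i=1}^{p}\sum_{k=1}^{p} a_{ik}x_{ki}, \qquad \frac{\partial \operatorname{tr}(AX)}{\partial x_{ij}} = a_{ji}$$

Differentiation of a matrix of functions

Let U = (u_ij) denote a q × p matrix of functions of x; then:

$$\frac{dU}{dx} = \left(\frac{du_{ij}}{dx}\right) = \begin{pmatrix} \dfrac{du_{11}}{dx} & \cdots & \dfrac{du_{1p}}{dx} \\ \vdots & & \vdots \\ \dfrac{du_{q1}}{dx} & \cdots & \dfrac{du_{qp}}{dx} \end{pmatrix}$$

Rules:

1. $\dfrac{d(aU)}{dx} = a\dfrac{dU}{dx}$

2. $\dfrac{d(U + V)}{dx} = \dfrac{dU}{dx} + \dfrac{dV}{dx}$

3. $\dfrac{d(UV)}{dx} = \dfrac{dU}{dx}V + U\dfrac{dV}{dx}$

4. $\dfrac{d\left(U^{-1}\right)}{dx} = -U^{-1}\dfrac{dU}{dx}U^{-1}$

Proof: Since $U^{-1}U = I$ (p × p), rule 3 gives

$$\frac{dU^{-1}}{dx}U + U^{-1}\frac{dU}{dx} = 0$$

so

$$\frac{dU^{-1}}{dx}U = -U^{-1}\frac{dU}{dx} \quad \text{and} \quad \frac{dU^{-1}}{dx} = -U^{-1}\frac{dU}{dx}U^{-1}$$

5. $\dfrac{d\operatorname{tr}(AU)}{dx} = \operatorname{tr}\left(A\dfrac{dU}{dx}\right)$

Proof:

$$\operatorname{tr}(AU) = \sum_{i=1}^{p}\sum_{k=1}^{p} a_{ik}u_{ki}, \qquad \frac{d\operatorname{tr}(AU)}{dx} = \sum_{i=1}^{p}\sum_{k=1}^{p} a_{ik}\frac{du_{ki}}{dx} = \operatorname{tr}\left(A\frac{dU}{dx}\right)$$

6. $\dfrac{d\operatorname{tr}\left(AU^{-1}\right)}{dx} = -\operatorname{tr}\left(AU^{-1}\dfrac{dU}{dx}U^{-1}\right)$

7. $\dfrac{d\operatorname{tr}\left(AX^{-1}\right)}{dx_{ij}} = -\operatorname{tr}\left(E^{ij}X^{-1}AX^{-1}\right)$, where $E^{kl} = \left(e^{kl}_{ij}\right)$ with $e^{kl}_{ij} = 1$ if $i = k$, $j = l$, and 0 otherwise.

Proof:

$$\frac{d\operatorname{tr}\left(AX^{-1}\right)}{dx_{ij}} = -\operatorname{tr}\left(AX^{-1}\frac{dX}{dx_{ij}}X^{-1}\right) = -\operatorname{tr}\left(AX^{-1}E^{ij}X^{-1}\right) = -\operatorname{tr}\left(E^{ij}X^{-1}AX^{-1}\right)$$

8. $\dfrac{d\operatorname{tr}\left(AX^{-1}\right)}{dX} = -\left(X^{-1}AX^{-1}\right)'$

The Generalized Inverse of a matrix

Recall: B (denoted by A⁻¹) is called the inverse of A if AB = BA = I.

• A⁻¹ does not exist for all matrices A.
• A⁻¹ exists only if A is a square matrix and |A| ≠ 0.
• If A⁻¹ exists then the system of linear equations $A\mathbf{x} = \mathbf{b}$ has a unique solution $\mathbf{x} = A^{-1}\mathbf{b}$.

Definition
B (denoted by A⁻) is called the generalized inverse (Moore-Penrose inverse) of A if
1. ABA = A
2. BAB = B
3. (AB)' = AB
4. (BA)' = BA

Note: A⁻ is unique.

Proof: Let B1 and B2 both satisfy
1. ABiA = A
2. BiABi = Bi
3. (ABi)' = ABi
4. (BiA)' = BiA

Hence

B1 = B1AB1 = B1AB2AB1 = B1(AB2)'(AB1)' = B1B2'A'B1'A' = B1B2'A' = B1AB2 = B1AB2AB2 = (B1A)(B2A)B2 = (B1A)'(B2A)'B2 = A'B1'A'B2'B2 = A'B2'B2 = (B2A)'B2 = B2AB2 = B2

The general solution of a system of Equations

$$A\mathbf{x} = \mathbf{b}$$

The general solution is

$$\mathbf{x} = A^{-}\mathbf{b} + \left(I - A^{-}A\right)\mathbf{z}$$

where $\mathbf{z}$ is arbitrary.

Suppose a solution exists, $A\mathbf{x}_0 = \mathbf{b}$. Let $\mathbf{x} = A^{-}\mathbf{b} + \left(I - A^{-}A\right)\mathbf{z}$; then

$$A\mathbf{x} = AA^{-}\mathbf{b} + \left(A - AA^{-}A\right)\mathbf{z} = AA^{-}A\mathbf{x}_0 + \mathbf{0} = A\mathbf{x}_0 = \mathbf{b}$$

Calculation of the Moore-Penrose g-inverse

Let A be a p × q matrix of rank q < p; then

$$A^{-} = \left(A'A\right)^{-1}A'$$

Proof:

$$A^{-}A = \left(A'A\right)^{-1}A'A = I$$

thus $AA^{-}A = AI = A$ and $A^{-}AA^{-} = IA^{-} = A^{-}$. Also $A^{-}A = I$ is symmetric and $AA^{-} = A\left(A'A\right)^{-1}A'$ is symmetric.

Let B be a p × q matrix of rank p < q; then

$$B^{-} = B'\left(BB'\right)^{-1}$$

Proof:

$$BB^{-} = BB'\left(BB'\right)^{-1} = I$$

thus $BB^{-}B = IB = B$ and $B^{-}BB^{-} = B^{-}I = B^{-}$. Also $BB^{-} = I$ is symmetric and $B^{-}B = B'\left(BB'\right)^{-1}B$ is symmetric.

Let C be a p × q matrix of rank k < min(p, q); then C = AB where A is a p × k matrix of rank k and B is a k × q matrix of rank k, and

$$C^{-} = B'\left(BB'\right)^{-1}\left(A'A\right)^{-1}A'$$

Proof:

$$CC^{-} = AB\,B'\left(BB'\right)^{-1}\left(A'A\right)^{-1}A' = A\left(A'A\right)^{-1}A'$$

is symmetric, as well as

$$C^{-}C = B'\left(BB'\right)^{-1}\left(A'A\right)^{-1}A'AB = B'\left(BB'\right)^{-1}B$$

Also

$$CC^{-}C = A\left(A'A\right)^{-1}A'AB = AB = C$$

and

$$C^{-}CC^{-} = B'\left(BB'\right)^{-1}B\,B'\left(BB'\right)^{-1}\left(A'A\right)^{-1}A' = B'\left(BB'\right)^{-1}\left(A'A\right)^{-1}A' = C^{-}$$

References
1. Matrix Algebra Useful for Statistics, Shayle R. Searle
2. Mathematical Tools for Applied Multivariate Analysis, J. Douglas Carroll, Paul E. Green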
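As a numerical companion to the section on calculating the Moore-Penrose g-inverse, the sketch below checks the full-column-rank formula A⁻ = (A'A)⁻¹A' against the four defining conditions. It is an illustration only: the 3 × 2 matrix A is an assumed example (not from the notes), the name G stands for A⁻, and the helpers `matmul`, `transpose` and `inverse_2x2` are hypothetical functions written for this example with the Python standard library.

```python
from fractions import Fraction


def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def transpose(X):
    """Transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*X)]


def inverse_2x2(M):
    """Closed-form inverse of a nonsingular 2 x 2 matrix, in exact arithmetic."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]


# Assumed example: a 3 x 2 matrix of rank 2 (full column rank, q < p)
A = [[1, 0],
     [1, 1],
     [0, 1]]

At = transpose(A)
# G plays the role of A^- = (A'A)^-1 A'
G = matmul(inverse_2x2(matmul(At, A)), At)

AG = matmul(A, G)
GA = matmul(G, A)

# The four Moore-Penrose conditions from the definition
conditions = {
    "AGA = A": matmul(AG, A) == A,
    "GAG = G": matmul(GA, G) == G,
    "(AG)' = AG": transpose(AG) == AG,
    "(GA)' = GA": transpose(GA) == GA,
}

for name, holds in conditions.items():
    print(name, holds)
```

For this A, the product GA is the 2 × 2 identity, matching the step A⁻A = I in the proof, while AG is a symmetric 3 × 3 matrix that is not the identity.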