CIS-496 / IS Auditing

Chapter 4:

Systems Development &

Maintenance Activities

PARTICIPANTS

Systems professionals

End users

Stakeholders

ACCOUNTANTS & AUDITORS

Internal

IT

ACCOUNTANTS/AUDITORS

Why are accountants/auditors involved?

Experts in financial transaction processes

Quality of AIS is determined in SDLC

How are accountants involved?

Users (e.g., user views and accounting

techniques)

Members of SDLC development team

(e.g., Control Risk being minimized)

Auditors (e.g., auditable systems, auditing with

specific technique)

IS Development

In-house development staffs

Purchase commercial systems

General Rule: Never build if you can

acquire a system that will provide

80% of your needs.

Qstn: When would you want to build

your own system?

TRENDS IN COMMERCIAL

SOFTWARE

Trends in commercial software

Relatively low cost for general

purpose software

Industry-specific vendors

Businesses too small to have inhouse IS staff

Downsizing & DDP (cloud

computing)

TYPES OF COMMERCIAL

SYSTEMS

Turnkey systems (alpha and beta testing)

General accounting systems

Special-purpose systems

Purpose is to improve productivity

Enterprise systems (ERP)

Example banking

Office automation systems

Typically in modules

SAP, Peoplesoft, Baan, Oracle

Vendor-supported systems

Hybrids (custom system, commercial

software)

Office automation systems

Vendor-supported systems

Healthcare

COMMERCIAL SYSTEMS

Advantages

Implementation time

Cost

Reliability

Disadvantages

Independence

Customization needs

Maintenance

SYSTEMS DEVELOPMENT LIFE

CYCLE (SDLC)

Some companies satisfy their information needs by purchasing commercial software and developing other systems in-house.

Both approaches benefit from formal procedures that lend structure to the decision-making process.

The SDLC represents “best practices” for systems development.

SYSTEMS DEVELOPMENT LIFE

CYCLE (SDLC)

New systems

1. Systems planning

2. Systems analysis

3. Conceptual systems design

4. System evaluation and selection

5. Detailed design

6. System programming and testing

7. System implementation

8. System maintenance

SDLC -- Figure 4-1 [p.141]

SYSTEMS DEVELOPMENT LIFE

CYCLE (SDLC)

SYSTEMS PLANNING– PHASE I

PURPOSE:

To link individual systems projects to

the strategic objectives of the firm.

Link individual projects to strategic objectives of the firm Figure 4-2 [p.142]

Who does it?

Steering committee

CEO, CFO, CIO, senior mgmt., auditors, external parties

Ethics and auditing standards limit when auditors

can serve on this committee

Long-range planning: 3-5 years

Allocation of resources – broad (=strategic budget & other

resources allocation: system resources hr, hw,sw, telco)


SYSTEMS PLANNING-PHASE I

Level 1 = Strategic systems planning

Why?

1. A changing plan is better than no plan

2. Reduces crises in systems development

3. Provides authorization control for SDLC

4. It works!

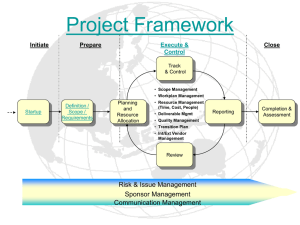

Level 2 = Project planning

Project proposal

Project schedule

Project Proposal

Report

What you have found

Recommendations

Financially feasible

Problem Definition

Nature of the problem:

Separate problem from

symptoms of problem

Scope of the project:

Establish boundaries.

Budget and schedule

Objectives of the project:

What user thinks system

should do

Resulting Management Decision

Drop

Fix a simple problem

Authorize the analysis phase

SYSTEMS PLANNING-PHASE I

Auditor’s role in systems planning

Auditability

Security

Controls

Reduce the risk of unneeded, inefficient, ineffective, and fraudulent systems

SYSTEMS PLANNING-PHASE I

SUMMARY

Identify user’s needs

Preparing proposals

Evaluating proposals

Prioritizing individual projects

Scheduling work

Project Plan – allocates resources to specific project

Project Proposal – Go or not

Project Schedule – represents mgmt’s commitment

SYSTEMS ANALYSIS–PHASE II

PURPOSE:

Effectively identify and analyze the needs

of the users for the new system.

Data Gathering / Survey

Written documents. Reviewing key documents

(see list, p. 147, 182)

Interviews

Structured

Unstructured

Questionnaires (Iterations are needed)

Observation

Visits by appointment

Participant observation

Sampling

SYSTEMS ANALYSIS–PHASE II

Survey step

Disadvantages:

• Tar pit syndrome

• Thinking inside the box

Advantages:

• Identify aspects to keep

• Forcing analysts to understand the system

• Isolating the root of problem symptoms

SYSTEMS ANALYSIS–PHASE II

Gathering facts

Data sources

Users

Data stores

Processes

Data flows

Controls

Transaction volumes

Error rates

Resource costs

Bottlenecks

Redundant operations

SYSTEMS ANALYSIS–PHASE II

Systems analysis report

Figure 4-3 (p.148)

Auditor’s role

CAATTs (e.g., embedded modules): advanced audit features cannot be added easily to existing systems for technical reasons (e.g., a 3GL such as COBOL does not support ALE), so the auditor should analyze which features are best suited to the system

CONCEPTUAL SYSTEMS DESIGN–PHASE III

PURPOSE: Develop alternative systems that satisfy

system requirements identified during system

analysis

1. Top-down (structured design) [see Figure 4-4, p.150]

Designs general rather than specific

Enough details for design to demonstrate differences

Example: Figure 4-5, p. 151

2. Object-oriented approach (OOD)

Reusable objects

Creation of modules (library, inventory of objects)

3. Auditor’s role

Special auditability features

SDLC

Major system aspects

Centralized or distributed

Online or batch

PC-based?

How will input be

captured?

Necessary reports

SDLC

Make or buy decision

Packaged software

Custom software

Meet at least 75% of requirements?

Change business procedures for part or all of remainder?

Customize for part or all of remainder?

Programmers write code

Outsourcing

System is developed by external organization

SDLC

Build a prototype

Does not need true functionality

Limited working system of subset

Output looks like anticipated system output

Working model that can be modified and fine-tuned

Uses high-level software tools – CASE

(Computer-Aided Software Engineering)

Best for small-scale systems

SDLC

Presentation

All alternatives

Selected plan

Prototype of the system

Obtain authorization to proceed

SYSTEM EVALUATION & SELECTION–

PHASE IV

PURPOSE: Process that seeks to identify the optimal solution from

the alternatives

1. Perform a detailed feasibility study

Technical feasibility [existing IT or new IT?]

Economic feasibility

Legal feasibility (SOX and SAS 109: privacy and confidentiality of information)

Operational feasibility: degree of compatibility between the firm’s existing procedures and personnel skills, and the requirements of the new system

Schedule feasibility [implementation]

2. Perform a cost-benefit analysis

Identify costs

Identify benefits

Compare the two

SYSTEM EVALUATION & SELECTION-PHASE IV

Cost-Benefit Analysis: Costs

ONE-TIME COSTS:

• Hardware acquisition

• Site preparation

• Software acquisition

• Systems design

• Programming

• Testing

• Data conversion

• Training

RECURRING COSTS:

• Hardware maintenance

• Software maintenance

• Insurance

• Supplies

• Personnel

• Allocated existing IS

SYSTEM EVALUATION & SELECTION–PHASE IV

Cost-Benefit Analysis: Benefits

TANGIBLE:

• Increased revenues: increased sales in existing markets; expansion into new markets

• Cost reduction: labor reduction; operating cost reduction (supplies, overhead); reduced inventories; less expensive eqpt.; reduced eqpt. maint.

INTANGIBLE:

• Increased customer satisfaction

• Improved employee satisfaction

• More current information

• Improved decision making

• Faster response to competitors’ actions

• More effective operations

• Better internal and external communications

• Improved control environment

Cost-Benefit Analysis: Comparison

1. NPV

2. Payback

[Table 4-4]

PV of benefits (over life of system) – PV of costs (over life of system) = NPV

If NPV > 0, economically feasible

When choosing between projects, choose the one

with the greatest NPV

[Figures 4-7a, 7b]
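The two comparison methods above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the cash flows, the 8% discount rate, and the function names are hypothetical and are not taken from Table 4-4 or Figures 4-7a/7b. Year 0 is treated as "now" and is not discounted.

```python
def present_value(amounts, rate):
    """Discount yearly amounts to today; amounts[0] occurs in year 0."""
    return sum(amount / (1 + rate) ** year for year, amount in enumerate(amounts))


def net_present_value(benefits, costs, rate):
    """PV of benefits minus PV of costs over the life of the system."""
    return present_value(benefits, rate) - present_value(costs, rate)


def payback_year(benefits, costs):
    """First year in which cumulative benefits cover cumulative costs, else None."""
    cumulative = 0.0
    for year, (benefit, cost) in enumerate(zip(benefits, costs)):
        cumulative += benefit - cost
        if cumulative >= 0:
            return year
    return None


# Hypothetical project: one-time costs in year 0, recurring costs afterwards.
costs = [100_000, 10_000, 10_000, 10_000]
benefits = [0, 50_000, 60_000, 70_000]
rate = 0.08  # assumed discount rate

npv = net_present_value(benefits, costs, rate)
feasible = npv > 0  # the slide's rule: NPV > 0 means economically feasible
payback = payback_year(benefits, costs)
print(f"NPV = {npv:,.2f}, feasible = {feasible}, payback year = {payback}")
```

When comparing several candidate systems, the same `net_present_value` call can be run per project and the largest result chosen, as the rule above states.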

DETAILED DESIGN–PHASE V

PURPOSE: Produce a detailed description of the

proposed system that satisfies system

requirements identified during systems analysis

and is in accordance with conceptual design.

User views (Output requirements & Input

requirements )

Files and databases

Systems processing

Systems controls and backup

DETAILED DESIGN– PHASE V

Quality Assurance

“Walkthrough”

The process of inspecting algorithms and source code by

following paths through the algorithms or code as

determined by input conditions and choices made along

the way

Algorithm: a process or set of rules to be followed in calculations or other problem-solving operations, especially by a computer. Example: a basic algorithm for division (9:3 = 3, 9:3 = 2, IF THEN ELSE)

Quality assurance group (programmers, system

analysts, users and internal auditors)

DETAILED DESIGN – PHASE V

Detailed Design Report

Designs for input screens and source documents

Designs for screen outputs, reports, operational

documents

Normalized database

Database structures and diagrams

Data flow diagrams (DFD’s)

Database models (ER, Relational)

Data dictionary

Processing logic (flow charts)

SYSTEM PROGRAMMING & TESTING– PHASE VI

Program the Application

Procedural languages (3GLs: COBOL, C)

Event-driven languages (VB aka GUI)

OO languages (Java)

Hybrid (C++ : to bridge 3GL and OOP)

Programming the system

Test the application [Figure 4-8]

Testing methodology

Testing offline before deploying online

Test data

Why?

Can provide valuable future benefits

SYSTEMS IMPLEMENTATION–

PHASE VII

PURPOSE: Database structures are created and

populated with data, applications are coded and

tested, equipment is purchased and installed,

employees are trained, the system is documented,

and the new system is installed.

Testing the entire system (modules are tested as a

whole system)

Documenting the system

Designer and programmer documentation

Operator documentation (run manual)

User documentation (user skills varied: online

tutorials, help features)

SYSTEMS IMPLEMENTATION–

PHASE VII

Conversion

The transfer of data from its current form to the

format or medium required by the new system

Converting the databases

Validation

Reconciliation

Backup

Converting to the new system

Auditor involvement virtually stops!

Cold turkey cutover (big bang)

Phased cutover (modular)

Parallel operation cutover

SYSTEMS IMPLEMENTATION–

PHASE VII

Post-Implementation Review

Reviewed by independent team to measure

the success of the system

Systems design adequacy [see list p. 170, 203]

Accuracy of time, cost, and benefit estimates

[see list p. 170, 204]

Auditor’s role?

SYSTEMS IMPLEMENTATION–

PHASE VII

Auditors’ Role

Provide technical expertise

AIS: GAAP, GAAS, SEC, IRS

Legal

Social / behavioral

IS/IT (if capable)

Specify documentation standards

Verify control adequacy

Effective and efficient ways to limit application

testing

COSO – SAS No. 78 – PCAOB Standard #1

Impact on scope of external auditors

SYSTEMS MAINTENANCE–PHASE VIII

PURPOSE: Changing systems to

accommodate changes in user needs

80/20 rule

Importance of documentation?

Facilitate efficient changes

[Figure: SDLC phases and their documents. Phases: Systems Planning, Systems Analysis, Conceptual Design, Systems Selection, Detailed Design, System Implementation. Documents: Preliminary Feasibility, Project Authorization, Project Proposal, Project Schedule, System Analysis Rpt, DFD (general), Feasibility Study, Cost-Benefit Analysis, System Selection Rpt, Detailed Design Rpt, DFD (detail), ER Diagram, Relational Model, Normalized Data, Program Flowcharts, Documentation, User Acceptance Rpt, Post-Impl. Review]

CONTROLLING & AUDITING THE SDLC

A materially flawed financial application will eventually corrupt financial data, which will then be incorrectly reported in the financial statements. Therefore, the accuracy and integrity of the IS directly affect the accuracy of the client’s financial data.

A properly functioning systems development process ensures that only needed applications are created, that they are properly specified and have adequate controls, and that they are thoroughly tested before being implemented.

The systems maintenance process ensures that only legitimate changes are made to applications and that those changes are tested before being implemented.

Otherwise, application testing and substantive testing come into play.

CONTROLLING & AUDITING THE SDLC

Controlling New Systems

Development

Systems authorization activities (economic

justification and feasibility)

User specification activities (involvement)

Technical design activities

Documentation is evidence of controls

Documentation is a control!

Internal audit participation (control, and liaison between users and systems professionals)

User test and acceptance procedures (assurance

group)

Audit objectives (p.206)

Audit procedures (p.206)

CONTROLLING & AUDITING THE SDLC

Audit Objectives & Procedures

Audit objectives

Verify SDLC activities are applied consistently and in

accordance with management’s policies

Verify original system is free from material errors and

fraud

Verify system necessary and justified

Verify documentation adequate and complete

Audit procedures

How to verify that SDLC activities are applied consistently?

How to verify that the system is free from material errors and fraud?

How to verify that the system is necessary?

How to verify that the system is justified?

How to verify that documentation is adequate and complete?

See page 174 for a list

CONTROLLING & AUDITING THE SDLC

Controlling Systems Maintenance

Four minimum controls:

Formal authorization

Technical specifications

Retesting

Updating the documentation

When maintenance causes extensive changes to program logic, additional controls, such as involvement of the internal auditor and users and acceptance testing, may be needed

CONTROLLING & AUDITING THE SDLC

Controlling Systems Maintenance

Source program library (SPL) controls

Why? What are we trying to prevent?

Unauthorized access

Unauthorized program changes

SPLMS [Figure 4-13, p. 177]

SPLMS Controls

Storing programs on the SPL

Retrieving programs for maintenance purposes

Detecting obsolete programs

Documenting program changes (audit trail)

CONTROLLING & AUDITING THE SDLC

Controlled SPL Environment

Password control

Separate test libraries

Audit trail and management reports

On a specific program

Describing software changes

Program version numbers

Controlling access to maintenance [SPL]

commands

CONTROLLING & AUDITING THE SDLC

Audit Objectives & Procedures

Audit objectives

Detect any unauthorized program changes

Verify that maintenance procedures protect

applications from unauthorized changes

Verify applications are free from material

errors

Verify that SPLs are protected from unauthorized access

CONTROLLING & AUDITING THE SDLC

Audit Objectives & Procedures

Audit procedures

Figure 4-14, p.179

Identify unauthorized changes

Reconcile program version numbers

Confirm maintenance authorization

Identify application errors

Reconcile source code [after taking a sample]

Review test results

Retest the program

Testing access to libraries

Review programmer authority tables

Test authority table
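One of the procedures above, reconciling program version numbers, can be illustrated with a short Python sketch. Everything here is hypothetical: the program names, the version numbers, and the idea that both the production versions and the authorized versions are available as simple dictionaries. A real SPLMS would supply this information through its own audit trail and management reports.

```python
def reconcile_versions(production, authorized):
    """Return programs whose production version lacks a matching authorization.

    production: program name -> version number currently in production
    authorized: program name -> latest version supported by a maintenance
                authorization (hypothetical extract from the SPL audit trail)
    """
    exceptions = {}
    for program, version in sorted(production.items()):
        approved = authorized.get(program)  # None if no authorization on file
        if approved != version:
            exceptions[program] = (version, approved)
    return exceptions


# Hypothetical data for illustration only.
production = {"PAYROLL": 5, "AR_UPDATE": 12, "GL_POST": 7}
authorized = {"PAYROLL": 5, "AR_UPDATE": 11}

exceptions = reconcile_versions(production, authorized)
for program, (running, approved) in exceptions.items():
    print(f"{program}: running v{running}, authorized v{approved}")
```

Each exception is only a lead for follow-up (confirming maintenance authorization, reviewing test results); a mismatch by itself does not prove an unauthorized change.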

End Chapter 4:

Systems Development &

Maintenance Activities

Evaluating Asset safeguarding & Data

Integrity

Auditor attempts to determine whether assets

could be destroyed, damaged or used for

unauthorized purposes and how well the

completeness, soundness (a state or

condition free from damage or decay ), purity

and veracity (truth) of data are maintained

How to evaluate that?

The evaluation process involves the auditor

making a complex global judgment using

evidence collected on the strength and

weakness of internal control (IC)

How to evaluate IC?

Common measures are: the dollar (or other

currency) lost (asset), quantity error (data)

Dynamic System

Since a system of IC usually contains

stochastic elements the measures should be

expressed probabilistically

Both qualitative and quantitative approach

can be used when making evaluation

decision

How to evaluate that?

Qualitative risk assessment—Ranks threats

by nondollar values and is based more on

scenario, intuition, and experience

Quantitative risk assessment—Deals with

dollar amounts. It attempts to assign a cost

(monetary value) to the elements of risk

assessment and the assets and threats of a

risk analysis.

Example 1

NIST 800-26, a document that uses confidentiality, integrity, and

availability as categories for a loss.

It then rates each loss according to a scale of low, medium or

high. A rating of low, medium, or high is subjective.

In this example, the following categories are defined:

Low—Minor inconvenience; can be tolerated for a short period of

time but will not result in financial loss.

Medium—Can result in damage to the organization, cost a

moderate amount of money to repair, and result in negative

publicity.

High—Will result in a loss of goodwill between the company,

client, or employee; may result in a large legal action or fine, or

cause the company to significantly lose revenue or earnings

Example 1

The flipside of performing a qualitative assessment is that you are not working with dollar values; therefore, it lacks the rigor (detail) that accounting teams and management typically prefer.

Example 1

The types of qualitative assessment techniques

include these:

The Delphi Technique: a structured communication

technique, originally developed as a systematic,

interactive forecasting method which relies on a

panel of experts.

Facilitated Risk Assessment Process (FRAP): A

subjective process that obtains results by asking a

series of questions. It places risks into one of 26

categories. FRAP is designed to be completed in a

matter of hours, making it a quick process to

perform.

Example 2

Performing a quantitative risk assessment

involves quantifying all elements of the

process, including asset value, impact, threat

frequency, safeguard effectiveness,

safeguard costs, uncertainty, and probability.

How to quantify?

Determine the asset value (AV) for each information

asset.

Identify threats to the asset.

Determine the exposure factor (EF) for each

information asset in relation to each threat.

Calculate the single loss expectancy (SLE).

Calculate the annualized rate of occurrence (ARO).

Calculate the annualized loss expectancy (ALE)

Some considerations

The advantage of a quantitative risk assessment is

that it assigns dollar values, which is easy for

management to work with and understand.

However, a disadvantage of a quantitative risk

assessment is that it is also based on dollar

amounts.

Consider that it’s difficult, if not impossible, to assign

dollar values to all elements. Therefore, some

qualitative measures must be applied to quantitative

elements.

Even then, this is a huge responsibility; therefore, a

quantitative assessment is usually performed with

the help of automated software tools

STEP BY STEP

Determine the exposure factor: This is a

subjective potential percentage of loss to a

specific asset if a specific threat is realized.

This is usually in the form of a percentage,

similar to how weather reports predict the

likelihood of weather conditions

Calculate the single loss expectancy (SLE): The

SLE value is a dollar figure that represents the

organization’s loss from a single loss or the loss of

this particular information asset.

Single Loss Expectancy = Asset Value ×

Exposure Factor

Items to consider when calculating the SLE include

the physical destruction or theft of assets, loss of

data, theft of information, and threats that might

delay processing.

Assign a value for the annualized rate of

occurrence (ARO): The ARO represents the

estimated frequency at which a given threat

is expected to occur. Simply stated, how

many times is this expected to happen in one

year?

Assign a value for the annualized loss expectancy

(ALE): The ALE is an annual expected financial loss

to an organization’s information asset because of a

particular threat occurring within that same calendar

year.

Annualized Loss Expectancy (ALE) =

Single Loss Expectancy (SLE) × Annualized Rate

of Occurrence (ARO)

The ALE is typically the value that senior management needs to assess to prioritize resources and determine what threats should receive the most attention

Analyze the risk to the organization—The

final step is to evaluate the data and decide

to accept, reduce, or transfer the risk
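The step-by-step calculation above (SLE = AV × EF, ALE = SLE × ARO) can be sketched in Python. The assets, threats, and figures below are hypothetical and chosen only to show the arithmetic; the function names are likewise illustrative.

```python
def single_loss_expectancy(asset_value, exposure_factor):
    """SLE = asset value x exposure factor (EF given as a fraction, 0 to 1)."""
    if not 0.0 <= exposure_factor <= 1.0:
        raise ValueError("exposure factor must be between 0 and 1")
    return asset_value * exposure_factor


def annualized_loss_expectancy(sle, aro):
    """ALE = SLE x annualized rate of occurrence (expected events per year)."""
    return sle * aro


# Hypothetical threats: (name, asset value in dollars, EF, ARO)
threats = [
    ("data center fire", 200_000, 0.5, 0.1),  # expected once every ten years
    ("malware outbreak", 50_000, 0.2, 4),     # expected four times a year
]

ale_by_threat = {}
for name, asset_value, ef, aro in threats:
    sle = single_loss_expectancy(asset_value, ef)
    ale_by_threat[name] = annualized_loss_expectancy(sle, aro)

# Rank threats by ALE so the largest expected annual loss gets attention first.
ranking = sorted(ale_by_threat, key=ale_by_threat.get, reverse=True)
for name in ranking:
    print(f"{name}: ALE = ${ale_by_threat[name]:,.0f}")
```

In this made-up case the frequent, low-impact threat ranks above the rare, high-impact one, which is the kind of prioritization the ALE is meant to support.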

Data Integrity Controls

Referential integrity guarantees that all foreign keys

reference existing primary keys

Controls in most databases should prevent the

primary key from being deleted when it is linked to

existing foreign keys

Entity integrity ensures that each tuple contains a primary key. Example: the primary keys are names of banks. Without the capability to associate each primary key with a bank, entity integrity cannot be maintained and the database is not intact

Data Integrity Controls

STUDENTS

Student ID    Last Name  First Name  Phone No.  Advisor No.
333-33-3333   Simpson    Alice       333-3333   1418
111-11-1111   Sanders    Ned         444-4444   1418
123-45-6789   Moore      Artie       555-5555   1503

ADVISORS

Advisor No.  Last Name  First Name  Office No.
1418         Howard     Glen        420
1419         Melton     Amy         316
1503         Zhang      Xi          202
1506         Radowski   J.D.        203

Advisor No. is a foreign key in the STUDENTS table. Every occurrence of Advisor No. in the STUDENTS table either matches an instance of the primary key in the ADVISORS table or is null.

STUDENTS

Student ID    Last Name  First Name  Phone No.
333-33-3333   Simpson    Alice       333-3333
111-11-1111   Sanders    Ned         444-4444
123-45-6789   Moore      Artie       555-5555

COURSES

Course ID  Course     Section  Day  Time
1234       ACCT-3603  1        MWF  8:30
1235       ACCT-3603  2        TR   9:30
1236       MGMT-2103  1        MW   8:30

STUDENT x COURSE

SCID
333333333-1234
333333333-1236
111111111-1235
111111111-1236

Note that within each table, there are no

duplicate primary keys and no null primary

keys.

Consistent with the entity integrity rule.
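The entity and referential integrity rules can be demonstrated with Python's built-in `sqlite3` module, using the STUDENTS and ADVISORS data from the slides. This is a minimal sketch, not a recommended schema: the column names, the `rejected` helper, and the extra student "Pat Doe" are assumptions made for illustration. Note that SQLite enforces foreign keys only after `PRAGMA foreign_keys = ON`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # off by default in SQLite

conn.execute("""
    CREATE TABLE advisors (
        advisor_no INTEGER PRIMARY KEY,
        last_name  TEXT NOT NULL,
        first_name TEXT NOT NULL,
        office_no  TEXT
    )""")
conn.execute("""
    CREATE TABLE students (
        student_id TEXT NOT NULL PRIMARY KEY,  -- entity integrity: unique, not null
        last_name  TEXT NOT NULL,
        first_name TEXT NOT NULL,
        phone_no   TEXT,
        advisor_no INTEGER REFERENCES advisors(advisor_no)  -- may be null
    )""")

conn.executemany("INSERT INTO advisors VALUES (?, ?, ?, ?)", [
    (1418, "Howard", "Glen", "420"),
    (1419, "Melton", "Amy", "316"),
    (1503, "Zhang", "Xi", "202"),
    (1506, "Radowski", "J.D.", "203"),
])
conn.executemany("INSERT INTO students VALUES (?, ?, ?, ?, ?)", [
    ("333-33-3333", "Simpson", "Alice", "333-3333", 1418),
    ("111-11-1111", "Sanders", "Ned", "444-4444", 1418),
    ("123-45-6789", "Moore", "Artie", "555-5555", 1503),
])


def rejected(sql, params=()):
    """Return True if the database refuses the statement on integrity grounds."""
    try:
        conn.execute(sql, params)
    except sqlite3.IntegrityError:
        return True
    return False


new_student = "INSERT INTO students VALUES (?, ?, ?, ?, ?)"
# Referential integrity: foreign key must match an existing advisor.
fk_violation = rejected(new_student, ("222-22-2222", "Doe", "Pat", "666-6666", 9999))
# Referential integrity: an advisor still linked to students cannot be deleted.
delete_violation = rejected("DELETE FROM advisors WHERE advisor_no = ?", (1418,))
# Entity integrity: no duplicate and no null primary keys.
duplicate_key = rejected(new_student, ("333-33-3333", "Doe", "Pat", "666-6666", 1419))
null_key = rejected(new_student, (None, "Doe", "Pat", "666-6666", 1419))
# A null foreign key is allowed.
null_advisor = rejected(new_student, ("222-22-2222", "Doe", "Pat", "666-6666", None))

print(fk_violation, delete_violation, duplicate_key, null_key, null_advisor)
```

An auditor could run comparable checks against a test copy of a client database to confirm that these controls are actually switched on, rather than relying on the design documentation alone.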

Evaluating information system

effectiveness and efficiency

Why study effectiveness?

Problems have arisen or criticisms have been voiced

in connection with a system;

Some indicators of the ineffectiveness of the

hardware and software being used may prompt the

review;

Management may wish to implement a system initially

developed in one division throughout the

organization, but may want to first establish its

effectiveness;

Post-implementation review to determine whether the new system is meeting its objectives

Indicators of System Ineffectiveness

excessive down time and idle time

slow system response time

excessive maintenance costs

inability to interface with new hardware/software

unreliable system outputs

data loss

excessive run costs

frequent need for program maintenance and modification

user dissatisfaction with output format, content or timeliness

System Quality!

Measures of System Quality typically focus

on performance characteristics of the system

under study

Quality: Ease of use, Interface consistency,

Maintainability, Response Time, System

Reliability

Two approaches to measurement of system effectiveness

Goal-centered view - does the system achieve the goals set out for it?

• Conflicts over priorities, timing, etc. can lead to objectives being met in the short run by sacrificing fundamental system qualities, leading to a long-run decline in the effectiveness of the system.

System resource view - desirable qualities of a system are identified and their levels are measured.

• If the qualities exist, then information system objectives, by inference, should be met. Measuring the qualities of the system may give a better, longer-term view of a system's effectiveness.

The main problem: measuring system qualities is much more difficult than measuring goal achievement.

2 Types of Evaluations for System Effectiveness

Relative evaluation - the auditor compares the state of goal accomplishment after the system is implemented with the state of goal accomplishment before the system was implemented.

• Improved task accomplishment, and

• Improved quality of working life.

Absolute evaluation - the auditor assesses the size of the goal accomplishment after the system has been implemented.

• Operational effectiveness,

• Technical effectiveness, and

• Economic effectiveness.

Task Accomplishment - an effective I/S improves the task accomplishment of its users.

Providing specific measures of past accomplishment that the auditor can use to evaluate an IS is difficult.

Performance measures for task accomplishment differ across applications and sometimes across organizations.

• For a manufacturing control system, measures might be: number of units output; number of defective units reworked and units scrapped; amount of down time/idle time.

Important to trace task accomplishment over time. A system may appear to have improved for a short time after implementation, but fall into disarray thereafter.

Quality of Working Life

High quality of working life for users of a system is a major objective in the design process. Unfortunately, there is less agreement on the definition and measurement of the concept of quality of working life.

Different groups have different vested interests - some productivity, some social

Operational Effectiveness Objectives

The auditor examines how well a system meets its goals from the viewpoint of a user who interacts with the system on a regular basis. Four main measures:

• Frequency of use,

• Nature of use,

• Ease of use, and

• User satisfaction.

Frequency and Nature of Use

Frequency - employed widely, but problematic

• sometimes a high quality system leads to low frequency of use because the system permits more work to be accomplished in a shorter period of time.

• sometimes a poor quality system leads to a low frequency of use since users dislike the system

Nature - can use systems in many ways

• lowest level: treat as black box providing solutions

• highest level: use to redefine how tasks and jobs are performed and viewed

Ease of Use and User Satisfaction

Ease of use - positive correlation between users' feelings about systems and the degree to which the systems were easy to use. In evaluating ease of use, it is important to identify the primary and secondary users of a system.

• Terminal location, flexibility of reporting, ease of error correction

User satisfaction - has become an important measure of operational effectiveness because of the difficulties and problems associated with measures of frequency of use, nature of use, and ease of use.

• problem finding, problem solving, input, processing, report form

Technical Effectiveness Objectives

Has the appropriate hardware and software technology been used to support a system, or would a change in the support hardware or software technology enable the system to meet its goals better?

• Hardware performance can be measured using hardware monitors or more gross measures such as system response time and down time.

• Software effectiveness can be measured by examining the history of program maintenance, modification and run-time resource consumption. The history of program repair maintenance indicates the quality of logic existing in a program; i.e., extensive error correction implies inappropriate design, coding or testing, failure to use structured approaches, etc.

Major problem: hardware and software are not independent

Economic Effectiveness Objectives

Requires the identification of costs and benefits and the proper evaluation of costs and benefits - a difficult task since costs and benefits depend on the nature of the IS.

• For example, the benefits expected and derived from an IS designed to support a social service environment would differ significantly from those of a system designed to support manufacturing activities. Some of the most significant costs and benefits may be intangible, difficult to identify, and next to impossible to value.

Comparison of Audit Approaches

Effectiveness audit - expresses an opinion on whether a system achieves the goals set for the system. These goals may be quite broad or specific.

(having the right system quality to ensure that the right executions meet the right tasks to meet the right goals)

Audits of system efficiency - whether maximum output is achieved at minimum cost or with minimum input, assuming a given level of quality.