Building Soft Clusters of Data via the Information Bottleneck Method

Russell J.

1

Ricker ,

Albert E.

1

Parker ,

Tomáš

1

Gedeon ,

Alexander G.

2

Dimitrov

1Montana

Abstract

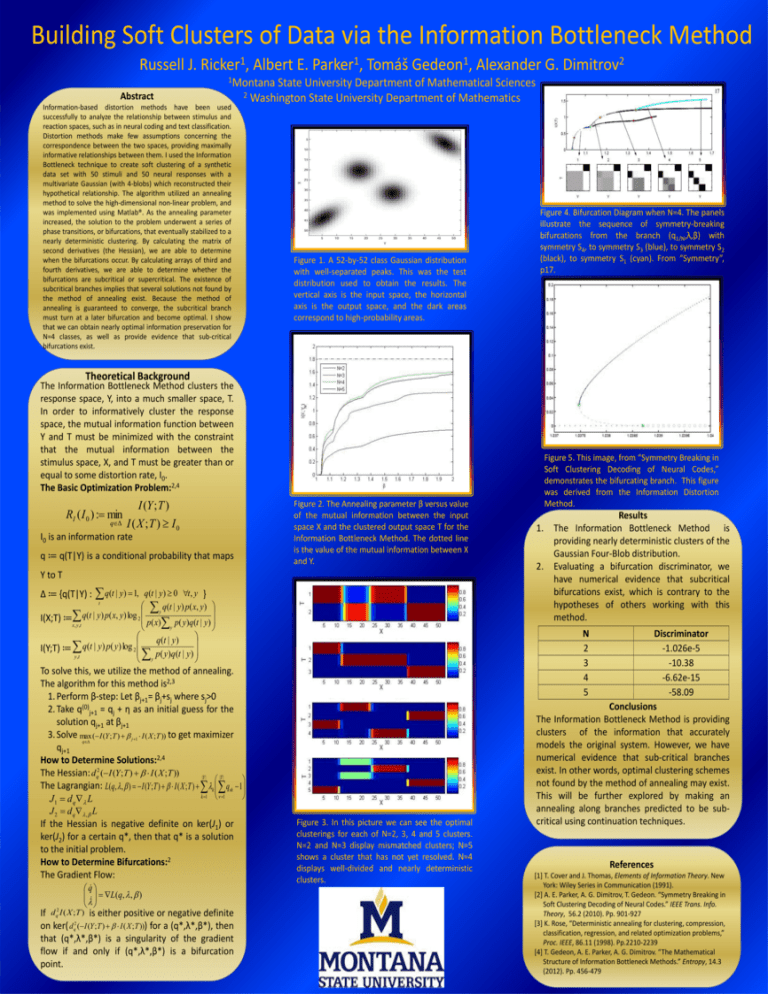

Information-based distortion methods have been used

successfully to analyze the relationship between stimulus and

reaction spaces, such as in neural coding and text classification.

Distortion methods make few assumptions concerning the

correspondence between the two spaces, providing maximally

informative relationships between them. I used the Information

Bottleneck technique to create soft clustering of a synthetic

data set with 50 stimuli and 50 neural responses with a

multivariate Gaussian (with 4-blobs) which reconstructed their

hypothetical relationship. The algorithm utilized an annealing

method to solve the high-dimensional non-linear problem, and

was implemented using Matlab®. As the annealing parameter

increased, the solution to the problem underwent a series of

phase transitions, or bifurcations, that eventually stabilized to a

nearly deterministic clustering. By calculating the matrix of

second derivatives (the Hessian), we are able to determine

when the bifurcations occur. By calculating arrays of third and

fourth derivatives, we are able to determine whether the

bifurcations are subcritical or supercritical. The existence of

subcritical branches implies that several solutions not found by

the method of annealing exist. Because the method of

annealing is guaranteed to converge, the subcritical branch

must turn at a later bifurcation and become optimal. I show

that we can obtain nearly optimal information preservation for

N=4 classes, as well as provide evidence that sub-critical

bifurcations exist.

State University Department of Mathematical Sciences

2 Washington State University Department of Mathematics

Figure 1. A 52-by-52 class Gaussian distribution with well-separated peaks. This was the test distribution used to obtain the results. The vertical axis is the input space, the horizontal axis is the output space, and the dark areas correspond to high-probability areas.
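As a rough illustration of the kind of test distribution Figure 1 describes, the Python sketch below builds a joint distribution p(x, y) with four Gaussian blobs along the diagonal. The function name `four_blob_joint`, the blob centers, and the width `sigma` are assumptions chosen for illustration; the poster does not state the parameters actually used, and the original work was done in Matlab.

```python
import math


def four_blob_joint(n=52, centers=(6, 19, 32, 45), sigma=2.0):
    """Return an n-by-n joint distribution p[x][y] with blobs on the diagonal.

    The centers and sigma are illustrative assumptions, not the poster's values.
    """
    p = [[0.0] * n for _ in range(n)]
    for x in range(n):
        for y in range(n):
            # Sum of isotropic Gaussian bumps, one per blob center (c, c).
            p[x][y] = sum(
                math.exp(-((x - c) ** 2 + (y - c) ** 2) / (2.0 * sigma ** 2))
                for c in centers
            )
    total = sum(sum(row) for row in p)
    # Normalize so that all entries sum to one.
    return [[v / total for v in row] for row in p]


p_xy = four_blob_joint()
```

Rows index the input space and columns the output space, matching the axes described in the caption.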

Figure 4. Bifurcation diagram for N=4. The panels illustrate the sequence of symmetry-breaking bifurcations from the branch $(q_{1/N}, \lambda, \beta)$ with symmetry $S_4$, to symmetry $S_3$ (blue), to symmetry $S_2$ (black), to symmetry $S_1$ (cyan). From “Symmetry”, p. 17.

Theoretical Background

The Information Bottleneck Method clusters the response space, Y, into a much smaller space, T. To cluster the response space informatively, the mutual information between Y and T is minimized subject to the constraint that the mutual information between the stimulus space, X, and T is greater than or equal to some information rate, $I_0$.

The Basic Optimization Problem [2, 4]:

$$R_I(I_0) := \min_{\substack{q \in \Delta \\ I(X;T) \ge I_0}} I(Y;T)$$

$I_0$ is an information rate.

$q := q(T|Y)$ is a conditional probability that maps Y to T.

$$\Delta := \Big\{ q(T|Y) : \sum_t q(t|y) = 1,\ q(t|y) \ge 0 \ \ \forall\, t, y \Big\}$$

$$I(X;T) := \sum_{x,y,t} q(t|y)\, p(x,y) \log_2 \frac{\sum_{y'} q(t|y')\, p(x,y')}{p(x) \sum_{y'} p(y')\, q(t|y')}$$

$$I(Y;T) := \sum_{y,t} q(t|y)\, p(y) \log_2 \frac{q(t|y)}{\sum_{y'} p(y')\, q(t|y')}$$

Figure 2. The annealing parameter β versus the value of the mutual information between the input space X and the clustered output space T for the Information Bottleneck Method. The dotted line is the value of the mutual information between X and Y.
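The two mutual-information quantities defined above can be evaluated directly from a joint distribution p(x, y) and a soft clustering q(t|y). The following is a minimal Python sketch, not the poster's Matlab implementation; the function name `mutual_informations` and the list-of-lists layout (`p_xy[x][y]`, `q[t][y]`) are assumptions made for illustration.

```python
import math


def mutual_informations(p_xy, q):
    """Return (I(X;T), I(Y;T)) in bits.

    p_xy[x][y] is the joint distribution p(x, y);
    q[t][y] is the soft clustering q(t | y), with each column summing to 1.
    """
    nx, ny, nt = len(p_xy), len(p_xy[0]), len(q)
    p_x = [sum(p_xy[x]) for x in range(nx)]
    p_y = [sum(p_xy[x][y] for x in range(nx)) for y in range(ny)]
    # p(t) = sum_y q(t|y) p(y) and p(x,t) = sum_y q(t|y) p(x,y)
    p_t = [sum(q[t][y] * p_y[y] for y in range(ny)) for t in range(nt)]
    p_xt = [[sum(q[t][y] * p_xy[x][y] for y in range(ny)) for t in range(nt)]
            for x in range(nx)]

    i_xt = 0.0
    for x in range(nx):
        for t in range(nt):
            if p_xt[x][t] > 0.0:  # terms with zero probability contribute 0
                i_xt += p_xt[x][t] * math.log2(p_xt[x][t] / (p_x[x] * p_t[t]))

    i_yt = 0.0
    for y in range(ny):
        for t in range(nt):
            joint = q[t][y] * p_y[y]  # p(y, t)
            if joint > 0.0:
                i_yt += joint * math.log2(q[t][y] / p_t[t])
    return i_xt, i_yt


# Toy check: two perfectly correlated symbols.
toy = [[0.5, 0.0], [0.0, 0.5]]
hard = mutual_informations(toy, [[1.0, 0.0], [0.0, 1.0]])  # one bit each
flat = mutual_informations(toy, [[0.5, 0.5], [0.5, 0.5]])  # zero bits each
```

The sum over y inside I(X;T) is carried out once, when `p_xt` is formed, because the logarithm in the definition does not depend on y.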

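$$J_2 := d_q \nabla_{\lambda,\beta} L$$

$$\begin{pmatrix} \dot{q} \\ \dot{\lambda} \end{pmatrix} = \nabla_{q,\lambda} L(q, \lambda, \beta)$$

If $d_q^2 I(X;T)$ is either positive or negative definite on $\ker\big(d_q^2(-I(Y;T) + \beta I(X;T))\big)$ for a $(q^*, \lambda^*, \beta^*)$, then that $(q^*, \lambda^*, \beta^*)$ is a singularity of the gradient flow if and only if $(q^*, \lambda^*, \beta^*)$ is a bifurcation point.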

Results

1. The Information Bottleneck Method provides nearly deterministic clusters of the Gaussian four-blob distribution.

2. Evaluating a bifurcation discriminator gives numerical evidence that subcritical bifurcations exist, which is contrary to the hypotheses of others working with this method.

| N | Discriminator |
|---|---|
| 2 | -1.026e-5 |
| 3 | -10.38 |
| 4 | -6.62e-15 |
| 5 | -58.09 |

To solve this, we utilize the method of annealing. The algorithm for this method is [2, 3]:

1. Perform β-step: let $\beta_{j+1} = \beta_j + s_j$, where $s_j > 0$.

2. Take $q^{(0)}_{j+1} = q_j + \eta$ as an initial guess for the solution $q_{j+1}$ at $\beta_{j+1}$.

3. Solve $\max_{q \in \Delta} \big(-I(Y;T) + \beta_{j+1} I(X;T)\big)$ to get the maximizer $q_{j+1}$.
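The three annealing steps above can be sketched in Python as follows. This is an illustration under stated assumptions, not the poster's Matlab code: the inner maximization in step 3 is done here with the standard self-consistent Information Bottleneck updates, q(t|y) proportional to p(t) exp(-β KL(p(x|y) || p(x|t))), which is a swapped-in solver, and the perturbed guess in step 2 is renormalized so it stays in Δ. The names `ib_update` and `anneal`, the step size, `eta`, and the iteration counts are all hypothetical choices.

```python
import math
import random


def ib_update(p_xy, q, beta):
    """One self-consistent IB update of q[t][y] at a fixed beta (assumed solver)."""
    nx, ny, nt = len(p_xy), len(p_xy[0]), len(q)
    p_y = [sum(p_xy[x][y] for x in range(nx)) for y in range(ny)]
    p_t = [max(sum(q[t][y] * p_y[y] for y in range(ny)), 1e-300)
           for t in range(nt)]
    # p(x|t) = sum_y q(t|y) p(x,y) / p(t)
    p_x_t = [[sum(q[t][y] * p_xy[x][y] for y in range(ny)) / p_t[t]
              for x in range(nx)] for t in range(nt)]
    new_q = [[0.0] * ny for _ in range(nt)]
    for y in range(ny):
        logw = []
        for t in range(nt):
            kl = 0.0  # KL(p(x|y) || p(x|t)) in nats
            for x in range(nx):
                p_x_y = p_xy[x][y] / p_y[y]
                if p_x_y > 0.0:
                    kl += p_x_y * math.log(p_x_y / max(p_x_t[t][x], 1e-300))
            logw.append(math.log(p_t[t]) - beta * kl)
        m = max(logw)  # subtract the max before exponentiating, for stability
        w = [math.exp(v - m) for v in logw]
        z = sum(w)
        for t in range(nt):
            new_q[t][y] = w[t] / z
    return new_q


def anneal(p_xy, n_clusters, beta_max=20.0, step=0.5, eta=1e-3, inner=50, seed=0):
    """Increase beta in steps, perturb the previous solution, and re-solve."""
    rng = random.Random(seed)
    ny = len(p_xy[0])
    q = [[1.0 / n_clusters] * ny for _ in range(n_clusters)]  # uniform start
    beta = 0.0
    while beta < beta_max:
        beta += step  # step 1: beta-step
        # Step 2: perturb q_j, then renormalize columns (assumption) to stay in Delta.
        q = [[v + eta * rng.random() for v in row] for row in q]
        for y in range(ny):
            col = sum(q[t][y] for t in range(n_clusters))
            for t in range(n_clusters):
                q[t][y] /= col
        for _ in range(inner):  # step 3: approximate inner solve
            q = ib_update(p_xy, q, beta)
    return q


# Toy joint distribution with two separated blocks of responses.
toy = [[0.20, 0.05, 0.00, 0.00],
       [0.05, 0.20, 0.00, 0.00],
       [0.00, 0.00, 0.20, 0.05],
       [0.00, 0.00, 0.05, 0.20]]
q_final = anneal(toy, n_clusters=2)
```

A fixed number of inner iterations stands in for a proper convergence test; a real implementation would monitor the change in q or in the objective.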

How to Determine Solutions [2, 4]:

The Hessian: $d_q^2\big(-I(Y;T) + \beta I(X;T)\big)$

The Lagrangian: $$L(q, \lambda, \beta) = -I(Y;T) + \beta I(X;T) + \sum_{k=1}^{|Y|} \lambda_k \Big( \sum_{\nu=1}^{|T|} q_{\nu k} - 1 \Big)$$

$$J_1 := d_q \nabla_\lambda L$$

If the Hessian is negative definite on $\ker(J_1)$ or $\ker(J_2)$ for a certain $q^*$, then that $q^*$ is a solution to the initial problem.
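To make the kernel condition more concrete, here is a short worked form of $J_1$. It assumes that $J_1$ denotes the Jacobian of the normalization constraints, $J_1 = d_q \nabla_\lambda L$ with $(\nabla_\lambda L)_k = \sum_{\nu=1}^{|T|} q_{\nu k} - 1$, and that $q$ is vectorized cluster by cluster as $(q_{11}, \dots, q_{1|Y|}, \dots, q_{|T|1}, \dots, q_{|T||Y|})$. Both are reading assumptions rather than statements taken from the poster.

$$\frac{\partial}{\partial q_{\nu k'}} \Big( \sum_{\nu'=1}^{|T|} q_{\nu' k} - 1 \Big) = \delta_{k k'} \quad\Longrightarrow\quad J_1 = \begin{pmatrix} I_{|Y|} & I_{|Y|} & \cdots & I_{|Y|} \end{pmatrix} \in \mathbb{R}^{|Y| \times |T||Y|}$$

$$\ker J_1 = \Big\{ v : \sum_{\nu=1}^{|T|} v_{\nu k} = 0 \ \text{ for every } k \Big\}$$

Under these assumptions, checking definiteness on $\ker(J_1)$ amounts to testing the Hessian only against perturbations of $q$ that keep every column of $q(t|y)$ summing to one.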

How to Determine Bifurcations [2]:

The Gradient Flow:

Figure 5. This image, from “Symmetry Breaking in Soft Clustering Decoding of Neural Codes” [2], shows the bifurcating branch. The figure was derived using the Information Distortion Method.

Figure 3. The optimal clusterings for N=2, 3, 4, and 5 clusters. N=2 and N=3 display mismatched clusters; N=5 shows a cluster that has not yet resolved. N=4 displays well-divided and nearly deterministic clusters.

Discriminator values for N = 2, 3, 4, 5: -1.026e-5, -10.38, -6.62e-15, -58.09

Conclusions

The Information Bottleneck Method provides a clustering of the data that accurately models the original system. However, we have numerical evidence that subcritical branches exist. In other words, optimal clustering schemes not found by the method of annealing may exist. This will be explored further by annealing along branches predicted to be subcritical, using continuation techniques.

References

[1] T. Cover and J. Thomas, Elements of Information Theory. New York: Wiley Series in Communication (1991).

[2] A. E. Parker, A. G. Dimitrov, and T. Gedeon, “Symmetry Breaking in Soft Clustering Decoding of Neural Codes,” IEEE Trans. Info. Theory, 56.2 (2010), pp. 901-927.

[3] K. Rose, “Deterministic annealing for clustering, compression, classification, regression, and related optimization problems,” Proc. IEEE, 86.11 (1998), pp. 2210-2239.

[4] T. Gedeon, A. E. Parker, and A. G. Dimitrov, “The Mathematical Structure of Information Bottleneck Methods,” Entropy, 14.3 (2012), pp. 456-479.