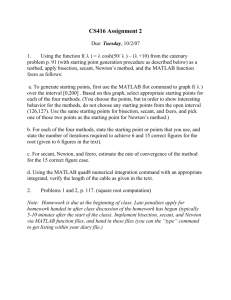

Lecture 3

advertisement

Numerical Computation

Lecture 3: Algorithm Convergence and

Root Finding Methods

United International College

Review

• During our Last Class we covered:

– Matlab: Basic Programming principles. Matlab m

function files

Matlab Practice

• Class Exercise: Triangular numbers are

numbers of the form n*(n+1)/2, with n a

positive integer.

– Write a Matlab M-file function to find the

triangular numbers up to n=20.

– Try to do this in as few of lines of code as possible.

Today

• We will cover:

– Algorithm Convergence

– Root Finding: Bisection Method, Newton’s

Method

Algorithm Convergence

• Definition: We say that a numerical algorithm to

solve some problem is convergent if the numerical

solution generated by the algorithm approaches the

actual solution as the number of steps in the

algorithm increases.

• Definition: Stability of an algorithm refers to the

ability of a numerical algorithm to reach the correct

solution, even if small errors are introduced during

the execution of the algorithm.

Algorithm Stability

• Stable algorithm: Small changes in the initial

data produce small changes in the final result

• Unstable or conditionally stable algorithm:

Small changes in the initial data may produce

large changes in the final result

Algorithm Stability

• Example: Consider

p(x) = x5 – 10x4 + 40x3 – 80x2 + 80x – 32.

Note that p(x) = (x –2)5

Does Matlab roots command use a stable algorithm?

Algorithm Stability

• Example: Use the fzero Matlab function.

Does Matlab

fzero command

use a stable

algorithm?

Algorithm Error Analysis

• To calculate convergence, we usually try to

estimate the error in the numerical solution

compared to the actual solution. We try to

find out how fast this error is decreasing (or

increasing!)

Algorithm Error Analysis

• What types of errors can occur in an

algorithm?

• Rounding vs. truncation error

– Rounding error: introduced by finite precision

calculations in the computer arithmetic, e.g. pi =

3.14159 is rounded to 3.1416

– Truncation error: introduced into algorithm via

problem simplification, e.g. Taylor Series truncation

Algorithm Error Disaster!!

• Explosion of the Ariane 5

• On June 4, 1996 an unmanned Ariane 5 rocket launched by

the European Space Agency exploded just forty seconds after

lift-off. The cause of the failure was a software error in the

inertial guidance system.

• A 64 bit floating point number relating to the horizontal

velocity of the rocket with respect to the platform was

converted to a 16 bit signed integer. The number was larger

than 32,768, the largest integer storeable in a 16 bit signed

integer, and thus the conversion failed.

(source http://ta.twi.tudelft.nl/users/vuik/wi211/disasters.html)

Algorithm Rate of Convergence

• To get an idea of how fast an algorithm

convergences, we measure the rate at which the

sequence of approximations generated by the

algorithm converge to some value.

Definition:

Algorithm Rate of Convergence

• Alternatively, we can consider how fast the error

goes to zero from one iteration of the algorithm to

the next.

• Definition: Let εn be the error in the nth iteration of

the algorithm. That is, εn = |αn - α |.

• Definition: We say that the algorithm has linear

convergence if |n+1| = C |n|

• Definition: We say that the algorithm has quadratic

convergence if |n+1| = C |n|2

• Which convergence type is faster?

Break

Finding Roots (Zeroes) of Functions

• Given some function f(x) , find location x

where f(x)=0. This is called a root (or zero) of

f(x)

• For success we will need:

– Starting position x0, hopefully close to solution x

– Well-behaved function f(x)

Finding Roots (Zeroes) of Functions

• Notes:

– There may be multiple roots of f(x). That is why

we need to specify an initial guess of x0 .

– If f(x) is not continuous, we may miss a root no

matter what root-finding algorithm we try.

– The roots may be real numbers or complex

numbers. In this course we will consider only

functions with real roots.

Finding Roots (Zeroes) of Functions

• What else can go wrong?

Tangent point:

very difficult

to find

Singularity:

brackets don’t

surround root

Pathological case:

infinite number of

roots – e.g. sin(1/x)

Bisection method

• Simplest Root finding method. Based on

Intermediate Value Theorem: If f(x) is

continuous on [a, b] then for any y such that y

is between f(a) and f(b) there is a c in [a, b]

such that f(c) = y.

Bisection method

• Given points a and b that bracket a root, find

x = ½ (a+ b)

and evaluate f(x)

• If f(x) and f(b) have the same sign (+ or -) then

b x else a x

• Stop when a and b are “close enough”

• If function is continuous, this will succeed

in finding some root.

Bisection Matlab Function

function v = bisection(f, a, b, eps)

% Function to find root of (continuous) f(x) within [a,b]

% Assumes f(a) and f(b) bracket a root

k = 0;

while abs(b-a) > eps*abs(b)

x = (a + b)/2;

if sign(f(x)) == sign(f(b))

b = x;

else

a = x;

end

k = k + 1; root = x;

v = [root k];

end

Bisection Matlab Function

f = inline('x^2-2')

bisection2(f, 1, 2, 0.01)

ans =

1.4141 7.0000

bisection2(f, 1, 2, 0.005)

ans =

1.4180 8.0000

bisection2(f, 1, 2, 0.0025)

ans =

1.4160 9.0000

bisection2(f, 1, 2, 0.00125)

ans =

1.4150 10.0000

Is there a pattern?

Bisection Convergence

• Convergence rate:

– Error bounded by size of [a… b] interval

– Interval shrinks in half at each iteration

– Therefore, error cut in half at next iteration:

|n+1| = ½ |n|

– This is linear convergence

Bisection Convergence

f = inline('x^2-2')

bisection2(f, 1, 2, 0.01)

ans =

1.4141 7.0000

bisection2(f, 1, 2, 0.005)

ans =

1.4180 8.0000

bisection2(f, 1, 2, 0.0025)

ans =

1.4160 9.0000

bisection2(f, 1, 2, 0.00125)

ans =

1.4150 10.0000

Root Finding Termination

• Let pn be the approximation generated by the

nth iteration of a root-finding algorithm. How

do we know when to stop?

• Bisection – uses a version of the second

condition.

Newton’s Method

• Newton's method is an iterative method for root

finding.

• Starting from some guess, x0, one iteration of the

algorithm produces a number x1, which is supposed

to be closer to a root

• Iterating further yields x2 , x3 , …, xn

• Newton's method uses “linearization" to find an

approximate root. From Taylor's Theorem,

f(x + h) ≈ f(x) + f’(x)h

Newton’s Method

• We try to find an h such that

0 = f(x + h) = f(x) + f ‘(x)h

• Then,

• So, if xk is our current guess, the next guess should

be xk+1 = xk + h, or

Newton’s Method - Visually

• Start with guess x1

f(x1)

1

2

3

Slope = derivative at 1

4

x2

x1

• Class Exercise: Show that this picture is correct!

Newton’s Method

• Note:

– Newton’s method assumes f(x) is differentiable

– Near the root, we cannot have f’(x) = 0

– Method can easily get confused!

Newton’s Method - Algorithm

function v = newton( f, fprime, x0 , eps)

%Newton's method

% Assumes f is differentiable

k = 0;

xprev = x0;

xnext = x0 - f(x0)/fprime(x0);

while abs(xnext - xprev) > eps*abs(xnext)

xprev = xnext;

xnext = xnext - f(xnext)/fprime(xnext);

k = k + 1;

v = [xnext k];

end

Newton’s Method - Convergence

• Theorem (Newton's Method Convergence) If f(x) has

f’(x) and f’’(x) continuous, and r is a simple root of

f(x), then there is some D such that if |x0 – r| < D

then Newton’s method will converge quadratically to

r.

• Proof: It can be shown (using Taylor Series) that

Newton’s Method - Convergence

• For example,

• If e0 = 0.01, we would expect e1 = 0.0001 = 10-3

(approximately) and e2 = 10-6

• So, which algorithm is faster, Bisection or Newton’s

Method?

Practice

• Class Exercise: Determine the number of iterations

needed to achieve an approximation to the solution

of x3 – 2x -1 = 0 lying within the interval [1.5, 2.0] and

accurate to 10-2 .

– Using the Bisection Method

– Using Newton’s Method (x0 = 2)

• Estimate using theory, then try using Matlab