Data parallel accelerators

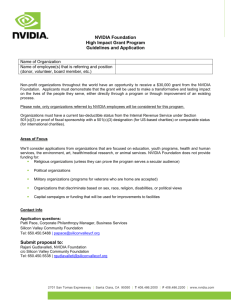

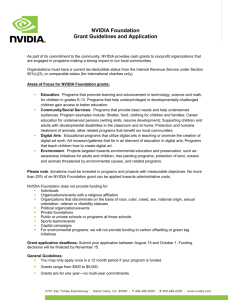


GPGPUs
Data Parallel Accelerators

Dezső Sima
2010 November
Ver. 1.0 (Updated 30/11/2010)
© Dezső Sima 2010

1. Introduction (1)

Aim: introduction and overview in a very limited time.

Contents
1. Introduction
2. Basics of the SIMT execution
3. Overview of GPGPUs
4. Overview of data parallel accelerators
5. Microarchitecture of GPGPUs (examples)
5.1 AMD/ATI RV870 (Cypress)
5.2 Nvidia Fermi
6. Integrated CPU/GPUs
6.1 AMD Fusion APU line
6.2 Intel Sandy Bridge
7. References

1. The emergence of GPGPUs

1. Introduction (2)

Representation of objects by triangles (vertices, edges, surfaces).

Vertices
• have three spatial coordinates, and
• carry supplementary information necessary to render the object, such as
  • color,
  • texture,
  • reflectance properties,
  • etc.

1. Introduction (3)

Main types of shaders in GPUs
• Vertex shaders: transform each vertex’s 3D position in the virtual space to the 2D coordinate at which it appears on the screen.
• Pixel shaders (fragment shaders): calculate the color of the pixels.
• Geometry shaders: can add or remove vertices from a mesh.

1. Introduction (4)

| DirectX version | Pixel SM | Vertex SM | Supporting OS |
|---|---|---|---|
| 8.0 (11/2000) | 1.0, 1.1 | 1.0, 1.1 | Windows 2000 |
| 8.1 (10/2001) | 1.2, 1.3, 1.4 | 1.0, 1.1 | Windows XP / Windows Server 2003 |
| 9.0 (12/2002) | 2.0 | 2.0 | |
| 9.0a (3/2003) | 2_A, 2_B | 2.x | |
| 9.0c (8/2004) | 3.0 | 3.0 | Windows XP SP2 |
| 10.0 (11/2006) | 4.0 | 4.0 | Windows Vista |
| 10.1 (2/2008) | 4.1 | 4.1 | Windows Vista SP1 / Windows Server 2008 |
| 11 (in development) | 5.0 | 5.0 | |

Table: Pixel/vertex shader models (SM) supported by subsequent versions of DirectX and MS’s OSs [18], [21]

1. Introduction (3)

Convergence of important features of the vertex and pixel shader models

Subsequent shader models typically introduce a number of new or enhanced features.
Differences between the vertex and pixel shader models, as they evolved over subsequent shader models, concern precision requirements, instruction sets and programming resources.
Shader model 2 [19]
• Different precision requirements
  • Vertex shader: FP32 (coordinates)
  • Pixel shader: FX24 (3 colors x 8 bits)
• Different instructions
• Different resources (e.g. registers)

Shader model 3 [19]
• Unified precision requirements for both shaders (FP32), with the option to specify partial precision (FP16 or FP24) by adding a modifier to the shader code
• Different instructions
• Different resources (e.g. registers)

1. Introduction (3)

Shader model 4 (introduced with DirectX 10) [20]
• Unified precision requirements for both shaders (FP32), with the possibility to use new data formats
• Unified instruction set
• Unified resources (e.g. temporary and constant registers)

Shader architectures of GPUs prior to SM4
GPUs prior to SM4 (DirectX 10) have separate vertex and pixel units with different features.

Drawback of having separate units for vertex and pixel shading: an inefficient hardware implementation, since vertex shaders and pixel shaders often have complementary load patterns [21].

1. Introduction (5)

Unified shader model (introduced in SM 4.0 of DirectX 10.0)

Unified, programmable shader architecture: the same (programmable) processor can be used to implement all shaders:
• the vertex shader,
• the pixel shader, and
• the geometry shader (a new feature of SM 4).

1. Introduction (6)

Figure: Principle of the unified shader architecture [22]

1. Introduction (7)

Based on its FP32 computing capability and the large number of FP units available, the unified shader is a promising candidate for speeding up HPC!

GPUs with unified shader architectures are also termed GPGPUs (General-Purpose GPUs) or cGPUs (computational GPUs).

1. Introduction (8)

Figure: Peak SP FP performance of Nvidia’s GPUs vs Intel’s P4 and Core2 processors [11]

1. Introduction (9)

Figure: Bandwidth values of Nvidia’s GPUs vs Intel’s P4 and Core2 processors [11]

1. Introduction (10)

Figure: Contrasting the utilization of the silicon area in CPUs and GPUs [11]
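A toy calculation makes the drawback of separate vertex and pixel units concrete. The sketch assumes hypothetical numbers throughout: 8 vertex units plus 24 pixel units versus 32 unified units, and work amounts measured in unit-cycles. It ignores every other pipeline effect and only shows how complementary load patterns leave one pool of dedicated units partly idle while the other pool is the bottleneck.

```python
import math

def frame_time_separate(vertex_work, pixel_work, vertex_units, pixel_units):
    """Cycles for one frame when each shader type may use only its own units.

    Work is given in unit-cycles; the frame is done when the slower pool finishes.
    """
    return max(math.ceil(vertex_work / vertex_units),
               math.ceil(pixel_work / pixel_units))

def frame_time_unified(vertex_work, pixel_work, units):
    """Cycles for one frame when every unit can run either shader type."""
    return math.ceil((vertex_work + pixel_work) / units)

# Hypothetical frames with complementary loads: vertex-heavy, then pixel-heavy.
frames = [(2400, 1600), (400, 3600)]
for vertex, pixel in frames:
    separate = frame_time_separate(vertex, pixel, vertex_units=8, pixel_units=24)
    unified = frame_time_unified(vertex, pixel, units=32)
    print(f"vertex={vertex} pixel={pixel}: separate={separate} cycles, unified={unified} cycles")
```

In both hypothetical frames the unified pool finishes in 125 cycles. The split pools need 300 and 150 cycles because one of them is underused in each frame.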
2. Basics of the SIMT execution

2. Basics of the SIMT execution (1)

Main alternatives of data parallel execution

• SIMD execution
  • One-dimensional data parallel execution, i.e. it performs the same operation on all elements of given FX/FP input vectors.
  • Needs an FX/FP SIMD extension of the ISA.
  • E.g. 2nd and 3rd generation superscalars.
• SIMT execution
  • Two-dimensional data parallel execution, i.e. it performs the same operation on all elements of given FX/FP input arrays (matrices),
  • is massively multithreaded, and
  • provides data dependent flow control as well as barrier synchronization.
  • Needs an FX/FP SIMT extension of the ISA and the API.
  • E.g. GPGPUs, data parallel accelerators.

Figure: Main alternatives of data parallel execution

2. Basics of the SIMT execution (2)

Scalar, SIMD and SIMT execution
• Scalar execution: the domain of execution is single data elements.
• SIMD execution: the domain of execution is the elements of vectors.
• SIMT execution: the domain of execution is the elements of matrices (at the programming level).

Figure: Domains of execution in case of scalar, SIMD and SIMT execution

Remark: SIMT execution is also termed SPMD (Single Program Multiple Data) execution (Nvidia).

2. Basics of the SIMT execution (3)

Key components of the implementation of SIMT execution
• Data parallel execution
• Massive multithreading
• Data dependent flow control
• Barrier synchronization

2. Basics of the SIMT execution (4)

Data parallel execution

Data parallel execution is performed by SIMT cores. SIMT cores execute the same instruction stream on a number of ALUs (i.e. all ALUs of a SIMT core typically perform the same operation).

Figure: Basic layout of a SIMT core (one Fetch/Decode unit feeding eight ALUs)

SIMT cores are the basic building blocks of GPGPUs and data parallel accelerators. During SIMT execution, 2-dimensional matrices are partitioned into blocks, which are mapped to SIMT cores.
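The mapping of a 2-dimensional domain onto blocks of threads can be sketched on the host in plain Python. Everything here is an illustrative assumption. `simt_launch` is a hypothetical helper that runs the threads one after another, whereas SIMT hardware runs them in parallel. The 4×4 block size is arbitrary. `saxpy_2d` is a hypothetical kernel in which every thread performs the same operation on the one matrix element selected by its block and thread indices.

```python
def simt_launch(kernel, grid_dim, block_dim, *args):
    """Run `kernel` once per thread of a 2-D grid of 2-D blocks.

    Threads run one after another here; on SIMT hardware they run in parallel.
    """
    for block_y in range(grid_dim[1]):
        for block_x in range(grid_dim[0]):
            for thread_y in range(block_dim[1]):
                for thread_x in range(block_dim[0]):
                    kernel((block_x, block_y), (thread_x, thread_y), block_dim, *args)

def saxpy_2d(block_idx, thread_idx, block_dim, a, x, y, out):
    """Every thread executes the same code on the element chosen by its indices."""
    col = block_idx[0] * block_dim[0] + thread_idx[0]
    row = block_idx[1] * block_dim[1] + thread_idx[1]
    if row < len(x) and col < len(x[0]):   # threads past the matrix edge do nothing
        out[row][col] = a * x[row][col] + y[row][col]

rows, cols = 5, 6
x = [[float(r * cols + c) for c in range(cols)] for r in range(rows)]
y = [[1.0] * cols for _ in range(rows)]
out = [[0.0] * cols for _ in range(rows)]

block = (4, 4)                                   # threads per block in x and y
grid = ((cols + block[0] - 1) // block[0],       # enough blocks to cover all columns
        (rows + block[1] - 1) // block[1])       # and all rows
simt_launch(saxpy_2d, grid, block, 2.0, x, y, out)
```

The guard in `saxpy_2d` is needed because the 2×2 grid of 4×4 blocks has 64 threads but the matrix has only 30 elements.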
2. Basics of the SIMT execution (5)

Remark 1
Different manufacturers designate SIMT cores differently, such as
• streaming multiprocessor (Nvidia),
• superscalar shader processor (AMD),
• wide SIMD processor, CPU core (Intel).

2. Basics of the SIMT execution (6)

Each ALU is allocated a working register set (RF).

Figure: Main functional blocks of a SIMT core (one Fetch/Decode unit, eight ALUs, and one RF per ALU)

2. Basics of the SIMT execution (7)

SIMT ALUs typically perform RRR operations, that is, ALUs take their operands from, and write the calculated results to, the register set (RF) allocated to them.

Figure: Principle of operation of the SIMD ALUs

2. Basics of the SIMT execution (8)

Remark 2
Actually, the register sets (RF) allocated to each ALU are given parts of a sufficiently large register file.

Figure: Allocation of distinct parts of a large register set as workspaces of the ALUs

2. Basics of the SIMT execution (9)

Basic operation of recent SIMT ALUs
• They basically execute SP FP-MADD (single precision, i.e. 32-bit, Multiply-Add) instructions of the form a×b+c.
• They are pipelined, capable of starting a new operation every clock cycle (more precisely, every shader clock cycle). That is, without further enhancements their peak performance is 2 SP FP operations/cycle.
• They need a few clock cycles, e.g. 2 or 4 shader cycles, to present the results of the SP FP-MADD operations to the RF.

2. Basics of the SIMT execution (10)

Additional operations provided by SIMT ALUs
• FX operations and FX/FP conversions,
• DP FP operations,
• trigonometric functions (usually supported by special functional units).

2. Basics of the SIMT execution (11)

Massive multithreading

Aim of massive multithreading: to speed up computations by increasing the utilization of the available computing resources in case of stalls (e.g. due to cache misses).
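The aim of massive multithreading can be quantified with a toy model built on the ALU properties listed above. The model has one pipelined ALU that may start one instruction per cycle. Results become available after a few cycles (4 here, an assumed value). Each thread's consecutive instructions depend on the previous result. The sketch also assumes zero-cycle context switches and round-robin selection among ready threads. It illustrates the idea and does not model any particular GPU.

```python
def alu_utilization(num_threads, latency, instrs_per_thread):
    """Fraction of cycles in which the single ALU starts an instruction.

    Toy model: one pipelined ALU can start one instruction per cycle; each
    instruction depends on the previous one of the same thread, so a thread
    may issue again only `latency` cycles later. Switching threads costs no
    cycles because every thread keeps its own registers.
    """
    ready_at = [0] * num_threads              # first cycle each thread may issue
    remaining = [instrs_per_thread] * num_threads
    cycle = busy = 0
    start = 0                                 # round-robin search position
    while any(remaining):
        for k in range(num_threads):
            t = (start + k) % num_threads
            if remaining[t] and ready_at[t] <= cycle:   # ready thread found
                remaining[t] -= 1
                ready_at[t] = cycle + latency
                busy += 1
                start = (t + 1) % num_threads
                break
        cycle += 1                            # advance one cycle (idle if nothing issued)
    return busy / cycle

for threads in (1, 2, 4, 8):
    print(threads, "threads:", round(alu_utilization(threads, latency=4, instrs_per_thread=100), 2))
```

With fewer ready threads than cycles of latency, the ALU idles. Once there are at least as many threads as latency cycles, it can start an instruction every cycle.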
Principle
• Suspend stalled threads from execution and allocate ready-to-run threads for execution.
• When a large enough number of threads is available, long stalls can be hidden.

2. Basics of the SIMT execution (12)

Multithreading is implemented by creating and managing parallel executable threads for each data element of the execution domain, with the same instructions for all data elements.

Figure: Parallel executable threads for each element of the execution domain

2. Basics of the SIMT execution (13)

Multithreading is implemented effectively if thread switches, called context switches, do not cause cycle penalties. This is achieved by
• providing separate contexts (register space) for each thread, and
• implementing a zero-cycle context switch mechanism.

2. Basics of the SIMT execution (14)

Figure: Providing separate thread contexts for each thread allocated for execution in a SIMT ALU (the register file (RF) of a SIMT core holds many thread contexts (CTX) per ALU; a context switch selects the actual context)

2. Basics of the SIMT execution (15)

Data dependent flow control

Data dependent flow control is implemented by SIMT branch processing.

In SIMT processing both paths of a branch are executed one after the other, such that for each path the prescribed operations are executed only on those data elements which fulfill the data condition given for that path (e.g. xi > 0).

Example

2. Basics of the SIMT execution (16)

Figure: Execution of branches [24]

The given condition is checked separately for each thread.

2. Basics of the SIMT execution (17)

First, all ALUs meeting the condition execute the prescribed three operations; then all ALUs not meeting the condition execute the next two operations.

Figure: Execution of branches [24]
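The masked execution of both branch paths can be emulated for one SIMT core whose lanes each hold one data element. This is a sketch under stated assumptions. The lane count is simply the input length, and the helper name `simt_if_else` is hypothetical. Skipping a path that no lane takes is an assumed optimization that the slides do not specify.

```python
def simt_if_else(x, then_op, else_op):
    """Emulate `if x[i] > 0: then_op(x[i]) else: else_op(x[i])` on SIMT lanes.

    Both paths are stepped through one after the other; a per-lane mask
    decides which lanes commit results on each path. Returns the results and
    the number of paths the core actually had to execute.
    """
    mask = [xi > 0 for xi in x]              # condition checked separately per lane
    out = list(x)
    passes = 0
    if any(mask):                            # path 1: lanes meeting the condition
        passes += 1
        out = [then_op(v) if m else v for v, m in zip(out, mask)]
    if not all(mask):                        # path 2: lanes missing the condition
        passes += 1
        out = [v if m else else_op(v) for v, m in zip(out, mask)]
    return out, passes

x = [3, -1, 2, -5, 0, 7, -2, 1]
result, passes = simt_if_else(x, lambda v: 2 * v, lambda v: -v)
```

Because the lanes of a diverging branch go through both paths, the core spends the time of both paths, even though each lane commits results on only one.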
2. Basics of the SIMT execution (18)

Figure: Resuming instruction stream processing after executing a branch [24]

2. Basics of the SIMT execution (19)

Barrier synchronization

Barrier synchronization makes all threads wait until all prior instructions have completed before the next instruction is executed.

It is implemented, e.g., in AMD’s Intermediate Language (IL) by the fence threads instruction [10].

Remark: In the R600 ISA this instruction is coded by setting the BARRIER field of the Control Flow (CF) instruction format [7].

2. Basics of the SIMT execution (20)

Principle of SIMT execution

Host: kernel0<<<>>>(), kernel1<<<>>>(); Device: each kernel invocation executes all thread blocks (Block(i,j)).

Figure: Hierarchy of threads [25]

3. Overview of GPGPUs

3. Overview of GPGPUs (1)

Basic implementation alternatives of the SIMT execution
• GPGPUs: programmable GPUs supporting data parallel execution, with appropriate programming environments
  • Have display outputs.
  • E.g. Nvidia’s 8800 and GTX lines, AMD’s HD 38xx and HD 48xx lines.
• Data parallel accelerators: dedicated units supporting data parallel execution, with appropriate programming environment
  • No display outputs.
  • Have larger memories than GPGPUs.
  • E.g. Nvidia’s Tesla lines, AMD’s FireStream lines.

Figure: Basic implementation alternatives of the SIMT execution

3. Overview of GPGPUs (2)

Figure: Overview of Nvidia’s and AMD/ATI’s GPGPU lines (Nvidia: G80 → G92 → G200 → Fermi; AMD/ATI: R600 → RV670 → RV770 → RV870; successive generations alternate process shrinks, from 90/80 nm down to 40 nm, with architectural enhancements)

3. Overview of GPGPUs (3)

Figure: Overview of GPGPUs, 2005 to 2009
• Nvidia cores: G80 (11/06, 90 nm, 681 million transistors), G92 (10/07, 65 nm, 754 million), GT200 (6/08, 65 nm, 1400 million), Fermi (9/09, 40 nm, 3000 million)
• Nvidia cards: 8800 GTS (96 ALUs, 320-bit), 8800 GTX (128 ALUs, 384-bit), 8800 GT (112 ALUs, 256-bit), GTX260 (192 ALUs, 448-bit), GTX280 (240 ALUs, 512-bit), Fermi (512 ALUs, 384-bit)
• CUDA: Version 1.0 (6/07), Version 1.1 (11/07), Version 2.0 (6/08)
• AMD/ATI cores: R500 (11/05, Xbox, 48 ALUs), R600 (5/07, 80 nm), RV670 (11/07, 55 nm, 666 million), RV770 (5/08, 55 nm, 956 million), RV870 (9/09, 40 nm, 2100 million)
• AMD/ATI cards: HD 2900XT (320 ALUs, 512-bit), HD 3850 (320 ALUs, 256-bit), HD 3870 (320 ALUs, 256-bit), HD 4850 (800 ALUs, 256-bit), HD 4870 (800 ALUs, 256-bit), HD 5870 (1600 ALUs, 256-bit)
• AMD/ATI software: Brook+ (11/07), RapidMind 3870 support (6/08), OpenCL (12/08)

3. Overview of GPGPUs (4)

| | 8800 GTS | 8800 GTX | 8800 GT | GTX 260 | GTX 280 |
|---|---|---|---|---|---|
| Core | G80 | G80 | G92 | GT200 | GT200 |
| Introduction | 11/06 | 11/06 | 10/07 | 6/08 | 6/08 |
| IC technology | 90 nm | 90 nm | 65 nm | 65 nm | 65 nm |
| Nr. of transistors (million) | 681 | 681 | 754 | 1400 | 1400 |
| Die area | 480 mm² | 480 mm² | 324 mm² | 576 mm² | 576 mm² |
| Core frequency | 500 MHz | 575 MHz | 600 MHz | 576 MHz | 602 MHz |
| Computation | | | | | |
| No. of ALUs | 96 | 128 | 112 | 192 | 240 |
| Shader frequency | 1.2 GHz | 1.35 GHz | 1.512 GHz | 1.242 GHz | 1.296 GHz |
| No. FP32 inst./cycle | 3* | 3* | 3* | 3 | 3 |
| Peak FP32 performance | 346 GFLOPS | 518 GFLOPS | 508 GFLOPS | 715 GFLOPS | 933 GFLOPS |
| Peak FP64 performance | – | – | – | – | 77/76 GFLOPS |
| Memory | | | | | |
| Mem. transfer rate (eff.) | 1600 Mb/s | 1800 Mb/s | 1800 Mb/s | 1998 Mb/s | 2214 Mb/s |
| Mem. interface | 320-bit | 384-bit | 256-bit | 448-bit | 512-bit |
| Mem. bandwidth | 64 GB/s | 86.4 GB/s | 57.6 GB/s | 111.9 GB/s | 141.7 GB/s |
| Mem. size | 320 MB | 768 MB | 512 MB | 896 MB | 1.0 GB |
| Mem. type | GDDR3 | GDDR3 | GDDR3 | GDDR3 | GDDR3 |
| Mem. channel | 5*64-bit | 6*64-bit | 4*64-bit | 7*64-bit | 8*64-bit |
| Mem. contr. | Crossbar | Crossbar | Crossbar | Crossbar | Crossbar |
| System | | | | | |
| Multi-GPU techn. | SLI | SLI | SLI | SLI | SLI |
| Interface | PCIe x16 | PCIe x16 | PCIe 2.0 x16 | PCIe 2.0 x16 | PCIe 2.0 x16 |
| MS DirectX | 10 | 10 | 10 | 10.1 subset | 10.1 subset |

\* but only in a few issue cases

Table: Main features of Nvidia’s GPGPUs
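The peak-performance and bandwidth rows of the table follow from other rows. Peak FP32 equals the number of ALUs times the FP32 operations per ALU per cycle times the shader frequency. Bandwidth equals the memory interface width in bytes times the effective transfer rate. The sketch below checks this for the Nvidia cards. It assumes, as is usually done, that the 3 FP32 operations per cycle are a MADD (2 operations) plus a co-issued MUL (1 operation).

```python
def peak_fp32_gflops(alus, shader_clock_ghz, fp32_ops_per_cycle):
    """Peak FP32 throughput: ALUs x FP32 operations per ALU per cycle x shader clock."""
    return alus * fp32_ops_per_cycle * shader_clock_ghz

def mem_bandwidth_gbs(interface_bits, transfer_rate_mbps):
    """Peak memory bandwidth: interface width in bytes x effective transfer rate."""
    return interface_bits / 8 * transfer_rate_mbps / 1000

# Values copied from the Nvidia table: (ALUs, shader GHz, interface bits, Mb/s)
nvidia = {
    "8800 GTS": (96, 1.2, 320, 1600),
    "8800 GTX": (128, 1.35, 384, 1800),
    "8800 GT": (112, 1.512, 256, 1800),
    "GTX 260": (192, 1.242, 448, 1998),
    "GTX 280": (240, 1.296, 512, 2214),
}
for card, (alus, ghz, bits, rate) in nvidia.items():
    gflops = peak_fp32_gflops(alus, ghz, 3)   # 3 = MADD (2 ops) + MUL (1 op), assumed
    print(f"{card}: {gflops:.0f} GFLOPS, {mem_bandwidth_gbs(bits, rate):.1f} GB/s")
```

With 2 FP32 operations per cycle (one MADD), the same formula gives the AMD/ATI peak figures in the following table. For example, the HD 4870 has 800 ALUs × 2 × 0.75 GHz = 1200 GFLOPS.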
3. Overview of GPGPUs (5)

| | HD 2900XT | HD 3850 | HD 3870 | HD 4850 | HD 4870 |
|---|---|---|---|---|---|
| Core | R600 | RV670 | RV670 | RV770 | RV770 |
| Introduction | 5/07 | 11/07 | 11/07 | 5/08 | 5/08 |
| IC technology | 80 nm | 55 nm | 55 nm | 55 nm | 55 nm |
| Nr. of transistors (million) | 700 | 666 | 666 | 956 | 956 |
| Die area | 408 mm² | 192 mm² | 192 mm² | 260 mm² | 260 mm² |
| Core frequency | 740 MHz | 670 MHz | 775 MHz | 625 MHz | 750 MHz |
| Computation | | | | | |
| No. of ALUs | 320 | 320 | 320 | 800 | 800 |
| Shader frequency | 740 MHz | 670 MHz | 775 MHz | 625 MHz | 750 MHz |
| No. FP32 inst./cycle | 2 | 2 | 2 | 2 | 2 |
| Peak FP32 performance | 471.6 GFLOPS | 429 GFLOPS | 496 GFLOPS | 1000 GFLOPS | 1200 GFLOPS |
| Peak FP64 performance | – | – | – | 200 GFLOPS | 240 GFLOPS |
| Memory | | | | | |
| Mem. transfer rate (eff.) | 1600 Mb/s | 1660 Mb/s | 2250 Mb/s | 2000 Mb/s | 3600 Mb/s (GDDR5) |
| Mem. interface | 512-bit | 256-bit | 256-bit | 256-bit | 256-bit |
| Mem. bandwidth | 105.6 GB/s | 53.1 GB/s | 72 GB/s | 64 GB/s | 115.2 GB/s |
| Mem. size | 512 MB | 256 MB | 512 MB | 512 MB | 512 MB |
| Mem. type | GDDR3 | GDDR3 | GDDR4 | GDDR3 | GDDR3/GDDR5 |
| Mem. channel | 8*64-bit | 8*32-bit | 8*32-bit | 4*64-bit | 4*64-bit |
| Mem. contr. | Ring bus | Ring bus | Ring bus | Crossbar | Crossbar |
| System | | | | | |
| Multi-GPU techn. | CrossFire | CrossFire X | CrossFire X | CrossFire X | CrossFire X |
| Interface | PCIe x16 | PCIe 2.0 x16 | PCIe 2.0 x16 | PCIe 2.0 x16 | PCIe 2.0 x16 |
| MS DirectX | 10 | 10.1 | 10.1 | 10.1 | 10.1 |

Table: Main features of AMD/ATI’s GPGPUs

3. Overview of GPGPUs (6)

Price relations (as of 10/2008)
• Nvidia: GTX260 ~ 300 $, GTX280 ~ 600 $
• AMD/ATI: HD4850 ~ 200 $, HD4870 n/a

4. Overview of data parallel accelerators

4. Overview of data parallel accelerators (1)

Implementation alternatives of data parallel accelerators
• On-card implementation (recent implementations), e.g. GPU cards, data parallel accelerator cards
• On-die integration (future implementations), e.g. Intel’s Havendale, AMD’s Fusion integration technology
• Also shown: AMD’s Torrenza integration technology
• Trend: from on-card implementation toward on-die integration

Figure: Implementation alternatives of dedicated data parallel accelerators

4. Overview of data parallel accelerators (2)

On-card accelerators
• Card implementations: single cards fitting into a free PCIe x16 slot of the host computer, e.g. Nvidia Tesla C870, Nvidia Tesla C1060, AMD FireStream 9170, AMD FireStream 9250.
• Desktop implementations: usually dual cards mounted into a box, connected to an adapter card that is inserted into a free PCIe x16 slot of the host PC through a cable, e.g. Nvidia Tesla D870.
• 1U server implementations: usually 4 cards mounted into a 1U server rack, connected to two adapter cards that are inserted into two free PCIe x16 slots of a server through two switches and two cables, e.g. Nvidia Tesla S870, Nvidia Tesla S1070.

Figure: Implementation alternatives of on-card accelerators

4. Overview of data parallel accelerators (2)

FB: Frame Buffer

Figure: Main functional units of Nvidia’s Tesla C870 card [2]

4. Overview of data parallel accelerators (3)

Figure: Nvidia’s Tesla C870 and AMD’s FireStream 9170 cards [2], [3]

4. Overview of data parallel accelerators (4)

Figure: Tesla D870 desktop implementation [4]

4. Overview of data parallel accelerators (5)

Figure: Nvidia’s Tesla D870 desktop implementation [4]

4. Overview of data parallel accelerators (6)

Figure: PCI-E x16 host adapter card of Nvidia’s Tesla D870 desktop [4]

4. Overview of data parallel accelerators (7)

Figure: Concept of Nvidia’s Tesla S870 1U rack server [5]

4. Overview of data parallel accelerators (8)

Figure: Internal layout of Nvidia’s Tesla S870 1U rack [6]

4. Overview of data parallel accelerators (9)

Figure: Connection cable between Nvidia’s Tesla S870 1U rack and the adapter cards inserted into PCI-E x16 slots of the host server [6]

4. Overview of data parallel accelerators (10)

Figure: Overview of Nvidia’s Tesla family (2007 to 2008)
• Card: C870 (6/07): G80-based, 1.5 GB GDDR3, 0.519 TFLOPS; C1060 (6/08): GT200-based, 4 GB GDDR3, 0.936 TFLOPS
• Desktop: D870 (6/07): G80-based, 2×C870 incl., 3 GB GDDR3, 1.037 TFLOPS
• 1U server: S870 (6/07): G80-based, 4×C870 incl., 6 GB GDDR3, 2.074 TFLOPS; S1070 (6/08): GT200-based, 4×C1060, 16 GB GDDR3, 3.744 TFLOPS
• CUDA: Version 1.0 (6/07), Version 1.1 (11/07), Version 2.0 (6/08)

4. Overview of data parallel accelerators (11)

Figure: Overview of AMD/ATI’s FireStream family (2007 to 2008)
• 9170 (11/07, shipped 6/08): RV670-based, 2 GB GDDR3, 500 GFLOPS FP32, ~200 GFLOPS FP64
• 9250 (6/08, shipped 10/08): RV770-based, 1 GB GDDR3, 1 TFLOPS FP32, ~300 GFLOPS FP64
• Stream Computing SDK Version 1.0 (12/07): Brook+, ACML (AMD Core Math Library), CAL (Compute Abstraction Layer)
• RapidMind

4. Overview of data parallel accelerators (12)

| | Nvidia Tesla C870 | Nvidia Tesla C1060 | AMD FireStream 9170 | AMD FireStream 9250 |
|---|---|---|---|---|
| Based on | G80 | GT200 | RV670 | RV770 |
| Introduction | 6/07 | 6/08 | 11/07 | 6/08 |
| Core | | | | |
| Core frequency | 600 MHz | 602 MHz | 800 MHz | 625 MHz |
| ALU frequency | 1350 MHz | 1296 MHz | 800 MHz | 625 MHz |
| No. of ALUs | 128 | 240 | 320 | 800 |
| Peak FP32 performance | 518 GFLOPS | 933 GFLOPS | 512 GFLOPS | 1 TFLOPS |
| Peak FP64 performance | – | – | ~200 GFLOPS | ~250 GFLOPS |
| Memory | | | | |
| Mem. transfer rate (eff.) | 1600 Mb/s | 1600 Mb/s | 1600 Mb/s | 1986 Mb/s |
| Mem. interface | 384-bit | 512-bit | 256-bit | 256-bit |
| Mem. bandwidth | 76.8 GB/s | 102 GB/s | 51.2 GB/s | 63.5 GB/s |
| Mem. size | 1.5 GB | 4 GB | 2 GB | 1 GB |
| Mem. type | GDDR3 | GDDR3 | GDDR3 | GDDR3 |
| System | | | | |
| Interface | PCI-E x16 | PCI-E 2.0 x16 | PCI-E 2.0 x16 | PCI-E 2.0 x16 |
| Power (max) | 171 W | 200 W | 150 W | 150 W |

Table: Main features of Nvidia’s and AMD/ATI’s data parallel accelerator cards

4. Overview of data parallel accelerators (13)

Price relations (as of 10/2008)
• Nvidia Tesla: C870 ~ 1500 $, D870 ~ 5000 $, S870 ~ 7500 $, C1060 ~ 1600 $, S1070 ~ 8000 $
• AMD/ATI FireStream: 9170 ~ 800 $, 9250 ~ 800 $

5. Microarchitecture of GPGPUs (examples)
5.1 AMD/ATI RV870 (Cypress)
5.2 Nvidia Fermi

5.1 AMD/ATI RV870

5.1 AMD/ATI RV870 (1)

AMD/ATI RV870 (Cypress), Radeon 5870 graphics card
• Introduction: Sept. 22 2009
• Availability: now
• Performance figures:
  • SP FP performance: 2.72 TFLOPS
  • DP FP performance: 544 GFLOPS (1/5 of SP FP performance)
• OpenCL 1.0 compliant

5.1 AMD/ATI RV870 (4)

Architecture overview
RV870: 20 cores × 16 ALUs/core × 5 EUs/ALU = 1600 EUs (stream processing units); memory: 8×32 = 256-bit GDDR5, 153.6 GB/s.

Figure: Architecture overview [42]

5.1 AMD/ATI RV870 (2)

Radeon 4800 series/5800 series comparison

| | ATI Radeon HD 4870 | ATI Radeon HD 5850 | ATI Radeon HD 5870 |
|---|---|---|---|
| Manufacturing process | 55 nm | 40 nm | 40 nm |
| No. of transistors | 956 million | 2.15 billion | 2.15 billion |
| Core clock speed | 750 MHz | 725 MHz | 850 MHz |
| No. of stream processors | 800 | 1440 | 1600 |
| Compute performance | 1.2 TFLOPS | 2.09 TFLOPS | 2.72 TFLOPS |
| Memory type | GDDR5 | GDDR5 | GDDR5 |
| Memory clock | 900 MHz | 1000 MHz | 1200 MHz |
| Memory data rate | 3.6 Gbps | 4.0 Gbps | 4.8 Gbps |
| Memory bandwidth | 115.2 GB/s | 128 GB/s | 153.6 GB/s |
| Max board power | 160 W | 170 W | 188 W |
| Idle board power | 90 W | 27 W | 27 W |

Figure: Radeon Series/5800 [42]

5.1 AMD/ATI RV870 (6)

The HD 5870 card

Figure: The 5870 card [41]

5.1 AMD/ATI RV870 (7)

Source: http://www.tomshardware.com/gallery/two-cypress-gpus,0101-230369-7179-0-0-0-jpg-.html

5.1 AMD/ATI RV870 (8)

ATI 5970

Source: http://www.tomshardware.com/gallery/two-cypress-gpus,0101-230369-7179-0-0-0-jpg-.html

5.1 AMD/ATI RV870 (9)

ATI 5970

Source: http://www.tomshardware.com/gallery/two-cypress-gpus,0101-230369-7179-0-0-0-jpg-.html

5.1 AMD/ATI RV870 (10)
HD 5970, HD 5870 and HD 5850 cards (Source: Tom's Hardware)

5.1 AMD/ATI RV870 (11)

5.1 AMD/ATI RV870 (12)

Source: http://www.rumorpedia.net/wp-content/uploads/2010/11/amdmobilityroadmap.png

5.2 Nvidia Fermi

5.2 Nvidia Fermi (1)

Nvidia’s Fermi
• Introduced: 30 Sept. 2009 at Nvidia’s GPU Technology Conference
• Available: 1Q 2010

5.2 Nvidia Fermi (2)

Fermi’s overall structure
• 16 cores (Streaming Multiprocessors, in Nvidia’s terminology)
• Each core: 32 ALUs
• 6x dual-channel GDDR5 (384-bit)

Figure: Fermi’s overall structure [40]

5.2 Nvidia Fermi (3)

Layout of a core (SM): 1 SM includes 32 ALUs (called “CUDA cores” by Nvidia).

Figure: Layout of a core [40]

5.2 Nvidia Fermi (4)

A single ALU (“CUDA core”)
• SP FP: 32-bit
• FX: 32-bit
• DP FP: IEEE 754-2008 compliant, needs 2 clock cycles
• DP FP performance: ½ of SP FP performance!!

Figure: A single ALU [40]

5.2 Nvidia Fermi (5)

Figure: Fermi’s system architecture [39]

5.2 Nvidia Fermi (6)

Contrasting Fermi and GT 200

Figure: Contrasting Fermi and GT 200 [39]

5.2 Nvidia Fermi (7)

The execution of programs utilizing GPGPUs

Host: kernel0<<<>>>(), kernel1<<<>>>(); Device: each kernel invocation executes a grid of thread blocks (Block(i,j)).

Figure: Hierarchy of threads [25]

5.2 Nvidia Fermi (8)

Global scheduling in Fermi

Figure: Global scheduling in Fermi [39]

5.2 Nvidia Fermi (9)

Fermi chip alternatives (shipped about 4/2010, 1/2001, 11/2010)

Source: http://www.techreport.com/articles.x/19934

5.2 Nvidia Fermi (10)

GeForce GTX 460/480/580 graphics cards
• GTX 460 is based on the GF 104.
• GTX 480 is based on the GF 100.
• GTX 580 is based on the GF 110.

Source: http://www.techreport.com/articles.x/19934

5.2 Nvidia Fermi (11)

A pair of GeForce 480 cards

Source: http://www.techreport.com/articles.x/18682

5.2 Nvidia Fermi (12)

Nvidia GeForce 480 card (about March 2010)

6. Integrated CPU/GPUs
6.1 AMD Fusion APU line
6.2 Intel Sandy Bridge

6.1 AMD Fusion APU line (Accelerated Processing Unit)

6.1 AMD Fusion APU line (1)

bp.blogspot.com/_qNFgsHp2dCk/RjxipyC-dcI/AAAAAAAAATQ/5KzO63udKB4/s1600-h/4622_large_stars
http://blogs.amd.com/fusion/2010/11/09/simply-put-it%E2%80%99s-all-about-velocity/ph4/

6.1 AMD Fusion APU line (2)

6.1 AMD Fusion APU line (3)

http://blogs.amd.com/fusion/2010/11/09/simply-put-it%E2%80%99s-all-about-velocity/ph5/

6.1 AMD Fusion APU line (4)

Llano die: 4 K10-class CPU cores, GPU with DX11

http://www.pcgameshardware.com/?menu=browser&article_id=699300&image_id=1220883

6.1 AMD Fusion APU line (5)

http://blogs.amd.com/fusion/2010/11/09/simply-put-it%E2%80%99s-all-about-velocity/ph_server/

6.1 AMD Fusion APU line (6)

AMD Bulldozer

http://www.itextreme.hu/hirek/2010,08,24/az_amd_legujabb_processzor_tervei_bobcat_es_bulldozer

6.1 AMD Fusion APU line (7)

Source: Xbit labs

6.1 AMD Fusion APU line (8)

AMD Bulldozer (Source: Xbit labs)

6.1 AMD Fusion APU line (9)

6.1 AMD Fusion APU line (10)

6.1 AMD Fusion APU line (11)

http://www.cdrinfo.com/sections/news/Details.aspx?NewsId=28748

6.2 Intel’s integrated CPU/GPU lines

6.2 Intel’s integrated CPU/GPU lines (1)

Intel’s i3/i5/i7 mobile processors (announced 1/2010): a 32 nm CPU die and a separate 45 nm GPU die in the same package

6.2 Intel’s integrated CPU/GPU lines (2)

Intel’s i3/i5/i7 mobile processors: 32 nm CPU, 45 nm GPU

http://www.anandtech.com/show/2921

6.2 Intel’s integrated CPU/GPU lines (3)

Source: Intel

6.2 Intel’s integrated CPU/GPU lines (4)

6.2 Intel’s integrated CPU/GPU lines (5)

7. References (1)

[1]: Torricelli F., AMD in HPC, HPC07, http://www.altairhyperworks.co.uk/html/en-GB/keynote2/Torricelli_AMD.pdf
[2]: NVIDIA Tesla C870 GPU Computing Board, Board Specification, Jan. 2008, Nvidia
[3]: AMD FireStream 9170, http://ati.amd.com/technology/streamcomputing/product_firestream_9170.html
[4]: NVIDIA Tesla D870 Deskside GPU Computing System, System Specification, Jan. 2008, Nvidia, http://www.nvidia.com/docs/IO/43395/D870-SystemSpec-SP-03718-001_v01.pdf
[5]: Tesla S870 GPU Computing System, Specification, Nvidia, http://jp.nvidia.com/docs/IO/43395/S870-BoardSpec_SP-03685-001_v00b.pdf
[6]: Torres G., Nvidia Tesla Technology, Nov. 2007, http://www.hardwaresecrets.com/article/495
[7]: R600-Family Instruction Set Architecture, Revision 0.31, May 2007, AMD
[8]: Zheng B., Gladding D., Villmow M., Building a High Level Language Compiler for GPGPU, ASPLOS 2006, June 2008
[9]: Huddy R., ATI Radeon HD2000 Series Technology Overview, AMD Technology Day, 2007, http://ati.amd.com/developer/techpapers.html
[10]: Compute Abstraction Layer (CAL) Technology – Intermediate Language (IL), Version 2.0, Oct. 2008, AMD

7. References (2)

[11]: Nvidia CUDA Compute Unified Device Architecture Programming Guide, Version 2.0, June 2008, Nvidia
[12]: Kirk D. & Hwu W. W., ECE498AL Lectures 7: Threading Hardware in G80, 2007, University of Illinois, Urbana-Champaign, http://courses.ece.uiuc.edu/ece498/al1/lectures/lecture7-threading%20hardware.ppt#256,1,ECE 498AL Lectures 7: Threading Hardware in G80
[13]: Kogo H., R600 (Radeon HD2900 XT), PC Watch, June 26 2008, http://pc.watch.impress.co.jp/docs/2008/0626/kaigai_3.pdf
[14]: Nvidia G80, PC Watch, April 16 2007, http://pc.watch.impress.co.jp/docs/2007/0416/kaigai350.htm
[15]: GeForce 8800GT (G92), PC Watch, Oct. 31 2007, http://pc.watch.impress.co.jp/docs/2007/1031/kaigai398_07.pdf
[16]: NVIDIA GT200 and AMD RV770, PC Watch, July 2 2008, http://pc.watch.impress.co.jp/docs/2008/0702/kaigai451.htm
[17]: Shrout R., Nvidia GT200 Revealed – GeForce GTX 280 and GTX 260 Review, PC Perspective, June 16 2008, http://www.pcper.com/article.php?aid=577&type=expert&pid=3
[18]: http://en.wikipedia.org/wiki/DirectX
[19]: Dietrich S., Shader Model 3.0, April 2004, Nvidia, http://www.cs.umbc.edu/~olano/s2004c01/ch15.pdf
[20]: Microsoft DirectX 10: The Next-Generation Graphics API, Technical Brief, Nov. 2006, Nvidia, http://www.nvidia.com/page/8800_tech_briefs.html

7. References (3)

[21]: Patidar S. et al., Exploiting the Shader Model 4.0 Architecture, Center for Visual Information Technology, IIIT Hyderabad, http://research.iiit.ac.in/~shiben/docs/SM4_Skp-Shiben-Jag-PJN_draft.pdf
[22]: Nvidia GeForce 8800 GPU Architecture Overview, Vers. 0.1, Nov. 2006, Nvidia, http://www.nvidia.com/page/8800_tech_briefs.html
[23]: Graphics Pipeline Rendering History, Aug. 22 2008, PC Watch, http://pc.watch.impress.co.jp/docs/2008/0822/kaigai_06.pdf
[24]: Fatahalian K., From Shader Code to a Teraflop: How Shader Cores Work, Workshop: Beyond Programmable Shading: Fundamentals, SIGGRAPH 2008
[25]: Kanter D., NVIDIA’s GT200: Inside a Parallel Processor, 09-08-2008
[26]: Nvidia CUDA Compute Unified Device Architecture Programming Guide, Version 1.1, Nov. 2007, Nvidia
[27]: Seiler L. et al., Larrabee: A Many-Core x86 Architecture for Visual Computing, ACM Transactions on Graphics, Vol. 27, No. 3, Article No. 18, Aug. 2008
[28]: Kogo H., Larrabee, PC Watch, Oct. 17 2008, http://pc.watch.impress.co.jp/docs/2008/1017/kaigai472.htm
[29]: Shrout R., IDF Fall 2007 Keynote, Sept. 18 2007, PC Perspective, http://www.pcper.com/article.php?aid=453

7. References (4)

[30]: Stokes J., Larrabee: Intel’s biggest leap ahead since the Pentium Pro, Aug. 04 2008, http://arstechnica.com/news.ars/post/20080804-larrabeeintels-biggest-leap-ahead-since-the-pentium-pro.html
[31]: Shimpi A. L. & Wilson D., Intel's Larrabee Architecture Disclosure: A Calculated First Move, Anandtech, Aug. 4 2008, http://www.anandtech.com/showdoc.aspx?i=3367&p=2
[32]: Hester P., Multi-Core and Beyond: Evolving the x86 Architecture, Hot Chips 19, Aug. 2007, http://www.hotchips.org/hc19/docs/keynote2.pdf
[33]: AMD Stream Computing, User Guide, Oct. 2008, Rev. 1.2.1, http://ati.amd.com/technology/streamcomputing/Stream_Computing_User_Guide.pdf
[34]: Doggett M., Radeon HD 2900, Graphics Hardware Conf., Aug. 2007, http://www.graphicshardware.org/previous/www_2007/presentations/doggett-radeon2900-gh07.pdf
[35]: Mantor M., AMD’s Radeon HD 2900, Hot Chips 19, Aug. 2007, http://www.hotchips.org/archives/hc19/2_Mon/HC19.03/HC19.03.01.pdf
[36]: Houston M., Anatomy of AMD’s TeraScale Graphics Engine, SIGGRAPH 2008, http://s08.idav.ucdavis.edu/houston-amd-terascale.pdf
[37]: Mantor M., Entering the Golden Age of Heterogeneous Computing, PEEP 2008, http://ati.amd.com/technology/streamcomputing/IUCAA_Pune_PEEP_2008.pdf

7. References (5)

[38]: Kogo H., RV770 Overview, PC Watch, July 02 2008, http://pc.watch.impress.co.jp/docs/2008/0702/kaigai_09.pdf
[39]: Kanter D., Inside Fermi: Nvidia's HPC Push, Real World Technologies, Sept 30 2009, http://www.realworldtech.com/includes/templates/articles.cfm?ArticleID=RWT093009110932&mode=print
[40]: Wasson S., Inside Fermi: Nvidia's 'Fermi' GPU architecture revealed, Tech Report, Sept 30 2009, http://techreport.com/articles.x/17670/1
[41]: Wasson S., AMD's Radeon HD 5870 graphics processor, Tech Report, Sept 23 2009, http://techreport.com/articles.x/17618/1
[42]: Bell B., ATI Radeon HD 5870 Performance Preview, Firing Squad, Sept 22 2009, http://www.firingsquad.com/hardware/ati_radeon_hd_5870_performance_preview/default.asp

Principle of operation of the G80/G92/Fermi GPGPUs

Akrout (AMD), CPU/GPU industry dynamics and technologies, 2010 Sept, 1+cpu/gpu+industry+dynamics&hl=hu&gl=hu&pid=bl&srcid=ADGEESjU5ox06YHjhIpK_U05Vn52vs-EfH
http://www.cdrinfo.com/sections/news/Details.aspx?NewsId=28748
http://blogs.pcmag.com/miller/2010/11/amds_graphics_roadmap_for_2011.php

Microarchitecture of a Fermi core

Principle of operation of the G80/G92 GPGPUs

The key point of operation is work scheduling.

Work scheduling
• Scheduling thread blocks for execution
• Segmenting thread blocks into warps
• Scheduling warps for execution
CUDA Thread Block

Thread scheduling in Nvidia’s GPGPUs
• All threads in a block execute the same kernel program (SPMD).
• The programmer declares the block:
  • block size: 1 to 512 concurrent threads,
  • block shape: 1D, 2D, or 3D,
  • block dimensions in threads.
• Threads have thread id numbers within the block (Thread Id #: 0, 1, 2, 3, …, m).
• The thread program uses the thread id to select work and address shared data.
• Threads in the same block share data and synchronize while doing their share of the work.
• Threads in different blocks cannot cooperate.
• Each block can execute in any order relative to other blocks!

Courtesy: John Nickolls, NVIDIA
llinois.edu/ece498/al/lectures/lecture4%20cuda%20threads%20part2%20spring%202009.ppt#316,2,

Scheduling thread blocks for execution
• Up to 8 blocks can be assigned to an SM for execution.
• A device may run thread blocks sequentially, in parallel if it has enough resources for this, or, usually, by a combination of both.
• A TPC (Thread Processing Cluster, also called Texture Processing Cluster) has
  • 2 SMs in the G80/G92,
  • 3 SMs in the G200.

Figure: Assigning thread blocks to streaming multiprocessors (SM) for execution [12]

Segmenting thread blocks into warps
• Threads are scheduled for execution in groups of 32 threads, called warps.
• For scheduling, each thread block is subdivided into warps.
• At any point in time, up to 24 warps can be maintained by the scheduler.

Remark: The number of threads constituting a warp is an implementation decision and not part of the CUDA programming model.

Figure: Segmenting thread blocks into warps [12]
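The figures quoted above can be combined into a small residency calculation: 32 threads per warp, at most 512 threads per block, and up to 8 blocks and 24 warps per SM on the G80. The sketch deliberately ignores register-file and shared-memory limits, which also constrain residency on real hardware. The function names are hypothetical. For 2D or 3D blocks it assumes the CUDA convention that the linear thread id is x + y·Dx + z·Dx·Dy (x varying fastest), and warps are formed from consecutive linear ids.

```python
WARP_SIZE = 32              # threads per warp (G80/G92)
MAX_THREADS_PER_BLOCK = 512
MAX_BLOCKS_PER_SM = 8       # resident thread blocks per SM (G80/G92)
MAX_WARPS_PER_SM = 24       # warps the scheduler can maintain per SM (G80)

def linear_thread_id(tx, ty, tz, dim_x, dim_y):
    """Linear id inside a block, with x varying fastest."""
    return tx + ty * dim_x + tz * dim_x * dim_y

def warp_of_thread(thread_id):
    """(warp index, lane within the warp) for a linear thread id."""
    return divmod(thread_id, WARP_SIZE)

def warps_per_block(threads_per_block):
    """Consecutive groups of 32 threads; the last warp may be only partly filled."""
    if not 1 <= threads_per_block <= MAX_THREADS_PER_BLOCK:
        raise ValueError("block size must be 1..512 threads")
    return (threads_per_block + WARP_SIZE - 1) // WARP_SIZE

def resident_blocks_per_sm(threads_per_block):
    """Blocks that fit on one SM under the block and warp limits only.

    Register-file and shared-memory limits are ignored in this sketch.
    """
    return min(MAX_BLOCKS_PER_SM, MAX_WARPS_PER_SM // warps_per_block(threads_per_block))

for size in (64, 100, 256, 512):
    blocks = resident_blocks_per_sm(size)
    print(size, "threads/block ->", blocks, "blocks,", blocks * warps_per_block(size), "warps per SM")
```

Under these limits, small blocks hit the 8-block cap before the 24-warp cap. A block of 100 threads wastes most of its fourth warp, because that warp holds only 4 active threads.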
References (5) Scheduling warps for execution • The warp scheduler is a zero-overhead scheduler • Only those warps are eligible for execution whose next instruction has all operands available. SM multithreaded Warp scheduler • Eligible warps are scheduled • coarse grained (not indicated in the figure) • priority based. time warp 8 instruction 11 warp 1 instruction 42 All threads in a warp execute the same instruction when selected. warp 3 instruction 95 .. . warp 8 instruction 12 4 clock cycles are needed to dispatch the same instruction to all threads in the warp (G80) warp 3 instruction 96 Figure: Scheduling warps for execution [12] 5.3 Intel’s Larrabee 5.2 Intel’s Larrabee (1) Larrabee Part of Intel’s Tera-Scale Initiative. • Objectives: High end graphics processing, HPC Not a single product but a base architecture for a number of different products. • Brief history: Project started ~ 2005 First unofficial public presentation: 03/2006 (withdrawn) First brief public presentation 09/07 (Otellini) [29] First official public presentations: in 2008 (e.g. 
at SIGGRAPH [27]); due in ~2009.
• Performance (targeted): 2 TFLOPS.

5.2 Intel’s Larrabee (2)
Figure: Positioning of Larrabee in Intel’s product portfolio [28] (NI: New Instructions)

5.2 Intel’s Larrabee (3)
Figure: First public presentation of Larrabee at IDF Fall 2007 [29]

5.2 Intel’s Larrabee (4)
Basic architecture
Figure: Block diagram of Larrabee [30]
• Cores: in-order x86 (IA) cores augmented with new instructions.
• L2 cache: fully coherent.
• Ring bus: 1024 bits wide.

5.2 Intel’s Larrabee (5)
Figure: Block diagram of Larrabee’s cores [31]

5.2 Intel’s Larrabee (6)
Larrabee’s microarchitecture [27] is derived from the in-order design of the Pentium.

5.2 Intel’s Larrabee (7)
Main extensions
• 64-bit instructions,
• 4-way multithreading (with 4 register sets),
• a 16-wide (16 × 32-bit) vector unit (VU),
• larger L1 caches (32 KB vs. 8 KB),
• access to its 256 KB local subset of a coherent L2 cache,
• a ring network to access the coherent L2 cache and to allow interprocessor communication.
Figure: The ancestor of Larrabee’s cores [28]

5.2 Intel’s Larrabee (8)
New instructions allow explicit cache control
• to prefetch data into the L1 and L2 caches,
• to control the eviction of cache lines by reducing their priority.
As a result, the L2 cache can be used as a scratchpad memory while remaining fully coherent.

5.2 Intel’s Larrabee (9)
The Scalar Unit
• supports the full ISA of the Pentium (it can run existing code, including OS kernels and applications),
• provides new instructions, e.g. for
  • bit count,
  • bit scan (finds the next set bit within a register).

5.2 Intel’s Larrabee (10)
The Vector Unit
• Mask registers have one bit per vector lane. They control which lanes of a vector register or of memory data are read or written and which remain untouched.
• Scatter-gather instructions load a VU vector register from 16 non-contiguous data locations anywhere in the on-die L1 cache without penalty, or store a VU register similarly.
• Numeric conversions: 8-bit and 16-bit integer and 16-bit FP data can be read from or written into the L1 cache, with conversion to 32-bit integer or FP format without penalty.
Thus the L1 data cache acts as an extension of the register file.
Figure: Block diagram of the Vector Unit [31]

5.2 Intel’s Larrabee (11)
ALUs
• The ALUs execute integer, SP FP and DP FP instructions.
• Multiply-add instructions are available.
Figure: Layout of the 16-wide vector ALU [31]

5.2 Intel’s Larrabee (12)
Task scheduling is performed entirely by software, rather than by hardware as in Nvidia’s or AMD/ATI’s GPGPUs.

5.2 Intel’s Larrabee (13)
SP FP performance
• 2 operations/cycle per ALU × 16 ALUs = 32 operations/cycle per core.
• At present no data are available on the clock frequency or the number of cores of Larrabee.
• Assuming a clock frequency of 2 GHz and 32 cores: SP FP performance = 32 cores × 32 operations/cycle × 2 GHz = 2.048 TFLOPS ≈ 2 TFLOPS.

5.2 Intel’s Larrabee (14)
Figure: Larrabee’s software stack (Source: Intel)
Larrabee’s native C/C++ compiler allows many existing applications to be recompiled and to run correctly without modification.
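The small model below connects the Vector Unit’s mask registers and multiply-add instructions with the peak SP FP estimate. It is a hypothetical Python sketch, not Intel code and not the real Larrabee instruction set. `masked_madd` emulates one 16-lane multiply-add under a 16-bit mask, with one bit per lane and masked-off lanes left untouched. It counts 2 FP operations (multiply plus add) per active lane; reading the slide’s "2 operations/cycle" as coming from a multiply-add is an assumption here. `peak_sp_flops` reproduces the slide’s arithmetic under the slide’s own assumptions of 32 cores at 2 GHz. The rounding behavior of a real multiply-add is not modeled.

```python
# Hypothetical sketch, not Intel code or the real Larrabee instruction set:
# it models one 16-lane masked multiply-add of a Larrabee-like vector unit
# and reproduces the slide's peak SP FP estimate.

LANES = 16  # 16-wide (16 x 32-bit) vector unit


def masked_madd(a, b, c, mask):
    """Compute a[i] * b[i] + c[i] for every lane whose mask bit is set.

    mask is a 16-bit integer, one bit per lane (bit i controls lane i).
    Lanes whose bit is 0 keep the old value of c (they remain untouched).
    Returns (result, flops), counting 2 FP operations (multiply + add)
    per active lane. Rounding of a real multiply-add is not modeled.
    """
    if not (len(a) == len(b) == len(c) == LANES):
        raise ValueError("all operands must have 16 lanes")
    result = []
    flops = 0
    for i in range(LANES):
        if (mask >> i) & 1:
            result.append(a[i] * b[i] + c[i])
            flops += 2
        else:
            result.append(c[i])  # masked-off lane: old value is kept
    return result, flops


def peak_sp_flops(cores, clock_hz, lanes=LANES, ops_per_lane=2):
    """Peak FLOPS = cores x lanes x operations per lane per cycle x clock."""
    return cores * lanes * ops_per_lane * clock_hz


if __name__ == "__main__":
    ones, twos, tens = [1.0] * LANES, [2.0] * LANES, [10.0] * LANES
    out, n = masked_madd(ones, twos, tens, mask=0x00FF)  # lanes 0..7 active
    print(out[0], out[15], n)  # 12.0 10.0 16
    # Slide's assumptions: 32 cores at 2 GHz.
    print(peak_sp_flops(cores=32, clock_hz=2e9) / 1e12)  # 2.048 (TFLOPS)
```

With all 16 mask bits set (`mask=0xFFFF`), one call counts 32 operations, matching the slide’s 32 operations per core and cycle. That correspondence assumes one such instruction issues per cycle. At that rate, 32 cores at 2 GHz give 2.048 × 10^12 FLOPS, the quoted ~2 TFLOPS.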