Midterm Review


Econ 240A

1

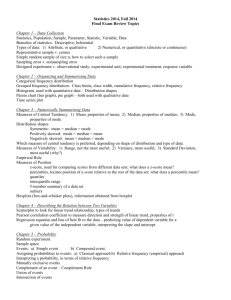

The Big Picture


The Classical Statistical Trail (diagram): Descriptive Statistics; Probability; Discrete Random Variables (Binomial); Application: Rates & Proportions; Inferential Statistics. Label: Power 4-#4, Discrete Probability Distributions; Moments.

Descriptive Statistics

Power One - Lab One

Concepts

– Central tendency: mode, median, mean
– Dispersion: range, inter-quartile range, standard deviation (variance)
– Are central tendency and dispersion enough descriptors?


Concepts

Normal Distribution
– Central tendency: mean or average
– Dispersion: standard deviation

Non-normal distributions
– Draw a histogram

Figures: Density Function for the Standardized Normal Variate (density against standard deviations); histogram of Bills (frequency).

The Classical Statistical Trail (diagram repeated, now with Classical and Modern branches)

Exploratory Data Analysis
– Stem and Leaf Diagrams
– Box and Whiskers Plots


Weight Data

Males:

140 145 160 190 155 165 150 190 195 138

160 155 153 145 170 175 175 170 180 135 170 157

130 185 190 155 170 155 215 150 145 155 155 150

155 150 180 160 135 160 130 155 150 148 155 150

140 180 190 145 150 164 140 142 136 123 155

Females:

140 120 130 138 121 125 116 145 150 112 125 130

120 130 131 120 118 125 135 125 118 122 115 102


115 150 110 116 108 95 125 133 110 150 108
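As a sketch of the central-tendency and dispersion measures from the Concepts slide, the Python below computes them for the weight data above. The lists are transcribed from the slide; `describe` is a hypothetical helper name, and the quartiles come from `statistics.quantiles` with its default method, which may differ slightly from the textbook's or Excel's quartile rule.

```python
import statistics

# Weight data transcribed from the slide (57 males, 35 females).
males = [140, 145, 160, 190, 155, 165, 150, 190, 195, 138,
         160, 155, 153, 145, 170, 175, 175, 170, 180, 135, 170, 157,
         130, 185, 190, 155, 170, 155, 215, 150, 145, 155, 155, 150,
         155, 150, 180, 160, 135, 160, 130, 155, 150, 148, 155, 150,
         140, 180, 190, 145, 150, 164, 140, 142, 136, 123, 155]
females = [140, 120, 130, 138, 121, 125, 116, 145, 150, 112, 125, 130,
           120, 130, 131, 120, 118, 125, 135, 125, 118, 122, 115, 102,
           115, 150, 110, 116, 108, 95, 125, 133, 110, 150, 108]

def describe(values):
    """Central tendency and dispersion for a list of numbers."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # default ('exclusive') method
    return {
        "mode": statistics.mode(values),
        "median": statistics.median(values),
        "mean": statistics.mean(values),
        "range": max(values) - min(values),
        "iqr": q3 - q1,
        "sd": statistics.stdev(values),  # sample standard deviation, n - 1 divisor
    }

for label, data in (("males", males), ("females", females)):
    print(label, {k: round(v, 2) for k, v in describe(data).items()})
```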


Box Diagram

– Median
– First (lowest) quartile: 25% of observations lie below it
– Third (upper) quartile: 25% of observations lie above it


Upper fence: 3rd quartile + 1.5 × IQR = 156 + 46.5 = 202.5 (IQR = 31); the first observation below the fence is 195, where the upper whisker ends.
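The fence arithmetic can be checked in a few lines of Python. This sketch assumes, as the slide's figures imply, that the quartiles belong to the pooled male and female data, with Q1 = 125 and Q3 = 156 (so IQR = 46.5 / 1.5 = 31). Only the upper tail matters here, and every female weight is at most 150, so the male list alone is enough to find the whisker end and the outlier; `upper_fence` is a hypothetical helper name.

```python
# Male weights from the Weight Data slide; all female weights are <= 150,
# so they cannot affect the upper whisker or the upper outliers.
males = [140, 145, 160, 190, 155, 165, 150, 190, 195, 138,
         160, 155, 153, 145, 170, 175, 175, 170, 180, 135, 170, 157,
         130, 185, 190, 155, 170, 155, 215, 150, 145, 155, 155, 150,
         155, 150, 180, 160, 135, 160, 130, 155, 150, 148, 155, 150,
         140, 180, 190, 145, 150, 164, 140, 142, 136, 123, 155]

Q1, Q3 = 125, 156  # quartiles implied by the slide (IQR = 31)

def upper_fence(q1, q3, k=1.5):
    """Tukey's upper fence: Q3 + k * IQR."""
    return q3 + k * (q3 - q1)

fence = upper_fence(Q1, Q3)
whisker_end = max(w for w in males if w <= fence)  # largest value inside the fence
outliers = sorted(w for w in males if w > fence)   # plotted individually

print(fence, whisker_end, outliers)  # 202.5 195 [215]
```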



Power Three - Lab Two

Probability


Operations on events

– The event A and the event B both occur: $A \cap B$
– Either the event A or the event B occurs, or both do: $A \cup B$
– The event A does not occur, i.e. not A: $\bar{A}$


Probability statements

– Probability of either event A or event B: $p(A \cup B) = p(A) + p(B) - p(A \cap B)$
  – if the events are mutually exclusive, then $p(A \cap B) = 0$
– Probability of not event B: $p(\bar{B}) = 1 - p(B)$
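One way to check these rules is to enumerate a small sample space exactly. The Python sketch below uses exact fractions and two dice (one red, one white); the events A, B and C are illustrative choices, not taken from the slide.

```python
from fractions import Fraction
from itertools import product

# Sample space: 36 equally likely (red, white) outcomes.
OUTCOMES = list(product(range(1, 7), repeat=2))

def prob(event):
    """Exact probability of an event given as a predicate on (red, white)."""
    return Fraction(sum(1 for o in OUTCOMES if event(o)), len(OUTCOMES))

def A(o):  # red die shows 1
    return o[0] == 1

def B(o):  # white die shows 1
    return o[1] == 1

def C(o):  # red die shows 2, so A and C are mutually exclusive
    return o[0] == 2

p_union = prob(lambda o: A(o) or B(o))
assert p_union == prob(A) + prob(B) - prob(lambda o: A(o) and B(o))  # addition rule
assert prob(lambda o: A(o) and C(o)) == 0                            # mutually exclusive
p_not_B = prob(lambda o: not B(o))
assert p_not_B == 1 - prob(B)                                        # complement rule

print(p_union, p_not_B)  # 11/36 5/6
```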


Conditional Probability

Example: in rolling two dice, what is the probability of getting a one on the red die given that you rolled a one on the white die?
– $P(R_1 \mid W_1) = ?$


$p(R_1 \mid W_1) = p(R_1 \cap W_1)/p(W_1) = (1/36)/(1/6) = 1/6$
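The same calculation can be done by brute force: list all 36 (red, white) outcomes, keep the 6 in which the white die shows a one, and see how often the red die also shows a one. A minimal Python sketch:

```python
from fractions import Fraction
from itertools import product

outcomes = list(product(range(1, 7), repeat=2))  # (red, white), equally likely

p_R1_and_W1 = Fraction(sum(1 for r, w in outcomes if r == 1 and w == 1), len(outcomes))
p_W1 = Fraction(sum(1 for r, w in outcomes if w == 1), len(outcomes))

# Definition of conditional probability: p(R1 | W1) = p(R1 and W1) / p(W1)
p_R1_given_W1 = p_R1_and_W1 / p_W1
print(p_R1_and_W1, p_W1, p_R1_given_W1)  # 1/36 1/6 1/6
```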


Independence of two events

$p(A \mid B) = p(A)$
– i.e. if event A is not conditional on event B
– then $p(A \cap B) = p(A) \cdot p(B)$
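The product rule gives a direct test for independence. In this Python sketch (again with two illustrative dice), the red and white dice are independent, while "red shows 1" and "the two dice sum to 2" are not, because a sum of 2 forces the red die to show 1. The helper names are our own.

```python
from fractions import Fraction
from itertools import product

outcomes = list(product(range(1, 7), repeat=2))  # (red, white), equally likely

def p(event):
    """Exact probability of an event given as a predicate on (red, white)."""
    return Fraction(sum(1 for o in outcomes if event(o)), len(outcomes))

def independent(e1, e2):
    """Product rule: independent exactly when p(e1 and e2) == p(e1) * p(e2)."""
    return p(lambda o: e1(o) and e2(o)) == p(e1) * p(e2)

def red_one(o):
    return o[0] == 1

def white_one(o):
    return o[1] == 1

def sum_is_two(o):
    return o[0] + o[1] == 2

print(independent(red_one, white_one))   # True: the dice do not affect each other
print(independent(red_one, sum_is_two))  # False: a sum of 2 forces the red die to show 1
```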



Power 4 – Lab Two


Three flips of a coin; 8 elementary outcomes

Tree diagram: at each flip the branch goes to H with probability p or to T with probability 1 − p. Reading the eight end points from HHH down to TTT gives 3, 2, 2, 1, 2, 1, 1 and 0 heads.
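The eight paths through the tree can be generated mechanically. This Python sketch lists them in the same top-to-bottom order as the diagram (H before T at each flip) and counts the heads on each path, which also gives the number of branches with k heads.

```python
from collections import Counter
from itertools import product

# Each of the 2**3 = 8 paths through the tree is one sequence of three flips.
paths = ["".join(flips) for flips in product("HT", repeat=3)]
heads_per_path = [path.count("H") for path in paths]
branches_with_k_heads = Counter(heads_per_path)

print(paths)           # ['HHH', 'HHT', 'HTH', 'HTT', 'THH', 'THT', 'TTH', 'TTT']
print(heads_per_path)  # [3, 2, 2, 1, 2, 1, 1, 0]
print(sorted(branches_with_k_heads.items()))  # [(0, 1), (1, 3), (2, 3), (3, 1)]
```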


The Probability of Getting k Heads

The probability of getting k heads (along a given branch) in n trials is $p^k (1-p)^{n-k}$

The number of branches with k heads in n trials is given by $C_n(k) = n!/[k!\,(n-k)!]$

So the probability of k heads in n trials is $\text{Prob}(k) = C_n(k)\, p^k (1-p)^{n-k}$

This is the discrete binomial distribution, where k can only take on the discrete values 0, 1, …, n
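The binomial formula translates directly into code. A minimal Python sketch, using `math.comb` for C_n(k); the function name `binomial_pmf` is our own, and n = 3, p = 0.5 simply matches the three-flip tree with a fair coin.

```python
from math import comb, isclose

def binomial_pmf(k, n, p):
    """Prob(k) = C_n(k) * p**k * (1 - p)**(n - k)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

n, p = 3, 0.5
probs = [binomial_pmf(k, n, p) for k in range(n + 1)]
print(probs)  # [0.125, 0.375, 0.375, 0.125]
assert isclose(sum(probs), 1.0)  # the probabilities over k = 0, ..., n sum to one
```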


Expected Value of a discrete random variable

$E(x) = \sum_{i=0}^{n} x(i)\, p[x(i)]$

The expected value of a discrete random variable is the weighted average of its possible values, where the weight on each value is its probability (its long-run relative frequency).
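A sketch of the expected-value formula in Python, using exact fractions so the answer is not blurred by rounding. The distribution is the number of heads in three flips of a fair coin, an illustrative choice; for a binomial the result should equal np.

```python
from fractions import Fraction
from math import comb

def expected_value(dist):
    """E(x) = sum of x(i) * p[x(i)] for a {value: probability} mapping."""
    return sum(x * px for x, px in dist.items())

# Illustrative distribution: number of heads in n = 3 flips of a fair coin.
n, p = 3, Fraction(1, 2)
heads = {k: comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)}
print(expected_value(heads))  # 3/2, equal to n * p
```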


Variance of a discrete random variable

$VAR(x) = \sum_{i=0}^{n} \{x(i) - E(x)\}^2\, p[x(i)]$

The variance of a discrete random variable is the weighted sum of the squared deviations of each value from the expected value, where the weight on each value is its probability.
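Continuing the same illustrative three-flip example, the variance formula can be coded as a probability-weighted sum of squared deviations. For a binomial the result should equal np(1 − p). Python sketch, with exact fractions:

```python
from fractions import Fraction
from math import comb

def expected_value(dist):
    return sum(x * px for x, px in dist.items())

def variance(dist):
    """VAR(x) = sum of {x(i) - E(x)}**2 * p[x(i)]."""
    mu = expected_value(dist)
    return sum((x - mu) ** 2 * px for x, px in dist.items())

# Illustrative distribution: number of heads in n = 3 flips of a fair coin.
n, p = 3, Fraction(1, 2)
heads = {k: comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)}
print(variance(heads))  # 3/4, equal to n * p * (1 - p)
```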


Lab Two

The Binomial Distribution, Numbers & Plots
– Coin flips: one, two, … ten
– Die throws: one, ten, twenty

The Normal Approximation to the Binomial
– As $n \to \infty$, $p(k) \to N[np,\ np(1-p)]$
– Sample fraction of successes: $\hat{p} = k/n$, $E(\hat{p}) = np/n = p$, $Var(\hat{p}) = np(1-p)/n^2$

$\hat{p} \sim N[p,\ p(1-p)/n]$
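The moments of the sample fraction can be checked numerically by summing over the exact binomial distribution. In this Python sketch the values n = 100 and p = 0.5 are illustrative; the sums confirm $E(\hat{p}) = p$ and $Var(\hat{p}) = p(1-p)/n$, and `statistics.NormalDist` builds the approximating normal.

```python
from math import comb, sqrt
from statistics import NormalDist

def binomial_pmf(k, n, p):
    return comb(n, k) * p**k * (1 - p) ** (n - k)

n, p = 100, 0.5  # illustrative: 100 flips of a fair coin
pmf = [binomial_pmf(k, n, p) for k in range(n + 1)]

# Moments of the sample fraction p_hat = k / n, from the exact distribution.
mean_phat = sum((k / n) * pk for k, pk in enumerate(pmf))
var_phat = sum((k / n - mean_phat) ** 2 * pk for k, pk in enumerate(pmf))
print(round(mean_phat, 6), round(var_phat, 6))  # 0.5 0.0025

# Normal approximation: p_hat ~ N[p, p(1 - p)/n]
approx = NormalDist(mu=p, sigma=sqrt(p * (1 - p) / n))
print(approx.mean, round(approx.stdev, 4))  # 0.5 0.05
```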


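Lab Three and Power 5,6

$f(z) = [1/\sqrt{2\pi}]\, e^{-\frac{1}{2}[(z-0)/1]^2}$, i.e. $Z \sim N(0,1)$

$\text{Prob}(-1.96 \le z \le 1.96) = 0.95$

Figure: Density Function for the Standardized Normal Variate, with 2.5% in each tail beyond z = −1.96 and z = 1.96 (horizontal axis in standard deviations).

$z = [\hat{p} - E(\hat{p})]/\hat{\sigma}_{\hat{p}}$, where $\hat{\sigma}_{\hat{p}} = \sqrt{\hat{p}(1-\hat{p})/n}$

$\text{prob}(-1.96 \le [\hat{p} - E(\hat{p})]/\hat{\sigma}_{\hat{p}} \le 1.96) = 0.95$

$\text{prob}(-1.96\,\hat{\sigma}_{\hat{p}} \le \hat{p} - p \le 1.96\,\hat{\sigma}_{\hat{p}}) = 0.95$

$\text{prob}(-1.96\,\hat{\sigma}_{\hat{p}} \le p - \hat{p} \le 1.96\,\hat{\sigma}_{\hat{p}}) = 0.95$

$\text{prob}(\hat{p} - 1.96\,\hat{\sigma}_{\hat{p}} \le p \le \hat{p} + 1.96\,\hat{\sigma}_{\hat{p}}) = 0.95$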
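The last line is the usual 95% interval for a proportion. A minimal Python sketch, with a hypothetical sample of 60 successes in 100 trials; `proportion_ci` is our own function name.

```python
from math import sqrt

def proportion_ci(k, n, z=1.96):
    """95% interval p_hat -/+ 1.96 * sigma_hat, sigma_hat = sqrt(p_hat (1 - p_hat) / n)."""
    p_hat = k / n
    sigma_hat = sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - z * sigma_hat, p_hat + z * sigma_hat

low, high = proportion_ci(60, 100)  # hypothetical: 60 successes in 100 trials
print(round(low, 3), round(high, 3))  # 0.504 0.696
```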


Hypothesis Testing: Rates & Proportions

One-tailed test:

Step #1: hypotheses
$H_0: p = f$
$H_a: p > f$

Step #2: test statistic
$z = [\hat{p} - E(\hat{p})]/\hat{\sigma}_{\hat{p}} = [\hat{p} - f]/\hat{\sigma}_{\hat{p}}$, where $\hat{\sigma}_{\hat{p}} = \sqrt{\hat{p}(1-\hat{p})/n}$

Step #3: choose α, e.g. α = 5%

Step #4: this determines the rejection region for $H_0$: reject if $(\hat{p} - f)/\hat{\sigma}_{\hat{p}} > 1.645$

Figure: Density Function for the Standardized Normal Variate, with the 5% rejection region to the right of z = 1.645 (horizontal axis in standard deviations).
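The four steps can be sketched as one Python function. The sample (60 successes in 100 trials) and the hypothesized value f = 0.5 are hypothetical; as on the slide, the standard error uses $\hat{p}$, and `NormalDist().inv_cdf` supplies the 1.645 cutoff for α = 5%.

```python
from math import sqrt
from statistics import NormalDist

def one_tailed_proportion_test(k, n, f, alpha=0.05):
    """H0: p = f against Ha: p > f, with z = (p_hat - f) / sqrt(p_hat (1 - p_hat) / n)."""
    p_hat = k / n
    sigma_hat = sqrt(p_hat * (1 - p_hat) / n)
    z = (p_hat - f) / sigma_hat
    z_crit = NormalDist().inv_cdf(1 - alpha)  # about 1.645 when alpha = 0.05
    return z, z_crit, z > z_crit              # reject H0 when z falls in the upper tail

z, z_crit, reject = one_tailed_proportion_test(60, 100, 0.5)  # hypothetical data
print(round(z, 3), round(z_crit, 3), reject)  # 2.041 1.645 True
```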

Remaining Topics

Interval estimation and hypothesis testing for population means, using sample means

Decision theory

Regression
– Estimators
  OLS
  Maximum likelihood
  Method of moments
– ANOVA


Midterm Review Cont.


Lab Three

Power 7

Population: random variable x, distribution $f(\mu, \sigma^2)$, shape of f unknown (f?)

Sample statistic: $\bar{x} \sim N(\mu, \sigma^2/n)$

Sample statistic: $s^2 = \sum_{i=1}^{n} (x_i - \bar{x})^2/(n-1)$

f(x) in this example is Uniform: X ~ U(0.5, 1/12), i.e. uniform on [0, 1] with

E(x) = 0.5

Var(x) = 1/12

Figure: the uniform density f(x), flat between x = 0 and x = 1.

Nonetheless, from the central limit theorem, the sample mean has a normal density:

$\bar{x} \sim N[0.5,\ (1/12)/n]$

$z = [\bar{x} - E(\bar{x})]/\sigma_{\bar{x}} = [\bar{x} - 0.5]/\sqrt{(1/12)/n}$

Figure: Density Function for the Standardized Normal Variate (horizontal axis in standard deviations).

Figure: Histogram of 50 Sample Means, Uniform, U(0.5, 1/12) (frequency against sample mean).

Average of the 50 sample means: 0.4963
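The histogram experiment can be re-created with a short simulation. The slide does not state the size of each sample, so n = 30 below is an assumption, as is the random seed; with a different seed the average will differ slightly from 0.4963 but should stay close to 0.5, and the spread of the sample means should be close to the theoretical $\sqrt{(1/12)/n}$.

```python
import random
import statistics

def sample_means(num_samples, n, seed=240):
    """Means of num_samples samples, each of n draws from the uniform on [0, 1)."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    return [statistics.mean(rng.random() for _ in range(n)) for _ in range(num_samples)]

n = 30  # assumed sample size; not stated on the slide
means = sample_means(50, n)
print(round(statistics.mean(means), 4))   # close to E(x) = 0.5
print(round(statistics.stdev(means), 4))  # close to sqrt((1/12)/n)
print(round((1 / 12 / n) ** 0.5, 4))      # theoretical standard error, about 0.0527
```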

Inference

In general, $x \sim f(\mu, \sigma^2)$; from the central limit theorem we know $\bar{x} \sim N[\mu, \sigma^2/n]$

So $z = [\bar{x} - \mu]/(\sigma/\sqrt{n})$ and

$\text{Prob}(-1.96 \le z \le 1.96) = 0.95$

$\text{Prob}(-1.96 \le (\bar{x} - \mu)/(\sigma/\sqrt{n}) \le 1.96) = 0.95$

$\text{Prob}(-1.96(\sigma/\sqrt{n}) \le (\bar{x} - \mu) \le 1.96(\sigma/\sqrt{n})) = 0.95$

$\text{Prob}(-1.96(\sigma/\sqrt{n}) \le (\mu - \bar{x}) \le 1.96(\sigma/\sqrt{n})) = 0.95$

$\text{Prob}(\bar{x} - 1.96(\sigma/\sqrt{n}) \le \mu \le \bar{x} + 1.96(\sigma/\sqrt{n})) = 0.95$

Figure: Density Function for the Standardized Normal Variate, with 2.5% in each tail beyond Z = −1.96 and Z = 1.96 (horizontal axis in standard deviations).
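The final probability statement is the 95% confidence interval for μ when σ is known. A Python sketch, assuming the uniform population from the previous slides (σ² = 1/12) and a hypothetical sample of n = 30 with mean 0.52; the function name is our own.

```python
from math import sqrt
from statistics import NormalDist

def mean_ci_known_sigma(xbar, sigma, n, level=0.95):
    """Interval xbar -/+ z * sigma / sqrt(n), with z taken from N(0, 1)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 when level = 0.95
    half_width = z * sigma / sqrt(n)
    return xbar - half_width, xbar + half_width

low, high = mean_ci_known_sigma(xbar=0.52, sigma=sqrt(1 / 12), n=30)  # hypothetical sample
print(round(low, 4), round(high, 4))  # 0.4167 0.6233
```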

Confidence Intervals

If the population variance is known, use the normal distribution and z:
$z = (\bar{x} - \mu)/(\sigma/\sqrt{n})$

If the population variance is unknown, use Student’s t-distribution and t:
$t = (\bar{x} - \mu)/(s/\sqrt{n})$, where $s = \sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2/(n-1)}$
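When σ is unknown, s replaces σ and a t critical value replaces 1.96. The Python standard library has no t quantile function, so this sketch takes the critical value from a t table: for a hypothetical sample of n = 10 (9 degrees of freedom), $t_{.975} = 2.262$. The data and the function name are illustrative.

```python
import statistics
from math import sqrt

def mean_ci_unknown_sigma(data, t_crit):
    """Interval xbar -/+ t * s / sqrt(n), where s uses the n - 1 divisor."""
    n = len(data)
    xbar = statistics.mean(data)
    s = statistics.stdev(data)  # sqrt(sum((x - xbar)**2) / (n - 1))
    half_width = t_crit * s / sqrt(n)
    return xbar - half_width, xbar + half_width

sample = [4.1, 5.0, 3.8, 4.6, 5.3, 4.9, 4.2, 4.4, 5.1, 4.6]  # hypothetical data
low, high = mean_ci_unknown_sigma(sample, t_crit=2.262)     # t table value, 9 df
print(round(low, 4), round(high, 4))  # 4.2561 4.9439
```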


t-distribution

Text p. 253

Figures: the normal compared to the t; the t distribution as sample size grows.

Appendix B, Table 4, p. B-9

Hypothesis tests

Step One: state the hypotheses
$H_0: \mu = v$ (you choose v)
$H_a: \mu \ne v$ (2-tailed test)

Step Two: choose the test statistic
$z = [\bar{x} - E(\bar{x})]/\sigma_{\bar{x}} = [\bar{x} - v]/(\sigma/\sqrt{n})$

Step Three: choose the size of the Type I error, α = 0.05

Step Four: reject the null hypothesis if the test statistic is in the rejection region

Figure: Density Function for the Standardized Normal Variate, with rejection regions of 2.5% in each tail beyond Z = −1.96 and Z = 1.96 (horizontal axis in standard deviations).
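The four steps map onto a short Python function. The numbers are hypothetical: a uniform population (σ² = 1/12), n = 30, an observed mean of 0.56, and v = 0.5.

```python
from math import sqrt
from statistics import NormalDist

def two_tailed_z_test(xbar, v, sigma, n, alpha=0.05):
    """H0: mu = v against Ha: mu != v, using z = (xbar - v) / (sigma / sqrt(n))."""
    z = (xbar - v) / (sigma / sqrt(n))
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    return z, z_crit, abs(z) > z_crit             # reject when z lands in either tail

z, z_crit, reject = two_tailed_z_test(0.56, 0.5, sqrt(1 / 12), 30)  # hypothetical
print(round(z, 3), round(z_crit, 3), reject)  # 1.138 1.96 False
```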


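| Decision | True state of nature: p = 0.5 | True state of nature: p > 0.5 |
|---|---|---|
| Accept null | No error (1 − α) | Type II error (β), cost C(II) |
| Reject null | Type I error (α), cost C(I) | No error (1 − β) |

$E[C] = C(I) \cdot \alpha + C(II) \cdot \beta$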
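The expected-cost formula makes the trade-off between the two errors concrete: a smaller α usually comes with a larger β. The Python sketch below uses hypothetical costs C(I) = 100 and C(II) = 40 and hypothetical (α, β) pairs, chosen only to illustrate the comparison.

```python
def expected_cost(cost_type1, alpha, cost_type2, beta):
    """E[C] = C(I) * alpha + C(II) * beta."""
    return cost_type1 * alpha + cost_type2 * beta

C_I, C_II = 100, 40  # hypothetical costs of Type I and Type II errors
for alpha, beta in [(0.05, 0.30), (0.10, 0.18)]:  # hypothetical error rates
    print(alpha, beta, round(expected_cost(C_I, alpha, C_II, beta), 2))
```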


Regression Estimators

– Minimize the sum of squared residuals
– Maximum likelihood of the sample
– Method of moments


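Minimize the sum of squared residuals

$\min \sum_{i=1}^{n} \hat{e}_i^2 = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 = \sum_{i=1}^{n} (y_i - \hat{a} - \hat{b} x_i)^2$

$\partial \sum_{i=1}^{n} \hat{e}_i^2 / \partial \hat{a} = 0 \;\Rightarrow\; \bar{y} = \hat{a} + \hat{b}\bar{x}$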
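Solving both first-order conditions gives the familiar closed form for the slope, and the intercept then follows from $\bar{y} = \hat{a} + \hat{b}\bar{x}$. A Python sketch on a small hypothetical data set (not the Power 8 data), with a check that nudging either coefficient raises the sum of squared residuals; the function names are our own.

```python
def ssr(a, b, x, y):
    """Sum of squared residuals for the line y = a + b x."""
    return sum((yi - a - b * xi) ** 2 for xi, yi in zip(x, y))

def ols(x, y):
    """Least-squares intercept a_hat and slope b_hat."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    sxx = sum((xi - xbar) ** 2 for xi in x)
    b_hat = sxy / sxx
    a_hat = ybar - b_hat * xbar  # first-order condition: ybar = a_hat + b_hat * xbar
    return a_hat, b_hat

x = [1, 2, 3, 4, 5]             # hypothetical data
y = [2.1, 3.9, 6.2, 7.8, 10.1]
a_hat, b_hat = ols(x, y)
print(round(a_hat, 4), round(b_hat, 4))  # 0.05 1.99

best = ssr(a_hat, b_hat, x, y)
for da, db in [(0.01, 0), (-0.01, 0), (0, 0.01), (0, -0.01)]:
    assert ssr(a_hat + da, b_hat + db, x, y) > best  # any nudge does worse
```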


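Maximum likelihood

$\partial \ln Lik / \partial \hat{\sigma}^2 = 0 \;\Rightarrow\; \hat{\sigma}^2 = \sum_{i=1}^{n} \hat{e}_i^2 / n$

Method of moments

$\hat{b} = \left[\sum_{i=1}^{n} (y_i - \bar{y})(x_i - \bar{x})/n\right] \Big/ \left[\sum_{i=1}^{n} (x_i - \bar{x})^2/n\right]$, so $\hat{b} = \sum_{i=1}^{n} (y_i - \bar{y})(x_i - \bar{x}) \Big/ \sum_{i=1}^{n} (x_i - \bar{x})^2$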
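Both results are easy to check numerically. In the Python sketch below, on a small hypothetical data set, the method-of-moments slope is the ratio of the sample covariance to the sample variance, each divided by n, so the n's cancel; the maximum-likelihood variance estimate, which assumes normally distributed errors, divides the sum of squared residuals by n. The function name `mom_fit` is our own.

```python
def mom_fit(x, y):
    """Method-of-moments slope and intercept, plus the ML estimate of the error variance."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    cov_xy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / n  # sample covariance
    var_x = sum((xi - xbar) ** 2 for xi in x) / n                        # sample variance
    b_hat = cov_xy / var_x  # the 1/n factors cancel
    a_hat = ybar - b_hat * xbar
    residuals = [yi - a_hat - b_hat * xi for xi, yi in zip(x, y)]
    sigma2_ml = sum(e ** 2 for e in residuals) / n  # ML divides by n (normal errors assumed)
    return a_hat, b_hat, sigma2_ml

x = [1, 2, 3, 4, 5]             # hypothetical data
y = [2.1, 3.9, 6.2, 7.8, 10.1]
a_hat, b_hat, sigma2_ml = mom_fit(x, y)
print(round(b_hat, 4), round(sigma2_ml, 4))  # 1.99 0.0214
```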


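Inference in Regression

Interval estimation

$t = [\hat{b} - E\hat{b}]/\hat{\sigma}_{\hat{b}} = [\hat{b} - b]/\hat{\sigma}_{\hat{b}}$

$\text{prob}(t_{.025} \le [\hat{b} - b]/\hat{\sigma}_{\hat{b}} \le t_{.975}) = 0.95$

$\text{prob}(\hat{\sigma}_{\hat{b}}\, t_{.025} \le \hat{b} - b \le \hat{\sigma}_{\hat{b}}\, t_{.975}) = 0.95$

$\text{prob}(\hat{\sigma}_{\hat{b}}\, t_{.025} \le b - \hat{b} \le \hat{\sigma}_{\hat{b}}\, t_{.975}) = 0.95$ (using the symmetry $t_{.025} = -t_{.975}$)

$\text{prob}(\hat{b} + \hat{\sigma}_{\hat{b}}\, t_{.025} \le b \le \hat{b} + \hat{\sigma}_{\hat{b}}\, t_{.975}) = 0.95$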


Estimated Coefficients, Power 8

$t_{\hat{a}} = [\hat{a} - E(\hat{a})]/\hat{\sigma}_{\hat{a}} = (-1.024 - 0)/0.727 = -1.41$

| | Coefficients | Standard Error | t Stat | P-value | Lower 95% | Upper 95% |
|---|---|---|---|---|---|---|
| Intercept ($\hat{a}$) | -1.02377776 | 0.727626534 | -1.40701 | 0.167999648 | -2.499472762 | 0.451917 |
| X Variable 1 ($\hat{b}$) | 0.06565026 | 0.001086328 | 60.43316 | 8.58311E-38 | 0.063447085 | 0.067853 |
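The t statistics and interval bounds in this output can be reproduced from the coefficients and standard errors alone. The table does not report the degrees of freedom, so this Python sketch backs out the critical t value implied by the intercept's reported 95% bounds (about 2.028) and reuses it for both rows; the dictionary layout is our own.

```python
# (coefficient, standard error, reported lower 95%, reported upper 95%) from the output above
output = {
    "Intercept": (-1.02377776, 0.727626534, -2.499472762, 0.451917),
    "X Variable 1": (0.06565026, 0.001086328, 0.063447085, 0.067853),
}

# Critical value implied by the intercept row: (upper - lower) / (2 * standard error)
_, se_a, lower_a, upper_a = output["Intercept"]
t_crit = (upper_a - lower_a) / (2 * se_a)

t_stats, intervals = {}, {}
for name, (coef, se, _, _) in output.items():
    t_stats[name] = (coef - 0) / se                             # t = [estimate - 0] / std. error
    intervals[name] = (coef - t_crit * se, coef + t_crit * se)  # estimate -/+ t * std. error
    print(name, round(t_stats[name], 3), [round(v, 6) for v in intervals[name]])
print(round(t_crit, 3))  # 2.028
```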


Appendix B, Table 4, p. B-9

Inference in Regression

Hypothesis testing

Step One: state the hypotheses
$H_0: b = 0$
$H_a: b \ne 0$

Step Two: choose the test statistic
$t = [\hat{b} - b]/\hat{\sigma}_{\hat{b}}$

Step Three: choose the size of the Type I error, α

Step Four: reject the null hypothesis if the test statistic is in the rejection region
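Applied to the Power 8 output, the four steps reduce to comparing |t| with the critical value, or equivalently comparing the reported two-tailed P-value with α. This Python sketch assumes α = 0.05 and a critical value of about 2.028, the value implied by that output's 95% bounds; the function name is hypothetical.

```python
ALPHA = 0.05
T_CRIT = 2.028  # assumed: two-tailed 5% critical value implied by the Power 8 output

def reject_null(t_stat, p_value, t_crit=T_CRIT, alpha=ALPHA):
    """Two-tailed test of H0: coefficient = 0; the t rule and the P-value rule should agree."""
    by_t = abs(t_stat) > t_crit
    by_p = p_value < alpha
    assert by_t == by_p, "the two decision rules disagree"
    return by_t

# t Stat and P-value columns from the Power 8 output
print(reject_null(60.43316, 8.58311e-38))  # slope: True, reject b = 0
print(reject_null(-1.40701, 0.167999648))  # intercept: False, cannot reject a = 0
```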
